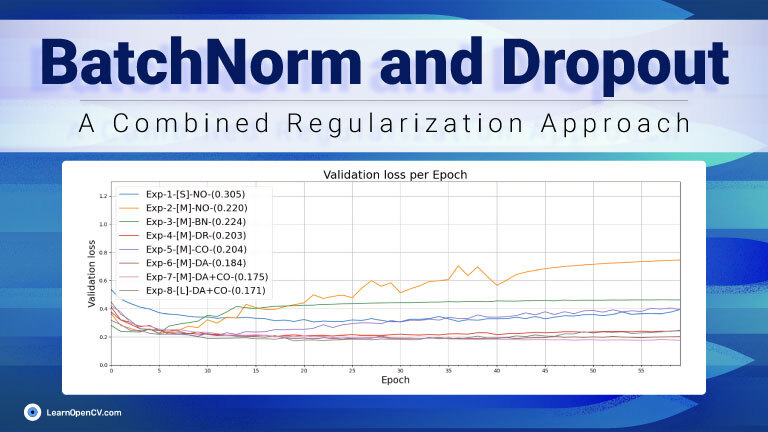

In deep learning, Batch Normalization (BatchNorm) and Dropout are two powerful regularization techniques used to improve model performance, prevent overfitting, and speed up convergence. While ...

Latest From the Blog

DINOv2 by Meta: A Self-Supervised foundational vision model

April 24, 2025, 5 Comments, 12 min read

Beginner’s Guide to Embedding Models

April 23, 2025, 7 min read

MASt3R-SLAM: Real-Time Dense SLAM with 3D Reconstruction Priors

April 22, 2025, 23 min read

Google’s A2A Protocol: Here’s What You Need to Know

April 21, 2025, 11 min read

NVIDIA SANA: Fast, High-Resolution Text-to-Image Generation Explained

April 17, 2025, 8 min read
