Developing a new YOLO-based architecture can redefine state-of-the-art (SOTA) object detection by addressing existing limitations and incorporating recent advancements in deep learning. Deep learning firm Deci.ai has recently launched YOLO-NAS, a model that delivers real-time object detection with production-ready performance. The YOLO-NAS models were constructed using Deci’s AutoNAC™ NAS technology and outperform models such as YOLOv7 and YOLOv8, as well as the recently launched YOLOv6-v3.0.

- What is YOLO-NAS?

- Some Key Architectural Insights into YOLO-NAS

- A Brief Summary Training of YOLO-NAS Models

- How To Use YOLO-NAS For Inference?

- Conclusion

- References


What is YOLO-NAS?

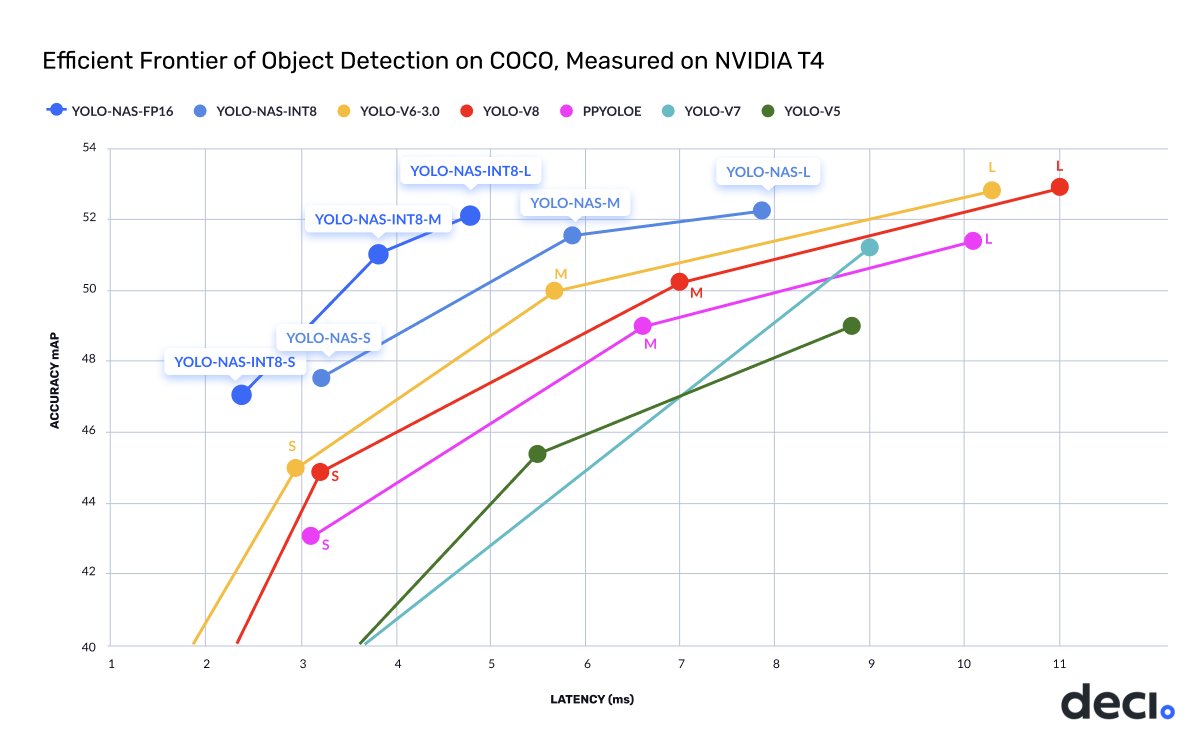

YOLO-NAS is a new real-time state-of-the-art object detection model that outperforms both YOLOv6 & YOLOv8 models in terms of mAP (mean average precision) and inference latency.

Left: YOLO-NAS S || Center: YOLO-NAS M || Right: YOLO-NAS L

YOLO-NAS is a new foundational model for object detection from Deci.ai. The team has incorporated recent advancements in deep learning to seek out and improve some key limiting factors of current YOLO models, such as inadequate quantization support and insufficient accuracy-latency tradeoffs. In doing so, the team has successfully pushed the boundaries of real-time object detection capabilities.

“Imagine a new YOLO-based architecture that could enhance your ability to detect small objects, improve localization accuracy, and increase the performance-per-compute ratio, making the model more accessible for real-time edge-device applications…..And that’s precisely what we’ve done here at Deci.”

– Deci.ai YOLO-NAS team

The newly released models are:

“Designed specifically for production use, YOLO-NAS is fully compatible with high-performance inference engines like NVIDIA® TensorRT™ and supports INT8 quantization for unprecedented runtime performance.”

– Deci.ai team

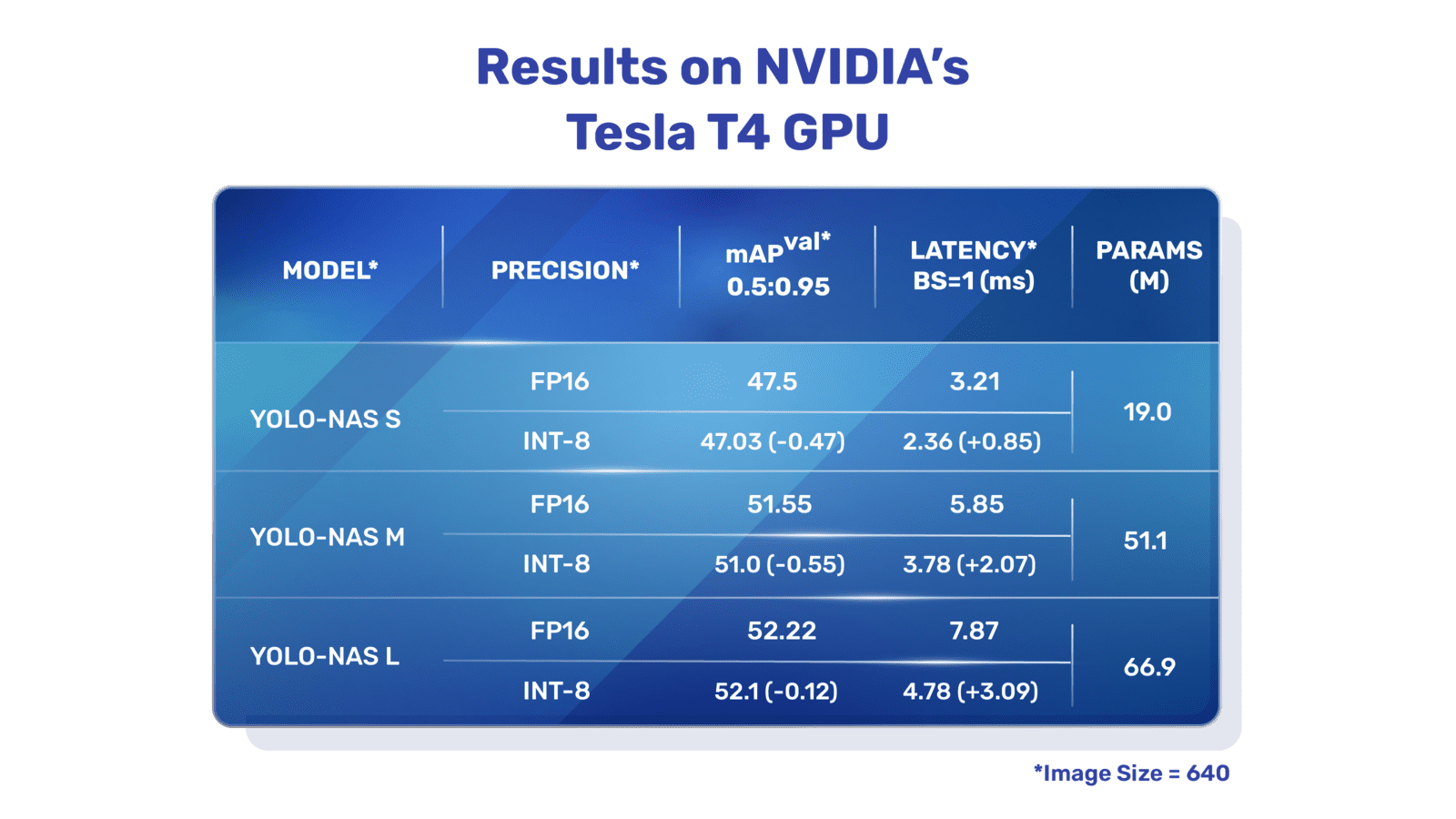

As of writing this article, three YOLO-NAS models have been released that can be used in FP32, FP16, and INT8 precisions.

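Mean Average Precision (mAP) is the standard accuracy metric for object detection models. It averages, over all classes, the area under each class’s precision-recall curve, where a prediction counts as correct when its Intersection over Union (IoU) with a ground-truth box exceeds a threshold. The AP 50:95 figures reported later in this article average that value over IoU thresholds from 0.50 to 0.95.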
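
To make the metric concrete, here is a minimal sketch of the two building blocks: IoU between two boxes, and AP for a single class at a single IoU threshold. The function names are our own, and the AP routine uses simple all-point interpolation rather than the exact COCO evaluation procedure, so treat it as an illustration and not as a replacement for an official evaluator.

```python
def iou_xyxy(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Overlap is zero when the boxes do not intersect.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(scored_hits, num_ground_truth):
    """AP for one class at one IoU threshold.

    scored_hits: list of (confidence, is_true_positive) pairs, one per prediction.
    num_ground_truth: number of ground-truth boxes for this class.
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(scored_hits, key=lambda hit: hit[0], reverse=True)
    precisions, recalls = [], []
    tp = fp = 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Make precision non-increasing from left to right (the precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the resulting step curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

mAP is then the mean of `average_precision` over all classes, and AP 50:95 additionally averages over the IoU thresholds 0.50, 0.55, ..., 0.95.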

Currently, the YOLO-NAS model architectures are available under an open-source license, but the pre-trained weights are available for research use (non-commercial) on Deci’s SuperGradients library only.

What does the “NAS” in YOLO-NAS stand for?

The “NAS” stands for “Neural Architecture Search,” a technique used to automate the design process of neural network architectures. Instead of relying on manual design and human intuition, NAS employs optimization algorithms to discover the most suitable architecture for a given task. NAS aims to find an architecture that achieves the best trade-off between accuracy, computational complexity, and model size.
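
As a rough illustration of the idea, the sketch below runs a random search over a tiny, made-up search space and keeps the candidate with the best accuracy-latency score. Everything in it is hypothetical: the search space, the `estimate_accuracy` and `estimate_latency_ms` proxies, and the scoring rule are stand-ins we invented for illustration. AutoNAC is proprietary, and this is not how it works internally.

```python
import math
import random

# Hypothetical toy search space; real NAS spaces are vastly larger.
SEARCH_SPACE = {
    "num_blocks": [2, 4, 6, 8],
    "channels": [32, 64, 128, 256],
    "block_type": ["plain", "residual"],
}


def estimate_accuracy(arch):
    """Made-up accuracy proxy: bigger models score higher, with diminishing returns."""
    bonus = 1.0 if arch["block_type"] == "residual" else 0.0
    return 40 + 2 * math.log2(arch["num_blocks"]) + 1.5 * math.log2(arch["channels"] / 32) + bonus


def estimate_latency_ms(arch):
    """Made-up latency proxy: cost grows with depth times width."""
    return arch["num_blocks"] * arch["channels"] / 64


def score(arch, latency_weight=0.5):
    """Single number that trades accuracy off against latency."""
    return estimate_accuracy(arch) - latency_weight * estimate_latency_ms(arch)


def random_search(num_trials=50, seed=0):
    """Sample architectures at random and return (best_score, best_architecture)."""
    rng = random.Random(seed)
    best = None
    for _ in range(num_trials):
        arch = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        candidate_score = score(arch)
        if best is None or candidate_score > best[0]:
            best = (candidate_score, arch)
    return best


best_score, best_arch = random_search()
print(best_arch, round(best_score, 2))
```

A production NAS engine replaces the proxies with measured accuracy and on-device latency, and replaces random sampling with a far more efficient search strategy.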

Some Key Architectural Insights into YOLO-NAS

- The architectures of YOLO-NAS models were “found” using Deci’s proprietary NAS technology, AutoNAC. This engine was used to ascertain the optimal sizes and structures of stages, encompassing block type, the number of blocks, and the number of channels in each stage.

- In all, there were 10^14 possible architecture configurations in the NAS search space. Being hardware- and data-aware, the AutoNAC engine considers all the components in the inference stack, including compilers and quantization, and homes in on a region termed the “efficiency frontier” to find the best models. All three YOLO-NAS models were found in this region of the search space.

- Throughout the NAS process, Quantization-Aware RepVGG (QA-RepVGG) blocks are incorporated into the model architecture, guaranteeing the model’s compatibility with Post-Training Quantization (PTQ).

- Using quantization-aware “QSP” and “QCI” modules consisting of QA-RepVGG blocks provides 8-bit quantization and reparameterization benefits, enabling minimal accuracy loss during PTQ.

- The researchers also use a hybrid quantization method that selectively quantizes specific layers to optimize accuracy and latency tradeoffs while maintaining overall performance.

- YOLO-NAS models also use attention mechanisms and inference time reparametrization to improve object detection capabilities.
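
To give a feel for what 8-bit quantization does to a layer’s weights, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is a generic textbook scheme with function names of our own choosing, not Deci’s implementation, and it ignores calibration and the selective (hybrid) layer choice mentioned above.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per value is bounded by half the scale, which is why architectures whose weight distributions quantize well lose little accuracy after PTQ.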

A Brief Summary Training of YOLO-NAS Models

The full details of the training regimen had not been disclosed at the time of writing this article. We’ll update this section as soon as a paper or any new information is available. From what we can gather from the official press release, the models underwent a coherent and expensive training process.

- The models were pre-trained on the well-known Objects365 benchmark dataset, which consists of 2M images and 365 categories.

- They then went through another pre-training round on 123k unlabeled COCO images that had been pseudo-labeled.

- Knowledge Distillation (KD) & Distribution Focal Loss (DFL) were also incorporated to enhance the training process of YOLO-NAS models.
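
Deci has not published the exact loss formulation, so the following is only a sketch of the classic, temperature-based knowledge distillation loss for a single classification output: the student is trained to match the teacher’s softened class probabilities. The function names and the temperature value are our own assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature that softens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened probabilities, scaled by temperature squared."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0
    )
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([3.5, 1.2, 0.4], teacher))  # student close to teacher: small loss
print(distillation_loss([0.5, 1.0, 4.0], teacher))  # student disagrees: larger loss
```

In practice this term is added to the regular detection loss, so the student learns from both the ground-truth labels and the teacher’s predictions.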

“The YOLO-NAS architecture and pre-trained weights define a new frontier in low-latency inference and an excellent starting point for fine-tuning downstream tasks.”

– Deci.ai team

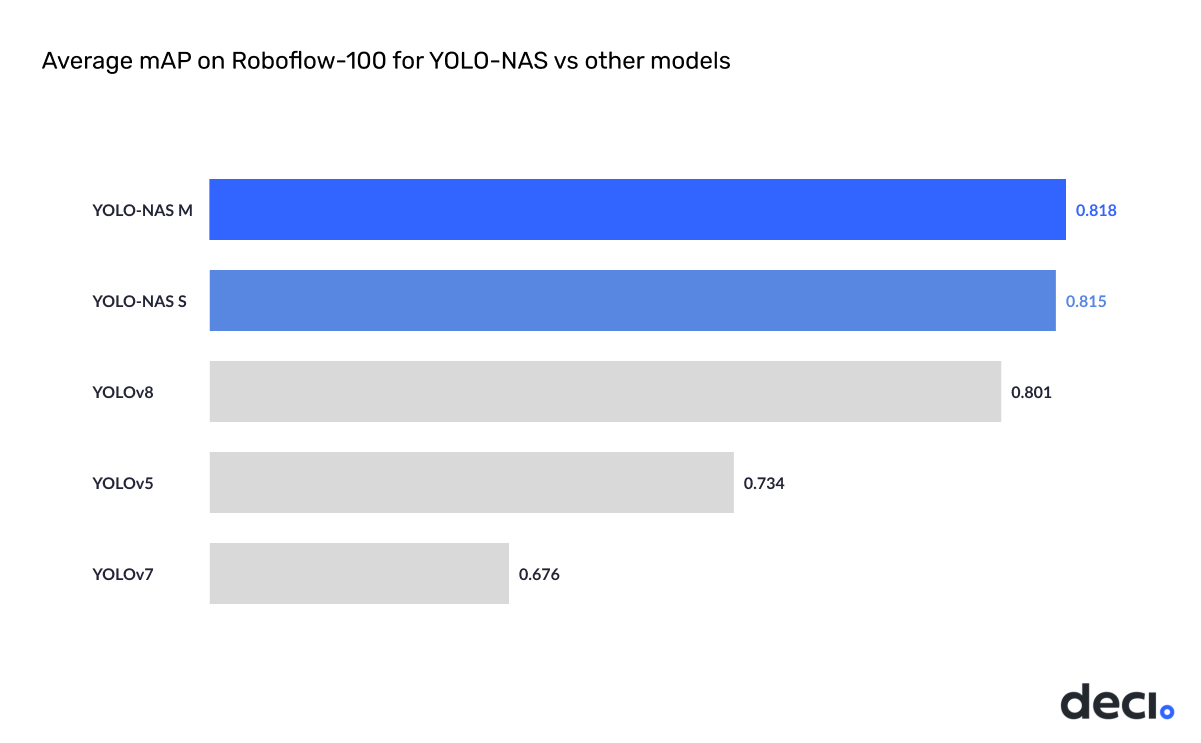

After the models were pre-trained, as an experiment, the team tested their performance on Roboflow’s “Roboflow 100” dataset to demonstrate their ability to handle complex object detection tasks. In the reported results, YOLO-NAS outperformed other YOLO versions by a considerable margin.

How To Use YOLO-NAS For Inference?

YOLO-NAS models are tightly integrated with, and available through, SuperGradients, Deci’s PyTorch-based, open-source computer vision training library. As a result, using these models is really easy.

To use YOLO-NAS models, we first need to install some libraries. In a new development environment, execute the following Python installation commands:

pip install -qU super-gradients imutils

pip install -qU roboflow

pip install -qU pytube

The “super-gradients” package will install all the required packages, such as PyTorch and TorchVision, with CUDA support and other necessary libraries as well.
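
If you want to confirm that the environment is ready before continuing, a quick check along the following lines can help. It is a small sketch using only the Python standard library; the module names in `REQUIRED_MODULES` are our assumption of the import names that correspond to the packages installed above.

```python
from importlib.util import find_spec

# Assumed import names for the packages installed above.
REQUIRED_MODULES = ["torch", "super_gradients", "imutils", "roboflow", "pytube"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_modules()
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```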

Object Detection Inference

For inference, first, we’ll import the two necessary packages:

import torch

from super_gradients.training import models

Running inference on images is super easy. The following commands select the device and load the YOLO-NAS S (small) model; the commented-out names are the medium and large variants.

device = torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")

model = models.get("yolo_nas_s", pretrained_weights="coco").to(device)

# "yolo_nas_m"

# "yolo_nas_l"

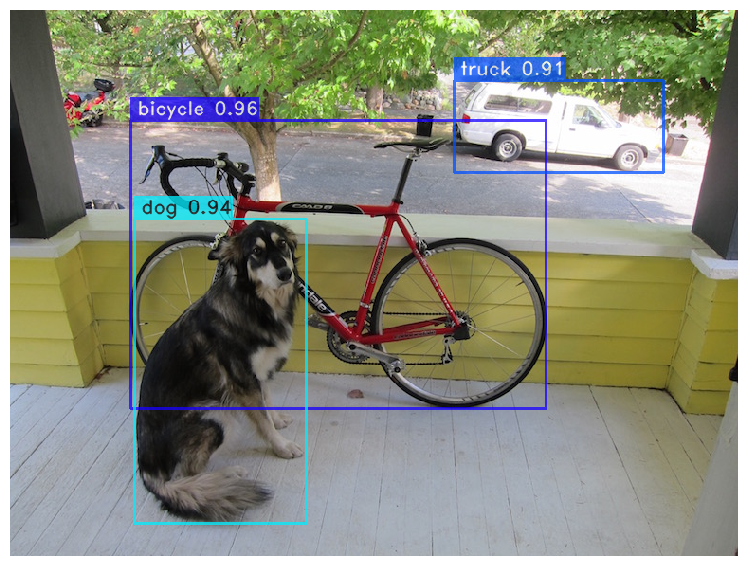

We are testing the model on the classic object detection test image. We need to call the model’s .predict(...) method to perform inference.

out = model.predict("test_1.jpg", conf=0.6)

Finally, to visualize the outputs, simply run: out.show()

To save the predicted image, call:

out.save("save_folder_path")
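
If you need the raw detections and not just a rendered image, you can turn the boxes, scores, and labels into a readable summary. The helper below is a sketch of our own that works on plain Python sequences. How you obtain those sequences depends on your SuperGradients version: the attribute names in the commented usage (`prediction.bboxes_xyxy`, `prediction.confidence`, `prediction.labels`, `class_names`) are what we have seen on the result objects, but treat them as an assumption and check your installed release.

```python
def summarize_detections(boxes_xyxy, confidences, label_ids, class_names, min_conf=0.6):
    """Return (class_name, confidence, (x1, y1, x2, y2)) tuples, most confident first."""
    rows = []
    for box, conf, label in zip(boxes_xyxy, confidences, label_ids):
        if conf < min_conf:
            continue  # drop low-confidence detections
        x1, y1, x2, y2 = (round(float(v)) for v in box)
        rows.append((class_names[int(label)], round(float(conf), 2), (x1, y1, x2, y2)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Stand-in data so the example runs without a model.
demo = summarize_detections(
    boxes_xyxy=[[10.2, 20.7, 110.0, 220.4], [0, 0, 5, 5]],
    confidences=[0.91, 0.30],
    label_ids=[1, 0],
    class_names=["person", "dog"],
)
print(demo)

# Assumed usage with SuperGradients (attribute names may differ by version):
# for image_prediction in out:
#     pred = image_prediction.prediction
#     print(summarize_detections(pred.bboxes_xyxy, pred.confidence, pred.labels,
#                                image_prediction.class_names))
```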

Similarly, for running inference on videos, the API calls remain the same. Only this time, we will use the largest available model.

model = models.get("yolo_nas_l", pretrained_weights="coco").to(device)

model.predict("/test_videos/kitchen_small_items.mp4").save("kitchen_small_items_detections.mp4")

On a free-tier Colab T4 GPU, this inference ran at ~22 iterations/sec, i.e., about 22 FPS. The 30-second video took about 35 seconds to process completely.

Here are some more video inference results on a drone shot:

The “S” small model took 15 seconds, while the “M” medium model took 22 seconds on T4.

Please note that, for now, we’re refraining from a concrete comparison of these models against YOLOv8 and YOLOv6: although YOLO-NAS is better on the reported numbers, the gap is small, so any comparison we make here would have little practical significance. A more meaningful comparison will come in the next post of the YOLO-NAS series, when we train these models on custom tasks and compare the ease and quality of training against the current giants.

When comparing end-to-end inference speed, i.e., pre-processing + forward pass + post-processing, the FP32 YOLO-NAS L model took 24 seconds, while YOLOv8-L took ~12 seconds to process the entire video. The predictions of both models are very similar, and one cannot tell them apart solely by looking at the videos.

Please note that the difference in the video lengths is because YOLOv8-L encoded the video at 29 FPS while YOLO-NAS L encoded it at 29.97 FPS (original).
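
For readers who want to reproduce this kind of end-to-end measurement, the sketch below times all three stages together and reports throughput. The helper name `time_pipeline` and the stand-in stage functions are our own; plug in your real pre-processing, model call, and post-processing. When timing on a GPU with PyTorch, remember that CUDA kernels run asynchronously, so call `torch.cuda.synchronize()` before reading the clock.

```python
import time


def time_pipeline(frames, preprocess, forward, postprocess):
    """Run every frame through all three stages and return (results, seconds, fps)."""
    start = time.perf_counter()
    results = [postprocess(forward(preprocess(frame))) for frame in frames]
    elapsed = time.perf_counter() - start
    fps = len(results) / elapsed if elapsed > 0 else float("inf")
    return results, elapsed, fps


# Stand-in stages so the example runs without a model or a video.
frames = list(range(100))
results, elapsed, fps = time_pipeline(
    frames,
    preprocess=lambda frame: frame / 255.0,
    forward=lambda tensor: tensor * 2.0,
    postprocess=lambda output: round(output, 4),
)
print(f"{len(results)} frames in {elapsed:.4f} s ({fps:.1f} FPS)")
```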

Here’s a table listing all the metrics from YOLOv6 3.0, YOLOv8, and YOLO-NAS:

| Model | AP val 50:95 | Numeric precision | Latency, batch size 1 (ms) | Params (M) |
|---|---|---|---|---|
| YOLOv8-S | 44.9 | FP32 | 3.2 | 11.2 |
| YOLOv6-S | 45.0 | FP32 | 2.9 | 18.5 |
| YOLO-NAS S | 47.5 | FP16 | 3.21 | 19.0 |
| YOLO-NAS S INT-8 | 47.03 | INT8 | 2.36 | 19.0 |
| YOLOv8-M | 50.2 | FP32 | 7.0 | 25.9 |
| YOLOv6-M | 50.0 | FP32 | 5.7 | 34.9 |
| YOLO-NAS M | 51.55 | FP16 | 5.85 | 51.1 |
| YOLO-NAS M INT-8 | 51.0 | INT8 | 3.78 | 51.1 |
| YOLOv8-L | 52.9 | FP32 | 11.0 | 43.7 |
| YOLOv6-L | 52.8 | FP32 | 10.3 | 59.6 |
| YOLO-NAS L | 52.22 | FP16 | 7.87 | 66.9 |
| YOLO-NAS L INT-8 | 52.1 | INT8 | 4.78 | 66.9 |
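
To see what INT8 quantization buys in this table, the short script below computes the latency speedup and the AP drop between the FP16 and INT8 rows of each YOLO-NAS model. The numbers are copied from the table above; the helper name `int8_tradeoff` is our own.

```python
# (FP16 AP, FP16 latency in ms, INT8 AP, INT8 latency in ms), copied from the table above.
YOLO_NAS = {
    "S": (47.5, 3.21, 47.03, 2.36),
    "M": (51.55, 5.85, 51.0, 3.78),
    "L": (52.22, 7.87, 52.1, 4.78),
}


def int8_tradeoff(fp16_ap, fp16_ms, int8_ap, int8_ms):
    """Return (latency speedup factor, AP points lost) when moving from FP16 to INT8."""
    return round(fp16_ms / int8_ms, 2), round(fp16_ap - int8_ap, 2)


for size, row in YOLO_NAS.items():
    speedup, ap_drop = int8_tradeoff(*row)
    print(f"YOLO-NAS {size}: {speedup}x faster, {ap_drop} AP lower with INT8")
```

By these figures, INT8 is roughly 1.4x to 1.6x faster than FP16 while costing about half an AP point or less.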

That’s all we wanted to cover in this quick introduction to, and hands-on with, the newly released and current SOTA model, YOLO-NAS.

Conclusion

The landscape of state-of-the-art (SOTA) object detection models is constantly evolving, driven by relentless research and innovation in computer vision and deep learning. In recent times, YOLOv6 and YOLOv8 have been regarded as the best real-time object detection models openly available. Quite recently, a new competitor, “YOLO-NAS” by Deci.ai, has taken the top spot in terms of real-time object detection capabilities.

In this article, we explored the latest installment of YOLO models, i.e., YOLO-NAS. We briefly covered the architecture search and training details of these new models and their performance. We also ran inference on images and videos.

Soon we’ll be adding new posts for custom training and deployment of these models.

If you conduct any experiments of your own, please share the results with us in the comment section. We would be delighted to hear about them.

In case you missed it, here’s the complete list of posts from our YOLO series:

- YOLOv8 Ultralytics: State-of-the-Art YOLO Models

- Train YOLOv8 on Custom Dataset – A Complete Tutorial

- Deploying a Deep Learning Model using Hugging Face Spaces and Gradio

- YOLOv6 Custom Training for Underwater Trash Detection

- YOLOv6 Object Detector Paper Explanation and Inference

- YOLOX Object Detector and Custom Training on Drone Dataset

- YOLOv7 Object Detector Training on Custom Dataset

- YOLOv7 Object Detector Paper Explanation and Inference

- YOLOv5 Custom Object Detector Training on Vehicles Dataset

- YOLOv5 Object Detection using OpenCV DNN

- YOLOv4 – Training a Custom Pothole Detector

- YOLOR Paper Explanation and Comparison
