AI Agents are usually API-bound workflows, designed to execute specific tasks with minimal human intervention. But when it comes to generic, open-ended automation, we’re still in the very early days. ...

Getting Started with VLM on Jetson Nano

Tiny Vision Language Models (VLMs) are rapidly transforming the AI landscape. Almost every week, new VLMs with smaller footprints are being released. These models are finding applications across ...

VLM on Edge: Worth the Hype or Just a Novelty?

In 2018, Pete Warden from TensorFlow Lite said, “The future of machine learning is tiny.” Today, with AI moving towards powerful Vision Language Models (VLMs), the need for high computing power has ...

AnomalyCLIP: Harnessing CLIP for Weakly-Supervised Video Anomaly Recognition

Video Anomaly Detection (VAD) is one of the most challenging problems in computer vision. It involves identifying rare, abnormal events in videos (such as burglary, fighting, or accidents) amidst ...

Video-RAG: Training-Free Retrieval for Long-Video LVLMs

Long videos are brutal for today’s Large Vision-Language Models (LVLMs). A 30- to 60-minute clip contains thousands of frames, multiple speakers, on-screen text, and objects that appear, disappear, and ...

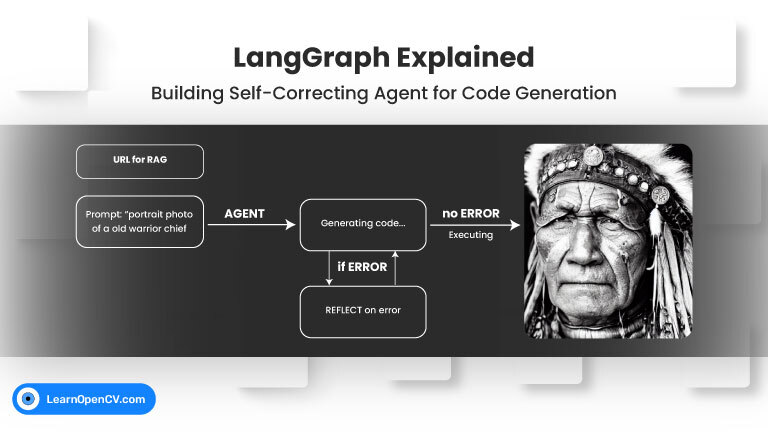

LangGraph: Building a Self-Correcting RAG Agent for Code Generation

Welcome back to our LangGraph series! In our previous post, we explored the fundamental concepts of LangGraph by building a Visual Web Browser Agent that could navigate, see, scroll, and ...