Suppose you have an old childhood photo with your parents that is close to your heart. Unfortunately, some parts of it have become damaged or corrupted over time. But what if I told you that image inpainting can restore those missing or damaged areas and give you a reason to smile again? Cool, right? In this article, we will explore the power of the SDXL inpainting model and its approach to image inpainting. Whether you are a beginner or an expert, it will help you gain knowledge about diffusion models and their applications in image inpainting.

To see the experimental results, scroll down to the concluding part of the article or click here to see them right away. All the code discussed in this article is free for you to grab. Just hit the Download Code button to get started.

What is Image Inpainting?

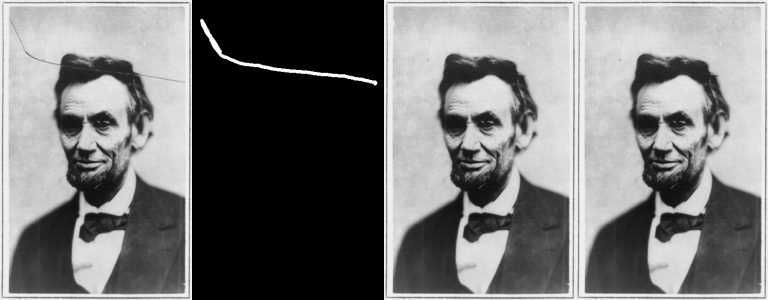

Image inpainting is a method for repairing damaged or missing pixels in images and has a wide range of applications. It’s not just about old photo restoration but also about enhancing photos, removing unwanted objects from photos, fixing old videos, and even generating art. In image inpainting, the primary workflow consists of two parts:

- The damaged or missing regions are identified using a binary mask.

- The missing pixels are filled by propagating information from the image’s neighborhood pixels or generating new pixels from learned features of the image.
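To make the two-step workflow concrete, here is a minimal pure-Python sketch, assuming a tiny grayscale image stored as a list of rows. The function name `inpaint_by_neighbor_average` and the 4-neighbor averaging rule are illustrative choices, not the algorithm used by any particular library.

```python
def inpaint_by_neighbor_average(image, mask, max_passes=100):
    """Fill masked pixels of a grayscale image from already-known neighbors.

    mask[y][x] == 1 marks a missing pixel; 0 marks a known pixel.
    """
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]
    known = [[mask[y][x] == 0 for x in range(width)] for y in range(height)]

    for _ in range(max_passes):
        updates = {}
        for y in range(height):
            for x in range(width):
                if known[y][x]:
                    continue
                # Collect the values of known 4-connected neighbors.
                neighbors = [
                    result[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < height and 0 <= nx < width and known[ny][nx]
                ]
                if neighbors:
                    updates[(y, x)] = sum(neighbors) / len(neighbors)
        if not updates:
            break  # nothing left to fill
        # Apply the pass at once so the fill grows inward layer by layer.
        for (y, x), value in updates.items():
            result[y][x] = value
            known[y][x] = True
    return result


image = [
    [10, 10, 10, 10],
    [10, 0, 0, 10],
    [10, 0, 0, 10],
    [10, 10, 10, 10],
]
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
restored = inpaint_by_neighbor_average(image, mask)
```

The mask decides where to work, and the fill rule decides what to put there. Learned models replace the simple averaging rule with something far more expressive, but the division of labor stays the same.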

You may have seen that your image editing app has a magic erase feature, right? You select the region you want to remove, and it’s magically removed from your photos within a few seconds. This is an image inpainting application where the marked region works as an image mask, and an image inpainting algorithm does the rest of the work. Sounds interesting, right? Nowadays, we have a variety of methods to inpaint images or videos, from classical computer vision methods to GANs and diffusion models. As we learned above, they all follow the same two-step principle under the hood. This article will go deeper into the diffusion part, particularly the SDXL inpainting model.

You might be unfamiliar with the term SDXL inpainting. Don’t worry; we will explore it in detail throughout this blog. But before that, we will get an overview of the older techniques.

Image Inpainting – Classical vs Deep Learning

Using Classical Computer Vision

We have a variety of image inpainting techniques nowadays. But did you know it all started well before the deep learning era? We can use classical computer vision methods to inpaint an image as well. OpenCV provides the cv2.inpaint function out-of-the-box, which supports two popular image inpainting algorithms: Navier-Stokes (cv2.INPAINT_NS) and Telea’s Fast Marching Method (cv2.INPAINT_TELEA).

Navier-Stokes-based Inpainting:

In this method, the algorithm first tries to calculate the image gradients of image pixels around the mask region and get the direction of intensity change of pixel values. Then, it uses a PDE (partial differential equation) to update the image intensity inside the missing region. In simpler terms, it works like fluid flowing into an empty hole and filling it up. The algorithm tries to flow the pixel values into the damaged or missing parts using the direction of intensity change.

Telea (Fast Marching Method):

This method uses a different approach to solve the same problem. It fills the hole from its boundary inward, using the Fast Marching Method to decide the order in which pixels are processed. Each missing pixel is replaced with a weighted average of the known pixels in a small neighborhood around it, with more weight given to pixels that are closer and that lie along the direction of intensity change.
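The weighted-average idea can be sketched in a few lines of pure Python. This is a deliberate simplification: it weights known pixels only by inverse squared distance, whereas the actual Telea method also uses direction and boundary terms and processes pixels in fast-marching order. The function name `weighted_neighborhood_estimate` is hypothetical.

```python
def weighted_neighborhood_estimate(image, mask, y, x, radius=2):
    """Estimate pixel (y, x) from known pixels within `radius`.

    Each known pixel is weighted by 1 / squared distance, so closer
    pixels contribute more. mask[y][x] == 1 marks a missing pixel.
    """
    height, width = len(image), len(image[0])
    weighted_sum = 0.0
    weight_total = 0.0
    for ny in range(max(0, y - radius), min(height, y + radius + 1)):
        for nx in range(max(0, x - radius), min(width, x + radius + 1)):
            distance_sq = (ny - y) ** 2 + (nx - x) ** 2
            if mask[ny][nx] == 1 or distance_sq == 0 or distance_sq > radius ** 2:
                continue  # skip missing pixels, the target itself, and far pixels
            weight = 1.0 / distance_sq
            weighted_sum += weight * image[ny][nx]
            weight_total += weight
    if weight_total == 0.0:
        raise ValueError("no known pixels inside the neighborhood")
    return weighted_sum / weight_total


row_image = [[0, 0, 0, 100]]
row_mask = [[0, 1, 0, 0]]
estimate = weighted_neighborhood_estimate(row_image, row_mask, y=0, x=1, radius=2)
```

In this toy row, the two adjacent dark pixels outweigh the bright pixel two steps away, so the estimate stays close to the dark side.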

We will not go into a detailed explanation of this right now, but you can explore our detailed article about image inpainting using OpenCV for a better idea.

Image Inpainting using Deep Learning

Now, let’s explore what happens if we train a neural network to do the job of these classical computer vision algorithms: predict the missing parts of an image so that the predictions are both visually appealing and contextually appropriate.

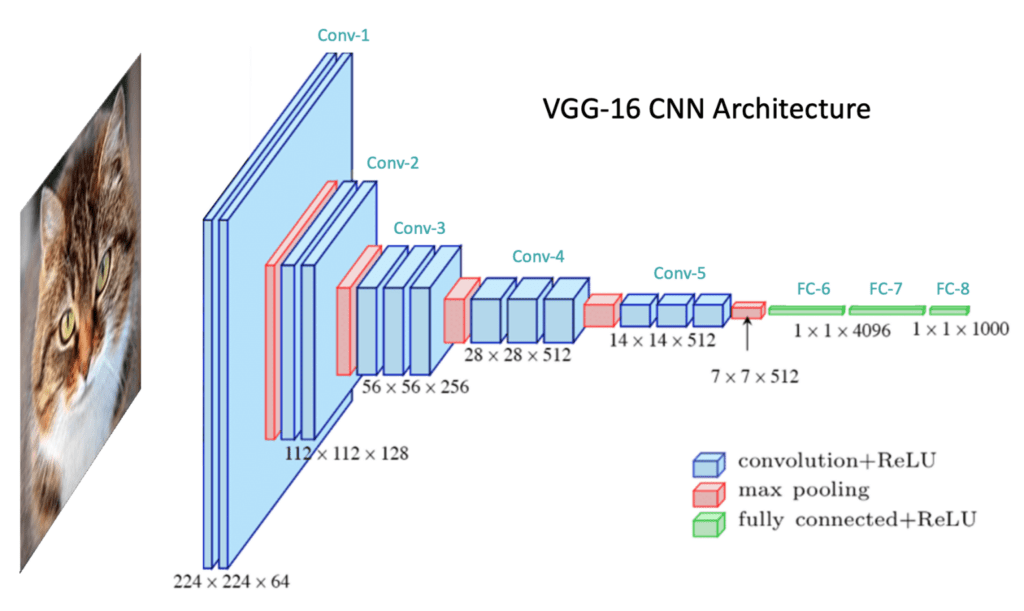

CNN

This is where the Convolutional Neural Network (CNN) comes into the picture; it’s a specialized neural network that extracts and learns features from the non-damaged parts of the image through deep convolutional layers. CNNs are a building block of most deep-learning architectures used for image inpainting nowadays.

Consider how we humans would repaint a damaged part of a picture: we imagine the missing part based on our knowledge base (our understanding of the world). Similarly, a CNN consists of a stack of layers and neurons that learn features or attributes of the image and then use that knowledge to predict the missing parts.

GANs

Now, you may have a question: How does it predict the missing parts? For that, we will go a step ahead and introduce GANs (Generative Adversarial Networks), a training setup that is usually built from CNNs. A GAN mainly consists of 2 parts:

- Generator – It uses the knowledge base to generate an image from random Gaussian noise.

- Discriminator – It works like a judge who tries to classify an image as a real or a fake (generated) sample. If the generated image seems fake, the generator is penalized. Training continues until the discriminator can no longer reliably tell generated images from real ones.
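The tug-of-war between the two parts can be written down as two loss functions. The sketch below assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the discriminator scores are made-up numbers for illustration.

```python
import math


def discriminator_loss(score_real, score_fake):
    """Binary cross-entropy: real images should score 1, generated ones 0."""
    return -(math.log(score_real) + math.log(1.0 - score_fake))


def generator_loss(score_fake):
    """Non-saturating loss: the generator wants its fakes to score as real."""
    return -math.log(score_fake)


# Early in training the discriminator easily spots fakes (hypothetical scores).
early = (discriminator_loss(0.9, 0.1), generator_loss(0.1))

# At equilibrium the discriminator is reduced to guessing (score 0.5 for both).
balanced = (discriminator_loss(0.5, 0.5), generator_loss(0.5))
```

A confident discriminator means a low discriminator loss and a high generator loss, which is exactly the penalty described above. As the generator improves, the two losses move toward the balanced values.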

This principle is the basis for many popular GAN-based inpainting and restoration models, such as CM-GAN and GFP-GAN.

VAE

In the next step, we examine another architecture, usually also built from convolutional layers, called a VAE (variational autoencoder), which takes a different approach to image inpainting:

- Encoder – It’s responsible for learning an appropriate latent space representation of the input data. The encoder effectively compresses the image into a lower-dimensional representation, capturing the essential features needed for the inpainting task.

- Decoder – Following the encoder, the decoder takes the compressed image information from the latent space and reconstructs the image, specifically filling in the missing parts. It expands the compressed features back into a full image, aiming to seamlessly integrate the newly generated parts with the original image portions.

These generative models are built to generate images. But, when we do image inpainting, we usually provide an input image and the mask of the damaged region to the model, and it works on that specific region of the image to generate the missing pixels.

Diffusion Models

We are in the era of Generative AI, so we will introduce the latest approach: Diffusion Models. Diffusion models take a more creative approach to image inpainting. During training, Gaussian noise is gradually added to images, and a network, typically a CNN-based U-Net, learns to undo that noise; at generation time, the model starts from noise and denoises it step by step into an image. Many popular diffusion models, like the denoising diffusion probabilistic model (DDPM) and the latent diffusion model (LDM), are being used nowadays to generate images.

Initially, diffusion models like the Denoising Diffusion Probabilistic Model (DDPM) introduced by Ho et al. in 2020 relied on a process where images gradually transitioned from the original state to a fully noisy state and then back to the original state. For image inpainting, these models are adapted or fine-tuned to generate content only in the masked region while preserving the unmasked region, so that the result blends in with the original image.
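Preserving the unmasked region boils down to a mask-weighted blend of the original and the generated pixels. The following is a minimal sketch with plain Python lists; the helper name `blend_with_mask` is hypothetical, and real pipelines apply the same idea to latents or image tensors.

```python
def blend_with_mask(original, generated, mask):
    """Keep original pixels where mask is 0 and generated pixels where it is 1.

    Fractional mask values mix the two, which softens the seam.
    """
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[10.0, 20.0, 30.0]]
generated = [[99.0, 99.0, 99.0]]
mask = [[0.0, 0.5, 1.0]]
blended = blend_with_mask(original, generated, mask)
```

The first pixel is untouched, the last one is fully replaced, and the middle one is an even mix of both.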

Further refinements addressed the computational demands and limitations of earlier models. For instance, Esser et al. (2021) transitioned the diffusion process to a discrete latent space from pixel space, significantly reducing the computational load and enabling the generation of very high-resolution images. This approach was encapsulated in models like ImageBART, which could produce images of impressive dimensions. The Latent Diffusion Model (LDM) proposed by Rombach et al. (2022) further optimized this concept, reducing training costs and boosting the visual quality of outputs at ultra-high resolutions. For this article, we will use Stable Diffusion XL (SDXL), an LDM and an upgraded version of the Stable Diffusion model.

Now that you know what diffusion models are, you probably still have many questions, like what latent space is, what noise is, what denoising is, and how SDXL works. We will cover all of these in the next section. Let’s look at SDXL’s workflow for image inpainting.

SDXL Inpainting – Architecture Evaluation

Stable Diffusion XL, or SDXL, is a newer and larger version of the Stable Diffusion model. It generates better images at a native resolution of 1024×1024. Its larger, modified architecture allows it to generate accurate images even with a short prompt. We will use this model to do image inpainting, and for that, we have to use a fine-tuned version with additional capabilities for our task.

Thankfully, we don’t need to make all those changes in architecture and train with an inpainting dataset ourselves. The SDXL inpainting model is available out-of-the-box on the Hugging Face Hub, and we are going to use it to run our inference. It is a fine-tuned version of the SDXL base model. We will understand the architecture in 3 steps:

Stable Diffusion Architecture

Stable Diffusion is an LDM (latent diffusion model) that works on the latent space of images rather than on the pixel space. That makes it different from diffusion models like DALL-E 2 and Imagen, which work on the pixel space of images. Working in a latent space instead of pixel space makes Stable Diffusion faster and less memory-hungry.

Now, this latent space is created by an autoencoder. With autoencoders, one can “encode” the data into a much smaller “latent” space and “decode” it back into the original space. That is why the paper behind Stable Diffusion talks about latent diffusion models. This allows us to compress large images into lower dimensions effectively. After that, a U-Net denoises the latents rather than the full-resolution pixels.
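A quick back-of-the-envelope calculation shows how much smaller the latent space is. The sketch assumes the autoencoder downsamples each side by a factor of 8 and produces 4 latent channels, which is the usual Stable Diffusion configuration; both values are parameters here so you can change them.

```python
def latent_shape(height, width, downsample_factor=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for a given image size."""
    return (
        latent_channels,
        height // downsample_factor,
        width // downsample_factor,
    )


def compression_ratio(height, width, image_channels=3):
    """How many times fewer values the latent holds than the RGB image."""
    channels, latent_h, latent_w = latent_shape(height, width)
    return (image_channels * height * width) / (channels * latent_h * latent_w)


sdxl_latent = latent_shape(1024, 1024)
ratio = compression_ratio(1024, 1024)
```

Under these assumptions, a 1024×1024 RGB image becomes a 4×128×128 latent, so the U-Net processes 48 times fewer values per step.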

Now, on a high level, the noising and denoising workflow of Stable Diffusion, or of any other LDM or DDPM, unfolds in two distinct phases:

- In the first part, we take an image and introduce a controlled amount of random noise. This step is referred to as forward diffusion.

- In the second part, we aim to denoise the image and reconstruct the original content. This process is known as reverse diffusion.
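The two phases can be sketched with the closed-form DDPM noising equation, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The linear beta schedule and its endpoints below follow the original DDPM setup and are assumptions for illustration; Stable Diffusion uses its own scheduler settings. In the reverse step, the true noise stands in for what a trained U-Net would predict.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for all s <= t."""
    alphas_bar, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alphas_bar.append(running)
    return alphas_bar


def add_noise(x0, noise, alpha_bar_t):
    """Forward diffusion: jump straight from x0 to the noisy x_t."""
    signal, sigma = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [signal * x + sigma * n for x, n in zip(x0, noise)]


def remove_noise(xt, predicted_noise, alpha_bar_t):
    """Reverse direction: recover x0 given a noise prediction."""
    signal, sigma = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(x - sigma * n) / signal for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
x0 = [0.5, -0.25, 1.0]
noise = [rng.gauss(0.0, 1.0) for _ in x0]
alphas_bar = cumulative_alphas(linear_beta_schedule(1000))

x_mid = add_noise(x0, noise, alphas_bar[500])
# A perfect noise prediction recovers the original values.
x0_recovered = remove_noise(x_mid, noise, alphas_bar[500])
```

As t grows, ᾱ_t shrinks toward zero, so the signal fades and the sample approaches pure Gaussian noise. The quality of the reverse phase depends entirely on how well the network predicts the noise.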

The final piece of the Stable Diffusion architecture is text conditioning, which is critical for generating images based on text prompts. A text embedding model, such as BERT or CLIP (the latter trained on images paired with captions), turns the prompt into token embedding vectors. These vectors are conditioning inputs integrated into the model’s U-Net through a cross-attention mechanism, where the queries come from the image latents and the keys and values come from the text embeddings. Because the model is trained on images together with their captions, it learns to tailor image generation to the textual input provided.
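Cross-attention itself is a small computation: softmax(QKᵀ/√d)·V. The sketch below implements it with plain lists and omits the learned projection matrices that a real U-Net applies to produce queries, keys, and values; the toy vectors are made up.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention of image queries over text keys/values."""
    dim = len(keys[0])
    outputs = []
    for query in queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys
        ]
        weights = softmax(scores)  # one weight per text token, summing to 1
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Two image locations (queries) attending over two text tokens.
queries = [[10.0, 0.0], [0.0, 10.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
attended = cross_attention(queries, keys, values)
```

Each image location pulls in mostly the value of the token whose key matches its query. This is how different parts of the image can be steered by different words of the prompt.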

Improvement in SDXL over standard Stable Diffusion

The term “XL” in SDXL indicates its expanded scale and increased complexity compared to previous SD models. It can produce high-quality 1024×1024 images from a short text prompt within a few seconds. Now, we will look at the main improvements of SDXL over Stable Diffusion:

- Increased U-Net Parameters: SDXL increases its model capacity with a U-Net of about 2.6B parameters, roughly three times larger than in earlier Stable Diffusion versions, allowing for more sophisticated image generation.

- Varied Distribution of Transformer Blocks: Unlike previous models, which had a uniform distribution of transformer blocks ([1,1,1,1]), SDXL adopts a heterogeneous distribution ([0,2,10]), shifting most of the transformer computation to the lower-resolution feature levels of the U-Net.

- Enhanced Text Conditioning Encoder: SDXL uses a bigger text conditioning encoder, OpenCLIP ViT-bigG, to effectively incorporate textual information into the image generation process.

- Additional Text Encoder: SDXL adds a second text encoder, CLIP ViT-L, whose output enriches the conditioning process with complementary textual features.

- Size-Conditioning: This conditioner takes the original training image’s width and height as conditional input, enabling the model to adapt its image generation based on the desired size.

- Crop-Conditioning: SDXL introduces the “Crop-Conditioning” parameter, incorporating image cropping coordinates as conditional input.

- Specialized Refiner Model: SDXL introduces a second SD model specialized in handling high-quality, high-resolution data; essentially, it is an img2img model that effectively captures intricate local details.
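The size- and crop-conditioning items in the list above amount to handing the model a few extra numbers alongside each training crop. Here is a minimal sketch of that bookkeeping; `crop_with_conditioning` is a hypothetical helper, and how the numbers are embedded and fed into the U-Net is left out.

```python
def crop_with_conditioning(original_size, crop_top_left, crop_size):
    """Return the crop box and the conditioning values recorded with it.

    original_size is (height, width) of the image before any resizing or
    cropping; crop_top_left is (top, left) of the crop window.
    """
    height, width = original_size
    top, left = crop_top_left
    if top + crop_size > height or left + crop_size > width:
        raise ValueError("crop window falls outside the image")
    box = (top, left, top + crop_size, left + crop_size)
    # The model sees these numbers, so it can learn what a cropped or
    # low-resolution source looks like and avoid it when asked to.
    conditioning = [height, width, top, left]
    return box, conditioning


box, conditioning = crop_with_conditioning(
    original_size=(1536, 2048), crop_top_left=(256, 512), crop_size=1024
)
```

At inference time, requesting a large original size and crop coordinates of (0, 0) nudges the model toward sharp, well-centered subjects.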

Now, the main architectural workflow of SDXL looks very similar to that of Stable Diffusion. The main difference is that SDXL comprises two distinct models that work one after the other:

- Initially, the base model is deployed to establish the overall composition of the image.

- Following this, an optional refiner model can be applied, which adds more intricate details to the image.

The basic architecture of the model follows:

1. Text Encoder (CLIP) :

The text encoder in SDXL processes textual input using the two CLIP models to create embeddings that capture the text’s semantic content.

Text Embedding Creation: The text prompt is fed into both the OpenCLIP ViT-bigG and CLIP ViT-L models. These models analyze the text and convert it into high-dimensional vectors or embeddings that represent its content and context.

Text and Image Conditioning: These embeddings act as conditional inputs in the image generation process. They guide the diffusion model by providing textual context that should be reflected in the generated images, ensuring the output closely aligns with the semantic meaning of the text.
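One way to picture how the two encoders are combined is as a per-token concatenation along the channel axis. The sketch assumes hidden sizes of 768 for CLIP ViT-L and 1280 for OpenCLIP ViT-bigG, and a context length of 77 tokens; the zero and one vectors are placeholders for real encoder outputs.

```python
CLIP_VIT_L_DIM = 768      # assumed hidden size of CLIP ViT-L
OPENCLIP_BIGG_DIM = 1280  # assumed hidden size of OpenCLIP ViT-bigG
NUM_TOKENS = 77           # assumed CLIP context length


def concat_token_embeddings(embeds_a, embeds_b):
    """Join two per-token embedding sequences along the channel axis."""
    if len(embeds_a) != len(embeds_b):
        raise ValueError("both encoders must produce the same number of tokens")
    return [a + b for a, b in zip(embeds_a, embeds_b)]


# Placeholder embeddings standing in for the two encoder outputs.
vit_l_embeds = [[0.0] * CLIP_VIT_L_DIM for _ in range(NUM_TOKENS)]
bigg_embeds = [[1.0] * OPENCLIP_BIGG_DIM for _ in range(NUM_TOKENS)]

text_context = concat_token_embeddings(vit_l_embeds, bigg_embeds)
```

Under these assumptions, every token ends up with a 2048-dimensional embedding, which is what the cross-attention layers of the U-Net attend over.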

2. U-Net – Latent Space:

The U-Net architecture in SDXL is central to the actual image generation and transformation:

Mixing Text and Image Embeddings in Latent Space: Initially, a latent representation of an image (which can start as random noise) is manipulated step-by-step. The U-Net uses the text embeddings from the CLIP models to condition this manipulation, ensuring that the transformations at each step are in line with the text’s semantic content.

Controlled Noise Generation and Reduction: Diffusion has two directions. In forward diffusion, noise is added in a controlled manner according to a fixed schedule, moving from a less noisy state to a more noisy state; the U-Net plays no part in adding it. In reverse diffusion, the U-Net predicts the noise present at each step so that it can be removed, moving from a noisy state back to a clear state and progressively reconstructing the image detail.

3. Auto Encoder-Decoder (Variational Autoencoder – VAE) :

SDXL uses a separate VAE alongside the U-Net. The VAE translates between pixel space and the latent space in which the U-Net operates:

Encoding to Latent Space: Before the diffusion process, the VAE encoder maps the image data into a latent space, which is a compressed representation of the image data. This latent space captures the essential features needed to reconstruct the image but in a more manageable form that is easier to manipulate.

Decoding from Latent Space to Pixel Space: After the diffusion process (specifically, the reverse diffusion phase), the VAE decoder takes the denoised latent representations and reconstructs them back into pixel space, forming the final image. This step is critical as it transforms the abstract latent features back into a coherent and visually recognizable image.

Now, we will see what is different in the finetuned SDXL Inpainting model in the next section.

Modifications in SDXL inpainting Architecture

The HuggingFace Diffusers team finetuned the SDXL Base model for image inpainting. With the SDXL inpainting model, we can not only restore old photos but also generate new objects or replace any object within the image by using a mask. It’s like having a skilled Photoshop artist at your side. Cool, right?

The SD-XL Inpainting 0.1 model was initialized with the stable-diffusion-xl-base-1.0 weights. The model was trained for 40k steps at a resolution of 1024×1024, with 5% dropping of the text-conditioning to improve classifier-free guidance sampling. For inpainting, the UNet has 5 additional input channels (4 for the encoded masked image and 1 for the mask itself) whose weights were zero-initialized after restoring the non-inpainting checkpoint. During training, the Diffusers team generated synthetic masks and, in 25% of cases, masked everything.
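The channel arithmetic is easy to check: 4 noisy latent channels plus the 5 additional ones give a 9-channel U-Net input. The sketch below uses nested lists in place of tensors; the concatenation order (latents, mask, masked-image latents) and the 128×128 latent size are assumptions for illustration.

```python
def zeros(channels, height, width):
    """A channels x height x width block of zeros as nested lists."""
    return [[[0.0] * width for _ in range(height)] for _ in range(channels)]


def build_inpainting_unet_input(noisy_latents, mask, masked_image_latents):
    """Concatenate along the channel axis: 4 + 1 + 4 = 9 channels."""
    spatial = (len(noisy_latents[0]), len(noisy_latents[0][0]))
    for block in (mask, masked_image_latents):
        if (len(block[0]), len(block[0][0])) != spatial:
            raise ValueError("all inputs must share the same spatial size")
    return noisy_latents + mask + masked_image_latents


latent_size = 128  # 1024 / 8, assuming an 8x VAE downsampling factor
unet_input = build_inpainting_unet_input(
    noisy_latents=zeros(4, latent_size, latent_size),
    mask=zeros(1, latent_size, latent_size),  # mask resized to latent resolution
    masked_image_latents=zeros(4, latent_size, latent_size),
)
```

Because the weights for the 5 new channels start at zero, the fine-tuned model initially behaves exactly like the base checkpoint and learns to use the mask information gradually.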

Now that we have a clear idea of the SDXL inpainting model, let’s move on to the next section and see the dataset used to train it.

SDXL Inpainting – Dataset Visualization

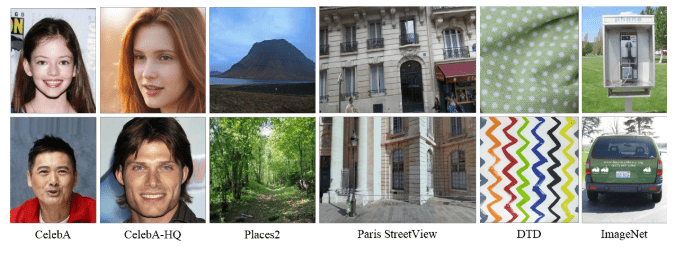

For image inpainting, six public datasets are widely used. These datasets cover various types of images, including faces (CelebA and CelebA-HQ), real-world scenes (Places2), street scenes (Paris StreetView), textures (DTD), and objects (ImageNet).

The CelebA dataset consists of 202,599 facial images across 10,177 identities, each annotated with 40 attributes. Its high-quality counterpart, CelebA-HQ, enhances 30,000 of these images by removing artifacts and applying super-resolution. The Places2 dataset offers a vast collection of 1.8 million images across 365 scene categories aimed at scene recognition tasks. The Paris StreetView dataset provides street-level imagery with 14,900 images for training and 100 for testing, and the DTD dataset includes 5,640 images categorized into 47 texture types based on human perception. Lastly, the ImageNet dataset is a benchmark for object category classification with approximately 1.2 million training images and 50,000 validation images, supporting a wide range of machine learning and computer vision applications.

But Stable Diffusion models are trained on much larger and more general datasets. The exact SDXL training set has not been published in detail; the earlier Stable Diffusion checkpoints were trained on several large datasets collected by LAION, a nonprofit organization:

- LAION-2B-EN: A set of 2.3 billion English-captioned images from LAION-5B’s full collection of 5.85 billion image-text pairs.

- LAION-High-Resolution: A subset of LAION-5B with 170 million images greater than 1024×1024 resolution (downsampled to 512×512).

- LAION-Aesthetics v2 5+: This is a 600 million image subset of LAION-2B-EN with a predicted aesthetics score of 5 or higher, with low-resolution and likely watermarked images filtered out.

The image above, from a data explorer created by Waxy.org, covers only a smaller 12 million image subset of LAION-Aesthetics v2 6+, with a predicted aesthetic score of 6 or higher. It is meant to be a representative sample of the images used to train the last three checkpoints of the original Stable Diffusion release.

SDXL Inpainting – Code Pipeline

After learning all the concepts and theories, let’s move on to the coding part. We will use HuggingFace Diffusers to create the code pipeline and Google Colab’s free NVIDIA Tesla T4 GPU with 16 GB of VRAM to run the inference. First, we will complete the installation steps:

! pip install diffusers -q

! pip install accelerate -q

We install two popular HuggingFace libraries: diffusers, to load our SDXL inpainting model and build the inference pipeline, and accelerate, to make the inference fast and optimized on the GPU.

Then we import the packages into our code:

from diffusers import AutoPipelineForInpainting

from diffusers.utils import load_image, make_image_grid

import torch

We import AutoPipelineForInpainting to load our model and build the pipeline, load_image to load our input image and mask image, and make_image_grid to visualize the output of the inference. We also import torch (PyTorch) to set the model precision and define a generator object.

pipe = AutoPipelineForInpainting.from_pretrained("diffusers/stable-diffusion-xl-1.0-inpainting-0.1", torch_dtype=torch.float16, variant="fp16").to("cuda")

We use the AutoPipelineForInpainting class to load the pre-trained SDXL inpainting model in half precision (float16) and move it to the GPU.

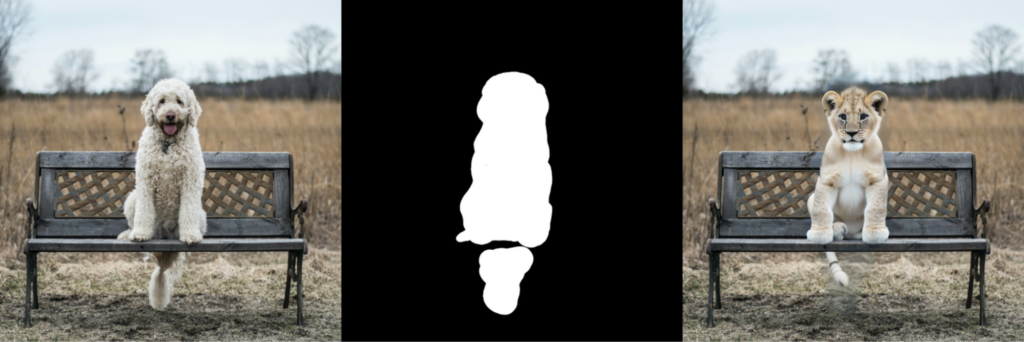

img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

input_image = load_image(img_url).resize((1024, 1024))

mask_image = load_image(mask_url).resize((1024, 1024))

We load the input image and the mask image. Then, we resize both to (1024, 1024) because our SDXL inpainting model was trained at this resolution and may not generate proper results with images of other resolutions.

Although we provide input images with the downloaded code, we suggest you download any image, create a binary mask, and experiment with the model to get excellent results. Along with the code, we provide a Python script for creating the binary mask; you can download both by clicking the Download Code button.
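If you just want a quick mask to experiment with, a rectangular one is enough. The sketch below is not the script from the download; it is a standard-library-only illustration with hypothetical helper names, following the convention that white (255) marks the region to repaint and black (0) marks the region to keep.

```python
def make_rectangle_mask(height, width, box):
    """Binary mask with 255 inside box = (left, top, right, bottom), else 0."""
    left, top, right, bottom = box
    return [
        [255 if top <= y < bottom and left <= x < right else 0 for x in range(width)]
        for y in range(height)
    ]


def mask_to_pgm(mask):
    """Serialize the mask as a plain-text PGM (P2) image."""
    height, width = len(mask), len(mask[0])
    rows = "\n".join(" ".join(str(value) for value in row) for row in mask)
    return f"P2\n{width} {height}\n255\n{rows}\n"


# A tiny 6x4 mask; for SDXL you would use 1024x1024 and a box around
# the object you want to replace.
mask = make_rectangle_mask(height=4, width=6, box=(1, 1, 4, 3))
pgm_text = mask_to_pgm(mask)
```

Writing `pgm_text` to a `.pgm` file gives an image most viewers and imaging libraries can open, which you can then convert to PNG and resize alongside the input image.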

prompt = "a cute baby lion sitting on a park bench"

negative_prompt = "bad anatomy, deformed, ugly, disfigured"

generator = torch.Generator(device="cuda").manual_seed(0)

Before we run our main image inpainting pipeline, we set our prompt and negative_prompt. Then, we create our generator object with a manual seed to ensure that the operations involving randomness are reproducible.

image = pipe(

prompt=prompt,

negative_prompt=negative_prompt,

image=input_image,

mask_image=mask_image,

guidance_scale=8.0, # affects the alignment between the text prompt and the generated image.

num_inference_steps=20, # steps between 15 and 30 work well for us

strength=0.99, # make sure to use `strength` below 1.0

generator=generator,

padding_mask_crop=32 #Crops masked area + padding from image and mask.

).images[0]

Now, we call the pipeline to run the inference. Let’s take a look at the parameters and their role in the image inpainting process:

num_inference_steps:

- Controls the number of denoising steps during image generation.

- Higher steps improve image quality by allowing more iterations to refine the output but increase response time.

strength:

- Determines the noise level added to the base image, influencing how much the inpainted region resembles the original.

- A value of 1 indicates no trace of the original is left, while 0 reconstructs the original region.

- Higher strength results in more noise and deviation from the base, enhancing creativity but requiring more processing time.
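The link between strength and processing time can be made explicit. The sketch assumes the pipeline skips the first (1 − strength) fraction of the schedule and only runs the remaining steps, which is how image-to-image style pipelines in Diffusers generally behave; `effective_denoising_steps` is a hypothetical helper, not a library function.

```python
def effective_denoising_steps(num_inference_steps, strength):
    """Approximate number of denoising steps that actually run."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


steps_used = effective_denoising_steps(num_inference_steps=20, strength=0.99)
half_strength = effective_denoising_steps(num_inference_steps=20, strength=0.5)
```

With the settings used in this article, 19 of the 20 scheduled steps run, while a strength of 0.5 would run only 10 and stay much closer to the original pixels.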

guidance_scale:

- Controls how closely the generated image aligns with the text prompt.

- Higher values enforce a stricter adherence to the prompt, reducing creativity.

- Lower values allow for more varied and creative outputs.
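Under the hood, guidance_scale is the weight in classifier-free guidance: at each step the model makes one noise prediction with the prompt and one without (or with the negative prompt), and the scale extrapolates from the second toward the first. A minimal sketch with made-up prediction values:

```python
def apply_guidance(noise_uncond, noise_text, guidance_scale):
    """Classifier-free guidance: uncond + scale * (text - uncond)."""
    return [
        uncond + guidance_scale * (text - uncond)
        for uncond, text in zip(noise_uncond, noise_text)
    ]


noise_uncond = [0.0, 1.0]  # prediction without the prompt (hypothetical values)
noise_text = [1.0, 1.0]    # prediction with the prompt (hypothetical values)

guided = apply_guidance(noise_uncond, noise_text, guidance_scale=8.0)
unguided = apply_guidance(noise_uncond, noise_text, guidance_scale=1.0)
```

A scale of 1 simply returns the prompt-conditioned prediction. Larger values exaggerate whatever the prompt changed, which is why very high scales follow the prompt closely but can look oversaturated.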

negative_prompt:

- It serves to guide the model on what to avoid during image generation.

- It helps steer clear of unwanted visual elements, enhancing image quality with minimal effort.

padding_mask_crop:

- Crops the masked area with specified padding from both the image and the mask.

- The cropped area is upscaled and overlaid onto the original image, improving quality without complex processes.
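The crop region can be pictured as the bounding box of the mask, grown by the padding and clamped to the image. The sketch below is a simplification with a hypothetical helper name; the actual pipeline may additionally adjust the box, for example to match the target aspect ratio.

```python
def padded_mask_box(mask, padding):
    """Return (left, top, right, bottom) around all nonzero mask pixels."""
    height, width = len(mask), len(mask[0])
    rows = [y for y in range(height) if any(mask[y])]
    cols = [x for x in range(width) if any(mask[y][x] for y in range(height))]
    if not rows:
        raise ValueError("mask is empty")
    # Grow the tight bounding box by `padding`, staying inside the image.
    left = max(cols[0] - padding, 0)
    top = max(rows[0] - padding, 0)
    right = min(cols[-1] + 1 + padding, width)
    bottom = min(rows[-1] + 1 + padding, height)
    return left, top, right, bottom


mask = [[0] * 10 for _ in range(10)]
for y in (4, 5):
    for x in (3, 4, 5, 6):
        mask[y][x] = 1

tight_box = padded_mask_box(mask, padding=2)
clamped_box = padded_mask_box(mask, padding=32)
```

A small masked object in a large image benefits most from this: the model spends its full resolution on the cropped area instead of a handful of pixels.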

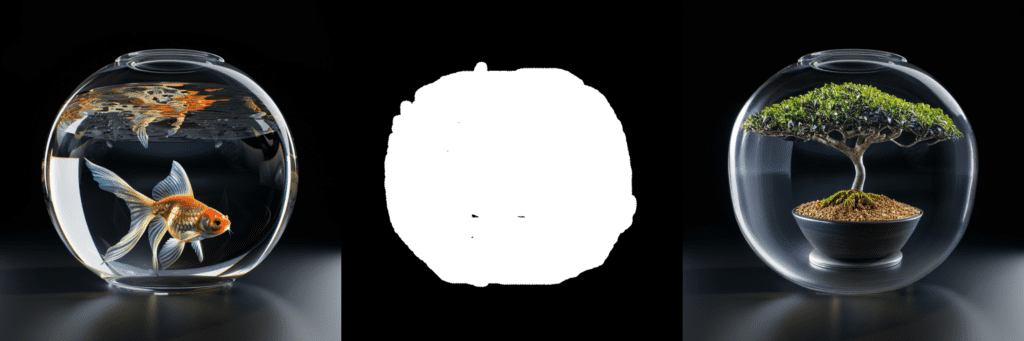

make_image_grid([input_image,mask_image,image], rows=1, cols=3)

After the image generation process, we call the make_image_grid function to display the input image, the mask, and our result side by side.

Now, let’s move to the most interesting part: results!

SDXL Inpainting – Inference

Now, we look into the inference results that we gathered. SDXL produces high-quality images, so the SDXL inpainting model works like a magician here. Don’t believe it? See the results:

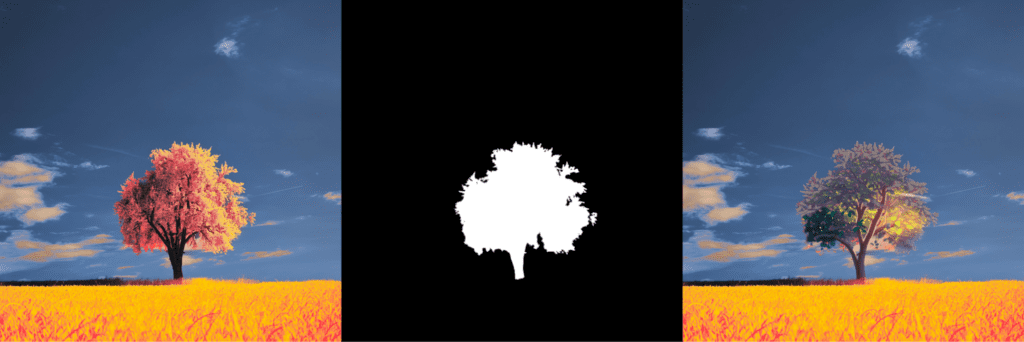

Cool, right? Now, we will see how to improve the results by tuning the parameters. For that, we need to look at three main parameters:

steps:

num_inference_steps controls the number of denoising steps during the image generation process. See the result comparison when increasing the steps gradually:

Prompt = oil painting of a lion face on a t shirt

As the number of steps increased, the output quality improved. You need to tune this according to your input image and find the best fit for your output.

guidance_scale:

It controls the alignment of the generated image with the text prompt. We will now gradually increase the scale and compare the results:

Prompt = oil painting of a lion face on a t shirt

Here, increasing the scale makes the painted region more pronounced and more faithful to the prompt.

strength:

It controls the amount of noise added to the input image. We gradually increased the strength, and the results are here:

Prompt = oil painting of a lion face on a t shirt

With increasing strength, the inpainted region followed the prompt more closely and became more distinct from the input image.

After a lot of experiments, these are the parameter values that worked best for me:

guidance_scale=20.0

strength=0.99

num_inference_steps=100

The result I got:

Have you ever wondered what the diffusion process for SDXL inpainting looks like under the hood?

So it’s magical, right? It’s all about tuning all the parameters again and again to get the perfect result. Why don’t you download the code and try it yourself?

SDXL inpainting – Key Takeaways

Here are three key takeaways from the article:

SDXL inpainting – Robust Architecture

The SDXL inpainting model features a robust architecture that builds upon the foundational principles of Stable Diffusion. It incorporates advanced mechanisms like a larger U-Net, varied distribution of Transformer blocks, and enhanced text conditioning encoders. This architecture allows for more efficient and precise image generation, specifically tailored for tasks like image inpainting.

SDXL inpainting – High-Quality Image Generation

SDXL produces high-resolution images that maintain a high degree of fidelity to the original photos. The model’s ability to handle complex inpainting tasks, such as restoring old photos or modifying image content seamlessly, is a testament to its sophisticated generative capabilities. The integration of additional input channels and specialized refiner models further enhances the quality and detail of the inpainted images.

SDXL inpainting – Advancement in Image Inpainting

The SDXL model represents a significant advancement in image inpainting. By leveraging latent diffusion techniques and a fine-tuned approach to specific inpainting tasks, it offers a more creative and effective solution than traditional methods. This model not only restores damaged parts of images but also has the potential to create new elements within the images, broadening the scope of creative digital editing.

Conclusion

In conclusion, SDXL inpainting shows how much image restoration technology has improved. Using Stable Diffusion XL’s strong capabilities, it offers an easy way to fix and improve images with accuracy. Whether you want to restore old photos or try new creative ideas, SDXL inpainting helps you get professional-quality results without being complicated. It’s a step forward in digital image editing, making every pixel contribute to perfect visuals.
