Arguably, the most crucial task in Deep Learning-based Multiple Object Tracking (MOT) is not to identify an object, but to re-identify it after occlusion. There is a plethora of trackers available, but not all of them have a good re-identification pipeline. In this blog post, we focus on one such tracker, FairMOT, which revolutionised the joint optimisation of the detection and re-identification tasks in tracking.

- Multiple Object Tracking Recap

- Need for FairMOT

- Problems FairMOT Tackles

- FairMOT Architecture

- Data Association in FairMOT

- FairMOT Results on Public Datasets

- Conclusion

- References

1. Multiple Object Tracking Recap

Building on our previous post about MOT and DeepSORT, let us recap a few concepts.

- Types of trackers: Trackers can be classified by the following attributes:

- Number of objects tracked-

- Single Object Trackers include legacy OpenCV trackers like CSRT, KCF, etc. These work by initialising the object in the first frame and tracking it throughout the sequence.

- Multiple Object Trackers include deep learning trackers which are trained on a dataset to track multiple objects of the same or different classes. These include DeepSort, JDE, FairMOT, etc.

- Inclusion of a detection pipeline-

- Traditionally, box coordinates are manually initialised around the objects in the first frame. Then, these objects are tracked in the next frames. These are trackers without a detection pipeline.

- Recent algorithms have an object detection pipeline, built along with an association stage to get better tracking results. These are trackers with a detection pipeline.


- Metrics for tracking:

- Multiple Object Tracking Accuracy (MOTA) combines false positives, missed targets and identity switches.

Here, $m_t$, $fp_t$, and $mme_t$ are the number of misses, false positives, and mismatches, respectively, at time $t$, and $g_t$ is the number of ground-truth objects present at time $t$.

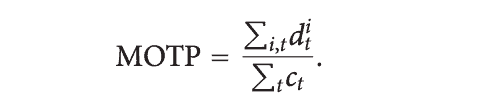

- Multiple Object Tracking Precision (MOTP) calculates the misalignment between ground truth and predicted boxes.

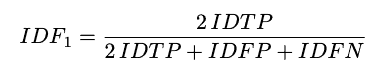

Here, $c_t$ is the number of matches found at time $t$, and for each of these matches, $d_t^i$ is the distance between the object $o_i$ and its corresponding hypothesis.

- IDF1 score (IDF1) is the ratio of correctly identified detections over the average of ground-truth and predicted detections:

$$\text{IDF1} = \frac{2\,\text{IDTP}}{2\,\text{IDTP} + \text{IDFP} + \text{IDFN}}$$

Here, IDTP is Identity True Positives, IDFP is Identity False Positives, and IDFN is Identity False Negatives.

- ID Switching (IDSw.) is the total number of ID switches in the video stream.


- Drawbacks of DeepSORT

Even though DeepSORT is one of the most popular trackers, it has many drawbacks. Some of the cases where DeepSORT fails are:

- ID switching,

- Bad occlusion handling,

- Motion blur, etc.

The metrics we calculated for DeepSORT were not encouraging either: the average accuracy we obtained was 28.6, which is very low.
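The MOTA and IDF1 definitions recapped above reduce to simple arithmetic on error counts. The sketch below assumes the standard MOTA formula, 1 − Σₜ(mₜ + fpₜ + mmeₜ) / Σₜ gₜ, where gₜ is the number of ground-truth objects at time t, and IDF1 = 2·IDTP / (2·IDTP + IDFP + IDFN). The function names and the counts in the example are hypothetical.

```python
def mota(misses, false_positives, mismatches, ground_truth):
    """MOTA from per-frame counts; each argument has one entry per frame t."""
    errors = sum(misses) + sum(false_positives) + sum(mismatches)
    # Can go negative when there are more errors than ground-truth objects.
    return 1.0 - errors / sum(ground_truth)


def idf1(idtp, idfp, idfn):
    """IDF1: identity true positives over the mean of GT and predicted detections."""
    return 2 * idtp / (2 * idtp + idfp + idfn)


# Hypothetical 3-frame sequence with 10 ground-truth objects per frame.
print(mota(misses=[1, 2, 0], false_positives=[0, 1, 1],
           mismatches=[0, 1, 0], ground_truth=[10, 10, 10]))  # 6 errors / 30 objects
print(idf1(idtp=25, idfp=5, idfn=5))
```

ID switches are usually far fewer than misses and false positives, so MOTA tends to be dominated by detection quality. This is why the identity-focused IDF1 is usually reported alongside it.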

2. Need for FairMOT

FairMOT was introduced to tackle the re-identification problem. Most Deep Learning Multiple Object Trackers handle the detection task well, but struggle with the re-identification task. This is mainly due to three reasons.

- First, they treat re-identification as a secondary task. As a result, re-ID accuracy depends on the primary detection task. This is also known as the ‘cascading effect’.

- Second, the re-ID embeddings are computed from the object detector’s high-level ‘deep’ features. Research suggests that using low-level features gives better results, as they help discriminate between different subjects of the same class.

- Third, the re-ID feature dimension was too high, around 512 or 1024, much higher than that of the detection features. Such a large mismatch between the dimensions harms the performance of both tasks. Furthermore, low-dimensional re-ID features have been found empirically to achieve both higher tracking accuracy and higher efficiency.

More on these later. Multiple object trackers can be grouped into the following categories:

- Generic tracker

Block diagram of a generic tracker

- The input image is passed to an object detection model.

- The model localises the boxes, and passes the results to the association stage.

- The association stage is usually made up of a Kalman Filter paired with the Hungarian algorithm, which gives us the final tracking results.

Drawbacks:

- No re-ID after occlusion: A unique ID can be assigned to each object, but if the object detector ever fails, the object's ID is lost. There is no mechanism in place to retrieve it.
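The association stage of such a generic tracker can be sketched as follows. It builds an IoU-based cost matrix between the predicted track boxes and the new detections, then solves the assignment problem. To stay dependency-free, the sketch replaces the Hungarian algorithm with a brute-force search over permutations. This returns the same optimal matching but only scales to a handful of boxes; in practice, `scipy.optimize.linear_sum_assignment` is the usual solver. The (x1, y1, x2, y2) box format, the `min_iou` threshold, and the function names are assumptions made for illustration. Because there is no appearance term, a track that is not matched simply stays unmatched, which is exactly the drawback described above.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Match predicted track boxes to detections by minimising total (1 - IoU).

    Brute-force stand-in for the Hungarian algorithm: exact, but only
    practical for a handful of boxes.
    """
    cost = [[1.0 - iou(t, d) for d in detections] for t in tracks]
    n_t, n_d = len(tracks), len(detections)
    small, large = min(n_t, n_d), max(n_t, n_d)
    best_total, best_pairs = float("inf"), []
    for perm in permutations(range(large), small):
        # Pair each element of the smaller side with a distinct one of the larger side.
        if n_t <= n_d:
            pairs = [(t, d) for t, d in enumerate(perm)]
        else:
            pairs = [(t, d) for d, t in enumerate(perm)]
        total = sum(cost[t][d] for t, d in pairs)
        if total < best_total:
            best_total, best_pairs = total, pairs
    # Reject matches with too little overlap; those tracks/detections stay unmatched.
    return [(t, d) for t, d in best_pairs if 1.0 - cost[t][d] >= min_iou]


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]       # Kalman-predicted boxes
detections = [(21, 21, 31, 31), (1, 1, 11, 11)]   # detector output
print(associate(tracks, detections))  # [(0, 1), (1, 0)]
```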

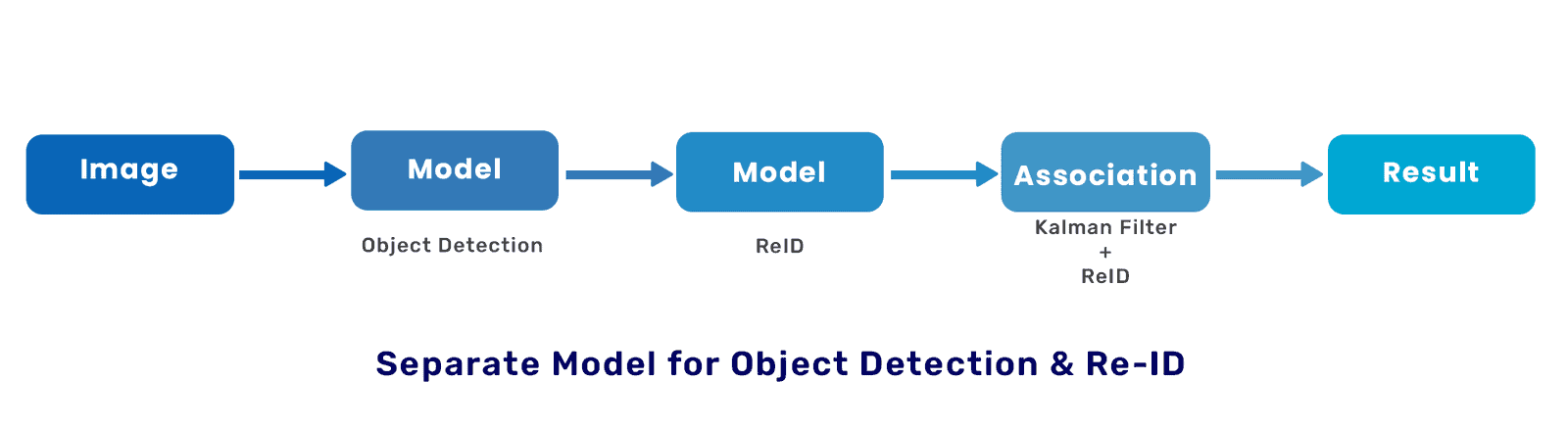

- Separate model for Object Detection and Re-ID

Block Diagram for tracking algorithm with a separate model for the Object Detection and the Re-ID task.

- The input image is passed to an object detection model.

- The object detection model localises the boxes and passes the results to the dedicated re-ID model.

- This model calculates the re-ID features on the detected object boxes.

- Both the results from the object detection model and the re-ID model are passed to the association stage.

- This approach works better than the previous one, as the association stage now has re-ID features to recover the object IDs.

Drawbacks:

- Low inference speed: Since there are two models, it is difficult to get real-time performance. This is especially true when the number of objects is high, since re-ID features need to be calculated for each bounding box separately.

- Low accuracy: The results of the object detection model are the input to the re-ID model. If problems exist in the object detection stage, the re-ID stage will suffer. This phenomenon is known as the cascading effect.

Murphy’s Law states, “Anything that can go wrong will go wrong.”

- Single model for OD and Re-ID (One-Shot tracker)

Block diagram of a One-Shot tracker, which performs joint detection and tracking in a single network.

- The input image is passed to the joint object detector and re-ID model.

- The model outputs both the object bounding boxes (anchors) and the re-ID features for each object (bounding box).

- These outputs are sent to the association stage, which uses re-ID features to recover the lost tracklets.

- It has reduced inference time, since it reuses the backbone features for the re-ID task.

Drawbacks:

- Overlooked re-ID task: Object detection anchors are used to calculate the re-ID embeddings. Anchors can create quite a few problems (more on them later), and when the two tasks compete, the model favours the detection task.

- ID switching: In theory, this approach sounds the best of all, but in practice (Voigtlaender et al., 2019) the results were subpar: instead of decreasing, the number of ID switches increases by a large margin.

Even though this is the right track, it is far from the best. A naive combination of detection and re-ID is not fruitful, and the joint design must be handled carefully.

P.S. The above list does not include Single Object Tracking or OpenCV's MultiTracker function.

3. Problems FairMOT tackles

3.1 Problems with anchors

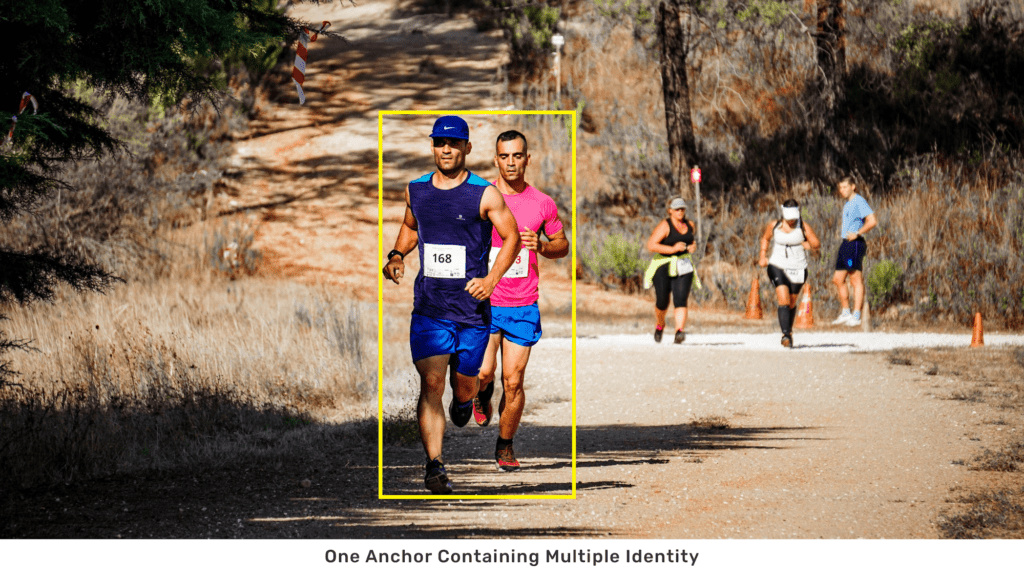

Anchors introduce a lot of ambiguities during the re-ID feature training or extraction. This is due to two reasons.

- Multiple identities correspond to a single anchor

When the subjects overlap, one anchor box can contain multiple identities. Sending these anchor coordinates, or the corresponding image region, to the re-ID task results in defective feature embeddings.

- Multiple anchors correspond to a single identity

Anchor-based object detection models output thousands of irrelevant boxes, so we need to apply Non-Maximum Suppression (NMS) to get rid of them. Sometimes, not all of the redundant boxes get suppressed, and we end up with multiple anchors for a single subject. Passing multiple boxes of the same subject to the re-ID task also leads to anomalies.
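The sketch of standard greedy NMS below shows how such a duplicate survives. A second box on the same subject is suppressed only if its IoU with a higher-scoring kept box exceeds the threshold, so a looser box around the same person can slip through. The boxes, the scores, and the 0.5 threshold are made-up values for illustration.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # A box survives unless it overlaps a higher-scoring kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 20),    # tight box around a person
         (-5, -5, 15, 30),  # looser box around the same person (IoU ~0.29)
         (40, 0, 50, 20)]   # a different person
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))                      # [0, 1, 2]: the duplicate survives
print(nms(boxes, scores, iou_threshold=0.25))  # [0, 2]
```

Lowering the threshold removes this duplicate, but it also risks suppressing genuinely distinct people who stand close together. No single threshold fixes both cases.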

3.2 Feature sharing between object detection and re-ID

Studies show that object detection and re-ID need different features for their respective tasks. The earlier one-shot tracker approach used the same object detection features for the re-ID task as well. Re-ID, however, needs more low-level features, because it has to discriminate between objects of the same class, whereas an object detector needs to discriminate between different classes.

3.3 Problems with re-ID feature dimension

The earlier approaches used high-dimensional re-ID features, around 512 or 1024 dimensions. This was unnecessary. Learning low-dimensional re-ID features causes less harm to the detection accuracy and improves the inference speed, since comparing large embeddings takes longer, especially when the number of candidates is high. The MOTA score also improves when the re-ID feature dimension is lowered from 512 to 64. This is mainly because a lower dimension reduces the conflict between the detection and re-ID tasks: the detection result (AP) also improves as the dimension of the re-ID features decreases.

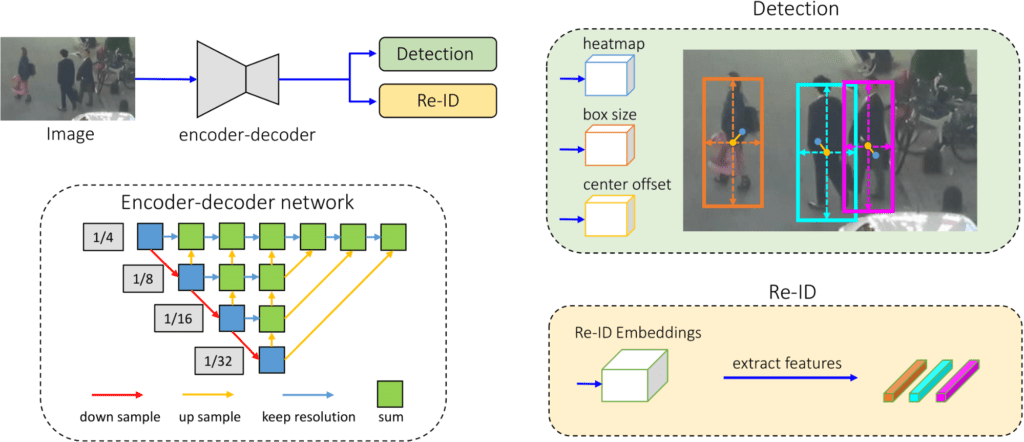
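A quick back-of-the-envelope calculation shows why the dimension matters at association time. Comparing every tracklet embedding with every detection embedding costs about one multiply-accumulate per feature dimension per pair, so the cost grows linearly with the dimension. The track and detection counts below are hypothetical, and the memory figure assumes float32 storage.

```python
def pairwise_macs(num_tracks, num_detections, dim):
    """Multiply-accumulates needed for all track-detection embedding dot products."""
    return num_tracks * num_detections * dim


def embedding_bytes(num_embeddings, dim, bytes_per_value=4):
    """Memory for stored embeddings, assuming float32 (4 bytes per value)."""
    return num_embeddings * dim * bytes_per_value


# Hypothetical crowded scene: 100 tracklets compared with 100 detections.
for dim in (512, 128, 64):
    print(dim, pairwise_macs(100, 100, dim), embedding_bytes(100, dim))
# 512 5120000 204800
# 128 1280000 51200
# 64 640000 25600
```

Going from 512 to 64 dimensions cuts both numbers by a factor of 8.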

4. FairMOT Architecture

FairMOT was created by studying the drawbacks of other tracking algorithms. The authors identified the anomalies created by anchors and the issues with re-ID dimensions, and saw how one-shot tracking could give them real-time results. Hence, they experimented with an anchor-free object detection approach. FairMOT is based on CenterNet, but it is not a naive combination of CenterNet and re-ID. It uses two homogeneous branches, one for detection and one for re-ID, and neither gets priority over the other.

Spoiler: Tracking accuracy is improved significantly, without much engineering effort.

4.1 Backbone network

FairMOT’s backbone network is based on ResNet-34, with enhanced Deep Layer Aggregation (DLA). DLA helps fuse multi-layer features, as it has more skip connections between the low-level and high-level features.

Additionally, the convolution layers in the upsampling modules are replaced with deformable convolutions. These help solve the alignment issue and enable more flexible receptive fields for objects of different sizes.

If the input image is of the shape H x W, the output feature map is of the shape H/4 x W/4.

This is very important for FairMOT, since it extracts features only from object centers, without using any region features.

4.2 Detection Branch

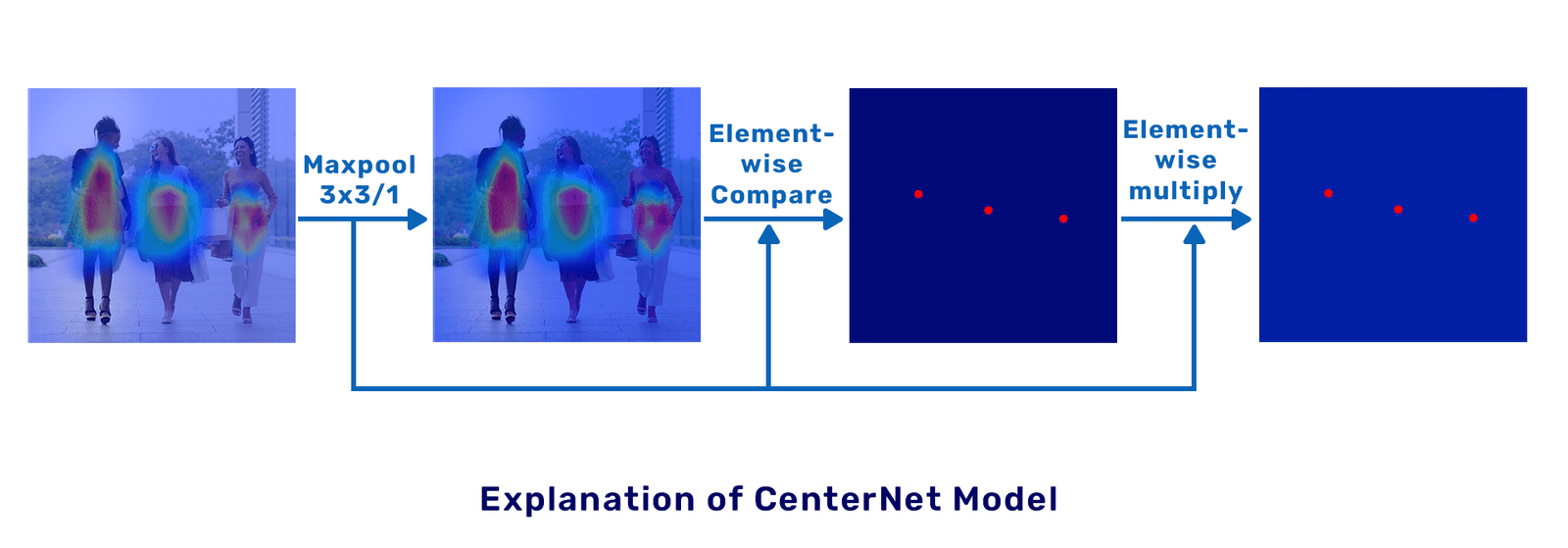

The detection branch is based on CenterNet. CenterNet is an anchor-free model that discards redundant detections within the network itself. There is no need for NMS, which requires additional computation time and can sometimes leave multiple anchors for the same subject. Briefly, CenterNet works in the following way:

Inner workings during CenterNet object detection.

- To build the training target, it draws delta functions at the ground-truth box centers and smears them with a Gaussian filter, so that the values peak at the object centers.

- Next, apply a 3×3 max pool layer with stride 1 to the heatmap output. Each cell is replaced by the maximum of its 3×3 neighbourhood, so a small flat region forms around each peak.

- Compare the max pool input and output elementwise. The result is either 1 (where the two are equal) or 0, so only the local maxima of the heatmap, i.e. the object centers, are marked.

- Elementwise multiply the input of the max pool layer with the output of the elementwise comparison. This gives the local maxima together with their confidence scores.

- Use a confidence threshold to weed out low-confidence objects.
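The decoding steps above (max pool, comparison, multiplication, threshold) can be sketched in plain Python on a tiny heatmap. Clipping the 3×3 window at the borders, the 0.4 threshold, and the example values are assumptions made for illustration. They are not FairMOT's settings.

```python
def max_pool_3x3(heat):
    """3x3 max pooling with stride 1; the window is clipped at the borders."""
    h, w = len(heat), len(heat[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = max(
                heat[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2))
            )
    return out


def extract_centers(heat, threshold=0.4):
    """Return (score, row, col) for local maxima above the threshold, best first."""
    pooled = max_pool_3x3(heat)
    centers = []
    for y, row in enumerate(heat):
        for x, value in enumerate(row):
            is_peak = 1.0 if value == pooled[y][x] else 0.0  # elementwise comparison
            score = value * is_peak                          # elementwise multiplication
            if score > threshold:                            # confidence threshold
                centers.append((score, y, x))
    return sorted(centers, reverse=True)


heat = [
    [0.1, 0.3, 0.1, 0.0, 0.0],
    [0.3, 0.9, 0.3, 0.0, 0.0],
    [0.1, 0.3, 0.1, 0.2, 0.1],
    [0.0, 0.0, 0.2, 0.6, 0.2],
    [0.0, 0.0, 0.1, 0.2, 0.1],
]
print(extract_centers(heat))  # [(0.9, 1, 1), (0.6, 3, 3)]
```

Flat zero regions also pass the comparison, but their score is 0, so the threshold removes them.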

Now that we understand how CenterNet works, let’s see how FairMOT incorporates it into the detection branch.

CenterNet needs three heads to localise an object: a heatmap head, a box size head, and a box offset head. Each head is applied to the output features of DLA-34 as a 3 x 3 convolution with 256 channels followed by a 1 x 1 convolution layer, which generates the final targets.

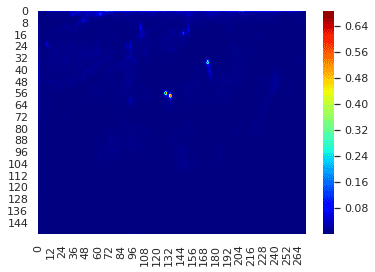

- Heat map head:

This head is responsible for estimating the object centers. The heatmap response is 1 where it coincides with an object center, and it decays exponentially with the distance from the center. The output dimension is 1 x H/4 x W/4.

The loss function is a pixel-wise logistic regression with focal loss. Focal loss is an improved version of the cross-entropy loss: it down-weights easy examples and assigns more weight to hard, misclassified examples, thus handling the class imbalance problem.
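The sketch below shows how a ground-truth heatmap and the heatmap focal loss fit together. It renders an unnormalised Gaussian exp(−d²/2σ²) at each center, so the value is exactly 1 at the center and decays exponentially around it. It then evaluates the penalty-reduced focal loss used by CenterNet-style detectors, normalised by the number of objects. The following choices are assumptions of this sketch:

- the fixed `sigma`,
- α = 2 and β = 4 (the CenterNet defaults),
- taking the element-wise maximum where objects overlap,
- the clamping epsilon.

```python
import math


def render_heatmap(height, width, centers, sigma=1.0):
    """Ground-truth heatmap: 1 at each integer center, Gaussian decay around it."""
    heat = [[0.0] * width for _ in range(height)]
    for cx, cy in centers:
        for y in range(height):
            for x in range(width):
                g = math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
                heat[y][x] = max(heat[y][x], g)  # overlapping objects: keep the max
    return heat


def heatmap_focal_loss(pred, gt, alpha=2.0, beta=4.0, eps=1e-6):
    """Penalty-reduced pixel-wise focal loss, normalised by the number of objects."""
    pos_loss, neg_loss, num_objects = 0.0, 0.0, 0
    for pred_row, gt_row in zip(pred, gt):
        for p, m in zip(pred_row, gt_row):
            p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
            if m == 1.0:
                # Object center: confident correct predictions are down-weighted.
                num_objects += 1
                pos_loss += (1.0 - p) ** alpha * math.log(p)
            else:
                # Background: pixels near a center (m close to 1) are penalised less.
                neg_loss += (1.0 - m) ** beta * p ** alpha * math.log(1.0 - p)
    return -(pos_loss + neg_loss) / max(num_objects, 1)


gt = render_heatmap(5, 5, centers=[(2, 2)])
flat = [[0.5] * 5 for _ in range(5)]
print(heatmap_focal_loss(gt, gt))    # near zero: prediction matches the target
print(heatmap_focal_loss(flat, gt))  # much larger: no clear peak
```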

Take the following input image:

It outputs the following heatmap:

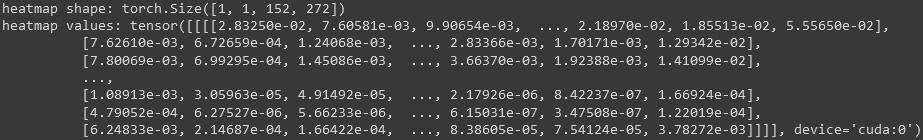

And these are the heatmap shape and values:

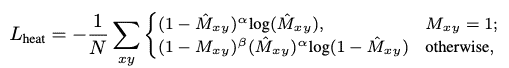

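The loss function is given as:

$$L_{\text{heat}} = -\frac{1}{N} \sum_{xy} \begin{cases} (1 - \hat{M}_{xy})^{\alpha} \log(\hat{M}_{xy}), & \text{if } M_{xy} = 1 \\ (1 - M_{xy})^{\beta} (\hat{M}_{xy})^{\alpha} \log(1 - \hat{M}_{xy}), & \text{otherwise} \end{cases}$$

Here, $\hat{M}$ is the estimated heatmap, $M$ is the ground-truth heatmap, $N$ is the number of objects, and $\alpha$, $\beta$ are the predetermined parameters of the focal loss.

- Box size: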

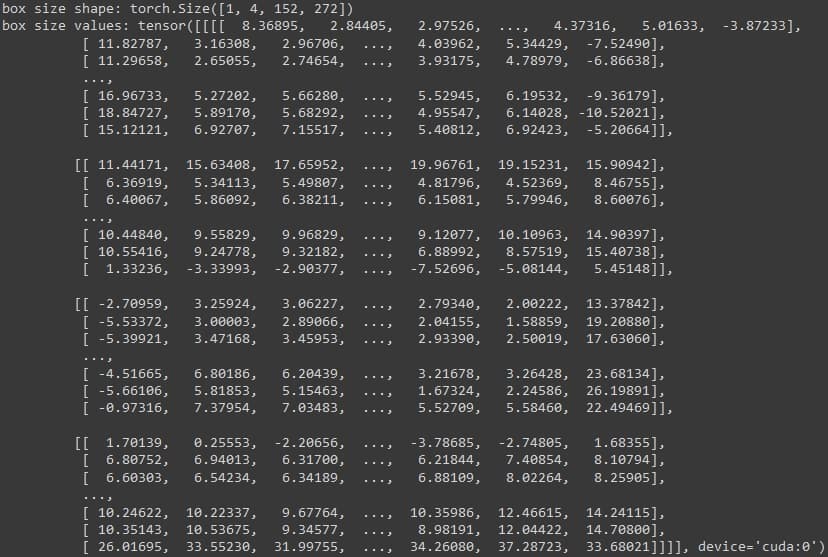

It is responsible for estimating the height and width of the target box at each location. Its feature dimension is of the shape 2 x H/4 x W/4.

It can also output the left, top, right, and bottom coordinates, in which case the output is of the shape 4 x H/4 x W/4.

These are the box size shape and output:

- Box offset:

Since the output feature map is downsampled by a factor of 4, each object center can introduce a quantisation error of up to 4 pixels. To recover precise centers, this head estimates the offset of each object center, mitigating the impact of downsampling. The output dimension is of the shape 2 x H/4 x W/4.

These are the box offset shape and output:

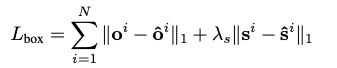
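To see where the quantisation error comes from, the sketch below encodes one ground-truth box into three targets at stride 4. These are the integer heatmap cell, the sub-cell offset o = c/4 − ⌊c/4⌋, and the box size. It then decodes the targets back into a box. The following are assumptions made for illustration:

- boxes are given as (x1, y1, x2, y2) in input-image pixels,
- the size is kept in input pixels (implementations may instead express it at the output resolution),
- the function names are hypothetical.

```python
import math

STRIDE = 4  # the output feature map is H/4 x W/4


def encode_box(box, stride=STRIDE):
    """Return heatmap cell (col, row), center offset, and size targets for one box."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2            # center in input pixels
    col, row = math.floor(cx / stride), math.floor(cy / stride)
    offset = (cx / stride - col, cy / stride - row)  # the part lost by rounding down
    size = (x2 - x1, y2 - y1)
    return (col, row), offset, size


def decode_box(cell, offset, size, stride=STRIDE):
    """Invert encode_box: recover (x1, y1, x2, y2) in input pixels."""
    cx = (cell[0] + offset[0]) * stride
    cy = (cell[1] + offset[1]) * stride
    w, h = size
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


cell, offset, size = encode_box((103, 50, 141, 150))
print(cell, offset, size)               # (30, 25) (0.5, 0.0) (38, 100)
print(decode_box(cell, offset, size))   # (103.0, 50.0, 141.0, 150.0)
```

Without the offset, the decoded center x would be 30 × 4 = 120 instead of 122, a 2-pixel error.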

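We calculate an L1 loss for both the box size and the box offset heads. It is given by:

$$L_{\text{box}} = \sum_{i=1}^{N} \left( \left\| \mathbf{o}^i - \hat{\mathbf{o}}^i \right\|_1 + \lambda_s \left\| \mathbf{s}^i - \hat{\mathbf{s}}^i \right\|_1 \right)$$

Here, $\mathbf{o}^i$ and $\mathbf{s}^i$ are the ground-truth offset and size of object $i$, $\hat{\mathbf{o}}^i$ and $\hat{\mathbf{s}}^i$ are the corresponding predictions, and $\lambda_s$ is a weighting parameter, set to 0.1.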

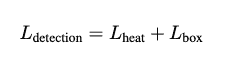

The detection loss is the sum of the two:

$$L_{\text{det}} = L_{\text{heat}} + L_{\text{box}}$$
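Both losses can be written out directly. The box loss is an L1 loss on the offsets plus a λs-weighted L1 loss on the sizes, summed over the objects. It is then added to the heatmap loss. The inputs are plain (x, y) offset and (w, h) size pairs at the object centers, and the numbers in the example are made up. Offsets lie in [0, 1) while sizes are measured in pixels, which is one plausible reason for down-weighting the size term.

```python
def l1(a, b):
    """L1 distance between two equal-length vectors."""
    return sum(abs(u - v) for u, v in zip(a, b))


def box_loss(pred_offsets, gt_offsets, pred_sizes, gt_sizes, lambda_s=0.1):
    """L_box = sum over objects of ||o - o_hat||_1 + lambda_s * ||s - s_hat||_1."""
    return sum(
        l1(go, po) + lambda_s * l1(gs, ps)
        for po, go, ps, gs in zip(pred_offsets, gt_offsets, pred_sizes, gt_sizes)
    )


def detection_loss(heat_loss, box_loss_value):
    """L_det = L_heat + L_box."""
    return heat_loss + box_loss_value


# One object: offsets are off by 0.1 in x and y, sizes by 2 and 4 pixels.
l_box = box_loss([(0.4, 0.1)], [(0.5, 0.0)], [(36, 104)], [(38, 100)])
print(l_box)                       # about 0.2 + 0.1 * 6 = 0.8
print(detection_loss(0.5, l_box))  # about 1.3, with a made-up heatmap loss of 0.5
```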

4.3 Re-ID branch

This branch is responsible for generating the re-ID features. These features help distinguish the objects. The model learns re-ID features through a classification task. In the training set, all the subjects of the same ID are treated as the same class.

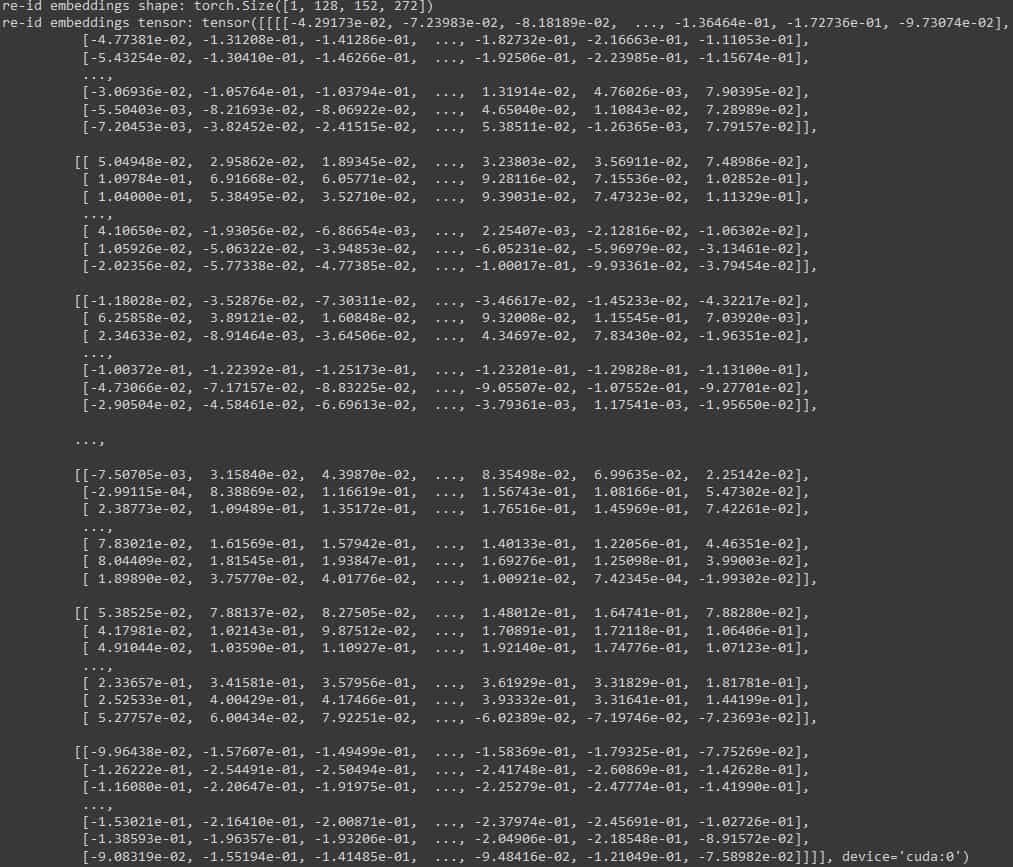

Apply a convolution layer with 128 kernels on top of the DLA-34 backbone features to extract a re-ID feature for each location. Then, use a fully connected layer followed by a softmax operation to map the re-ID features to a class distribution vector. During training, only the identity embedding vectors located at the object centers are used.

The output dimension is of the size 128 x H/4 x W/4.

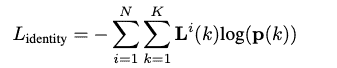

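The re-ID loss is computed as:

$$L_{\text{identity}} = -\sum_{i=1}^{N} \sum_{k=1}^{K} L^i(k) \log\big(p(k)\big)$$

Here, $N$ is the number of objects, $K$ is the number of all the identities in the training data, $\mathbf{p} = \{p(k), k \in [1, K]\}$ is the class distribution vector, and $L^i(k)$ is the one-hot representation of the ground-truth class label of object $i$.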

These are the re-ID shape and output:

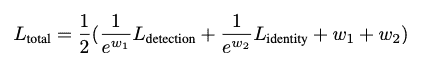
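Here is a minimal sketch of the identity classification loss for a single object, i.e. one term of the outer sum over objects. A fully connected layer maps the object's re-ID feature to K logits, and softmax turns them into the class distribution p. Because the label is one-hot, the cross-entropy reduces to −log p(k) for the true identity k. The 4-dimensional feature, the weight matrix, and K = 3 are hypothetical stand-ins for the real 128-dimensional embeddings and the number of training identities.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def identity_loss(feature, weights, label):
    """Cross-entropy between softmax(W @ feature) and a one-hot identity label."""
    logits = [sum(w * f for w, f in zip(row, feature)) for row in weights]
    p = softmax(logits)
    # With a one-hot label, only the true-class term of the sum over k survives.
    return -math.log(p[label])


feature = [0.5, -0.2, 0.1, 0.8]   # stand-in for a 128-d re-ID embedding
weights = [[1.0, 0.0, 0.0, 1.0],  # one row per identity (K = 3)
           [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, -1.0]]
print(identity_loss(feature, weights, label=0))  # small: identity 0 scores highest
print(identity_loss(feature, weights, label=2))  # larger: identity 2 scores lowest
```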

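The total training loss uses uncertainty weighting to balance the detection and re-ID tasks. It is given by:

$$L_{\text{total}} = \frac{1}{2}\left(\frac{1}{e^{w_1}} L_{\text{det}} + \frac{1}{e^{w_2}} L_{\text{identity}} + w_1 + w_2\right)$$

Here, w1 and w2 are learnable parameters that balance the two tasks.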
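The uncertainty-weighted total loss is a one-liner. During training, w1 and w2 are updated by the optimiser; here they are fixed so that their effect is easy to inspect, and the loss values are made up. For a fixed task loss L, setting the derivative of ½(e^{−w}L + w) to zero gives w = ln L. At that point the weighted term e^{−w}L equals 1: the larger loss gets the smaller weight, and the +w terms stop the weights from growing without bound.

```python
import math


def total_loss(det_loss, id_loss, w1, w2):
    """L_total = 0.5 * (exp(-w1) * L_det + exp(-w2) * L_id + w1 + w2)."""
    return 0.5 * (math.exp(-w1) * det_loss + math.exp(-w2) * id_loss + w1 + w2)


det_loss, id_loss = 2.0, 5.0  # made-up task losses
best_w1, best_w2 = math.log(det_loss), math.log(id_loss)
print(total_loss(det_loss, id_loss, best_w1, best_w2))
print(total_loss(det_loss, id_loss, 0.0, 0.0))  # unweighted: 0.5 * (2 + 5) = 3.5
```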

5. Data Association in FairMOT

This is where the actual ‘tracking’ happens. The association stage combines the results from the previous stage, i.e. the object locations and the re-ID features, with bounding box coordinates predicted by a Kalman Filter.

Some tracking algorithms use only IoU, which increases the number of ID switches, especially in crowded scenes. Using re-ID alone increases the IDF1 score. Adding a Kalman Filter to the mix smooths the tracks and decreases the number of ID switches.

A combination of re-ID, Kalman Filter, and IoU gives the best result. Here is how FairMOT performs data association:

1. For the first frame, initialise all the tracklets based on the detection results, and give a unique ID to every subject.

2. For the subsequent frames, use a two-stage matching strategy to confirm the tracks and their IDs:

- First stage: Apply the Kalman Filter to get the predicted bounding box locations. Calculate the Mahalanobis distance between the predicted (Kalman Filter output) and the detected (model output) boxes. Merge this distance with the cosine distance computed between the re-ID features of the previous frame's tracks and the current frame's detections. Finally, use the Hungarian algorithm to match these pairs, retaining as many identities as possible.

- Second stage: For the detections left unmatched, match the predicted (Kalman Filter output) and the detected (model output) boxes using IoU overlap. Update the appearance features of the tracklets in every frame to handle appearance variation.

3. Finally, initialise the remaining unmatched detections as new tracks. Keep the unmatched tracklets, if any, for 30 frames, in case they reappear in the future.

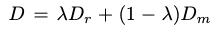
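The first-stage cost can be sketched as a blend of appearance (cosine) distance and Kalman Filter motion (Mahalanobis) distance. Any pair whose motion distance exceeds a gate is set to infinity, so it can never be matched. The following are assumptions chosen to resemble values seen in public JDE/FairMOT-style code, not confirmed settings:

- the weighting `lam = 0.98`,
- comparing the squared Mahalanobis distance against 9.4877 (the 95% chi-square quantile for 4 degrees of freedom).

The Mahalanobis distances are also passed in precomputed rather than derived from a real Kalman Filter state. The resulting matrix would then go to the Hungarian algorithm.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def fused_cost(track_feats, det_feats, maha_sq, lam=0.98, gate=9.4877):
    """Cost matrix (tracks x detections) mixing appearance and motion.

    maha_sq[i][j] is the squared Mahalanobis distance between the Kalman
    prediction of track i and detection j (precomputed in this sketch).
    """
    cost = []
    for i, tf in enumerate(track_feats):
        row = []
        for j, df in enumerate(det_feats):
            if maha_sq[i][j] > gate:
                row.append(math.inf)  # implausible motion: forbid this match
            else:
                row.append(lam * cosine_distance(tf, df) + (1 - lam) * maha_sq[i][j])
        cost.append(row)
    return cost


track_feats = [[1.0, 0.0], [0.0, 1.0]]
det_feats = [[0.9, 0.1], [0.1, 0.9]]
maha_sq = [[1.0, 20.0], [15.0, 2.0]]  # made-up squared Mahalanobis distances
for row in fused_cost(track_feats, det_feats, maha_sq):
    print(row)  # finite cost only for the plausible pairs (0, 0) and (1, 1)
```

This gate is the mechanism behind the fast-moving-object ID switches discussed in the comparison below. A large jump between frames pushes the motion distance past the gate, so the correct match is forbidden.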

6. FairMOT Results on Public Datasets

FairMOT uses a variant of DLA-34 as the default backbone. The model, pre-trained on the COCO dataset, is trained using the Adam optimizer for 30 epochs with a starting learning rate of 10⁻⁴. The learning rate decays to 10⁻⁵ at 20 epochs. Standard data augmentation techniques, including rotation, scaling, and colour jittering, are used.
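The step schedule above can be written as a function of the epoch. Counting epochs from 0 and treating epoch 20 as the first epoch at the lower rate is our reading of "decays at 20 epochs".

```python
def learning_rate(epoch, base_lr=1e-4, decay_epoch=20, decayed_lr=1e-5):
    """Step schedule: base_lr before decay_epoch, decayed_lr from then on."""
    return base_lr if epoch < decay_epoch else decayed_lr


schedule = [learning_rate(e) for e in range(30)]  # 30 training epochs
print(schedule[0], schedule[19], schedule[20], schedule[29])
```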

6.1 Comparison with other One-Shot trackers

Following are the results compared with other models:

6.2 Output Format of FairMOT

FairMOT follows the MOT Challenge output format. It returns a text file with the same name as the input, containing one object instance per line, with 10 values per line. The format is:

<frame>, <id>, <bb_left>, <bb_top>, <bb_width>, <bb_height>, <conf>, <x>, <y>, <z>

- frame is the frame number

- id is the object id

- bb_left is the x coordinate of the top left point

- bb_top is the y coordinate of the top left point

- bb_width is the width of the bounding box

- bb_height is the height of the bounding box

- conf is the detection confidence of the object

- x, y, and z are world coordinates, which are ignored for the 2D challenge and set to -1.
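Here is a small sketch for reading and writing this format with the standard library. The `MotRow` dataclass and the function names are hypothetical, and the sample line is made up.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class MotRow:
    frame: int
    track_id: int
    bb_left: float
    bb_top: float
    bb_width: float
    bb_height: float
    conf: float
    x: float = -1  # world coordinates: -1 for the 2D challenge
    y: float = -1
    z: float = -1


def parse_mot(text):
    """Parse MOT Challenge result lines into MotRow objects."""
    rows = []
    for fields in csv.reader(io.StringIO(text)):
        if not fields:
            continue  # skip blank lines
        values = [float(v) for v in fields]
        rows.append(MotRow(int(values[0]), int(values[1]), *values[2:10]))
    return rows


def format_mot(row):
    """Serialise one MotRow back to a comma-separated line."""
    fields = (row.frame, row.track_id, row.bb_left, row.bb_top, row.bb_width,
              row.bb_height, row.conf, row.x, row.y, row.z)
    return ",".join(str(v) for v in fields)


rows = parse_mot("1,3,794.2,47.5,71.2,174.8,67.5,-1,-1,-1\n")
print(rows[0].track_id, rows[0].bb_width)  # 3 71.2
print(format_mot(rows[0]))
```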

6.3 Visualise FairMOT Results

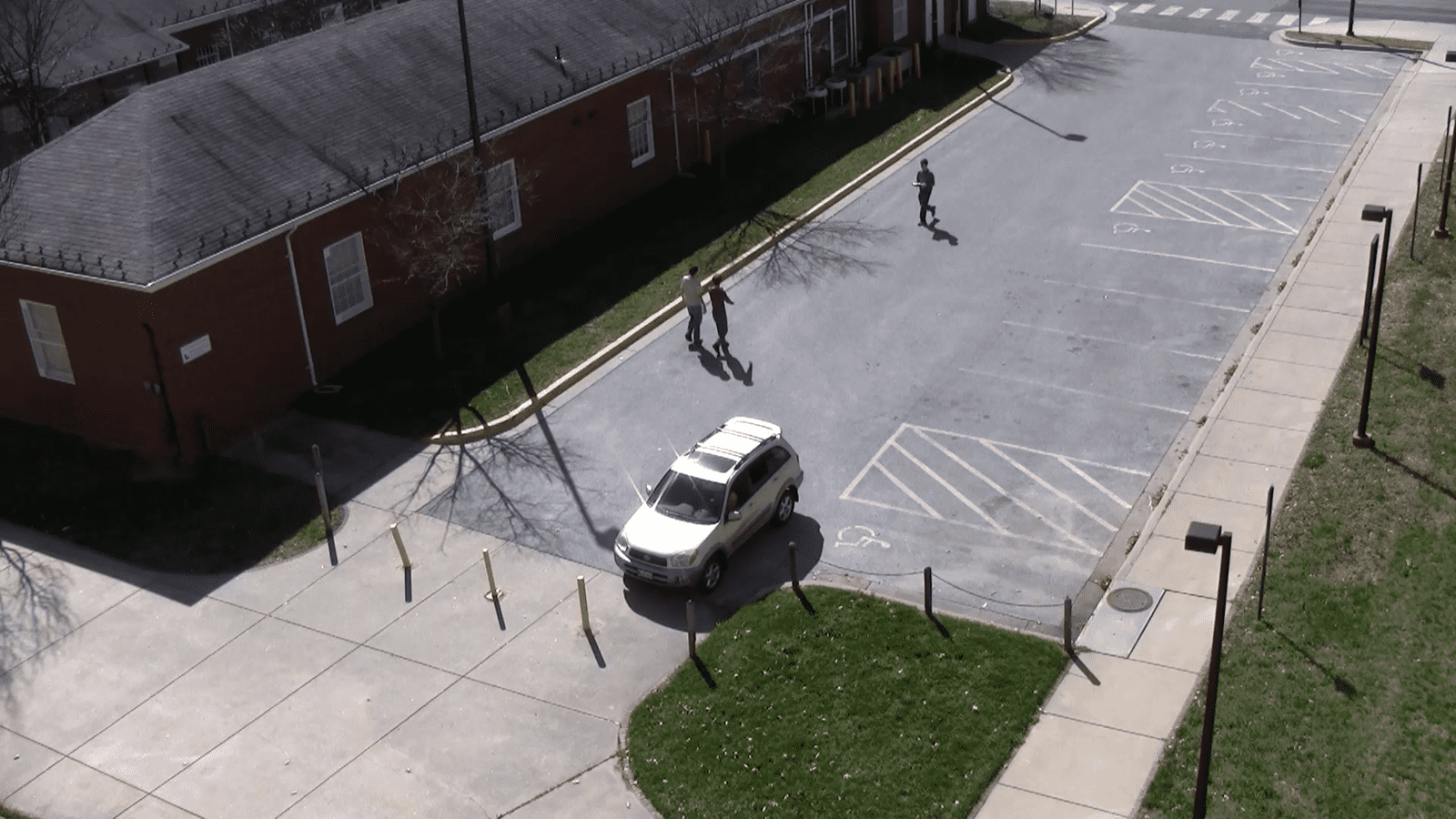

Here are some of the results taken from the MOT dataset.

6.4 Comparison between DeepSort and FairMOT

In the following videos, we compare DeepSORT and FairMOT. The detection model used with DeepSORT is YOLOv5s, whereas FairMOT is run with both YOLOv5s and DLA-34. Furthermore, the re-ID features are compared over two different buffer sizes, 150 and 30. This means that the re-ID features are stored for 150 frames or 30 frames (the default). The re-ID features help revive the unique person ID.
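The buffer can be pictured as follows. A tracklet that goes unmatched is kept as "lost", together with its re-ID feature. It is discarded only once it has been missing for more than the buffer size, so a larger buffer gives an occluded person more time to be revived. The `Track` class and the `prune_lost` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    track_id: int
    last_seen: int  # last frame index with a matched detection
    feature: list = field(default_factory=list)  # stored re-ID embedding


def prune_lost(lost_tracks, current_frame, buffer_size=30):
    """Keep lost tracks that can still be revived; drop the rest."""
    return [t for t in lost_tracks if current_frame - t.last_seen <= buffer_size]


lost = [Track(1, last_seen=120), Track(2, last_seen=60)]
print([t.track_id for t in prune_lost(lost, current_frame=140)])                   # [1]
print([t.track_id for t in prune_lost(lost, current_frame=140, buffer_size=150)])  # [1, 2]
```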

- Effects of Occlusion

For buffer size 30

- DS-YOLO: Works well, but has the same issue with anchor boxes. The original ID remains throughout.

- FM-DLA: One ID switch happens for the driver. Object detection works well.

- FM-YOLO: ID switched after shadows.

For buffer size 150

- DS-YOLO: The anchor box issue persists. No real change after increasing the buffer size.

- FM-DLA: Way better object detection. Same ID switch once (pose change).

- FM-YOLO: Even though the object detection fails at times, ID never switched.

Conclusion: FairMOT-YOLOv5s fails to detect the object at times. Conveniently, in the 150-frame variant, it did not detect the subject in the shadows. Had it detected the subject there, like the other models, it would have failed as well. Since it did not, it never registered the ‘shadow’ ID features. The 150-frame buffer size helps as well.

For buffer size 30

- DS-YOLO: IDs switch and tracking fails for the car and parcel guys. Multiple anchors for a single object. Not good with occlusion.

- FM-DLA: The parcel guy was not properly detected. Hence, only their ID kept changing.

- FM-YOLO: Not a powerful detector, and did not have a big buffer size. Hence, ID of the car guys kept changing frequently.

For buffer size 150

- DS-YOLO: IDs switch and tracking fails, and the problem with anchors persists.

- FM-DLA: Only one ID switch throughout the video. It happened due to extended occlusion. This can be rectified if we increase the buffer size even more.

- FM-YOLO: Detection fails considerably, but ID was NEVER switched.

Conclusion: FairMOT-YOLOv5s again performs the best, but FairMOT-DLA-34 gives the most consistent results.

For buffer size 30

- DS-YOLO: Small objects (IDs 5 and 12) are not tracked from the beginning. It tracks the fast-moving object (cycle), albeit with a bit of ID inaccuracy.

- FM-DLA: Possible false positives (ID 82). Cycle ID also switches.

- FM-YOLO: The person on cycle is barely detected. Also returns a possible false positive.

For buffer size 150

- DS-YOLO: Similar issues to the other DeepSORT video.

- FM-DLA: Similar probable false positive. Cycle is correctly tracked even after a few frames.

- FM-YOLO: Tracks the cycle after it crosses the street.

Conclusion: In FairMOT, the cycle's ID switches mainly because of how FairMOT handles fast-moving objects: beyond a certain threshold, the Mahalanobis distance is set to infinity. This is done to avoid getting trajectories with large motion.

For buffer size 30

- DS-YOLO: Although new IDs were created when people crossed, the original IDs were retained afterwards. This is an issue caused by anchor boxes. It did not detect ID 4 when the background and foreground colours were similar.

- FM-DLA: Detection works well even when the background and foreground colours are relatively similar. During group collision there are anomalies with the ID.

- FM-YOLO: Possible false positive, and does not detect the subject when background and foreground colours are relatively similar. Issue with the ID is observed here too.

For buffer size 150

- DS-YOLO: The anchor issues persist. Increasing the ID buffer size does not improve the performance.

- FM-DLA: For two frames when the groups collide, two people get the same ID. It gets rectified quickly.

- FM-YOLO: Does not detect person when background and foreground colours are similar. Might have false positive error. Anomalies with the ID are seen here as well.

Conclusion: Here, we see that the CenterNet approach can fail as well.

7. Conclusion

With this, we conclude our look at tracking and re-identification with the FairMOT tracker. I hope you enjoyed reading the post. In summary, we learnt:

- About MOTs

- The problems with previous trackers

- The problems FairMOT tackles

- FairMOT’s homogeneous architecture

- The detection branch and its various heads

- Re-ID branch and the embeddings

- Association stage of FairMOT, which consists of

- Kalman Filter

- Previous frame’s detections

- Current frame’s detections

- The results on public datasets

- And comparison with DeepSORT

8. References

- https://arxiv.org/pdf/2004.01888.pdf

- https://learnopencv.com/understanding-multiple-object-tracking-using-deepsort/

- https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.367.6279&rep=rep1&type=pdf

- https://arxiv.org/pdf/1609.01775.pdf

- https://arxiv.org/pdf/1904.08189.pdf

- https://learnopencv.com/intersection-over-union-iou-in-object-detection-and-segmentation/

- https://motchallenge.net/

- https://viratdata.org/
