YOLO11 is finally here, revealed at the exciting Ultralytics YOLO Vision 2024 (YV24) event. 2024 has been the year of YOLO models: after the release of YOLOv8 in 2023, we got YOLOv9 and YOLOv10 this year, and now YOLO11. You might think, “Another day, another YOLO variant, not a big deal,” right?

YOLO11 is the state-of-the-art (SOTA), lightest, and most efficient object detection model in the YOLO family. It was developed by Ultralytics, the creators of YOLOv8, and it continues the legacy of the YOLO series. In this article, we will explore:

- What Can YOLO11 Do?

- What are the Improvements in YOLO11 Architecture?

- Setting Up YOLO11 for Inference

- YOLO11 vs YOLO12

- A Quick Recap of the Article

Now, that’s exciting, right? So, grab a cup of coffee, and let’s dive in!

In this article, we dive deep into YOLO11, the fastest object detection model in the YOLO series. Unlike other guides, we don’t just introduce it; we also compare it directly with the newer YOLO12.

We look into YOLO11’s new architecture and show real-world benchmark results that demonstrate its speed and efficiency. You’ll learn about upgrades like the new C3k2 and C2PSA blocks, alongside the SPPF block retained from YOLOv8, which together make the model more accurate and lightweight.

Plus, we provide a hands-on inference Notebook in the Download Code section so you can test YOLO11 on your own images and videos.

Introduction to YOLO11

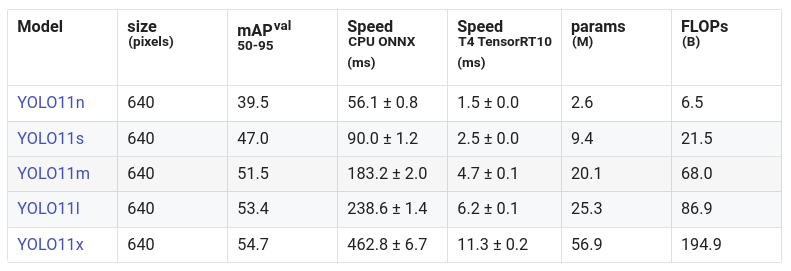

YOLO11 is the latest iteration of the YOLO series from Ultralytics. It comes with super lightweight models that are much faster and more efficient than the previous YOLOs. It can handle a wide range of computer vision tasks, including object detection, instance segmentation, pose estimation, image classification, and oriented object detection (OBB). Ultralytics released YOLO11 in five sizes, which, across the five tasks, add up to 25 pretrained models:

- YOLO11n – Nano for small and lightweight tasks.

- YOLO11s – Small upgrade of Nano with some extra accuracy.

- YOLO11m – Medium for general-purpose use.

- YOLO11l – Large for higher accuracy with higher computation.

- YOLO11x – Extra-large for maximum accuracy and performance.
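Five sizes times five tasks gives the 25 checkpoints. The short sketch below simply enumerates their file names, assuming the naming pattern of the Ultralytics release assets (yolo11<size>.pt for detection, plus -seg, -pose, -cls, and -obb suffixes for the other tasks). yolo11_checkpoints is a hypothetical helper for illustration, not part of the library.

```python
# Hypothetical helper: enumerate the pretrained YOLO11 checkpoint names.
# Assumption: the Ultralytics naming pattern yolo11<size><task-suffix>.pt,
# where plain object detection has no suffix.
SIZES = ["n", "s", "m", "l", "x"]
TASK_SUFFIXES = {
    "detect": "",
    "segment": "-seg",
    "pose": "-pose",
    "classify": "-cls",
    "obb": "-obb",
}


def yolo11_checkpoints() -> dict[str, list[str]]:
    """Return {task: [checkpoint file names, smallest to largest]}."""
    return {
        task: [f"yolo11{size}{suffix}.pt" for size in SIZES]
        for task, suffix in TASK_SUFFIXES.items()
    }


grid = yolo11_checkpoints()
for task, names in grid.items():
    print(f"{task:>8}: {', '.join(names)}")
print("total:", sum(len(names) for names in grid.values()))  # 5 sizes x 5 tasks
```

These are the names you pass as model= in the CLI commands later in this post.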

YOLO11 is built on top of the Ultralytics YOLOv8 codebase with some architectural modifications. We will explore the changes in the architecture and codebase later in this post. For a more detailed look at how the YOLO series has evolved, read our YOLO master post!

YOLO Master Post – Every Model Explained

Don’t miss out on this comprehensive resource, Mastering All YOLO Models, for a richer, more informed perspective on the YOLO series.

YOLO11 Architecture

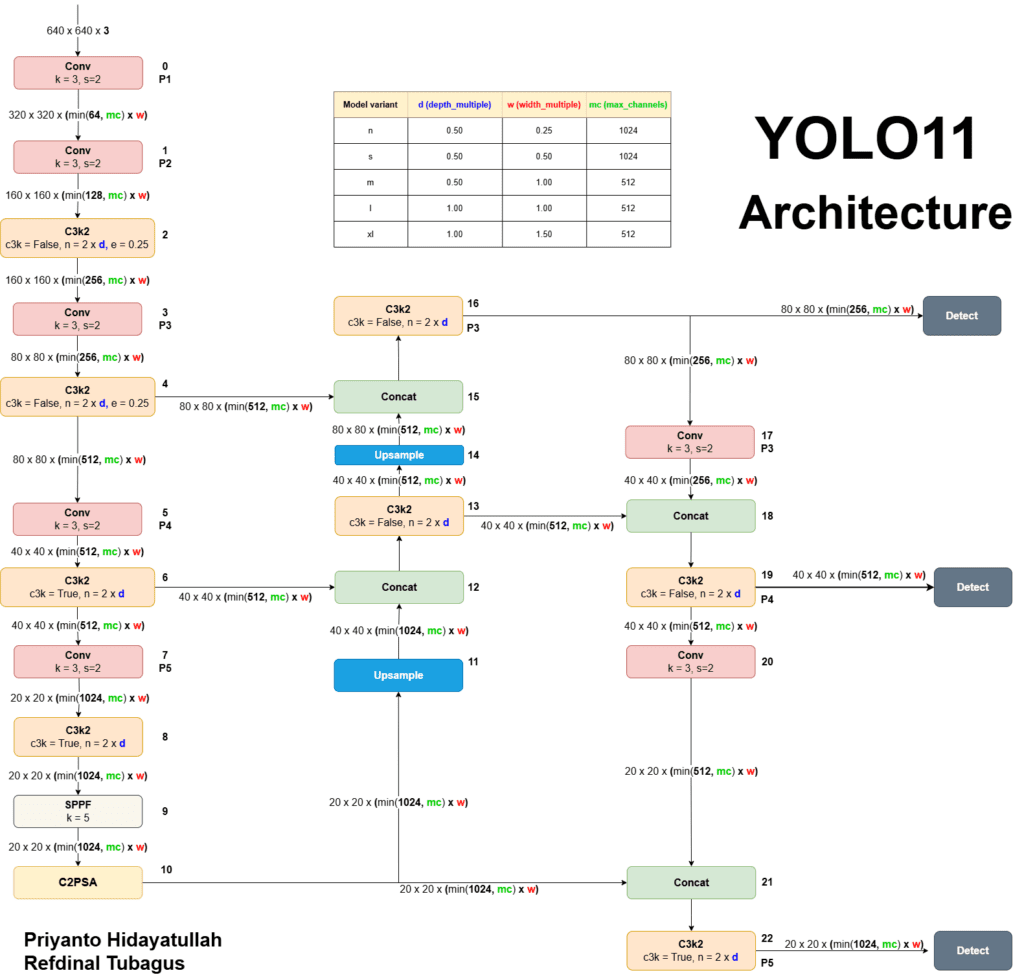

The YOLO11 architecture is an upgrade over the YOLOv8 architecture, with some new blocks and retuned parameters. Before we proceed to the main part, you can check out our detailed article on YOLOv8 for an overview of that architecture. Now, let’s look at the YOLO11 architecture part by part:

Backbone

C3k2 Block: Instead of C2f, YOLO11 introduces the C3k2 block:

```yaml
# [from, repeats, module, args]
- [-1, 2, C3k2, [256, False, 0.25]]
```

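It’s designed to be more efficient in terms of computation. The Ultralytics source describes C3k2 as a faster implementation of the CSP Bottleneck with two convolutions. It subclasses YOLOv8’s C2f block and keeps its two outer 1×1 convolutions, but swaps the inner modules: standard Bottleneck blocks by default, or C3k blocks (compact C3-style CSP blocks with a configurable kernel size) when its c3k argument is True. In the YAML row above, False is that c3k flag and 0.25 is the expansion ratio e.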

CSP (Cross Stage Partial): CSP networks split the feature map into two parts, pass one part through the bottleneck layers, and then merge (concatenate) the result with the other part, which bypasses them. Because only part of the channels goes through the expensive layers, this reduces the computational load while improving feature representation.
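The split-and-merge idea can be sketched in a few lines of NumPy. This is an illustrative toy, not the Ultralytics implementation: toy_bottleneck is a hypothetical fixed channel-mixing function standing in for learned convolutions, and the 1×1 convolutions that C2f and C3k2 place before the split and after the merge are omitted.

```python
import numpy as np


def toy_bottleneck(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the bottleneck layers: fixed 1x1 channel mix + ReLU.

    A real bottleneck uses learned 3x3 convolutions instead.
    """
    c = x.shape[0]
    mix = np.eye(c) + 0.1  # fixed (C, C) mixing matrix, acting like a 1x1 conv
    return np.maximum(np.einsum("oc,chw->ohw", mix, x), 0.0)


def csp_block(x: np.ndarray) -> np.ndarray:
    """Cross Stage Partial idea on a (C, H, W) feature map."""
    c = x.shape[0]
    part_a, part_b = x[: c // 2], x[c // 2 :]  # split the channels in two
    part_b = toy_bottleneck(part_b)  # only half the channels pay the bottleneck cost
    return np.concatenate([part_a, part_b], axis=0)  # merge bypass and processed parts


x = np.random.default_rng(0).standard_normal((8, 4, 4))
y = csp_block(x)
print(y.shape)
```

The first half of the channels passes through untouched, which is exactly where the compute savings come from.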

SPPF and C2PSA: YOLO11 retains the SPPF block from YOLOv8 and adds a new C2PSA block right after it:

```yaml
- [-1, 1, SPPF, [1024, 5]]
- [-1, 2, C2PSA, [1024]]
```
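SPPF (Spatial Pyramid Pooling, Fast) is carried over from YOLOv8: it applies the same 5×5 max pool three times in a row and concatenates all four tensors, which matches pooling with 5×5, 9×9, and 13×13 windows in parallel (the older SPP layout) at lower cost. The NumPy sketch below shows only that pooling path; the 1×1 convolutions SPPF applies before and after are omitted, and max_pool_same and sppf_core are hypothetical names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def max_pool_same(x: np.ndarray, k: int) -> np.ndarray:
    """k x k max pooling, stride 1, k // 2 padding, on a float (C, H, W) array.

    Padding uses -inf so padded cells never win the max, mirroring the
    implicit padding of torch.nn.MaxPool2d.
    """
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)), constant_values=-np.inf)
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # (C, H, W, k, k)
    return windows.max(axis=(-2, -1))


def sppf_core(x: np.ndarray, k: int = 5) -> np.ndarray:
    """SPPF pooling path: three chained k x k pools, concatenated with the input."""
    outputs = [x]
    for _ in range(3):
        outputs.append(max_pool_same(outputs[-1], k))
    return np.concatenate(outputs, axis=0)  # (4C, H, W)


x = np.random.default_rng(1).standard_normal((2, 7, 7))
print(sppf_core(x).shape)
# Two chained 5x5 pools cover the same window as one 9x9 pool:
print(np.array_equal(max_pool_same(max_pool_same(x, 5), 5), max_pool_same(x, 9)))
```

Chaining small pools is the "fast" part: each extra 5×5 pool grows the receptive field by 4 pixels without ever computing a large window.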

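C2PSA, condensed from ultralytics/nn/modules/block.py (docstrings removed; check your installed version):

```python
import torch
from torch import nn
from ultralytics.nn.modules.block import PSABlock
from ultralytics.nn.modules.conv import Conv


class C2PSA(nn.Module):
    def __init__(self, c1, c2, n=1, e=0.5):
        super().__init__()
        assert c1 == c2  # input and output channels must match
        self.c = int(c1 * e)  # hidden channels per branch
        self.cv1 = Conv(c1, 2 * self.c, 1, 1)  # 1x1 conv, later split into two branches
        self.cv2 = Conv(2 * self.c, c1, 1)  # 1x1 conv fusing both branches
        # n attention blocks, applied to one branch only
        self.m = nn.Sequential(*(PSABlock(self.c, attn_ratio=0.5, num_heads=self.c // 64) for _ in range(n)))

    def forward(self, x):
        a, b = self.cv1(x).split((self.c, self.c), dim=1)  # CSP split
        b = self.m(b)  # attention on branch b; branch a bypasses it
        return self.cv2(torch.cat((a, b), 1))  # merge and fuse
```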

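The C2PSA (Cross Stage Partial with Spatial Attention) block adds attention to the feature maps. After the CSP split, one branch passes through PSABlock layers, each a multi-head self-attention step followed by a small feed-forward network, both wrapped in residual shortcuts; the result is then concatenated with the untouched branch and fused by a 1×1 convolution. Because self-attention lets every spatial position weigh information from every other position, the model can focus more effectively on the important regions of the image. C2PSA appears only once, right after SPPF on the coarsest (P5, stride 32) feature map, where there are the fewest positions to attend over.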
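To make the attention step concrete, here is a minimal NumPy sketch of single-head self-attention across the spatial positions of a feature map, with a residual shortcut as in PSABlock. It is a simplification under stated assumptions: in the source we checked, the Ultralytics Attention module is multi-head, uses a reduced key dimension set by attn_ratio, adds a 3×3 depthwise-convolution positional term, and is followed by a feed-forward network. The weights below are random stand-ins, and spatial_self_attention is a hypothetical name.

```python
import numpy as np


def spatial_self_attention(x, wq, wk, wv):
    """Single-head self-attention across the H*W positions of a (C, H, W) map.

    wq, wk: (D, C) query/key projections; wv: (C, C) value projection.
    Returns the residual output and the (H*W, H*W) attention weights.
    """
    c, h, w = x.shape
    tokens = x.reshape(c, h * w).T  # (N, C): one token per spatial position
    q, k, v = tokens @ wq.T, tokens @ wk.T, tokens @ wv.T
    scores = q @ k.T / np.sqrt(q.shape[1])  # (N, N): every position vs. every position
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability for softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # each row sums to 1
    out = (weights @ v).T.reshape(c, h, w)  # back to (C, H, W), same ordering
    return x + out, weights  # residual shortcut


rng = np.random.default_rng(2)
x = rng.standard_normal((8, 4, 5))
wq, wk = rng.standard_normal((4, 8)), rng.standard_normal((4, 8))
wv = rng.standard_normal((8, 8))
y, attn = spatial_self_attention(x, wq, wk, wv)
print(y.shape, attn.shape)
```

The (N, N) weight matrix is why attention gets expensive quickly: its size grows with the square of the number of positions, which is one reason to apply it on the smallest feature map.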

Neck

C3k2 Block: YOLO11 replaces the C2f blocks in the neck with C3k2 blocks. As discussed earlier, C3k2 is a faster and more efficient block. For example, after upsampling and concatenation, a neck layer in YOLO11 looks like this:

```yaml
- [-1, 2, C3k2, [512, False]] # P4/16-medium
```

This change improves the speed and performance of the feature aggregation process.
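The comment "P4/16-medium" names the pyramid level: Pk is the feature map with stride 2^k (P3/8, P4/16, P5/32), and the suffix refers to the object sizes that level mainly handles (small, medium, large). The arithmetic below, a sketch assuming a square 640×640 input, shows the resulting grid sizes and the number of grid cells the anchor-free Detect head predicts from; pyramid_grids is a hypothetical helper.

```python
import math


def pyramid_grids(imgsz=640, strides=(8, 16, 32)):
    """Return {level: (stride, grid_side)} for a square input of side imgsz.

    Level Pk has stride 2**k, which is what the "/16" in "P4/16" refers to.
    """
    return {f"P{int(math.log2(s))}": (s, imgsz // s) for s in strides}


grids = pyramid_grids(640)
for level, (stride, side) in grids.items():
    print(f"{level}/{stride}: {side}x{side} grid")
# One prediction per grid cell on each of the three levels:
print("predictions per image:", sum(side * side for _, side in grids.values()))
```

For a 640×640 input this gives 80×80, 40×40, and 20×20 grids, or 8,400 candidate predictions before NMS.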

Head

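C3k2 Block: Similar to the neck, YOLO11 replaces the C2f block in the head. Note that the Ultralytics YAML file lists the neck layers under its head: section, which is why the same row appears here: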

```yaml
- [-1, 2, C3k2, [512, False]] # P4/16-medium
```

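C3k2, as defined in ultralytics/nn/modules/block.py (docstring shortened):

```python
from torch import nn
from ultralytics.nn.modules.block import Bottleneck, C2f, C3k


class C3k2(C2f):
    """CSP bottleneck built on C2f; inner blocks are C3k if c3k=True, else Bottleneck."""

    def __init__(self, c1, c2, n=1, c3k=False, e=0.5, g=1, shortcut=True):
        # C2f sets self.c (hidden width), cv1 (1x1 conv, split) and cv2 (1x1 conv, fuse)
        super().__init__(c1, c2, n, shortcut, g, e)
        # Replace C2f's inner modules with n C3k or Bottleneck blocks on self.c channels
        self.m = nn.ModuleList(
            C3k(self.c, self.c, 2, shortcut, g) if c3k else Bottleneck(self.c, self.c, shortcut, g) for _ in range(n)
        )
        # forward() is inherited unchanged from C2f
```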

Using C3k2 blocks makes the model faster at inference and more efficient in terms of parameters.
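One concrete source of parameter savings is visible in the Ultralytics source we checked: C2f builds its inner Bottleneck blocks with e=1.0, while C3k2 (with c3k=False) calls Bottleneck with its default e=0.5, halving each bottleneck’s hidden width. The arithmetic below counts trainable parameters for one such bottleneck, assuming Ultralytics-style Conv layers (Conv2d with bias=False plus BatchNorm2d, running statistics excluded) and no grouping; conv_params and bottleneck_params are hypothetical helpers.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Trainable parameters of a Conv2d(bias=False) + BatchNorm2d layer."""
    return (c_in // groups) * c_out * k * k + 2 * c_out  # weights + BN gamma/beta


def bottleneck_params(c, e):
    """Two 3x3 convs: c -> int(c * e) -> c, as in the Ultralytics Bottleneck."""
    hidden = int(c * e)
    return conv_params(c, hidden, 3) + conv_params(hidden, c, 3)


c = 128  # example hidden width (self.c) inside the block
c2f_inner = bottleneck_params(c, e=1.0)  # C2f-style inner bottleneck
c3k2_inner = bottleneck_params(c, e=0.5)  # C3k2 (c3k=False) inner bottleneck
print(c2f_inner, c3k2_inner, round(c3k2_inner / c2f_inner, 3))
```

With c = 128 this gives 295,424 versus 147,840 parameters, roughly half, and the same ratio roughly holds for the FLOPs of those 3×3 convolutions.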

PS: If you are interested in learning how YOLO or any other object detection model works and how to build one from scratch, join our free bootcamps to get started!

Now we have an idea about the architecture. So, let’s see how the codebase is structured:

YOLO11 Codebase

In the ultralytics GitHub repo, we will mainly focus on the following files:

Modules in ultralytics/nn/modules/:

block.py: Defines various building blocks (modules) used in the model, such as bottlenecks, CSP modules, and attention mechanisms.

conv.py: Contains convolutional modules, including standard convolutions, depth-wise convolutions, and other variations.

head.py: Implements the head of the model responsible for producing the final predictions (e.g., bounding boxes, class probabilities).

transformer.py: Includes transformer-based modules, which are used for attention mechanisms and advanced feature extraction.

utils.py: Provides utility functions and helper classes used across the modules.

nn/tasks.py: Defines the different task-specific models (e.g., detection, segmentation, classification) that combine these modules to form complete architectures.
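These files map directly onto import paths. The sketch below records where a few of the classes discussed above live (paths as of the 8.3.x releases, an assumption worth re-checking since code can move between versions) and imports them only if the ultralytics package is available; MODULE_OF and locate are hypothetical names.

```python
import importlib

# Where some of the classes discussed above live inside the ultralytics package.
MODULE_OF = {
    "C3k2": "ultralytics.nn.modules.block",
    "C2PSA": "ultralytics.nn.modules.block",
    "SPPF": "ultralytics.nn.modules.block",
    "Conv": "ultralytics.nn.modules.conv",
    "Detect": "ultralytics.nn.modules.head",
    "DetectionModel": "ultralytics.nn.tasks",
}


def locate(name):
    """Return the named class, or None if ultralytics (or the class) is unavailable."""
    try:
        module = importlib.import_module(MODULE_OF[name])
    except ImportError:  # ultralytics or one of its dependencies is not installed
        return None
    return getattr(module, name, None)  # None on older versions lacking the class


for name in MODULE_OF:
    print(f"{name:<15} {MODULE_OF[name]:<32} {locate(name)}")
```

Opening the printed module in your editor is a quick way to read the full definitions behind the condensed snippets in this post.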

PS: Please refer to the official YOLO11 YAML file (ultralytics/cfg/models/11/yolo11.yaml) for a more detailed overview.
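Each row in that YAML file follows the format [from, repeats, module, args], and a single file serves all five sizes through per-size [depth, width, max_channels] constants. The sketch below applies our reading of the parser’s scaling rules to the backbone row - [-1, 2, C3k2, [256, False, 0.25]]. The scale values and rules are copied from the Ultralytics source as we understand it at the time of writing, so treat them as assumptions and check your installed version; scale_row is a hypothetical helper.

```python
import math

# [depth, width, max_channels] per scale, as read from the scales: section of
# yolo11.yaml at the time of writing (assumption: verify for your version).
SCALES = {
    "n": (0.50, 0.25, 1024),
    "s": (0.50, 0.50, 1024),
    "m": (0.50, 1.00, 512),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.50, 512),
}


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scale_row(repeats, channels, scale):
    """Simplified sketch of how a scale changes one row's repeats and channels."""
    depth, width, max_channels = SCALES[scale]
    n = max(round(repeats * depth), 1) if repeats > 1 else repeats  # depth gain
    c = make_divisible(min(channels, max_channels) * width, 8)  # width gain, capped
    return n, c


# Backbone row "- [-1, 2, C3k2, [256, False, 0.25]]": repeats=2, channels=256
for s in SCALES:
    print(s, scale_row(2, 256, s))
```

Under these assumptions, the x scale keeps 2 repeats and widens the row to 384 channels, while SPPF’s 1024 channels are first capped at 512 and then widened to 768.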

Now, let’s get into the code!

Download the Code Here

YOLO11 Inference

Now it’s time to experiment with the YOLO11 models and look at the inference results. We will go through object detection, instance segmentation, pose estimation, image classification, and oriented object detection one by one.

We ran inference on an NVIDIA GeForce RTX 3070 Ti Laptop GPU. Let’s start!

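First, we need to set up our environment.

We’ll use the pretrained YOLO11 weights published by Ultralytics; the ultralytics package downloads them automatically the first time a model name such as yolo11x.pt is used. Cloning the Ultralytics repository is optional, but handy if you want to browse the source files discussed above:

```bash
git clone https://github.com/ultralytics/ultralytics.git
cd ultralytics
```

Then, create an environment and install the package. Run these commands in a terminal: inside a Jupyter notebook, !cd and !conda activate run in a throwaway subshell, so their effect does not persist.

```bash
conda create -n yolo11 python=3.11
conda activate yolo11
pip install ultralytics
```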

Ultralytics supports both a Python API and CLI commands for running inference. We will use the CLI in this article. In a Jupyter notebook, run each command with a leading `!`.
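For reference, here is a minimal sketch of the Python-API route, assuming the documented YOLO class and its predict(source=..., stream=True) method. predict_stream is our own hypothetical wrapper, not part of the library.

```python
def predict_stream(weights, source, **kwargs):
    """Python-API counterpart of `yolo <task> predict model=<weights> source=<source> ...`.

    Yields one ultralytics Results object per image or video frame.
    """
    # Deferred import: as a generator, nothing here runs until iteration starts
    from ultralytics import YOLO

    model = YOLO(weights)  # the task (detect, segment, pose, ...) comes from the checkpoint
    # stream=True returns a generator, so long videos are not held in memory
    yield from model.predict(source=source, stream=True, **kwargs)
```

For example, `for r in predict_stream("yolo11x-seg.pt", "./path/to/your/video.mp4", save=True): print(r.speed)` mirrors the segmentation command and prints the per-frame preprocess, inference, and postprocess timings.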

YOLO11 Object Detection

For object detection, we will run this command:

```bash
yolo detect predict model=yolo11x.pt source='./path/to/your/video.mp4' save=True
```

YOLO11 Instance Segmentation

For instance segmentation, run this command:

```bash
yolo segment predict model=yolo11x-seg.pt source='./path/to/your/video.mp4' save=True
```

YOLO11 Pose Estimation

For pose estimation, run:

```bash
yolo pose predict model=yolo11x-pose.pt source='./path/to/your/video.mp4' save=True
```

YOLO11 Image Classification

For image classification, run:

```bash
yolo classify predict model=yolo11x-cls.pt source='./path/to/your/video.mp4' save=True
```

YOLO11 Oriented Object Detection (OBB)

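YOLO11 also supports oriented object detection, which predicts rotated bounding boxes; this suits aerial or satellite imagery, where objects appear at arbitrary angles. Let’s try that out, too: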

```bash
yolo obb predict model=yolo11x-obb.pt source='./path/to/your/video.mp4' save=True
```

PS: You can also adjust the other inference arguments described in the Ultralytics documentation, such as conf (confidence threshold), imgsz (input size), and device.

Also, you don’t need to do all this manually; we’ve provided the Python notebook in the Download Code folder. Just use it and enjoy!

YOLO11 vs YOLO12

Now, to make it more interesting, we will compare the inference results with the newer YOLO12.

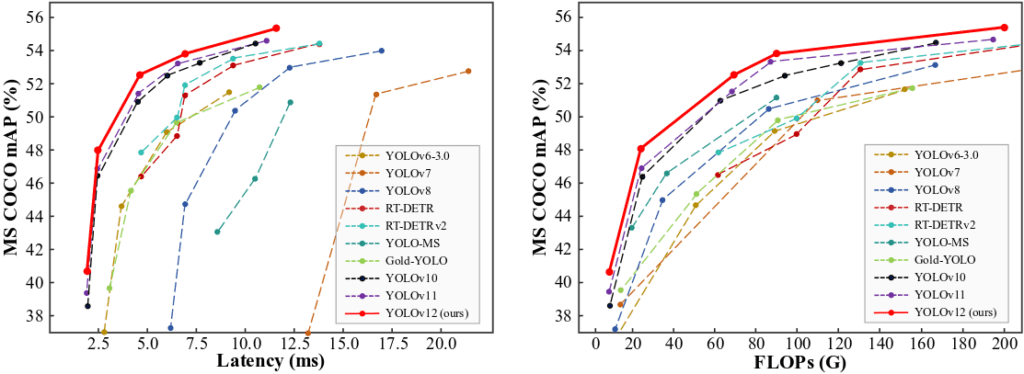

From the plot, it seems that YOLO11 is faster and simpler than YOLO12. So, why not run inference and compare both models?

We are comparing YOLO11x (56.9M params) with YOLO12x (59.1M params). Let’s see the results!

We ran inference on the same video and compared the latency and FPS:

```text
# YOLO11x
YOLO11x summary (fused): 190 layers, 56,919,424 parameters, 0 gradients, 194.9 GFLOPs
Speed: 1.9ms preprocess, 14.6ms inference, 0.7ms postprocess per image
FPS: ~58.14 frames/sec

# YOLO12x
YOLO12x summary (fused): 283 layers, 59,135,744 parameters, 0 gradients, 199.0 GFLOPs
Speed: 1.5ms preprocess, 18.9ms inference, 0.7ms postprocess, per image
FPS: ~47.39 frames/sec
```
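The FPS figures are consistent with 1000 ms divided by the total per-image time: 1000 / (1.9 + 14.6 + 0.7) = 1000 / 17.2 ≈ 58.14 for YOLO11x, and 1000 / 21.1 ≈ 47.39 for YOLO12x. Assuming that is how they were derived, the small helper below reproduces them from the Speed lines; fps_from_speed_line is a hypothetical name.

```python
import re


def fps_from_speed_line(line):
    """Parse a 'Speed: ...ms preprocess, ...ms inference, ...ms postprocess' line.

    Returns 1000 ms divided by the summed per-image times, i.e. end-to-end FPS.
    """
    times_ms = [float(t) for t in re.findall(r"([\d.]+)ms", line)]
    return 1000.0 / sum(times_ms)


yolo11x = "Speed: 1.9ms preprocess, 14.6ms inference, 0.7ms postprocess per image"
yolo12x = "Speed: 1.5ms preprocess, 18.9ms inference, 0.7ms postprocess, per image"
print(f"YOLO11x: {fps_from_speed_line(yolo11x):.2f} FPS")
print(f"YOLO12x: {fps_from_speed_line(yolo12x):.2f} FPS")
```

Most of the gap comes from the inference stage itself (14.6 ms vs. 18.9 ms); preprocessing and postprocessing are nearly identical.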

It’s clear now: on this GPU and video, YOLO11x ran about 23% faster than YOLO12x (58.14 vs. 47.39 FPS) while using about 4% fewer parameters and 2% fewer GFLOPs. How about a real-time camera inference comparison now?

Let’s do that too!

Just look at the top-left corner of the video; you can clearly see the FPS drop with YOLO12. Thus, we can conclude that YOLO11 is still a relevant choice for real-time object detection tasks.

PS: We ran all the inferences on an NVIDIA GeForce RTX 3070 Ti Laptop GPU, and we have provided this script in the Download Code folder as well.

So, we are almost at the end; let’s do a quick recap of what we have learned so far!

Quick Recap

- Lightweight and Efficient: YOLO11 is the lightest and fastest model in the YOLO family. It comes in five sizes (Nano, Small, Medium, Large, and Extra-large) to suit various use cases, from lightweight tasks to high-performance applications.

- New Architectural Blocks: YOLO11 introduces the C3k2 and C2PSA blocks while keeping YOLOv8’s SPPF, making the model more efficient at extracting and processing features and improving attention on key areas of an image.

- Multi-Task Capabilities: In addition to object detection, YOLO11 handles instance segmentation, image classification, pose estimation, and oriented object detection (OBB), making it highly versatile across computer vision tasks.

- Enhanced Attention Mechanisms: The C2PSA block adds self-attention to the architecture, helping YOLO11 focus more effectively on essential regions of the image, which is intended to improve detection accuracy, particularly for complex or occluded objects.

- Speed Advantage: In our direct comparison, YOLO11x, with fewer parameters (56.9M vs. 59.1M) and slightly fewer GFLOPs, ran faster than YOLO12x (about 58 vs. 47 FPS on our GPU), making it a highly efficient model for real-time applications. Note that this comparison measured speed only, not accuracy.

Conclusion

We have explored YOLO11 and its capabilities. We hope you now have a good understanding of the model architecture and the code pipeline. Download the code, build cool projects, and make sure to share them with us!

See you in the next one! 😀
