Surveillance cameras play an essential role in securing homes and businesses. The cameras themselves are affordable, and so is setting up a surveillance system. The difficult and expensive part is monitoring: real-time monitoring usually requires assigning a security guard or a whole team, which is simply not feasible for everyone.

But with the power of Computer Vision and AI, we can build something that is not only cheaper but also more reliable. There are various CV problems in a surveillance system, and Object Tracking is one of them.

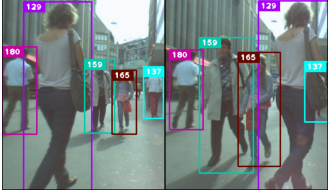

Object tracking is a method of following detected objects across frames using their spatial and temporal features. In this blog post, we will implement one of the most popular tracking algorithms, DeepSORT, together with YOLOv5, and evaluate it on the MOT17 dataset using MOTA and other metrics.

- Introduction to Tracking

- Types of Trackers

- Introduction to DeepSORT

- DeepSORT Implementation

- Evaluation

- Conclusion

- References

1. Introduction to Tracking

Tracking in deep learning is the task of predicting the positions of objects throughout a video using their spatial as well as temporal features. More technically, tracking means taking an initial set of detections, assigning each one a unique ID, and following the objects through the frames of the video while maintaining the assigned IDs. Tracking is generally a two-step process:

- A detection module for target localization: This module detects and localizes the objects in the frame using an object detector such as YOLOv4 or CenterNet.

- A motion predictor: This module predicts the future motion of each object using its past information.

1.1 Need for a Tracker

You may be wondering: why do we even need an object tracker? Why can’t we just use an object detector? These are the right questions to ask, and there are several reasons why a tracker is needed.

- Tracking when object detection fails: There are many cases where an object detector might fail, but a tracker can still predict where the objects are in the frame. For example, consider a video of a motorbike riding through the woods, with a detector applied to detect the motorbike. Whenever the bike is occluded or overlapped by a tree, the detector will fail. With a tracker in place, however, we can still predict the motorbike's position and keep tracking it.

- ID assignment: A detector only outputs the locations of objects. Looking at the arrays of outputs from consecutive frames, we cannot tell which box in one frame corresponds to which box in the next. A tracker, on the other hand, assigns an ID to each object it tracks and maintains that ID for the object's lifetime in the video.

- Real-time predictions: Trackers are fast, often faster than detectors. Because of this, trackers can be used in real-time scenarios and have many real-world applications.

1.2 Applications of Tracking

As discussed in the previous section, object tracking has many real-world applications.

- Traffic monitoring: Trackers can be used to monitor traffic and track vehicles on the road. They can help assess traffic, detect violations, and more, and they can be combined with systems such as Automatic License Plate Recognition (ALPR).

- Sports: Trackers can also be used in sports, for example for ball tracking or player tracking. This in turn can be used to detect fouls, identify scorers in a game, and more.

- Multi-cam surveillance: Tracking can also be applied across multiple cameras. Here, the core idea is re-identification: if a person is tracked with an ID in one camera, leaves that camera's view, and reappears in another camera, they retain the same ID. This helps re-identify objects that reappear in a different camera and can be used for intrusion detection.

2. Types of Trackers

Trackers can be classified in many ways, for example by their tracking method or by the number of objects they track. In this section, we will look at different types of trackers with some examples.

2.1 Single and Multiple Object Trackers

- Single Object Trackers:

These trackers follow only a single object, even if many other objects are present in the frame. They work by first initializing the location of the object in the first frame and then tracking it throughout the sequence of frames. These tracking methods are very fast. Examples built with traditional computer vision include CSRT and KCF. However, deep learning based trackers have proved to be considerably more accurate than traditional ones; SiamRPN and GOTURN are examples of deep learning based single object trackers.

- Multiple Object Trackers:

These trackers can track multiple objects present in a frame. Unlike traditional trackers, multiple object trackers (MOTs) are trained on large amounts of data. As a result, they tend to be more accurate, and they can track multiple objects, even of different classes, at the same time while maintaining high speed. Examples include DeepSORT, JDE, and CenterTrack, which are powerful algorithms that handle many of the challenges trackers face.

2.2 Tracking by Detection and without Detection

- Tracking by Detection:

The type of tracking algorithm in which an object detector detects the objects in each frame, and data association across frames then links those detections into trajectories, thereby tracking the objects. These algorithms can track multiple objects and pick up new objects that enter the frame. Most importantly, they can keep tracking objects even when detection fails for a few frames.

- Tracking without Detection:

The type of tracking algorithm where the coordinates of the object are manually initialized and then the object is tracked in further frames. This type is mostly used in traditional computer vision algorithms as discussed earlier.

3. Introduction to DeepSORT

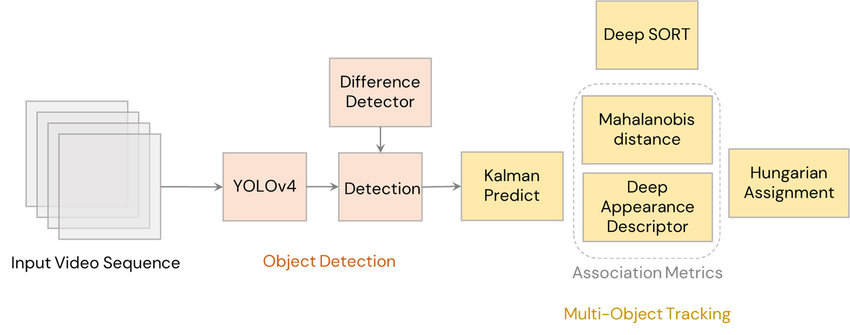

DeepSORT is a computer vision algorithm for tracking objects while assigning an ID to each object. It is an extension of the SORT (Simple Online and Realtime Tracking) algorithm. DeepSORT introduces deep learning into SORT by adding an appearance descriptor that reduces identity switches, making tracking more robust. To understand DeepSORT, let’s first see how the SORT algorithm works.

3.1 Simple Online and Realtime Tracking (SORT)

SORT is an approach to object tracking that uses rudimentary techniques, the Kalman filter and the Hungarian algorithm, to track objects; its authors report accuracy competitive with more complex online trackers. SORT is made up of four key components:

- Detection: This is the first step in the tracking pipeline. An object detector detects the objects in the frame that are to be tracked, and these detections are passed on to the next step. Detectors such as Faster R-CNN and YOLO are most frequently used.

- Estimation: In this step, each target's state is propagated from the current frame to the next, i.e. its position in the next frame is estimated using a constant velocity model. When a detection is associated with a target, the detected bounding box is used to update the target state, and the velocity components are solved optimally via the Kalman filter framework.

- Data association: We now have the predicted target bounding boxes and the detected bounding boxes. A cost matrix is computed as the intersection-over-union (IOU) distance between each detection and all predicted bounding boxes of the existing targets, and the assignment is solved optimally using the Hungarian algorithm. If the IOU between a detection and its assigned target is below a threshold called IOUmin, that assignment is rejected. According to the SORT paper, the IOU distance implicitly handles short-term occlusions caused by passing targets, which helps maintain the IDs.

- Creation and Deletion of Track Identities: This module creates and deletes IDs. A detection whose overlap with every existing target is below IOUmin is treated as a new, untracked object, and a new identity is created for it. Tracks are terminated if they are not detected for TLost frames, where TLost is a configurable number of frames. If an object reappears after its track has been deleted, tracking implicitly resumes under a new identity.
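To make the data association step concrete, here is a minimal sketch of SORT-style matching. It assumes boxes in `[x1, y1, x2, y2]` pixel format and picks IOUmin = 0.3 as an illustrative threshold. It uses SciPy's `linear_sum_assignment`, which solves the same optimal assignment problem as the Hungarian algorithm. The helpers `iou` and `associate` are hypothetical names for this illustration, not functions from the SORT code base.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def iou(box_a, box_b):
    """Intersection-over-union of two boxes given as [x1, y1, x2, y2]."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(detections, predictions, iou_min=0.3):
    """SORT-style matching of detections to predicted track boxes.

    Returns (matches, unmatched_detections, unmatched_tracks), where matches
    is a list of (detection_index, track_index) pairs.
    """
    if len(detections) == 0 or len(predictions) == 0:
        return [], list(range(len(detections))), list(range(len(predictions)))
    iou_matrix = np.array([[iou(d, p) for p in predictions] for d in detections])
    # linear_sum_assignment minimises total cost, so use (1 - IOU) as the cost.
    det_idx, trk_idx = linear_sum_assignment(1.0 - iou_matrix)
    matches = []
    unmatched_dets = set(range(len(detections)))
    unmatched_trks = set(range(len(predictions)))
    for d, t in zip(det_idx, trk_idx):
        if iou_matrix[d, t] >= iou_min:  # reject assignments below IOUmin
            matches.append((int(d), int(t)))
            unmatched_dets.discard(int(d))
            unmatched_trks.discard(int(t))
    return matches, sorted(unmatched_dets), sorted(unmatched_trks)


dets = [[0, 0, 10, 10], [50, 50, 60, 60], [100, 100, 110, 110]]
preds = [[1, 1, 11, 11], [51, 49, 61, 59]]
matches, new_dets, lost_trks = associate(dets, preds)
print(matches, new_dets, lost_trks)  # [(0, 0), (1, 1)] [2] []
```

In this picture, unmatched detections are candidates for new identities, and unmatched tracks are the ones whose "frames since last detection" counter would move toward TLost.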

Objects can be tracked successfully with SORT, which at the time of publication was competitive with many state-of-the-art online trackers. The detector gives us detections, the Kalman filter gives us tracks, and the Hungarian algorithm performs data association. So why do we even need DeepSORT? Let’s look at that in the next section.
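The estimation step can be illustrated with a stripped-down constant-velocity Kalman filter. This is a simplified sketch, not SORT's implementation: SORT's state is [u, v, s, r, u̇, v̇, ṡ] (box centre, scale, aspect ratio, and their velocities), whereas the hypothetical `ConstantVelocityKF` class below tracks only the box centre and its velocity. The noise values are made-up assumptions chosen for illustration.

```python
import numpy as np


class ConstantVelocityKF:
    """Minimal constant-velocity Kalman filter for a box centre.

    State: [x, y, vx, vy]; measurement: [x, y]; time step: 1 frame.
    """

    def __init__(self, x, y, q=1e-2, r=1.0):
        self.state = np.array([x, y, 0.0, 0.0])
        self.P = np.eye(4) * 10.0  # initial state uncertainty (assumed)
        self.F = np.array([[1, 0, 1, 0],   # x <- x + vx
                           [0, 1, 0, 1],   # y <- y + vy
                           [0, 0, 1, 0],   # vx stays constant
                           [0, 0, 0, 1]],  # vy stays constant
                          dtype=float)
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)  # we only observe x, y
        self.Q = np.eye(4) * q  # process noise (assumed)
        self.R = np.eye(2) * r  # measurement noise (assumed)

    def predict(self):
        """Propagate the state one frame ahead and return the predicted centre."""
        self.state = self.F @ self.state
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.state[:2].copy()

    def update(self, zx, zy):
        """Correct the state with an associated detection centre (zx, zy)."""
        z = np.array([zx, zy])
        innovation = z - self.H @ self.state
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.state = self.state + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P


# An object moving +2 px/frame in x and +1 px/frame in y.
kf = ConstantVelocityKF(0.0, 0.0)
for t in range(1, 20):
    kf.predict()
    kf.update(2.0 * t, 1.0 * t)
print(kf.predict())  # close to [40, 20], the position in frame 20
```

If a detection is missed, one would simply call `predict()` without `update()`, which is how a tracker can keep estimating an object's position through short detection failures.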

3.2 DeepSORT

SORT performs well in terms of tracking precision and accuracy. However, it produces a high number of ID switches and fails under occlusion. This is because of the association metric it uses, which relies on motion alone and is only accurate when the uncertainty of the state estimate is low. DeepSORT replaces it with a better association metric that combines both motion and appearance information. In other words, DeepSORT tracks objects based not only on their velocity and motion but also on their appearance.

For this purpose, a well-discriminating feature embedding is trained offline, before tracking. The network is trained on a large-scale person re-identification dataset (MARS), which makes it well suited to tracking people. The deep association metric model in DeepSORT is trained with a cosine metric learning approach. According to DeepSORT’s paper, “The cosine distance considers appearance information that is particularly useful to recover identities after long-term occlusions when motion is less discriminative.” In other words, the cosine distance helps the tracker recover identities after long-term occlusions, where motion estimation alone fails. These simple additions make the tracker considerably more powerful and accurate.
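The appearance part of the association can be sketched as a nearest-neighbour cosine distance: each track keeps a small gallery of past appearance vectors, and a detection's distance to that track is the smallest cosine distance to any vector in the gallery. This mirrors the idea behind the `NearestNeighborDistanceMetric("cosine", ...)` used in the tracking script later in this post, but the helpers below are hypothetical and omit DeepSORT's motion (Mahalanobis) gating. The 0.4 threshold matches `max_cosine_distance` in that script.

```python
import numpy as np


def nn_cosine_distance(track_features, detection_features):
    """Smallest cosine distance from each detection to one track's gallery.

    track_features: (M, D) appearance vectors stored for one track.
    detection_features: (N, D) appearance vectors of the current detections.
    Returns an (N,) array of distances in [0, 2].
    """
    a = track_features / np.linalg.norm(track_features, axis=1, keepdims=True)
    b = detection_features / np.linalg.norm(detection_features, axis=1, keepdims=True)
    distances = 1.0 - b @ a.T      # (N, M) pairwise cosine distances
    return distances.min(axis=1)   # nearest neighbour in the gallery


def appearance_cost_matrix(galleries, detection_features, max_distance=0.4):
    """Cost matrix with rows = tracks and columns = detections.

    Also returns a boolean mask of pairs whose distance is within max_distance;
    pairs outside the mask would not be allowed to match.
    """
    cost = np.stack([nn_cosine_distance(g, detection_features) for g in galleries])
    allowed = cost <= max_distance
    return cost, allowed


galleries = [np.array([[1.0, 0.0], [0.9, 0.1]]),  # track 0 looks "horizontal"
             np.array([[0.0, 1.0]])]              # track 1 looks "vertical"
dets = np.array([[1.0, 0.05], [0.0, 2.0]])
cost, allowed = appearance_cost_matrix(galleries, dets)
print(allowed)  # detection 0 may match track 0, detection 1 may match track 1
```

Because the vectors are normalised first, only the direction of an embedding matters, not its magnitude; this is why the second detection `[0, 2]` is at distance 0 from track 1.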

4. DeepSORT Implementation

As discussed earlier, DeepSORT can be used in various real-life applications, one of which is sports. In this section, we will apply DeepSORT to sports footage: a football match and a 100m race. Along with DeepSORT, YOLOv5 will be used as the detector. The code was run on Google Colab with a Tesla T4 GPU.

Note that this pipeline uses YOLOv5, an anchor-based detector; FairMOT, by contrast, follows an anchor-free MOT approach.

4.1 YOLOv5 Implementation

First, we clone the official YOLOv5 repository to access its functionality and pre-trained weights.

```
!mkdir ./yolov5_deepsort
!git clone https://github.com/ultralytics/yolov5.git
```

Next, we install the requirements.

```
%cd ./yolov5
!pip install -r requirements.txt
```

Now that YOLOv5 is ready, let’s integrate DeepSORT with it.

4.2 Integrating DeepSORT

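Again, we clone the official implementation of DeepSORT to access its code and functionality.

```
!git clone https://github.com/nwojke/deep_sort.git
```

Finally, everything is in place! But how do we integrate DeepSORT with the detector? YOLOv5's detect.py file is responsible for inference, so we take that file and add the DeepSORT functionality to it in a new file, detect_track.py. The script imports helpers such as `scale_coords` and `utils.dataloaders`, which match YOLOv5 releases from around mid-2022; later releases renamed some of them (for example, `scale_coords` became `scale_boxes`), so you may need to check out an older YOLOv5 tag if the imports fail.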

```
%%writefile detect_track.py
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license.
"""
Run inference on images, videos, directories, streams, etc.

Usage - sources:
    $ python path/to/detect_track.py --weights yolov5s.pt --source 0         # webcam
                                                                 img.jpg        # image
                                                                 vid.mp4        # video
                                                                 path/          # directory
                                                                 path/*.jpg     # glob
                                                                 'https://youtu.be/Zgi9g1ksQHc'  # YouTube
                                                                 'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream
"""

import argparse
import os
import sys
from pathlib import Path

import torch
import torch.backends.cudnn as cudnn

FILE = Path(__file__).resolve()
ROOT = FILE.parents[0]  # YOLOv5 root directory.
if str(ROOT) not in sys.path:
    sys.path.append(str(ROOT))  # Add ROOT to PATH.
ROOT = Path(os.path.relpath(ROOT, Path.cwd()))  # Relative.

# DeepSORT -> Importing DeepSORT.
from deep_sort.application_util import preprocessing
from deep_sort.deep_sort import nn_matching
from deep_sort.deep_sort.detection import Detection
from deep_sort.deep_sort.tracker import Tracker
from deep_sort.tools import generate_detections as gdet

from models.common import DetectMultiBackend
from utils.dataloaders import IMG_FORMATS, VID_FORMATS, LoadImages, LoadStreams
from utils.general import (LOGGER, check_file, check_img_size, check_imshow, check_requirements, colorstr, cv2,
                           increment_path, non_max_suppression, print_args, scale_coords, strip_optimizer, xyxy2xywh)
from utils.plots import Annotator, colors, save_one_box
from utils.torch_utils import select_device, time_sync


@torch.no_grad()
def run(
        weights=ROOT / 'yolov5s.pt',  # model.pt path(s).
        source=ROOT / 'data/images',  # file/dir/URL/glob, 0 for webcam.
        data=ROOT / 'data/coco128.yaml',  # dataset.yaml path.
        imgsz=(640, 640),  # Inference size (height, width).
        conf_thres=0.25,  # Confidence threshold.
        iou_thres=0.45,  # NMS IOU threshold.
        max_det=1000,  # Maximum detections per image.
        device='',  # Cuda device, i.e. 0 or 0,1,2,3 or cpu.
        view_img=False,  # Show results.
        save_txt=False,  # Save results to *.txt.
        save_conf=False,  # Save confidences in --save-txt labels.
        save_crop=False,  # Save cropped prediction boxes.
        nosave=False,  # Do not save images/videos.
        classes=None,  # Filter by class: --class 0, or --class 0 2 3.
        agnostic_nms=False,  # Class-agnostic NMS.
        augment=False,  # Augmented inference.
        visualize=False,  # Visualize features.
        update=False,  # Update all models.
        project=ROOT / 'runs/detect',  # Save results to project/name.
        name='exp',  # Save results to project/name.
        exist_ok=False,  # Existing project/name ok, do not increment.
        line_thickness=3,  # Bounding box thickness (pixels).
        hide_labels=False,  # Hide labels.
        hide_conf=False,  # Hide confidences.
        half=False,  # Use FP16 half-precision inference.
        dnn=False,  # Use OpenCV DNN for ONNX inference.
):
    source = str(source)
    save_img = not nosave and not source.endswith('.txt')  # Save inference images.
    is_file = Path(source).suffix[1:] in (IMG_FORMATS + VID_FORMATS)
    is_url = source.lower().startswith(('rtsp://', 'rtmp://', 'http://', 'https://'))
    webcam = source.isnumeric() or source.endswith('.txt') or (is_url and not is_file)
    if is_url and is_file:
        source = check_file(source)  # Download.

    # DeepSORT -> Initializing tracker.
    max_cosine_distance = 0.4
    nn_budget = None
    model_filename = './model_data/mars-small128.pb'  # Pre-trained appearance model.
    encoder = gdet.create_box_encoder(model_filename, batch_size=1)
    metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)
    tracker = Tracker(metric)

    # Directories: output videos and tracking results all go to ./runs/.
    save_dir = Path.cwd() / 'runs'
    save_dir.mkdir(parents=True, exist_ok=True)
    print(save_dir)
    # Original YOLOv5 behaviour (a new project/name folder per run), kept for reference:
    # save_dir = increment_path(Path(project) / name, exist_ok=exist_ok)  # increment run
    # (save_dir / 'labels' if save_txt else save_dir).mkdir(parents=True, exist_ok=True)  # make dir

    # Load model.
    device = select_device(device)
    model = DetectMultiBackend(weights, device=device, dnn=dnn, data=data, fp16=half)
    stride, names, pt = model.stride, model.names, model.pt
    imgsz = check_img_size(imgsz, s=stride)  # Check image size.

    # Dataloader.
    if webcam:
        view_img = check_imshow()
        cudnn.benchmark = True  # Set True to speed up constant image size inference.
        dataset = LoadStreams(source, img_size=imgsz, stride=stride, auto=pt)
        bs = len(dataset)  # batch_size.
    else:
        dataset = LoadImages(source, img_size=imgsz, stride=stride, auto=pt)
        bs = 1  # batch_size.
    vid_path, vid_writer = [None] * bs, [None] * bs

    # Run inference.
    model.warmup(imgsz=(1 if pt else bs, 3, *imgsz))  # Warmup.
    dt, seen = [0.0, 0.0, 0.0], 0
    frame_idx = 0
    for path, im, im0s, vid_cap, s in dataset:
        t1 = time_sync()
        im = torch.from_numpy(im).to(device)
        im = im.half() if model.fp16 else im.float()  # uint8 to fp16/32.
        im /= 255  # 0 - 255 to 0.0 - 1.0.
        if len(im.shape) == 3:
            im = im[None]  # Expand for batch dim.
        t2 = time_sync()
        dt[0] += t2 - t1

        # Inference.
        visualize = increment_path(save_dir / Path(path).stem, mkdir=True) if visualize else False
        pred = model(im, augment=augment, visualize=visualize)
        t3 = time_sync()
        dt[1] += t3 - t2

        # NMS.
        pred = non_max_suppression(pred, conf_thres, iou_thres, classes, agnostic_nms, max_det=max_det)
        dt[2] += time_sync() - t3

        # Second-stage classifier (optional).
        # pred = utils.general.apply_classifier(pred, classifier_model, im, im0s)

        frame_idx += 1  # Frame numbers in the results file start at 1.

        # Process predictions.
        for i, det in enumerate(pred):  # Per image.
            seen += 1
            if webcam:  # batch_size >= 1
                p, im0, frame = path[i], im0s[i].copy(), dataset.count
                s += f'{i}: '
            else:
                p, im0, frame = path, im0s.copy(), getattr(dataset, 'frame', 0)

            p = Path(p)  # To Path.
            save_path = str(save_dir / p.name)  # im.jpg
            txt_path = str(save_dir / p.stem)  # im.txt
            s += '%gx%g ' % im.shape[2:]  # Print string.
            gn = torch.tensor(im0.shape)[[1, 0, 1, 0]]  # Normalization gain whwh.
            imc = im0.copy() if save_crop else im0  # For save_crop.
            annotator = Annotator(im0, line_width=line_thickness, example=str(names))

            # DeepSORT -> Extracting bounding boxes (top-left x, y, width, height) and confidence scores.
            bboxes = []
            scores = []
            if len(det):
                # Rescale boxes from img_size to im0 size.
                det[:, :4] = scale_coords(im.shape[2:], det[:, :4], im0.shape).round()

                # Print results.
                for c in det[:, -1].unique():
                    n = (det[:, -1] == c).sum()  # Detections per class.
                    s += f"{n} {names[int(c)]}{'s' * (n > 1)}, "  # Add to string.

                for *boxes, conf, cls in det:
                    bbox_left = min(boxes[0].item(), boxes[2].item())
                    bbox_top = min(boxes[1].item(), boxes[3].item())
                    bbox_w = abs(boxes[0].item() - boxes[2].item())
                    bbox_h = abs(boxes[1].item() - boxes[3].item())
                    bboxes.append([bbox_left, bbox_top, bbox_w, bbox_h])
                    scores.append(conf.item())

            # DeepSORT -> Getting appearance features of the objects.
            features = encoder(im0, bboxes) if bboxes else []

            # DeepSORT -> Storing all the required info in a list.
            detections = [Detection(bbox, score, feature) for bbox, score, feature in zip(bboxes, scores, features)]

            # DeepSORT -> Predicting and updating tracks on every frame, even without detections,
            # so that unmatched tracks age and are eventually deleted.
            tracker.predict()
            tracker.update(detections)

            # DeepSORT -> Plotting the tracks.
            for track in tracker.tracks:
                if not track.is_confirmed() or track.time_since_update > 1:
                    continue
                # DeepSORT -> Changing track bbox to top left, bottom right coordinates.
                bbox = list(track.to_tlbr())

                # DeepSORT -> Writing track bounding box and ID on the frame using OpenCV.
                txt = 'id:' + str(track.track_id)
                (label_width, label_height), baseline = cv2.getTextSize(txt, cv2.FONT_HERSHEY_SIMPLEX, 1, 1)
                top_left = (int(bbox[0]), int(bbox[1]) - (label_height + baseline))
                top_right = (int(bbox[0]) + label_width, int(bbox[1]))
                org = (int(bbox[0]), int(bbox[1]) - baseline)
                cv2.rectangle(im0, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])), (255, 0, 0), 1)
                cv2.rectangle(im0, top_left, top_right, (255, 0, 0), -1)
                cv2.putText(im0, txt, org, cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 1)

                # DeepSORT -> Saving track predictions to a text file, one line per track:
                # frame, id, bb_left, bb_top, bb_width, bb_height, conf, x, y, z.
                save_format = '{frame},{id},{x1},{y1},{w},{h},1,-1,-1,-1\n'
                with open(txt_path + '.txt', 'a') as f:
                    f.write(save_format.format(frame=frame_idx, id=track.track_id,
                                               x1=int(bbox[0]), y1=int(bbox[1]),
                                               w=int(bbox[2] - bbox[0]), h=int(bbox[3] - bbox[1])))

            # Stream results.
            im0 = annotator.result()

            # Save results (image with detections and tracks).
            if save_img:
                if dataset.mode == 'image':
                    cv2.imwrite(save_path, im0)
                else:  # 'video' or 'stream'
                    if vid_path[i] != save_path:  # New video.
                        vid_path[i] = save_path
                        if isinstance(vid_writer[i], cv2.VideoWriter):
                            vid_writer[i].release()  # Release previous video writer.
                        if vid_cap:  # Video.
                            fps = vid_cap.get(cv2.CAP_PROP_FPS)
                            w = int(vid_cap.get(cv2.CAP_PROP_FRAME_WIDTH))
                            h = int(vid_cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
                        else:  # Stream.
                            fps, w, h = 30, im0.shape[1], im0.shape[0]
                        save_path = str(Path(save_path).with_suffix('.mp4'))  # Force *.mp4 suffix on results videos.
                        vid_writer[i] = cv2.VideoWriter(save_path, cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))
                    vid_writer[i].write(im0)

        # Print time (inference-only).
        LOGGER.info(f'{s}Done. ({t3 - t2:.3f}s)')

    # Print results.
    t = tuple(x / seen * 1E3 for x in dt)  # Speeds per image.
    LOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {(1, 3, *imgsz)}' % t)
    if update:
        strip_optimizer(weights)  # Update model (to fix SourceChangeWarning).


def parse_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', nargs='+', type=str, default=ROOT / 'yolov5s.pt', help='model path(s)')
    parser.add_argument('--source', type=str, default=ROOT / 'data/images', help='file/dir/URL/glob, 0 for webcam')
    parser.add_argument('--data', type=str, default=ROOT / 'data/coco128.yaml', help='(optional) dataset.yaml path')
    parser.add_argument('--imgsz', '--img', '--img-size', nargs='+', type=int, default=[640], help='inference size h,w')
    parser.add_argument('--conf-thres', type=float, default=0.25, help='confidence threshold')
    parser.add_argument('--iou-thres', type=float, default=0.45, help='NMS IoU threshold')
    parser.add_argument('--max-det', type=int, default=1000, help='maximum detections per image')
    parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--view-img', action='store_true', help='show results')
    parser.add_argument('--save-txt', action='store_true', help='save results to *.txt')
    parser.add_argument('--save-conf', action='store_true', help='save confidences in --save-txt labels')
    parser.add_argument('--save-crop', action='store_true', help='save cropped prediction boxes')
    parser.add_argument('--nosave', action='store_true', help='do not save images/videos')
    parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --classes 0, or --classes 0 2 3')
    parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
    parser.add_argument('--augment', action='store_true', help='augmented inference')
    parser.add_argument('--visualize', action='store_true', help='visualize features')
    parser.add_argument('--update', action='store_true', help='update all models')
    parser.add_argument('--project', default=ROOT / 'runs/detect', help='save results to project/name')
    parser.add_argument('--name', default='exp', help='save results to project/name')
    parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
    parser.add_argument('--line-thickness', default=3, type=int, help='bounding box thickness (pixels)')
    parser.add_argument('--hide-labels', default=False, action='store_true', help='hide labels')
    parser.add_argument('--hide-conf', default=False, action='store_true', help='hide confidences')
    parser.add_argument('--half', action='store_true', help='use FP16 half-precision inference')
    parser.add_argument('--dnn', action='store_true', help='use OpenCV DNN for ONNX inference')
    opt = parser.parse_args()
    opt.imgsz *= 2 if len(opt.imgsz) == 1 else 1  # Expand.
    print_args(vars(opt))
    return opt


def main(opt):
    check_requirements(exclude=('tensorboard', 'thop'))
    run(**vars(opt))


if __name__ == "__main__":
    opt = parse_opt()
    main(opt)
```

With just a few extra lines in the detector code, we have integrated DeepSORT and it is ready to use. Following YOLOv5's convention, the results are saved in a folder called runs, and the tracker results and output videos are saved there as well. Note that the tracker loads its pre-trained appearance model from ./model_data/mars-small128.pb, so that file must be in place before running the script; the deep_sort repository's README describes where to obtain it. Let’s run this script and see how it performs.

4.3 Inference

As discussed earlier, this tracker will be tested on sports scenarios. The detect_track.py script accepts many arguments; these are the ones we use:

- --weights: The name of the weights file, which is downloaded automatically. We will use the medium network, YOLOv5m.

- --img: Specifies the image size; the default is 640.

- --source: Specifies the path to the image or video file, directory, webcam, or link.

- --classes: Specifies the indexes of the classes to keep, for example 0 for person and 32 for sports ball. Refer to yolov5/data/coco.yaml for more classes.

- --line-thickness: Specifies the bounding box thickness.

First off, let’s test it on the following video.

```
!python detect_track.py --weights yolov5m.pt --img 640 --source ./football-video.mp4 --classes 0 32 --line-thickness 1
```

Wow! That was fast. Inference took about 20.6 ms per frame, which works out to roughly 48 FPS for the detector alone (the logged time does not include DeepSORT's feature extraction and tracking). The output looks pretty good too, as can be seen below.

The detector has done its job well. However, occlusion is not handled well for either the ball or the players, and there are many ID switches. The players are tracked reasonably well, but because of motion blur, the ball is often not even detected, let alone tracked properly. Maybe DeepSORT will perform better on a less crowded video.

Let’s test it on another sports video that is of a sprint race.

```
!python detect_track.py --weights yolov5m.pt --img 640 --source ./sprint.mp4 --save-txt --classes 0 --line-thickness 1
```

The speed remains similar, at around 52 FPS. The output is also quite accurate: there are far fewer ID switches, but the tracker still fails to maintain IDs during occlusion. The output can be viewed below.

From these results, we can conclude that DeepSORT does not handle occlusion well, which leads to ID switches.

5. Evaluation

Evaluation plays a big role when experimenting with and testing new methods. For DeepSORT, we will judge performance using standard metrics. Since DeepSORT is a multi-object tracking algorithm, we need dedicated metrics and benchmark datasets. We will use the CLEAR MOT metrics to judge the performance of our DeepSORT pipeline on the MOT17 dataset. Let’s dive deeper into these in the following sections.

5.1 MOT Challenge benchmark

The MOT Challenge benchmark is a framework that provides a large collection of datasets with challenging real-world sequences, accurate annotations, and many metrics. It covers various tasks, such as tracking persons and other objects, in 2D and 3D. Several benchmark releases have been introduced over the years to measure the performance of multiple object trackers, including MOT15, MOT16, MOT17, and MOT20:

- MOT15, along with numerous state-of-the-art results that were submitted in the last years

- MOT16, which contains new challenging videos

- MOT17, which extends MOT16 sequences with more precise labels

- MOT20, which contains videos from the top-down view

For our evaluation, we will be using a subset of MOT17 dataset.

Dataset format:

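All of the dataset variants share the same format, which provides:

- Video sequences, stored as individual image frames

- seqinfo.ini – metadata about the sequence, such as frame rate, length, and image resolution

- gt.txt – ground-truth tracking annotations

- det.txt – public detections produced by an object detector (for MOT17: DPM, Faster R-CNN, or SDP), not ground truth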
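The seqinfo.ini file is a small INI file, so Python's standard `configparser` module can read it. The sketch below assumes the usual `[Sequence]` section with keys such as `frameRate`, `seqLength`, `imWidth` and `imHeight`; `parse_seqinfo` is a hypothetical helper, and the sample values are illustrative rather than taken from a specific sequence. Knowing the image resolution becomes useful later, when the annotations have to be matched to resized videos.

```python
import configparser


def parse_seqinfo(text):
    """Return the main [Sequence] fields of a MOT seqinfo.ini as a dict."""
    config = configparser.ConfigParser()
    config.read_string(text)
    seq = config["Sequence"]  # key lookups are case-insensitive
    return {
        "name": seq.get("name"),
        "frame_rate": seq.getint("frameRate"),
        "length": seq.getint("seqLength"),
        "width": seq.getint("imWidth"),
        "height": seq.getint("imHeight"),
    }


sample = """[Sequence]
name=example-sequence
imDir=img1
frameRate=30
seqLength=600
imWidth=1920
imHeight=1080
imExt=.jpg
"""
print(parse_seqinfo(sample))
```

For a real sequence, you would pass the file's contents, e.g. `parse_seqinfo(Path("<sequence>/seqinfo.ini").read_text())`, where the directory layout is an assumption about where you extracted the dataset.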

Output format

To be evaluated, the tracker output must follow a particular format, i.e. frame,id,x1,y1,w,h,1,-1,-1,-1, where:

- frame: frame number

- id: ID of the tracked object

- x1, y1: top-left coordinates of the bounding box

- w, h: width and height of the bounding box

- 1, -1, -1, -1: a confidence value followed by placeholders for the unused x, y, z world coordinates
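In the tracking script above, each confirmed track is written as one comma-separated line, with the box stored as its top-left corner plus width and height. The hypothetical helper `to_mot_line` below shows that conversion in isolation: it takes a box in top-left/bottom-right form (as returned by `track.to_tlbr()`), converts it to left, top, width, height, and appends a confidence of 1 followed by -1 placeholders. It is a sketch; check the exact column layout your evaluation script expects before relying on it.

```python
def to_mot_line(frame, track_id, x1, y1, x2, y2, conf=1):
    """Format one tracking result as frame,id,left,top,width,height,conf,x,y,z."""
    width = x2 - x1
    height = y2 - y1
    return f"{frame},{track_id},{x1:.2f},{y1:.2f},{width:.2f},{height:.2f},{conf},-1,-1,-1"


print(to_mot_line(1, 3, 10, 20, 110, 220))  # 1,3,10.00,20.00,100.00,200.00,1,-1,-1,-1
```

Keeping two decimals instead of truncating to integers (as the script does with `int()`) avoids losing up to a pixel per coordinate; either choice works as long as the evaluator parses the values as numbers.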

Ground Truth format

We will be needing gt.txt file from the annotation zip file for evaluation. It can be downloaded from here.

The format is as follows: frame, ID, bounding box, consider flag, class, visibility.

- Frame: frame number in the video

- ID: ID of the tracked object

- bbox: bounding box of the object, given as left, top, width, height

- Consider flag: whether the entry should be considered during evaluation; 0 means ignore

- Class: object class, such as pedestrian, car, or static person

- Visibility: the fraction of the object that is visible (from 0 to 1), i.e. how much it is occluded or cut off by other objects or the image border
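Here is a sketch of reading gt.txt with Python's standard library. It assumes the nine-column MOT17 layout (frame, id, left, top, width, height, consider flag, class, visibility) and that class 1 denotes pedestrians; `GTBox` and `load_gt` are hypothetical names, and the sample lines are made up.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class GTBox:
    frame: int
    track_id: int
    left: float
    top: float
    width: float
    height: float
    consider: bool     # the file stores 0 for "ignore", 1 for "consider"
    cls: int
    visibility: float  # fraction of the object that is visible, 0..1


def load_gt(text, pedestrian_class=1):
    """Parse gt.txt content, keeping only considered pedestrian boxes."""
    boxes = []
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        box = GTBox(int(row[0]), int(row[1]), float(row[2]), float(row[3]),
                    float(row[4]), float(row[5]), int(row[6]) != 0,
                    int(row[7]), float(row[8]))
        if box.consider and box.cls == pedestrian_class:
            boxes.append(box)
    return boxes


sample = ("1,1,100,200,50,120,1,1,1.0\n"
          "1,2,300,200,40,100,0,1,0.8\n"   # ignored entry
          "2,1,104,201,50,120,1,1,0.75\n"
          "2,3,500,100,80,60,1,3,1.0\n")   # not a pedestrian
print([(b.frame, b.track_id) for b in load_gt(sample)])
```

Filtering on the consider flag and the class mirrors how the benchmark treats these columns: ignored or non-pedestrian boxes should neither reward nor penalise a person tracker.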

5.2 ClearMOT metrics

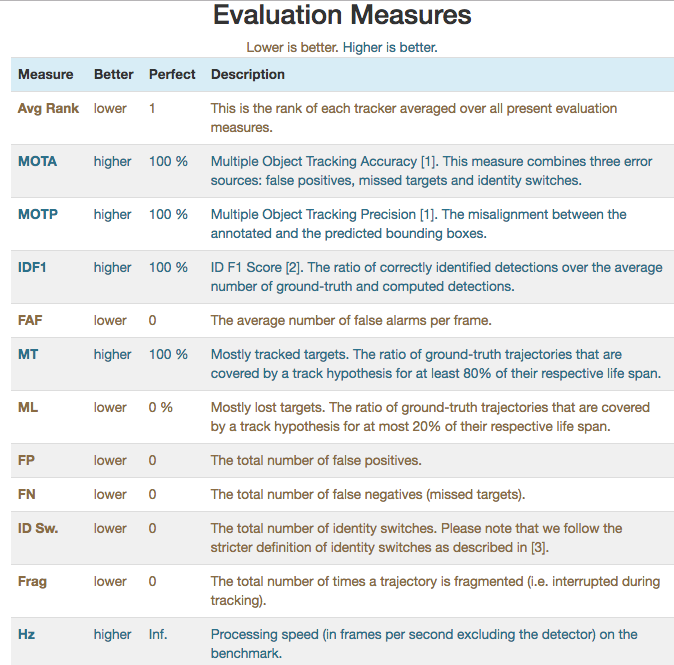

CLEAR MOT is a framework for evaluating tracker performance across different criteria. A total of 8 different metrics are given to evaluate object detection, localization, and tracking performance. It also introduced two metrics that have since become standard:

- Multiple Object Tracking Precision (MOTP)

- Multiple Object Tracking Accuracy (MOTA)

These metrics help evaluate a tracker’s overall strengths and judge its general performance. Other measures are as follows:

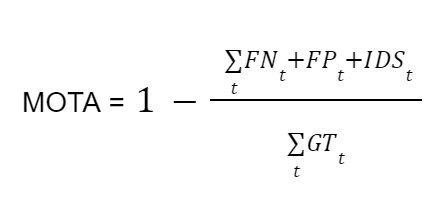

For person tracking, we will evaluate performance based on MOTA, which combines three kinds of error: false positives, misses (false negatives), and identity switches. MOTA (Multiple Object Tracking Accuracy) is calculated as:

$$\text{MOTA} = 1 - \frac{\sum_t \left(\text{FN}_t + \text{FP}_t + \text{IDS}_t\right)}{\sum_t \text{GT}_t}$$

where FN_t is the number of false negatives, FP_t the number of false positives, IDS_t the number of identity switches, and GT_t the number of ground-truth objects at time t. Because the total number of errors can exceed the number of ground-truth objects, MOTA can be negative.
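MOTA is one minus the ratio of all errors (false negatives, false positives, and identity switches, summed over frames) to the total number of ground-truth objects. As a quick numerical check, the hypothetical `mota` function below computes it from per-frame counts; the second example shows how MOTA becomes negative when errors outnumber the ground-truth objects.

```python
def mota(fn, fp, ids, gt):
    """MOTA from per-frame lists of FN, FP, IDS and GT counts."""
    total_gt = sum(gt)
    if total_gt == 0:
        raise ValueError("MOTA is undefined when there are no ground-truth objects")
    return 1.0 - (sum(fn) + sum(fp) + sum(ids)) / total_gt


# Three frames with 10 ground-truth objects each and 8 errors in total.
print(mota(fn=[1, 2, 0], fp=[0, 1, 3], ids=[0, 1, 0], gt=[10, 10, 10]))  # 1 - 8/30
# 60 false positives against 30 ground-truth objects gives a negative score.
print(mota(fn=[0], fp=[60], ids=[0], gt=[30]))  # -1.0
```

Note that MOTA weights all three error types equally, so a detector that produces many false positives can drag the score down even if identities are maintained perfectly.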

5.3 Evaluating Performance

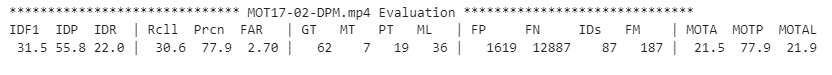

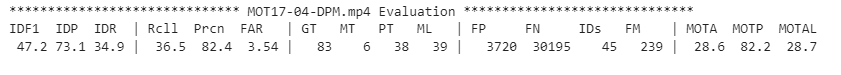

As mentioned earlier, MOT17 will be used for testing; you can download the video sequences from here. Let’s run the sequences one by one.

```
!python detect_track.py --weights yolov5m.pt --img 640 --source ./videos/MOT17-02-DPM.mp4 --save-txt --classes 0 --line-thickness 1
!python detect_track.py --weights yolov5m.pt --img 640 --source ./videos/MOT17-04-DPM.mp4 --save-txt --classes 0 --line-thickness 1
!python detect_track.py --weights yolov5m.pt --img 640 --source ./videos/MOT17-10-DPM.mp4 --save-txt --classes 0 --line-thickness 1
```

To evaluate the performance, we clone the following repository:

```
!git clone https://github.com/shenh10/mot_evaluation.git
%cd ./mot_evaluation
```

The videos have a resolution of 960×540, while the annotations are at 1920×1080, so the annotations need to be rescaled to match the video resolution. You can directly download the resized ground-truth annotations from here.
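If you prefer to rescale the annotations yourself, a minimal sketch looks like this. It assumes the MOT gt.txt layout, where columns 3 to 6 hold left, top, width, and height in pixels, and scales them from 1920×1080 to 960×540, a factor of 0.5 in each direction. It ignores any half-pixel offset conventions, and `rescale_mot_line` and `rescale_gt` are hypothetical helper names.

```python
def rescale_mot_line(line, sx, sy):
    """Scale the left, top, width, height columns of one MOT line."""
    fields = line.strip().split(",")
    left, top, width, height = map(float, fields[2:6])
    fields[2:6] = [f"{left * sx:.2f}", f"{top * sy:.2f}",
                   f"{width * sx:.2f}", f"{height * sy:.2f}"]
    return ",".join(fields)


def rescale_gt(text, src_size=(1920, 1080), dst_size=(960, 540)):
    """Rescale every non-empty line of gt.txt content from src_size to dst_size."""
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    return "\n".join(rescale_mot_line(line, sx, sy)
                     for line in text.splitlines() if line.strip())


print(rescale_gt("1,2,100,200,50,120,1,1,0.5"))  # 1,2,50.00,100.00,25.00,60.00,1,1,0.5
```

All other columns (frame, ID, flags, class, visibility) are copied unchanged, since they do not depend on the image resolution.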

We will use the evaluate_tracking.py file in mot_evaluation to evaluate the results. This script takes three arguments:

- --seqmap: Specifies the video filenames to be evaluated.

- --track: Specifies the path to the tracking result folders.

- --gt: Specifies the ground truth file path.

```
!python evaluate_tracking.py --seqmap './MOT17/videos' --track './yolov5/runs/' --gt './label_960x540'
```

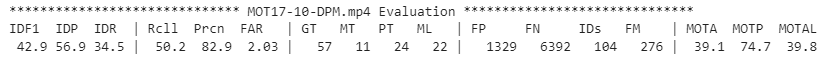

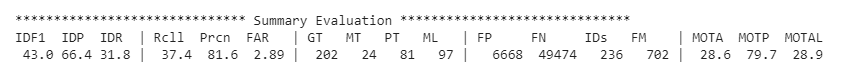

This reports the metrics for each of the three videos, along with their average. Here are the results.

6. Conclusion

DeepSORT performed fairly well visually. However, the metrics tell a less encouraging story. DeepSORT has several drawbacks, such as ID switches and poor occlusion handling, and the detector struggles with motion blur. The average MOTA we obtained is 28.6, which is low. On the positive side, the pipeline is fast. Newer algorithms such as FairMOT and CenterTrack address many of these issues and are reported to reduce ID switches and handle occlusions better. In the meantime, experiment with DeepSORT a bit more: try different scenarios, use other variants of the YOLOv5 model, or plug DeepSORT into your own custom object detector. Feel free to share your findings with us.

7. References

- DeepSORT: arXiv:1703.07402

- YOLOv5: https://github.com/ultralytics/yolov5

- ClearMOT: Bernardin, K., Stiefelhagen, R. Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics. J Image Video Proc 2008, 246309 (2008). https://doi.org/10.1155/2008/246309

- MOT17 dataset: arXiv:1810.11780, https://motchallenge.net/

- MOT evaluation code: https://github.com/shenh10/mot_evaluation.git

- DeepSORT feature image: Freepik.com

- Video-1/Football tracking demo image: https://youtu.be/QXhV148EryQ

- Video-2/Sprint race image: https://youtu.be/z4--4_Gzrb0

- DeepSORT architecture image: Parico, Addie Ira & Ahamed, Tofael. (2021). Real Time Pear Fruit Detection and Counting Using YOLOv4 Models and Deep SORT. Sensors. 21. 4803. 10.3390/s21144803.

- Evaluation measures image: https://motchallenge.net/

- Cosine Metric Learning: https://github.com/nwojke/cosine_metric_learning
