In the previous post, we learned how to work with the OpenCV Java API, using a PyTorch convolutional neural network integrated into a Java pipeline as the example. Now we are going to turn that experience into a lightweight Android application.

Creating such an application comes with some challenges, and some of them are connected with OpenCV usage. In this post, we’ve collected steps that can help you deal with common problems when creating an Android application with the OpenCV API. If you are mostly interested in the code, you may skip the project configuration details and go to the “Application Code Analysis” section.

Application Development

Here we will describe the Android application creation process using Android Studio and OpenCV for Android SDK. We are going to cover the following points:

- Android project creation and initial configuration.

- OpenCV module import.

- Application configuration.

- Data capturing and processing pipeline implementation (MainActivity.java).

- CNN integration (CNNExtractorService).

- Run the final application.

Step-1: Android Studio Project Configuration

In this section, we are going to reproduce the project creation process in Android Studio step-by-step from scratch.

For the application development, we use Android Studio 4.0.1 and opencv-4.3.0-android-sdk (for more details on obtaining the OpenCV Android SDK, see the README file).

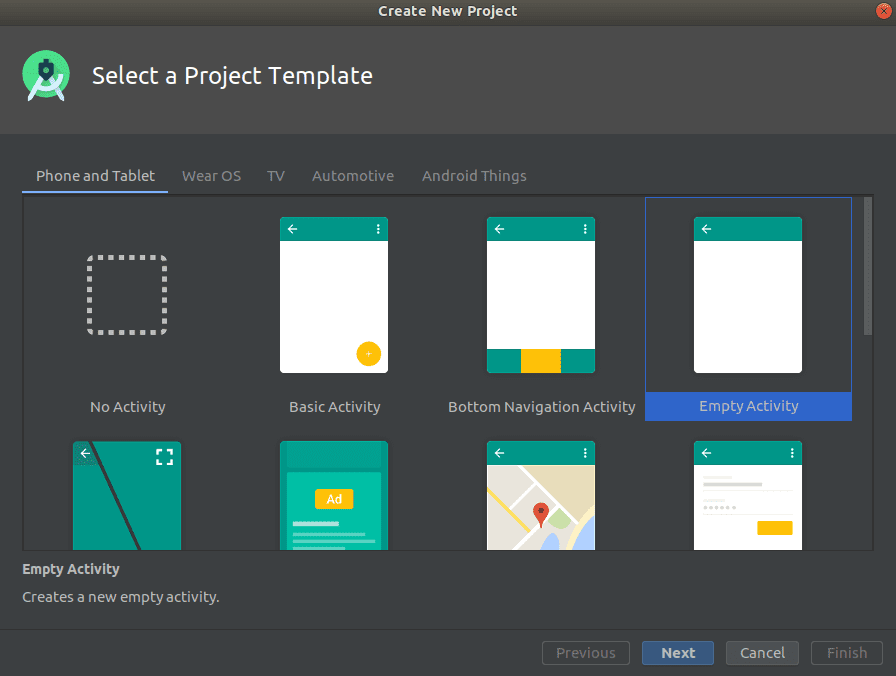

In Android Studio, choose the Start a new Android Studio project option. Then, on the Select a Project Template page, open the Phone and Tablet tab and choose Empty Activity:

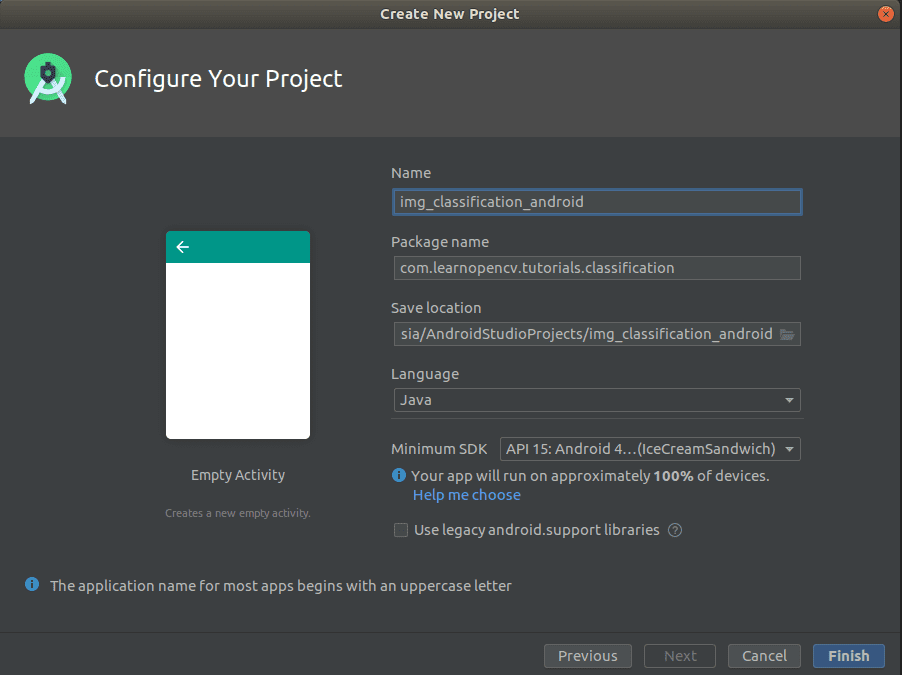

Then configure your project by filling in the project name, package, location and API configuration fields:

OpenCV Manager Import

OpenCV Manager is an Android service for managing OpenCV binaries on end devices. There are two ways to bring OpenCV into the project: asynchronous and static initialization. With the static approach, the OpenCV binaries are included in the application package. With the asynchronous approach, OpenCV Manager must also be installed on the target device.

The recommended way is static linking, because keeping the platform and the library compatible has become an arduous task.

Thus, we will use static OpenCV manager initialization:

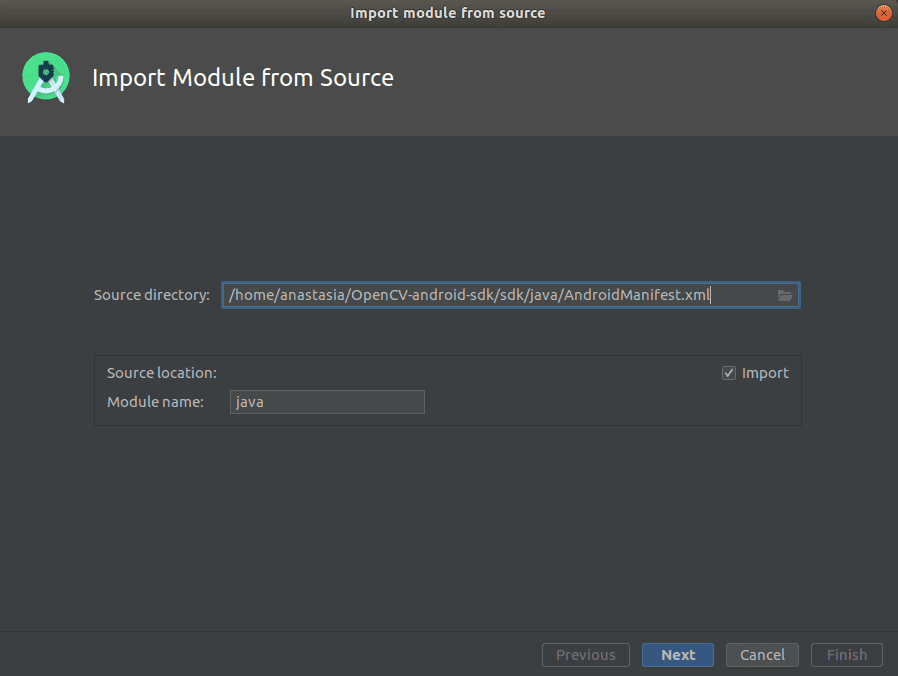

1. Import a new module to the project:

File > New > Import Module ... specifying in the Source directory: ~/OpenCV-android-sdk/sdk/java/AndroidManifest.xml

2. Set module name:

In the Module name field that appears, choose a name for the imported module; in our application, we use OpenCVLib430. After the import is completed, you will find the new OpenCVLib430 module in the img_classification_android/settings.gradle file:

include ':app'

rootProject.name = "img_classification_android"

include ':OpenCVLib430'

3. Modify the build.gradle file of the new module in accordance with img_classification_android/app/build.gradle:

- Check the lines in img_classification_android/app/build.gradle (the app module) containing the compile SDK version and build tools version:

compileSdkVersion 30

buildToolsVersion "30.0.2"

- Copy these versions to the appropriate lines of the imported OpenCV Android module (img_classification_android/OpenCVLib430/build.gradle):

compileSdkVersion 29 -> compileSdkVersion 30

buildToolsVersion "29.0.2" -> buildToolsVersion "30.0.2"

- In img_classification_android/OpenCVLib430/build.gradle, change the default apply plugin: 'com.android.application' into apply plugin: 'com.android.library'. After that, modify the defaultConfig block, adding minSdkVersion and targetSdkVersion as in img_classification_android/app/build.gradle:

apply plugin: 'com.android.library'

android {

compileSdkVersion 30

buildToolsVersion "30.0.2"

defaultConfig {

minSdkVersion 15

targetSdkVersion 30

}

...

}

- Add the configured OpenCVLib430 module to the app dependencies by adding the following line to the dependencies block of the app module’s build.gradle:

implementation project(':OpenCVLib430')

Now let’s execute Make Project (Ctrl+F9) and verify that the build is successful.

4. Transfer native libraries:

To finalize the OpenCV manager installation we need to transfer native libraries to the app:

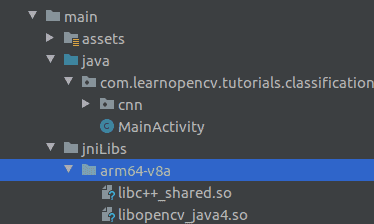

Create a jniLibs folder in the app/src/main directory. Then move the folders that match your device architecture from OpenCV-android-sdk/sdk/native/libs into the created app/src/main/jniLibs. In our case, it’s arm64-v8a (to get the CPU details of the device, you may run cat /proc/cpuinfo).

5. Install NDK:

Now we need to install NDK (native development kit) to use C++ code of the OpenCV library. To do so, follow the steps below:

- Go to Tools > SDK Manager > Android SDK > SDK Tools

- Find NDK (Side by Side) and select the checkbox

- Click the OK button to proceed with the installation

After successful installation, go to the ~/Android/Sdk/ndk/<ndk_version>/sources/cxx-stl/llvm-libc++/libs/ directory. There you will find the folders with the names corresponding to the names of directories in app/src/main/jniLibs. You need to copy libc++_shared.so into the corresponding folders in app/src/main/jniLibs. For example, in our case, we copy ~/Android/Sdk/ndk/<ndk_version>/sources/cxx-stl/llvm-libc++/libs/arm64-v8a/libc++_shared.so into app/src/main/jniLibs/arm64-v8a.
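Before building, it may be worth confirming that every ABI folder under app/src/main/jniLibs holds both the OpenCV library and libc++_shared.so. The sketch below is one hypothetical way to run that check with plain Java on the development machine. The class JniLibsCheck and the method missingLibs are illustrative names, and the file name libopencv_java4.so is an assumption based on the usual contents of sdk/native/libs in OpenCV 4.x Android SDKs.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper, not part of the original project.
final class JniLibsCheck {

    // Assumed file names: libopencv_java4.so comes from the OpenCV 4.x Android SDK,
    // libc++_shared.so is copied from the NDK as described above.
    static final String[] REQUIRED = {"libopencv_java4.so", "libc++_shared.so"};

    // Returns entries such as "arm64-v8a/libc++_shared.so" for every required
    // library that is absent from an ABI folder under jniLibsDir.
    static List<String> missingLibs(Path jniLibsDir) {
        List<String> missing = new ArrayList<>();
        try (DirectoryStream<Path> abiDirs = Files.newDirectoryStream(jniLibsDir)) {
            for (Path abiDir : abiDirs) {
                if (!Files.isDirectory(abiDir)) {
                    continue; // skip stray files next to the ABI folders
                }
                for (String lib : REQUIRED) {
                    if (!Files.isRegularFile(abiDir.resolve(lib))) {
                        missing.add(abiDir.getFileName() + "/" + lib);
                    }
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Collections.sort(missing); // directory iteration order is unspecified
        return missing;
    }

    public static void main(String[] args) {
        Path jniLibs = Paths.get(args.length > 0 ? args[0] : "app/src/main/jniLibs");
        if (!Files.isDirectory(jniLibs)) {
            System.out.println("Not a directory: " + jniLibs);
            return;
        }
        System.out.println("Missing libraries: " + missingLibs(jniLibs));
    }
}
```

An empty list means each ABI folder contains both files; any entry points at the folder that still needs a library copied in.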

The final project structure is below:

Application Code Analysis

In the first part, we described how to obtain the ONNX model and use it in Java code with the OpenCV API. We introduced the Mobilenetv2ToOnnx.py script for producing the .onnx file and evaluating the results. After its execution, we get the models/pytorch_mobilenet.onnx file, which will be used in the application. To use the converted model in the code, move it into the assets folder.

Note that the assets directory in an Android project stores raw files that are bundled with the application. By default, there is no such directory in the project. It can be created manually with mkdir or with the Android Studio component configurator via a right click on the app component: New > Folder > Assets Folder: app/src/main/assets

To decode the predictions later, we should also add the imagenet_classes.txt file to app/src/main/assets.
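Files in assets are packed into the APK and are read through streams, while Dnn.readNetFromONNX takes a file path. The getPath helper that MainActivity calls later is not listed in this post, so the following is only a sketch of how such a helper might work: copy the stream into a writable directory and return the absolute path. The names AssetCopySketch and copyToDir are hypothetical. On Android, the stream would typically come from context.getAssets().open(fileName) and the directory from context.getFilesDir(); the sketch takes both as plain arguments so it can run on a desktop JVM.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical stand-in for the getPath helper, not the project's actual code.
final class AssetCopySketch {

    // Copies the stream into targetDir/fileName and returns the absolute path,
    // or an empty string on failure (MainActivity treats an empty path as an error).
    static String copyToDir(InputStream asset, File targetDir, String fileName) {
        File outFile = new File(targetDir, fileName);
        try (InputStream in = asset; OutputStream out = new FileOutputStream(outFile)) {
            byte[] buffer = new byte[8192];
            int read;
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
            }
            return outFile.getAbsolutePath();
        } catch (IOException e) {
            return "";
        }
    }

    public static void main(String[] args) {
        // A byte array stands in for the asset stream in this desktop demo.
        InputStream fakeAsset = new ByteArrayInputStream("label_a\nlabel_b\n".getBytes());
        File tmpDir = new File(System.getProperty("java.io.tmpdir"));
        System.out.println(copyToDir(fakeAsset, tmpDir, "asset_copy_demo.txt"));
    }
}
```

Returning an empty string on failure matches the isEmpty() check that onCameraViewStarted() performs on the model path.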

To configure how the processed frames are displayed, we will modify app/src/main/res/layout/activity_main.xml in the following way:

<FrameLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools"

xmlns:opencv="http://schemas.android.com/apk/res-auto"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<org.opencv.android.JavaCameraView

android:id="@+id/CameraView"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:visibility="visible"

opencv:show_fps="false"

opencv:camera_id="any" />

</FrameLayout>

To configure camera access and screen orientation, we should add the code below to app/src/main/AndroidManifest.xml:

<application

android:allowBackup="true"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:roundIcon="@mipmap/ic_launcher_round"

android:supportsRtl="true"

android:theme="@style/Theme.AppCompat.NoActionBar"> <!--Full screen mode-->

<activity

android:name=".MainActivity"

android:screenOrientation="landscape"> <!--Screen orientation-->

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

<supports-screens android:resizeable="true"

android:smallScreens="true"

android:normalScreens="true"

android:largeScreens="true"

android:anyDensity="true" />

<!--Camera usage configuration-->

<uses-permission android:name="android.permission.CAMERA" />

<uses-feature

android:name="android.hardware.camera"

android:required="false" />

<uses-feature

android:name="android.hardware.camera.autofocus"

android:required="false" />

<uses-feature

android:name="android.hardware.camera.front"

android:required="false" />

<uses-feature

android:name="android.hardware.camera.front.autofocus"

android:required="false" />

Let’s have a look at the project code structure. MainActivity.java interacts with the core application logic, which is declared in CNNExtractorService and implemented in CNNExtractorServiceImpl. MainActivity.java processes the data obtained from the device with the CNNExtractorService methods and returns labeled images as the application response.

CNNExtractorService Content

CNNExtractorService declares two methods:

Net getConvertedNet(String clsModelPath, String tag);

String getPredictedLabel(Mat inputImage, Net dnnNet, String classesPath);

getConvertedNet creates an OpenCV org.opencv.dnn.Net from pytorch_mobilenet.onnx:

@Override

public Net getConvertedNet(String clsModelPath, String tag) {

TAG = tag;

Net convertedNet = Dnn.readNetFromONNX(clsModelPath);

Log.i(TAG, "Network was successfully loaded");

return convertedNet;

}

getPredictedLabel runs inference and transforms the MobileNet predictions into the resulting object class:

@Override

public String getPredictedLabel(Mat inputImage, Net dnnNet, String classesPath) {

// preprocess input frame

Mat inputBlob = getPreprocessedImage(inputImage);

// set OpenCV model input

dnnNet.setInput(inputBlob);

// provide inference

Mat classification = dnnNet.forward();

return getPredictedClass(classification, classesPath);

}

In the first post, we discussed each step of getPredictedLabel in detail. Let’s briefly cover them:

- preprocess input frame – here we prepare input blob for the MobileNet model:

Imgproc.cvtColor(image, image, Imgproc.COLOR_RGBA2RGB);

// create empty Mat images for float conversions

Mat imgFloat = new Mat(image.rows(), image.cols(), CvType.CV_32FC3);

// convert input image to float type

image.convertTo(imgFloat, CvType.CV_32FC3, SCALE_FACTOR);

// resize input image

Imgproc.resize(imgFloat, imgFloat, new Size(256, 256));

// crop input image

imgFloat = centerCrop(imgFloat);

// prepare DNN input

Mat blob = Dnn.blobFromImage(

imgFloat,

1.0, /* default scalefactor */

new Size(TARGET_IMG_WIDTH, TARGET_IMG_HEIGHT), /* target size */

MEAN, /* mean */

true, /* swapRB */

false /* crop */

);

// divide by std

Core.divide(blob, STD, blob);

- set OpenCV model input – after the input blob is prepared, we pass it into org.opencv.dnn.Net:

dnnNet.setInput(inputBlob);

- obtaining inference result:

Mat classification = dnnNet.forward();

- process inference results with the getPredictedClass(...) method:

private String getPredictedClass(Mat classificationResult, String classesPath) {

ArrayList<String> imgLabels = getImgLabels(classesPath);

if (imgLabels.isEmpty()) {

return "Empty label";

}

// obtain max prediction result

Core.MinMaxLocResult mm = Core.minMaxLoc(classificationResult);

double maxValIndex = mm.maxLoc.x;

return imgLabels.get((int) maxValIndex);

}
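The numeric side of these steps can be reproduced without OpenCV. The sketch below is an illustration, not the project's code. It assumes the usual ImageNet constants for SCALE_FACTOR, MEAN and STD (1/255, [0.485, 0.456, 0.406], [0.229, 0.224, 0.225]) and a 224×224 target size, none of which are listed in this post, and the class and method names are hypothetical. It shows where the center crop starts, how a single channel value is normalized, and how the index of the highest score is picked.

```java
// Hypothetical illustration of the arithmetic behind preprocessing and argmax.
final class PipelineMath {

    // Assumed values (standard ImageNet statistics); the post does not list its constants.
    static final double SCALE_FACTOR = 1.0 / 255.0;
    static final double[] MEAN = {0.485, 0.456, 0.406};
    static final double[] STD = {0.229, 0.224, 0.225};

    // Top-left offset of a centered crop of length target inside a side of length resized.
    static int centerCropOffset(int resized, int target) {
        return (resized - target) / 2;
    }

    // Same order of operations as above: scale to [0, 1], subtract mean, divide by std.
    static double normalize(int pixelValue, int channel) {
        return (pixelValue * SCALE_FACTOR - MEAN[channel]) / STD[channel];
    }

    // Index of the largest score, the role minMaxLoc(...).maxLoc.x plays above.
    // Assumes a non-empty array.
    static int argMax(float[] scores) {
        int best = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println("crop offset: " + centerCropOffset(256, 224));
        System.out.println("normalized value for 255 in channel 0: " + normalize(255, 0));
        System.out.println("argmax: " + argMax(new float[] {0.1f, 2.5f, -1.0f}));
    }
}
```

With a 256×256 resize and an assumed 224×224 target, the crop starts 16 pixels in from each edge, and the index returned by argMax is what gets looked up in the label list.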

MainActivity Content

Let’s view MainActivity.java in more detail. One of its necessary parts is the OpenCV Manager initialization.

OpenCV Android Manager

As we’ve already discussed, the recommended way of OpenCV manager initialization is static. The code below performs static initialization:

private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {

@Override

public void onManagerConnected(int status) {

switch (status) {

case LoaderCallbackInterface.SUCCESS: {

Log.i(TAG, "OpenCV loaded successfully!");

mOpenCvCameraView.enableView();

}

break;

default: {

super.onManagerConnected(status);

}

break;

}

}

};

@Override

public void onResume() {

super.onResume();

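// OpenCV manager initialization: initDebug() returns false if the library failed to load

if (OpenCVLoader.initDebug()) {

mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);

} else {

Log.e(TAG, "OpenCV static initialization failed");

}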

}

Application Startup Configuration

The next part is the onCreate(Bundle savedInstanceState) method, where we configure the application startup. The logic in onCreate is invoked once per activity lifecycle, when the activity is created. Thus, here we create the CNNExtractorService implementation and configure the camera listener:

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

// initialize implementation of CNNExtractorService

this.cnnService = new CNNExtractorServiceImpl();

// configure camera listener

mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.CameraView);

mOpenCvCameraView.setVisibility(CameraBridgeViewBase.VISIBLE);

mOpenCvCameraView.setCvCameraViewListener(this);

}

Camera Capturing

To interact with the device camera, we need to implement the following methods of the CvCameraViewListener2 interface:

- onCameraViewStarted() – the logic is executed when the camera preview starts. After the method is invoked, the frames will be provided by the onCameraFrame() callback. In this method, we initialize the MobileNet model, reading its .onnx file into org.opencv.dnn.Net:

public void onCameraViewStarted(int width, int height) {

// obtaining converted network

String onnxModelPath = getPath(MODEL_FILE, this);

if (onnxModelPath.trim().isEmpty()) {

Log.i(TAG, "Failed to get model file");

return;

}

opencvNet = cnnService.getConvertedNet(onnxModelPath, TAG);

}

- onCameraFrame() – the logic is executed at frame delivery time; the returned object is of Mat type and will be displayed in the application. In this method, we process input frames by passing them into MobileNet and getting the predicted classes:

public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {

Mat frame = inputFrame.rgba();

String classesPath = getPath(IMAGENET_CLASSES, this);

String predictedClass = cnnService.getPredictedLabel(frame, opencvNet, classesPath);

// place the predicted label on the image

Imgproc.putText(frame, predictedClass, new Point(200, 100), Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar(0, 0, 0));

return frame;

}

- onCameraViewStopped() – the logic is invoked when the camera preview is interrupted. After the method is invoked, onCameraFrame() stops delivering frames. We don’t implement any specific logic in this method, leaving it empty:

public void onCameraViewStopped() {

}
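As written, onCameraFrame() resolves the class file path for every frame, and getPredictedClass calls getImgLabels(classesPath) on each prediction, which presumably re-reads the label file. If that turns out to be a bottleneck, one option is to read the labels once and reuse the list. The sketch below is a hypothetical illustration in plain Java: LabelCache and its methods are not part of the project, and it assumes the label file holds one class name per line.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical helper: loads the labels on first use and keeps them in memory.
final class LabelCache {

    private final Supplier<Reader> source; // opens the label file when first needed
    private List<String> labels;           // null until the first load
    private int loadCount;                 // kept only to show that loading happens once

    LabelCache(Supplier<Reader> source) {
        this.source = source;
    }

    // Returns the cached labels, reading the source on the first call only.
    synchronized List<String> get() {
        if (labels == null) {
            labels = Collections.unmodifiableList(readLines(source.get()));
            loadCount++;
        }
        return labels;
    }

    synchronized int loadCount() {
        return loadCount;
    }

    // Reads trimmed, non-empty lines; assumes one class name per line.
    static List<String> readLines(Reader reader) {
        List<String> lines = new ArrayList<>();
        try (BufferedReader br = new BufferedReader(reader)) {
            String line;
            while ((line = br.readLine()) != null) {
                String trimmed = line.trim();
                if (!trimmed.isEmpty()) {
                    lines.add(trimmed);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        // A StringReader stands in for the label file in this demo.
        LabelCache cache = new LabelCache(() -> new StringReader("cat\ndog\n"));
        cache.get();
        cache.get();
        System.out.println(cache.get().get(1) + ", loads: " + cache.loadCount());
    }
}
```

In the application, such a cache could be created once in onCameraViewStarted(), next to the network loading, so that onCameraFrame() only performs the lookup.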

Application Build

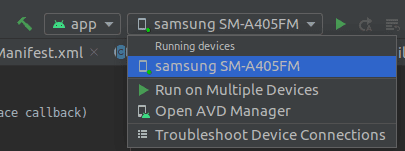

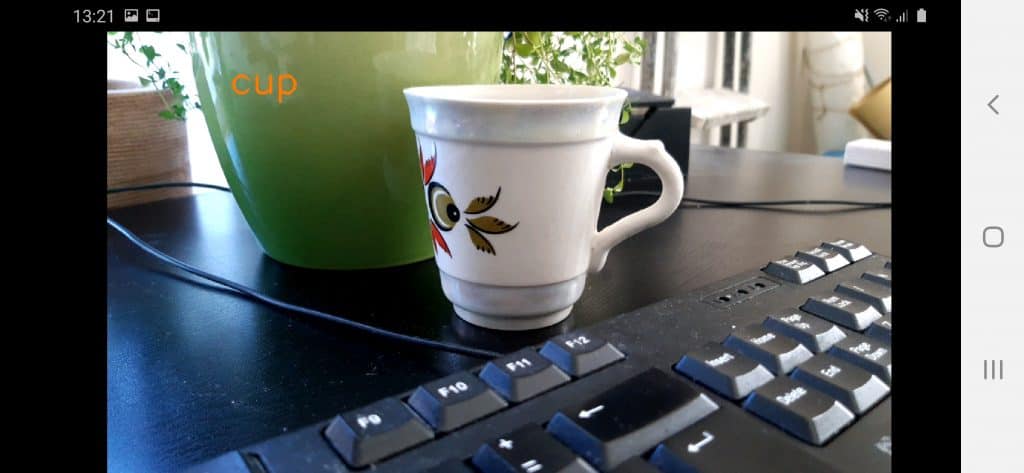

To build and run the project, we used a physical device with Android 10. To be able to choose the device from the device list in Android Studio, enable USB debugging on your device. After USB debugging is activated, the list of devices will be updated:

After the device is chosen, click the Run button. If the project runs successfully, you will find a new Android process on your device.

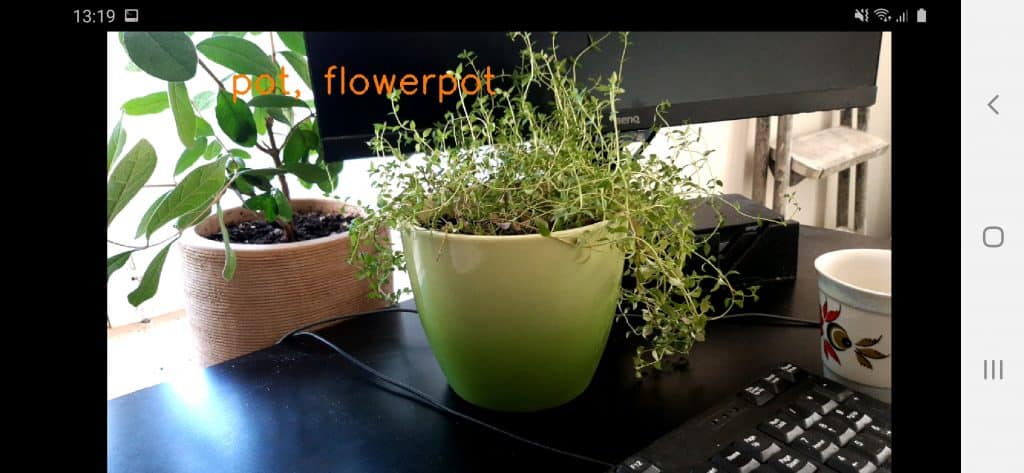

Let’s test the application by capturing various objects with the camera:

Conclusion

In this post, we went through the step-by-step process of creating an Android application using Android Studio and the PyTorch model that we previously integrated into the Java pipeline. We hope it will help you create a useful application of your own to run on an Android device.
