You use panorama mode to take a wide-view photo with your camera. But how does panorama mode actually work under the hood? Or suppose you have a shaky video of your bike ride, and you select the video stabilization option in your editor app. It gives you a perfectly stabilized version of the same video. Cool, right? But how does it work? Let me tell you a secret: all of these rely on a traditional computer vision method called feature matching.

So, in this article, we will see:

- What is feature matching?

- Why is feature matching still relevant in the deep learning era?

- What are the recent advancements in feature matching?

- What are the applications of feature matching?

- How do feature matching algorithms work in code?

Sounds interesting? Let’s start the journey then.

- What is an Image Feature?

- Why Feature Matching in 2024?

- Feature Matching – Classical vs Deep Learning

- Download the Code Here

- Feature Matching – Code Pipeline for WebUI

- Feature Matching – Experiment Results

- Conclusion

- References

What is an Image Feature?

An image may contain a single object or many objects, and each object has its own distinctive appearance. An image feature is a piece of information that describes a distinctive part of the image. Features range from simple edges and corners to more complex structures such as textures or blob-like shapes. Consider the image of a person holding a book. Humans can tell that objects (a person and a book) are present in the frame by looking at the lighting and at the shapes and outlines around the objects. How does a computer interpret the same scene?

For that, we use image features. We take each pixel and calculate its intensity gradient, that is, how much the intensity changes compared with the neighboring pixels. With these gradients, we can tell the computer: ‘Hey, see, the intensity changes sharply in this region; these are image features (maybe corners or edges), so there might be an object here (the book or the person).’ Now, how do we extract these image features? And how are they used to recognize objects?
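To make the idea of intensity gradients concrete, here is a minimal NumPy sketch (our own illustration, not code from any library discussed in this article). It builds a toy 8×8 image containing a bright square and computes the gradient magnitude at every pixel with `np.gradient`, which uses central differences:

```python
import numpy as np

def gradient_magnitude(image: np.ndarray) -> np.ndarray:
    """Per-pixel gradient magnitude of a grayscale image.

    np.gradient returns derivatives along axis 0 (rows, y) and axis 1
    (columns, x), using central differences inside the image and
    one-sided differences at the borders.
    """
    gy, gx = np.gradient(image.astype(np.float64))
    return np.hypot(gx, gy)

# Toy 8x8 image: a bright 4x4 square on a dark background.
img = np.zeros((8, 8))
img[2:6, 2:6] = 255.0

mag = gradient_magnitude(img)
print(mag[4, 4])  # 0.0   (inside the square: flat, no intensity change)
print(mag[4, 2])  # 127.5 (left border of the square: strong change)
```

The magnitude is zero in flat regions and large along the square’s border, which is the signal that edge and corner detectors build on. Real detectors usually smooth the image first and use operators such as Sobel instead of plain differences.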

One simple answer is feature matching, which we will explore now.

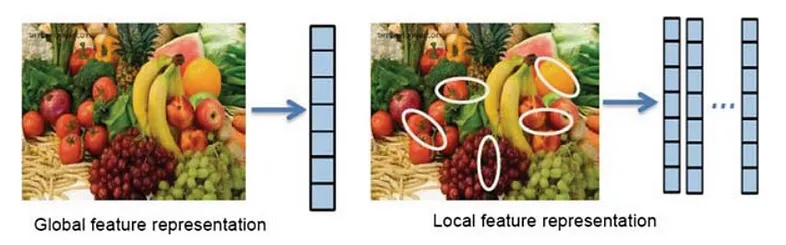

Before that, we can categorize these image features into two types:

- Local features – These refer to specific parts of an image that capture information about small regions. These features are particularly useful for understanding detailed aspects of an image, such as textures, corners, and edges.

- Global features – These describe the entire image as a whole and capture overall properties such as shape, color histogram, and texture layout.

Both can be used according to the use cases. For this article, we will mostly work with the local features.

Why Feature Matching in 2024?

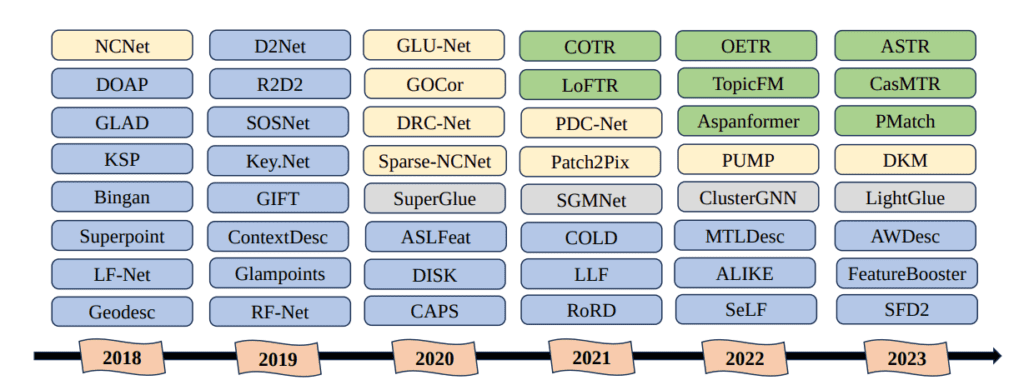

Feature matching is a long-established computer vision technique. Its classical form took shape in the late 1990s (SIFT was published in 1999), building on earlier edge detectors such as Sobel and Canny and on the Harris corner detector. The field kept improving over the years, and SuperPoint brought neural networks into feature matching. Then LoFTR changed the game by introducing Transformers into the feature matching pipeline. But why do we still need feature matching today?

Feature matching is heavily used in some of the mainstream computer vision tasks. Let’s explore them one by one:

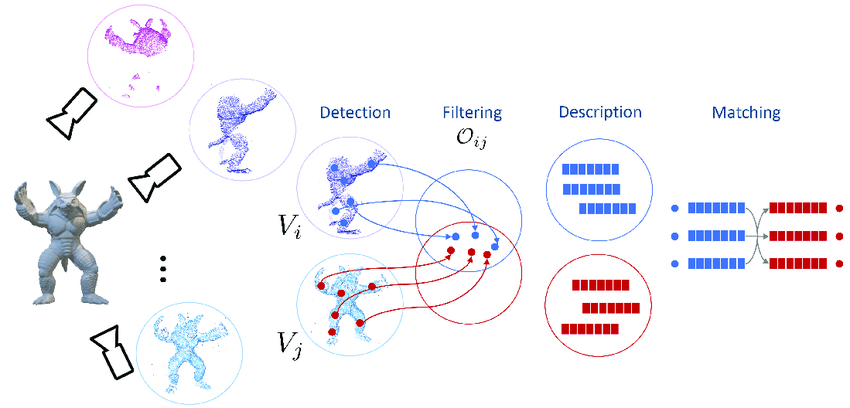

Structure from Motion (3D Reconstruction)

3D reconstruction is one of the most active research topics today. Researchers are working to generate 3D structures even from a single image, bringing these 3D structures into the AR/VR space. Feature matching is a crucial part of the classical 3D reconstruction pipeline, Structure from Motion (SfM). When you take multiple photos of an object or scene from different angles, feature matching identifies common points across these images. By analyzing how these points move between photos, SfM estimates the camera poses and triangulates the 3D coordinates of the points (typically refining both with bundle adjustment), reconstructing a 3D model of the scene.
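The triangulation step can be sketched with the standard linear (DLT) method. This is a simplified illustration under a strong assumption: both camera matrices are already known, whereas a real SfM pipeline first estimates them from the matches and then refines everything with bundle adjustment. The intrinsics `K`, the camera placement, and the 3D point are made-up values:

```python
import numpy as np

def project(P, X):
    """Project a 3D point X (shape (3,)) to pixel coordinates with a 3x4 camera P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point observed in two views."""
    # Each view gives two linear equations in the homogeneous point X_h.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X_h = Vt[-1]
    return X_h[:3] / X_h[3]

# Two hypothetical cameras with the same intrinsics; the second sits 1 unit along +x.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, -0.2, 4.0])
x1, x2 = project(P1, X_true), project(P2, X_true)   # a "matched" feature pair
print(triangulate_dlt(P1, P2, x1, x2))  # approximately [0.5, -0.2, 4.0]
```

With noise-free matches the point is recovered exactly (up to floating-point error); with real, noisy matches the DLT result is usually refined by nonlinear optimization.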

Medical Image Registration

Think of aligning MRI or CT scans taken before and after treatment. Feature matching identifies corresponding points in these scans to align them accurately. This might involve matching the edges of tumors or organs across different scans. Features such as edges or blobs are detected using a feature detector. These features are matched across scans, and transformations (rigid or non-rigid) are applied to align the images, helping doctors see changes over time or combine different scan types for better diagnosis.
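For the rigid case, once features are matched between two scans, the alignment itself can be estimated in closed form. The sketch below uses the Kabsch (orthogonal Procrustes) method on 2D points; it is one standard choice, not necessarily what a given registration tool uses, and it assumes the correspondences are already correct (no outliers) and that the motion is rigid. The point sets and the 10° rotation are synthetic:

```python
import numpy as np

def estimate_rigid_2d(src, dst):
    """Least-squares rotation R and translation t such that dst ≈ src @ R.T + t.

    Kabsch / orthogonal Procrustes: center both point sets, take the SVD of
    their cross-covariance, and correct a possible reflection.
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)            # 2x2 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))         # -1 would mean a reflection
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

# Synthetic matched landmarks: the "after" scan is the "before" scan rotated by
# 10 degrees and shifted by (5, -3).
rng = np.random.default_rng(0)
pts_before = rng.uniform(0, 100, size=(20, 2))
theta = np.deg2rad(10.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
pts_after = pts_before @ R_true.T + np.array([5.0, -3.0])

R, t = estimate_rigid_2d(pts_before, pts_after)
print(np.allclose(R, R_true), np.allclose(t, [5.0, -3.0]))  # True True
```

Non-rigid registration replaces the single rotation and translation with a spatially varying deformation, but the input is the same: a set of matched points.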

Face Recognition

Face recognition is one of the mainstream applications that relies heavily on feature matching. A face detector (for example, Haar cascades) locates the face, a landmark detector extracts facial landmarks (points that describe the face, such as the corners of the eyes and mouth), and a matcher uses these landmarks to recognize and track the face throughout the frames. We can also use these landmarks to overlay AR filters. Go through our detailed article about Creating Instagram Filters for some hands-on practice.

Image Stitching (Panorama)

One of the most used computer vision tasks is image stitching or panorama, which we do with our mobile camera on a day-to-day basis. You take several overlapping pictures and combine them. Feature matching finds the overlapping areas and aligns them to create a seamless wide-angle view. The algorithm detects features in the overlapping regions using methods like Harris corner detection or SURF and matches them with Brute Force or FLANN. It then uses homography to warp and blend the images together, correcting for distortions and creating a single continuous panorama. Learn more about it in our Course Mastering OpenCV with Python.
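The homography step can be sketched with the normalized Direct Linear Transform (DLT) in NumPy. This illustration assumes every match is correct; in practice, OpenCV’s `cv2.findHomography` with the `cv2.RANSAC` flag is typically used so that wrong matches are rejected. The ground-truth homography `H_true` and the points are synthetic:

```python
import numpy as np

def _hartley_normalize(pts):
    """Translate points to their centroid and scale to mean distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.hstack([pts, np.ones((len(pts), 1))]) @ T.T
    return pts_h[:, :2], T

def estimate_homography(src, dst):
    """3x3 H with dst ~ H @ [x, y, 1], from at least 4 point matches."""
    src_n, T_src = _hartley_normalize(src)
    dst_n, T_dst = _hartley_normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, Vt = np.linalg.svd(np.array(rows))
    H_n = Vt[-1].reshape(3, 3)                    # null vector of the system
    H = np.linalg.inv(T_dst) @ H_n @ T_src        # undo the normalization
    return H / H[2, 2]

def apply_homography(H, pts):
    """Warp (N, 2) points with H, including the perspective divide."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return pts_h[:, :2] / pts_h[:, 2:3]

H_true = np.array([[1.1, 0.05, 30.0], [-0.02, 0.95, 10.0], [1e-4, 2e-4, 1.0]])
src = np.array([[0, 0], [640, 0], [640, 480], [0, 480], [320, 240], [100, 400]], float)
dst = apply_homography(H_true, src)               # where the features land in image 2
H = estimate_homography(src, dst)
print(np.allclose(H, H_true))  # True
```

Once `H` is known, the second image is warped into the first image’s frame and the two are blended to form the panorama.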

SLAM (Simultaneous Localization and Mapping)

Another field that uses feature matching in its pipeline is robotics. Robots and autonomous vehicles use SLAM (Simultaneous Localization and Mapping) to navigate their environment: the robot needs to map its surroundings and track its own location within that map. Feature matching helps by identifying landmarks and tracking them as the robot moves. The system detects features such as corners with FAST, describes them with BRIEF (the combination ORB builds on), and matches them from frame to frame. Algorithms like EKF-SLAM or graph-based SLAM use these matched features to update the map and the robot’s location, enabling accurate navigation and mapping. Learn more about Visual SLAM in our article.

Video Stabilization

You have a shaky video of your bike ride and want to stabilize it. Feature matching helps you do that: the frames are aligned by finding consistent points in consecutive frames. Features are detected in each frame (for example, with the Shi-Tomasi corner detector) and tracked into the next frame with Lucas-Kanade optical flow. From these matches, a transformation between frames (such as affine or projective) is estimated and used to smooth out the motion, producing a stable video. Learn more about video stabilization in our article Video Stabilization Using Point Feature Matching in OpenCV.
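A key step in this approach is smoothing the camera trajectory. The 1-D sketch below assumes that the per-frame horizontal motion `frame_dx` has already been estimated from the matched features; it accumulates that motion into a trajectory, smooths it with a moving average, and returns the per-frame correction. A full stabilizer would smooth x, y, and rotation the same way and warp each frame accordingly. The signal and the `radius` value are illustrative:

```python
import numpy as np

def moving_average(curve, radius):
    """Centered moving average (window = 2 * radius + 1) with edge padding."""
    window = 2 * radius + 1
    kernel = np.ones(window) / window
    padded = np.pad(curve, (radius, radius), mode="edge")
    return np.convolve(padded, kernel, mode="valid")   # same length as curve

def stabilizing_corrections(frame_dx, radius=5):
    """Per-frame shift that moves each frame from the raw path onto a smooth path."""
    trajectory = np.cumsum(frame_dx)                   # raw camera path
    smoothed = moving_average(trajectory, radius)
    return smoothed - trajectory

k = np.arange(60)
frame_dx = 2.0 + 3.0 * (-1.0) ** k      # steady 2 px/frame pan plus +/-3 px jitter
corrections = stabilizing_corrections(frame_dx, radius=5)
raw_path = np.cumsum(frame_dx)
stable_path = raw_path + corrections    # equals the smoothed path
print(np.std(np.diff(raw_path)), np.std(np.diff(stable_path)))
```

A symmetric moving average preserves steady panning while averaging out frame-to-frame jitter, so the second printed value is much smaller than the first.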

The above are the mainstream tasks that use feature matching. But what about doing some crazy stuff, like automating the Chrome Dino game with feature matching?

Want to know how? Go through our blog to learn how to build a Chrome Dino game bot using OpenCV Feature Matching.

Amazing, right? Want to learn AI and computer vision from scratch and build cool projects like these? Check out the AI courses by OpenCV University.

Feature Matching – Classical vs Deep Learning

As we discussed before, feature matching is a classical computer vision technique whose modern form took shape in the late 1990s. Since then, its methods have evolved considerably, adopting CNNs, graph neural networks, and Transformers along the way. In this section, we will try to understand:

- Classical Computer Vision Pipeline for Feature Matching

- Deep Learning Pipeline for Feature Matching

- Latest Research in Feature Matching

What are the Key Components of Classical Feature Matching?

The feature matching method consists of three main parts:

Detection

There are algorithms like the Harris corner detector and the Sobel or Canny edge detectors that find keypoints (features) with fairly simple math: they compute changes in pixel intensity (gradients), often after smoothing the image with a Gaussian blur, and sometimes apply thresholding. Wherever the algorithm detects a strong change in pixel intensity, it counts that location as a keypoint or feature (which might be a corner or an edge of an object). The idea is very simple: intensity changes sharply at the edges and corners of objects, so these regions show up as strong gradients.
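The Harris detector turns this intuition into a score. For every pixel, it sums products of the image gradients over a small window (the structure tensor M) and computes R = det(M) - k·trace(M)². R is large and positive at corners, negative along edges, and near zero in flat regions. Below is a minimal NumPy sketch using a plain 3×3 box window and k = 0.04 (common choices, assumed here); OpenCV provides the same idea as `cv2.cornerHarris`:

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def harris_response(image, window=3, k=0.04):
    """Harris corner response R = det(M) - k * trace(M)^2 at every pixel.

    M is the structure tensor: sums of Ix*Ix, Iy*Iy and Ix*Iy over a
    window x window neighborhood (a plain box window here; the original
    Harris-Stephens formulation uses a Gaussian weighting).
    """
    iy, ix = np.gradient(image.astype(np.float64))
    pad = window // 2

    def box_sum(a):
        padded = np.pad(a, pad, mode="constant")
        return sliding_window_view(padded, (window, window)).sum(axis=(-2, -1))

    sxx, syy, sxy = box_sum(ix * ix), box_sum(iy * iy), box_sum(ix * iy)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2

img = np.zeros((24, 24))
img[8:16, 8:16] = 1.0          # a white square: 4 corners and 4 edges
R = harris_response(img)
print(R[8, 8] > 0)    # True  (top-left corner of the square)
print(R[12, 8] < 0)   # True  (middle of the left edge)
print(R[12, 12])      # 0.0   (flat interior)
```

Real detectors then keep local maxima of R above a threshold (non-maximum suppression) as the final keypoints.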

Description

After you get the keypoints, a feature descriptor like SIFT or SURF takes those keypoints (features) and generates feature vectors (descriptor vectors). These vectors are series of numbers that act as a numerical “fingerprint” for telling one feature apart from another. SIFT and SURF also include their own keypoint detectors, so they can take an image directly and return keypoints together with their feature vectors. At the end of the day, an image is a 2D array (matrix) of numbers, so whatever the algorithm measures around a keypoint, such as local intensity gradients, ends up as an array of numbers (a vector). SIFT and SURF design these vectors to be robust to changes in illumination, scale, and in-plane rotation.

Applications should use detectors and descriptors that suit the image’s contents. For instance, if an image contains bacteria cells, a blob detector should be used rather than a corner detector. If the image is an aerial view of a city, however, a corner detector is suitable for finding man-made structures. In short, choosing a detector and a descriptor that fit the use case is an important design decision.
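To see how gradients turn into a descriptor vector, here is a stripped-down, SIFT-inspired sketch. It is not the real SIFT algorithm: it skips scale selection, dominant-orientation alignment, Gaussian weighting, interpolation, and clipping. It cuts a 16×16 patch around a keypoint (assumed to be at least 8 pixels from the border), splits it into 4×4 cells, and builds an 8-bin histogram of gradient orientations in each cell, giving 4 × 4 × 8 = 128 numbers:

```python
import numpy as np

def orientation_histogram_descriptor(image, row, col, patch=16, cells=4, bins=8):
    """Simplified, SIFT-inspired descriptor (not the real SIFT).

    Builds a magnitude-weighted histogram of gradient orientations in each of
    cells x cells sub-blocks of a patch x patch window centered on (row, col),
    then concatenates and L2-normalizes the histograms.
    """
    half = patch // 2
    window = image[row - half:row + half, col - half:col + half].astype(np.float64)
    gy, gx = np.gradient(window)
    magnitude = np.hypot(gx, gy)
    angle = np.arctan2(gy, gx) % (2 * np.pi)                 # in [0, 2*pi)
    bin_idx = np.minimum((angle / (2 * np.pi) * bins).astype(int), bins - 1)

    step = patch // cells
    desc = np.zeros((cells, cells, bins))
    for i in range(cells):
        for j in range(cells):
            b = bin_idx[i * step:(i + 1) * step, j * step:(j + 1) * step]
            m = magnitude[i * step:(i + 1) * step, j * step:(j + 1) * step]
            desc[i, j] = np.bincount(b.ravel(), weights=m.ravel(), minlength=bins)
    desc = desc.ravel()
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc

rng = np.random.default_rng(42)
img = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
d1 = orientation_histogram_descriptor(img, 32, 32)
d2 = orientation_histogram_descriptor(2.0 * img + 10.0, 32, 32)  # brighter, more contrast
print(d1.shape, np.allclose(d1, d2))  # (128,) True
```

Gradients ignore a constant brightness offset, and the final normalization cancels a contrast scale, so the descriptor stays the same when the image is made brighter and higher-contrast.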

Matching

We compute feature vectors for both input images, since a match always involves two images. Then, a matching algorithm like Brute-Force search or the Fast Library for Approximate Nearest Neighbors (FLANN) does the rest. These algorithms perform a nearest-neighbor search over two sets (“bags”) of feature vectors, one per image. For each feature vector in the first bag (image 1), the algorithm computes its distance to the feature vectors in the second bag (image 2) and takes the closest one as the match. Brute-Force compares against every vector, while FLANN uses approximate search structures to find a near-optimal neighbor much faster. Repeating this for every feature vector gives us the matches, which are then used in the applications discussed above.
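A brute-force matcher is short enough to write directly. The sketch below computes all pairwise Euclidean distances and, as a common extra safeguard not described above, applies Lowe’s ratio test: a match is kept only if the nearest neighbor is clearly closer than the second nearest. The 0.8 ratio and the synthetic descriptors are illustrative; in OpenCV, the equivalent is `cv2.BFMatcher(cv2.NORM_L2)` with `knnMatch(..., k=2)`:

```python
import numpy as np

def match_descriptors(desc1, desc2, ratio=0.8):
    """Brute-force nearest-neighbor matching with Lowe's ratio test.

    desc1: (N, D) and desc2: (M, D) float descriptors, with M >= 2.
    Returns a list of (index_in_desc1, index_in_desc2) pairs.
    """
    # Euclidean distance between every pair of descriptors, shape (N, M).
    dists = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=-1)
    nearest = np.argsort(dists, axis=1)[:, :2]      # best and second-best in desc2
    matches = []
    for i, (j1, j2) in enumerate(nearest):
        # Keep the match only if the best candidate is clearly closer than the
        # runner-up; ambiguous features (e.g. repeated texture) are dropped.
        if dists[i, j1] < ratio * dists[i, j2]:
            matches.append((i, int(j1)))
    return matches

rng = np.random.default_rng(0)
train = rng.normal(size=(50, 32))                               # "image 2" descriptors
query = train[[3, 17, 42]] + 0.01 * rng.normal(size=(3, 32))    # noisy copies ("image 1")
print(match_descriptors(query, train))  # [(0, 3), (1, 17), (2, 42)]
```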

ORB (Oriented FAST and Rotated BRIEF) detects features with FAST (Features from Accelerated Segment Test) and computes descriptors with a rotation-aware version of BRIEF (Binary Robust Independent Elementary Features). It is fast, widely used, and remains one of the most popular classical methods for feature matching. Read more about ORB in our blog feature matching with ORB.
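ORB’s BRIEF-based descriptors are binary (by default 32 bytes, or 256 bits, per keypoint), so they are compared with the Hamming distance, the number of differing bits, instead of the Euclidean distance. Here is a minimal NumPy sketch of that distance on synthetic descriptors; with OpenCV you would typically pass `cv2.NORM_HAMMING` to `cv2.BFMatcher`:

```python
import numpy as np

def hamming_distance_matrix(desc1, desc2):
    """Pairwise Hamming distances between binary descriptors.

    desc1: (N, 32) and desc2: (M, 32) uint8 arrays (256 bits per descriptor).
    XOR the bytes, unpack them into bits, and count the bits that differ.
    """
    xor = np.bitwise_xor(desc1[:, None, :], desc2[None, :, :])   # (N, M, 32)
    return np.unpackbits(xor, axis=-1).sum(axis=-1)

rng = np.random.default_rng(1)
a = rng.integers(0, 256, size=(4, 32), dtype=np.uint8)
b = a.copy()
b[0, 0] ^= 0b00000111          # flip 3 bits of the first descriptor
D = hamming_distance_matrix(a, b)
print(D[0, 0], D[1, 1])        # 3 0
```

Counting differing bits needs only XOR and popcount operations, which is a large part of why ORB matching is so fast.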

Why do we need Deep Learning in Feature Matching?

The answer is simple: deep neural networks often outperform classical ML and CV algorithms in accuracy and robustness, especially in difficult edge cases. Feature matching is no exception: deep learning brought CNNs into the main feature matching pipeline to make it more robust. Early techniques like SuperPoint combined keypoint detection and description in a single network, followed by D2-Net and R2D2, which further integrated these processes.

Then, NCNet introduced four-dimensional cost volumes (a 4D tensor that stores how well every position in one image matches every position in the other), leading to detector-free methods like Sparse-NCNet, DRC-Net, GLU-Net, and PDC-Net. SuperGlue took a different approach by treating matching as a graph problem, with SGMNet and ClusterGNN refining this concept. Later, methods like LoFTR and ASpanFormer incorporated Transformer or attention mechanisms, expanding the receptive field and pushing deep learning-based matching even further. Two of the latest deep learning methods, XFeat and OmniGlue, were presented at CVPR 2024.
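To make the cost-volume idea concrete, here is a minimal NumPy sketch that scores every position of one feature map against every position of another with a dot product. It only builds the volume; NCNet additionally filters it with learned 4D convolutions (neighborhood consensus). The feature maps are random stand-ins for CNN features:

```python
import numpy as np

def correlation_volume(feat_a, feat_b):
    """4D cost volume between two feature maps.

    feat_a: (Ha, Wa, C), feat_b: (Hb, Wb, C), assumed L2-normalized along C.
    Returns corr of shape (Ha, Wa, Hb, Wb) with
    corr[i, j, k, l] = <feat_a[i, j], feat_b[k, l]>.
    """
    return np.einsum("ijc,klc->ijkl", feat_a, feat_b)

rng = np.random.default_rng(0)
fa = rng.normal(size=(6, 8, 16))
fa /= np.linalg.norm(fa, axis=-1, keepdims=True)
corr = correlation_volume(fa, fa)        # match an image against itself
print(corr.shape)                        # (6, 8, 6, 8)
best = corr.reshape(6, 8, -1).argmax(axis=-1)
print(np.array_equal(best, np.arange(48).reshape(6, 8)))  # True: each position matches itself
```

The volume has (H·W)² entries, which is why these methods are memory-hungry and usually operate at low resolution.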

Latest trends in Feature Matching with Deep Learning

First, we will discuss two recent research works: XFeat (a lightweight deep network for feature matching, designed to run fast even on a CPU) and OmniGlue (a matcher that combines SuperPoint features and Transformer-style attention with guidance from the DINOv2 foundation model). Later, we will also learn about LoFTR, the model that introduced the Transformer architecture into the feature matching pipeline.

XFeat

Local feature extraction accuracy depends on how detailed your input images are. For tasks like figuring out camera positions, finding locations in images, and building 3D models from photos (SfM), you need very precise match-ups between image points. High-resolution images provide this precision but make the computing process extremely demanding, even for streamlined networks like SuperPoint.

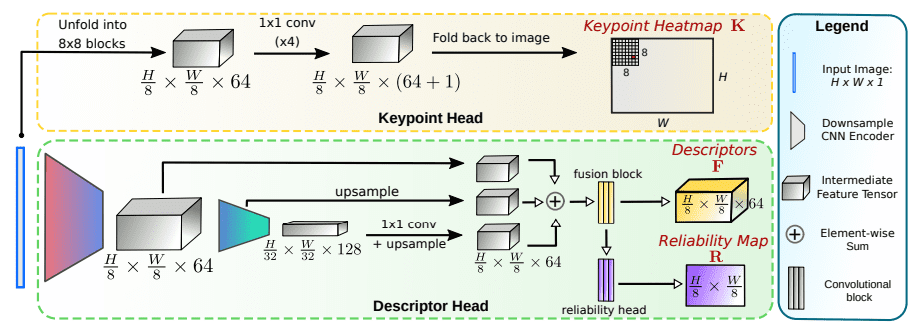

Featherweight Network Backbone

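Let’s take a grayscale image $I \in \mathbb{R}^{H \times W \times C}$, where $H$ is the height, $W$ is the width, and $C = 1$ because it has a single channel. We usually begin with shallow layers in the network to cut down on processing costs, then gradually reduce the spatial resolution while increasing the number of channels at each step. The computational cost of each layer $i$ is given by:

$$\text{FLOPs}_i = H_i \cdot W_i \cdot C_i \cdot C_{i+1} \cdot k^2$$

where $H_i \times W_i$ is the spatial resolution at layer $i$, $C_i$ and $C_{i+1}$ are its input and output channel counts, and $k$ is the convolution kernel size.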

However, simply reducing the number of channels throughout the network hurts its ability to handle changes in lighting and viewpoint. Depthwise separable convolutions reduce computation and the number of parameters, but for local feature extraction, where high-resolution detail is needed, they save less time than expected and limit what the network can represent.

The backbone of the XFeat network consists of simple units called “basic layers,” which are just 2D convolutions paired with ReLU and BatchNorm, structured into blocks that halve image resolution and increase depth progressively: {4, 8, 24, 64, 64, 128}, ending with a fusion block that ties together features from multiple resolutions.
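The cost formula makes this design trade-off easy to check with a bit of arithmetic. The sketch below is a rough, hypothetical estimate rather than XFeat’s real cost: it assumes a 640×480 input, one 3×3 convolution per block with the widths {4, 8, 24, 64, 64, 128}, and that every block after the first halves the resolution. It compares that against two full-resolution layers with 64 channels:

```python
def conv_flops(h, w, c_in, c_out, k=3):
    """Operation count (multiply-accumulates) of one k x k conv layer: H*W*C_in*C_out*k^2."""
    return h * w * c_in * c_out * k * k

# Hypothetical setup (ours, not XFeat's exact architecture): 640x480 grayscale input,
# one 3x3 conv per block, and every block after the first halves H and W.
widths = [1, 4, 8, 24, 64, 64, 128]   # input channels (C=1), then the block widths
h, w = 480, 640
total = 0
for i in range(1, len(widths)):
    if i > 1:
        h, w = h // 2, w // 2
    total += conv_flops(h, w, widths[i - 1], widths[i])

# For comparison: two full-resolution layers that jump straight to 64 channels.
wide = conv_flops(480, 640, 1, 64) + conv_flops(480, 640, 64, 64)
print(total, wide)  # 199065600 11501568000
```

Under these assumptions, the progressive design needs roughly 0.2 billion multiply-accumulates versus about 11.5 billion for the wide full-resolution layers, because each halving of H and W cuts a layer’s cost by 4×, which leaves room to grow the channel count.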

Local Feature Extraction

Descriptor Head: This part of the network pulls together a dense map of features F (a compact 64-D dense descriptor map) by combining features at different scales. It uses a feature pyramid technique to increase the area the network “sees” with each layer, helping it remain robust against changes in viewpoint—an essential quality for smaller network designs. An additional convolutional block is used to regress a reliability map R, which models the unconditional probability Rij that a given local feature Fij can be matched confidently.

Keypoint Head: Unlike typical methods that rely on more complex architectures like UNets or ResNets, XFeat detects keypoints with a dedicated, lightweight branch that focuses on low-level image structures. It reshapes the image into 8×8 cells (each cell becomes a 64-channel vector) and applies fast 1×1 convolutions to locate the keypoints within each cell, producing a keypoint heatmap K.
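The 8×8 cell trick can be sketched in NumPy. We assume a SuperPoint-style layout in which the head predicts 65 logits per cell (64 pixel positions plus one “no keypoint” dustbin). The sketch shows the space-to-depth reshape that turns each 8×8 block into a 64-vector, and the inverse step that turns per-cell logits back into a full-resolution heatmap. It is an illustration, not XFeat’s code:

```python
import numpy as np

def space_to_depth(image, cell=8):
    """(H, W) -> (H/cell, W/cell, cell*cell): each 8x8 block becomes a 64-vector."""
    h, w = image.shape
    blocks = image.reshape(h // cell, cell, w // cell, cell)
    return blocks.transpose(0, 2, 1, 3).reshape(h // cell, w // cell, cell * cell)

def logits_to_heatmap(logits, cell=8):
    """(H/8, W/8, 65) logits -> (H, W) keypoint heatmap.

    Softmax over 65 classes per cell (64 positions + 1 dustbin), drop the
    dustbin, then scatter the 64 probabilities back to their pixel positions.
    """
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))
    probs = (e / e.sum(axis=-1, keepdims=True))[..., :-1]     # drop the dustbin
    hc, wc, _ = probs.shape
    heat = probs.reshape(hc, wc, cell, cell).transpose(0, 2, 1, 3)
    return heat.reshape(hc * cell, wc * cell)

logits = np.zeros((4, 5, 65))
logits[..., 64] = 10.0              # confident "no keypoint" in every cell...
logits[2, 3, 3 * 8 + 5] = 20.0      # ...except cell (2, 3): row 3, col 5 inside it
heat = logits_to_heatmap(logits)
row, col = np.unravel_index(heat.argmax(), heat.shape)
print(heat.shape, int(row), int(col))  # (32, 40) 19 29
```

Because every operation is a reshape or a 1×1 (per-cell) computation, this kind of head is far cheaper than an upsampling decoder such as a UNet.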

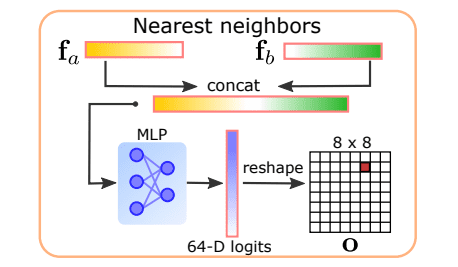

Dense Matching: This module provides a lightweight way to obtain denser, more reliable matches. It does not rely on high-resolution feature maps, which makes it well suited to settings where heavy computation is not affordable. A small neural network (an MLP) fine-tunes the match locations based on their initial reliability scores. It learns to predict pixel-level offsets by considering only pairs of nearest neighbors from the original coarse-level features at 1/8 of the original spatial resolution, which significantly saves memory and compute.

Network Training

The training process uses actual matched points in images to teach the network how to correctly recognize and describe local features. The method uses a specific loss function that handles mismatches by comparing the network’s predictions against these known correspondences. The reliability of these features is also learned during training, ensuring the network can tell how confident it should be about each match it predicts. Additionally, a module to refine these matches helps ensure that the predicted locations are as accurate as possible.

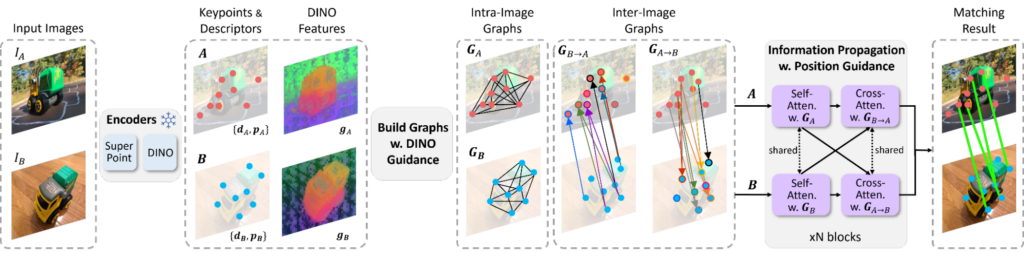

OmniGlue

Model Overview

OmniGlue, the first learnable image matcher that is designed with generalization as a core principle, consists of 4 stages:

- Feature Extraction:

    - SuperPoint Encoder: Extracts high-resolution, fine-grained local features crucial for precise matching in complex scenes.

    - DINOv2 Encoder: This encoder uses a pre-trained vision transformer model to extract more generalized and robust features that capture broader visual patterns, facilitating better matching across significant domain variations.

- Keypoint Association Graphs:

    - Intra-image Graphs: Construct dense connectivity graphs within each image, allowing for comprehensive feature interrelation and enhancing local descriptor refinement.

    - Inter-image Graphs: Uses the guidance from DINOv2 to selectively establish connections between similar keypoints from different images, prioritizing high-probability matches and reducing computational overhead by pruning unlikely connections.

- Information Propagation:

    - Integrates dual attention mechanisms:

        - Self-Attention: Focuses on individual graphs to refine keypoints based on intra-image contextual cues.

        - Cross-Attention: Bridges keypoints between images, selectively incorporating features based on their estimated relevance and similarity, guided by DINOv2 insights.

    - The attention processes are designed to be adaptive, dynamically adjusting the focus based on the complexity and distinctiveness of the feature landscapes within and across images.

- Descriptor Refinement and Matching:

    - After propagating and refining information, the descriptors are optimized through a sequence of operations that ensure the matching is not only based on immediate feature similarity but also on a more holistic integration of spatial and appearance attributes.

    - Final matching is achieved using a combination of learned metrics and geometric constraints, ensuring the matches are consistent with both local and global image structures.

OmniGlue Details

- Detailed Feature Extraction:

    - Each keypoint’s local descriptor is enriched with contextually relevant information extracted from both the SuperPoint and DINOv2 outputs, ensuring a rich representation that balances specificity and generalizability.

    - Positional embeddings are dynamically adjusted using multi-layer perceptrons (MLPs), which refine the spatial encoding based on both local image features and the broader image context provided by DINOv2.

- Graph Construction:

    - Dynamic Graph Pruning: Utilizes a thresholding mechanism informed by DINOv2 to dynamically adjust the connectivity in inter-image graphs, ensuring that only potentially meaningful connections are maintained. This optimizes both computational efficiency and matching accuracy.

- Advanced Information Propagation:

    - It incorporates a novel hybrid attention mechanism that combines traditional attention with domain-adaptive components, allowing the model to adjust its focus based on the domain’s specificity and the features’ distinctiveness.

    - Positional information is used to modulate the attention mechanism to enhance its sensitivity to contextually important features while preventing overfitting to misleading positional cues.
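The idea of foundation-model guidance can be illustrated with a small sketch: prune the inter-image graph using the similarity of DINOv2-like features, then let each keypoint attend only to the keypoints that survive. Several simplifications are our own assumptions: a fixed top-k rule stands in for OmniGlue’s pruning, the attention is single-head without learned projections or positional guidance, and all features are random. The function names are hypothetical:

```python
import numpy as np

def topk_guidance_mask(dino_a, dino_b, k):
    """Hypothetical pruning rule: for every keypoint in image A, keep the k
    keypoints in image B with the highest cosine similarity of their
    DINOv2-like features. Returns a boolean (Na, Nb) mask."""
    a = dino_a / np.linalg.norm(dino_a, axis=1, keepdims=True)
    b = dino_b / np.linalg.norm(dino_b, axis=1, keepdims=True)
    sim = a @ b.T
    top = np.argsort(-sim, axis=1)[:, :k]
    mask = np.zeros_like(sim, dtype=bool)
    np.put_along_axis(mask, top, True, axis=1)
    return mask

def masked_cross_attention(q_desc, kv_desc, mask):
    """Single-head scaled dot-product cross-attention (no learned projections):
    keypoints of image A attend only to the image-B keypoints allowed by mask."""
    d = q_desc.shape[1]
    scores = q_desc @ kv_desc.T / np.sqrt(d)
    scores = np.where(mask, scores, -np.inf)       # pruned edges get zero weight
    scores -= scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ kv_desc, weights

rng = np.random.default_rng(0)
dino_a, dino_b = rng.normal(size=(5, 32)), rng.normal(size=(7, 32))
desc_a, desc_b = rng.normal(size=(5, 16)), rng.normal(size=(7, 16))
mask = topk_guidance_mask(dino_a, dino_b, k=3)
out, w = masked_cross_attention(desc_a, desc_b, mask)
print(mask.sum(axis=1))                                              # [3 3 3 3 3]
print(np.allclose(w[~mask], 0.0), np.allclose(w.sum(axis=1), 1.0))   # True True
```

Pruning reduces the attention cost from every pair of keypoints to k candidates per keypoint, and it keeps attention away from keypoints that the foundation model considers unlikely matches.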

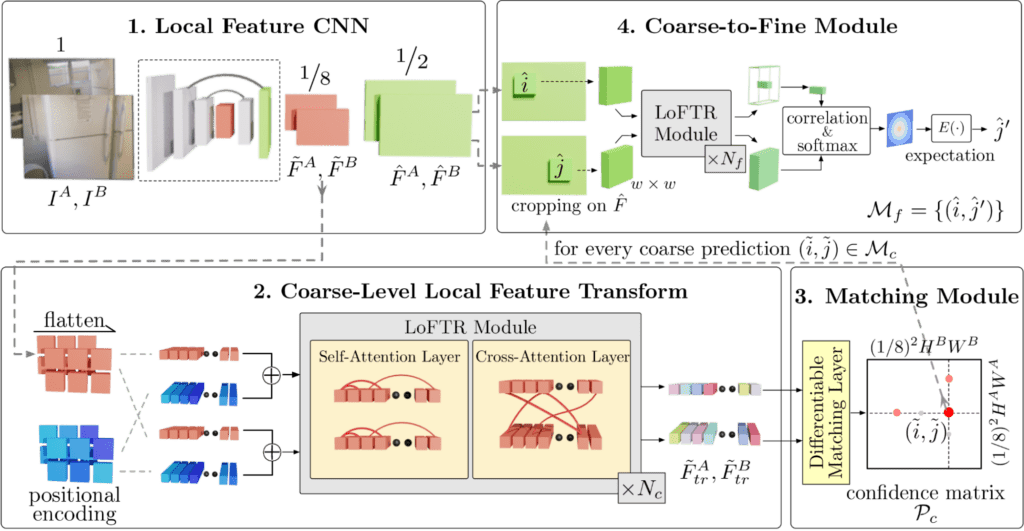

LoFTR

LoFTR introduced the Transformer architecture in the traditional feature matching pipeline. The LoFTR architecture consists of four parts:

Local Feature CNN

- Extraction of Feature Maps: This component uses a Convolutional Neural Network (CNN) to extract two levels of feature maps from each image in the pair $I^A$ and $I^B$. The coarse-level feature maps ($\tilde{F}^A$ and $\tilde{F}^B$, at 1/8 of the input resolution) provide a broad view, while the fine-level feature maps ($\hat{F}^A$ and $\hat{F}^B$, at 1/2 of the input resolution) capture detailed information. This dual extraction allows for both general alignment and detailed match refinement.

Local Feature Transformer

- Flattening and Encoding: The coarse feature maps are first flattened into 1-D vectors. Positional encoding is added to these vectors to maintain information about the location of features within the original image layout.

- Transformer Processing: The combined feature vectors are processed through the Local Feature TRansformer (LoFTR) module, which consists of Nc layers of self-attention (attention within the same image vectors) and cross-attention (attention between different image vectors). This structure allows the model to integrate information across the entire image.
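The flattening and positional-encoding step can be sketched as follows. We assume a 2D sinusoidal encoding in the style LoFTR uses, with channels grouped in fours as sin/cos of the x and y coordinates at geometrically spaced frequencies. The 60×80 coarse map (1/8 of a 480×640 image, with an illustrative 256 channels) is random:

```python
import numpy as np

def sine_positional_encoding_2d(h, w, d_model):
    """2D sinusoidal positional encoding of shape (h, w, d_model).

    Channels are grouped in fours: sin(w_k x), cos(w_k x), sin(w_k y), cos(w_k y),
    with frequencies w_k = 1 / 10000^(2k / d_model).
    """
    assert d_model % 4 == 0
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    k = np.arange(d_model // 4)
    freqs = 1.0 / (10000 ** (2 * k / d_model))
    pe = np.zeros((h, w, d_model))
    pe[..., 0::4] = np.sin(xs[..., None] * freqs)
    pe[..., 1::4] = np.cos(xs[..., None] * freqs)
    pe[..., 2::4] = np.sin(ys[..., None] * freqs)
    pe[..., 3::4] = np.cos(ys[..., None] * freqs)
    return pe

def flatten_with_position(feature_map):
    """(H, W, C) coarse feature map -> (H*W, C) token sequence with positions added."""
    h, w, c = feature_map.shape
    return (feature_map + sine_positional_encoding_2d(h, w, c)).reshape(h * w, c)

coarse = np.random.default_rng(0).normal(size=(60, 80, 256))
tokens = flatten_with_position(coarse)
print(tokens.shape)  # (4800, 256)
```

Once flattened, the tokens no longer carry their grid layout, so the added encoding is what lets attention layers reason about where each feature came from.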

Differentiable Matching Layer

- Confidence Matrix and Selection: The output of the transformer module is passed into a matching layer that produces a confidence matrix Pc, representing the confidence levels of potential matches. Matches are selected from this matrix based on a predefined confidence threshold and a mutual-nearest-neighbor criterion, resulting in a set of coarse-level match predictions (Mc).
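The matching layer can be sketched with a dual-softmax: similarity scores are divided by a temperature, a softmax is taken along both rows and columns, and the two are multiplied to give the confidence matrix Pc. Matches must then pass the confidence threshold and be mutual nearest neighbors. The temperature of 0.1, the threshold of 0.2, and the synthetic features are assumptions for illustration:

```python
import numpy as np

def _softmax(x, axis):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def coarse_matches(feat_a, feat_b, temperature=0.1, threshold=0.2):
    """Dual-softmax confidence matrix plus mutual-nearest-neighbor selection.

    feat_a: (Na, C) and feat_b: (Nb, C) transformed coarse features.
    Returns (Pc, list of (i, j) matches).
    """
    scores = feat_a @ feat_b.T / temperature
    pc = _softmax(scores, axis=1) * _softmax(scores, axis=0)   # row- and column-wise
    row_best = pc.argmax(axis=1)
    col_best = pc.argmax(axis=0)
    matches = [
        (i, int(j)) for i, j in enumerate(row_best)
        if col_best[j] == i and pc[i, j] > threshold          # mutual NN + confidence
    ]
    return pc, matches

rng = np.random.default_rng(0)
feat_b = rng.normal(size=(6, 32))
feat_b /= np.linalg.norm(feat_b, axis=1, keepdims=True)
feat_a = feat_b[[4, 0, 2]] + 0.01 * rng.normal(size=(3, 32))   # 3 noisy copies
pc, matches = coarse_matches(feat_a, feat_b)
print(matches)  # [(0, 4), (1, 0), (2, 2)]
```

Because every step is differentiable (the thresholding is applied only at inference), the confidence matrix can be supervised directly with ground-truth correspondences during training.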

Refinement of Matches

- Local Window Cropping: For each coarse prediction pair $(\tilde{i}, \tilde{j})$ in Mc, a local window of size w×w is cropped from the fine-level feature maps.

- Sub-pixel Refinement: Within these local windows, the coarse matches are refined to sub-pixel accuracy, yielding the final match predictions (Mf). This step ensures that the matching is not only based on a broad alignment but also precisely adjusted at a finer scale.
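The sub-pixel step can be sketched as an expectation over a heatmap: correlate the fine feature at the match location in one image with every feature in the w×w window of the other, turn the scores into a probability distribution with a softmax, and take the expected position. This follows the idea described for LoFTR but omits details such as the fine-level Transformer applied before correlation; the 5×5 window and the features are synthetic:

```python
import numpy as np

def subpixel_expectation(center_feat, window_feats):
    """Refine a match inside a w x w window via the expectation of a softmax heatmap.

    center_feat: (C,) fine feature at the match location in image A.
    window_feats: (w, w, C) fine features of the window cropped in image B.
    Returns the (dy, dx) offset from the window center, in pixels.
    """
    w = window_feats.shape[0]
    heat = np.einsum("c,ijc->ij", center_feat, window_feats)   # correlation scores
    heat = np.exp(heat - heat.max())
    heat /= heat.sum()                                         # softmax over the window
    coords = np.arange(w) - (w - 1) / 2.0                      # offsets, center = 0
    dy = float((heat.sum(axis=1) * coords).sum())
    dx = float((heat.sum(axis=0) * coords).sum())
    return dy, dx

w = 5
center = np.zeros(8)
center[0] = 1.0
window = np.zeros((w, w, 8))
window[2, 2, 0] = 50.0     # two equally strong responses in neighboring columns
window[2, 3, 0] = 50.0
dy, dx = subpixel_expectation(center, window)
print(dy, dx)  # dy ≈ 0, dx ≈ 0.5
```

With two equally strong peaks in neighboring columns, the expected position lands halfway between them, a sub-pixel offset that a plain argmax could never produce.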

Download the Code Here

Feature Matching – Code Pipeline for WebUI

Now, we come to the coolest part of the article. Let’s start coding. To test all the models and compare the results, we will use Image Matching WebUI and make some additional changes according to our use case. Here, we will compare the classical SIFT, LoFTR, XFeat, and OmniGlue. I hope you have downloaded the code. If not, click the Download Code button above.

First, we will set up the environment. We are using miniconda for managing environments. We create a virtual environment with Python 3.10:

```bash
conda create -n imw python=3.10.0
conda activate imw
```

Then we need to install PyTorch with CUDA support. We are using PyTorch 2.2.1 and CUDA 12.1. Install them with the following command:

```bash
conda install pytorch==2.2.1 torchvision==0.17.1 torchaudio==2.2.1 pytorch-cuda=12.1 -c pytorch -c nvidia
```

We also need Torchmetrics and PyTorch Lightning:

```bash
conda install torchmetrics=0.6.0 pytorch-lightning=1.4.9
```

Then, we need to install the dependencies in the requirements.txt file:

```bash
pip install -r requirements.txt
```

Now, we are ready to run the WebUI. Run the app.py script:

```bash
cd image-matching-webui/
python app.py
```

This WebUI collects the codebases of many different feature matching models in one place. The main directory structure is:

```text
├── app.py
├── assets
├── build_docker.sh
├── CODE_OF_CONDUCT.md
├── datasets
├── docker
├── Dockerfile
├── environment.yaml
├── experiments
├── format.sh
├── hloc
├── LICENSE
├── output.pkl
├── pyproject.toml
├── README.md
├── requirements.txt
├── test_app_cli.py
├── third_party
└── ui
```

Let’s understand the codebase now. The codebases of all the models are collected in the third_party folder:

```text
./third_party/
├── ALIKE
├── ASpanFormer
├── COTR
├── d2net
├── DarkFeat
├── DeDoDe
├── DKM
├── dust3r
├── gim
├── GlueStick
├── lanet
├── LightGlue
├── mast3r
├── mickey
├── omniglue
├── pram
├── r2d2
├── RoMa
├── RoRD
├── SGMNet
├── SOLD2
├── SuperGluePretrainedNetwork
└── TopicFM
```

The hloc folder contains a general pipeline shared by all the feature matching models:

```text
./hloc/
├── extractors
├── matchers
├── pipelines
├── __pycache__
└── utils
```

The main integration code lives in the ui folder:

```text
./ui/
├── api.py
├── app_class.py
├── config.yaml
├── __init__.py
├── __pycache__
├── sfm.py
├── utils.py
└── viz.py
```

And then we have our app.py:

```python
import argparse
from pathlib import Path

from ui.app_class import ImageMatchingApp

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--server_name",
        type=str,
        default="0.0.0.0",
        help="server name",
    )
    parser.add_argument(
        "--server_port",
        type=int,
        default=7860,
        help="server port",
    )
    parser.add_argument(
        "--config",
        type=str,
        default=Path(__file__).parent / "ui/config.yaml",
        help="config file",
    )
    args = parser.parse_args()
    ImageMatchingApp(
        args.server_name, args.server_port, config=args.config
    ).run()
```

Under the hood, app.py calls the ImageMatchingApp(...) class from ui/app_class.py. Let’s understand how this class works. app_class.py defines three classes:

```python
from pathlib import Path
from typing import Any, Dict, Optional, Tuple

import gradio as gr
import numpy as np
from easydict import EasyDict as edict
from omegaconf import OmegaConf

from ui.sfm import SfmEngine
from ui.utils import (
    GRADIO_VERSION,
    gen_examples,
    generate_warp_images,
    get_matcher_zoo,
    load_config,
    ransac_zoo,
    run_matching,
    run_ransac,
    send_to_match,
)

DESCRIPTION = """
# Image Matching WebUI

This Space demonstrates [Image Matching WebUI](https://github.com/Vincentqyw/image-matching-webui) by vincent qin. Feel free to play with it, or duplicate to run image matching without a queue!

<br/>

## It's a modified version of the original code for better structure and optimization.
"""


class ImageMatchingApp:
    ...  # class body collapsed for readability


class AppBaseUI:
    ...  # class body collapsed for readability


class AppSfmUI(AppBaseUI):
    ...  # class body collapsed for readability
```

- ImageMatchingApp(...): This class is our main Gradio app. It contains all the Gradio components, including the inputs, methods, parameter settings, and outputs.

- AppBaseUI(...): This class includes some extra parameter inputs that we can use for more complex tasks.

- AppSfmUI(AppBaseUI): This class is for SfM (Structure from Motion), which we will not use in our case.

- The class bodies are collapsed in the listing above for readability.

```python
# button callbacks
button_run.click(
    fn=run_matching, inputs=inputs, outputs=outputs
)
```

The ImageMatchingApp(...) class connects the run button to run_matching(...) from ui/utils.py, and utils.py pulls in all the feature matching pipeline code from the hloc module:

```python
from hloc import (
    DEVICE,
    extract_features,
    extractors,
    logger,
    match_dense,
    match_features,
    matchers,
)
```

The hloc module wraps the code from the third_party folder so that every model fits into the same pipeline. If you remember, third_party is the folder that holds all the models and their codebases. Let’s simplify the process: suppose you are doing feature matching with OmniGlue. The pipeline will look like this:

- app.py calls the ImageMatchingApp(...) class from ui/app_class.py.

- ImageMatchingApp(...) calls the run_matching(...) function from ui/utils.py.

- In utils.py, the run_matching(...) function calls hloc/matchers/omniglue.py.

- omniglue.py imports the omniglue module from third_party/omniglue.

- Finally, the whole pipeline runs the feature matching and returns the matches to the Gradio interface in app.py.

This is a general overview of the pipeline; many more steps happen along the way. It is the theory we learned above, applied in Python code. The codebase is too large to explain fully in this section, so we highly recommend going through the code yourself for a better understanding. Here, we have explored the main execution pipeline. Now that we understand the code structure, let’s move on to the experiment results.

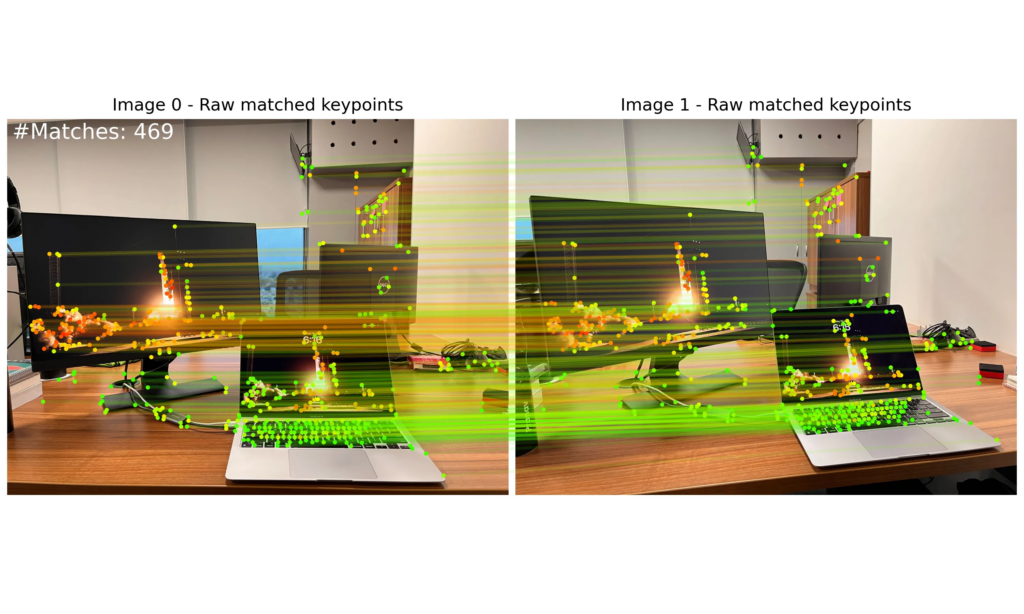

Feature Matching – Experiment Results

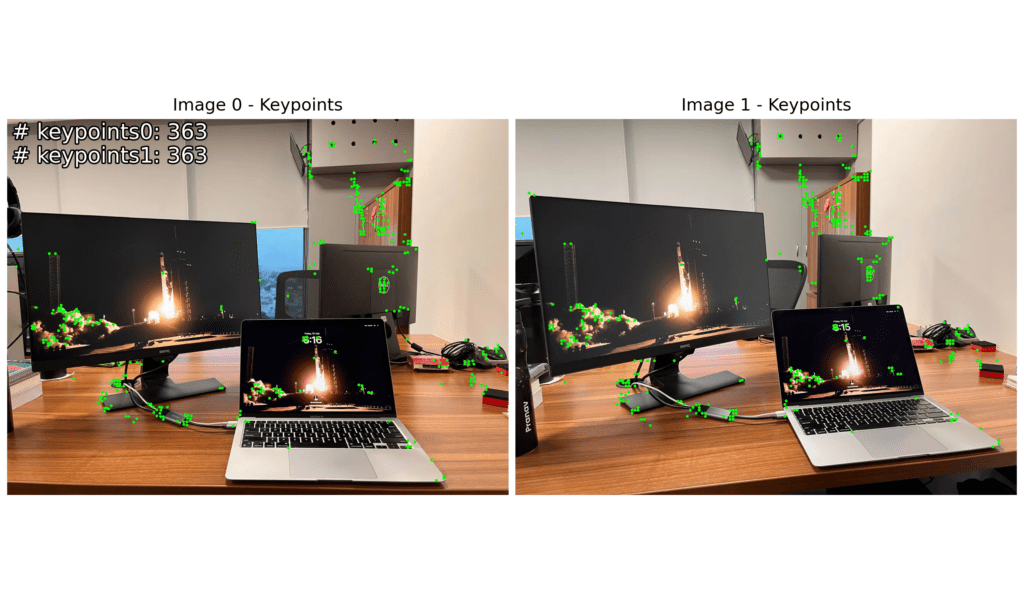

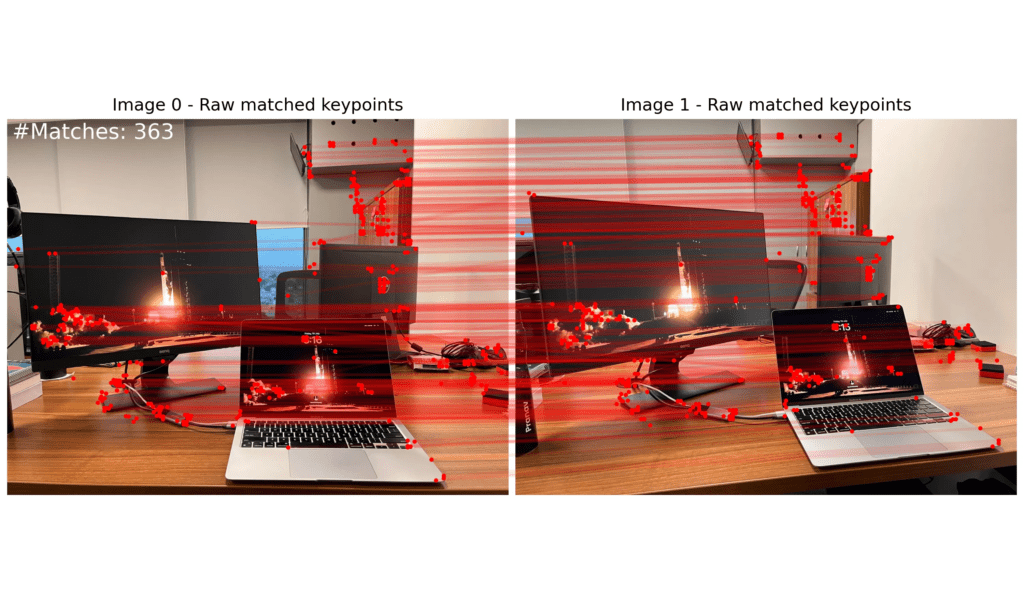

Feature Matching with LoFTR

The inference time log:

```text
Loaded LoFTR with weights outdoor
Loading model using: 0.368s
Matching images done using: 0.528s
RANSAC matches done using: 2.302s
Display matches done using: 1.434s
TOTAL time: 5.411s
```

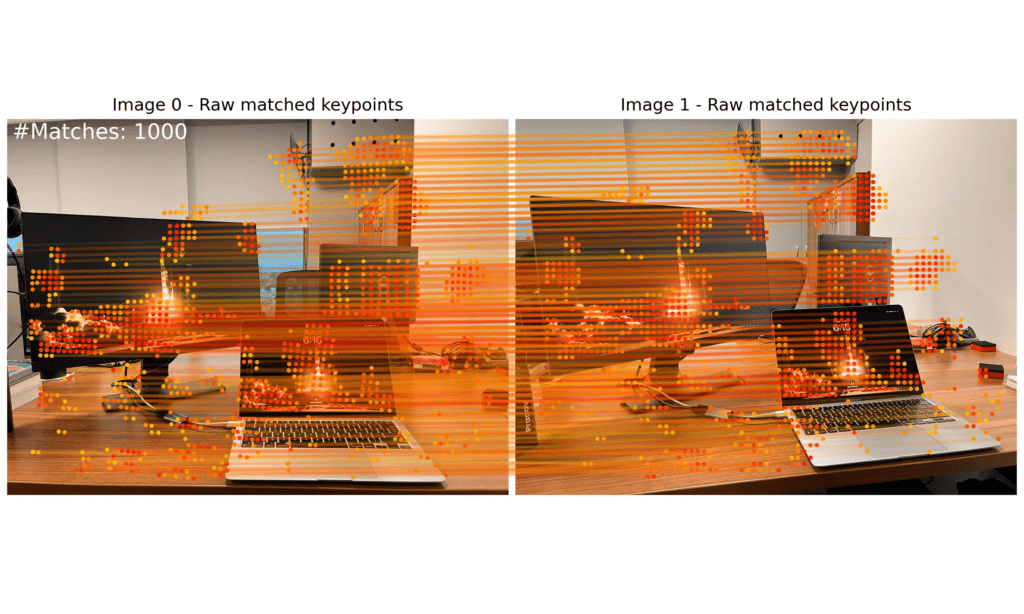

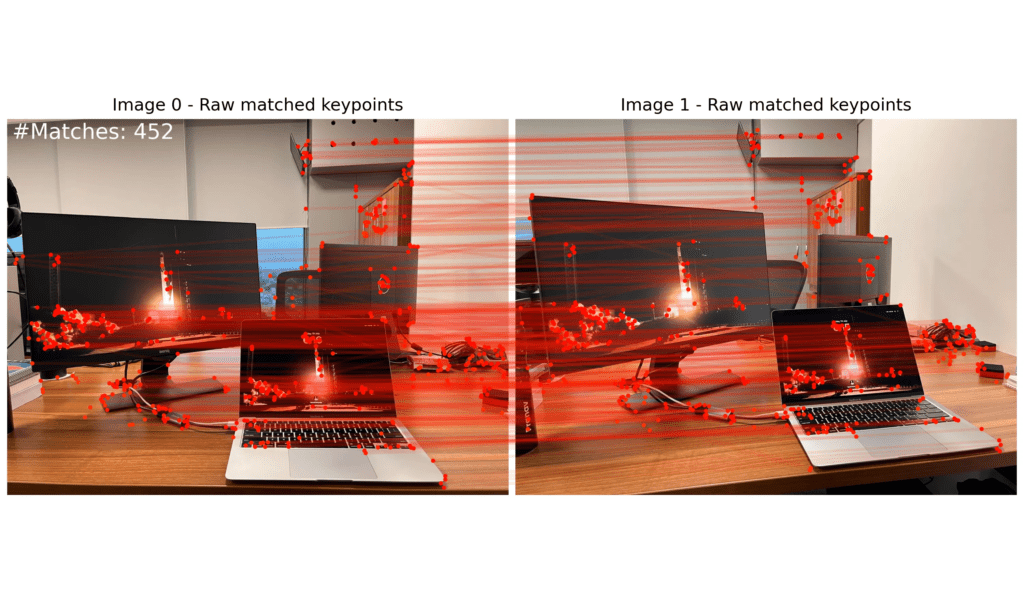

Feature Matching with XFeat (Dense)

The inference time log:

```text
Load XFeat(dense) model done
Loading model using: 0.983s
Matching images done using: 0.194s
RANSAC matches done using: 2.161s
Display matches done using: 1.358s
TOTAL time: 5.463s
```

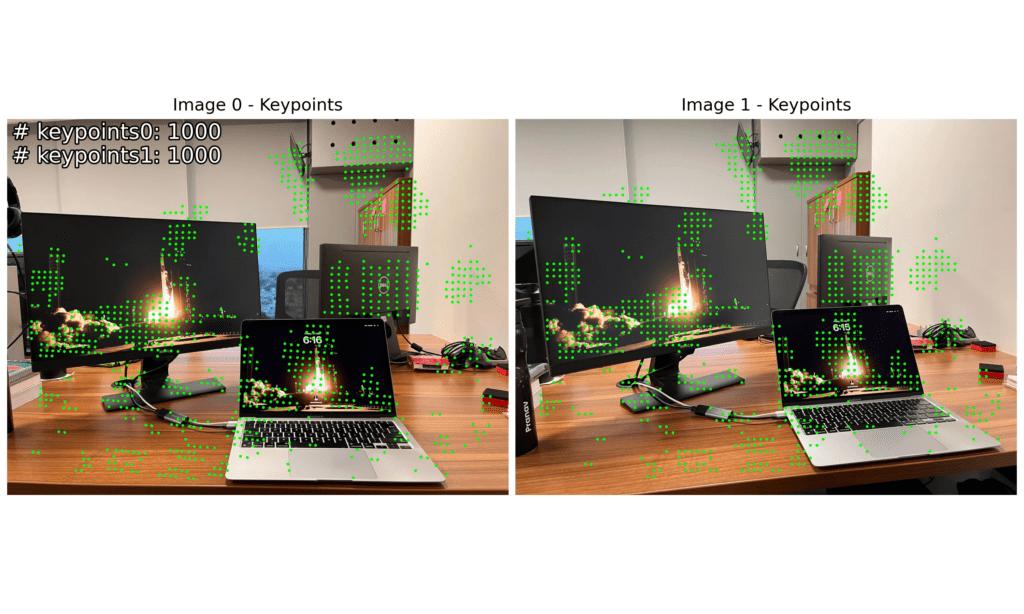

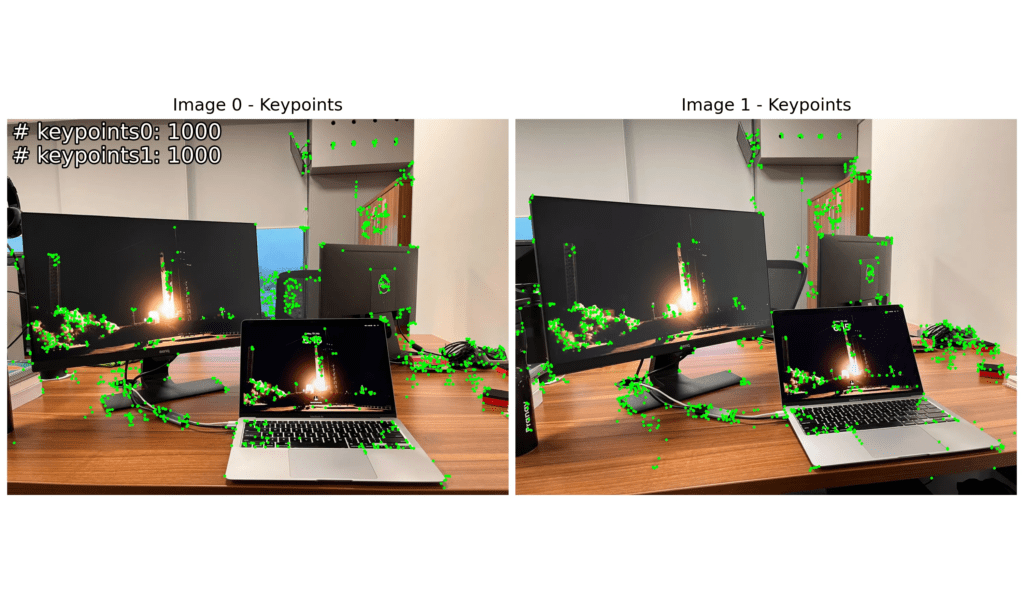

Feature Matching with XFeat (Sparse)

The inference time log:

```text
Load XFeat(sparse) model done.
Matching images done using: 1.351s
RANSAC matches done using: 2.177s
Display matches done using: 1.392s
TOTAL time: 5.704s
```

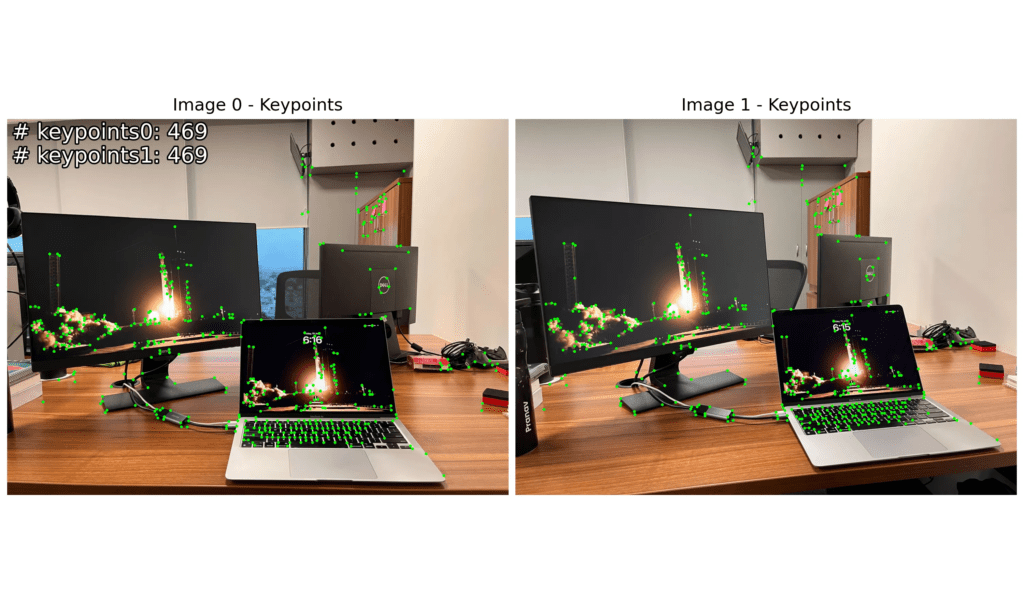

Feature Matching with OmniGlue

The inference time log:

```text
Loaded OmniGlue model done!
Loading model using: 4.561s
Matching images done using: 7.700s
RANSAC matches done using: 2.166s
Display matches done using: 1.395s
TOTAL time: 16.606s
```

Overview

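- We applied a match threshold of 1000.

- LoFTR had the lowest total time of all the models (5.411 s), although XFeat (dense) had the fastest matching step (0.194 s versus 0.528 s for LoFTR).

- XFeat, which is designed to run efficiently even on a CPU, gives decent matches in an acceptable time.

- OmniGlue produced the most accurate matches, although it was by far the slowest (16.606 s in total, 7.700 s of it for matching).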

Conclusion

Having reached this point, we hope that you now have a clear understanding of feature matching. Apply this learning to your code and build some amazing projects, perhaps a face recognition app or a 3D Avatar Maker. If you have something to share, please let us know!

References

- Xu, Shibiao, et al. “Local feature matching using deep learning: A survey.” Information Fusion 107 (2024): 102344.

- Sun, Jiaming, et al. “LoFTR: Detector-free local feature matching with transformers.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2021.

- Potje, Guilherme, et al. “XFeat: Accelerated Features for Lightweight Image Matching.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2024.

- Jiang, Hanwen, et al. “OmniGlue: Generalizable Feature Matching with Foundation Model Guidance.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2024.

- All the assets except the experiment results are taken from Google Image Search, Medium, YouTube, project pages of research papers, etc.