In computer vision, YOLOv8 object tracking is changing how we approach real-time tracking and analysis of moving objects. This article takes a close look at YOLOv8 object tracking and shows how to apply it to object tracking and counting. You will see several practical implementations and learn how these techniques can be used in real-world scenarios. The article also explores, in depth, the inference pipeline for object tracking and counting using YOLOv8. All the code scripts used in this article are free and available for download. You can download them by clicking the “Download Code” button below.

This comprehensive guide is not just informative but also equips you with the knowledge to apply different types of object counting in everyday situations, enhancing your understanding and capability in the dynamic field of computer vision.

- What is YOLOv8?

- Using YOLOv8 for Object Detection

- OpenCV for YOLOv8 Object Tracking

- What is Object Tracking?

- Types of Object Tracking

- YOLOv8 Object Tracking

- What is Object Counting?

- Object Counting Using YOLOv8 Object Tracking

- Applications of YOLOv8 Object Tracking and Object Counting

- Key Takeaways

- Conclusion

- References

What is YOLOv8?

YOLOv8 is the latest family of YOLO-based object detection models from Ultralytics that provides state-of-the-art performance.

Building on the previous YOLO versions, the YOLOv8 model is faster and more accurate, and it provides a unified framework for training models that perform:

- Object Detection

- Object Tracking

- Instance Segmentation

- Image Classification

Ultralytics has released a complete repository for YOLO models. Ultralytics will also release a paper on arXiv comparing YOLOv8 with other state-of-the-art vision models. We will not discuss YOLOv8 in depth here; read our detailed article about YOLOv8 to learn more about the model and its applications. In this article, we will explore YOLOv8 object tracking and how we can use YOLOv8 and OpenCV for object tracking and counting.

Using YOLOv8 for Object Detection

Before we explore YOLOv8 object tracking and counting, it’s important to note that effective object tracking requires input from an object detection system, in this case YOLOv8.

Object detection is a task where we localize and classify objects in an image or sequence of video frames. You can read our article about moving object detection using OpenCV, where we discussed how to detect objects just using OpenCV.

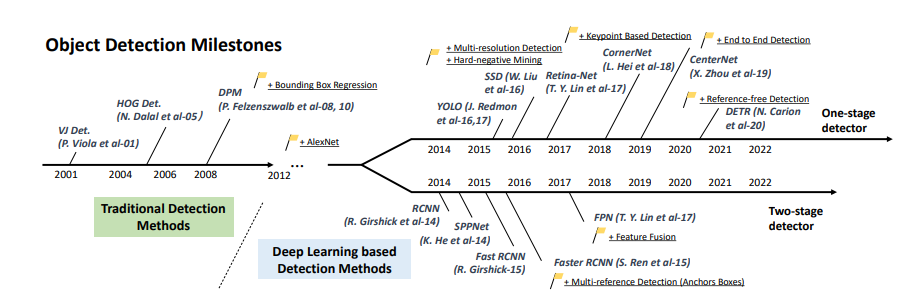

A paper by Zhengxia Zou, Keyan Chen, et al. [1] suggests that the progress of object detection over the past two decades can be divided into two historical periods: the traditional object detection period (before 2014) and the deep learning-based detection period (after 2014). More recently, we also have RT-DETR (Real-Time Detection Transformer), which uses Vision Transformers to process multiscale image features efficiently and in real time. You can check our detailed article about object detection to learn more.

OpenCV for YOLOv8 Object Tracking

OpenCV is our most extensive open-source library for computer vision, containing a very wide range of image-processing algorithms. Using OpenCV for YOLOv8 object tracking combines the detection capabilities of YOLOv8 with the image and video handling of the OpenCV library, which makes it a practical basis for real-time object tracking. Let’s explore this combination in more detail.

OpenCV 4.2 provides eight different trackers out of the box: BOOSTING, MIL, KCF, TLD, MEDIANFLOW, GOTURN, MOSSE, and CSRT. A majority of open-source trackers use OpenCV for most of their visualization and image processing work. Learn more about OpenCV object tracking in our detailed article, Object Tracking using OpenCV.

What is Object Tracking?

YOLOv8 object tracking extends object detection: we identify the location and class of objects within a frame and maintain a unique ID for each detected object across subsequent video frames.

You might have a question at this point: if you can detect the object in every frame, why do you need tracking?

The answer is straightforward: with object detection alone, you face problems such as occlusion, where the detector misses the object in some frames, and the detections in different frames are not linked to one another. A tracker bridges these gaps and keeps a consistent identity for each object throughout the video frames. The Complete Guide to Object Tracking is a great resource to understand this concept better.
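To make the idea of keeping an ID alive through a missed detection concrete, here is a minimal sketch of a toy tracker. It is not what YOLOv8 or its built-in trackers do internally; the class name `NaiveIoUTracker`, the `iou_threshold` and `max_missed` parameters, and the greedy IoU matching are all assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class NaiveIoUTracker:
    """Hypothetical toy tracker: greedy IoU matching plus a short memory for lost tracks."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5) -> None:
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.tracks: Dict[int, Box] = {}  # track ID -> last known box
        self.missed: Dict[int, int] = {}  # track ID -> consecutive frames without a match
        self.next_id = 1

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Match this frame's detections to known tracks; return the visible {ID: box}."""
        unmatched = list(detections)
        visible: Dict[int, Box] = {}
        for track_id in list(self.tracks):
            last_box = self.tracks[track_id]
            best = max(unmatched, key=lambda det: iou(last_box, det), default=None)
            if best is not None and iou(last_box, best) >= self.iou_threshold:
                self.tracks[track_id] = best
                self.missed[track_id] = 0
                visible[track_id] = best
                unmatched.remove(best)
            else:
                # Keep the track for a while so the ID survives a short occlusion.
                self.missed[track_id] += 1
                if self.missed[track_id] > self.max_missed:
                    del self.tracks[track_id]
                    del self.missed[track_id]
        for det in unmatched:  # anything left over starts a new track
            self.tracks[self.next_id] = det
            self.missed[self.next_id] = 0
            visible[self.next_id] = det
            self.next_id += 1
        return visible


tracker = NaiveIoUTracker()
frame1 = tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)])
frame2 = tracker.update([(51, 50, 61, 60)])  # first object missed by the detector
frame3 = tracker.update([(1, 0, 11, 10), (52, 50, 62, 60)])
print(sorted(frame1), sorted(frame2), sorted(frame3))
```

In this run the first object is not detected in the second frame, yet it gets its original ID back in the third frame. A detector alone has no notion of that continuity.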

Types of Object Tracking

This article focuses mainly on YOLOv8 object tracking and object counting. To fully understand the landscape of object-tracking technology, it’s essential first to explore the various current tracking methods. This exploration will include a detailed look at different types of trackers and their unique capabilities.

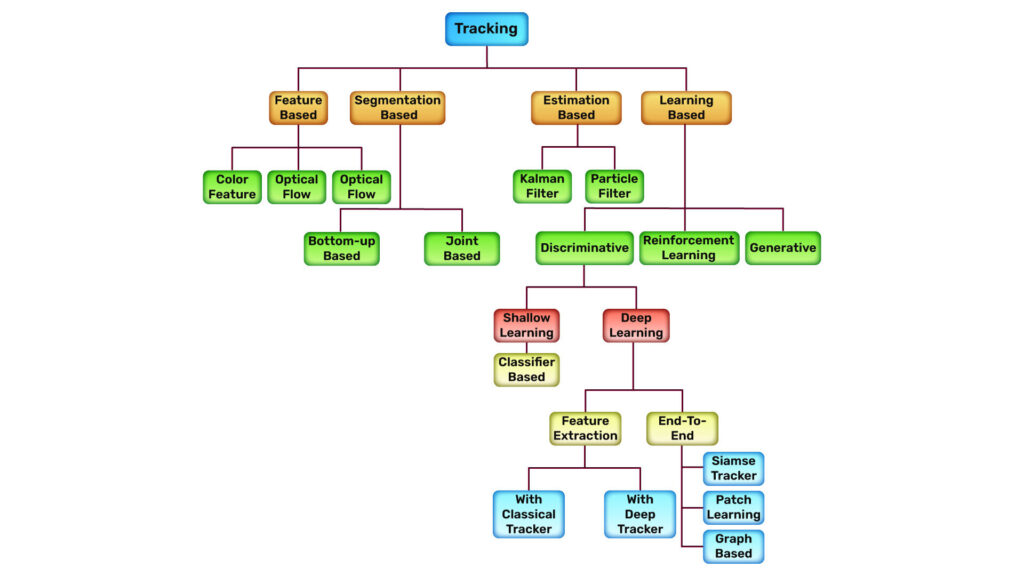

Method Based Classification

In the paper by Zahra Soleimanitaleb and Mohammad Ali Keyvanrad [2], we learn that object tracking methods fall into different categories. These range from feature-based methods such as optical flow, to estimation-based methods such as the Kalman filter, to deep learning-based methods such as GOTURN, and to SORT by Alex Bewley, Zongyuan Ge, et al. [3], which pairs a deep detector with Kalman filtering and Hungarian matching.
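As an illustration of the estimation-based family, the sketch below implements a small constant-velocity Kalman filter for a single coordinate. It is a simplified teaching example, not code from any of the cited papers; the class name `ConstantVelocityKalman1D`, the noise values, and the diagonal process noise are assumptions.

```python
class ConstantVelocityKalman1D:
    """Toy Kalman filter over the state [position, velocity] with position-only measurements."""

    def __init__(self, process_var: float = 0.01, measurement_var: float = 1.0) -> None:
        self.p = 0.0  # estimated position
        self.v = 0.0  # estimated velocity
        # Covariance matrix P starts large because the initial state is only a guess.
        self.P = [[100.0, 0.0], [0.0, 100.0]]
        self.q = process_var      # assumed process noise added to the diagonal of P
        self.r = measurement_var  # assumed measurement noise

    def predict(self, dt: float = 1.0) -> float:
        """Project the state and covariance one step ahead: x = F x, P = F P F^T + Q."""
        self.p += dt * self.v
        (a, b), (c, d) = self.P
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.p

    def update(self, z: float) -> None:
        """Correct the prediction with a measured position z (measurement matrix H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.r         # innovation covariance
        k0, k1 = a / s, c / s  # Kalman gain for position and velocity
        residual = z - self.p
        self.p += k0 * residual
        self.v += k1 * residual
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]


kf = ConstantVelocityKalman1D()
for t in range(1, 31):
    kf.predict()
    kf.update(2.0 * t)  # an object moving 2 units per frame
print(round(kf.p, 2), round(kf.v, 2))
```

Trackers in the SORT family typically run a filter of this kind on the bounding box coordinates, so that a track can be predicted forward even in frames where the detector misses the object.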

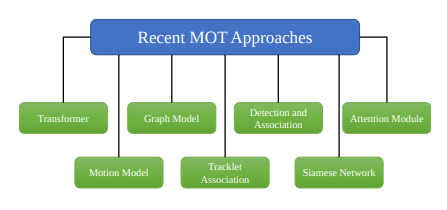

The paper by Mk Bashar, Samia Islam, et al. [4] explains recent approaches to object tracking, such as Transformer or attention modules. This article will also explore applying YOLOv8 object tracking in real time, demonstrating its practical use in this advanced field.

Application Based Classification

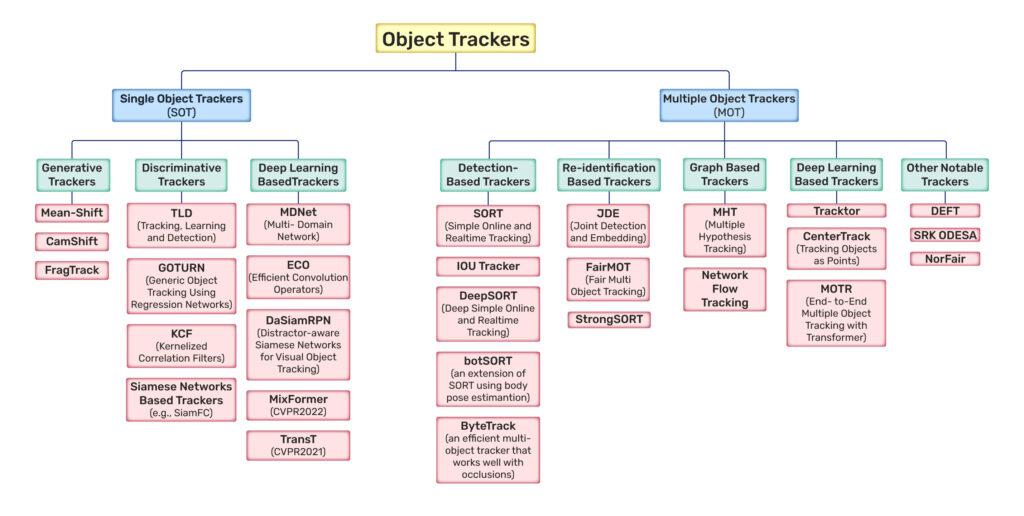

We can classify Object Tracking into two main categories based on the type and functionality of available trackers.

Single Object Tracker (SOT)

In this class of trackers, the first frame is marked using a rectangle to indicate the location of the object we want to track. The object is then tracked in subsequent frames using the tracking algorithm. In most real-life applications, these trackers are used with an object detector. OpenCV has built-in trackers for you, including KCF, TLD, and GOTURN. You can check our detailed article about Single Object Trackers for more information.

Multiple Object Tracker (MOT)

With a fast object detector, it makes sense to detect multiple objects in each frame and then run a tracker to follow all of them across consecutive frames.

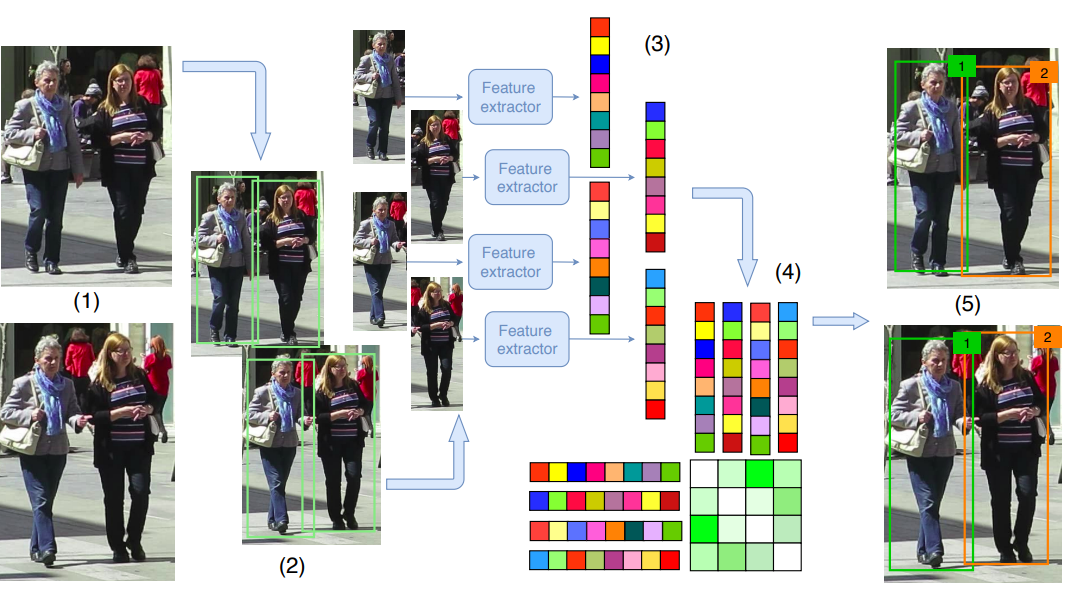

Various advanced Multi-Object Tracking (MOT) systems exist, such as DeepSORT, FairMOT, ByteTrack, and BoT-SORT. These systems employ sophisticated algorithms to track multiple objects accurately and efficiently in video sequences.

According to the paper by Gioele Ciaparrone, Francisco Luque Sánchez, et al. [5], the standard approach in Multiple Object Tracking (MOT) algorithms is tracking-by-detection, where detections (bounding boxes identifying targets in video frames) guide the tracking process. These detections are associated with one another across frames so that the same target keeps a consistent ID. This makes MOT primarily an assignment problem. Thanks to modern detection frameworks ensuring high-quality detections, most MOT methods focus on improving data association rather than detection. Many MOT datasets provide pre-determined detections, allowing algorithms to bypass detection and be compared solely on association quality. This approach isolates the tracking results from the impact of detector performance. We have discussed only some of the trackers here. You can learn more in our detailed article about Multiple Object Trackers.
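The assignment view of tracking-by-detection can be sketched in a few lines. The example below picks the track-to-detection pairing with the highest total IoU by exhaustive search, which is only practical for a handful of objects; production trackers usually solve the same problem with the Hungarian algorithm. The function name `associate` and the `min_iou` gate are assumptions made for this illustration.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: Dict[int, Box], detections: List[Box], min_iou: float = 0.3) -> Dict[int, int]:
    """Return {track_id: detection_index} for the pairing with the highest total IoU.

    Brute force over all pairings, so only suitable for very small inputs.
    """
    track_ids = list(tracks)
    # Each track may take one detection index, or None to stay unmatched.
    slots: List[Optional[int]] = list(range(len(detections))) + [None] * len(track_ids)
    best_score, best_match = -1.0, {}
    for perm in permutations(slots, len(track_ids)):
        score, match, valid = 0.0, {}, True
        for track_id, det_idx in zip(track_ids, perm):
            if det_idx is None:
                continue
            overlap = iou(tracks[track_id], detections[det_idx])
            if overlap < min_iou:  # reject pairings that link boxes with too little overlap
                valid = False
                break
            score += overlap
            match[track_id] = det_idx
        if valid and score > best_score:
            best_score, best_match = score, match
    return best_match


tracks = {1: (0, 0, 10, 10), 2: (20, 0, 30, 10), 3: (200, 200, 210, 210)}
detections = [(21, 0, 31, 10), (1, 0, 11, 10), (100, 100, 110, 110)]
matches = associate(tracks, detections)
print(matches)
```

Track 3 has no nearby detection and stays unmatched, while the third detection has no nearby track and would normally start a new track.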

YOLOv8 Object Tracking

When you hear the term object tracking using YOLOv8, it sounds fascinating, right? So, let’s get started.

Setting up the Environment

First, we will create a virtual environment in our workspace. We are using Miniconda here. You can use any other environment manager as per your requirements.

!conda create -n your_env python=3.9.0

!conda activate your_env

!conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

!pip install ultralytics

!pip install opencv-python

The next step is to import the libraries, after which we can run the inference.

import cv2

from ultralytics import YOLO

Object Tracking with YOLOv8

There are five models in each category of YOLOv8 models for detection, segmentation, and classification. YOLOv8 Nano is the fastest and smallest, while YOLOv8 Extra Large (YOLOv8x) is the most accurate yet the slowest among them. We are going to use the YOLOv8x to run the inference. You can fine-tune these models, too, as per your use cases. This tutorial, Train YOLOv8 on Custom Dataset, will help you gain more insights about fine-tuning YOLOv8.

# Load an official or custom model

model = YOLO('yolov8x.pt') # Load an official Detect model

# Perform tracking with the model

results = model.track(source="path_to_your_video.mp4", show=False, save=True, name='test_result', persist=True, classes=18) # Tracking with default tracker; classes=18 keeps only COCO class 18 (sheep)

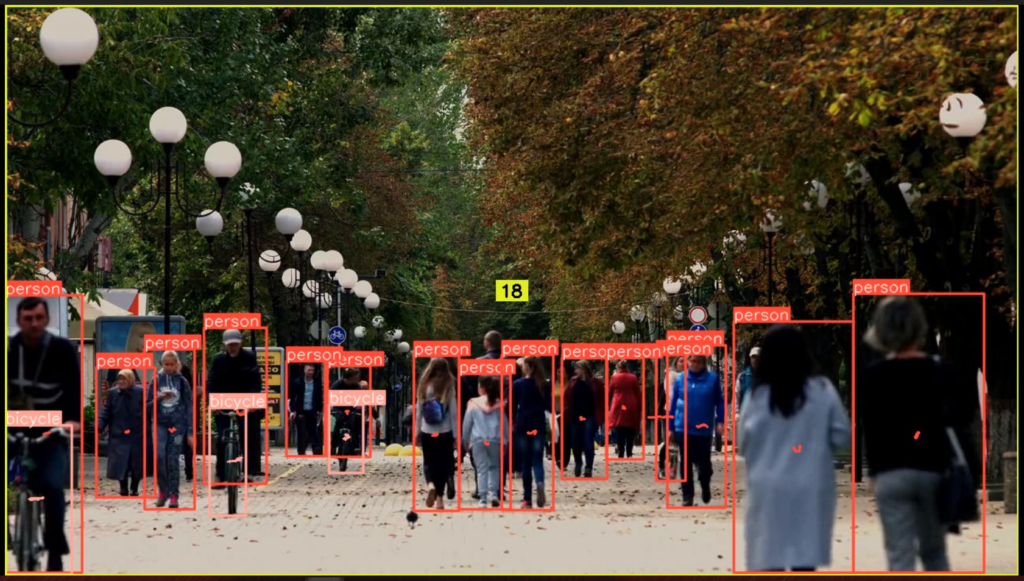

Here, we first create a YOLO model object and load the yolov8x.pt weights. Then, we use the track method to run inference. You can see the results here.

This track method internally runs a tracker.py script that supports two tracking algorithms. They can be enabled by passing the relevant YAML configuration file, such as tracker=tracker_type.yaml:

- BoT-SORT – A new robust state-of-the-art tracker that combines motion and appearance information advantages, camera-motion compensation, and a more accurate Kalman filter state vector.

- ByteTrack – A simple, effective, and generic association method that tracks by associating every detection box instead of only the high-score ones. For the low-score detection boxes, we utilize their similarities with tracklets to recover valid objects and filter out the background detections.

We ran our inference with the default tracker BoT-SORT in this article.
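The two-stage idea behind ByteTrack described above can be sketched as follows. This is a heavily simplified illustration rather than the ByteTrack implementation: it uses greedy IoU matching in place of the Hungarian algorithm, skips the Kalman prediction step, and all names and threshold values (`byte_style_association`, `high_thresh`, `min_iou`) are assumptions.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], boxes: List[Box], min_iou: float) -> Dict[int, int]:
    """Pair tracks and boxes in order of decreasing IoU; returns {track_id: box_index}."""
    pairs = sorted(
        ((iou(tb, b), tid, j) for tid, tb in tracks.items() for j, b in enumerate(boxes)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for overlap, tid, j in pairs:
        if overlap < min_iou:
            break
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    return matches


def byte_style_association(
    tracks: Dict[int, Box],
    detections: List[Detection],
    high_thresh: float = 0.5,
    min_iou: float = 0.3,
) -> Tuple[Dict[int, Box], List[Box]]:
    """Return (updated boxes per track ID, high-score boxes that would start new tracks)."""
    high = [box for box, score in detections if score >= high_thresh]
    low = [box for box, score in detections if score < high_thresh]

    # Stage 1: match all tracks against the high-score detections.
    first = greedy_match(tracks, high, min_iou)
    assigned = {tid: high[j] for tid, j in first.items()}

    # Stage 2: tracks still unmatched get a second chance with low-score detections.
    remaining = {tid: box for tid, box in tracks.items() if tid not in first}
    second = greedy_match(remaining, low, min_iou)
    assigned.update({tid: low[j] for tid, j in second.items()})

    # Unmatched low-score boxes are treated as background and dropped.
    new_tracks = [box for j, box in enumerate(high) if j not in first.values()]
    return assigned, new_tracks


tracks = {1: (0, 0, 10, 10), 2: (50, 50, 60, 60)}
detections = [
    ((1, 0, 11, 10), 0.9),          # confident detection of object 1
    ((51, 50, 61, 60), 0.2),        # weak detection of object 2, e.g. partly occluded
    ((200, 200, 210, 210), 0.15),   # weak detection with no matching track
]
assigned, new_tracks = byte_style_association(tracks, detections)
print(assigned, new_tracks)
```

The weak detection of object 2 is recovered because it overlaps an existing track, while the weak box with no matching track is discarded.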

What is Object Counting?

YOLOv8 object counting extends object detection and object tracking. It begins with YOLOv8 identifying objects in video frames. These objects are then tracked across frames by algorithms like BoT-SORT or ByteTrack, which maintain consistent identification.

Counting can take several forms: simple whole-frame counting, line counting (counting objects crossing a predefined line), and zone-based counting (tallying objects within a specific area). In/out counting further distinguishes objects entering or exiting designated areas. To ensure accuracy, especially against double counting, the objects that have already been counted are kept in lists (like counting_list), and counts are incremented (count += 1) only under specific conditions. Although occlusions and dynamic conditions remain challenging, the process yields valuable data for fields ranging from traffic monitoring to retail analytics.
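A line counter with in/out directions and protection against double counting might look like the sketch below. It assumes a horizontal line and per-frame input of `{track_id: (x, y)}` box centers, as a tracker would provide; the class name `LineCounter`, the meaning of "in" (moving downward) and "out" (moving upward), and the `counted_ids` set, which plays the role of the counting_list mentioned above, are all assumptions.

```python
from typing import Dict, Set, Tuple

Point = Tuple[float, float]


class LineCounter:
    """Counts each track ID at most once when its center crosses a horizontal line."""

    def __init__(self, line_y: float) -> None:
        self.line_y = line_y
        self.last_y: Dict[int, float] = {}   # track ID -> y coordinate in the previous frame
        self.counted_ids: Set[int] = set()   # IDs already counted, to avoid double counting
        self.in_count = 0                    # crossings from above the line to below it
        self.out_count = 0                   # crossings from below the line to above it

    def update(self, centers: Dict[int, Point]) -> None:
        """Process one frame of tracked centers."""
        for track_id, (_, y) in centers.items():
            prev = self.last_y.get(track_id)
            self.last_y[track_id] = y
            if prev is None or track_id in self.counted_ids:
                continue
            if prev < self.line_y <= y:
                self.in_count += 1
                self.counted_ids.add(track_id)
            elif prev >= self.line_y > y:
                self.out_count += 1
                self.counted_ids.add(track_id)


counter = LineCounter(line_y=100)
frames = [
    {1: (10, 90), 2: (50, 120)},
    {1: (10, 105), 2: (50, 95)},  # track 1 moves down across the line, track 2 moves up
    {1: (10, 95), 2: (50, 90)},   # track 1 jitters back; it is not counted again
]
for centers in frames:
    counter.update(centers)
print(counter.in_count, counter.out_count)
```

Counting by track ID rather than by detection is what keeps an object that hovers around the line from being counted on every frame.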

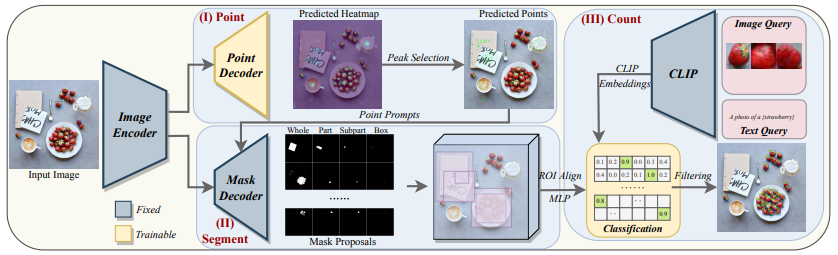

The paper by Zhizhong Huang, Mingliang Dai, et al. [6] introduces PseCo, a deep learning framework that combines the advantages of two foundation models without compromising their zero-shot capability:

(i) SAM to segment all possible objects as mask proposals and

(ii) CLIP to classify proposals to obtain accurate object counts.

In this article, we’ll focus on whole frame-based counting methods, reserving the discussion of other object-counting techniques for a separate, dedicated article.

Object Counting Using YOLOv8 Object Tracking

Using YOLOv8 object tracking, we can count objects quickly and in real-time. We will use OpenCV, too. Let’s explore how.

# Clone Ultralytics repo

!git clone https://github.com/ultralytics/ultralytics

# Navigate to the local directory

!cd ultralytics/examples/YOLOv8-Region-Counter

First, you must clone the ultralytics repository on your local machine and navigate to the YOLOv8-Region-Counter directory. Then, you need to make a few changes to the yolov8_region_counter.py script.

counting_regions = [
    {
        "name": "YOLOv8 Rectangle Region",
        "polygon": Polygon([(0, 0), (0, 1280), (720, 1280), (720, 0)]),  # Polygon points (tl, bl, br, tr)
        "counts": 0,
        "dragging": False,
        "region_color": (37, 255, 225),  # BGR value
        "text_color": (0, 0, 0),  # Region text color
    },
]

We have added a custom rectangular region defined by the four corner points of our input video frame.

Don’t be concerned about calculating those points; we’ve got you covered. Below, you’ll find a download button to take care of this.
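If you prefer to compute the corner points yourself, a small helper such as the one below is enough. It is a sketch with a hypothetical name (`full_frame_corners`) and assumes pixel coordinates in (x, y) order with the origin at the top-left of the frame.

```python
from typing import List, Tuple


def full_frame_corners(width: int, height: int) -> List[Tuple[int, int]]:
    """Corner points (x, y) of a width x height frame, ordered tl, bl, br, tr."""
    return [(0, 0), (0, height), (width, height), (width, 0)]


# A 720 x 1280 (portrait) video gives the same points used in counting_regions.
corners = full_frame_corners(720, 1280)
print(corners)
```

The width and height can be read from the video itself, for example with OpenCV's `cv2.VideoCapture` and the `cv2.CAP_PROP_FRAME_WIDTH` and `cv2.CAP_PROP_FRAME_HEIGHT` properties.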

!python ultralytics/examples/YOLOv8-Region-Counter/yolov8_region_counter.py --source "/path/to/your/video.mp4" --weights "yolov8x.pt" --view-img --save-img

Now, we are ready to run the inference. We will use this simple CLI command to run the inference on our local machine. You can see the results here.

Let’s break down how this yolov8_region_counter.py works internally.

results = model.track(frame, persist=True, classes=classes)

First, the script uses YOLOv8 to detect people and BoT-SORT to assign IDs to each detected person.

for region in counting_regions:
    for box in boxes:
        bbox_center = ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)  # Center of the bounding box
        if region["polygon"].contains(Point(bbox_center)):
            region["counts"] += 1

After that, it goes through each detected person. It determines if they fall within the predefined region. If a person is inside the region, the script increments the count for that region.
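The same center-in-region test can be reproduced without any dependencies, which helps to see what the check does. The sketch below uses a plain ray-casting test as a stand-in for the polygon `contains` call used in the script; the function names are hypothetical, and points lying exactly on the boundary may be treated differently than in the script.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def point_in_polygon(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a rightward ray from the point crosses an edge."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # the edge spans the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_region(boxes: List[Box], polygon: Sequence[Point]) -> int:
    """Count boxes whose center falls inside the polygon."""
    count = 0
    for x1, y1, x2, y2 in boxes:
        center = ((x1 + x2) / 2, (y1 + y2) / 2)
        if point_in_polygon(center, polygon):
            count += 1
    return count


region = [(0, 0), (0, 100), (100, 100), (100, 0)]
boxes = [(10, 10, 30, 30), (90, 90, 130, 130), (200, 200, 220, 220)]
people_in_region = count_in_region(boxes, region)
print(people_in_region)
```

Only the first box has its center inside the region. The second box overlaps the region, but its center lies outside, so it is not counted.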

# Draw regions (Polygons/Rectangles)
for region in counting_regions:
    region_label = str(region["counts"])
    region_color = region["region_color"]
    region_text_color = region["text_color"]

    polygon_coords = np.array(region["polygon"].exterior.coords, dtype=np.int32)
    centroid_x, centroid_y = int(region["polygon"].centroid.x), int(region["polygon"].centroid.y)

    text_size, _ = cv2.getTextSize(region_label, cv2.FONT_HERSHEY_SIMPLEX, fontScale=0.7, thickness=line_thickness)
    text_x = centroid_x - text_size[0] // 2
    text_y = centroid_y + text_size[1] // 2
    cv2.rectangle(
        frame,
        (text_x - 5, text_y - text_size[1] - 5),
        (text_x + text_size[0] + 5, text_y + 5),
        region_color,
        -1,
    )
    cv2.putText(frame, region_label, (text_x, text_y), cv2.FONT_HERSHEY_SIMPLEX, 0.7, region_text_color, line_thickness)
    cv2.polylines(frame, [polygon_coords], isClosed=True, color=region_color, thickness=region_thickness)

After this, it uses cv2.rectangle, cv2.putText, and cv2.polylines to visualize the counts and the region.

There’s no need to stress about making these changes yourself. Along with this article, we will provide an updated yolov8_region_counter.py script. All you need to do is replace the original file in your cloned directory with our provided script.

Applications of YOLOv8 Object Tracking and Object Counting

We can leverage the power of YOLOv8 object tracking combined with object counting methods to solve many real-world problems. Let’s look at them one by one.

Implementation in a Retail Store

In supermarkets or retail stores, using a line counter, we can count the number of products a particular customer takes, which helps store owners keep track of their products.

Airport Baggage Claim

Another valuable application of line counting is counting the number of bags coming off the luggage belt. It can be very beneficial for both travelers and airport authorities.

Livestock Tracking and Counting

Using the region counter, we can count the number of animals on a farm and even track them while they are grazing, which can help farm owners and farmers alike.

Highway Traffic Analysis

Using a rectangle region counter, we can monitor the in/out count of cars on the highway.

Street Monitoring

We can use a region counter to count the number of people and objects in a specific region on the streets.

In one of our upcoming articles, we will dive deeper into each of the above-mentioned object-counting techniques.

Key Takeaways

Let’s analyze a few key takeaways from our article:

- YOLOv8 for Object Tracking: YOLOv8 is a state-of-the-art object detection model that can be leveraged for object tracking. It offers fast and accurate detection and seamlessly transitions from detection to tracking.

- Object Tracking and Counting Importance: YOLOv8 Object tracking is essential for consistently identifying objects across video frames, especially in occlusion cases. Object counting extends object detection and tracking, providing valuable insights for various applications.

- Types of Object Tracking: Object tracking methods can be categorized into Single Object Tracking (SOT) and Multiple Object Tracking (MOT). MOT algorithms focus on tracking multiple objects across consecutive frames, making them suitable for various scenarios.

- Applications of Object Tracking and Counting: YOLOv8 Object tracking and counting have practical applications in retail stores, airport baggage claims, livestock tracking, highway traffic analysis, and street monitoring. These technologies offer solutions for tracking and counting objects in real-world situations.

Overall, YOLOv8 and OpenCV provide a powerful combination for object tracking and counting with a wide range of computer vision applications.

Conclusion

In conclusion, YOLOv8 object tracking presents a transformative approach in the field of computer vision, enabling effective real-world applications. This exploration of the YOLOv8 inference pipeline has shed light on the vast potential of object tracking and counting, offering the readers a deep understanding of its practical uses.

From retail management to traffic analysis, the versatility of YOLOv8 is evident and addresses a range of real-world challenges. By embracing the insights provided in this article, readers can apply these advanced object counting techniques in various domains, thereby solving complex problems with the cutting-edge technology of YOLOv8 object tracking.

References

[1] Zou, Zhengxia, et al. “Object detection in 20 years: A survey.” Proceedings of the IEEE (2023).