Facial Emotion Recognition (FER) refers to the process of identifying and categorizing human emotions based on facial expressions. By analyzing facial features and patterns, machines can make educated guesses about a person’s emotional state. This subfield of facial recognition is highly interdisciplinary, drawing on insights from computer vision, machine learning, and psychology.

In this research article, we will try to understand the concept of facial emotion recognition from both a philosophical and a technical point of view. We will also explore a custom VGG13 model architecture and the Face Expression Recognition Plus (FER+) dataset to build a consolidated real-time facial emotion recognition system. In the end, we’ll also analyze the results obtained from the experiments.

The experimental results are presented in the concluding part of the article.

- Importance of Human Emotion

- Can an AI-accelerated System Detect Human Emotions?

- Applications of Facial Emotion Recognition

- Building a Facial Emotion Recognition System

- Conclusions

- References

Importance of Human Emotion

Philosophers have debated the nature of emotions for centuries. While some see emotions as purely physiological responses, others view them as complex mental states involving judgments, desires, and beliefs. Still, others understand emotions as socially constructed phenomena. Historically, emotions were often contrasted with reason, with reason being seen as superior and emotions as volatile and disruptive. However, modern perspectives, informed by psychology and neuroscience, recognize that emotions often play a crucial role in decision-making, moral judgments, and other cognitive processes.

Emotions are not just individual experiences; they are also shaped by culture and history. Different cultures might prioritize, understand, or even name emotions differently. This cultural variability suggests that while there may be some universal emotional experiences, the way they are interpreted and expressed can vary widely.

Emotions have been linked to physical health outcomes: chronic stress or prolonged negative emotional states can have adverse effects on the body. Understanding and managing emotions is also crucial for mental wellbeing, since unaddressed or suppressed emotions can lead to various mental health issues. Emotions can also serve as powerful motivators. For instance, anger can motivate social change, while fear can drive individuals to avoid danger.

Can an AI-accelerated System Detect Human Emotions?

For humans, the process of seeing and interpreting the world around us is intuitive and seemingly effortless. Our eyes capture light, our optic nerves transmit this data to our brain, and within milliseconds, our brain processes this information to form a coherent picture of our surroundings. This ability to perceive, recognize, and interpret is a culmination of millions of years of evolutionary refinement.

Computers, on the other hand, have no innate understanding of the world. They do not “see” in the same way humans do. Instead, they interpret the world through data, which, in the case of vision, is typically provided by cameras. A camera converts visual information into digital data, which an image classification model can then process.

Given that a model can process this data, you might wonder, “What is the real use of facial emotion recognition systems?”

Applications of Facial Emotion Recognition

It turns out that there are multiple domains where emotion recognition can be applied. The following are a few key areas where this may be helpful:

- Social and Content Creation Platforms:
  - Personalizing user experiences based on emotional feedback.
  - Adaptive learning systems adjust content based on the learner’s emotional state to provide more tailored recommendations.
- Medical Research:
  - Monitoring patients for signs of depression, anxiety, or other emotional disorders.
  - Assisting therapists in tracking patients’ progress or reactions during sessions.
  - Monitoring stress levels in real-time.
- Driver Safety Mechanisms:
  - Monitoring drivers for signs of fatigue, distraction, stress, or drowsiness.
- Marketing and Market Research:
  - Analyzing audience reactions to advertisements in real-time.
  - Tailoring advertising content to the viewer’s emotional state.
  - Product testing and feedback.
- Security and Surveillance:
  - Detecting suspicious or abnormal behavior in crowded areas.
  - Analyzing crowd reactions during public events for safety purposes.

Building a Facial Emotion Recognition System

This section goes deep into the intricacies of constructing a facial emotion recognition system. We begin by exploring a dataset tailored for face emotion recognition, ensuring a robust foundation for our model. Following this, we will introduce a custom VGG13 model architecture renowned for its efficiency and accuracy in classification tasks and elucidate its relevance to our emotion recognition objective.

Lastly, we spend some time on the experimental results section to offer a comprehensive evaluation, shedding light on the system’s performance metrics and its potential applications.

Face Emotion Recognition Dataset (FER+)

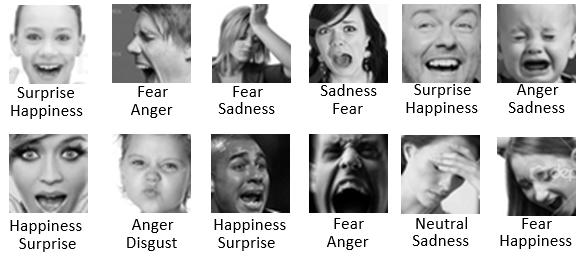

The FER+ dataset is a notable extension of the original Facial Expression Recognition (FER) dataset. Developed to improve upon the limitations of the original dataset, FER+ offers a more refined and nuanced labeling of facial expressions. The original FER dataset assigned each image a single label from seven categories: happiness, sadness, anger, surprise, fear, disgust, and neutral. FER+ re-labels the same images using multiple crowd-sourced taggers per image and adds an eighth emotion category, contempt.

This set of emotional categories reflects a recognition of the complexity of human emotions and expressions. It turns out that a person looks expressionless most of the time, and hence “neutral” serves as a baseline for comparison and helps account for situations where an individual’s expression doesn’t strongly convey any of the primary emotions. By keeping “neutral” as a distinct category, the dataset acknowledges that not all facial expressions can be easily categorized into the primary emotion labels.

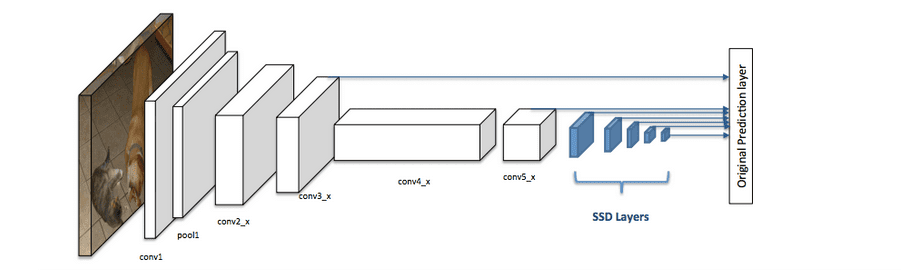

RFB-320 Single Shot Multibox Detector (SSD) Model for Face Detection

Before the emotion is recognized, the face needs to be detected in the input frame. For this, the Ultra-lightweight Face Detection RFB-320 model is used. It is a face detection model optimized for edge computing devices. It incorporates a modified Receptive Field Block (RFB) module, which captures multiscale contextual information without adding much computational overhead. Trained on the WIDER FACE dataset and tailored for a 320×240 input resolution, it needs about 0.2106 GFLOPs per inference and has only 0.3004 million parameters. Developed in the PyTorch framework, the model achieves a mean average precision (mAP) of 84.78%, which makes it a strong option for face detection in resource-constrained environments. In this implementation, the RFB-320 SSD model has been used in Caffe format.

Custom VGG13 Model Architecture

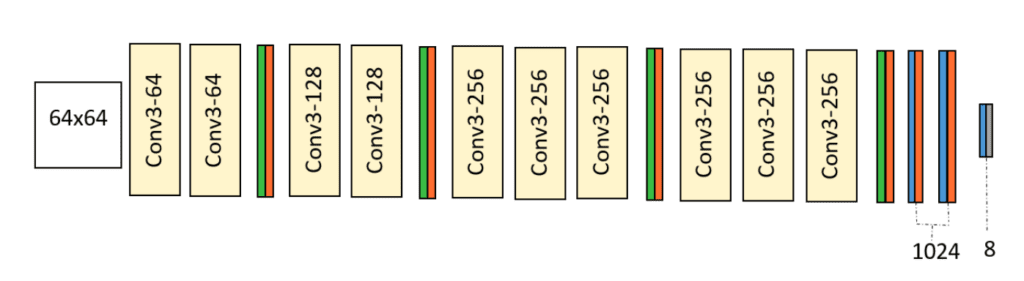

The emotion recognition classification model employs a customized VGG13 architecture designed for 64×64 grayscale images. It classifies images into eight emotion classes using convolutional layers with max pooling and dropout to prevent overfitting. The architecture starts with two convolutional layers of 64 kernels, followed by max pooling and 25% dropout. Additional convolutional layers capture more intricate features. Two dense layers with 1024 nodes each then aggregate this information, followed by 50% dropout. A softmax output layer predicts the emotion class.

In the model architecture shown in Figure 5, yellow, green, orange, blue, and gray denote the convolution, max pooling, dropout, fully connected, and softmax layers, respectively. Because the dataset is small, dropout layers are strategically placed to curb overfitting. This enhances the model’s ability to generalize and accurately recognize emotions in various images. In this implementation, this model has been pre-trained on the above-mentioned FER+ dataset.
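To make the layer layout more concrete, the following sketch traces how the tensor shape changes through such a network. Only the first block (two 64-kernel convolutions), the two 1024-node dense layers, and the eight-class output come from the description above. The channel widths and layer counts of the later blocks are assumptions based on the VGG13 variant described in the FER+ paper, and 3×3 convolutions with "same" padding and 2×2 pooling are assumed as well.

```python
# Shape trace for a VGG13-style network on a 64x64 grayscale input.
# ASSUMPTION: blocks 2-4 (128, 256, 256 channels) follow the FER+ paper's
# VGG13 variant; only block 1 is spelled out in the article text.
# ASSUMPTION: 3x3 convolutions with "same" padding, 2x2 max pooling, and
# 25% dropout after every pooling layer.
CONV_BLOCKS = [
    (64, 2),   # (output channels, number of conv layers), from the article
    (128, 2),  # assumed
    (256, 3),  # assumed
    (256, 3),  # assumed
]
DENSE_UNITS = [1024, 1024]
NUM_CLASSES = 8


def trace_shapes(height=64, width=64, channels=1):
    """Return a list of (layer description, output shape) pairs."""
    trace = []
    for out_channels, num_convs in CONV_BLOCKS:
        for _ in range(num_convs):
            channels = out_channels  # "same" padding keeps height and width
            trace.append((f"conv3x3-{out_channels}", (channels, height, width)))
        height, width = height // 2, width // 2  # 2x2 pooling halves both
        trace.append(("maxpool2x2 + dropout(0.25)", (channels, height, width)))
    trace.append(("flatten", (channels * height * width,)))
    for units in DENSE_UNITS:
        trace.append((f"dense-{units} + dropout(0.5)", (units,)))
    trace.append(("softmax", (NUM_CLASSES,)))
    return trace


for name, shape in trace_shapes():
    print(f"{name:<28} -> {shape}")
```

Under these assumptions, ten convolutional layers plus two dense layers and the output layer give the 13 weight layers that the name VGG13 refers to.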

Code Implementation of Facial Expression Recognition

Alright, let’s write some code to build this system practically. Initially, a few important parameters need to be initialized.

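    from math import ceil
    import time

    import cv2
    import numpy as np
    from cv2 import dnn

    # Normalization constants for the face detector input.
    image_mean = np.array([127, 127, 127])
    image_std = 128.0
    # NMS and box-decoding parameters.
    iou_threshold = 0.3
    center_variance = 0.1
    size_variance = 0.2
    # Prior box sizes (in pixels), one group per feature map.
    min_boxes = [
        [10.0, 16.0, 24.0],
        [32.0, 48.0],
        [64.0, 96.0],
        [128.0, 192.0, 256.0]
    ]
    strides = [8.0, 16.0, 32.0, 64.0]
    # Minimum confidence for a detection to be kept.
    threshold = 0.5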

- image_mean: Mean values for image normalization across RGB channels.

- image_std: Standard deviation for image normalization.

- iou_threshold: Threshold for Intersection over Union (IoU) metric to determine bounding box matches.

- center_variance: Scaling factor for predicted bounding box center coordinates.

- size_variance: Scaling factor for predicted bounding box dimensions.

- min_boxes: Prior (anchor) box sizes in pixels, one group per feature map, covering objects of different sizes.

- strides: Downsampling factor of each feature map relative to the input image size.

- threshold: Confidence threshold for object detection.
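As a small illustration of how `image_mean` and `image_std` are typically applied, the sketch below normalizes 8-bit pixel values to roughly the range [-1, 1]. The helper name `normalize_pixel` is hypothetical and plain Python is used to keep the example dependency-free. In the article's code, the same arithmetic is carried out by `dnn.blobFromImage` through its scale factor and mean arguments.

```python
# Plain-Python sketch of the detector's input normalization.
IMAGE_MEAN = 127.0   # same value for all three channels
IMAGE_STD = 128.0


def normalize_pixel(value):
    """Map an 8-bit intensity (0..255) to (value - mean) / std."""
    return (value - IMAGE_MEAN) / IMAGE_STD


for value in (0, 127, 255):
    print(value, "->", normalize_pixel(value))
```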

    def define_img_size(image_size):
        shrinkage_list = []
        feature_map_w_h_list = []
        for size in image_size:
            feature_map = [int(ceil(size / stride)) for stride in strides]
            feature_map_w_h_list.append(feature_map)

        for i in range(0, len(image_size)):
            shrinkage_list.append(strides)
        priors = generate_priors(
            feature_map_w_h_list, shrinkage_list, image_size, min_boxes
        )
        return priors

The above function `define_img_size` is aimed at generating prior bounding boxes (priors) for object detection tasks. This function takes an `image_size` argument as input. It calculates feature map dimensions based on the provided image size and a set of predefined stride values. These feature map dimensions reflect the expected output dimensions of convolutional neural network (CNN) layers for varying scales of the input image. In essence, the priors in SSD provide an efficient way to simultaneously predict multiple bounding boxes and their associated class scores in a single forward pass of the network, enabling real-time object detection.

The code prepares a `shrinkage_list` by replicating the `strides` list for each element in the `image_size` list. Subsequently, the code invokes a function named `generate_priors`, passing the calculated feature map dimensions, shrinkage information, `image_size`, and a predefined set of minimum bounding box dimensions. The purpose of the `generate_priors` function is to utilize the provided information to create and return the desired prior bounding boxes. In the end, the function `define_img_size` returns these calculated prior bounding boxes.
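The feature map computation can be checked by hand for the 320×240 input used later in this article. The following sketch repeats only that step; the function name `feature_map_sizes` is hypothetical, and the stride values are copied from the parameters above.

```python
from math import ceil

STRIDES = [8.0, 16.0, 32.0, 64.0]  # same values as `strides` above


def feature_map_sizes(image_size):
    """Return one list of feature-map lengths per image dimension."""
    return [[int(ceil(size / stride)) for stride in STRIDES]
            for size in image_size]


widths, heights = feature_map_sizes([320, 240])
print("feature-map widths: ", widths)   # [40, 20, 10, 5]
print("feature-map heights:", heights)  # [30, 15, 8, 4]
```

Note that 240 / 32 = 7.5 and 240 / 64 = 3.75 are rounded up by `ceil`, which is why the last two heights are 8 and 4.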

    def generate_priors(feature_map_list, shrinkage_list, image_size, min_boxes):
        priors = []
        for index in range(0, len(feature_map_list[0])):
            scale_w = image_size[0] / shrinkage_list[0][index]
            scale_h = image_size[1] / shrinkage_list[1][index]
            for j in range(0, feature_map_list[1][index]):
                for i in range(0, feature_map_list[0][index]):
                    x_center = (i + 0.5) / scale_w
                    y_center = (j + 0.5) / scale_h

                    for min_box in min_boxes[index]:
                        w = min_box / image_size[0]
                        h = min_box / image_size[1]
                        priors.append([
                            x_center,
                            y_center,
                            w,
                            h
                        ])
        print("priors nums:{}".format(len(priors)))
        return np.clip(priors, 0.0, 1.0)

The code defines `generate_priors`, a function that generates the prior bounding boxes for object detection. For every cell of every feature map, it computes a normalized box center and, for each entry in the matching `min_boxes` group, a normalized width and height. Each prior is stored as `[x_center, y_center, w, h]`, and the full set is clipped to the range [0, 1] before being returned. The detector’s raw outputs are later decoded relative to these priors.
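As a sanity check on the loop structure, the number of priors can be worked out without building them: it is the number of cells in each feature map multiplied by the number of box sizes assigned to that map. The sketch below does this for a 320×240 input and also computes the very first prior. The helper names `count_priors` and `first_prior` are hypothetical.

```python
from math import ceil

STRIDES = [8.0, 16.0, 32.0, 64.0]
MIN_BOXES = [[10.0, 16.0, 24.0], [32.0, 48.0], [64.0, 96.0],
             [128.0, 192.0, 256.0]]


def count_priors(image_size):
    """Count priors: cells per feature map times box sizes per cell."""
    width, height = image_size
    total = 0
    for stride, sizes in zip(STRIDES, MIN_BOXES):
        cells = int(ceil(width / stride)) * int(ceil(height / stride))
        total += cells * len(sizes)
    return total


def first_prior(image_size):
    """Return the first prior [x_center, y_center, w, h], normalized."""
    width, height = image_size
    stride, min_box = STRIDES[0], MIN_BOXES[0][0]
    return [0.5 / (width / stride), 0.5 / (height / stride),
            min_box / width, min_box / height]


print(count_priors([320, 240]))  # 3600 + 600 + 160 + 60 = 4420
print(first_prior([320, 240]))
```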

    def hard_nms(box_scores, iou_threshold, top_k=-1, candidate_size=200):
        scores = box_scores[:, -1]
        boxes = box_scores[:, :-1]
        picked = []
        indexes = np.argsort(scores)
        indexes = indexes[-candidate_size:]
        while len(indexes) > 0:
            current = indexes[-1]
            picked.append(current)
            if 0 < top_k == len(picked) or len(indexes) == 1:
                break
            current_box = boxes[current, :]
            indexes = indexes[:-1]
            rest_boxes = boxes[indexes, :]
            iou = iou_of(
                rest_boxes,
                np.expand_dims(current_box, axis=0),
            )
            indexes = indexes[iou <= iou_threshold]
        return box_scores[picked, :]

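The `hard_nms` function implements Hard Non-Maximum Suppression (NMS) for object detection. It takes `box_scores`, where each row holds the box coordinates followed by a score, and considers only the `candidate_size` highest-scoring boxes. It then repeatedly picks the highest-scoring remaining box and discards every other box whose IoU with it exceeds `iou_threshold`, stopping early once `top_k` boxes have been picked (when `top_k` is positive). The function returns the surviving boxes, so that each object is reported only once.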
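The greedy selection can be seen on a toy example. The following is a simplified plain-Python sketch of the same idea, not the article's NumPy implementation: it omits `top_k` and `candidate_size`, and the three detections are made-up values chosen so that two of them cover the same face.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter + 1e-5)


def greedy_nms(detections, iou_threshold=0.3):
    """Keep the best-scoring box, drop boxes overlapping it, and repeat.

    `detections` is a list of (x1, y1, x2, y2, score) tuples.
    """
    remaining = sorted(detections, key=lambda d: d[4], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [d for d in remaining
                     if iou(best[:4], d[:4]) <= iou_threshold]
    return kept


# Hypothetical detections: two boxes on face A, one box on face B.
detections = [
    (0.10, 0.10, 0.50, 0.50, 0.95),  # face A, best box
    (0.12, 0.11, 0.52, 0.49, 0.80),  # face A, near-duplicate
    (0.60, 0.60, 0.90, 0.90, 0.70),  # face B
]
for det in greedy_nms(detections):
    print(det)
```

The near-duplicate box overlaps the best box far more than the 0.3 threshold allows, so it is suppressed, while the box on the second face does not overlap at all and is kept.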

    def area_of(left_top, right_bottom):
        hw = np.clip(right_bottom - left_top, 0.0, None)
        return hw[..., 0] * hw[..., 1]


    def iou_of(boxes0, boxes1, eps=1e-5):
        overlap_left_top = np.maximum(boxes0[..., :2], boxes1[..., :2])
        overlap_right_bottom = np.minimum(boxes0[..., 2:], boxes1[..., 2:])

        overlap_area = area_of(overlap_left_top, overlap_right_bottom)
        area0 = area_of(boxes0[..., :2], boxes0[..., 2:])
        area1 = area_of(boxes1[..., :2], boxes1[..., 2:])
        return overlap_area / (area0 + area1 - overlap_area + eps)

The `area_of` function calculates rectangle areas from top-left and bottom-right coordinates. It uses `np.clip` so that boxes that do not overlap yield an area of zero rather than a value computed from negative side lengths. The `iou_of` function computes the IoU between two sets of boxes as the overlap area divided by the union area, with a small `eps` to avoid division by zero. It leverages the `area_of` function, and `hard_nms` uses it to quantify how much two bounding boxes overlap.
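The reason for the clipping becomes clear with two boxes that do not touch. In the sketch below (plain Python, with a hypothetical helper `overlap_area`), both side lengths of the "intersection" are negative, so multiplying them without clipping would produce a positive area and a spurious overlap.

```python
def overlap_area(box_a, box_b, clip=True):
    """Area of the intersection of two (x1, y1, x2, y2) boxes."""
    w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    if clip:
        # Same role as np.clip(..., 0.0, None) in area_of.
        w, h = max(w, 0.0), max(h, 0.0)
    return w * h


a = (0.0, 0.0, 1.0, 1.0)
b = (2.0, 2.0, 3.0, 3.0)   # completely separate from a
print(overlap_area(a, b, clip=True))    # 0.0
print(overlap_area(a, b, clip=False))   # 1.0, a spurious "overlap"
```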

    def predict(
        width,
        height,
        confidences,
        boxes,
        prob_threshold,
        iou_threshold=0.3,
        top_k=-1
    ):
        boxes = boxes[0]
        confidences = confidences[0]
        picked_box_probs = []
        picked_labels = []
        for class_index in range(1, confidences.shape[1]):
            probs = confidences[:, class_index]
            mask = probs > prob_threshold
            probs = probs[mask]
            if probs.shape[0] == 0:
                continue
            subset_boxes = boxes[mask, :]
            box_probs = np.concatenate(
                [subset_boxes, probs.reshape(-1, 1)], axis=1
            )
            box_probs = hard_nms(
                box_probs,
                iou_threshold=iou_threshold,
                top_k=top_k,
            )
            picked_box_probs.append(box_probs)
            picked_labels.extend([class_index] * box_probs.shape[0])
        if not picked_box_probs:
            return np.array([]), np.array([]), np.array([])
        picked_box_probs = np.concatenate(picked_box_probs)
        picked_box_probs[:, 0] *= width
        picked_box_probs[:, 1] *= height
        picked_box_probs[:, 2] *= width
        picked_box_probs[:, 3] *= height
        return (
            picked_box_probs[:, :4].astype(np.int32),
            np.array(picked_labels),
            picked_box_probs[:, 4]
        )

The `predict` function refines object detection model results, generating accurate bounding box predictions, labels, and confidences. It filters predictions based on provided thresholds and employs Non-Maximum Suppression (NMS) to eliminate redundancies. The function takes inputs like `width`, `height`, `confidences`, and `boxes`, and outputs refined bounding box coordinates, labels, and confidences.
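Two of the steps in `predict`, the confidence filter and the scaling from normalized to pixel coordinates, can be illustrated in isolation. The sketch below is a simplified plain-Python version with a hypothetical helper `filter_and_scale`; it deliberately leaves out the NMS step, and the input boxes and probabilities are made-up values.

```python
def filter_and_scale(norm_boxes, face_probs, prob_threshold,
                     frame_width, frame_height):
    """Keep confident boxes and convert them to integer pixel coordinates."""
    results = []
    for (x1, y1, x2, y2), prob in zip(norm_boxes, face_probs):
        if prob > prob_threshold:
            results.append((int(x1 * frame_width), int(y1 * frame_height),
                            int(x2 * frame_width), int(y2 * frame_height),
                            prob))
    return results


# Hypothetical normalized boxes (x1, y1, x2, y2) with face probabilities.
norm_boxes = [(0.25, 0.25, 0.75, 0.75), (0.10, 0.10, 0.20, 0.20)]
face_probs = [0.9, 0.3]
print(filter_and_scale(norm_boxes, face_probs, 0.5, 640, 480))
# [(160, 120, 480, 360, 0.9)]
```

Because the boxes are normalized, the same detector output can be mapped onto the original frame size even though the network itself ran on a 320×240 resized copy.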

    def convert_locations_to_boxes(locations, priors, center_variance,
                                   size_variance):
        if len(priors.shape) + 1 == len(locations.shape):
            priors = np.expand_dims(priors, 0)
        return np.concatenate([
            locations[..., :2] * center_variance * priors[..., 2:] + priors[..., :2],
            np.exp(locations[..., 2:] * size_variance) * priors[..., 2:]
        ], axis=len(locations.shape) - 1)


    def center_form_to_corner_form(locations):
        return np.concatenate(
            [locations[..., :2] - locations[..., 2:] / 2,
             locations[..., :2] + locations[..., 2:] / 2],
            len(locations.shape) - 1
        )

The function `convert_locations_to_boxes` translates the predicted offsets and scales into real bounding box coordinates. The network predicts each box relative to a prior: the predicted center offset is scaled by `center_variance` and by the prior’s size and then added to the prior’s center, while the box size is obtained by multiplying the prior’s size by the exponential of the predicted value scaled by `size_variance`. The result shows where the object is and how big it is.

The `center_form_to_corner_form` function, in turn, converts bounding box coordinates from a center-based representation to a corner-based format. This conversion is essential for compatibility with different detection algorithms and for visualizing bounding boxes. The function uses the bounding box’s center coordinates and dimensions to compute the top-left and bottom-right corners.
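A single box makes the decoding arithmetic easier to follow. The sketch below re-implements both conversions for one box in plain Python; the function names `decode` and `to_corners` and the example prior are hypothetical, while the variance values are the ones defined at the start of this section.

```python
from math import exp

CENTER_VARIANCE = 0.1
SIZE_VARIANCE = 0.2


def decode(location, prior):
    """Turn a predicted (dx, dy, dw, dh) into a center-form box."""
    dx, dy, dw, dh = location
    pcx, pcy, pw, ph = prior
    return (dx * CENTER_VARIANCE * pw + pcx,
            dy * CENTER_VARIANCE * ph + pcy,
            exp(dw * SIZE_VARIANCE) * pw,
            exp(dh * SIZE_VARIANCE) * ph)


def to_corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


prior = (0.5, 0.5, 0.2, 0.2)  # hypothetical prior in center form
# Zero offsets reproduce the prior itself.
print(to_corners(decode((0.0, 0.0, 0.0, 0.0), prior)))
# A positive dx shifts the box to the right by dx * 0.1 * prior width.
print(to_corners(decode((1.0, 0.0, 0.0, 0.0), prior)))
```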

    def FER_live_cam():
        # Class indices of the eight-class FER+ emotion model.
        emotion_dict = {
            0: 'neutral',
            1: 'happiness',
            2: 'surprise',
            3: 'sadness',
            4: 'anger',
            5: 'disgust',
            6: 'fear',
            7: 'contempt'
        }

        # cap = cv2.VideoCapture('video1.mp4')
        cap = cv2.VideoCapture(0)

        frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
        size = (frame_width, frame_height)
        result = cv2.VideoWriter('result.avi',
                                 cv2.VideoWriter_fourcc(*'MJPG'),
                                 10, size)

        # Read the ONNX emotion recognition model.
        model = cv2.dnn.readNetFromONNX('emotion-ferplus-8.onnx')

        # Read the Caffe face detector.
        model_path = 'RFB-320/RFB-320.caffemodel'
        proto_path = 'RFB-320/RFB-320.prototxt'
        net = dnn.readNetFromCaffe(proto_path, model_path)
        input_size = [320, 240]
        width = input_size[0]
        height = input_size[1]
        priors = define_img_size(input_size)

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:
                break

            # Detect faces on a resized RGB copy of the frame.
            img_ori = frame
            rect = cv2.resize(img_ori, (width, height))
            rect = cv2.cvtColor(rect, cv2.COLOR_BGR2RGB)
            net.setInput(dnn.blobFromImage(
                rect, 1 / image_std, (width, height), 127)
            )
            start_time = time.time()
            boxes, scores = net.forward(["boxes", "scores"])
            boxes = np.expand_dims(np.reshape(boxes, (-1, 4)), axis=0)
            scores = np.expand_dims(np.reshape(scores, (-1, 2)), axis=0)
            boxes = convert_locations_to_boxes(
                boxes, priors, center_variance, size_variance
            )
            boxes = center_form_to_corner_form(boxes)
            boxes, labels, probs = predict(
                img_ori.shape[1],
                img_ori.shape[0],
                scores,
                boxes,
                threshold
            )
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            for (x1, y1, x2, y2) in boxes:
                # Clamp the box to the frame so that the crop is never
                # empty because of out-of-range coordinates.
                x1, y1 = max(int(x1), 0), max(int(y1), 0)
                x2 = min(int(x2), frame.shape[1])
                y2 = min(int(y2), frame.shape[0])
                if x2 <= x1 or y2 <= y1:
                    continue
                resize_frame = cv2.resize(gray[y1:y2, x1:x2], (64, 64))
                resize_frame = resize_frame.reshape(1, 1, 64, 64)
                model.setInput(resize_frame.astype(np.float32))
                output = model.forward()
                end_time = time.time()
                fps = 1 / (end_time - start_time)
                print(f"FPS: {fps:.1f}")
                # The class with the highest score is the prediction.
                pred = emotion_dict[int(np.argmax(output[0]))]
                cv2.rectangle(
                    img_ori,
                    (x1, y1),
                    (x2, y2),
                    (0, 255, 0),
                    2,
                    lineType=cv2.LINE_AA
                )
                cv2.putText(
                    frame,
                    pred,
                    (x1, y1),
                    cv2.FONT_HERSHEY_SIMPLEX,
                    0.8,
                    (0, 255, 0),
                    2,
                    lineType=cv2.LINE_AA
                )

            result.write(frame)
            cv2.imshow('frame', frame)
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break

        cap.release()
        result.release()
        cv2.destroyAllWindows()

The function `FER_live_cam()` conducts real-time facial emotion recognition on video frames. It first sets up a dictionary, `emotion_dict`, which maps numerical emotion class indices to human-readable emotion labels. The video source is a webcam feed, although there’s a provision (commented out) to read from a video file instead. The function also initializes an output video writer to save the processed frames with emotion annotations. The emotion prediction model, saved in the ONNX format, is read using the OpenCV DNN `readNetFromONNX` method and is loaded alongside the RFB-320 SSD face detection model in Caffe format. As the video is processed frame by frame, the face detection model identifies faces, which are represented by bounding boxes.

These detected faces are pre-processed, that is, cropped from the grayscale frame and resized to 64×64, before being fed into the emotion recognition model. The recognized emotion, determined via the maximum output score from the model, is mapped to a label using `emotion_dict`. The frame is then annotated with rectangles around detected faces and emotion labels, saved to the output video file, and displayed in real-time. Users can halt the video display by pressing ‘q’. Once the video processing completes or is interrupted, resources like the video capture and writer are released, and any open windows are closed. The function relies on the parameters and helper functions defined earlier in this section.
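The last step, turning the model output into a label, can be sketched on its own. The example below assumes that the emotion model returns one unnormalized score per class and that the eight classes follow the FER+ label order shown in the code comment; both are assumptions, and the scores are made-up values rather than real model output. Applying a softmax is optional for picking the label, but it yields a probability that can be displayed next to it.

```python
from math import exp

# ASSUMPTION: class order of the eight-class FER+ emotion model.
EMOTIONS = ['neutral', 'happiness', 'surprise', 'sadness',
            'anger', 'disgust', 'fear', 'contempt']


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)                      # subtract the max for stability
    exps = [exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_emotion(scores):
    """Return (label, probability) for the highest-scoring class."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


# Hypothetical raw scores for one face crop, not real model output.
scores = [0.5, 3.2, 1.1, -0.4, -1.0, -2.3, -0.8, -1.5]
label, prob = top_emotion(scores)
print(label, round(prob, 3))
```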

Experimental Results

Let’s look at a few inference results using this model with stock footage of human beings exhibiting different facial emotions.

If you found these experimental results interesting, why don’t you try implementing a facial emotion recognition system yourself? The code walk-through is in the section above.

Conclusions

This research article has shed light on the intricate interplay between human emotions and cutting-edge deep-learning technological advancements. The journey from the human perceptual experience to the digital domain has been a fascinating exploration, revealing not only the immense potential of deep learning but also raising profound philosophical questions about the essence of emotions and their interpretation.

From a purely technical perspective, the strides made in the field of facial emotion recognition are remarkable. The utilization of deep learning models such as convolutional neural networks has enabled the development of systems that can discern emotions from facial expressions with astonishing accuracy. These advancements hold promise for a wide array of applications, from healthcare and education to human-computer interaction and marketing.

However, while we marvel at the capabilities of these technologies, we must also confront philosophical considerations. The nature of emotions, often seen as the quintessential essence of human experience, presents a philosophical challenge when distilled into algorithms and mathematical models. Can an algorithm truly comprehend the depth and nuance of human emotions, or are we merely translating a complex phenomenon into patterns of data? Ethical concerns arise as we ponder the potential for misuse, raising questions about privacy, consent, and the boundaries of emotional surveillance.

References

FER+ Paper: https://arxiv.org/abs/1608.01041

RFB-320 SSD Model: https://github.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB

Repository: https://github.com/Microsoft/FERPlus