The YOLOv5 object detection models are well known for their excellent performance and optimized inference speed. Recently, support for instance segmentation was added to the codebase. With this, the YOLOv5 instance segmentation models have become some of the fastest and most accurate models for real-time instance segmentation.

Real-time instance segmentation models have use cases in robotics, autonomous driving, manufacturing, and medical imaging.

In this article, we will answer the following questions about YOLOv5 instance segmentation:

- What changes were made to the YOLOv5 detection model to obtain the YOLOv5 instance segmentation architecture?

- What is the ProtoNet module that is used for instance segmentation?

- Which models are available in the YOLOv5 instance segmentation family?

- How do the models perform on benchmark datasets like MS COCO?

- What kind of FPS and detection results can we expect when running inference?

Answering these questions will give us a thorough understanding of the new YOLOv5 instance segmentation models.

- YOLOv5 Instance Segmentation Architecture

- Models Available in the YOLOv5 Instance Segmentation Family

- YOLOv5 Instance Segmentation Performance on the COCO Dataset

- Inference using YOLOv5 Instance Segmentation Models

- Summary

YOLOv5 Instance Segmentation Architecture

The YOLOv5 instance segmentation architecture is a modification of the detection architecture.

In addition to the YOLOv5 object detection head, there is a small, fully convolutional neural network called ProtoNet. The object detection head combined with the ProtoNet makes up the YOLOv5 instance segmentation architecture.

YOLOv5 ProtoNet Architecture for Instance Segmentation

ProtoNet produces prototype masks for the segmentation model. It is similar to an FCN (Fully Convolutional Network) used for semantic segmentation.

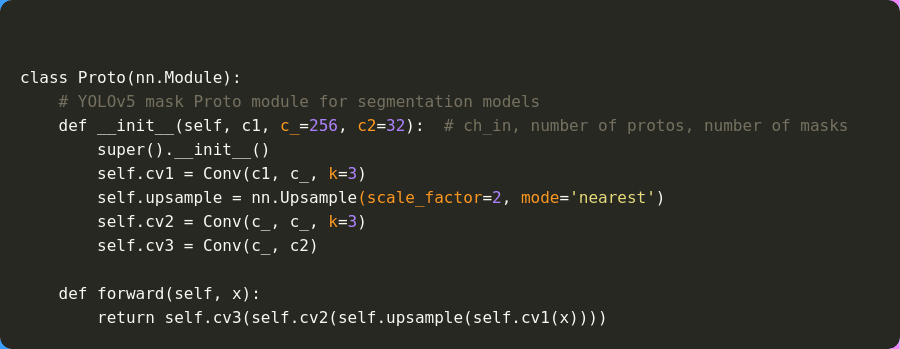

The ProtoNet is implemented as the Proto class in the YOLOv5 codebase. It is a three-layer network made up of 2D convolutions with SiLU activation functions. A few important observations follow from its definition:

- The c_ argument acts as the number of protos, which is the number of output channels in the first and second convolutional layers.

- c2 is the number of masks that we want the network to generate. It corresponds to the number of output channels in the final layer.

- You may also observe that the upsampling mode in the intermediate layers is nearest instead of bilinear. This is because nearest interpolation upsampling seems to work better here.
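To make the three layers concrete, the sketch below traces tensor shapes through the ProtoNet in plain Python. It is only an illustration under stated assumptions: 3x3 kernels in the first two convolutions, a 1x1 kernel in the last, a single 2x nearest-neighbor upsampling step after the first convolution, and a hypothetical 128-channel, 80x80 input feature map. The helper names are ours and are not part of the YOLOv5 codebase, so check the details against the Proto class in your own checkout.

```python
def conv_out_hw(h, w, k, stride=1):
    """Output height/width of a convolution that pads by k // 2 on each side."""
    pad = k // 2
    return (h + 2 * pad - k) // stride + 1, (w + 2 * pad - k) // stride + 1


def trace_proto_shapes(c1, h, w, c_=256, c2=32):
    """Return (stage, (channels, height, width)) for each ProtoNet stage.

    Assumed layout (not copied from the repository):
    3x3 conv -> 2x nearest upsample -> 3x3 conv -> 1x1 conv.
    """
    stages = [("input", (c1, h, w))]
    h, w = conv_out_hw(h, w, k=3)      # first conv: c1 -> c_ channels
    stages.append(("conv1", (c_, h, w)))
    h, w = 2 * h, 2 * w                # nearest upsampling doubles the spatial size
    stages.append(("upsample", (c_, h, w)))
    h, w = conv_out_hw(h, w, k=3)      # second conv: c_ -> c_ channels
    stages.append(("conv2", (c_, h, w)))
    h, w = conv_out_hw(h, w, k=1)      # final conv: c_ -> c2 prototype masks
    stages.append(("conv3", (c2, h, w)))
    return stages


# Hypothetical input: a 128-channel, 80x80 feature map.
for name, shape in trace_proto_shapes(c1=128, h=80, w=80):
    print(f"{name:>8}: {shape}")
# The last line printed is "   conv3: (32, 160, 160)"
```

Under these assumptions, the network turns one feature map into 32 prototype masks at twice the spatial resolution of its input.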

The above shows the ProtoNet architecture. But where is it used in the YOLOv5 instance segmentation model?

It’s used in the final instance segmentation head along with the final 2D convolutional layers.

The 2D convolutional layers are still responsible for the scaled detections. The detection layers detect objects at three scales, with three different anchor boxes at each scale. The ProtoNet, in turn, is responsible for outputting the prototype masks.

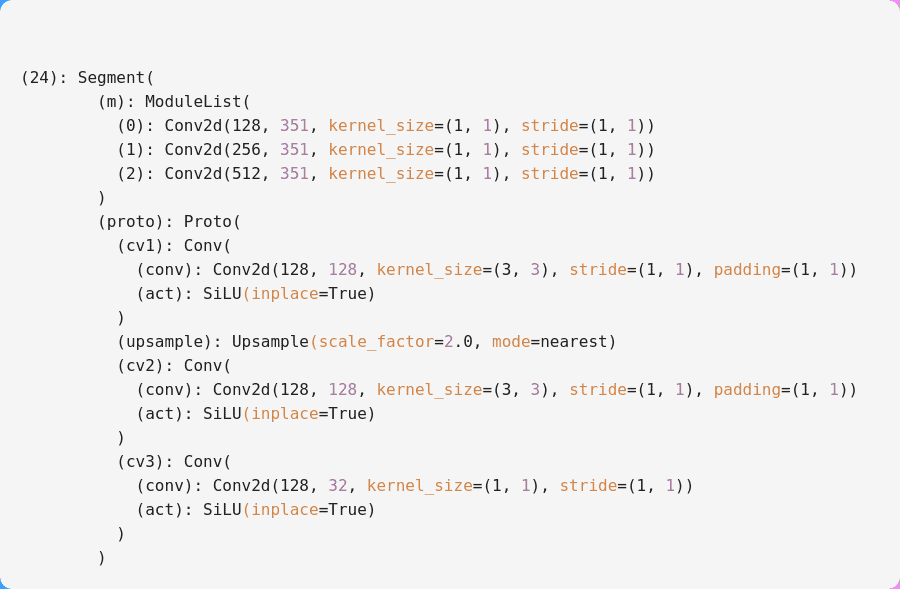

It is important to note that the final convolutional detection heads have 351 channels instead of 255.

Where do the extra channels come from?

- Remember that the detection head alone outputs 80 class scores, 4 bounding box coordinates, and 1 confidence score. This results in 85 outputs per anchor. With three anchors at each scale, we get 85*3 = 255 channels.

- Now, we have 32 mask outputs as well. This makes it 85+32 = 117 outputs per anchor. And for three different anchors, we get 117*3 = 351 output channels.
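The channel arithmetic above can be checked with a few lines of Python. This is a minimal sketch; the function name and its parameters are our own and do not come from the YOLOv5 codebase.

```python
def head_channels(num_classes=80, num_masks=0, num_anchors=3):
    """Output channels of one detection-head convolution.

    Per anchor: 4 box coordinates + 1 confidence score + class scores
    (+ mask outputs for the segmentation head).
    """
    outputs_per_anchor = 4 + 1 + num_classes + num_masks
    return outputs_per_anchor * num_anchors


print(head_channels())              # detection only: 255
print(head_channels(num_masks=32))  # detection + 32 mask outputs: 351
```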

YOLOv5 Instance Segmentation Head

To reinforce the above analysis, let’s look at how the instance segmentation head (the Segment class) is implemented in the YOLOv5 codebase.

In the constructor of the Segment head, the number of outputs is 5 + 80 (number of classes) + 32 (number of masks) = 117 per anchor. For three anchors, we get 117*3 = 351 outputs, which is exactly what we discussed above.

Further, the convolution operations happen in the forward method. As we can see, the features go through both the Proto class instance and the Detect head instance.

The rest of the code revolves around post-processing after getting the detection boxes and segmentation masks. One of the major operations among these is clipping the segmentation masks to bind them inside each of the detected bounding boxes. This ensures that the segmentation masks do not flow out of the bounding boxes.
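The clipping step can be sketched in a few lines of plain Python. This is an illustration of the idea only, not the repository's implementation: the function name, the nested-list mask format, and the convention that the box is given as (x1, y1, x2, y2) with exclusive right and bottom edges are all assumptions made for this example.

```python
def crop_mask_to_box(mask, box):
    """Zero out every mask value that lies outside the bounding box.

    mask: list of rows, each a list of floats (height x width).
    box:  (x1, y1, x2, y2) in pixel coordinates; x2 and y2 are exclusive.
    """
    x1, y1, x2, y2 = box
    return [
        [value if (x1 <= x < x2 and y1 <= y < y2) else 0.0
         for x, value in enumerate(row)]
        for y, row in enumerate(mask)
    ]


# A 4x4 mask that is "on" everywhere, clipped to a 2x2 box in the middle.
full_mask = [[1.0] * 4 for _ in range(4)]
for row in crop_mask_to_box(full_mask, (1, 1, 3, 3)):
    print(row)
```

Only the pixels inside the box keep their values, so a mask can never spill past the detection it belongs to.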

So, this is how the ProtoNet and the detection heads are combined to create the YOLOv5 instance segmentation architecture.

As of writing this, an official model architecture diagram for the instance segmentation model has not been released by Ultralytics. Hence, it is hard to get into more details about the model as of now. However, this will be the subject of our future post, in which the details will be discussed when an official architectural diagram is released.

Which Models are Available in the YOLOv5 Instance Segmentation Family?

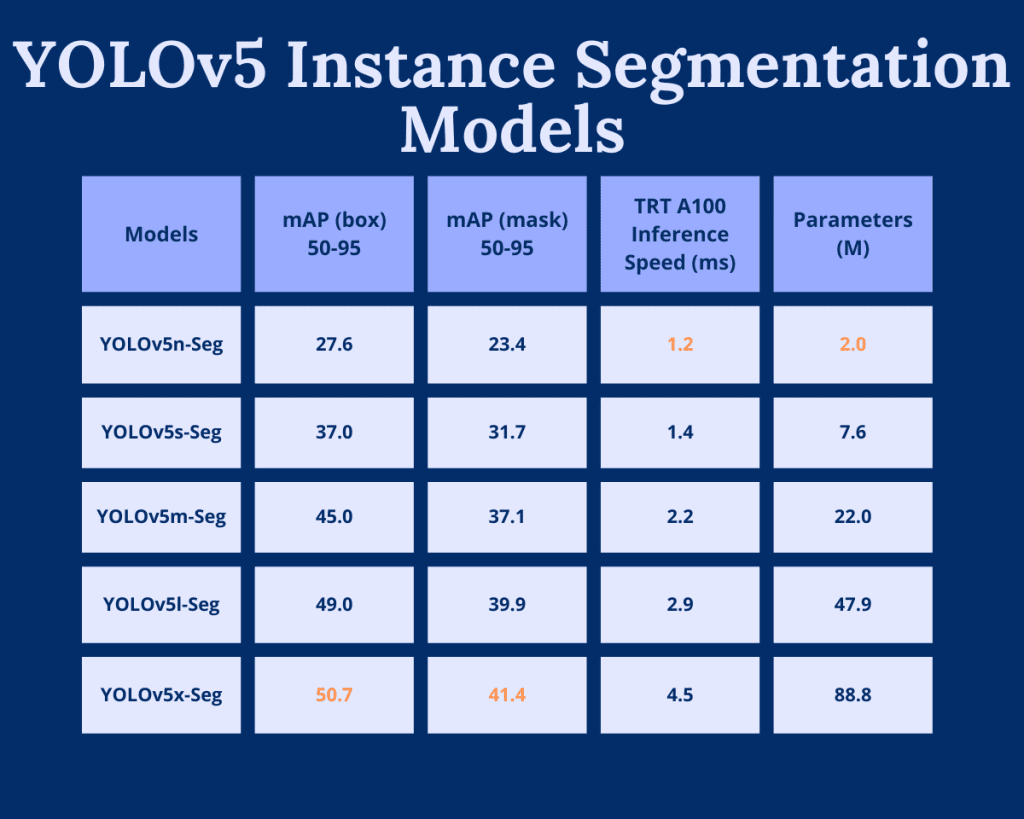

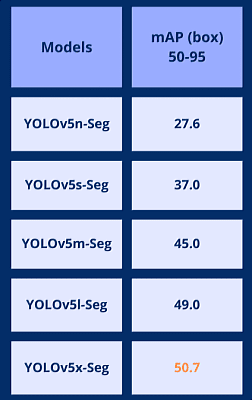

With the initial release, we have access to five instance segmentation models in YOLOv5.

The smallest is the YOLOv5 Nano instance segmentation model. With just 2 million parameters, it is the perfect model for edge deployment and mobile devices. But it has the lowest segmentation mask mAP of 23.4. The most accurate among them is the YOLOv5 Extra large (yolov5x-seg) model with 41.4 mask mAP. However, it is the slowest as well.

The above table gives the inference speeds on an A100 GPU with NVIDIA TensorRT. On this particular GPU, the yolov5n-seg model runs with a latency of 1.2 milliseconds. This amounts to roughly 833 FPS, which is very high for an instance segmentation model.
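The conversion from latency to FPS is a one-line calculation. The helper below is a small sketch with a name of our choosing:

```python
def latency_ms_to_fps(latency_ms):
    """Convert a per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


print(round(latency_ms_to_fps(1.2)))  # 833
```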

The following graph compares speed (in FPS) and the number of parameters for all YOLOv5 instance segmentation models.

It is a common trend for deep learning models to run slower as the number of parameters increases, and we observe the same here. It is also important to note that all the speeds in Table 1 are for 640-pixel images.

YOLOv5 Instance Segmentation Performance on the COCO Dataset

Let’s continue to analyze table 1 from the previous section.

As is common with most of the benchmark results in the world of object detection and instance segmentation, YOLOv5 models are also benchmarked on the COCO dataset.

Instance segmentation models output both bounding boxes and segmentation masks. We use the Mean Average Precision (mAP) metric to evaluate both.

To reiterate, let’s take a closer look at the box mAP and mask mAP of all the models.

As expected, larger models have both higher box mAP and higher mask mAP.

To reach this point, all the models were trained for 300 epochs on the COCO dataset using the NVIDIA A100 GPU.

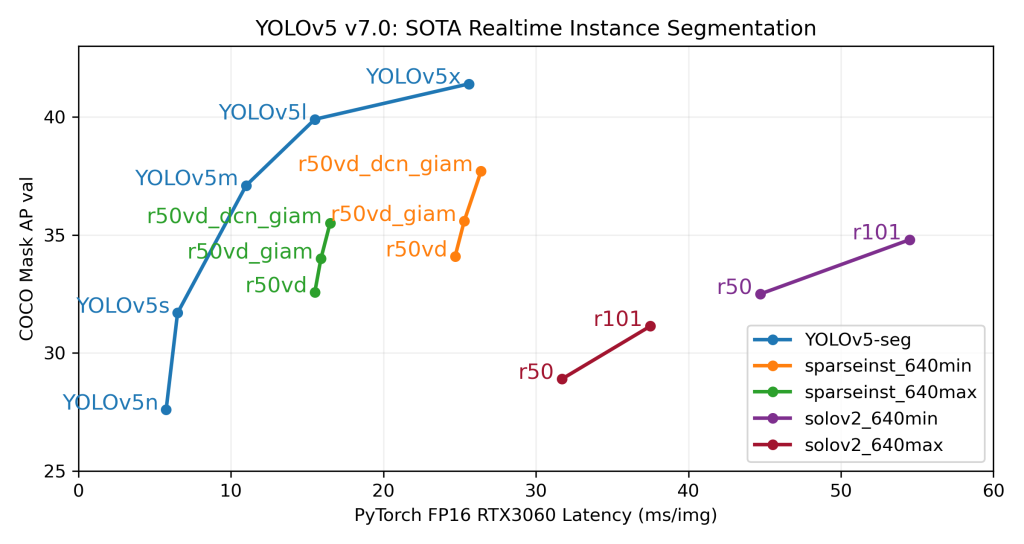

Compared with other state-of-the-art instance segmentation models, YOLOv5 models fare much better in terms of speed and accuracy.

Note: The above graph shows latency on the RTX 3060 GPU.

What’s more interesting is that the YOLOv5 instance segmentation models can beat models with ResNet101 backbones while being faster.

Inference using YOLOv5 Instance Segmentation Models

Until now, we have covered only the theoretical discussion of YOLOv5 instance segmentation models. Let’s jump into running inference using the models and analyzing some interesting outputs.

Setting Up YOLOv5 Instance Segmentation Locally

First, let’s set up the Ultralytics repository locally. This is helpful if you want to run the experiments on your local system.

1. Clone the Ultralytics GitHub Repository

git clone https://github.com/ultralytics/yolov5.git

cd yolov5

2. Install the Requirements

pip install -r requirements.txt

Once this is done, we can start running the inference commands.

YOLOv5 Instance Segmentation Directory Structure

The instance segmentation files reside inside the segment directory in the repository. Let’s take a look at the directory structure.

    ├── classify
    │   ...
    ├── data
    │   ├── hyps
    │   ...
    │   └── xView.yaml
    ├── models
    │   ├── hub
    │   ...
    │   └── yolov5x.yaml
    ├── runs
    │   ├── detect
    │   └── predict-seg
    ├── segment
    │   ├── predict.py
    │   ├── train.py
    │   ├── tutorial.ipynb
    │   └── val.py
    ├── utils
    │   ├── aws
    │   ...
    │   └── triton.py
    ├── benchmarks.py
    ├── CITATION.cff
    ├── CONTRIBUTING.md
    ├── detect.py
    ...
    └── val.py

All the executable Python files for instance segmentation are inside the segment directory. For this particular post, we are only interested in the predict.py file used for inference on images and videos.

Instance Segmentation on Images

Note: All inference experiments shown here were run on a laptop with GTX 1060 GPU, i7 8th generation CPU, and 16 GB RAM.

Let’s start with a simple example of carrying out instance segmentation on images. First, we will carry out instance segmentation on a single image.

Within the yolov5 directory, execute the following command using the YOLOv5 Nano instance segmentation model.

python segment/predict.py --weights yolov5n-seg.pt --source ../input/images/image_1.jpg --view-img

The following explains the command line arguments used in the above command:

- --weights: This flag accepts the path to the model weight file. When you execute it for the first time, the model automatically downloads to the current directory.

- --source: This flag accepts the path to the image source file that we want to run inference on. You may provide the path to your own images.

- --view-img: You can pass this flag to visualize the images on the screen at the end of the inference run.

In case we have a directory full of images, we can pass the directory path to the predict.py script to run inference on all images.

python segment/predict.py --weights yolov5n-seg.pt --source ../input/images --view-img

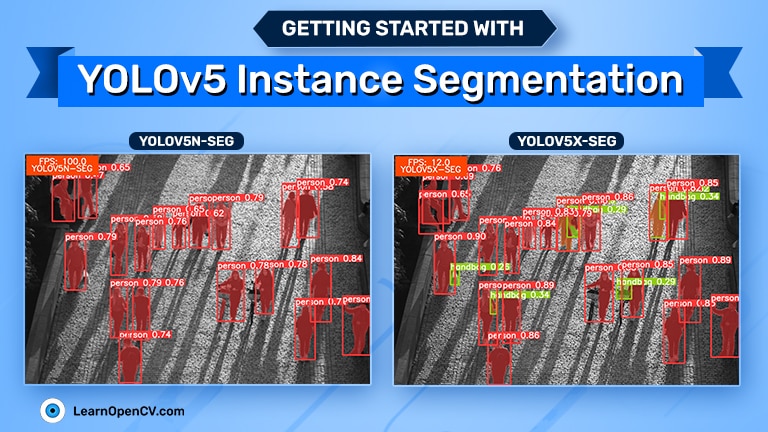

The following is the output that we get after running the first command.

We can see that the edges of the persons are not perfectly segmented.

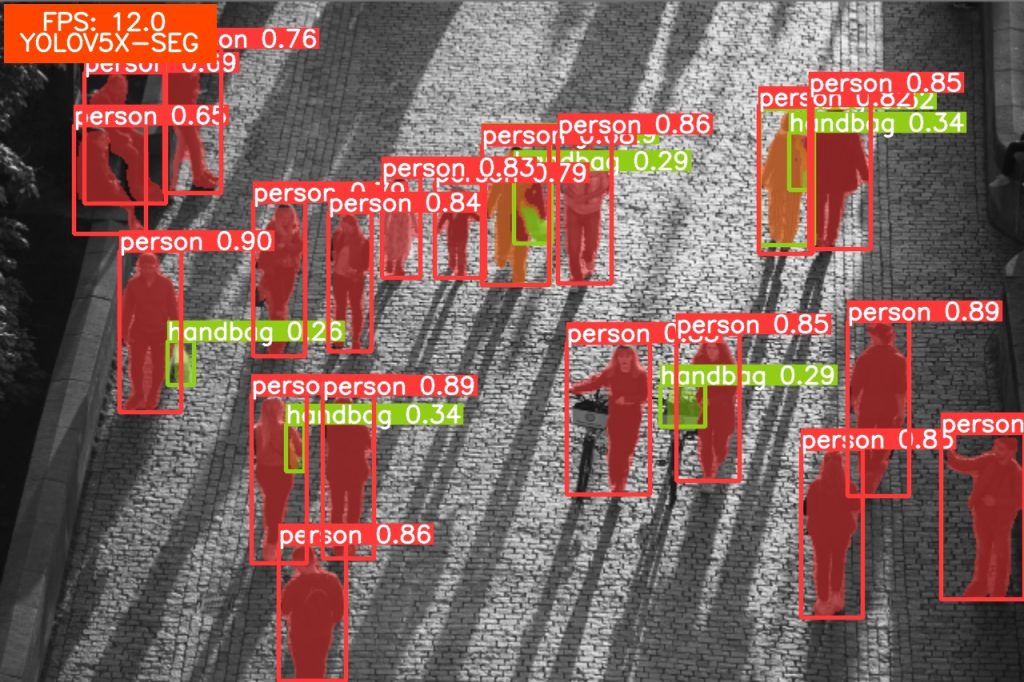

Let’s try with the YOLOv5X Segmentation model and check whether we can get any better results. For that, we need to change the model weight file in the above command.

python segment/predict.py --weights yolov5x-seg.pt --source ../input/images/image_1.jpg --view-img

There are two interesting points to observe here:

- The Extra Large model can detect more objects like the handbag and can also detect more persons.

- But the masks are still a bit blurry. We did not get any sharper masks.

The reason for this is that by default, the masks are overlaid on the preprocessed image, and the entire image is resized to the original size. This makes the masks a bit blurry.

To overcome this, we can use the --retina-masks flag.

Instance Segmentation Mask In Native Resolution

If we provide the --retina-masks flag to the execution command, then the masks will be plotted in native resolution.

python segment/predict.py --weights yolov5x-seg.pt --source ../input/images/image_1.jpg --view-img --retina-masks

With the --retina-masks flag, the final masks and the image become much sharper. Although the inference time remains the same, the post-processing time may take a hit. This is because post-processing now works with high-resolution images and masks.

For reference, without using --retina-masks flag, the final FPS with post-processing is around 92 FPS on a video with the Nano model. Under similar conditions, using the --retina-masks flag, the FPS after post-processing drops to 65 FPS. That’s quite a big difference when considering real-time applications and edge deployment.
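To see what this drop means per frame, we can convert both FPS figures to frame times and subtract them. This is a rough back-of-the-envelope sketch that uses only the two numbers quoted above; the helper name is ours.

```python
def frame_time_ms(fps):
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


without_retina = frame_time_ms(92)  # about 10.9 ms per frame
with_retina = frame_time_ms(65)     # about 15.4 ms per frame
extra_ms = with_retina - without_retina

print(f"Extra post-processing cost: {extra_ms:.1f} ms per frame")  # 4.5 ms
```

In other words, native-resolution masks add roughly 4.5 ms of post-processing per frame in this particular setup.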

YOLOv5 Instance Segmentation Inference on Videos

Running inference on videos is just as simple. We will use the same predict.py script and only change the source file path to a video.

We will run all the inference results using the --retina-masks flag to get sharper results. The following command shows running inference using YOLOv5 Nano instance segmentation model.

All the FPS numbers shown here are for the forward pass and non-maximum suppression. They do not include the post-processing time.

python segment/predict.py --weights yolov5n-seg.pt --source ../input/videos/video_1.mp4 --view-img --retina-masks

The following is the output.

On the GTX 1060 GPU, the average FPS was 139 using the Nano model. We can see that a few far-away vehicles are not detected. We can address this by using the yolov5x-seg.pt model.

With the Extra Large model, the bounding boxes are tighter with fewer fluctuations. But the FPS is also much lower this time, around 15 FPS.

Comparison Between Different YOLOv5 Instance Segmentation Models

In this section, we will compare the following four YOLOv5 instance segmentation models (all except the Nano model) on a few videos.

- YOLOv5s-seg

- YOLOv5m-seg

- YOLOv5l-seg

- YOLOv5x-seg

This will give us a solid understanding of the strengths and weaknesses of different models and where to use which model.
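One convenient way to run such a comparison is to generate the same predict.py command for each weight file. The sketch below only builds and prints the commands; it assumes it is used from inside the yolov5 directory, reuses the flags and the video path shown earlier in this post, and assumes the s, m, and l weight files follow the same naming pattern as the Nano and Extra Large ones. The function name is our own.

```python
import shlex

# Weight files for the four models compared in this section (naming pattern assumed).
MODELS = ["yolov5s-seg.pt", "yolov5m-seg.pt", "yolov5l-seg.pt", "yolov5x-seg.pt"]


def build_predict_command(weights, source, retina_masks=True):
    """Build the argument list for one segment/predict.py run."""
    cmd = [
        "python", "segment/predict.py",
        "--weights", weights,
        "--source", source,
        "--view-img",
    ]
    if retina_masks:
        cmd.append("--retina-masks")
    return cmd


for weights in MODELS:
    # Print each command; pass the list to subprocess.run() to actually execute it.
    print(shlex.join(build_predict_command(weights, "../input/videos/video_1.mp4")))
```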

This is an apt video for comparing the models on crowded scenes and small objects. At first glance, it is clear that YOLOv5x-seg gives the best results. It has fewer false positives, fewer fluctuations, more detections of far-away objects, and fewer wrong detections.

From the number of detections per class, we can also see that YOLOv5x-seg classifies objects more accurately than the small model.

In this case, the smaller models like YOLOv5s-seg and YOLOv5m-seg are making the most mistakes. The small model is missing out on detecting the ball when it is moving between the legs. It also detects the door as a person on the far end. Here, again, YOLOv5x-seg is the slowest and the most accurate.

There is one thing to note in the above results, though. The YOLOv5s-seg model's detections are rarely outright bad. For a model running at over 100 FPS, the results are decent most of the time. With proper fine-tuning on a custom dataset, even a small model can become very competitive.

Summary

In this post, we covered the YOLOv5 instance segmentation model in detail. We started with a short introduction, followed by the model architecture of the instance segmentation model, and ended with carrying out inferences using different models. This provided us with insights about:

- Which model is the fastest?

- Which model is the most accurate?

- What are the trade-offs between the smaller and larger models?

We hope that this article helped you get a better understanding of the YOLOv5 instance segmentation model. Watch our blog for comprehensive articles on custom training using the models.