This post is part of a series I am writing on Image Recognition and Object Detection.

The complete list of tutorials in this series is given below:

- Image recognition using traditional Computer Vision techniques : Part 1

- Histogram of Oriented Gradients : Part 2

- Example code for image recognition : Part 3

- Training a better eye detector: Part 4a

- Object detection using traditional Computer Vision techniques : Part 4b

- How to train and test your own OpenCV object detector : Part 5

- Image recognition using Deep Learning : Part 6

- Object detection using Deep Learning : Part 7

Sometimes things work out of the box. At other times, they don’t. Such occasions present an opportunity to get better.

Object detection using Haar feature-based cascade classifiers is more than a decade and a half old. The OpenCV framework provides pre-built Haar and LBP based cascade classifiers for face and eye detection that are of reasonably good quality. However, I had never measured the accuracy of these face and eye detectors. So it was a surprise when I discovered that the pre-built Haar/LBP cascades have a high false positive rate, which might make them unsuitable for many use cases. Fortunately, in many cases it is possible to train an eye detector with OpenCV that has very high accuracy and a low false positive rate.

Of course, for building more general object detectors I recommend using Deep Learning. You can learn more about it in my previous post. In this post, I will describe how our team at Big Vision LLC trained a near-perfect Haar-based eye detector for a client. I will also provide code and the steps to train a Haar-based object detector.

Problem Definition and Challenges

Our client builds a device that automatically determines the power of the corrective lens needed to fix a user’s eyesight. The device is ridiculously simple — the user looks into the device for a few seconds and out comes the prescription.

The device needs a very accurate eye detector that can be integrated into their system. On their dataset, the Haar-based eye detector bundled with OpenCV had an accuracy of about 89%. In other words, the eye detector failed 11% of the time: either the locations of the detected eyes were wrong, or more or fewer than two eyes were detected. A state-of-the-art system deserves much better!
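The failure criterion above can be written as a per-image pass/fail check. This is only an illustrative sketch: the post does not say how a "wrong location" was judged, so it assumes a detection is correct when its centre falls inside a hand-labelled eye box. The function names are hypothetical, and boxes use the (x, y, w, h) layout that OpenCV's detectMultiScale returns.

```python
from typing import Iterable, Sequence, Tuple

# (x, y, w, h), the same box layout OpenCV's detectMultiScale returns
Box = Tuple[int, int, int, int]


def center_inside(det: Box, gt: Box) -> bool:
    """True if the centre of a detected box lies inside a ground-truth eye box."""
    x, y, w, h = det
    cx, cy = x + w / 2, y + h / 2
    gx, gy, gw, gh = gt
    return gx <= cx <= gx + gw and gy <= cy <= gy + gh


def image_passes(dets: Sequence[Box], gts: Sequence[Box]) -> bool:
    """Success only if exactly two eyes are detected and each one matches a
    different ground-truth eye (assumed matching rule: centre-in-box)."""
    if len(dets) != 2 or len(gts) != 2:
        return False
    d0, d1 = dets
    g0, g1 = gts
    return (center_inside(d0, g0) and center_inside(d1, g1)) or (
        center_inside(d0, g1) and center_inside(d1, g0)
    )


def accuracy(results: Iterable[Tuple[Sequence[Box], Sequence[Box]]]) -> float:
    """Fraction of images that pass; results holds (detections, ground_truth) pairs."""
    results = list(results)
    if not results:
        return 0.0
    return sum(image_passes(d, g) for d, g in results) / len(results)


gt = [(10, 10, 40, 40), (80, 10, 40, 40)]
print(accuracy([
    ([(12, 12, 40, 40), (78, 8, 40, 40)], gt),  # two correctly placed eyes: pass
    ([(12, 12, 40, 40)], gt),                   # only one eye found: fail
]))  # 0.5
```

Under this rule, an 89% accuracy means 11 of every 100 test images fail the check.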

They were looking for an accuracy of 95% or higher with a near-zero false positive rate. In addition, speed was of the essence and so we wanted a detection time budget of 20 ms or less. Finally, they needed to ensure that closed eyes were not detected to avoid taking a picture while the user was blinking.

Using Deep Learning on a mobile device was out of scope for this project. We were restricted to using OpenCV, which made the problem challenging.

Training a better OpenCV Eye Detector

My first instinct is always to recommend a Deep Learning based solution for any recognition or detection problem because it is usually more accurate. However, unlike academic problems, real-world problems come with constraints. Sometimes the constraints are non-technical. For example, the budget allocated to a project can dictate the choice of technology. At other times, the platform, the speed requirements, and ease of integration with a client’s existing infrastructure guide your choices. But we, like 37-Signals (a.k.a. Basecamp), embrace constraints.

Instead of freaking out about these constraints, embrace them. Let them guide you. Constraints drive innovation and force focus. Instead of trying to remove them, use them to your advantage.

So, we got down to business and built a kickass eye detector! We can’t share the eye detector and we can’t share the training data. But we can tell you how we went about the process.

Data Collection

In the game of AI, data is king. The organization with the largest and most representative dataset will always win.

Before we begin a project, we always try to get the data right because a superior algorithm will never be able to fix a bad data problem. Our data collection team collected approximately 1000 images of human eyes. We also gathered around 7000 negative images randomly from the internet.

First Stab at Training

Initial training was disappointing. Our eye detector was not better than the one bundled with OpenCV. Oops! So much for getting the data right.

The team made a lot of suggestions. Maybe we needed more positive examples, or maybe optimizing the ratio of positive to negative images would help. Should we optimize the hyperparameters? How about augmenting the data?

Data Augmentation and Hyperparameter Optimizations

In machine learning, what turns a good solution into an excellent one is often systematic trial and error. Repeated trials and experimentation bring good luck! Bet on getting heads on a single coin toss and you will lose 50% of the time. Toss the coin twice and your odds of getting at least one head jump to 75%. Toss it four times and the odds are 93.75%!
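The coin-toss odds above are the probability of at least one success in n independent trials, 1 - (1 - p)^n. A quick check with p = 0.5 (the function name is our own):

```python
def p_at_least_one(p: float, n: int) -> float:
    """Probability of at least one success in n independent trials,
    each succeeding with probability p."""
    return 1.0 - (1.0 - p) ** n


for n in (1, 2, 4):
    print(n, p_at_least_one(0.5, n))
# 1 0.5
# 2 0.75
# 4 0.9375
```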

After a few iterations of data augmentation and smart approaches to collecting and creating negative data, we were finally able to build models that were far superior to OpenCV's.

For data augmentation, we flipped the eye images vertically to double the dataset. We considered randomly applying color transforms to account for illumination variations, but they turned out to be unnecessary.
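A minimal sketch of flip augmentation using NumPy, since OpenCV's Python bindings represent images as NumPy arrays. It assumes "flipped vertically" means mirroring each eye about its vertical axis, so that a left eye looks like a right eye; np.fliplr(img) matches cv2.flip(img, 1). If an upside-down flip was intended instead, swap in np.flipud, which matches cv2.flip(img, 0). The helper name is hypothetical.

```python
import numpy as np


def augment_with_mirror(images):
    """Return every input image followed by its left-right mirrored copy,
    doubling the positive set. Works for H x W and H x W x C arrays."""
    augmented = []
    for img in images:
        augmented.append(img)
        # np.fliplr reverses the column order (same as cv2.flip(img, 1));
        # ascontiguousarray copies the flipped view into a regular array,
        # which some OpenCV functions require.
        augmented.append(np.ascontiguousarray(np.fliplr(img)))
    return augmented


eye = np.arange(6, dtype=np.uint8).reshape(2, 3)
print([a.tolist() for a in augment_with_mirror([eye])])
# [[[0, 1, 2], [3, 4, 5]], [[2, 1, 0], [5, 4, 3]]]
```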

We had initially started with a smaller negative dataset, but because we needed a very low false positive rate, we enlarged the negative set considerably.

The biggest win came when we did hard negative mining. We noticed that a lot of false positives were detected on the face. These regions were extracted and put back in the training set as negative examples. This simple trick significantly reduced the false positive rate.
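Hard negative mining can be sketched as follows: run the current detector on face images whose eyes are labelled, keep every detection that does not overlap a true eye, and crop it for the negative set. The overlap test (intersection over union), the 0.1 threshold, and the function names are assumptions for illustration, not necessarily the procedure used for the client's detector.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def mine_hard_negatives(image, detections, true_eyes, max_iou=0.1):
    """Return crops of detections that do not overlap any labelled eye.

    These false positives (e.g. other regions of the face) can be written to
    negative_images/ and used in the next training round.
    """
    image = np.asarray(image)
    crops = []
    for det in detections:
        if all(iou(det, eye) <= max_iou for eye in true_eyes):
            x, y, w, h = det
            crops.append(image[y:y + h, x:x + w].copy())
    return crops


face = np.zeros((100, 100), dtype=np.uint8)
dets = [(10, 10, 20, 20), (60, 60, 20, 20)]  # first hits the eye, second is a false positive
eyes = [(12, 12, 20, 20)]
print([c.shape for c in mine_hard_negatives(face, dets, eyes)])  # [(20, 20)]
```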

But was our model better than 95%? The client had provided us with a test set of around 600 images. Now was the time to test it.

When we ran our test scripts for the first time, our Haar-based detector was at 97% and LBP was at 94% accuracy. Euphoria! We had earned our paycheck.

But we are craftsmen. We try harder. We give our best.

After a few more rounds of hyperparameter optimization, our Haar detector was more than 99% accurate and LBP was close to 96% accurate.

Needless to say, our client was extremely pleased with the results.

How to Train an OpenCV Object Detector?

While we cannot share the model or the training data because of a confidentiality agreement with our client, we are happy to share the tools you need to run your own experiments.

We used the following freely shared collection of utilities, scripts and deployment code to create a quick training module. The scripts we are sharing assume you have Python, Perl, and OpenCV installed on your Linux/OSX machine.

Step 1 : Data Collection

Collect images of the object you want to detect, crop them to a fixed aspect ratio, and put them in the positive_images directory. For example, we collected 1000 images of eyes, cropped them into square images, and put them in the positive_images directory. Similarly, collect a large set of negative examples, crop them to the same aspect ratio as the positive samples, and put them in a directory named negative_images.
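If your source images are not already the right shape, a centre crop is one simple way to bring them to a fixed aspect ratio before saving them into positive_images or negative_images. This is a sketch with a hypothetical helper name; files can be loaded and saved with cv2.imread and cv2.imwrite.

```python
import numpy as np


def center_crop_to_aspect(img, aspect=1.0):
    """Centre-crop an H x W (or H x W x C) image so that width / height == aspect.

    aspect=1.0 gives square crops like the ones used for the eye images.
    """
    h, w = img.shape[:2]
    if w / h > aspect:
        # Too wide: keep the full height, trim equal amounts from left and right.
        new_w = int(round(h * aspect))
        x0 = (w - new_w) // 2
        return img[:, x0:x0 + new_w]
    # Too tall (or already correct): keep the full width, trim top and bottom.
    new_h = int(round(w / aspect))
    y0 = (h - new_h) // 2
    return img[y0:y0 + new_h]


print(center_crop_to_aspect(np.zeros((60, 100))).shape)     # (60, 60)
print(center_crop_to_aspect(np.zeros((100, 60, 3))).shape)  # (60, 60, 3)
```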

Step 2 : Create Training Data files

Create the text files positives.txt and negatives.txt using the commands below:

find ./negative_images -iname "*.jpg" > negatives.txt

find ./positive_images -iname "*.jpg" > positives.txt

Step 3: Create Samples

1. Use createsamples.pl to create a .vec file for each positive image.

perl bin/createsamples.pl positives.txt negatives.txt samples 5000 "opencv_createsamples -bgcolor 0 -bgthresh 0 -maxxangle 1.1 -maxyangle 1.1 -maxzangle 0.5 -maxidev 40 -w 40 -h 40"

The script is a wrapper around opencv_createsamples. As mentioned in the OpenCV documentation —

“opencv_createsamples is used to prepare a training dataset of positive and test samples. opencv_createsamples produces dataset of positive samples in a format that is supported by both opencv_haartraining and opencv_traincascade applications. The output is a file with *.vec extension, it is a binary format which contains images.”

2. Use mergevec.py to merge .vec files into samples.vec like this:

python ./tools/mergevec.py -v samples/ -o samples.vec
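Merging is straightforward because a .vec file is a 12-byte header followed by the samples. The sketch below assumes the layout that OpenCV's createsamples utility writes and mergevec.py reads: an int32 sample count, an int32 vector size (w × h), and two unused int16 fields, then for each sample one padding byte plus w × h 16-bit pixel values. Like mergevec.py, it reads the header as little-endian. It is an illustration with hypothetical function names, not a replacement for mergevec.py.

```python
import glob
import os
import struct

# count, vecsize (= w * h), and two unused 16-bit fields
VEC_HEADER = struct.Struct("<iihh")


def merge_vec_bytes(blobs):
    """Merge the raw contents of several .vec files into one .vec blob."""
    total, vecsize, bodies = 0, None, []
    for blob in blobs:
        count, size, _, _ = VEC_HEADER.unpack_from(blob, 0)
        if vecsize is None:
            vecsize = size
        elif size != vecsize:
            raise ValueError(f"sample size mismatch: {size} != {vecsize}")
        body = blob[VEC_HEADER.size:]
        # Each sample is 1 padding byte followed by `size` int16 pixel values.
        if len(body) != count * (1 + 2 * size):
            raise ValueError("truncated or corrupt .vec data")
        total += count
        bodies.append(body)
    if vecsize is None:
        raise ValueError("no .vec data to merge")
    return VEC_HEADER.pack(total, vecsize, 0, 0) + b"".join(bodies)


def merge_vec_files(vec_dir, out_path):
    """Merge every *.vec file in vec_dir into out_path; returns the sample count."""
    blobs = []
    for path in sorted(glob.glob(os.path.join(vec_dir, "*.vec"))):
        with open(path, "rb") as f:
            blobs.append(f.read())
    merged = merge_vec_bytes(blobs)
    with open(out_path, "wb") as f:
        f.write(merged)
    return VEC_HEADER.unpack_from(merged, 0)[0]


# Usage, mirroring the mergevec.py command above:
# merge_vec_files("samples", "samples.vec")
```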

Run Training Scripts

The training commands for LBP and Haar cascade training are provided below. We trained on a machine with 64 GB of RAM; if your machine hangs, reduce the values of precalcValBufSize and precalcIdxBufSize (both in MB) to 1024.

Training command for Local Binary Patterns (LBP) cascade

LBP is much faster than Haar but is less accurate. You can train using the following command.

opencv_traincascade -data lbp -vec samples.vec -bg negatives.txt -numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.5 -numPos 4000 -numNeg 7000 -w 40 -h 40 -mode ALL -precalcValBufSize 4096 -precalcIdxBufSize 4096 -featureType LBP
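Why -numPos 4000 when the .vec file holds 5000 samples? Each stage can reject some positives, and the trainer replaces them with fresh samples from the .vec file. A rule of thumb often quoted on the OpenCV Q&A forum (not the official documentation) is that the .vec file needs at least numPos + (numStages - 1) × (1 - minHitRate) × numPos + S samples, where S is the number of samples the cascade rejects as background outright. The arithmetic for the settings above, with S treated as unknown (function names are our own):

```python
import math


def min_vec_samples(num_pos, num_stages, min_hit_rate, s=0):
    """Rule-of-thumb minimum number of samples the .vec file should contain."""
    return num_pos + (num_stages - 1) * (1 - min_hit_rate) * num_pos + s


def max_num_pos(vec_count, num_stages, min_hit_rate, s=0):
    """Largest -numPos that still satisfies the rule of thumb for vec_count samples."""
    return math.floor((vec_count - s) / (1 + (num_stages - 1) * (1 - min_hit_rate)))


print(round(min_vec_samples(4000, 20, 0.999)))  # 4076
print(max_num_pos(5000, 20, 0.999))             # 4906
```

With 5000 samples, -numPos 4000 leaves headroom for up to about 924 samples in S.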

Training command for Haar cascade

Haar cascades take much longer to train than LBP cascades, but they are more accurate. You can train a Haar cascade using the following command.

opencv_traincascade -data haar -vec samples.vec -bg negatives.txt -numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.5 -numPos 4000 -numNeg 7000 -w 40 -h 40 -mode ALL -precalcValBufSize 4096 -precalcIdxBufSize 4096
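When training finishes, opencv_traincascade saves the final cascade as cascade.xml inside the -data directory (haar/ or lbp/ here). Below is a minimal sketch of loading it with cv2.CascadeClassifier, timing detectMultiScale against the 20 ms budget, and accepting a frame only when exactly two eyes are found. The scaleFactor and minNeighbors values are placeholders to tune, and the helper names are hypothetical.

```python
import time


def load_cascade(path="haar/cascade.xml"):
    """Load a trained cascade; raises IOError if the file cannot be loaded."""
    import cv2  # imported here so the other helpers work without OpenCV installed

    cascade = cv2.CascadeClassifier(path)
    if cascade.empty():
        raise IOError(f"could not load cascade from {path}")
    return cascade


def detect_eyes(cascade, gray):
    """Run the cascade on a grayscale image; returns (boxes, elapsed_ms)."""
    start = time.perf_counter()
    boxes = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return [tuple(int(v) for v in box) for box in boxes], elapsed_ms


def pick_eye_pair(boxes):
    """Accept only exactly two detections, returned left to right; else None."""
    if len(boxes) != 2:
        return None
    return sorted(boxes, key=lambda box: box[0])


# Example usage (requires OpenCV and a test image):
# import cv2
# gray = cv2.cvtColor(cv2.imread("face.jpg"), cv2.COLOR_BGR2GRAY)
# boxes, ms = detect_eyes(load_cascade(), gray)
# print(pick_eye_pair(boxes), f"{ms:.1f} ms")
```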

Eye Detector Results

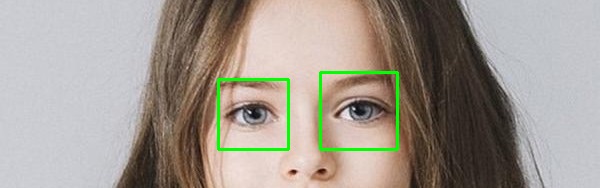

Our typical results look like the one shown below.

On such clean images, OpenCV gives similar results. Where we shine is on difficult examples like the one shown below, on which the OpenCV eye detector produces a false positive.

In contrast, our detector does much better.

Here is a side-by-side comparison on a video.
