Small object detection refers to the task of identifying and localizing objects that are relatively small in size within digital images. These objects typically have limited spatial extent and low pixel coverage and may be challenging to detect due to their small appearance and low signal-to-noise ratio.

In this article, we explore existing approaches to small object detection and then run inference with YOLOv8 using the SAHI technique.

To see the results, scroll down to the Inference Results section near the end of the article.

Applications of Small Object Detection

There are multiple applications of small object detection:

- Surveillance and Security: Identification and tracking of small objects in crowded areas for enhanced public safety.

- Autonomous Driving: Accurate detection of pedestrians, cyclists, and traffic signs in real-time for safe navigation.

- Medical Imaging: Localization of abnormalities and lesions in medical images for early disease diagnosis and treatment planning.

- Remote Sensing and Aerial Imagery: Identification of small objects in satellite imagery for urban planning and environmental monitoring.

- Industrial Inspection: Quality control and defect detection in manufacturing processes to ensure high product quality.

- Microscopy and Life Sciences: Analysis of cellular structures and microorganisms for research in biology and genetics.

- Robotics and Object Manipulation: Accurate detection of small objects for effective robotic object manipulation in automation tasks.

Here is what the creator of SAHI, Fatih C. Akyon, said about this research article and the accompanying video tutorial:

Let’s explore the challenges associated with small object detection, a few existing approaches, and the SAHI (Slicing Aided Hyper Inference) technique.

Why do Normal Object Detectors Fail to Detect Smaller Objects?

Many object detection algorithms are in use today, such as Faster R-CNN, YOLO, SSD, RetinaNet, and EfficientDet. These models are generally trained on the COCO (Common Objects in Context) dataset, a large-scale dataset with a wide range of object categories and annotations, which makes it popular for training object detectors. However, these models often fail to detect small objects. Have you ever wondered why?

Limited Receptive Field

The receptive field refers to the spatial extent of the input image that influences the output of a particular neuron or filter in a convolutional neural network (CNN). In normal object detectors, the receptive field may be limited, meaning that the network might not have a sufficient understanding of the contextual information surrounding smaller objects. As a result, the detector may struggle to accurately detect and localize these objects due to the inadequate receptive field. Figure 2 illustrates the receptive field of a neural network.
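To make the idea concrete, here is a minimal sketch of how the receptive field grows through a stack of convolution layers. It uses the standard recurrence (each layer adds `(kernel - 1) * jump` input pixels, and each stride multiplies the jump), ignores padding and dilation, and the three-layer `toy_layers` stack is a made-up example rather than any real backbone.

```python
from typing import List, Tuple


def receptive_field(layers: List[Tuple[int, int]]) -> List[int]:
    """Return the receptive field (in input pixels) after each layer.

    Each layer is given as (kernel_size, stride). Padding and dilation
    are ignored in this simplified sketch.
    """
    rf, jump = 1, 1  # a single input pixel; neighbouring outputs are 1 px apart
    sizes = []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each extra kernel tap reaches `jump` pixels further
        jump *= stride             # striding spreads output positions apart
        sizes.append(rf)
    return sizes


# Hypothetical toy stack: three 3x3 convolutions, the second with stride 2.
toy_layers = [(3, 1), (3, 2), (3, 1)]
print(receptive_field(toy_layers))  # [3, 5, 9]
```

With only a few layers, each output location sees just a handful of input pixels, which is why shallow features carry little surrounding context.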

Feature Representation

Object detectors typically rely on learned features within CNN architectures to recognize objects. However, the inherent limitations of feature representation may hinder the detection of smaller objects, as the learned features may not adequately capture their subtle and intricate details. Consequently, the detector may fail to differentiate small objects from the background or other similar-looking objects. Figure 3 shows the convolutional neural network feature representation.

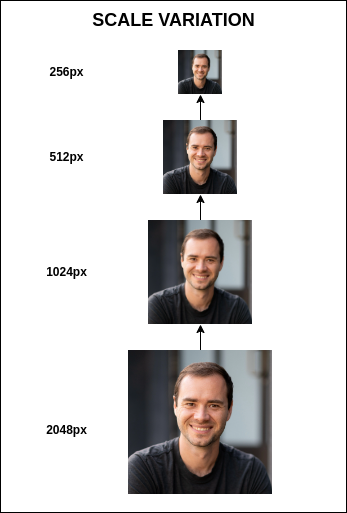

Scale Variation

Small objects exhibit significant scale variations compared to larger objects within an image. Object detectors trained on datasets predominantly consisting of larger objects, such as ImageNet or COCO, might struggle to generalize to small objects due to the discrepancy in scale. Figure 4 shows an example of scale variation. The variations in size can lead to difficulties in matching the learned object representations, resulting in decreased detection performance for smaller objects.

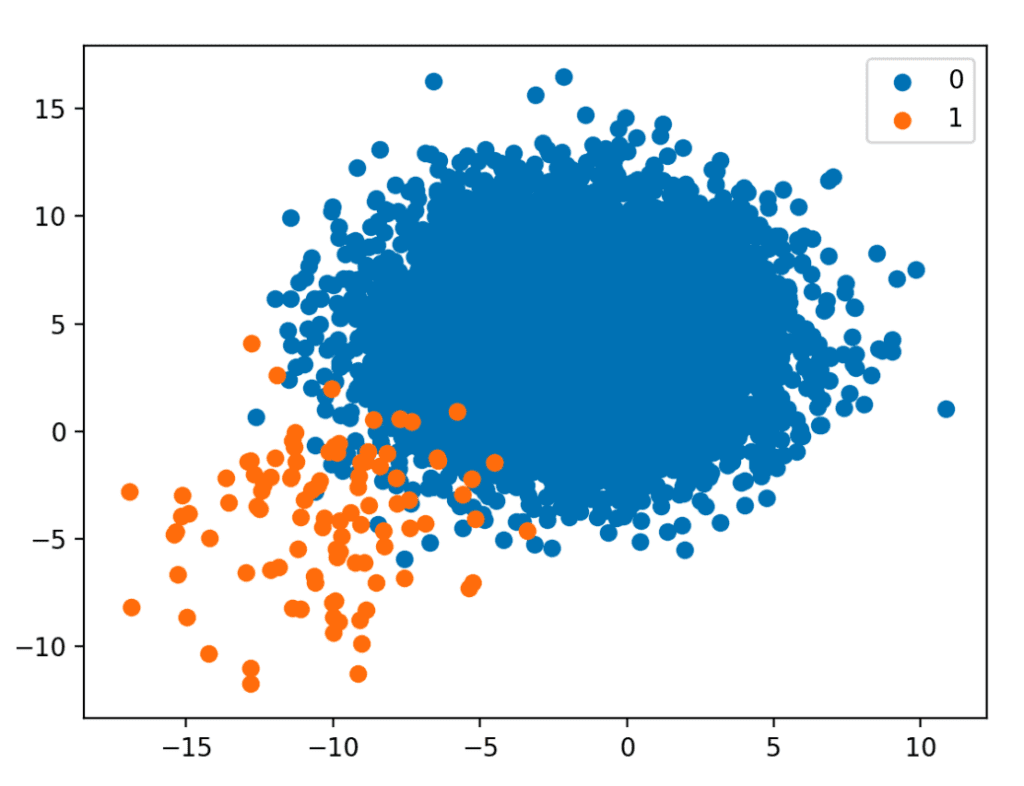

Training Data Bias

Object detection models are typically trained on large-scale datasets, which may contain biases toward larger objects due to their prevalence. This bias can inadvertently affect the performance of object detectors when it comes to smaller objects. As a consequence, the model may not have been exposed to enough diverse training examples of small objects. This leads to a lack of robustness and reduced detection accuracy for smaller object instances. Figure 5 illustrates a scatter plot of a dataset with two object classes. Class ‘0’ has significantly more data points than class ‘1’.
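One quick way to check a dataset for this kind of bias is to count how many annotations fall into each size bucket. The sketch below assumes the COCO evaluation convention for the buckets (area below 32² pixels is "small", below 96² is "medium", anything else is "large"); those thresholds come from COCO, not from this article, and the `example_boxes` list is invented for illustration.

```python
from collections import Counter
from typing import Iterable, Tuple

SMALL_MAX_AREA = 32 ** 2   # COCO convention: area < 32^2 px counts as "small"
MEDIUM_MAX_AREA = 96 ** 2  # COCO convention: area < 96^2 px counts as "medium"


def size_bucket(width: float, height: float) -> str:
    """Classify a bounding box as small, medium or large by its pixel area."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def size_histogram(boxes_wh: Iterable[Tuple[float, float]]) -> Counter:
    """Count boxes per size bucket; boxes are (width, height) in pixels."""
    return Counter(size_bucket(w, h) for w, h in boxes_wh)


# Hypothetical annotations, given as (width, height) in pixels.
example_boxes = [(20, 15), (50, 40), (200, 150), (300, 120), (10, 10)]
print(size_histogram(example_boxes))
```

If the "small" count is a tiny fraction of the total, the detector has seen few small examples during training.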

Localization Challenges

Localizing smaller objects accurately can be challenging due to the limited spatial resolution of feature maps within CNN architectures. The fine-grained details necessary for precise localization may be lost or become indistinguishable at lower resolutions. Small objects may be occluded by other larger objects or cluttered backgrounds, further worsening the localization challenges. These factors can contribute to the failure of normal object detectors in accurately localizing and detecting smaller objects.
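A little arithmetic shows why resolution matters here. The sketch below estimates how many feature-map cells an object spans at a given downsampling stride. The strides 8, 16, and 32 are used as illustrative values that are common in detection backbones; they are an assumption, not the configuration of any specific model discussed in this article.

```python
def cells_covered(object_px: float, stride: int) -> float:
    """Approximate side length of an object, in feature-map cells, at a given stride."""
    return object_px / stride


# A 16-pixel-wide object at three illustrative strides.
for stride in (8, 16, 32):
    print(f"stride {stride}: {cells_covered(16, stride)} cells")
# stride 8: 2.0 cells
# stride 16: 1.0 cells
# stride 32: 0.5 cells
```

At stride 32 the whole object occupies half a cell, so there is very little left for the localization head to work with.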

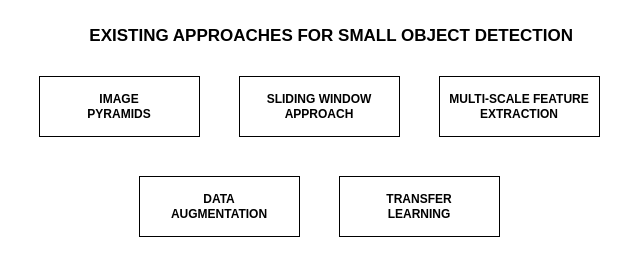

Existing Approaches for Small Object Detection

Several techniques in computer vision aim to address the challenges of detecting small objects accurately. These approaches use various strategies and algorithms to improve detection performance, specifically for smaller-sized objects. Here are some commonly employed methods:

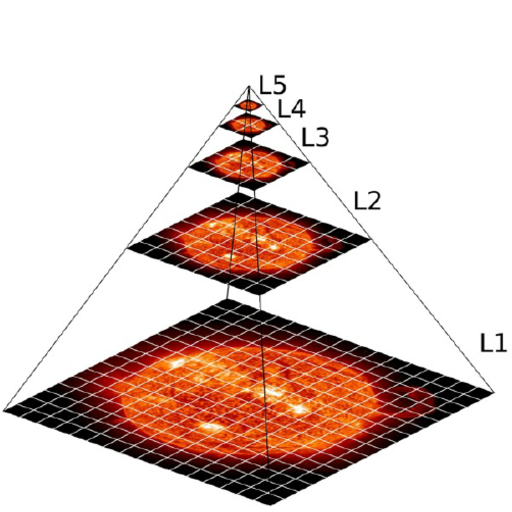

Image Pyramid

An image pyramid is built by creating multiple scaled versions of the input image through down-sampling or up-sampling. These scaled versions, or pyramid levels, provide different image resolutions. Object detectors can apply the detection algorithm at each pyramid level to handle objects at different scales. In Figure 8, an image pyramid-based technique has been applied to an image of the sun. This approach allows the detection of small objects by searching for them at the lower (higher-resolution) pyramid levels, where they may be more prominent and distinguishable.
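As a rough sketch of the idea, the snippet below only computes the image size at each pyramid level and how large a small object would appear there. The scale factors and the 12-pixel object are arbitrary illustrative choices; a real pipeline would also resample the pixels with an image library and run the detector at every level.

```python
from typing import List, Sequence, Tuple


def pyramid_levels(width: int, height: int,
                   scales: Sequence[float] = (2.0, 1.0, 0.5, 0.25)
                   ) -> List[Tuple[float, int, int]]:
    """Return (scale, level_width, level_height) for each pyramid level."""
    return [(s, round(width * s), round(height * s)) for s in scales]


object_px = 12  # hypothetical small object, 12 pixels wide in the original image
for scale, w, h in pyramid_levels(640, 480):
    print(f"scale {scale}: image {w}x{h}, object appears {object_px * scale:g} px wide")
```

The up-sampled level (scale 2.0) is where the 12-pixel object becomes 24 pixels wide and therefore easier to pick up.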

Sliding Window Approach

This method involves sliding a fixed-sized window across the image at various positions and scales. At each window position, the object detector applies a classification model to determine if an object is present. By considering different window sizes and positions, the detector can effectively search for small objects across the image. However, the sliding window approach can be computationally expensive, especially when dealing with large images or multiple scales.
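The window enumeration itself is simple to sketch. The generator below yields every full square window of a fixed size at a fixed step; the 128-pixel window and 64-pixel step are arbitrary example values, and the classifier that would be run on each window is left out.

```python
from typing import Iterator, Tuple


def sliding_windows(image_w: int, image_h: int, window: int,
                    step: int) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (x1, y1, x2, y2) for every full window that fits inside the image."""
    for y in range(0, image_h - window + 1, step):
        for x in range(0, image_w - window + 1, step):
            yield (x, y, x + window, y + window)


windows = list(sliding_windows(640, 480, window=128, step=64))
print(len(windows))  # 54
```

Even this small 640×480 example needs 54 classifier calls at a single scale, which shows how quickly the cost grows with larger images or additional window sizes.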

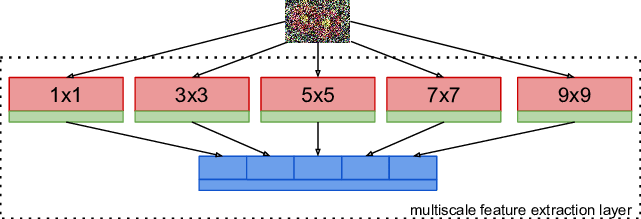

Multi-scale Feature Extraction

Object detectors can utilize multi-scale feature extraction techniques to capture information at different levels of detail. This involves processing the image at multiple resolutions or applying convolutional layers with different receptive fields. By incorporating features from different scales, the detector can effectively capture small and large objects in the scene. This approach helps in preserving fine-grained details relevant to detecting small objects.

Data Augmentation

Data augmentation is one of the most widely used techniques in computer vision, and it can enhance the detection performance of small objects by generating additional training samples. Augmentation methods like random cropping, resizing, rotation, or introducing artificial noise can help create variations in the dataset, allowing the detector to learn robust features for small objects. Augmentation techniques can also simulate different object scales, perspectives, and occlusions, helping the detector generalize better to real-world scenarios.
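For detection, any geometric augmentation has to be applied to the bounding boxes as well as to the pixels. Here is a minimal sketch of the box side of a random resize: the helper names and the 0.5 to 2.0 scale range are illustrative assumptions, and the image itself would be resized by the same factor with an image library.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def resize_boxes(boxes: List[Box], scale: float) -> List[Box]:
    """Scale box coordinates to match an image resized by `scale`."""
    return [tuple(v * scale for v in box) for box in boxes]


def random_scale_jitter(boxes: List[Box], low: float = 0.5, high: float = 2.0,
                        rng: Optional[random.Random] = None) -> Tuple[float, List[Box]]:
    """Pick a random scale in [low, high] and return it with the rescaled boxes."""
    rng = rng or random.Random()
    scale = rng.uniform(low, high)
    return scale, resize_boxes(boxes, scale)


# Hypothetical annotation for a small object.
scale, new_boxes = random_scale_jitter([(10, 20, 30, 40)], rng=random.Random(0))
print(scale, new_boxes)
```

Sampling scales above 1.0 shows the detector enlarged versions of small objects, while scales below 1.0 turn larger objects into additional small training examples.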

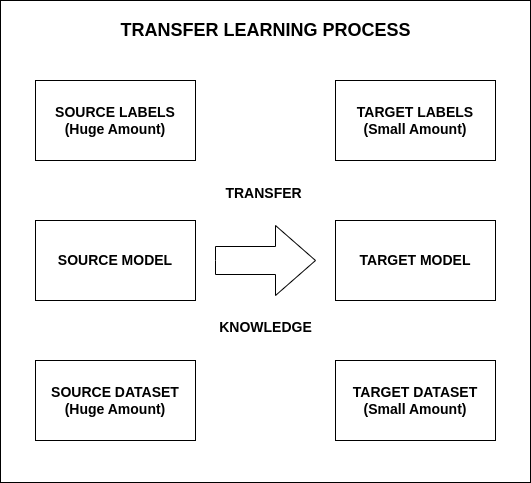

Transfer Learning

This method involves leveraging knowledge learned from pretraining on large-scale datasets (e.g., ImageNet) and applying it to object detection tasks. Pretrained models, especially those with deep convolutional neural network (CNN) architectures, capture rich hierarchical features that are beneficial for small object detection. By fine-tuning pre-trained models on target datasets, object detectors can quickly adapt to new tasks, using the learned representations and enabling better detection of small objects.

Slicing Aided Hyper Inference: Revolutionary Pipeline for Small Object Detection

SAHI is a pipeline designed specifically for small object detection. It combines slicing-aided inference with slicing-aided fine-tuning. What sets SAHI apart is that the slicing-aided inference step can be applied on top of any object detector without any fine-tuning, which allows for quick adoption without modifying the detector.

Experimental evaluations on the VisDrone and xView datasets show that, without any modifications to the detectors themselves, SAHI increases object detection average precision (AP) by 6.8% for FCOS, 5.1% for VFNet, and 5.3% for TOOD (Task-Aligned One-Stage Object Detection) detectors.

SAHI also introduces a slicing-aided fine-tuning technique that improves detection accuracy further. With fine-tuning, the cumulative gains in AP reach 12.7% for FCOS, 13.4% for VFNet, and 14.5% for TOOD detectors.

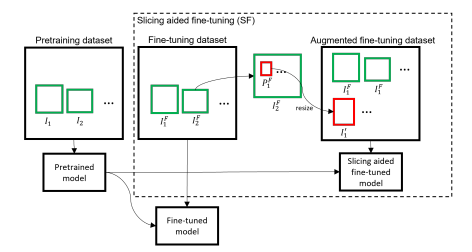

Slicing Aided Fine Tuning

- Popular object detection frameworks like Detectron2, MMDetection, and YOLOv8 provide pre-trained weights on widely-used datasets such as ImageNet and MS COCO.

- Pretraining enables efficient fine-tuning of models using smaller datasets and shorter training durations, eliminating the need for training from scratch with large datasets.

- However, the datasets commonly used for pretraining consist of low-resolution images with relatively large objects covering a significant portion of the image height.

- As a result, pre-trained models perform well for similar inputs but struggle with small object detection in high-resolution images captured by advanced drones and surveillance cameras.

- To overcome this limitation, an approach is proposed to augment the dataset by extracting patches from the fine-tuning dataset images. This technique is depicted in Figure 14.

- Each image is sliced into overlapping patches with dimensions M and N.

- The dimensions M and N are selected from predefined ranges [Mmin, Mmax] and [Nmin, Nmax] as hyperparameters.

- During fine-tuning, these patches are resized while maintaining the aspect ratio.

- The resized patches create augmentation images that aim to increase the relative size of objects compared to the original image.

- The original images are also utilized during the fine-tuning process.

- This utilization of original images helps in detecting large objects effectively.
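The slicing step described above can be sketched in a few lines. This is an illustration of the idea, not SAHI's own implementation: the function names are made up, the patch size is fixed rather than sampled from the [Mmin, Mmax] and [Nmin, Nmax] ranges, and annotations that straddle a patch border are simply clipped.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def slice_boxes(image_w: int, image_h: int, slice_w: int, slice_h: int,
                overlap_w_ratio: float = 0.2,
                overlap_h_ratio: float = 0.2) -> List[Box]:
    """Return overlapping patch rectangles that together cover the image."""
    step_x = slice_w - int(slice_w * overlap_w_ratio)
    step_y = slice_h - int(slice_h * overlap_h_ratio)
    patches = []
    y = 0
    while True:
        y2 = min(y + slice_h, image_h)
        y1 = max(0, y2 - slice_h)      # shift the last row back inside the image
        x = 0
        while True:
            x2 = min(x + slice_w, image_w)
            x1 = max(0, x2 - slice_w)  # shift the last column back inside the image
            patches.append((x1, y1, x2, y2))
            if x2 >= image_w:
                break
            x += step_x
        if y2 >= image_h:
            break
        y += step_y
    return patches


def to_patch_coords(box: Box, patch: Box) -> Optional[Box]:
    """Clip an annotation to a patch and express it in patch-local pixels."""
    px1, py1, px2, py2 = patch
    x1, y1 = max(box[0], px1), max(box[1], py1)
    x2, y2 = min(box[2], px2), min(box[3], py2)
    if x1 >= x2 or y1 >= y2:
        return None  # the annotation does not intersect this patch
    return (x1 - px1, y1 - py1, x2 - px1, y2 - py1)


patches = slice_boxes(1000, 600, slice_w=512, slice_h=512)
print(len(patches))  # 6
```

A 20-pixel object spans 2% of the width of the 1000-pixel image but about 4% of a 512-pixel patch, so once the patch is resized for training the object is relatively larger than it was in the original image.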

Slicing Aided Hyper Inference

- The slicing method is also employed during the inference step, as illustrated in Figure 15. Initially, the original query image is divided into overlapping patches with dimensions M × N. Subsequently, each patch is resized while maintaining the aspect ratio. An independent object detection forward pass is then applied to each overlapping patch.

- Additionally, an optional full-inference step can be performed using the original image to detect larger objects.

- Finally, the prediction results from the overlapping patches, along with the full-inference results, if applicable, are merged back to the original image size using Non-Maximum Suppression (NMS).

- During the NMS process, boxes with Intersection over Union (IoU) ratios higher than a predefined matching threshold are considered matches. For each match, results with detection probabilities lower than a threshold are discarded. This ensures that only the most confident and non-overlapping detections are retained.
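The merge step can be sketched as follows. This is a simplified, class-agnostic greedy NMS written for illustration, not SAHI's own postprocessing code: patch-local boxes are first shifted by the patch origin into full-image coordinates, and then any box that overlaps a higher-scoring box by more than the matching threshold is dropped. The detections and the 0.5 threshold are made-up example values.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, score)


def shift_to_image(box: Box, patch_origin: Tuple[float, float]) -> Box:
    """Convert a patch-local box to full-image coordinates."""
    ox, oy = patch_origin
    x1, y1, x2, y2 = box
    return (x1 + ox, y1 + oy, x2 + ox, y2 + oy)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections: List[Detection], match_threshold: float = 0.5) -> List[Detection]:
    """Keep the highest-scoring box of every group of matching boxes."""
    kept: List[Detection] = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= match_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical case: the same car is detected by two overlapping patches.
patch_a_origin, patch_b_origin = (0, 0), (410, 0)
detections = [
    (shift_to_image((420, 100, 460, 130), patch_a_origin), 0.9),
    (shift_to_image((12, 101, 51, 131), patch_b_origin), 0.6),    # duplicate of the first
    (shift_to_image((200, 300, 230, 320), patch_b_origin), 0.8),  # a different object
]
merged = nms(detections)
print(len(merged))  # 2
```

The duplicate from the second patch overlaps the first detection with an IoU of roughly 0.87, so only the higher-scoring box survives.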

Inference on YOLOv8 using SAHI Technique

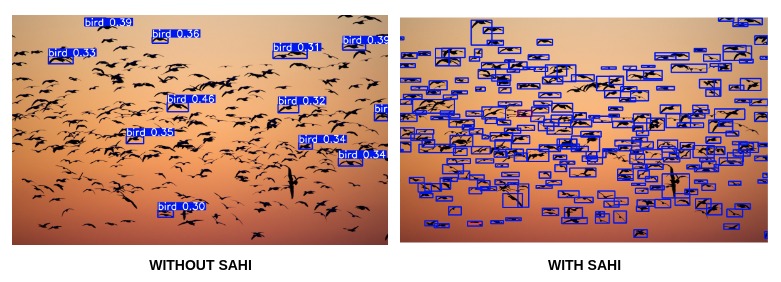

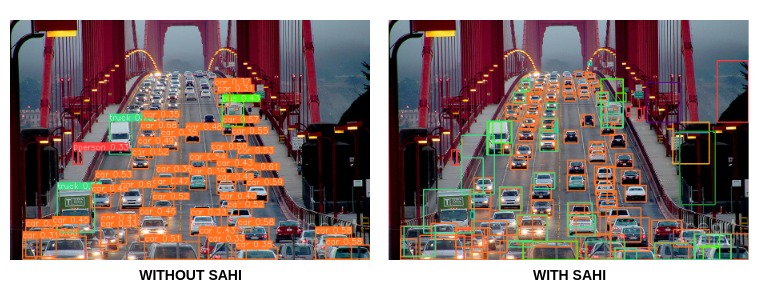

In this final section of the article, a pre-trained YOLOv8-S model has been used to perform object detection inference on images. We will also look at a side-by-side comparison between the results obtained without and with SAHI for small object detection. There is a download link to the notebook that has been used in this experiment.

Model Initialization

    from sahi import AutoDetectionModel

    # yolov8_model_path is assumed to hold the path to the YOLOv8-S weights
    detection_model = AutoDetectionModel.from_pretrained(
        model_type='yolov8',
        model_path=yolov8_model_path,
        confidence_threshold=0.3,  # drop detections scored below 0.3
        device="cuda:0",  # or 'cpu'
    )

In the above code snippet, a detection_model is initialized. In this experiment, ‘model_type’ is yolov8, ‘model_path’ points to the location where the model weights have been saved, and ‘confidence_threshold’ is set to 0.3. If you have a machine with an NVIDIA GPU, you can enable CUDA acceleration by setting the ‘device’ flag to ‘cuda:0’; otherwise, set it to ‘cpu’, but keep in mind that inference will be slower.

Perform Sliced Inference

Slice parameters such as ‘slice_height’, ‘slice_width’, ‘overlap_height_ratio’, and ‘overlap_width_ratio’ need to be set based on the size of the input image. This is mostly a trial-and-error process, as there are no default values that work best with all types of images. As the number of slices increases, more computing power is required. This is where CUDA acceleration helps the most.
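To get a feel for how these parameters drive the cost, the sketch below estimates how many slices an image is cut into. It assumes the common scheme in which the step between slices is the slice size minus the overlap and the last slice is shifted back to stay inside the image; the helper names and the 1920×1080 image size are illustrative assumptions.

```python
import math


def slices_per_axis(length: int, slice_size: int, overlap_ratio: float) -> int:
    """Number of slices needed to cover one image axis."""
    if length <= slice_size:
        return 1
    step = slice_size - int(slice_size * overlap_ratio)
    return math.ceil((length - slice_size) / step) + 1


def slice_count(image_w: int, image_h: int, slice_w: int, slice_h: int,
                overlap_w_ratio: float, overlap_h_ratio: float) -> int:
    """Total number of slices, i.e. detector forward passes, for one image."""
    return (slices_per_axis(image_w, slice_w, overlap_w_ratio)
            * slices_per_axis(image_h, slice_h, overlap_h_ratio))


# Hypothetical 1920x1080 image with 20% overlap in both directions.
for size in (1024, 512, 256):
    print(size, slice_count(1920, 1080, size, size, 0.2, 0.2))
# 1024 6
# 512 15
# 256 60
```

Halving the slice size roughly quadruples the number of forward passes, which is the trade-off to keep in mind while tuning.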

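    from sahi.predict import get_sliced_prediction

    # Slice the image into overlapping 512x512 patches, run the detector
    # on each patch, and merge the per-patch predictions
    result = get_sliced_prediction(
        "demo_data/small-vehicles1.jpeg",
        detection_model,
        slice_height=512,
        slice_width=512,
        overlap_height_ratio=0.2,
        overlap_width_ratio=0.2,
    )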

Visualize Predicted Objects

Using the code below, you can view the objects detected in the input image and export the output image with the detections drawn on it. The snippet reads an input image, converts it from BGR to RGB, and uses the visualize_object_predictions() function to render the output. Object labels have been hidden for better visualization using the ‘hide_labels’ parameter.

    import cv2
    from IPython.display import Image
    from numpy import asarray
    from sahi.utils.cv import visualize_object_predictions

    # Read the image and convert OpenCV's BGR channel order to RGB.
    # Note: this should be the same image that was passed to get_sliced_prediction.
    img = cv2.imread("demo_data/cars.jpg", cv2.IMREAD_UNCHANGED)
    img_converted = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    numpydata = asarray(img_converted)

    # Draw the predicted boxes and export the annotated image
    visualize_object_predictions(
        numpydata,
        object_prediction_list=result.object_prediction_list,
        hide_labels=True,
        output_dir='/content/demo_data',
        file_name='result',
        export_format='png',
    )

    # Display the exported image (relative path assumes the working directory is /content)
    Image('demo_data/result.png')
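Besides looking at the picture, it can help to count what was detected. The sketch below tallies predictions per class name. It assumes each prediction exposes `category.name` and `score.value`, as SAHI's object predictions do; the `count_by_category` helper is hypothetical, and the stand-in objects exist only so the snippet runs without SAHI installed.

```python
from collections import Counter
from types import SimpleNamespace


def count_by_category(object_predictions, min_score: float = 0.0) -> Counter:
    """Count predictions per class name, ignoring those scored below min_score."""
    return Counter(
        p.category.name for p in object_predictions if p.score.value >= min_score
    )


def _fake_prediction(name: str, score: float) -> SimpleNamespace:
    # Stand-in for a SAHI prediction object, for demonstration only.
    return SimpleNamespace(
        category=SimpleNamespace(name=name),
        score=SimpleNamespace(value=score),
    )


# With SAHI installed, pass result.object_prediction_list instead.
fake_predictions = [
    _fake_prediction("car", 0.91),
    _fake_prediction("car", 0.45),
    _fake_prediction("truck", 0.62),
]
print(count_by_category(fake_predictions, min_score=0.5))
```

Running the same count on the standard and the sliced predictions gives a quick numeric view of how many extra objects slicing recovers.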

Inference Results

Aren’t these experimental results crazy? To explore the SAHI inference pipeline further, see the SAHI repository listed in the References.

Conclusions

The introduction of the Slicing Aided Hyper Inference (SAHI) framework marks a significant milestone in small object detection. With its innovative pipeline, seamless integration with any object detector, and remarkable performance improvements, SAHI has changed how we detect and identify small objects.

Through extensive experimental evaluations on the VisDrone and xView datasets, SAHI has demonstrated its effectiveness in enhancing object detection average precision (AP) across various detectors without needing modifications. The slicing-aided fine-tuning technique further boosts detection accuracy, resulting in substantial gains in AP. Its versatility and ability to adapt to different scenarios make it a valuable tool in the field of computer vision.

You are invited to access the accompanying notebook used for conducting the experiments. The notebook can be downloaded by following the provided link. This resource offers an opportunity to delve deeper into the implementation details and further explore the proposed techniques in small object detection.

As we move forward, the impact of SAHI on the field of object detection will continue to unfold, empowering researchers and practitioners to push the boundaries of what is possible in visual perception. The future of small object detection has arrived, and it is bright with the promise of SAHI leading the way. Have you ever worked with small object detection before? If so, please share your experience and comments below!

References

Research Paper: Slicing Aided Hyper Inference and Fine Tuning for Small Object Detection

GitHub: SAHI – Repository
