Semantic segmentation has heavily influenced the development of advanced driver assistance systems (ADAS) and has been pivotal in the rapid progress of autonomous vehicle technology. An ADAS stack is built from multiple complex components, and lane detection is one of the essential ones: no vehicle can navigate roads without it. Lanes are markings on the road that help differentiate between the drivable and non-drivable areas. Today there are multiple lane detection algorithms, each with its own strengths and weaknesses.

In this research article, we will fine-tune the well-known SegFormer model (Enze Xie, Wenhai Wang, Zhiding Yu et al.), available through HuggingFace, on the Berkeley Deep Drive dataset to perform lane detection on POV videos of vehicles. The experiment also covers night driving scenarios, which are harder to handle.

The highlight of this article is the set of inference results from the fine-tuned HuggingFace SegFormer model, presented in the Experimental Results section near the end.

Role of Lane Detection in ADAS

In general, lane detection has profoundly impacted ADAS systems. Let’s explore a few of the areas where it plays a role:

- Lane Keeping: Beyond warning systems, lane detection is integral to Lane Keeping Assist (LKA) technologies, which not only alert the driver but can also take corrective actions, such as gentle steering interventions, to keep the vehicle centered in its lane.

- Traffic Flow Analysis: Lane detection enables the vehicle to understand the road geometry, which is critical in complex driving scenarios such as merging and lane changing, and is essential for adaptive cruise control systems that adjust speed based on the surrounding traffic flow.

- Autonomous Navigation: For semi-autonomous or autonomous vehicles, lane detection is a fundamental component that allows the vehicle to navigate and maintain its position within the road infrastructure. It’s essential for route planning and decision-making processes in self-driving algorithms.

- Driver Comfort: Systems that use lane detection can take over some of the driving tasks, reducing driver fatigue, and enabling a more comfortable driving experience, especially during long journeys on highways.

- Road Condition Monitoring: Lane detection systems can also contribute to monitoring road conditions. For instance, if the system consistently detects poor lane markings or none at all, this information can be fed back for infrastructure maintenance and improvements.

Berkeley Deep Drive Dataset

The Berkeley Deep Drive 100K (BDD100K) dataset is a comprehensive collection of diverse driving video sequences gathered from various urban and suburban locations. It is primarily designed to facilitate research and development in autonomous driving. The dataset is notably large, encompassing approximately 100,000 videos, each of 40 seconds in length, and covering a wide range of driving scenarios, weather conditions, and times of day. Each video in the BDD100K dataset is accompanied by a rich set of frame-level annotations. These annotations include labels for lanes, drivable areas, objects (such as vehicles, pedestrians, and traffic signs), and full-frame instance segmentation. The diversity in the dataset is crucial for developing robust lane detection algorithms, as it exposes models to various lane markings, road types, and environmental conditions.

In the context of this research work, a 10% sample of the BDD100K dataset was used for fine-tuning the SegFormer model. This subsampling approach allows for a more manageable dataset size while maintaining a representative subset of the overall diversity present in the full dataset. The 10% sample includes 10,000 images, selectively chosen to represent the dataset’s comprehensive range of driving conditions and scenarios.

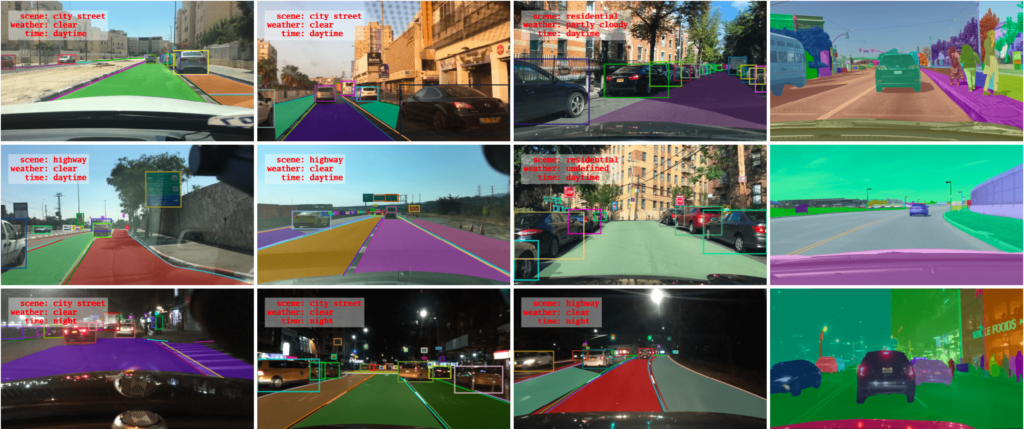

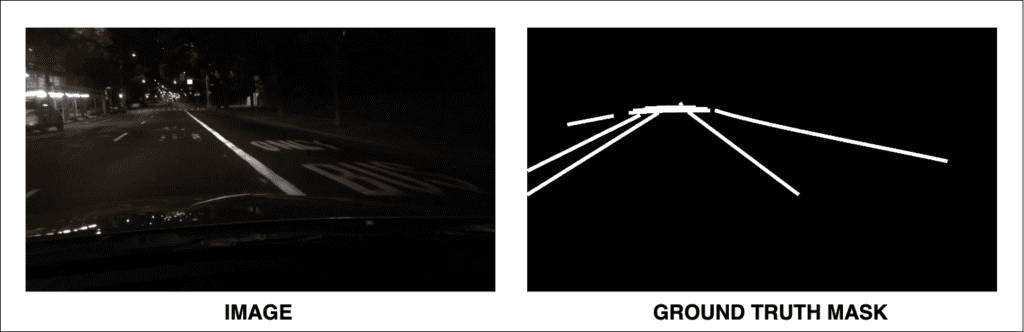

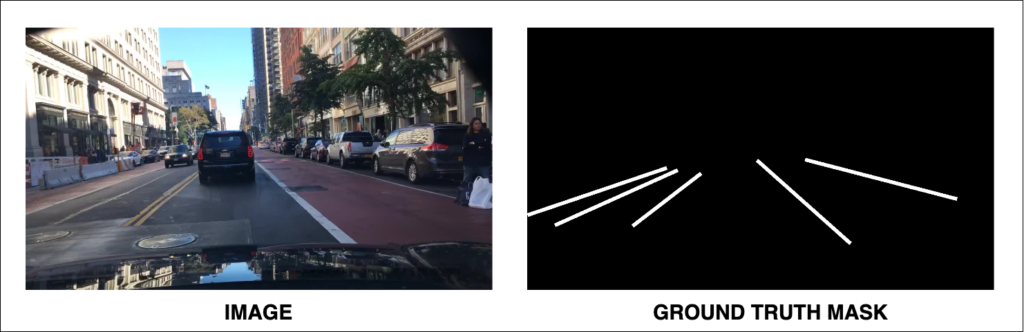

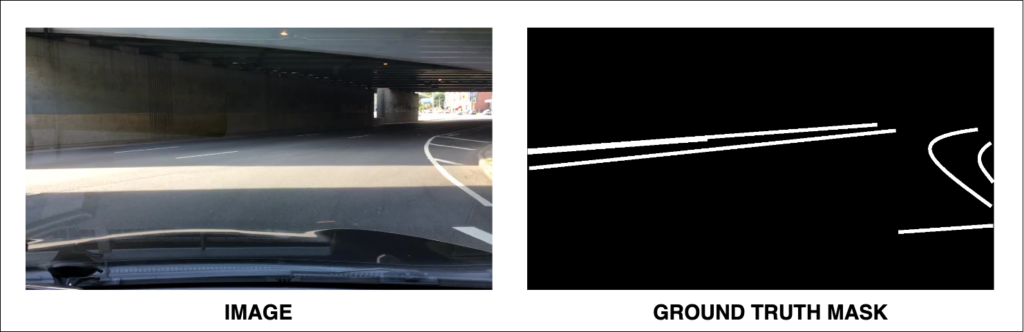

Let’s have a look at a few sample images and annotation masks from the sample dataset:

From the illustrations shown above, it can be observed that each image in the BDD dataset has a ground truth binary mask that supports the task of lane detection. This can be treated as a 2-class segmentation problem, where the lanes form one class and the background forms the other. In this case, there are 7000 images and masks in the train set, and about 3000 images and masks in the validation set.

Alright, let’s build the training pipeline for this experiment.

Code Walkthrough

In this section, we will explore the various processes involved in fine-tuning the HuggingFace SegFormer Model (this post also explains the internal architecture) using the BDD dataset. You can download the code in this article by clicking on the Download Code button.

Prerequisites

The primary purpose of the ‘BDDDataset’ class is to efficiently load and preprocess images and their corresponding segmentation masks from a specified directory. For each sample, it performs the following steps:

- Loads an image and its corresponding mask using their paths.

- The image is converted to RGB format, while the mask is converted to grayscale (single channel).

- The mask is then transformed into a binary format, where non-zero pixels are considered part of the lane (assuming lane segmentation task).

- The mask is resized to match the image dimensions and then converted to a tensor.

- Finally, the mask is also thresholded back to binary values and converted to a LongTensor, suitable for segmentation tasks in PyTorch.

import os

import numpy as np
from PIL import Image
import torchvision.transforms as TF
from torch.utils.data import Dataset


class BDDDataset(Dataset):
    def __init__(self, images_dir, masks_dir, transform=None):
        self.images_dir = images_dir
        self.masks_dir = masks_dir
        self.transform = transform
        # Sort for a deterministic order; each mask shares its image's base name
        self.images = sorted(img for img in os.listdir(images_dir) if img.endswith('.jpg'))
        self.masks = [img.replace('.jpg', '.png') for img in self.images]

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        image_path = os.path.join(self.images_dir, self.images[idx])
        mask_path = os.path.join(self.masks_dir, self.masks[idx])
        image = Image.open(image_path).convert("RGB")
        mask = Image.open(mask_path).convert('L')  # Convert mask to grayscale

        # Convert mask to binary format with 0 and 1 values
        mask = np.array(mask)
        mask = (mask > 0).astype(np.uint8)  # Assuming non-zero pixels are lanes

        # Convert to PIL Image for consistency in transforms
        mask = Image.fromarray(mask)

        if self.transform:
            image = self.transform(image)

        # Resize with nearest-neighbour interpolation so the mask stays binary
        mask = TF.functional.resize(img=mask, size=[360, 640],
                                    interpolation=TF.InterpolationMode.NEAREST)
        # to_tensor scales 8-bit values by 1/255, so lane pixels become 1/255 here
        mask = TF.functional.to_tensor(mask)
        mask = (mask > 0).long()  # Threshold back to binary and convert to LongTensor

        return image, mask
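To see why the mask is thresholded twice, here is a minimal NumPy sketch. The 2×3 grayscale mask is made up for illustration (it is not taken from BDD100K), and the division by 255 only mimics the scaling that `to_tensor` applies to 8-bit images.

```python
import numpy as np

# Hypothetical grayscale mask: 0 is background, anything else is a lane pixel
gray_mask = np.array([[0, 255, 0],
                      [0, 128, 64]], dtype=np.uint8)

# Step 1: binarize to {0, 1}
binary_mask = (gray_mask > 0).astype(np.uint8)

# Step 2: an 8-bit image converted to a float tensor is scaled by 1/255,
# so the lane value 1 becomes roughly 0.0039 instead of 1.0
scaled_mask = binary_mask.astype(np.float32) / 255.0

# Step 3: threshold again to recover integer class indices
class_mask = (scaled_mask > 0).astype(np.int64)

print(binary_mask.tolist())  # [[0, 1, 0], [0, 1, 1]]
print(class_mask.tolist())   # [[0, 1, 0], [0, 1, 1]]
```

Without the second threshold, casting the scaled mask straight to an integer type would turn every lane pixel into 0.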

Dataloader Definition and Initialization

Using the previously created ‘BDDDataset’ class, we need to define and initialize dataloaders. Two separate dataloaders must be created, one for the train set and the other for the validation set. Both datasets share the same image transformations. The code snippet below can be used for this:

from torch.utils.data import DataLoader

# Define the appropriate transformations
transform = TF.Compose([
    TF.Resize((360, 640)),
    TF.ToTensor(),
    TF.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

# Create the datasets
train_dataset = BDDDataset(images_dir='deep_drive_10K/train/images',
                           masks_dir='deep_drive_10K/train/masks',
                           transform=transform)

valid_dataset = BDDDataset(images_dir='deep_drive_10K/valid/images',
                           masks_dir='deep_drive_10K/valid/masks',
                           transform=transform)

# Create the data loaders (only the training data is shuffled)
train_loader = DataLoader(train_dataset, batch_size=4, shuffle=True, num_workers=6)
valid_loader = DataLoader(valid_dataset, batch_size=4, shuffle=False, num_workers=6)

Let’s have a look at the transformations used in this pipeline.

- TF.Resize((360, 640)): Resizes the images to a uniform size of 360×640 pixels.

- TF.ToTensor(): Converts the images to PyTorch tensors.

- TF.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]): Normalizes the images using the specified mean and standard deviation, which are typically derived from the ImageNet dataset. This step is crucial for models pre-trained on ImageNet.
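The normalization step is plain per-channel arithmetic: subtract the channel mean, then divide by the channel standard deviation. The NumPy sketch below reproduces that arithmetic on a hypothetical single mid-gray pixel; the helper name `normalize` is only for illustration and is not part of the pipeline.

```python
import numpy as np

# ImageNet channel statistics, as passed to TF.Normalize in the pipeline
mean = np.array([0.485, 0.456, 0.406], dtype=np.float32)
std = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def normalize(image_chw):
    """Normalize a float image of shape (3, H, W) with values in [0, 1]."""
    return (image_chw - mean[:, None, None]) / std[:, None, None]


# Hypothetical 1x1 "image": a mid-gray pixel after ToTensor scaling
pixel = np.full((3, 1, 1), 0.5, dtype=np.float32)
print(normalize(pixel).ravel())  # roughly [0.066 0.196 0.418]
```

The same gray value maps to a different number in each channel, because each channel is centered and scaled with its own statistics.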

Based on your compute resources, you may wish to adjust parameters such as ‘batch_size’ and ‘num_workers’.

HuggingFace SegFormer 🤗 Model Initialization

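from transformers import SegformerForSemanticSegmentation

# Load the pre-trained model
model = SegformerForSemanticSegmentation.from_pretrained('nvidia/segformer-b2-finetuned-ade-512-512')

# Adjust the number of classes for BDD dataset
# Note: this updates the config only. The 150-channel ADE20K classification head
# loaded above is not resized; lane labels 0 and 1 use its first two channels.
model.config.num_labels = 2  # Replace with the actual number of classes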

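The code snippet above initializes the SegFormer-b2 model from the HuggingFace library of pre-trained semantic segmentation models. Since we are trying to segment the lanes from the road, this is treated as a 2-class segmentation problem. Note that assigning `model.config.num_labels` after loading changes only the configuration: the classification head keeps the 150 output channels of the ADE20K checkpoint, and the background and lane labels are trained on the first two. To get a true 2-channel head, `from_pretrained` accepts `num_labels=2` together with `ignore_mismatched_sizes=True`, in which case the inference code must build the model the same way before loading the fine-tuned weights.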

# Check for CUDA acceleration
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)

While you’re at it, check if your deep learning environment supports CUDA acceleration using Nvidia GPUs. For this experiment, an Nvidia RTX 3080 Ti with 12GB vRAM was used for training.

Training and Validation

In this section, let’s have a look at the training and validation pipeline required for fine-tuning this model. But before that, how would you evaluate the performance of this model?

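For a semantic segmentation problem like this one, Intersection over Union (IoU) is the primary evaluation metric. For each class, it is the number of pixels where the predicted mask and the ground truth (GT) mask agree on that class, divided by the number of pixels where either of them contains it, so it tells us how much of the predicted mask overlaps the GT mask.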

import numpy as np
from sklearn.metrics import jaccard_score


def mean_iou(preds, labels, num_classes):
    # Flatten predictions and labels
    preds_flat = preds.view(-1)
    labels_flat = labels.view(-1)

    # Check that the number of elements in the flattened predictions
    # and labels are equal
    if preds_flat.shape[0] != labels_flat.shape[0]:
        raise ValueError(f"Predictions and labels have mismatched shapes: "
                         f"{preds_flat.shape} vs {labels_flat.shape}")

    # Calculate the Jaccard score for each class
    iou = jaccard_score(labels_flat.cpu().numpy(), preds_flat.cpu().numpy(),
                        average=None, labels=list(range(num_classes)))

    # Return the mean IoU
    return np.mean(iou)

The above function `mean_iou` does the following things:

- Flattening Predictions and Labels: preds and labels are flattened using the .view(-1) method. This reshaping is necessary to compare each prediction with its corresponding label on a pixel-wise basis.

- Shape Validation: The function checks if the number of elements in preds_flat and labels_flat are equal. This is a crucial check to ensure that each prediction corresponds to a label.

- Jaccard Score Calculation: Uses the `jaccard_score` function from scikit-learn (`sklearn.metrics`) to calculate the Jaccard score (IoU) between the flattened predictions and labels. It is calculated for each class separately, as indicated by average=None and labels=range(num_classes).

- Mean IoU Computation: The Mean IoU is calculated by taking the average of the IoU scores across all classes. This provides a single performance metric that summarizes how well the model’s predictions align with the ground truth across all classes.
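To make the metric concrete, here is a small NumPy sketch that computes the per-class IoU by hand on a made-up 2×4 mask. The helper `per_class_iou` is hypothetical and only illustrates the definition; the article's pipeline relies on `jaccard_score` instead.

```python
import numpy as np


def per_class_iou(preds, labels, num_classes):
    """Return the IoU of each class for two integer arrays of equal shape."""
    ious = []
    for cls in range(num_classes):
        pred_c = preds == cls
        label_c = labels == cls
        intersection = np.logical_and(pred_c, label_c).sum()
        union = np.logical_or(pred_c, label_c).sum()
        # A class absent from both arrays has an empty union; report 0 here
        ious.append(intersection / union if union > 0 else 0.0)
    return np.array(ious)


labels = np.array([[0, 0, 1, 1],
                   [0, 0, 1, 1]])
preds = np.array([[0, 0, 0, 1],
                  [0, 1, 1, 1]])

ious = per_class_iou(preds, labels, num_classes=2)
print(ious)         # [0.6 0.6]
print(ious.mean())  # 0.6
```

Each class has 3 overlapping pixels out of a union of 5, so both IoU values are 0.6 even though 6 of the 8 pixels (75%) are labelled correctly. This is why IoU is a stricter measure than pixel accuracy.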

import copy

# AdamW is assumed to come from torch.optim; the original import is not shown
from torch.optim import AdamW
from transformers import get_scheduler

# Define the optimizer
optimizer = AdamW(model.parameters(), lr=5e-5)

# Define the learning rate scheduler
num_epochs = 30
num_training_steps = num_epochs * len(train_loader)
lr_scheduler = get_scheduler(
    "linear",
    optimizer=optimizer,
    num_warmup_steps=0,
    num_training_steps=num_training_steps
)

# Placeholder for best mean IoU and best model weights
best_iou = 0.0
best_model_wts = copy.deepcopy(model.state_dict())

For model optimization, the AdamW optimizer is used with a learning rate (`lr`) of 5e-5, which a linear scheduler with no warmup steps decays to zero over the course of training. In this experiment, the fine-tuning process ran for 30 epochs (`num_epochs`).
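As a rough illustration of what a linear schedule with zero warmup does to the learning rate, the sketch below re-implements the rule in plain Python. The function `linear_lr` is a hypothetical stand-in written for this example, not the scheduler object used in training, and the step counts assume the 7000 training images and batch size of 4 mentioned earlier.

```python
def linear_lr(step, base_lr, num_training_steps, num_warmup_steps=0):
    """Learning rate at a given optimizer step: linear warmup, then linear decay."""
    if step < num_warmup_steps:
        return base_lr * step / max(1, num_warmup_steps)
    remaining = max(0, num_training_steps - step)
    return base_lr * remaining / max(1, num_training_steps - num_warmup_steps)


# 7000 training images at batch size 4 -> 1750 steps per epoch, 30 epochs
steps_per_epoch = 7000 // 4
total_steps = 30 * steps_per_epoch  # 52500 optimizer steps

for epoch in (0, 15, 30):
    step = epoch * steps_per_epoch
    print(epoch, linear_lr(step, 5e-5, total_steps))
```

The rate starts at 5e-5, is halved by the middle of training, and reaches zero at the final step, so later epochs make progressively smaller weight updates.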

import torch.nn.functional as F
from tqdm import tqdm

for epoch in range(num_epochs):
    model.train()
    train_iterator = tqdm(train_loader, desc=f"Epoch {epoch + 1}/{num_epochs}", unit="batch")

    for batch in train_iterator:
        images, masks = batch
        images = images.to(device)
        masks = masks.to(device).long()  # Ensure masks are LongTensors

        # Remove the channel dimension from the masks tensor
        masks = masks.squeeze(1)  # [batch, 1, H, W] -> [batch, H, W]

        optimizer.zero_grad()

        # Pass pixel_values and labels to the model
        outputs = model(pixel_values=images, labels=masks, return_dict=True)
        loss = outputs["loss"]

        loss.backward()
        optimizer.step()
        lr_scheduler.step()

        # Upsample the logits to the mask resolution. The result is not used further
        # in this loop; the model already upsamples internally when computing the loss.
        outputs = F.interpolate(outputs["logits"], size=masks.shape[-2:], mode="bilinear", align_corners=False)

        train_iterator.set_postfix(loss=loss.item())

The code snippet above shows the training loop for the fine-tuning process. For each epoch, the loop iterates over the training dataloader `train_loader`, which provides batches of lane images and their corresponding segmentation masks. Each batch of images and masks is moved to the compute device (such as a GPU, referred to as `device`). The mask tensor’s channel dimension is removed to match the label format the model expects.

The model then performs a forward pass: the `pixel_values` parameter receives the images, and the `labels` parameter receives the masks. The model outputs include a loss value (for training) and logits (raw predictions). The loss is backpropagated to compute gradients, the optimizer uses them to update the model’s weights, and the learning rate scheduler `lr_scheduler` then lowers the learning rate for the next step. Finally, the logits, which SegFormer produces at a quarter of the input resolution, are resized to the size of the masks using bilinear interpolation. In the training loop the resized logits are not used further, but the same resizing is what allows the predictions to be compared with the ground truth masks during validation.
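The resizing step can be tried in isolation. The sketch below uses a random tensor as a stand-in for the model output, assuming logits at one quarter of a 360×640 input (90×160) and two output channels; no SegFormer model is loaded here.

```python
import torch
import torch.nn.functional as F

# Stand-in for SegFormer logits: [batch, classes, H/4, W/4] for a 360x640 input
logits = torch.randn(4, 2, 90, 160)
masks = torch.randint(0, 2, (4, 360, 640))

# Upsample the logits to the mask resolution, then pick the best class per pixel
upsampled = F.interpolate(logits, size=masks.shape[-2:], mode="bilinear", align_corners=False)
preds = upsampled.argmax(dim=1)

print(upsampled.shape)  # torch.Size([4, 2, 360, 640])
print(preds.shape)      # torch.Size([4, 360, 640])
```

Interpolating the logits before the argmax, rather than resizing the predicted class map afterwards, keeps the upsampling smooth and avoids blocky lane edges.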

    # Evaluation loop for each epoch (this block sits inside the `for epoch` loop)
    model.eval()
    total_iou = 0
    num_batches = 0
    valid_iterator = tqdm(valid_loader, desc="Validation", unit="batch")

    for batch in valid_iterator:
        images, masks = batch
        images = images.to(device)
        masks = masks.to(device).long()

        with torch.no_grad():
            # Get the logits from the model and apply argmax to get the predictions
            outputs = model(pixel_values=images, return_dict=True)
            outputs = F.interpolate(outputs["logits"], size=masks.shape[-2:], mode="bilinear", align_corners=False)
            preds = torch.argmax(outputs, dim=1)
            preds = torch.unsqueeze(preds, dim=1)

        preds = preds.view(-1)
        masks = masks.view(-1)

        # Compute IoU
        iou = mean_iou(preds, masks, model.config.num_labels)
        total_iou += iou
        num_batches += 1
        valid_iterator.set_postfix(mean_iou=iou)

    epoch_iou = total_iou / num_batches
    print(f"Epoch {epoch+1}/{num_epochs} - Mean IoU: {epoch_iou:.4f}")

    # Check for improvement
    if epoch_iou > best_iou:
        print(f"Validation IoU improved from {best_iou:.4f} to {epoch_iou:.4f}")
        best_iou = epoch_iou
        best_model_wts = copy.deepcopy(model.state_dict())
        torch.save(best_model_wts, 'best_model.pth')

For the validation aspect of this process, the model is set to evaluation mode (model.eval()), which disables certain layers and behaviors (like dropout) that are only used during training. In this case, for each batch in the validation dataset, the model generates predictions. These predictions are resized and processed to calculate the Intersection over Union (IoU) metric. The mean IoU for each batch is calculated and aggregated to derive an average IoU for the epoch. After each epoch, the IoU is compared with the best IoU obtained in previous epochs. If the current IoU is higher, it indicates an improvement, and the model’s state is saved as the best model so far.
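Averaging the per-batch mean IoU, as done above, gives every batch the same weight regardless of how many lane pixels it contains. A common alternative, shown here only as a sketch and not as the method used in this experiment, is to accumulate a confusion matrix over the whole validation set and compute the IoU once at the end. The helper names and the two tiny batches are hypothetical.

```python
import numpy as np


def update_confusion(conf, preds, labels, num_classes):
    """Add one batch of integer predictions and labels to a confusion matrix."""
    idx = labels.reshape(-1) * num_classes + preds.reshape(-1)
    conf += np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    return conf


def iou_from_confusion(conf):
    """Per-class IoU: true positives / (row sum + column sum - true positives)."""
    tp = np.diag(conf).astype(np.float64)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp
    return np.divide(tp, union, out=np.zeros_like(tp), where=union > 0)


conf = np.zeros((2, 2), dtype=np.int64)
# Two hypothetical batches given as (preds, labels)
batches = [
    (np.array([0, 0, 0, 1]), np.array([0, 0, 0, 1])),  # perfect prediction
    (np.array([1, 1, 0, 0]), np.array([1, 0, 0, 0])),  # one false lane pixel
]
for preds, labels in batches:
    conf = update_confusion(conf, preds, labels, num_classes=2)

print(conf)
print(iou_from_confusion(conf))
```

On real data the two approaches usually give close but not identical numbers, so it is worth stating which one a reported IoU refers to.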

Video Inference

Alright, we now have a fully fine-tuned SegFormer that works specifically for lane detection in autonomous vehicles. But, how do we view the results? In this section, let’s explore the inference component of this experiment.

Initially, the pre-trained SegFormer weights have to be loaded. The number of classes also needs to be defined. This has been done using `model.config.num_labels=2`, since we are dealing with 2 classes.

From here, the `best_model.pth` weights file exported by the previous code snippet needs to be loaded as well. This contains the best trained weights for the fine-tuned model. The model has to be set to evaluation mode.

import cv2
import torch
from transformers import SegformerForSemanticSegmentation

# Load the trained model
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = SegformerForSemanticSegmentation.from_pretrained('nvidia/segformer-b2-finetuned-ade-512-512')

# Replace with the actual number of classes
model.config.num_labels = 2

# Load the state from the fine-tuned model and set to model.eval() mode
# map_location lets a checkpoint saved on a GPU load on a CPU-only machine
model.load_state_dict(torch.load('segformer_inference-360640-b2/best_model.pth', map_location=device))
model.to(device)
model.eval()

# Video inference
cap = cv2.VideoCapture('test-footages/test-2.mp4')
fourcc = cv2.VideoWriter_fourcc(*'XVID')
# Write at a fixed 20 FPS with the same frame size as the input video
frame_size = (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))
out = cv2.VideoWriter('output_video.avi', fourcc, 20.0, frame_size)

OpenCV is used to load and read the video, and the `cv2.VideoWriter` class is used to export the final inference video with the masks overlaid on the source footage.

# Perform transformations
data_transforms = TF.Compose([
    TF.ToPILImage(),
    TF.Resize((360, 640)),
    TF.ToTensor(),
    TF.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

One very important thing to keep in mind is that the same `transforms` used to preprocess the dataset must also be used at the inference stage. Each frame from the video undergoes a series of transformations to match the input format expected by the model: resizing, tensor conversion, and normalization.

# Inference loop
while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        break

    # Preprocess the frame. OpenCV decodes frames as BGR, while the model was
    # trained on RGB images, so convert the channel order first.
    rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    input_tensor = data_transforms(rgb_frame).unsqueeze(0).to(device)

    with torch.no_grad():
        outputs = model(pixel_values=input_tensor, return_dict=True)
        outputs = F.interpolate(outputs["logits"], size=(360, 640), mode="bilinear", align_corners=False)
        preds = torch.argmax(outputs, dim=1)
        preds = torch.unsqueeze(preds, dim=1)
        # argmax already yields class indices, so any non-zero index counts as lane
        predicted_mask = (preds > 0).float()

    # Resize the mask to the original frame size
    mask_np = predicted_mask.cpu().squeeze().numpy()
    mask_resized = cv2.resize(mask_np, (frame.shape[1], frame.shape[0]))

    # Create a 3-channel mask with only the green channel set
    mask_rgb = np.zeros((mask_resized.shape[0], mask_resized.shape[1], 3), dtype=np.uint8)
    mask_rgb[:, :, 1] = (mask_resized * 255).astype(np.uint8)

    # Post-processing for mask smoothening
    # Remove noise
    kernel = np.ones((3, 3), np.uint8)
    opening = cv2.morphologyEx(mask_rgb, cv2.MORPH_OPEN, kernel, iterations=2)
    # Close small holes
    closing = cv2.morphologyEx(opening, cv2.MORPH_CLOSE, kernel, iterations=2)

    # Overlay the mask on the frame
    blended = cv2.addWeighted(frame, 0.65, closing, 0.6, 0)

    # Write the blended frame to the output video
    out.write(blended)

cap.release()
out.release()
cv2.destroyAllWindows()

During the inference loop, each preprocessed frame is fed into the model. The model outputs logits, which are interpolated to the 360×640 input resolution and passed through an argmax function to obtain a class index for every pixel. A thresholding operation converts these indices into a binary mask, which is then resized to the original frame size to highlight the detected lanes.

For better visualization, the binary mask is converted into an RGB format with green color for the lanes. Some post-processing steps like noise removal and hole filling are applied to smoothen the mask. This mask is then blended with the original frame to create a visual overlay of the detected lanes.

Finally, this blended frame is written into the output video file, and the script continues this process for all frames in the input video and closes all file streams. This results in an output video where the detected lanes are visually highlighted, demonstrating the model’s ability to perform lane detection in real-world scenarios.
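The overlay step is a weighted sum of the frame and the green mask, clipped to the 8-bit range. The NumPy sketch below approximates that arithmetic on a hypothetical 2×2 frame; the function `blend_overlay` is written for this example and is not a drop-in replacement for the OpenCV call.

```python
import numpy as np


def blend_overlay(frame, mask, frame_weight=0.65, mask_weight=0.6):
    """Blend a binary lane mask into a uint8 frame of shape (H, W, 3) as a green overlay."""
    overlay = np.zeros_like(frame)
    overlay[:, :, 1] = mask.astype(np.uint8) * 255  # green channel only
    blended = frame.astype(np.float32) * frame_weight + overlay.astype(np.float32) * mask_weight
    # The weights sum to more than 1, so clip to the valid 8-bit range
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)


frame = np.full((2, 2, 3), 200, dtype=np.uint8)  # hypothetical bright gray frame
mask = np.array([[1, 0],
                 [0, 0]])

result = blend_overlay(frame, mask)
print(result[0, 0])  # lane pixel: [130 255 130]
print(result[1, 1])  # background pixel: [130 130 130]
```

With these weights, every pixel is dimmed to 65% of its brightness, while lane pixels additionally receive a green boost that saturates at 255 on bright frames.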

Experimental Results

Now for the most interesting part of this article: the inference results! In this final section, let’s have a look at the inference results from the fine-tuned HuggingFace SegFormer model for lane detection.

From the inference results shown above, we can conclude that SegFormer works well for lane detection on the test footage. As mentioned in this article, the SegFormer-b2 model was fine-tuned on a sub-sample of the massive BDD dataset for just 30 epochs. To engage hands-on with the code, work through the Code Walkthrough section above.

For more accurate results, it is recommended to choose the larger SegFormer-b5 model and possibly train it for more epochs on the entire dataset.

Conclusions

In this experiment, we have demonstrated the application of a fine-tuned SegFormer model to the task of lane detection, leveraging the rich and diverse data offered by the BDD (Berkeley DeepDrive) Lane Detection Dataset. This approach underlines the efficacy of fine-tuning and the robustness of the SegFormer architecture in handling complex semantic segmentation tasks in autonomous driving and road safety, even in very dark night-time scenes.

The resulting output, where detected lanes are overlaid on the original video frames, not only serves as a proof of concept but also showcases the potential of this technology in real-time applications. The smoothness and accuracy of the lane detection, visualized in the overlaid green mask, are testaments to the model’s effectiveness. In the end, it is safe to say that even though there are multiple cutting-edge lane detection algorithms, fine-tuning a model like SegFormer gives great results!

We’d love to hear your thoughts on fine-tuning SegFormer for improved lane detection. Please share your comments and insights below – your perspectives are invaluable to us!

References

HuggingFace SegFormer: https://huggingface.co/docs/transformers/model_doc/segformer

Berkeley Deep Drive Dataset: https://deepdrive.berkeley.edu/