ROS (Robot Operating System) is more than a decade old open-source robotics middleware software, initially developed by two PhD students from Stanford University. Fast-forward to 2024, ROS has evolved into a rich ecosystem of utilities, algorithms, and sample applications, transcending its origins as middleware software, and is now used by millions of people and thousands of companies worldwide.

ROS 2 was announced at ROSCon 2014 to address challenges like security, reliability in diverse environments, and support for large-scale embedded systems, prompting a complete rebuild of ROS1 from the ground up. Currently, one of the stable releases of ROS 2 is Humble Hawksbill released in May 2022, however, the latest ROS 2 version is Jazzy Jalisco released in May 2024. ROS 2 has support for robotics perception, planning, control as well as simulations. Thus, apart from hardware design and setup, everything from hardware integration to building a complete autonomous stack can now be accomplished single-handedly using ROS. So, as a robotics developer,it’s very important to understand how ROS works and how to use it.

This is the 4th article of the robotics article series. In the previous articles we discussed Monocular SLAM using opencv python and ROS 2 Carla setup guide. In this blog post, we aim to provide an in-depth understanding of ROS 2, including its internal workings and key components such as Nodes, Topic Publishers, Subscribers, Services, and Clients, culminating in a small ROS 2 project where we integrate Monocular SLAM code from the previous article with ROS 2. A thorough understanding of ROS 2 is crucial, as we will utilize it in our final project of this blog post series: Building a 6DoF Autonomous Vehicle for Static Obstacle Avoidance Using ROS 2 and Carla.

NB:

- ROS 2 Installation Assumption: We assume that you have already installed ROS 2 in your operating system. If not, please follow the ROS 2 installation guide for Ubuntu 22.04.

- Python for ROS 2: In this ROS 2 tutorial, we will exclusively use Python to demonstrate all the ROS 2 functionalities. We look forward to covering C++ in the upcoming articles, so be sure to follow the series.

- Role of DDS in Robot Operating System 2

- Working of ROS 2

- How to use ROS 2 in Python

- Integrate SLAM with ROS 2 in Python

- Key Takeaways

- Conclusion

- References

Role of DDS in Robot Operating System 2

What is DDS?

Development in Autonomous vehicles Industry

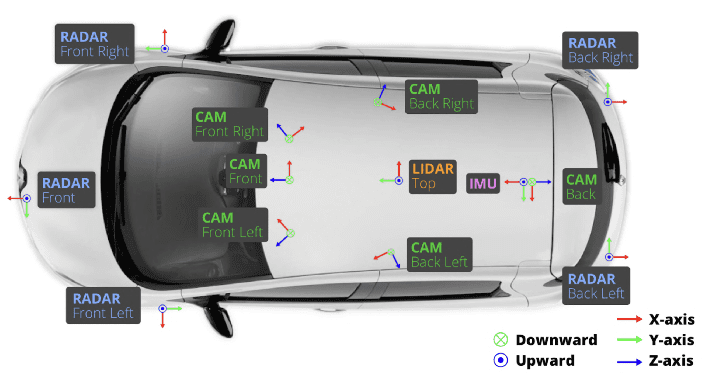

To understand what DDS (Data Distribution Service) is and why it is needed, we should start by looking at the evolution of autonomous vehicles. There was a time when vehicles were driven manually. As time progressed, more electronic components were added, bringing automation to everyday vehicles. As the level of autonomy increased, more sensors were added to the vehicles, and the amount of data generated also increased, creating a need for better communication mediums.

Autonomous vehicles generate a massive data flow due to the increasing number of sensors added for improved decision-making and to avoid unexpected scenarios. According to a Stanford study, a single autonomous vehicle generates 5TB of data per hour, amounting to 100TB per day. Contrary to popular belief, this amount of data cannot be processed in the cloud through 5G, as 5G supports a range of 100 Mbps (at the cell edge) to 10 Gbps (theoretical). The data varies in size and frequency; some is high volume but low frequency, while others are low volume but high frequency, or a mix of both. For many messages, it is crucial to preserve all data points, but for some, packets can be dropped. This necessitates a wide range of quality of service for autonomous vehicles.

To handle this we need a single piece of technology that can handle this entire range of dataflow and QoS.

Evolution in Networking

We have understood how automation vehicle and robotics industry has progressed over the years now lets understand how the field of networking has progressed.

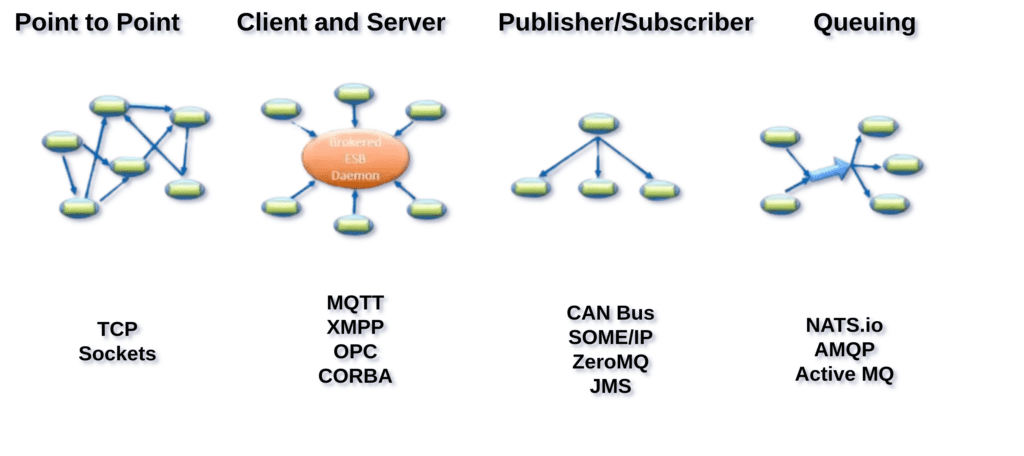

- Point to Point: ensures the reliable delivery of messages between two endpoints. eg: TCP Sockets.

- Client and Server focuses on lightweight, efficient message exchange between clients and a central broker. eg: MQTT etc.

- Publisher and Subscriber broadcasts messages across a network where devices selectively listen for relevant messages. eg: CAN Bus etc.

- Queuing provides flexible messaging patterns, including queuing, where the focus is on the efficient handling of messages within a distributed system. eg: NATS.io etc.

While traditional messaging systems like TCP, MQTT, CANBUS, and NATS.io focus primarily on the reliable delivery of messages with the payload being a secondary concern, DDS places data at the center of its architecture. By managing dataflow and providing robust QoS policies, DDS ensures that the right data is always available to the right consumers at the right time, making it a powerful solution for complex, real-time, and data-intensive applications.

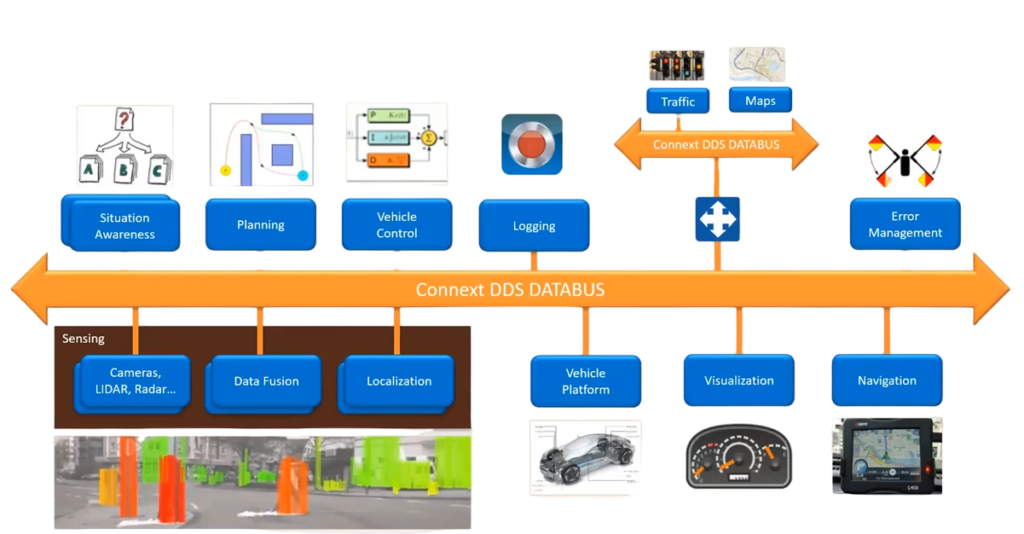

Above is how the DDS connectivity framework looks like in an autonomous vehicle. On the left we have planning and control modules and the cloud we have the HDmap information connected by a DDS DATABUS. A DDS (Data Distribution Service) databus (coloured in orange) is a communication model that facilitates the seamless and real-time exchange of data between distributed systems and applications. In the bottom left we have the sensory input and sensor fusion and localization connected to the databus. To communicate with the vehicle, vehicle platform such as CAN Bus etc. as well as the visualization and navigation components is connected with the databus. DDS creates the databus that all the services need to be attached with it for either sharing the captured/estimated information or to receive the shared information.

How DDS is Being Used in ROS 2?

ROS (Robot Operating System) is not an Operating System, its a middleware framework having a rich ecosystem. The main value of ROS lies in it’s, tools, capabilities and ecosystem. But the component that was holding the original ROS back, was its middleware architecture and in the backend how node management, Publisher/Subscriber, RPCs were designed and its scalability. The same has been said by the folks from Open Robotics,

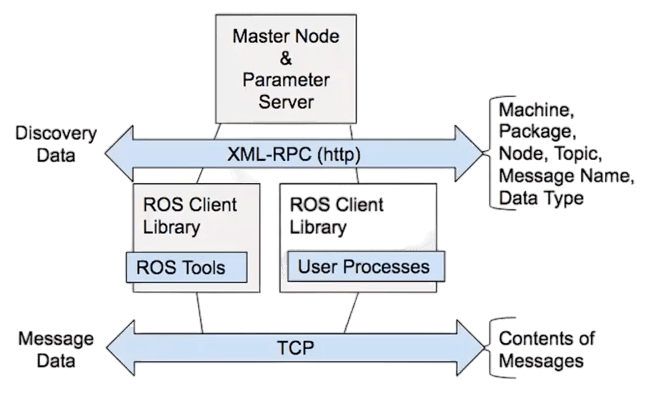

ROS 1 RMW Architecture

As commercial opportunities transitioned into products, ROS’s foundation as a research platform began to show its limitations. Security, reliability in non-traditional environments, and support for large scale embedded systems became essential to push the industry forward.

– Robot Operating System 2: Design, Architecture, and Uses In The Wild

This diagram represents the architecture of the Robot Operating System 1 (ROS 1) communication infrastructure, specifically focusing on how nodes (the basic building blocks of ROS-based robot control systems) communicate with each other.

- Master Node & Parameter Server:

The Master Node is a central node that is responsible for other nodes to find each other and establish communication. It maintains a registry of all active nodes, topics, and services. The Parameter Server is used to store and retrieve parameters at runtime.

- XML-RPC (http):

XML-RPC is a remote procedure call (RPC) protocol that uses XML to encode its calls and HTTP as a transport mechanism. This protocol is used for the “Discovery Data” phase where nodes register themselves with the Master Node, and it allows nodes to query the master for information about other nodes, topics, and services.

- ROS Tools and User Processes:

These are tools provided by ROS to facilitate various operations like visualization, logging, debugging, etc. These are the actual ROS nodes created by users, which perform specific tasks such as controlling sensors, actuators, or processing data.

- TCP (Transport Layer Protocol)

TCP (Transmission Control Protocol) is used for the “Message Data” phase, where the actual contents of the messages are transmitted between nodes. This protocol ensures reliable, ordered, and error-checked delivery of data between nodes.

Working of ROS 2:

At its core, ROS 2 is a middleware framework, and its functionality is fundamentally based on this concept. Let’s take a simple example, say you have a webcam attached via USB port to your machine, and now you want to access the camera feed. When you connect the webcam to your machine, the operating system recognizes the device and assigns a device file (e.g., /dev/video0). To interface with the hardware, you’ll need a camera driver node, such as V4L2 in Linux. This is where a specific ROS 2 package, like usb_cam or ros2_usb_camera, comes into play. Here’s how they work,

- Initializing the camera using the appropriate driver (e.g., V4L2 for Linux).

- Opening the device file and starting capturing frames from the webcam.

- Converting the raw frame format to RGB image format and creating a ROS 2 message using the image.

- Publishing the ROS 2 message to a specific topic (eg:

/camera/image_raw)

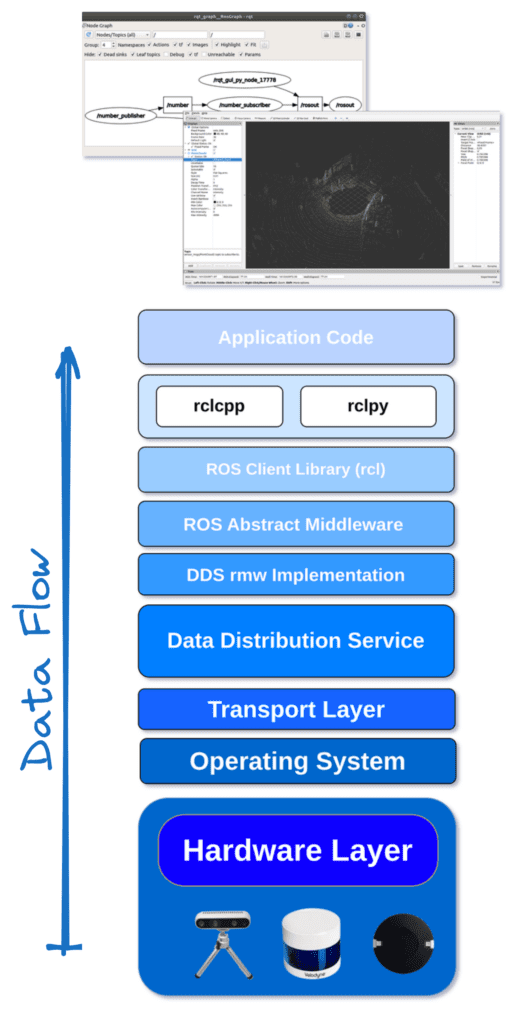

Dataflow from OS to DDS to ROS 2

But, you might wonder what the role of DDS is, how DDS is connected with the OS, and how DDS communicates with ROS 2? For understanding this we need to trace the data flow through each layer of the architecture,

- Hardware Layer: This layer provides an interface to the Camera via a USB port.

- Operating System Layer:

- The operating system (Linux, Windows, or MacOS) recognizes the webcam and assigns it a device file (e.g.,

/dev/video0on Linux). - The OS provides low-level drivers to interface with the webcam hardware.

- The operating system (Linux, Windows, or MacOS) recognizes the webcam and assigns it a device file (e.g.,

- Transport Layer: The transport layer manages the data transmission between the hardware and higher layers. In this case, it involves USB communication managed by the OS.

- DDS Implementation Layer:

- DDS handles the reliable transmission of messages (camera feed) between ROS 2 nodes.

- One of the DDS implementations (Fast DDS, Cyclone DDS, or Connext DDS) is chosen based on the system configuration.

- ROS Abstract Middleware (rmw) Layer: This layer ensures that the ROS 2 application code can interact with the underlying DDS without depending on its specific implementation details.

- ROS Client Library (rcl) Layer: The rcl (ROS Client Library) layer provides core ROS 2 functionalities such as node management, topic publishing/subscribing, and service calls. The application interacts with the camera through this library.

- Language-specific Client Libraries: Developers use different languages to program a robot, such as C++, Python, or Java. Based on the language, ROS 2 has provided libraries to connect with the ROS Client Library.

- Application Code: In this stage we create our own packages and nodes to communicate with the attached sensor.

How to use ROS 2 in Python

Now, you might wonder what is a package, node, subscriber, publisher, service, client etc? Let’s understand these ROS 2 internals one by one.

ROS 2 has four ways to communicate:

- Messages: Publisher/Subscribers are used to share messages via specified topic.

- Services: Request/Reply model for remote procedure calls.

- Actions: Goal/Feedback/Result model for long-running tasks. Feedback is the feedback of the request, and its progress.

- Parameters: modify global data using a request/reply pattern.

We will be explaining the first two in the article to follow.

Workspace

Robot Operating System 2 Workspace is the environment where you create your ROS 2 packages. These packages have nodes for different functions. This is the same as a Python environment. Below is how a ROS 2 workspace is created,

$ mkdir -p ros2_ws/src

ROS 2 workspace is nothing but a folder which contains a sub folder src. Generally, src folder contains the source code for ROS 2 packages. After you compile the packages using colcon build you will see, there are three more folders, build, log and install.

buildfolder contains compiled files,logfolder stores log files and debugging informationinstallfolder holds the final installed files and executables ready for deployment.

Packages

In ROS 2, you can create a package that contains Python nodes, C++ nodes, or a combination of both. Say, you have an autonomous vehicle designed to navigate from one place to another avoiding any static obstacles. To do that you can have a separate package for integrating hardware sensors, perception, planning and control. So, based on the use cases you decide how many packages to create and how to design the nodes. Treat ROS 2 Packages like python libraries with different functionalities.

If you want to create a package in Python use the below commands,

$ cd ros2_ws/src

$ ros2 pkg create <package_name> --build-type ament_python

The only change in both the commands is the build type for C++ package we use ament_cmake and for python it is ament_python.

Nodes

Nodes in ROS 2 are responsible to connect with the ROS Client Library and communicate with the hardware and software. Nodes in ROS 2 are redesigned from ROS1. Node is a part of the rcl library, offering enhanced modularity, flexibility and performance. By leveraging advanced features like Lifecycle Management, DDS Integration, QoS Policies, and built-in security, ROS 2 nodes enable developers to build sophisticated and reliable robotic systems. Whether you are a seasoned robotics engineer or a newcomer to the field, understanding and utilizing ROS 2 nodes is essential for creating the next generation of robotic applications.

Generally there are two types of ROS 2 Nodes,

- Standard Node

- Lifecycle Management Node

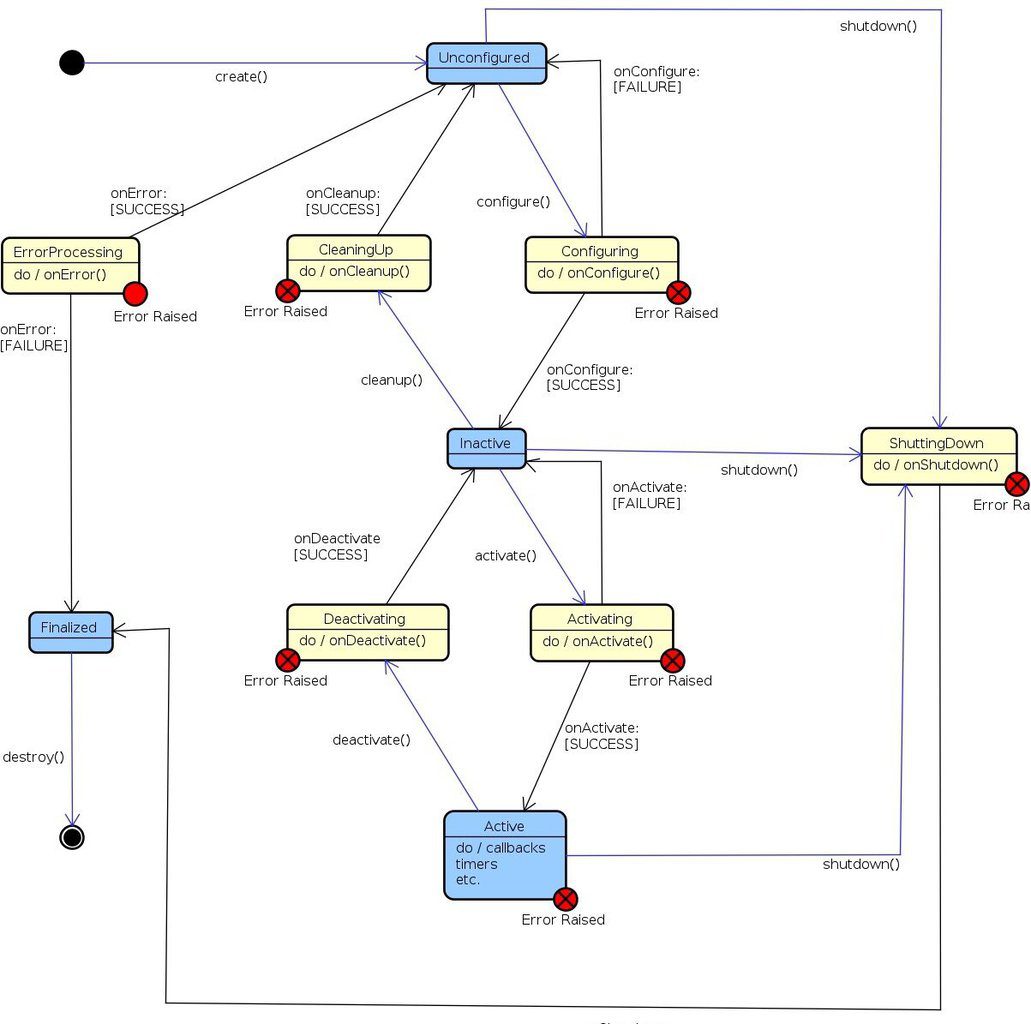

Lifecycle Management Node ROS 2:

We will see how to implement a ROS 2 standard node in the ROS 2 project section. Right now, let’s check out how to implement a Lifecycle Node. An example of a library where a Lifecycle Node is being implemented is the Nav2 navigation library. In Nav2, lifecycle nodes are used to ensure that the stack can successfully execute without missing any node.

Generally, the Standard Node has only two states inactive and active. But, Lifecycle Node has 4 states, unconfigured, inactive, active, and finalized.

- When a

Nodeis created, it exists but is not ready to perform any tasks; this is the Unconfigured state. From this state the node can only transition to an inactive state via configured state or finalize via shutdown. - In an inactive state the node is configured and can be introspective but does not perform any process. From here the node can transition to activated or unconfigured via activate() or cleanup() transitions respectively.

- When in the active state the node is fully functional and able to publish or subscribe to topics, provide services. From here the node can transition to Inactive or finalized state if deactivate or shutdown transition is invoked.

- This is the terminal state. The node is no longer operational and has released any resources it used. From this state Node doesn’t transition to another state.

Now that we understand the underlying concepts of a Lifecycle Node, let’s implement it. Create a Python file named lifecycle_node.py under the src/<package_name>/<package_name>/ folder.

import rclpy

from rclpy.lifecycle import State, TransitionCallbackReturn, LifecycleNode

from std_msgs.msg import String

import sys

class LifecycleNodeExample(LifecycleNode):

def __init__(self) -> None:

super().__init__("lifecycle_publisher")

self.publisher_ = self.create_lifecycle_publisher(String, 'topic', 10)

self.timer = None

self.i = 0

self.mock_file = None # Placeholder for a mock resource

def on_configure(self, state: State) -> TransitionCallbackReturn:

self.get_logger().info(f"Node '{self.get_name()}' is in state '{state.label}'. Transitioning to 'configure'")

return TransitionCallbackReturn.SUCCESS

def on_activate(self, state: State) -> TransitionCallbackReturn:

self.get_logger().info(f"Node '{self.get_name()}' is in state '{state.label}'. Transitioning to 'activate'")

timer_period = 0.5 # seconds

self.timer = self.create_timer(timer_period, self.timer_callback)

# Creating a mock resource (e.g., opening a file)

self.mock_file = open('/tmp/mock_file.txt', 'w')

self.mock_file.write("This is a mock file resource.\n")

self.get_logger().info("Mock file resource created.")

return TransitionCallbackReturn.SUCCESS

def on_deactivate(self, state: State) -> TransitionCallbackReturn:

self.get_logger().info(f"Node '{self.get_name()}' is in state '{state.label}'. Transitioning to 'deactivate'")

if self.timer:

self.timer.cancel()

return TransitionCallbackReturn.SUCCESS

def on_shutdown(self, state: State) -> TransitionCallbackReturn:

self.get_logger().info(f"Node '{self.get_name()}' is in state '{state.label}'. Transitioning to 'shutdown'")

if self.timer:

self.timer.cancel() # Cancel the timer to stop publishing messages

# Additional cleanup for mock resource

if self.mock_file:

self.mock_file.write("Shutting down. Cleaning up resources.\n")

self.mock_file.close()

self.get_logger().info("Mock file resource cleaned up.")

return TransitionCallbackReturn.SUCCESS

def timer_callback(self):

msg = String()

msg.data = f'Hello, world: {self.i}'

self.publisher_.publish(msg)

self.get_logger().info(f'Publishing: {msg.data}')

self.i += 1

def main(args=None) -> None:

rclpy.init(args=args)

lifecycle_node = LifecycleNodeExample()

rclpy.spin(lifecycle_node)

rclpy.shutdown()

if __name__ == "__main__":

main()

In this node file, we declared a LifecyclePublisherNode class inherited from LifecycleNode, and defined a method for each of the node’s transitions between its 4 states. Each method must return either FAILURE or SUCCESS. To run the above code, follow the below instructions,

Include this node in the entry_points of setup.py file:

entry_points={

'console_scripts': [

"node_name = <package_name>.lifecycle_node:main",

],

}

Run the above script using the executable, and check the current state of the lifecycle node.

# in new terminal

$ ros2 run <package_name> lifecycle_publisher

# in new terminal run below commands

$ ros2 lifecycle list lifecycle_publisher

# Transition to configure state

$ ros2 lifecycle set /lifecycle_publisher configure

# Transition to activate state

$ ros2 lifecycle set /lifecycle_publisher activate

# Transition to deactivate state

$ ros2 lifecycle set /lifecycle_publisher deactivate

# Transition to shutdown state

$ ros2 lifecycle set /lifecycle_publisher shutdown

If you are unable to configure please don’t worry, because in the project part of this ROS 2 tutorial we will see how to create a Python ROS 2 package and configure it.

Topic

Topics are a primary method for transferring data between nodes, enabling communication across different parts of the system. Topics are represented as /topic_name, and have a message type. Say you are sending an image to this topic, then the topic type will be Image . Similarly, you can create custom message types and have the topic receive that message.

Subscriber

Subscriber is a node component that receives messages from a specified topic. Let’s go back to the previous USB camera example. Say you have installed usb_cam and after running that package, you have a topic /camera/image_raw where the camera frames will be sent. If you want to use those frames for performing certain tasks, how would you access these topics? Well, this can be done using a Subscriber. Below is the code details,

self.subscription = self.create_subscription(String,'/camera/image_raw',

self.listener_callback, 10)

self.create_subscription is an inherited function from Node class. The arguments are message type (here, String), topic name (here, /camera/image_raw), callback function (self.listener_callback) and queue size (here, 10). Use of the callback function is to interpret the message and take the useful information from the message.

Publisher

A publisher is a node component that sends messages to a specific topic. We have understood how to get data from a topic, now lets understand how to send our own data to a topic. In ROS 2, publishing data to a topic involves several steps under the hood. When a node creates a publisher for a specific topic, the ROS 2 middleware (typically based on DDS – Data Distribution Service) handles the setup of communication channels. When the publish() method is called on the publisher, the message is serialized into a binary format suitable for transmission. This serialized data is then handed off to the DDS layer, which delivers the message to all subscribers of the topic. DDS handles the discovery of subscribers, ensuring that messages are routed efficiently. It also applies any configured Quality of Service (QoS) policies, such as reliability and durability, to manage how messages are delivered and stored. The entire process is designed to be transparent to the user, providing a robust and flexible communication mechanism for ROS 2 applications.

self.publisher_ = self.create_publisher(String, '/topic', 10)

Here, self.create_publisher is the inherited topic from the Node class, String is the message type of the topic, and /topic is the topic name and 10 is the queue size.

Service

In ROS 2, a service is a communication mechanism that enables synchronous, request-response interactions between nodes. Unlike topics, which are used for continuous, asynchronous data streams, services are designed for tasks that require a request followed by a response. A service consists of a server and a client: the server provides the service, defining the logic to process requests, while the client sends requests to the server and waits for responses. Each service has a specific type, defined by a pair of message types (one for the request and one for the response). When a client makes a request, the message is sent to the server, which processes it and sends back a response. This pattern is useful for operations that need confirmation or specific results, such as querying the state of a robot or commanding it to perform a specific action. The underlying DDS middleware ensures reliable communication and applies QoS policies to manage the interaction between clients and servers efficiently.

self.srv = self.create_service(AddTwoInts, 'add_two_ints', self.add_two_ints_callback)

The above line creates a ROS 2 service named ‘add_two_ints’ with the type AddTwoInts and sets the callback function self.add_two_ints_callback to handle incoming service requests.

Integrate SLAM with ROS 2 in Python

Finally we arrived at the capstone ROS 2 project, where we integrate Monocular SLAM with ROS 2. As mentioned, for any ROS 2 project we first need to create a workspace. That workspace generally has multiple packages, each package having multiple nodes and each node having multiple functionality. So, let’s create a workspace, package and nodes. In this ROS 2 tutorial we will see how to wrap the SLAM code as a ROS 2 Python package.

Workspace Creation:

$ mkdir -p /py_ws/src

py_ws is the workspace folder and src is the place where the packages will reside. Now that we know how to create a workspace, let’s create a package.

Package Creation:

$ cd /py_ws/src

$ ros2 pkg create slam --build-type ament_python

First you get inside the src folder and then create the package slam. ros2 pkg create is used to create the package, --build-type is used to mention the build system, we will pass ament_python because this will be a python package. If it was C++ package or a package containing both C++ and Python nodes then we would have used ament_cmake.

Package File Structure:

After you create the package, the file structure inside the package slam will look like below.

slam

├── package.xml

├── resource

│ └── slam

├── setup.cfg

├── setup.py

├── slam

│ └── __init__.py

└── test

├── test_copyright.py

├── test_flake8.py

└── test_pep257.py

Let’s understand the file structure under the slam folder.

package.xml: This file is used to define the package metadata and dependencies.resource: In a ROS 2 package, theresourcefolder is used to store non-source code files that are essential for the package’s functionality. These files can include configuration files, launch files, URDF models, maps, datasets, and other resources that the package needs to operate correctly.setup.cfg:setup.cfgfile in the ROS 2 package is used to help manage in organizing package configuration, and also to simplify thesetup.py.setup.py: It is a standard file in Python packages that uses thesetuptoolslibrary to provide instructions for tasks such as, specifying entry Points, Dependency Management, specifying Build Configuration etc.slam: This folder contains the source code for the ros2 nodes.__init__.pyis generally empty.test: These scripts inside thetestfolder, help maintain high code quality, consistency, and clear documentation.

Now, that we understand the utility of each of these files and folders, lets use them to write nodes for following tasks,

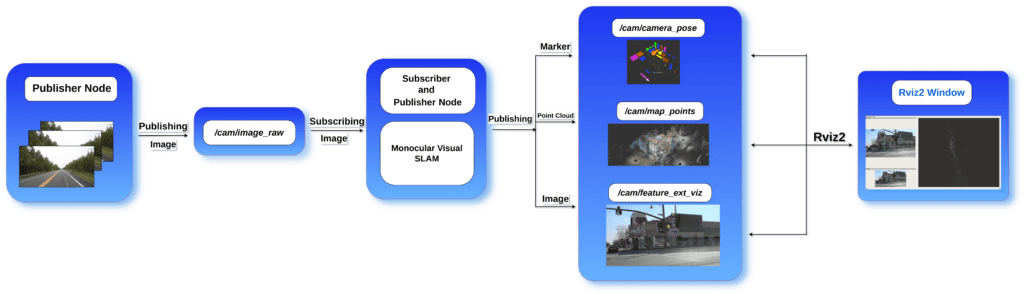

vod_img_pub_node: Publishes the Video frames to/cam/image_rawtopic.slam_pub_node: Publishes the 3D map points and the camera trajectory to/cam/map_pointsand/cam/camera_posetopic. The feature extraction Image is published to/cam/feature_ext_viztopic.

We will create two files, vod_pub.py and slam_pub.py. vod_pub.py will contain the code for video frame publishing, and slam_pub.py will contain the code for publishing the SLAM code output.

You don’t need to worry, we will provide all of this in a structured manner. Download the code using the below button,

Video Frame Publisher

Let’s start with, vod_pub.py. Below is the code for video frame publishing,

# Import the necessary libraries

import cv2

import rclpy

from rclpy.node import Node

import numpy as np

from sensor_msgs.msg import Image

from cv_bridge import CvBridge, CvBridgeError

import sys

# Define a class for publishing camera images as ROS 2 messages

class VodImagePublisher(Node):

def __init__(self, capture):

# node name is mentioned here, 'vod_img_pub'

super().__init__('vod_img_pub')

# Create a publisher for the '/cam/image_raw' topic with a queue size of 1

self.publisher_ = self.create_publisher(Image, '/cam/image_raw', 1)

# a timer is created with a callback to execute every 0.5 seconds

timer_period = 0.1

self.timer = self.create_timer(timer_period, self.image_callback)

# Initialize the image counter

self.i = 0

# Store the video capture object

self.capture = capture

# Callback function for capturing and publishing images

def image_callback(self):

# Check if the video capture is open

if self.capture.isOpened():

# Read a frame from the video capture

ret, frame = self.capture.read()

# Resize the frame to 640x480

frame = cv2.resize(frame, (640, 480))

# Create an Image message

msg = Image()

# Set the message header timestamp and frame ID

msg.header.stamp = Node.get_clock(self).now().to_msg()

msg.header.frame_id = "base"

# Set the width and height of the image

msg.width = frame.shape[1]

msg.height = frame.shape[0]

# Set the encoding format of the image

msg.encoding = "bgr8"

# Specify the endianness of the image data

msg.is_bigendian = False

# Set the step size (row length in bytes)

msg.step = np.shape(frame)[2] * np.shape(frame)[1]

# Convert the frame to bytes and set the image data

msg.data = np.array(frame).tobytes()

# Publish the image message

self.publisher_.publish(msg)

# Log and print the number of images published

self.get_logger().info('%d Images Published' % self.i)

# Increment the image counter

self.i += 1

# Main function to initialize and run the node

def main(args=None):

# Get the video path from command-line arguments

video_path = sys.argv[1]

# Open a connection to the specified video file

capture = cv2.VideoCapture(video_path)

# Set the buffer size for the video capture

capture.set(cv2.CAP_PROP_BUFFERSIZE, 2)

# Initialize the rclpy library

rclpy.init(args=args)

# Initialize the CamImagePublisher object

cam_img_publisher = None

# Check if the video capture is opened successfully

if not capture.isOpened():

# Print an error message if the video capture cannot be opened

print("Error: Could not open video stream from webcam.")

# Destroy the node and shutdown rclpy

cam_img_publisher.destroy_node()

rclpy.shutdown()

# Release the video capture object

capture.release()

else:

# Create the CamImagePublisher object and start the node

cam_img_publisher = VodImagePublisher(capture)

# Spin the node to keep it running

rclpy.spin(cam_img_publisher)

if __name__ == '__main__':

# Call the main function

main()

Let’s break it down.

main():

In the main() function, we first take the video path from the command line argument. read the video file using cv2.VideoCapture() and initialize the rclpy, rclpy is a python client library for ROS 2, which allows you to create nodes, publish and subscribe to topics etc. After this VodImagePublisher is called which starts the node, and rclpy.spin(cam_img_publisher) keeps the node running until there is any outside intervention.

VodImagePublisher:

In the class initialization, self.create_publisher is used to create a publisher, which takes the message type, topic name, queue size. self.create_timer is used to execute the callback function every timer_period. In the image_callback function we first check if the video frames are coming using self.capture.isOpened(), if yes, then read the frame using self.capture.read().

Now these frames are converted into Image message type. For doing that we first create an object of Image() class and fill the header, mentioning frame_id and stamp is very important, after that we fill the other fields which are width, height, encoding and data. After that we publish the message using self.publisher_.publish(msg).

# Create an Image message

msg = Image()

msg.header.stamp = Node.get_clock(self).now().to_msg()

msg.header.frame_id = "base"

msg.width = frame.shape[1]

msg.height = frame.shape[0]

msg.encoding = "bgr8"

msg.is_bigendian = False

msg.step = np.shape(frame)[2] * np.shape(frame)[1]

msg.data = np.array(frame).tobytes()

Some people have difficulty understanding, what is queue size?

queue size is nothing but the buffer size. A publisher having queue size 10, means that if the publishing rate exceeds the rate at which messages can be sent then the buffer temporarily stores these messages. If the buffer reaches its capacity of 10 messages, the oldest messages will be discarded to make room for new ones, ensuring that the most recent messages are prioritized for delivery.

Now that we have figured out how to get the video frame from a topic, let’s write the slam_pub.py to get the slam output. After that we will set up the configuration files to run the nodes.

SLAM ROS 2 Publisher Subscriber Loop in Python:

In this slam_pub.py file we will have both publisher and subscriber.

Publishers:

- Map Point Publisher: Publishes the 3D points in

/cam/map_pointstopic. - Camera Pose Publisher: Publishes camera pose in

/cam/camera_posetopic. - Feature Points: Publishes the feature points in

/cam/feature_ext_viztopic.

Subscriber:

- We subscribed to the

/cam/image_rawtopic as the input to the SLAM algorithm.

ROS 2 Monocular SLAM Code

We start by importing the necessary libraries. we import the slam code as, from slam.pyslam import process_frame, here process_frame is the function that takes the image frames as input and produces the map point and camera pose as output. we import it from slam.pyslam slam is the folder name and pyslam is the file name.

import rclpy

import numpy as np

from rclpy.node import Node

from sensor_msgs.msg import Image

from cv_bridge import CvBridge, CvBridgeError

from visualization_msgs.msg import Marker, MarkerArray

from slam.pyslam import process_frame # Assuming process_frame is a function from the SLAM package

from sensor_msgs.msg import PointCloud2, PointField

class SlamPublisher(Node):

def __init__(self):

super().__init__('slam_pub')

# Publisher for point cloud data with a larger queue size to handle high message rates

self.pcd_publisher = self.create_publisher(PointCloud2, '/cam/map_points', 100)

# Publisher for markers representing camera poses with a moderate queue size

self.marker_publisher = self.create_publisher(MarkerArray, '/cam/camera_pose', 10)

# Publisher for visualizing feature extraction results with a moderate queue size

self.feature_publisher = self.create_publisher(Image, '/cam/feature_ext_viz', 10)

# Subscriber for raw camera images with a moderate queue size

self.subscription = self.create_subscription(

Image,

'/cam/image_raw',

self.listener_callback,

10)

self.subscription # Prevent unused variable warning

self.bridge = CvBridge() # Bridge to convert between ROS and OpenCV images

self.timer_period = 0.5 # Timer period in seconds

self.timer = self.create_timer(self.timer_period, self.publish_callback) # Create a timer to call the publish callback periodically

self.latest_frame = None # Variable to store the latest frame

self.itr = 0 # Iterator counter

def listener_callback(self, msg):

# Callback function to handle incoming images

self.latest_frame = self.bridge.imgmsg_to_cv2(msg, "bgr8")

def publish_callback(self):

if self.latest_frame is not None:

frame = self.latest_frame

result = process_frame(frame) # Process the frame using the SLAM library

if result is not None:

img, mapp = result # Unpack the result from process_frame

marker_array = MarkerArray()

# Create markers for each frame in the map

for i, frame in enumerate(mapp.frames):

pose = frame.pose

translation = pose[0:3, 3]

marker = Marker()

marker.header.frame_id = "base"

marker.header.stamp = self.get_clock().now().to_msg()

marker.ns = "slam_points"

marker.id = i

marker.type = Marker.SPHERE # Use a cube to represent the marker

marker.action = Marker.ADD

marker.pose.position.x = translation[0]

marker.pose.position.y = translation[1]

marker.pose.position.z = translation[2]

marker.pose.orientation.x = 0.0

marker.pose.orientation.y = 0.0

marker.pose.orientation.z = 0.0

marker.pose.orientation.w = 1.0

marker.scale.x = 0.3

marker.scale.y = 0.3

marker.scale.z = 0.4

marker.color.a = 1.0

marker.color.r = 1.0

marker.color.g = 0.0

marker.color.b = 0.0

marker_array.markers.append(marker)

self.marker_publisher.publish(marker_array) # Publish the marker array

self.get_logger().info('Publishing Markers')

# Create a PointCloud2 message

msg = PointCloud2()

msg.header.stamp = self.get_clock().now().to_msg()

msg.header.frame_id = 'base'

points = np.array(mapp.points)

msg.height = 1

msg.width = points.shape[0]

# Define the fields for the point cloud

msg.fields = [

PointField(name='x', offset=0, datatype=PointField.FLOAT32, count=1),

PointField(name='y', offset=4, datatype=PointField.FLOAT32, count=1),

PointField(name='z', offset=8, datatype=PointField.FLOAT32, count=1)

]

msg.is_bigendian = False

msg.point_step = 12

msg.row_step = msg.point_step * points.shape[0]

msg.is_dense = True

msg.data = np.asarray(points, np.float32).tobytes()

self.pcd_publisher.publish(msg) # Publish the point cloud

self.get_logger().info('Publishing point cloud')

# Convert the image to a ROS Image message and publish it

image_message = self.bridge.cv2_to_imgmsg(img, encoding="bgr8")

self.feature_publisher.publish(image_message)

self.get_logger().info('Published Feature Extraction Image')

else:

self.get_logger().warn("Function returned None, cannot unpack")

def main(args=None):

rclpy.init(args=args) # Initialize the rclpy library

minimal_subscriber = SlamPublisher() # Create an instance of the SlamPublisher node

rclpy.spin(minimal_subscriber) # Spin the node to keep it running

# Destroy the node explicitly (optional - otherwise it will be done automatically)

minimal_subscriber.destroy_node()

rclpy.shutdown() # Shutdown the node

if __name__ == '__main__':

main()

As before we start from main() function,

rclpy.init(args=args)is how we start the ros2 client.minimal_subscriber = SlamPublisher()we create an object ofSlamPublisherclass.rclpy.spin(minimal_subscriber)keeps the node running. the code below that line is not executed until there is any external intervention.- If any external intervention is applied then the node is destroyed using the below code,

minimal_subscriber.destroy_node()

rclpy.shutdown() # Shutdown the node

Now let’s look how SlamPublisher is working. We first define the three publishers. We publish point cloud data in the /cam/map_points topic, in the /cam/camera_pose we are publishing the sequence of markers, here it’s a sphere and finally we publish the feature extraction image in the /cam/feature_ext_viz topic. The queue size for the point cloud publisher is kept 100, because the map point processing was quite fast.

# Publisher for point cloud data with

self.pcd_publisher = self.create_publisher(PointCloud2, '/cam/map_points', 100)

# Publisher for markers representing camera poses

self.marker_publisher = self.create_publisher(MarkerArray, '/cam/camera_pose', 10)

# Publisher for visualizing feature extraction results

self.feature_publisher = self.create_publisher(Image, '/cam/feature_ext_viz', 10)

After that we define the video frame subscriber using self.create_subscription. This function takes message type (Image), topic name (/cam/image_raw), callback function (self.listener_callback) and queue size(10). As the object self.subscription is not called again in the code we write self.subscription in the next line, otherwise it will throw a warning.

In the 29th line we call the publisher callback. In this single callback we are publishing point cloud, camera pose and feature extraction image. In the listener_callback function we get the image frame and update the self.latest_frame variable with the latest image frames.

This variable is used as input in the publisher callback publish_callback(). We first check if self.latest_frame is not None, if that’s true we pass the image to the process_frame() function. After that we get the mapp and img from the result of that function. mapp object has two attributes, .points which contains the 3D map points and .frames which contains the camera pose.

mapp.framesis a list containing the camera pose, and with each input it appends the new pose to the list. As it is a sequence of poses, we useMarkerArrayto visualize the point in Rviz2.MarkerArrayis an array of markers, each marker has a type, here it is a sphere. marker.scale decides the size of the sphere, marker.color defines the color, marker.pose.position and marker.pose.orientation need to be filled up with the camera position and orientation.mapp.pointsis also a list, and appends new points with each iteration. We convert the map points into point-cloud messages.

The feature extraction images are published using CvBridge.

Now that we are done with coding, let’s update the configuration files. We will look into the following files,

launch/slam.launch.py: A single file for executing all the nodes consecutively.setup.py: We will update the external files we will use to test the SLAM code. Also provide the launch file path, so that the package can understand that the launch file actually belongs to that package itself.

Creating a Launch File in Python:

Writing a launch file in python is very simple, first we define an unique function generate_launch_description() under which we mention which nodes to execute and in which order.

The launch file name needs to end with launch.py to be recognized and autocomplete by ros2 launch. Your launch file should define the generate_launch_description() function which returns a launch.LaunchDescription() to be used by the ros2 launch verb.

First we need to import two important objects, LaunchDescription and Node.

from launch import LaunchDescription

from launch_ros.actions import Node

def generate_launch_description():

ld = LaunchDescription()

video_pub = Node(

package="slam",

executable="vod_img_pub", # Ensure this matches the entry point defined in your setup.py or CMakeLists.txt

name='vod_img_pub', # Optional, but useful for debugging

arguments=['src/slam/videos/test_ohio.mp4'],

output='screen',

emulate_tty=True, # Ensures proper handling of console output

)

slam_pub = Node(

package='slam',

executable='slam_pub',

name='slam_pub',

output='screen',

emulate_tty=True, # Optional, useful for debugging

)

rviz = Node(

package="rviz2",

executable="rviz2", # Ensure this matches the entry point defined in your setup.py or CMakeLists.txt

output='screen',

arguments=['-d' + 'rviz/py_slam_ros2.rviz']

)

ld.add_action(video_pub)

ld.add_action(slam_pub)

ld.add_action(rviz)

return ld

After the imports we create an instance of LaunchDescription() and later we will add the nodes in that. Now, we will create the Nodes. As discussed before there will be one Node for publishing the video frames and another for SLAM output. We will also create a Node for Rviz2, so that we can visualize the topics.

We will discuss how to define one Node, which will be enough to understand how to add python package nodes to launch files.

video_pub = Node(

package="slam",

executable="vod_img_pub",

name='vod_img_pub',

arguments=['src/slam/videos/test_ohio.mp4'],

output='screen',

emulate_tty=True,

)

In the above code, we initiate the Node class, with package name, which is slam in our case, name of the node vod_img_pub, name field is generally optional, you can rename the node using this. The command line argument is passed using arguments parameter. output='screen' means that the output will be shown in the terminal screen. you can provide log as input, by doing that the output will directly go to the log files not in the screen. If you provide prefix='xterm -e' and keep output='screen' then output will be shown in a different xterm window. emulate_tty=True is provided for debugging.

After defining the Nodes we add that to the launch description object like this, ld.add_action(video_pub). and finally return ld.

Update setup.py:

There are only two changes in the setup.py,

- Update the launch file location in the

data_filesargument. - Update the

entry_points, which is nothing but a description of from which function the execution should start, its formatted ascommand_name = package_name.file_name:function_name.

from setuptools import find_packages, setup

from glob import glob

import os

package_name = 'slam'

setup(

name=package_name,

version='0.0.0',

packages=find_packages(exclude=['test']),

data_files=[

('share/ament_index/resource_index/packages',

['resource/' + package_name]),

('share/' + package_name, ['package.xml']),

(os.path.join('share', package_name), glob('launch/*.launch.py'))

],

install_requires=['setuptools'],

zip_safe=True,

maintainer='somusan',

maintainer_email='your@gmail.com',

description='TODO: Package description',

license='TODO: License declaration',

tests_require=['pytest'],

entry_points={

'console_scripts': [

"vod_img_pub = slam.vod_pub:main",

"slam_pub = slam.slam_pub:main"

],

},

)

Now that we are done with all the code and configuration setup, let’s build the package and run it. Before doing that make sure you have installed required python packages using the command pip install -r requirements.txt.

We will build the package with the below command,

$ cd ../../ # come to the workspace home directory

$ colcon build # this will build all the packages inside src

$ source install/setup.bash # source the workspace

$ ros2 launch slam slam.launch.py

ROS 2 Important Commands

Further more you can use various ROS 2 command to see which topics are available, which nodes are running, at what rate the messages are being published.

$ ros2 topic list: Shows the available topics.$ ros2 node list: Shows currently running nodes.$ ros2 topic hz /example_topic: Gives the rate of message published in that topic$ ros2 topic show /example_topic: Shows the message thats being published in that topic.$ ros2 node info /node1: Get Information About a Specific Node$ ros2 bag record /topic1 /topic2 /topic3: Record a bagfile from the specified topics$ ros2 bag record -a: Record all available topics$ ros2 bag record -o my_bag /topic_name: By default, the bag file will be saved in a directory named after the current timestamp. You can specify a custom directory name using the-ooption$ ros2 bag play my_bag: To play back a recorded bag file, use the ROS 2 bag play command.$ ros2 bag info my_bag: To list information about a bag file, such as the topics it contains and the message types:

Key Takeaways

- ROS 2 has evolved significantly from its initial version (ROS1), addressing critical needs such as security, reliability, and support for complex, large-scale embedded systems. This evolution has made ROS 2 an essential tool for modern robotics development, used widely by individuals and companies globally.

- The integration of Data Distribution Service (DDS) in ROS 2 provides a robust communication framework, facilitating efficient, scalable, and real-time data exchange. DDS supports advanced Quality of Service (QoS) policies, which are crucial for ensuring data reliability, low latency, and efficient resource usage in mission-critical applications.

- Essential ROS 2 components include Nodes, Topic Publishers, Subscribers, Services, and Clients. Nodes, which can be either standard or lifecycle managed, offer enhanced modularity, flexibility, and performance. Topic Publishers and Subscribers enable communication across different parts of the system, while Services and Clients facilitate synchronous, request-response interactions for tasks that require confirmation or specific results.

- The article provides a comprehensive guide on integrating Monocular SLAM with ROS 2 using Python. It covers the creation of ROS 2 workspaces, packages, and nodes, demonstrating how to publish and subscribe to topics, and implement services and clients. This practical example underscores the capability of ROS 2 to handle complex robotics tasks, from hardware integration to building autonomous systems.

Conclusion

ROS is a very common component in robotics and has many technical tutorials and resources available on the internet. However, through this blog, our objective is to provide a detailed understanding of the internal workings of ROS 2, how DDS works, the need for DDS, the ROS1 middleware architecture, and the data flow in ROS 2. Additionally, we discuss how to use this tool in Python, covering various topics such as packages, nodes, topics, publishers, subscribers, and services. At the end, for more hands-on understanding, we have created a capstone project where we integrate Monocular SLAM with ROS 2 using Python. We hope this will serve as a beginner-friendly gateway for anyone who wants to learn ROS 2 and get into robotics. Please follow the Robotics Blog Series for more such articles.

References

- ROS 2 + DDS Interoperation

- ROS 2 Humble Documentation

- Robot Operating System 2: Design, Architecture, and Uses In The Wild

100K+ Learners

Join Free OpenCV Bootcamp3 Hours of Learning