In our last few blogs, we have been exploring PyTorch and its advantages over other deep learning libraries, and we learnt how to build an image classifier using PyTorch’s Python front-end. But did you know that PyTorch also supports C++ as a language for building your machine learning models?

Over the past year, there has been a lot of development activity in the PyTorch community to popularise the C++ front-end. This should not come as a surprise, since PyTorch’s Python front-end sits on top of a C++ back-end, so it makes perfect sense to expose the C++ libraries for developing ML/DL models directly in C++. PyTorch’s C++ front-end helps researchers and developers who want to build models for performance-critical applications in C++. It avoids the unnecessary complication of binding C++ applications to Python, Lua or some other scripting language just to talk to Python-based models.

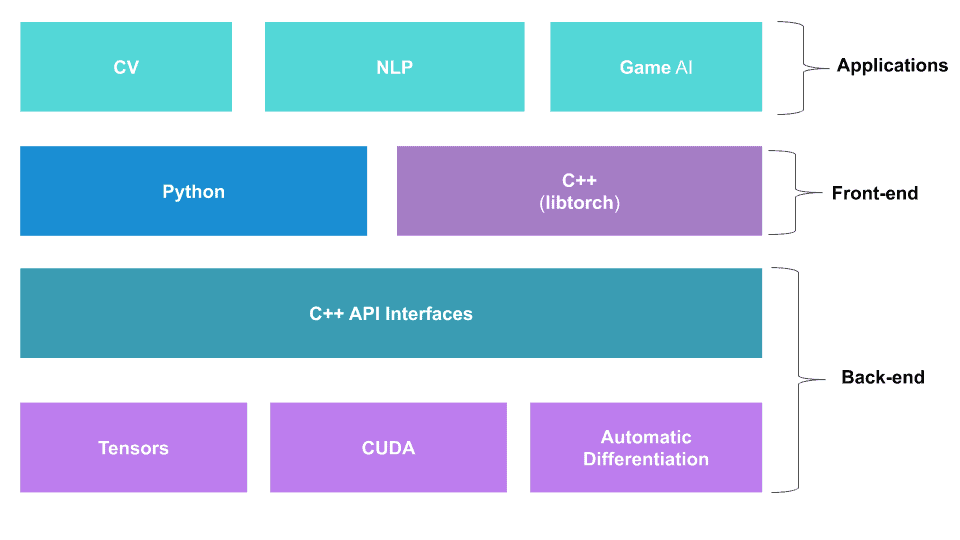

Let us start with a high-level overview of PyTorch’s components and how they fit together.

Back-end

The PyTorch back-end is written in C++ and provides APIs to highly optimized libraries, such as tensor libraries for efficient matrix operations, CUDA libraries for GPU operations, and automatic differentiation for gradient computation.

Front-end

The C++ back-end APIs can be accessed through either the Python front-end or the C++ front-end (libtorch) to build, train and test ML/DL models.

Applications

Using the front-end libraries (Python or C++), you can build ML/DL models for applications such as computer vision (CV), natural language processing (NLP) and game AI.

With this bird’s-eye view of PyTorch’s components in mind, we will now focus on the C++ front-end, i.e. libtorch, and learn how easily deep learning models can be implemented with it.

But before we jump in:

- We assume the reader has at least basic programming knowledge of C++ and Python.

- This blog is NOT a C++ language tutorial, but rather a tutorial on how to implement PyTorch models using libtorch.

Writing everything in a single blog would be like trying to pack everything into one season of GOT! We all know how that ended!

Here is what to expect from this series of blogs.

- Initial setup and building the PyTorch C++ front-end code (Part-I)

- Weights, biases and perceptrons from scratch using PyTorch tensors (Part-II)

- MNIST from simple Perceptrons (Part-III)

- Implement a CNN for CIFAR-10 dataset (Part-IV)

1. Initial setup

To work with the C++ front-end, we need the libtorch libraries. Let us see how to download and set them up.

- First, download the libraries:

```shell
wget https://download.pytorch.org/libtorch/nightly/cpu/libtorch-shared-with-deps-latest.zip
```

- Next, extract the zip file:

```shell
unzip libtorch-shared-with-deps-latest.zip -d /opt/pytorch
```

Note that we pass the “-d” option to unzip so that the libraries are extracted into /opt/pytorch/. The archive contains a top-level libtorch directory, so the libraries end up in /opt/pytorch/libtorch. This is just for our convenience; you can extract the archive wherever you want (writing to /opt usually requires root privileges). Still, the approach has a slight advantage: if you are working in a team, a common, agreed-upon library path helps maintain a consistent build environment across the team. Without such a common path, build systems become more complicated and error-prone.

NOTE: You can also build libtorch from source, but we will not cover that in this blog.

With the initial setup out of the way, let us write a small piece of Python code to understand what we are doing, and then figure out how the same operations can be done in C++. Throughout Parts I and II, we follow this approach: first create things the Python way, then find the equivalent libtorch C++ APIs that achieve similar results. Once we are more confident with the C++ front-end, we will stick with C++ to build complete models (Parts III and IV).

2. Tensor creation using PyTorch

2.1. Python Code

```python
# First, import PyTorch
import torch

# Set the random seed so things are predictable
torch.manual_seed(7)

# Generate some data: features are 6 random normal variables (a 2x3 tensor)
features = torch.randn((2, 3))
print(features)
```

2.2. C++ Code

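```cpp
// main.cpp
#include <torch/torch.h>
#include <ATen/Context.h>
#include <iostream>

int main()
{
    // Seed for the random number generator
    torch::manual_seed(7);

    // torch::rand draws from a uniform distribution on [0, 1);
    // torch::randn is the normal-distribution counterpart of Python's torch.randn.
    torch::Tensor features = torch::rand({2, 3});

    std::cout << "features = " << features << "\n";
}
```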

If you look carefully at the code above, you will notice how similar the Python and C++ versions are. In fact, if you copy the Python code into a file and replace the module-level “.” with the “::” operator (torch.manual_seed becomes torch::manual_seed), you are most of the way to working C++ code; what remains is mostly adding types, braces and semicolons.

This is mainly because the PyTorch authors have gone the extra mile to keep the C++ syntax as simple and as close to Python as possible, so that people from both Python and C++ backgrounds can work with it seamlessly. This is one of the guiding philosophies behind libtorch’s development.

2.3. Building C++ Code Using CMake

C++ code can be easily integrated with the PyTorch libraries using CMake. Let us write a CMakeLists.txt file for our example.

```cmake
CMAKE_MINIMUM_REQUIRED(VERSION 3.0 FATAL_ERROR)
PROJECT(HelloPyTorch)

SET(CMAKE_CXX_STANDARD 11)
SET(CMAKE_PREFIX_PATH /opt/pytorch/libtorch)

FIND_PACKAGE(Torch REQUIRED)
INCLUDE_DIRECTORIES(${TORCH_INCLUDE_DIRS})

ADD_EXECUTABLE(HelloWorld main.cpp)
TARGET_LINK_LIBRARIES(HelloWorld "${TORCH_LIBRARIES}")
```

Let us understand the CMakeLists.txt file in detail.

```cmake
CMAKE_MINIMUM_REQUIRED(VERSION 3.0 FATAL_ERROR)
```

- This specifies the minimum version of CMake required to build the project.

```cmake
PROJECT(HelloPyTorch)
```

- This gives the project a name; let us call it “HelloPyTorch”. Every top-level CMakeLists.txt should contain a PROJECT() call, and CMake prints a warning if it is missing.

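```cmake
SET(CMAKE_CXX_STANDARD 11)
```

- At the time of writing, PyTorch required a C++ compiler with C++11 features enabled. By setting the CMAKE_CXX_STANDARD variable, we control which C++ standard is used to build the project. Newer libtorch releases require C++17, so raise this value if your build fails with errors about the language standard.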

```cmake
SET(CMAKE_PREFIX_PATH /opt/pytorch/libtorch)
```

- This sets the path where we extracted libtorch, i.e. /opt/pytorch/libtorch. (If you extracted the libraries to a different path, set it accordingly.)

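```cmake
FIND_PACKAGE(Torch REQUIRED)
INCLUDE_DIRECTORIES(${TORCH_INCLUDE_DIRS})
```

- The FIND_PACKAGE() command asks CMake to locate the Torch package under CMAKE_PREFIX_PATH; REQUIRED makes configuration fail if it cannot be found. On success it defines variables such as TORCH_INCLUDE_DIRS and TORCH_LIBRARIES, and INCLUDE_DIRECTORIES() adds the libtorch headers to the include path.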

```cmake
ADD_EXECUTABLE(HelloWorld main.cpp)
```

- This names our output binary “HelloWorld” and tells CMake which C++ source file to compile; in our case, just “main.cpp”.

```cmake
TARGET_LINK_LIBRARIES(HelloWorld "${TORCH_LIBRARIES}")
```

- The TARGET_LINK_LIBRARIES() command links the libtorch libraries into our binary. CMake finds and resolves the dependencies as required while linking the output binary.

With the CMakeLists.txt file ready, all you need to do is run the following commands to build our first HelloWorld program.

```shell
mkdir build
cd build
cmake ..
cmake --build . --config Release
```

And here is a sample output.

```
./HelloWorld
features = 0.5349 0.1988 0.6592
0.6569 0.2328 0.4251
[ Variable[CPUFloatType]{2,3} ]
```

We have created a 2×3 tensor using C++. A plain std::cout also prints extra information about the tensor, such as its type (CPUFloatType, i.e. 32-bit floats stored on the CPU) and its dimensions. Let us not worry about these details right now; we will dig into them later.

3. Shape of Tensor

3.1. Python code

In the previous section we saw how to create tensors using PyTorch. In this section, we will focus more on the shape of the tensors.

```python
features = torch.randn((2, 3))
print(features.shape)
print(features.shape[0])
print(features.shape[1])
```

You should get the following output.

```
torch.Size([2, 3])
2
3
```

If you are familiar with NumPy, you will notice that this syntax is very similar to NumPy’s.
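A tensor’s shape is simply a list of dimension sizes, and the number of elements is their product; that is why the 2×3 `features` tensor holds 6 values. libtorch exposes this count directly as `Tensor::numel()`. The sketch below reproduces only the arithmetic in plain C++, with no libtorch dependency; the helper name `element_count` is hypothetical and used for illustration only.

```cpp
#include <cstdint>
#include <functional>
#include <numeric>
#include <vector>

// Hypothetical helper (not a libtorch API): number of elements implied by a
// shape, i.e. the product of all dimension sizes. An empty shape describes a
// scalar, which holds exactly one element.
std::int64_t element_count(const std::vector<std::int64_t>& shape) {
    return std::accumulate(shape.begin(), shape.end(), std::int64_t{1},
                           std::multiplies<std::int64_t>());
}
```

For example, `element_count({2, 3})` gives 6, matching the “6 random normal variables” in the Python comment.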

3.2. C++ Code

```cpp
#include <torch/torch.h>
#include <ATen/Context.h>
#include <iostream>

int main()
{
    // Seed for the random number generator
    torch::manual_seed(7);

    torch::Tensor features = torch::rand({2, 3});

    std::cout << "features shape = " << features.sizes() << "\n";
    std::cout << "features shape rows = " << features.sizes()[0] << "\n";
    std::cout << "features shape col = " << features.size(1) << "\n";
}
```

You should see the following output.

```
features shape = [2, 3]
features shape rows = 2
features shape col = 3
```

Where Python exposes the shape attribute, C++ provides the sizes() method. Indexing into the result works much as it does in NumPy.

Notice the two ways of reading the size of a single dimension of a tensor:

```cpp
features.sizes()[0]
features.size(0)
```

In the subsequent blogs, we will use the latter, size(dim), to read a tensor’s dimensions. There is no major advantage beyond saving a few keystrokes, although size() does also accept negative dimensions, such as size(-1) for the last dimension.
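How does a negative index such as -1 end up meaning “the last dimension”? The plain C++ sketch below (no libtorch) shows the usual wrapping rule: a dimension `d` in `[-ndim, ndim)` maps to `d + ndim` when negative. `wrap_dim` and `size_at` are hypothetical names for illustration, not libtorch’s internal functions, and the sketch throws `std::out_of_range` where libtorch raises its own error type.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper: map a possibly negative dimension index onto
// [0, ndim), so that -1 refers to the last dimension.
std::int64_t wrap_dim(std::int64_t dim, std::int64_t ndim) {
    if (dim < -ndim || dim >= ndim) {
        throw std::out_of_range("dimension out of range");
    }
    return dim < 0 ? dim + ndim : dim;
}

// Hypothetical helper: size of one dimension of a shape, negative dims allowed,
// mirroring how features.size(-1) behaves on a tensor.
std::int64_t size_at(const std::vector<std::int64_t>& shape, std::int64_t dim) {
    const auto ndim = static_cast<std::int64_t>(shape.size());
    return shape[static_cast<std::size_t>(wrap_dim(dim, ndim))];
}
```

With the 2×3 shape from above, `size_at({2, 3}, -1)` returns 3 and `size_at({2, 3}, -2)` returns 2. Indexing `sizes()` directly with -1 has no such wrapping, which is why size(dim) is the safer habit.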

4. Reshaping a Tensor

Similar to NumPy’s reshape method, PyTorch’s view method lets us change the dimensions of a tensor we have already created.

Newer versions of PyTorch also provide a reshape method, and the two differ subtly. view always returns a view that shares data with the source tensor, and it fails if the tensor’s memory layout is incompatible with the requested shape (which can happen with non-contiguous tensors). reshape, which has semantics similar to numpy.reshape, returns a view of the source tensor when possible and a copy otherwise.
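Whichever method you use, the total number of elements must stay the same, and one dimension may be written as -1 so that PyTorch infers it (for example, `x.view({-1, 4})` on a 16-element tensor yields a 4×4 result). The plain C++ sketch below, without libtorch, shows just that shape bookkeeping. `infer_view_shape` is a hypothetical name, and the sketch deliberately does not model the memory-layout check that makes view stricter than reshape.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper: resolve a requested shape (at most one entry may be -1)
// against the element count of the source tensor.
std::vector<std::int64_t> infer_view_shape(std::vector<std::int64_t> requested,
                                           std::int64_t numel) {
    std::int64_t known = 1;        // product of the explicitly given sizes
    std::ptrdiff_t infer_at = -1;  // position of the -1 entry, if any
    for (std::size_t i = 0; i < requested.size(); ++i) {
        if (requested[i] == -1) {
            if (infer_at != -1) throw std::invalid_argument("only one dimension can be -1");
            infer_at = static_cast<std::ptrdiff_t>(i);
        } else if (requested[i] < 0) {
            throw std::invalid_argument("invalid dimension size");
        } else {
            known *= requested[i];
        }
    }
    if (infer_at != -1) {
        // The missing size must divide the element count exactly.
        if (known == 0 || numel % known != 0) throw std::invalid_argument("shape is invalid for input size");
        requested[static_cast<std::size_t>(infer_at)] = numel / known;
    } else if (known != numel) {
        // Without -1, the sizes must multiply out to the element count.
        throw std::invalid_argument("shape is invalid for input size");
    }
    return requested;
}
```

Requesting `{3, 5}` for 16 elements throws in this sketch, just as the real view and reshape calls reject a shape whose element count does not match.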

Later in this series, we will see how to use the view, resize_ and reshape methods. For now, let us focus only on the view method.

4.1. Python Code

We will start by creating a tensor with 16 random normal elements and then reshape it into a 4×4 tensor.

```python
import torch

x = torch.randn(16)
y = x.view(4, 4)

print(x)
print(y)
```

Your output values will differ, since we did not set a seed. What matters is the shape of the tensor before and after reshaping.

```
tensor([ 1.9898, 0.9479, 1.1773, 2.6453, 0.4446, 0.4736, 1.4591, 1.1316, -1.1997, 0.6398, 0.1749, -0.1467, 0.8116, -0.4637, -0.8970, 0.1903])
tensor([[ 1.9898, 0.9479, 1.1773, 2.6453],
        [ 0.4446, 0.4736, 1.4591, 1.1316],
        [-1.1997, 0.6398, 0.1749, -0.1467],
        [ 0.8116, -0.4637, -0.8970, 0.1903]])
```

4.2. C++ Code

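```cpp
#include <torch/torch.h>
#include <iostream>

int main() {
    // 16 values drawn uniformly from [0, 1); use torch::randn({16})
    // for normally distributed values as in the Python example.
    torch::Tensor numbers = torch::rand({16});
    std::cout << numbers << "\n";

    // Reinterpret the same 16 values as a 4x4 tensor (no data is copied).
    auto twoDtensor = numbers.view({4, 4});
    std::cout << twoDtensor << "\n";
}
```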

Notice that we are using the auto keyword:

```cpp
auto twoDtensor = numbers.view({4, 4});
```

This is possible because we are using a C++11-compatible compiler. The libtorch source code uses auto in many places, and it is advisable to use auto as much as possible, since it makes the code less verbose and more readable.

The expected output is shown below.

```
 0.4383
 0.0095
 0.3075
 0.5128
 0.6614
 0.9248
 0.8508
 0.9324
 0.6059
 0.5974
 0.7215
 0.2678
 0.2845
 0.3625
 0.0417
 0.9183
[ Variable[CPUType]{16} ]
 0.4383  0.0095  0.3075  0.5128
 0.6614  0.9248  0.8508  0.9324
 0.6059  0.5974  0.7215  0.2678
 0.2845  0.3625  0.0417  0.9183
[ Variable[CPUType]{4,4} ]
```
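Comparing the two printouts shows that view fills the 4×4 tensor row by row: the fifth value, 0.6614, starts the second row. For a contiguous tensor, element (row, col) of a view with `cols` columns sits at flat position `row * cols + col` of the original data, which is why no copy is needed. The plain C++ sketch below (no libtorch; `RowMajorView` is a hypothetical type) reads a flat buffer through that index arithmetic without copying it.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical type: a non-owning 2-D view over a flat, row-major buffer,
// mimicking how numbers.view({4, 4}) reinterprets the same 16 values.
// The view must not outlive the vector it refers to.
struct RowMajorView {
    const std::vector<double>& data;  // shared storage, never copied
    std::size_t rows;
    std::size_t cols;

    // Element (row, col) lives at flat offset row * cols + col,
    // i.e. strides {cols, 1} for a contiguous 2-D layout.
    double at(std::size_t row, std::size_t col) const {
        return data[row * cols + col];
    }
};
```

Because the view only stores a reference, changing an element of the flat vector is immediately visible through the 2-D view, just as modifying `numbers` would also change `twoDtensor`.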

With this much information, we are all set to take the next step: building our first neural network! In our next blog, we will look at how to build a simple perceptron network using libtorch, and then use that perceptron network as a building block for more complex networks such as Convolutional Neural Networks (CNNs). Stay tuned!