In many of our previous posts, we used the OpenCV DNN module, which allows running pre-trained neural networks. One of the module's main drawbacks was that it supported only CPU inference. Starting from OpenCV 4.2, the DNN module supports NVIDIA GPUs, which means it can use CUDA and cuDNN to accelerate deep learning networks. In this post, we will learn how to compile the OpenCV library with DNN GPU support to speed up neural network inference on NVIDIA GPUs.

Installation Instructions for Ubuntu 18.04

To enable NVIDIA GPU support in OpenCV, we have to compile it from scratch with proper configurations.

Step 1. Prerequisites

To make sure you have everything you need to get started, run the following commands to install any missing packages:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install build-essential cmake unzip pkg-config

sudo apt-get install libjpeg-dev libpng-dev libtiff-dev

sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install libv4l-dev libxvidcore-dev libx264-dev

sudo apt-get install libgtk-3-dev

sudo apt-get install libblas-dev liblapack-dev gfortran

sudo apt-get install python3-dev

Step 2. Getting OpenCV Sources

The first step is to download the OpenCV library sources. We will use 4.5.1, the latest release at the time of writing.

To download it to the $HOME folder, simply run the following commands in the terminal:

cd ~

wget -O opencv-4.5.1.zip https://github.com/opencv/opencv/archive/4.5.1.zip

unzip -q opencv-4.5.1.zip

mv opencv-4.5.1 opencv

rm -f opencv-4.5.1.zip

You will also need to do the same for the opencv_contrib module:

wget -O opencv_contrib-4.5.1.zip https://github.com/opencv/opencv_contrib/archive/4.5.1.zip

unzip -q opencv_contrib-4.5.1.zip

mv opencv_contrib-4.5.1 opencv_contrib

rm -f opencv_contrib-4.5.1.zip

Step 3. CUDA Installation

Download and install a suitable CUDA version for your system from the NVIDIA website. It is best to follow the Ubuntu installation instructions in the NVIDIA documentation.

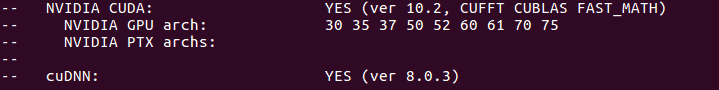

The instructions in this post were tested with CUDA 10.2, and we recommend using the same version.
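Before configuring OpenCV, it helps to confirm which CUDA release is actually installed. The sketch below parses the "release X.Y" part of the nvcc --version output. It assumes nvcc lives at /usr/local/cuda-10.2/bin/nvcc, matching the CUDA_TOOLKIT_ROOT_DIR used later. The helper names are hypothetical.

```python
import re
import subprocess


def parse_nvcc_release(nvcc_output: str) -> str:
    """Extract the CUDA release (e.g. "10.2") from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    if match is None:
        raise ValueError("could not find a CUDA release in the nvcc output")
    return match.group(1)


def installed_cuda_release(nvcc_path: str = "/usr/local/cuda-10.2/bin/nvcc") -> str:
    """Run nvcc (assumed path) and return the CUDA release it reports."""
    result = subprocess.run([nvcc_path, "--version"],
                            capture_output=True, text=True, check=True)
    return parse_nvcc_release(result.stdout)
```

On a system set up as in this post, installed_cuda_release() should return "10.2".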

Step 4. cuDNN Installation

Download and install a suitable cuDNN version for your system from the NVIDIA website. You can follow the installation instructions for Ubuntu from the NVIDIA docs.

This post was tested with cuDNN v8.0.3, and we recommend using the same version.
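cuDNN 8 defines its version in the cudnn_version.h header through the CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL macros. You can read them back to check the installed version. This is a minimal sketch. The header path is an assumption: it depends on whether cuDNN was installed from the tar archive into the CUDA directory or from the Debian packages, which use /usr/include. The helper names are hypothetical.

```python
import re
from pathlib import Path


def parse_cudnn_version(header_text: str) -> str:
    """Build "major.minor.patch" from the version macros in cudnn_version.h."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{name}\s+(\d+)", header_text)
        if match is None:
            raise ValueError(f"{name} not found in the header")
        parts.append(match.group(1))
    return ".".join(parts)


def installed_cudnn_version(
        header: str = "/usr/local/cuda-10.2/include/cudnn_version.h") -> str:
    """Read the (assumed) header location and return the cuDNN version."""
    return parse_cudnn_version(Path(header).read_text())
```

For the version recommended above, installed_cudnn_version() should return "8.0.3".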

Step 5. Python Dependencies

If we also want to use OpenCV with DNN GPU support in our Python scripts, we need to generate the OpenCV-Python bindings. You can think of them as bridges that enable calling C++ OpenCV functions from inside a Python script.

Note that we are using Python 3.7.5.

First of all, let's create a new virtual environment. We use Python's built-in venv module, so the separate virtualenv package is not required. On Ubuntu, venv is provided by the python3-venv package; install it if it is missing:

sudo apt-get install python3-venv

Now we can create a new virtual environment and call it env:

python3 -m venv ~/env

The last thing we have to do is to activate it:

source ~/env/bin/activate

Now, install the numpy package:

pip install numpy
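Before building, you can quickly confirm that the active interpreter is the one from the virtual environment and that numpy is importable. The interpreter path printed here is the value that PYTHON_EXECUTABLE should point to in the next step. This is a small sketch with hypothetical helper names. It relies on the fact that inside a venv, sys.prefix differs from sys.base_prefix.

```python
import importlib.util
import sys


def in_virtualenv() -> bool:
    """True when running inside a virtual environment created with venv."""
    return sys.prefix != sys.base_prefix


def has_numpy() -> bool:
    """True when numpy can be imported by this interpreter."""
    return importlib.util.find_spec("numpy") is not None


if __name__ == "__main__":
    print("interpreter:", sys.executable)  # expected: ~/env/bin/python3
    print("inside virtual environment:", in_virtualenv())
    print("numpy installed:", has_numpy())
```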

Step 6. Building OpenCV Library

Now that we have met all the dependencies, let's go ahead and build the OpenCV library.

Let’s create a build directory and navigate to it:

cd ~/opencv

mkdir build

cd build

Next, configure the CMake build by passing the correct arguments:

cmake \
-D CMAKE_BUILD_TYPE=RELEASE \
-D CMAKE_INSTALL_PREFIX=/usr/local \
-D INSTALL_PYTHON_EXAMPLES=OFF \
-D INSTALL_C_EXAMPLES=OFF \
-D OPENCV_ENABLE_NONFREE=ON \
-D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib/modules \
-D PYTHON_EXECUTABLE=~/env/bin/python3 \
-D BUILD_EXAMPLES=ON \
-D WITH_CUDA=ON \
-D WITH_CUDNN=ON \
-D OPENCV_DNN_CUDA=ON \
-D WITH_CUBLAS=ON \
-D CUDA_TOOLKIT_ROOT_DIR=/usr/local/cuda-10.2 \
-D OpenCL_LIBRARY=/usr/local/cuda-10.2/lib64/libOpenCL.so \
-D OpenCL_INCLUDE_DIR=/usr/local/cuda-10.2/include/ \
..

Note that we:

- Set OPENCV_EXTRA_MODULES_PATH to the location of the opencv_contrib modules we downloaded earlier
- Set PYTHON_EXECUTABLE to the Python interpreter of the virtual environment we created
- Set CUDA_TOOLKIT_ROOT_DIR to the installed CUDA toolkit
- Set OpenCL_LIBRARY to the shared OpenCL library
- Set OpenCL_INCLUDE_DIR to the directory containing the OpenCL headers
- Set WITH_CUDA=ON and WITH_CUDNN=ON to enable CUDA and cuDNN support
- Set OPENCV_DNN_CUDA=ON to build the DNN module with CUDA support. This is the most important flag: without it, the DNN module is built without the CUDA backend.
- Enable WITH_CUBLAS for optimization purposes

Additionally, there are two more optimization flags, ENABLE_FAST_MATH and CUDA_FAST_MATH, which speed up math operations. However, when these flags are enabled, floating-point results are not guaranteed to be IEEE compliant. If you need fast calculations and precision is not a concern, you can enable these options. This link explains the accuracy problems in detail.
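If you configure OpenCV on several machines, or want to toggle the fast-math flags without editing a long shell command, you can generate the same CMake invocation from Python. This is a sketch, not part of the original workflow. It reproduces the options listed above and adds ENABLE_FAST_MATH and CUDA_FAST_MATH only on request. The function name and its default paths are assumptions that mirror the command above.

```python
import os


def opencv_cmake_args(
    cuda_root="/usr/local/cuda-10.2",
    python_executable=os.path.expanduser("~/env/bin/python3"),
    contrib_modules=os.path.expanduser("~/opencv_contrib/modules"),
    fast_math=False,
):
    """Return the cmake command shown above as a list of arguments."""
    options = {
        "CMAKE_BUILD_TYPE": "RELEASE",
        "CMAKE_INSTALL_PREFIX": "/usr/local",
        "INSTALL_PYTHON_EXAMPLES": "OFF",
        "INSTALL_C_EXAMPLES": "OFF",
        "OPENCV_ENABLE_NONFREE": "ON",
        "OPENCV_EXTRA_MODULES_PATH": contrib_modules,
        "PYTHON_EXECUTABLE": python_executable,
        "BUILD_EXAMPLES": "ON",
        "WITH_CUDA": "ON",
        "WITH_CUDNN": "ON",
        "OPENCV_DNN_CUDA": "ON",
        "WITH_CUBLAS": "ON",
        "CUDA_TOOLKIT_ROOT_DIR": cuda_root,
        "OpenCL_LIBRARY": f"{cuda_root}/lib64/libOpenCL.so",
        "OpenCL_INCLUDE_DIR": f"{cuda_root}/include/",
    }
    if fast_math:
        # Faster math, but results are no longer guaranteed to be IEEE compliant
        options["ENABLE_FAST_MATH"] = "ON"
        options["CUDA_FAST_MATH"] = "ON"
    args = ["cmake"]
    for name, value in options.items():
        args += ["-D", f"{name}={value}"]
    args.append("..")  # the OpenCV source tree is the parent of the build directory
    return args
```

To run it, call subprocess.run(opencv_cmake_args(), cwd=os.path.expanduser("~/opencv/build"), check=True). Passing a list instead of a single string avoids shell quoting problems with paths.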

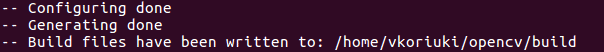

If everything is correct, CMake will finish with messages stating that the configuration is done ("Configuring done" and "Generating done").

Double-check in the configuration summary that NVIDIA CUDA is set to YES and that CUDA and cuDNN have been found.

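Now, you can run the make command. The -j option sets the number of parallel build jobs; adjust it to the number of CPU cores on your machine (nproc prints this number):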

make -j12

Building will take some time. After it’s done, run:

sudo make install

sudo ldconfig

The only thing left is to add a symlink in the Python virtual environment. To do so, navigate to the site-packages directory of your virtual environment and link the freshly built OpenCV library:

cd ~/env/lib/python3.x/site-packages/

ln -s /usr/local/lib/python3.x/site-packages/cv2/python-3.x/cv2.cpython-3xm-x86_64-linux-gnu.so cv2.so

Don't forget to replace "x" with the minor version of your Python. Since we use Python 3.7, x is 7 in our case.
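To avoid filling in "x" by hand, you can derive both paths from the running interpreter. This is a sketch under stated assumptions. The OpenCV install layout is assumed to be the one shown above, and the virtual environment is assumed to be ~/env. sysconfig.get_config_var("EXT_SUFFIX") returns the extension-module suffix, for example ".cpython-37m-x86_64-linux-gnu.so" on Python 3.7. The function name is hypothetical.

```python
import sys
import sysconfig
from pathlib import Path


def cv2_link_paths(venv=Path.home() / "env", prefix=Path("/usr/local"),
                   version=None, ext_suffix=None):
    """Return (installed cv2 library, symlink to create inside the venv)."""
    major, minor = version or sys.version_info[:2]
    ext_suffix = ext_suffix or sysconfig.get_config_var("EXT_SUFFIX")
    py = f"python{major}.{minor}"
    source = (prefix / "lib" / py / "site-packages" / "cv2"
              / f"python-{major}.{minor}" / f"cv2{ext_suffix}")
    link = venv / "lib" / py / "site-packages" / "cv2.so"
    return source, link
```

Run it with the virtual environment's Python. Then source, link = cv2_link_paths() followed by link.symlink_to(source) creates the same symlink as the ln -s command above.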

That's it! Now you can run code that uses the OpenCV library with DNN GPU support.
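A quick way to check the result is to inspect the build from Python. cv2.getBuildInformation() returns the configuration summary that CMake printed. cv2.cuda.getCudaEnabledDeviceCount() returns the number of CUDA devices OpenCV can use, and 0 when OpenCV was built without CUDA. The parser below assumes the summary contains a line of the form "NVIDIA CUDA: YES (...)". The helper names are hypothetical.

```python
import re


def cuda_in_build_info(build_info: str) -> bool:
    """True if the 'NVIDIA CUDA:' line of the build summary says YES."""
    match = re.search(r"NVIDIA CUDA:\s*(\w+)", build_info)
    return match is not None and match.group(1) == "YES"


def check_opencv_cuda():
    """Print the CUDA status of the OpenCV build linked into this environment."""
    import cv2  # imported here so the parser above can be used without OpenCV

    print("OpenCV version:", cv2.__version__)
    print("CUDA enabled in build:", cuda_in_build_info(cv2.getBuildInformation()))
    print("CUDA devices visible:", cv2.cuda.getCudaEnabledDeviceCount())
```

Calling check_opencv_cuda() from the activated virtual environment should report CUDA as enabled and at least one device.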

Test with example code

We will test the OpenPose code from our earlier post: https://learnopencv.com/deep-learning-based-human-pose-estimation-using-opencv-cpp-python/

Read models

C++:

// Specify the paths for the 2 files
string protoFile = "pose/mpi/pose_deploy_linevec_faster_4_stages.prototxt";
string weightsFile = "pose/mpi/pose_iter_160000.caffemodel";

// Read the network into memory
Net net = readNetFromCaffe(protoFile, weightsFile);

Python:

# Specify the paths for the 2 files
protoFile = "pose/mpi/pose_deploy_linevec_faster_4_stages.prototxt"
weightsFile = "pose/mpi/pose_iter_160000.caffemodel"

# Read the network into memory
net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)

Read Image and preprocess

C++:

// Read image
Mat frame = imread("single.jpg");

// Specify the input image dimensions
int inWidth = 368;
int inHeight = 368;

// Prepare the frame to be fed to the network
Mat inpBlob = blobFromImage(frame, 1.0 / 255, Size(inWidth, inHeight), Scalar(0, 0, 0), false, false);

// Set the prepared object as the input blob of the network
net.setInput(inpBlob);

Python:

# Read image
frame = cv2.imread("single.jpg")

# Specify the input image dimensions
inWidth = 368
inHeight = 368

# Prepare the frame to be fed to the network
inpBlob = cv2.dnn.blobFromImage(frame, 1.0 / 255, (inWidth, inHeight), (0, 0, 0), swapRB=False, crop=False)

# Set the prepared object as the input blob of the network
net.setInput(inpBlob)
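To make the preprocessing step concrete, here is a NumPy sketch of what blobFromImage produces for this particular call. Pixel values are multiplied by 1/255, and the H x W x 3 image is rearranged into a 1 x 3 x H x W (NCHW) float32 blob. The sketch assumes the image is already 368 x 368 and covers only the zero mean, swapRB=False and crop=False settings used above. It is an illustration with a hypothetical name, not a replacement for blobFromImage.

```python
import numpy as np


def blob_from_resized_image(image: np.ndarray, scale: float = 1.0 / 255) -> np.ndarray:
    """Mimic blobFromImage(image, scale, size, (0, 0, 0), swapRB=False, crop=False)
    for an image that already has the network input size."""
    blob = image.astype(np.float32) * scale  # mean is 0, so only scaling is applied
    blob = np.transpose(blob, (2, 0, 1))     # HWC -> CHW, channel order unchanged
    return blob[np.newaxis, ...]             # add the batch dimension -> NCHW
```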

Make predictions and parse keypoints

C++:

Mat output = net.forward();
int H = output.size[2];
int W = output.size[3];

// Find the position of the body parts
// (nPoints, thresh, frameWidth, frameHeight and frameCopy are defined as in the original post)
vector<Point> points(nPoints);
for (int n = 0; n < nPoints; n++)
{
    // Probability map of the corresponding body part
    Mat probMap(H, W, CV_32F, output.ptr(0, n));
    Point2f p(-1, -1);
    Point maxLoc;
    double prob;
    minMaxLoc(probMap, 0, &prob, 0, &maxLoc);
    if (prob > thresh)
    {
        // Scale the point from heatmap coordinates to the original image
        p = maxLoc;
        p.x *= (float)frameWidth / W;
        p.y *= (float)frameHeight / H;
        circle(frameCopy, cv::Point((int)p.x, (int)p.y), 8, Scalar(0, 255, 255), -1);
        cv::putText(frameCopy, cv::format("%d", n), cv::Point((int)p.x, (int)p.y), cv::FONT_HERSHEY_COMPLEX, 1, cv::Scalar(0, 0, 255), 2);
    }
    points[n] = p;
}

Python:

output = net.forward()
H = output.shape[2]
W = output.shape[3]

# Empty list to store the detected keypoints
# (nPoints, threshold, frameWidth and frameHeight are defined as in the original post)
points = []
for i in range(nPoints):
    # Confidence map of the corresponding body part
    probMap = output[0, i, :, :]

    # Find the global maximum of the probMap
    minVal, prob, minLoc, point = cv2.minMaxLoc(probMap)

    # Scale the point to fit on the original image
    x = (frameWidth * point[0]) / W
    y = (frameHeight * point[1]) / H

    if prob > threshold:
        cv2.circle(frame, (int(x), int(y)), 15, (0, 255, 255), thickness=-1, lineType=cv2.FILLED)
        cv2.putText(frame, "{}".format(i), (int(x), int(y)), cv2.FONT_HERSHEY_SIMPLEX, 1.4, (0, 0, 255), 3, lineType=cv2.LINE_AA)
        # Add the point to the list if the probability is greater than the threshold
        points.append((int(x), int(y)))
    else:
        points.append(None)

cv2.imshow("Output-Keypoints", frame)
cv2.waitKey(0)
cv2.destroyAllWindows()
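The keypoint loop boils down to two steps. First, find the location of the maximum in each of the first nPoints heatmaps. Second, rescale that location from heatmap coordinates (W x H) to image coordinates. Here is the same logic written with NumPy only, which can be handy for testing without a display. np.unravel_index returns (row, column), so the order is swapped relative to the (x, y) point that cv2.minMaxLoc returns. The function name is hypothetical, and the threshold must be supplied by the caller.

```python
import numpy as np


def heatmaps_to_keypoints(output, n_points, frame_width, frame_height, threshold):
    """Return one (x, y) image point per body part, or None if below threshold."""
    H, W = output.shape[2], output.shape[3]
    points = []
    for i in range(n_points):  # later channels (background, PAFs) are ignored
        prob_map = output[0, i]
        y, x = np.unravel_index(np.argmax(prob_map), prob_map.shape)
        if prob_map[y, x] > threshold:
            # Scale from heatmap coordinates to the original image size
            points.append((int(frame_width * x / W), int(frame_height * y / H)))
        else:
            points.append(None)
    return points
```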

Draw Skeleton

C++:

for (int n = 0; n < nPairs; n++)
{
    // Look up 2 connected body parts
    Point2f partA = points[POSE_PAIRS[n][0]];
    Point2f partB = points[POSE_PAIRS[n][1]];

    // Skip the pair if either keypoint was not detected
    if (partA.x <= 0 || partA.y <= 0 || partB.x <= 0 || partB.y <= 0)
        continue;

    line(frame, partA, partB, Scalar(0, 255, 255), 8);
    circle(frame, partA, 8, Scalar(0, 0, 255), -1);
    circle(frame, partB, 8, Scalar(0, 0, 255), -1);
}

Python:

for pair in POSE_PAIRS:
    partA = pair[0]
    partB = pair[1]

    # Draw a limb only if both keypoints were detected
    if points[partA] and points[partB]:
        cv2.line(frame, points[partA], points[partB], (0, 255, 0), 3)

cv2.imshow("Output-Skeleton", frame)
cv2.waitKey(0)
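The skeleton step only connects pairs whose two keypoints were both detected. The sketch below separates that filtering from the drawing so it can be checked on its own. POSE_PAIRS is the MPI pair list from the original OpenPose post and is not reproduced here. The helper name is hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]


def skeleton_segments(points: Sequence[Optional[Point]],
                      pose_pairs: Sequence[Sequence[int]]) -> List[Tuple[Point, Point]]:
    """Return the (start, end) segments for pairs whose keypoints were both found."""
    segments = []
    for part_a, part_b in pose_pairs:
        if points[part_a] is not None and points[part_b] is not None:
            segments.append((points[part_a], points[part_b]))
    return segments
```

With the keypoints from the previous step, drawing becomes: for a, b in skeleton_segments(points, POSE_PAIRS): cv2.line(frame, a, b, (0, 255, 0), 3).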

We ran the tests on an AWS instance with the following configuration:

- Processor: Intel(R) Xeon(R) CPU E5-2686 v4 @ 2.30GHz
- Number of cores: 18
- GPU: Tesla K80 12GB
- RAM: 56GB

To run the code with CUDA, we only need to add two lines to the C++ and Python code after reading the network:

C++:

net.setPreferableBackend(DNN_BACKEND_CUDA);
net.setPreferableTarget(DNN_TARGET_CUDA);

Python:

net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)
net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)
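If the same script must also run on machines without a CUDA-capable OpenCV build, you can select the backend at runtime. This sketch uses the CUDA backend when cv2.cuda.getCudaEnabledDeviceCount() reports at least one device. Otherwise, it falls back to the default CPU path (DNN_BACKEND_OPENCV with DNN_TARGET_CPU). The function name is hypothetical. The cv2 module is passed in as an argument so the logic can be tested without OpenCV installed.

```python
def configure_backend(net, cv2_module, prefer_cuda=True):
    """Use the CUDA backend when a CUDA device is available, else the CPU backend."""
    dnn = cv2_module.dnn
    if prefer_cuda and cv2_module.cuda.getCudaEnabledDeviceCount() > 0:
        net.setPreferableBackend(dnn.DNN_BACKEND_CUDA)
        net.setPreferableTarget(dnn.DNN_TARGET_CUDA)
        return "cuda"
    net.setPreferableBackend(dnn.DNN_BACKEND_OPENCV)
    net.setPreferableTarget(dnn.DNN_TARGET_CPU)
    return "cpu"
```

In the example script, call configure_backend(net, cv2) right after cv2.dnn.readNetFromCaffe. Passing prefer_cuda=False gives the CPU baseline used in the comparison below.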

This is the only code change needed to run with CUDA acceleration. The results with the GPU and CPU backends are as follows.

In this example, the GPU version runs roughly 10 times faster than the CPU version.

The GPU takes ~0.2 seconds to process a frame, whereas the CPU takes ~2.2 seconds, so the CUDA backend reduces the execution time by about 90% for this example. Try the CUDA optimization with our other posts and let us know in the comments how much time you save.

This video is sped up for easier viewing. In reality, the CPU version renders much more slowly than the GPU version.

With the GPU, we get 7.48 fps, and with the CPU, we get 1.04 fps, a speedup of roughly 7x.
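To reproduce this kind of comparison on your own hardware, time net.forward() separately for each backend. The first forward pass on the CUDA backend usually includes one-time initialization, so the sketch below excludes a few warm-up runs before averaging with time.perf_counter. The function names are hypothetical, and the network's input must already be set with setInput.

```python
import time


def average_forward_time(net, n_runs=10, warmup=1):
    """Average seconds per net.forward() call, excluding warm-up calls."""
    if n_runs < 1:
        raise ValueError("n_runs must be at least 1")
    for _ in range(warmup):
        net.forward()  # not timed: may include backend initialization
    start = time.perf_counter()
    for _ in range(n_runs):
        net.forward()
    return (time.perf_counter() - start) / n_runs


def speedup(cpu_seconds, gpu_seconds):
    """How many times faster the GPU run is than the CPU run."""
    return cpu_seconds / gpu_seconds
```

With the per-frame times reported above, speedup(2.2, 0.2) is about 11. This is in line with the roughly 10x figure; the video frame rates give a smaller ratio of about 7x.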

Summary

In this post, we installed OpenCV with CUDA support. First, we prepared the system by installing the required OS libraries. Then, we installed CUDA and cuDNN. Finally, we built OpenCV from source and explained the CMake options we used. We also made the OpenCV build available to a Python virtual environment.
