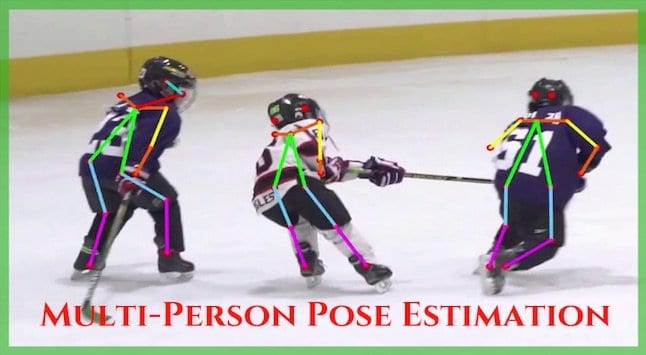

In our previous post, we used the OpenPose model to perform Human Pose Estimation for a single person. In this post, we will discuss how to perform multi-person pose estimation.

When there are multiple people in a photo, pose estimation detects the keypoints of all of them independently. We then need to figure out which set of keypoints belongs to which person.

We will be using the 18-point model trained on the COCO dataset for this article. The keypoints, along with the numbering used by the COCO dataset, are given below:

Nose – 0, Neck – 1, Right Shoulder – 2, Right Elbow – 3, Right Wrist – 4,

Left Shoulder – 5, Left Elbow – 6, Left Wrist – 7, Right Hip – 8,

Right Knee – 9, Right Ankle – 10, Left Hip – 11, Left Knee – 12,

Left Ankle – 13, Right Eye – 14, Left Eye – 15, Right Ear – 16,

Left Ear – 17, Background – 18
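If you want to refer to keypoints by name in your own code, the numbering above can be kept in a small lookup table. This is a minimal sketch; the names COCO_KEYPOINTS and KEYPOINT_INDEX are our own and are not taken from the accompanying code.

```python
# Our own lookup table (not from the accompanying code): index -> name
# for the 18-point COCO model, with the background map at index 18.
COCO_KEYPOINTS = [
    "Nose", "Neck",
    "Right Shoulder", "Right Elbow", "Right Wrist",
    "Left Shoulder", "Left Elbow", "Left Wrist",
    "Right Hip", "Right Knee", "Right Ankle",
    "Left Hip", "Left Knee", "Left Ankle",
    "Right Eye", "Left Eye", "Right Ear", "Left Ear",
    "Background",
]

# Reverse lookup: name -> index
KEYPOINT_INDEX = {name: i for i, name in enumerate(COCO_KEYPOINTS)}

print(KEYPOINT_INDEX["Left Shoulder"])  # 5
```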

1. Network Architecture

The OpenPose architecture is shown below.

The model takes as input a color image of size h x w and produces, as output, an array of matrices consisting of the confidence maps of the keypoints and the Part Affinity heatmaps for each keypoint pair. The network consists of two stages, explained below:

- Stage 0: The first 10 layers of the VGGNet are used to create feature maps for the input image.

- Stage 1: A 2-branch multi-stage CNN is used, where:

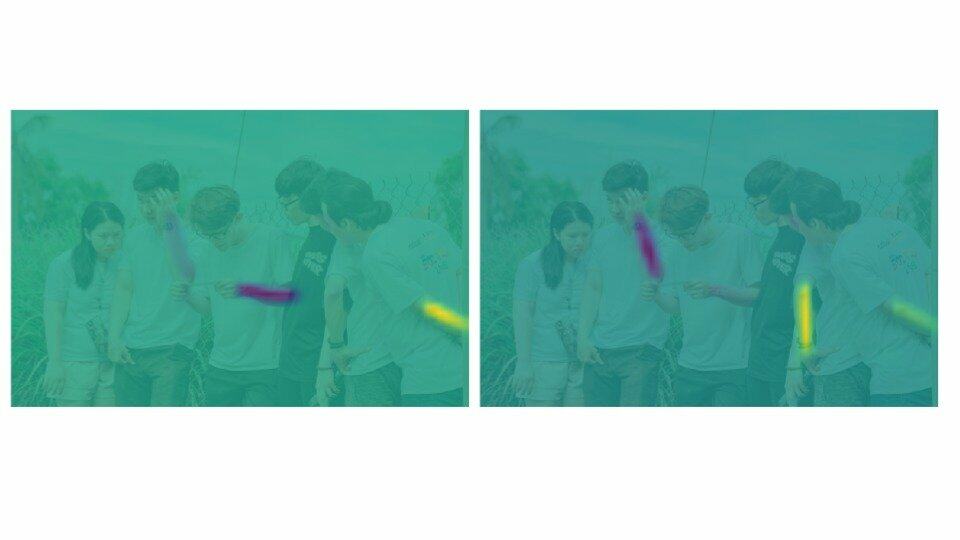

- The first branch predicts a set of 2D Confidence Maps (S) of body part locations (e.g. elbow, knee, etc.). A Confidence Map is a grayscale image that has a high value at locations where the likelihood of a certain body part is high. For example, the Confidence Map for the Left Shoulder is shown in Figure 2 below. It has high values at all locations where there is a left shoulder.

For the 18-point model, the first 19 matrices of the output correspond to the Confidence Maps (one per keypoint plus one for the background).

Figure 2 : Showing confidence maps for Left Shoulder for the given image

- The second branch predicts a set of 2D vector fields (L) of Part Affinity Fields (PAFs), which encode the degree of association between parts (keypoints). The 20th to 57th matrices are the PAF matrices; since each PAF is a 2D vector field, it takes two matrices (its x and y components), giving 38 matrices for 19 keypoint pairs. The figure below shows the part affinity between the Neck and the Left Shoulder. Notice that there is a large affinity between parts belonging to the same person.

Figure 3 : Showing Part Affinity maps for Neck – Left Shoulder pair for the given image

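Based on the channel layout described above (19 confidence maps followed by 38 PAF matrices), the network output can be split by channel index. The sketch below assumes the 4-D (batch, channel, height, width) blob returned by net.forward() in Step 1; the function name split_output and the random array standing in for real output are illustrative only. Which two PAF channels belong to which keypoint pair is defined by the mapIdx table in the code, so the sketch does not try to pair them up.

```python
import numpy as np

N_CONF_MAPS = 19  # 18 keypoint confidence maps + 1 background map
N_PAF_MAPS = 38   # the 20th to 57th matrices: two per keypoint pair

def split_output(output):
    """Hypothetical helper: split a (1, 57, H, W) output into
    confidence maps (19, H, W) and PAF matrices (38, H, W)."""
    if output.ndim != 4 or output.shape[1] != N_CONF_MAPS + N_PAF_MAPS:
        raise ValueError(f"unexpected output shape {output.shape}")
    conf_maps = output[0, :N_CONF_MAPS]
    paf_maps = output[0, N_CONF_MAPS:N_CONF_MAPS + N_PAF_MAPS]
    return conf_maps, paf_maps

# Random stand-in for net.forward(); the spatial size 46 x 62 is arbitrary.
dummy = np.random.rand(1, 57, 46, 62).astype(np.float32)
conf_maps, paf_maps = split_output(dummy)
print(conf_maps.shape, paf_maps.shape)  # (19, 46, 62) (38, 46, 62)
```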

2. Download Model Weights

Use the getModels.sh file provided with the code to download the model weights file. Note that the configuration proto files are already present in the folders.

From the command line, execute the following from the downloaded folder.

sudo chmod a+x getModels.sh

./getModels.sh

Check the folders to ensure that the model binaries (.caffemodel files) have been downloaded. If you are not able to run the above script, then you can download the model by clicking here. Once you download the weight file, put it in the “pose/coco/” folder.

3. Step 1: Generate output from image

3.1. Load Network

Python

import cv2

protoFile = "pose/coco/pose_deploy_linevec.prototxt"

weightsFile = "pose/coco/pose_iter_440000.caffemodel"

net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)

C++

cv::dnn::Net inputNet = cv::dnn::readNetFromCaffe("./pose/coco/pose_deploy_linevec.prototxt","./pose/coco/pose_iter_440000.caffemodel");

3.2. Load Image and create input blob

Python

image1 = cv2.imread("group.jpg")

frameWidth = image1.shape[1]

frameHeight = image1.shape[0]

# Fix the input Height and get the width according to the Aspect Ratio

inHeight = 368

inWidth = int((inHeight/frameHeight)*frameWidth)

inpBlob = cv2.dnn.blobFromImage(image1, 1.0 / 255, (inWidth, inHeight),

(0, 0, 0), swapRB=False, crop=False)

C++

std::string inputFile = "./group.jpg";

if(argc > 1){

inputFile = std::string(argv[1]);

}

cv::Mat input = cv::imread(inputFile, cv::IMREAD_COLOR);

cv::Mat inputBlob = cv::dnn::blobFromImage(input,1.0/255.0,

cv::Size((int)((368*input.cols)/input.rows),368),

cv::Scalar(0,0,0),false,false);
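Both snippets fix the input height at 368 and scale the width by the same factor to preserve the aspect ratio. As a worked example, a 1280x720 frame gives 368 / 720 × 1280 ≈ 654.2, which is truncated to an input width of 654. The helper below (input_size is a hypothetical name) mirrors the Python formula.

```python
def input_size(frame_width, frame_height, in_height=368):
    """Hypothetical helper: (inWidth, inHeight) keeping the frame's aspect ratio."""
    in_width = int((in_height / frame_height) * frame_width)
    return in_width, in_height

print(input_size(1280, 720))  # (654, 368)
```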

3.3. Forward pass through the Net

Python

net.setInput(inpBlob)

output = net.forward()

C++

inputNet.setInput(inputBlob);

cv::Mat netOutputBlob = inputNet.forward();

3.4. Sample Output

We first resize the output to the same size as the input. Then we check the confidence map corresponding to the nose keypoint. You can also use the cv2.addWeighted function to alpha-blend the probMap onto the image.

import matplotlib.pyplot as plt

i = 0

probMap = output[0, i, :, :]

probMap = cv2.resize(probMap, (frameWidth, frameHeight))

plt.imshow(cv2.cvtColor(image1, cv2.COLOR_BGR2RGB))

plt.imshow(probMap, alpha=0.6)
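cv2.addWeighted(src1, alpha, src2, beta, gamma) computes src1 × alpha + src2 × beta + gamma per pixel and saturates the result to the output type. To make that arithmetic explicit, here is a NumPy-only sketch of the same blend. It assumes probMap has already been resized to the frame size with values roughly in [0, 1], and it uses a plain grayscale heat image rather than a color map; the function name blend_prob_map is ours.

```python
import numpy as np

def blend_prob_map(image, prob_map, alpha=0.6):
    """Hypothetical helper: blend a [0, 1] confidence map onto a uint8 BGR image.

    Computes (1 - alpha) * image + alpha * heat, then rounds and clips to
    uint8, i.e. the same weighted sum cv2.addWeighted would apply.
    """
    heat = np.clip(prob_map, 0.0, 1.0) * 255.0
    heat = np.repeat(heat[:, :, None], 3, axis=2)  # gray -> 3 channels
    out = (1.0 - alpha) * image.astype(np.float32) + alpha * heat
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

With OpenCV you would instead convert the map to an 8-bit, 3-channel image (called heat here, a hypothetical name) and call cv2.addWeighted(image1, 0.4, heat, 0.6, 0).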

Figure 4 : Showing the confidence map corresponding to the nose Keypoint.

4. Step 2: Detection of keypoints

As seen from the above figure, the zeroth matrix gives the confidence map for the nose. Similarly, the first matrix corresponds to the neck, and so on. As discussed in our previous post, for a single person it is very easy to find the location of each keypoint just by finding the maximum of the confidence map. In the multi-person scenario we can't do this, because a confidence map can contain several peaks (one for each person in whom that part is visible), and the global maximum would give us only one of them.

NOTE: The explanation and code snippets in this section belong to the getKeypoints() function.

For every keypoint, we first smooth the confidence map with a 3x3 Gaussian blur and then apply a threshold (0.1 in this case) to it.

Python

mapSmooth = cv2.GaussianBlur(probMap,(3,3),0,0)

mapMask = np.uint8(mapSmooth>threshold)

C++

cv::Mat smoothProbMap;

cv::GaussianBlur( probMap, smoothProbMap, cv::Size( 3, 3 ), 0, 0 );

cv::Mat maskedProbMap;

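cv::threshold(smoothProbMap,maskedProbMap,threshold,255,cv::THRESH_BINARY);

// findContours expects an 8-bit single-channel image, so convert the float mask

maskedProbMap.convertTo(maskedProbMap,CV_8U,1);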

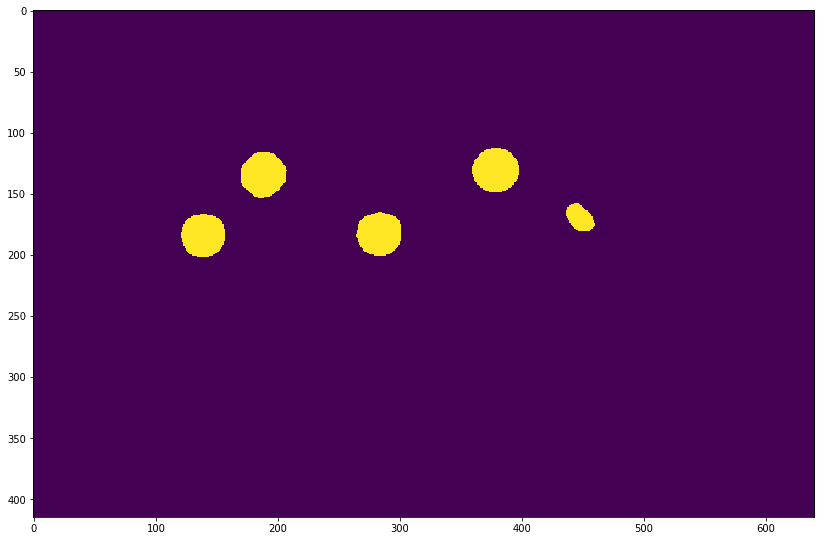

This gives a matrix containing blobs in the regions corresponding to the keypoint, as shown below.

Figure 5 : Confidence Map after applying threshold

In order to find the exact location of the keypoints, we need to find the maximum of each blob. We do the following:

- First find all the contours of the region corresponding to the keypoints.

- Create a mask for this region.

- Extract the probMap for this region by multiplying the probMap with this mask.

- Find the local maximum for this region. This is done for each contour (keypoint region).
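The per-blob maximum search can also be illustrated without OpenCV. The sketch below swaps in a different technique from the snippets that follow: it labels 4-connected regions of the thresholded map with a breadth-first flood fill instead of using cv2.findContours and cv2.fillConvexPoly, and records the peak (x, y, probability) of each region. It is meant to show the idea of "one maximum per blob", not to reproduce the exact output of getKeypoints(); for example, it skips the Gaussian smoothing step and reads the peak value from the map it is given. The function name blob_maxima is ours.

```python
from collections import deque

import numpy as np

def blob_maxima(prob_map, threshold=0.1):
    """Hypothetical helper: return [(x, y, prob), ...], one peak per blob.

    Blobs are 4-connected regions where prob_map > threshold, found by
    breadth-first flood fill (a stand-in for findContours + fillConvexPoly).
    """
    mask = prob_map > threshold
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    peaks = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0, x0] or seen[y0, x0]:
                continue
            # Flood-fill one blob while tracking its maximum.
            best = (x0, y0, float(prob_map[y0, x0]))
            seen[y0, x0] = True
            queue = deque([(y0, x0)])
            while queue:
                y, x = queue.popleft()
                if prob_map[y, x] > best[2]:
                    best = (x, y, float(prob_map[y, x]))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            peaks.append(best)
    return peaks
```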

Python

#find the blobs; taking element [-2] works with both the OpenCV 3.x (3 return values) and 4.x (2 return values) signatures

contours = cv2.findContours(mapMask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]

#for each blob find the maxima

keypoints = []

for cnt in contours:

    blobMask = np.zeros(mapMask.shape)

    blobMask = cv2.fillConvexPoly(blobMask, cnt, 1)

    maskedProbMap = mapSmooth * blobMask

    _, maxVal, _, maxLoc = cv2.minMaxLoc(maskedProbMap)

    keypoints.append(maxLoc + (probMap[maxLoc[1], maxLoc[0]],))

C++

std::vector<std::vector<cv::Point> > contours;

cv::findContours(maskedProbMap,contours,cv::RETR_TREE,cv::CHAIN_APPROX_SIMPLE);

for(int i = 0; i < contours.size();++i){

cv::Mat blobMask = cv::Mat::zeros(smoothProbMap.rows,smoothProbMap.cols,smoothProbMap.type());

cv::fillConvexPoly(blobMask,contours[i],cv::Scalar(1));

double maxVal;

cv::Point maxLoc;

cv::minMaxLoc(smoothProbMap.mul(blobMask),0,&maxVal,0,&maxLoc);

keyPoints.push_back(KeyPoint(maxLoc, probMap.at<float>(maxLoc.y,maxLoc.x)));

}

We save the x, y coordinates and the probability score for each keypoint. We also assign an ID to each keypoint that we have found. These IDs are used later when joining the parts, i.e. forming connections between keypoint pairs.
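One way to assign these IDs is a single running counter across all body parts, so that every detection, whatever its part type, can be looked up by ID in one flat array. The sketch below assumes the per-part keypoints are (x, y, prob) tuples like those produced above; detected_keypoints and keypoints_list match the names used in later snippets, but the function assign_ids itself is hypothetical.

```python
import numpy as np

def assign_ids(keypoints_per_part):
    """Hypothetical helper: give every detected keypoint a unique running ID.

    keypoints_per_part[p] is a list of (x, y, prob) tuples for body part p.
    Returns (detected_keypoints, keypoints_list) where
      detected_keypoints[p] -> list of (x, y, prob, id)
      keypoints_list[id]    -> row [x, y, prob]
    """
    detected_keypoints = []
    rows = []
    keypoint_id = 0
    for part_keypoints in keypoints_per_part:
        with_id = []
        for x, y, prob in part_keypoints:
            with_id.append((x, y, prob, keypoint_id))
            rows.append([x, y, prob])
            keypoint_id += 1
        detected_keypoints.append(with_id)
    keypoints_list = np.array(rows, dtype=np.float64).reshape(-1, 3)
    return detected_keypoints, keypoints_list
```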

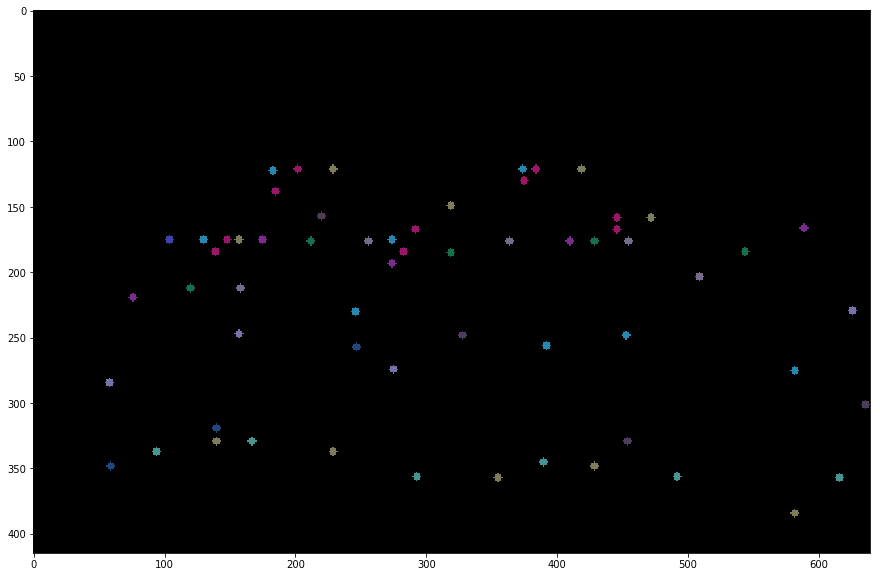

Given below are the detected keypoints for the input image. You can see that it does a nice job even for a partly visible person and for a person facing away from the camera.

Figure 6 : Detected Keypoints overlaid on the input image

The detected keypoints drawn without the input image are shown below.

Figure 7 : Detected points overlaid on a black background.

5. Step 3: Find Valid Pairs

A valid pair is a body part (limb) joining two keypoints that belong to the same person. One simple way of finding valid pairs would be to find the minimum distance between one joint and all possible other joints. For example, in the figure given below, we can find the distance between the marked Nose and all other Necks. The minimum-distance pair should then be the one corresponding to the same person.
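For comparison, the distance-only baseline described above fits in a few lines. This is a sketch of the naive approach (the function name nearest_pairs is ours), not what OpenPose does.

```python
import math

def nearest_pairs(parts_a, parts_b):
    """Hypothetical helper: pair each (x, y) in parts_a with its closest
    (x, y) in parts_b, using Euclidean distance only.

    Returns a list of (i, j) index pairs.
    """
    pairs = []
    if not parts_b:
        return pairs
    for i, (xa, ya) in enumerate(parts_a):
        j = min(range(len(parts_b)),
                key=lambda k: math.hypot(parts_b[k][0] - xa, parts_b[k][1] - ya))
        pairs.append((i, j))
    return pairs
```

For instance, with elbows at (0, 0) and (50, 0) and wrists at (30, 0) and (80, 0), nearest_pairs returns [(0, 0), (1, 0)]: both elbows are matched to the first wrist, which is the kind of failure discussed next.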

Figure 8 : Getting the connection between keypoints by using a simple distance measure.

This approach might not work for all pairs, especially when the image contains many people or when parts are occluded. For example, for the pair Left-Elbow -> Left-Wrist, the wrist of the 3rd person is closer to the elbow of the 2nd person than the 2nd person's own wrist is. Thus, the distance measure will not produce a valid pair.

Figure 9 : Only using distance between keypoints might fail in some cases.

Given below is the Heatmap for the Left-Elbow -> Left-Wrist connection.

Figure 10 : Showing Part Affinity Heatmaps for the Left-Elbow -> Left-Wrist pair.

Thus, in the above case, even though the distance measure wrongly identifies the pair, OpenPose gives the correct result because the PAF is aligned only with the unit vector joining the Elbow and Wrist of the 2nd person. The PAF-based check for a candidate pair works as follows:

- Sample “n” points on the line joining the two points of the pair.

- Check whether the PAF at these points has the same direction as the line joining the two points.

- If the direction matches to a certain extent, the pair is valid.
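The three steps above can be combined into a single scoring function. This is a minimal NumPy sketch under stated assumptions: paf_x and paf_y are the two PAF matrices for one keypoint pair, already resized to image coordinates, and a and b are (x, y) pixel positions inside the map. The default n_samples=10 and the 70% criterion (conf_th=0.7) follow the text below; the per-point threshold paf_th=0.1 is an assumed value. The function name paf_pair_score is ours.

```python
import numpy as np

def paf_pair_score(paf_x, paf_y, a, b, n_samples=10, paf_th=0.1, conf_th=0.7):
    """Hypothetical helper: score a candidate limb a -> b against a PAF.

    paf_x, paf_y: (H, W) x- and y-components of the PAF for this limb type.
    a, b: (x, y) keypoint coordinates in the same pixel grid.
    Returns (avg_score, is_valid). paf_th = 0.1 is an assumed default.
    """
    d = np.subtract(b, a).astype(np.float64)
    norm = np.linalg.norm(d)
    if norm == 0:
        return 0.0, False
    d /= norm  # unit vector from a to b
    xs = np.linspace(a[0], b[0], num=n_samples)
    ys = np.linspace(a[1], b[1], num=n_samples)
    # Sample the PAF at the rounded interpolated positions.
    cols = np.rint(xs).astype(int)
    rows = np.rint(ys).astype(int)
    vecs = np.stack([paf_x[rows, cols], paf_y[rows, cols]], axis=1)  # (n, 2)
    scores = vecs @ d  # dot product with the unit vector at each sample
    avg = float(scores.mean())
    frac_aligned = np.count_nonzero(scores > paf_th) / n_samples
    return avg, bool(frac_aligned > conf_th)
```

A uniform field pointing along +x gives an average score of 1.0 for a horizontal limb drawn left to right, -1.0 for the same limb drawn right to left, and 0.0 for a vertical limb, so only the first is accepted.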

Let’s see how this is done in code. The code snippets belong to the getValidPairs() function in the provided code.

For each body part pair, we do the following :

- Take the keypoints belonging to a pair. Put them in separate lists (candA and candB). Each point from candA will be connected to some point in candB. The figure given below shows the points in candA and candB for the pair Neck -> Right-Shoulder.

Figure 11 : Showing the candidates for matching for the pair Neck -> Nose.

Python

pafA = output[0, mapIdx[k][0], :, :]

pafB = output[0, mapIdx[k][1], :, :]

pafA = cv2.resize(pafA, (frameWidth, frameHeight))

pafB = cv2.resize(pafB, (frameWidth, frameHeight))

# Find the keypoints for the first and second limb

candA = detected_keypoints[POSE_PAIRS[k][0]]

candB = detected_keypoints[POSE_PAIRS[k][1]]

C++

//A->B constitute a limb

cv::Mat pafA = netOutputParts[mapIdx[k].first];

cv::Mat pafB = netOutputParts[mapIdx[k].second];

//Find the keypoints for the first and second limb

const std::vector<KeyPoint>& candA = detectedKeypoints[posePairs[k].first];

const std::vector<KeyPoint>& candB = detectedKeypoints[posePairs[k].second];

- Find the unit vector joining the two points under consideration. This gives the direction of the line joining them.

Python

d_ij = np.subtract(candB[j][:2], candA[i][:2])

norm = np.linalg.norm(d_ij)

if norm:

    d_ij = d_ij / norm

C++

std::pair<float,float> distance(candB[j].point.x - candA[i].point.x,candB[j].point.y - candA[i].point.y);

float norm = std::sqrt(distance.first*distance.first + distance.second*distance.second);

if(!norm){

continue;

}

distance.first /= norm;

distance.second /= norm;

- Create an array of 10 interpolated points on the line joining the two points.

Python

# Find p(u)

interp_coord = list(zip(np.linspace(candA[i][0], candB[j][0], num=n_interp_samples),

                        np.linspace(candA[i][1], candB[j][1], num=n_interp_samples)))

# Find L(p(u)); the loop variable is m so that it does not overwrite k, the index of the current pair

paf_interp = []

for m in range(len(interp_coord)):

    paf_interp.append([pafA[int(round(interp_coord[m][1])), int(round(interp_coord[m][0]))],

                       pafB[int(round(interp_coord[m][1])), int(round(interp_coord[m][0]))]])

C++

//Find p(u)

std::vector<cv::Point> interpCoords;

populateInterpPoints(candA[i].point,candB[j].point,nInterpSamples,interpCoords);

//Find L(p(u))

std::vector<std::pair<float,float>> pafInterp;

for(int l = 0; l < interpCoords.size();++l){

pafInterp.push_back(

std::pair<float,float>(

pafA.at<float>(interpCoords[l].y,interpCoords[l].x),

pafB.at<float>(interpCoords[l].y,interpCoords[l].x)

));

}

- Take the dot product between the PAF at these points and the unit vector d_ij.

Python

# Find E

paf_scores = np.dot(paf_interp, d_ij)

avg_paf_score = sum(paf_scores)/len(paf_scores)

C++

std::vector<float> pafScores;

float sumOfPafScores = 0;

int numOverTh = 0;

for(int l = 0; l< pafInterp.size();++l){

float score = pafInterp[l].first*distance.first + pafInterp[l].second*distance.second;

sumOfPafScores += score;

if(score > pafScoreTh){

++numOverTh;

}

pafScores.push_back(score);

}

float avgPafScore = sumOfPafScores/((float)pafInterp.size());

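- Mark the pair as valid if more than 70% of the interpolated points have a PAF score above the threshold (paf_score_th in the code). Among the valid candidates in candB, keep the one with the highest average PAF score.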

Python

# Check if the connection is valid

# If the fraction of interpolated vectors aligned with PAF is higher than threshold -> Valid Pair

if (len(np.where(paf_scores > paf_score_th)[0]) / n_interp_samples) > conf_th:

    if avg_paf_score > maxScore:

        max_j = j

        maxScore = avg_paf_score

C++

if(((float)numOverTh)/((float)nInterpSamples) > confTh){

if(avgPafScore > maxScore){

maxJ = j;

maxScore = avgPafScore;

found = true;

}

}
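Putting the per-candidate check into the loop over candA and candB gives the overall shape of the matching step. In this sketch (match_limb is a hypothetical name), keypoints are (x, y, prob, id) tuples and score_fn is any function returning (avg_score, is_valid), for example a wrapper around a PAF scoring routine. As in the snippets above, each point in candA keeps only its best-scoring valid partner; nothing in this sketch prevents two points in candA from choosing the same point in candB.

```python
def match_limb(cand_a, cand_b, score_fn):
    """Hypothetical helper: for each keypoint in cand_a, keep the best valid
    partner in cand_b.

    cand_a, cand_b: lists of (x, y, prob, id) tuples.
    score_fn(a_xy, b_xy) -> (avg_score, is_valid).
    Returns rows [id_a, id_b, score], mirroring max_j / maxScore above.
    """
    pairs = []
    for ka in cand_a:
        best_id, best_score = None, -1.0
        for kb in cand_b:
            score, valid = score_fn(ka[:2], kb[:2])
            if valid and score > best_score:
                best_id, best_score = kb[3], score
        if best_id is not None:
            pairs.append([ka[3], best_id, best_score])
    return pairs
```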

6. Step 4: Assemble Person-wise Keypoints

Now that we have joined all the keypoints into pairs, we can assemble the pairs that share the same part detection candidates into full-body poses of multiple people.

Let us see how this is done in code. The code snippets in this section belong to the getPersonwiseKeypoints() function in the provided code.

- We first create an empty list to store the keypoints of each person. Then we go over each pair and check whether partA of the pair is already present in any of the person lists. If it is, the keypoint belongs to that person, and partB of this pair must also belong to the same person. Thus, we add partB of this pair to the list where partA was found.

Python

found = 0

person_idx = -1

for j in range(len(personwiseKeypoints)):

    if personwiseKeypoints[j][indexA] == partAs[i]:

        person_idx = j

        found = 1

        break

if found:

    personwiseKeypoints[person_idx][indexB] = partBs[i]

C++

for(int j = 0; !found && j < personwiseKeypoints.size();++j){

if(indexA < personwiseKeypoints[j].size() &&

personwiseKeypoints[j][indexA] == localValidPairs[i].aId){

personIdx = j;

found = true;

}

}/* j */

if(found){

personwiseKeypoints[personIdx].at(indexB) = localValidPairs[i].bId;

}

- If partA is not present in any of the lists, the pair belongs to a new person, so a new list is created (only the first 17 pairs, k < 17, are allowed to start a new person).

Python

elif not found and k < 17:

    row = -1 * np.ones(19)

    row[indexA] = partAs[i]

    row[indexB] = partBs[i]

    personwiseKeypoints = np.vstack([personwiseKeypoints, row])

C++

else if(k < 17){

std::vector<int> lpkp(std::vector<int>(18,-1));

lpkp.at(indexA) = localValidPairs[i].aId;

lpkp.at(indexB) = localValidPairs[i].bId;

personwiseKeypoints.push_back(lpkp);

}
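The two cases above (extend an existing person, or start a new one) can be sketched as a single pure-Python function. The data layout is an assumption made for illustration: valid_pairs[k] holds (id_a, id_b) tuples for limb type k, pose_pairs[k] gives the two keypoint indices of that limb, and each person is a list of 18 keypoint IDs with -1 for missing parts. The function name assemble_people and the parameter n_seed_pairs (which plays the role of the k < 17 check) are ours.

```python
def assemble_people(valid_pairs, pose_pairs, n_parts=18, n_seed_pairs=17):
    """Hypothetical helper: group limb connections into people.

    valid_pairs[k]: list of (id_a, id_b) for limb type k.
    pose_pairs[k]: (part_a_index, part_b_index) for limb type k.
    Returns a list of people, each a list of n_parts keypoint IDs (-1 = missing).
    """
    people = []
    for k, pairs in enumerate(valid_pairs):
        index_a, index_b = pose_pairs[k]
        for id_a, id_b in pairs:
            owner = next((p for p in people if p[index_a] == id_a), None)
            if owner is not None:
                owner[index_b] = id_b  # extend an existing person
            elif k < n_seed_pairs:
                person = [-1] * n_parts  # start a new person
                person[index_a] = id_a
                person[index_b] = id_b
                people.append(person)
    return people
```

Because a pair is only attached to a person through partA, the order of pose_pairs matters: a limb can join an existing person only if an earlier pair has already placed its partA keypoint in that person.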

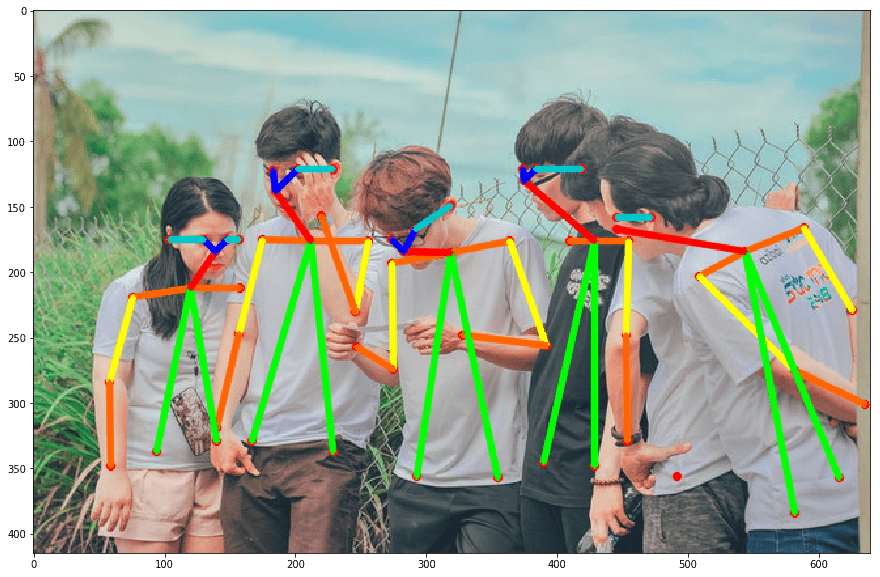

7. Results

We go over each person and draw the skeleton on the input image.

Python

frameClone = image1.copy()

for i in range(17):

    for n in range(len(personwiseKeypoints)):

        index = personwiseKeypoints[n][np.array(POSE_PAIRS[i])]

        if -1 in index:

            continue

        B = np.int32(keypoints_list[index.astype(int), 0])

        A = np.int32(keypoints_list[index.astype(int), 1])

        cv2.line(frameClone, (int(B[0]), int(A[0])), (int(B[1]), int(A[1])), colors[i], 2, cv2.LINE_AA)

cv2.imshow("Detected Pose" , frameClone)

cv2.waitKey(0)

C++

for(int i = 0; i< nPoints-1;++i){

for(int n = 0; n < personwiseKeypoints.size();++n){

const std::pair<int,int>& posePair = posePairs[i];

int indexA = personwiseKeypoints[n][posePair.first];

int indexB = personwiseKeypoints[n][posePair.second];

if(indexA == -1 || indexB == -1){

continue;

}

const KeyPoint& kpA = keyPointsList[indexA];

const KeyPoint& kpB = keyPointsList[indexB];

cv::line(outputFrame,kpA.point,kpB.point,colors[i],3,cv::LINE_AA);

}

}

cv::imshow("Detected Pose",outputFrame);

cv::waitKey(0);
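The drawing loop boils down to turning each person's keypoint IDs into line segments. The sketch below separates that step from the OpenCV drawing call; it assumes people is a list of per-person keypoint-ID lists (-1 for missing) and keypoints_list holds (x, y, prob) rows indexed by ID. The function name skeleton_segments is ours.

```python
def skeleton_segments(people, keypoints_list, pose_pairs):
    """Hypothetical helper: return ((x1, y1), (x2, y2)) segments to draw
    for every person and every limb in pose_pairs."""
    segments = []
    for part_a, part_b in pose_pairs:
        for person in people:
            id_a, id_b = person[part_a], person[part_b]
            if id_a == -1 or id_b == -1:
                continue  # this limb was not detected for this person
            xa, ya = keypoints_list[id_a][:2]
            xb, yb = keypoints_list[id_b][:2]
            segments.append(((int(xa), int(ya)), (int(xb), int(yb))))
    return segments
```

Each returned segment can then be passed to cv2.line(frame, p1, p2, color, 2, cv2.LINE_AA).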

The figure below shows the skeletons of all the detected persons!

Do check out the code provided with the post!

I would like to thank my teammate Chandrashekara Keralapura for writing the C++ version of the code.
