DALL·E 2 is a text-to-image model developed by OpenAI that has drawn wide attention in the field of image generation. It lets users generate detailed, high-quality images from plain natural-language descriptions.

DALL·E 2 builds on OpenAI's earlier work. The original DALL·E was a 12-billion-parameter version of GPT-3 trained to generate images from text, while DALL·E 2 combines CLIP with diffusion models. It has been trained on a very large dataset of image-caption pairs, which lets it generate realistic and diverse images.

In this article, we will look at DALL·E 2, its capabilities, and its potential applications in various industries. We will explore the underlying technology and how to use it to generate images.

- What is DALL·E?

- DALL·E 2, How It Got Here?

- What Can It Do?

- How To Use DALL·E 2? – Getting Hands Dirty

- How Does It Work?

- API

- Limitations/Content Policy

- DALL·E Mini (renamed as Craiyon)

- Conclusion

What is DALL·E?

DALL·E is a generative deep learning model that takes in a text prompt and generates a novel image that depicts the text visually. It was introduced in January 2021 by OpenAI.

The original DALL·E is an autoregressive transformer rather than a diffusion model; diffusion was introduced with DALL·E 2. Both generations can fuse multiple visual concepts together to create novel images that have not been seen before, like:

“a bowl of soup that looks like a monster, knitted out of wool”

OR

“panda wearing a knight armor riding a horse in the snow with fireworks in the sky”

OR

“panda mad scientist mixing sparkling chemicals, digital art”

Because it generates a desired image from a text prompt rather than just any random image, it is classified as a controlled (conditional) generative model.

DALL·E 2, How It Got Here?

DALL·E 2 is the second generation of OpenAI's text-to-image model, released in April 2022 as the successor to the original DALL·E, and it generates images with a diffusion model.

Researchers at OpenAI improved the model's output quality: it creates more realistic images at 1024×1024 pixels, four times the 256×256 side length of the original. The number of parameters has also been reduced, from 12 billion in DALL·E to 3.5 billion in DALL·E 2.

We will get into the details of the DALL·E 2 architecture in a later section. Let’s first see what DALL·E 2 is capable of.

What Can It Do?

The best way to appreciate the power of this model is to try it firsthand and let your imagination run free in the prompts you give it.

Here are a few prompts we tried, and the results speak for themselves.

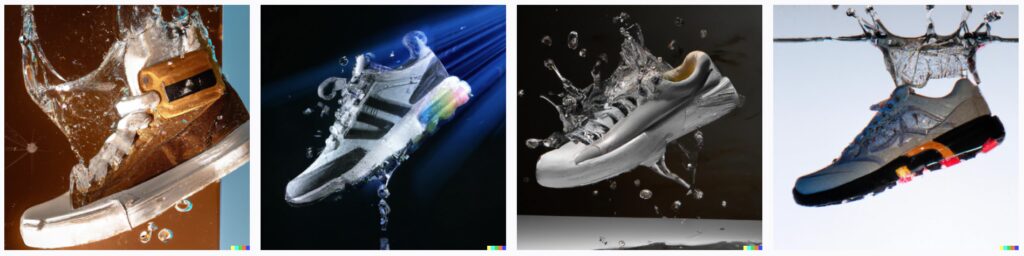

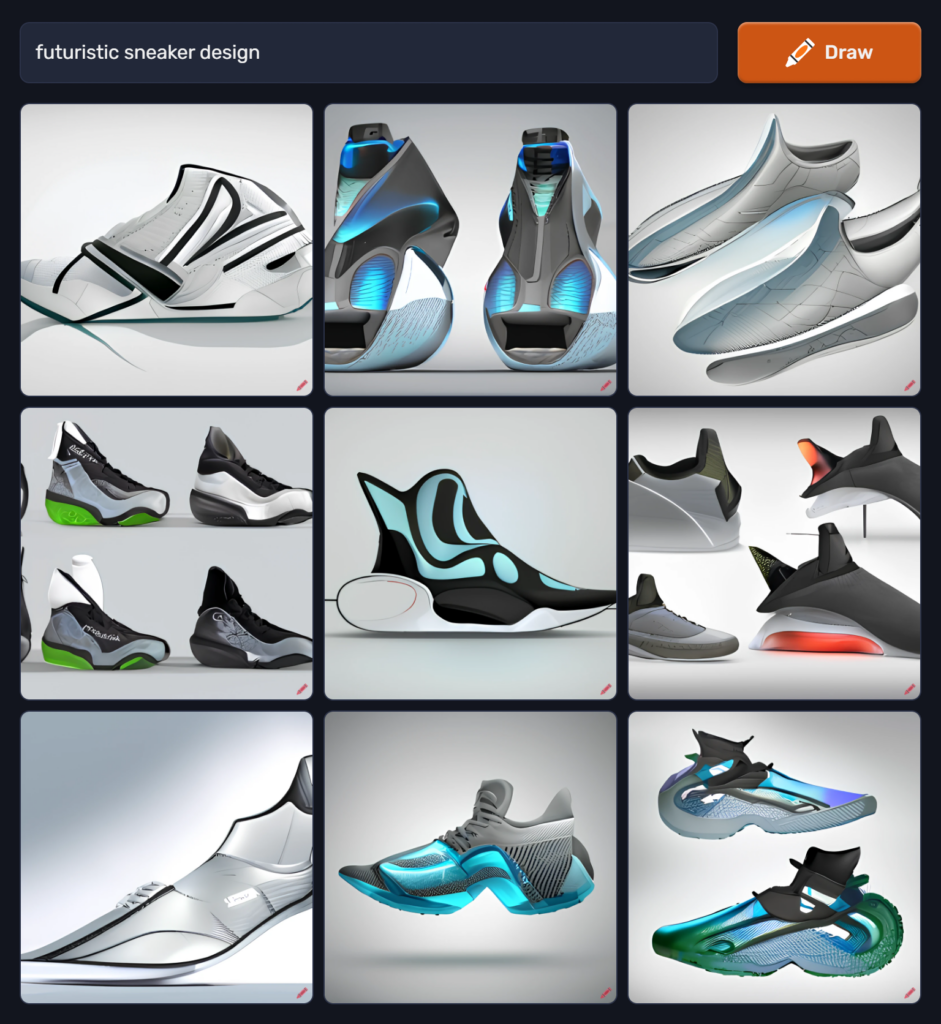

Product / Commercials

Prompt: a realistic photographic close up of a floating sneaker with liquid splashing in zero gravity with light rays refracting through the water

Pop Culture

Prompt: darth vader in cyberpunk look taking a bubble bath

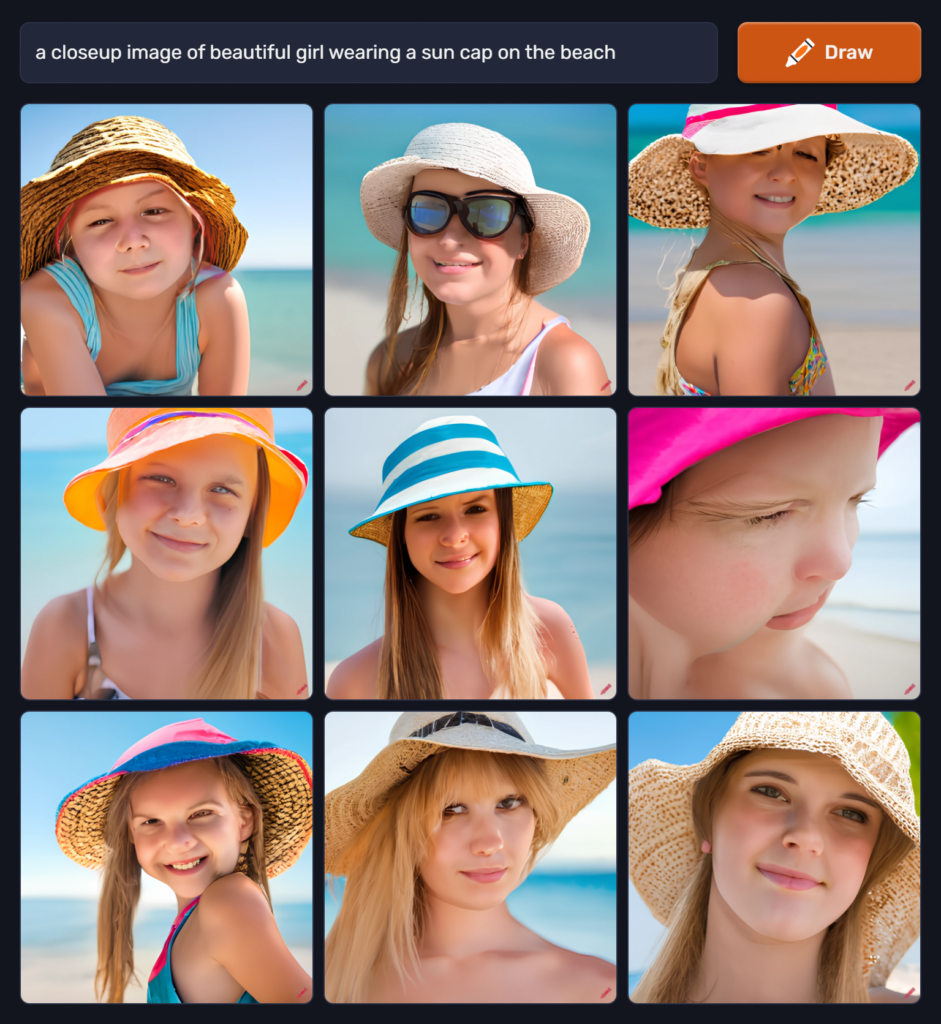

Stock Photography

Prompt: studio photography set of high detail irregular marble stones with gold lines stacked in impossible balance, perfect composition, cinematic light photo studio, beige color scheme, indirect lighting, 8k, elegant and luxury style

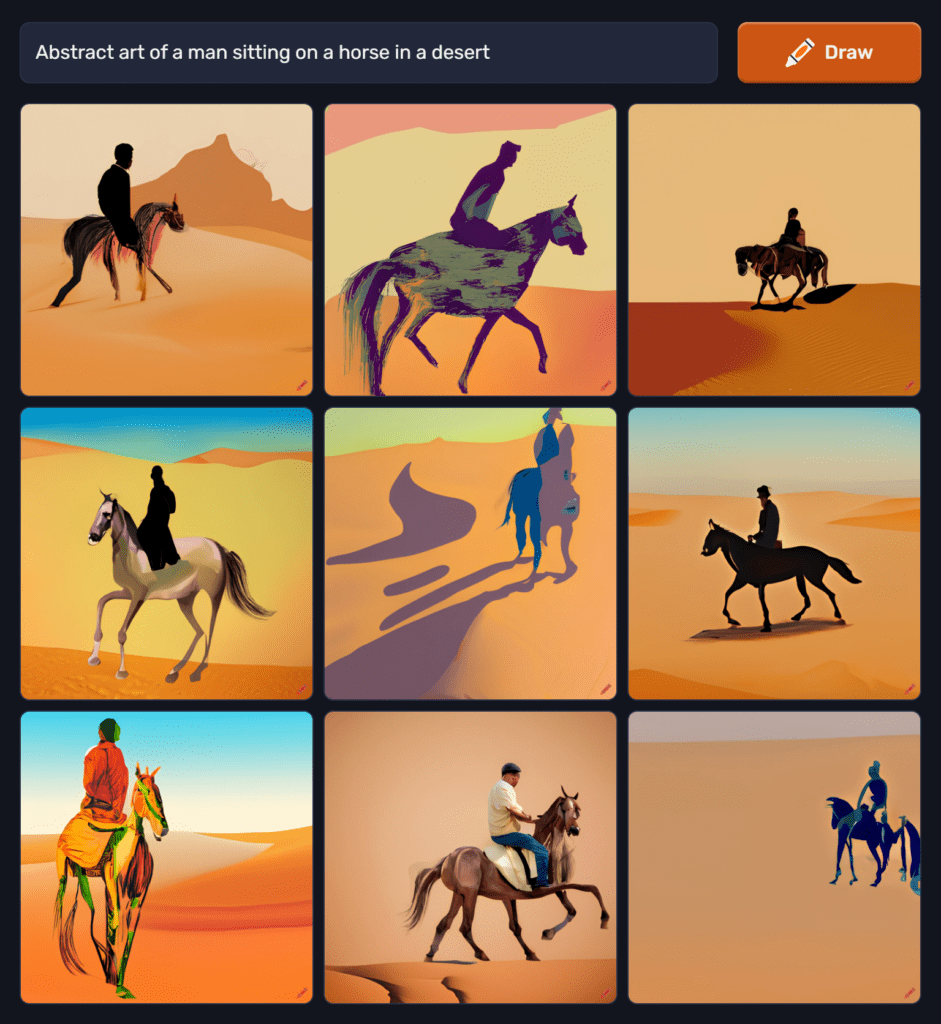

Digital Art

Prompt: A digital Illustration of the an anime boy character with backpack, 4k, detailed, fantasy vivid colors

Art Styles

Prompt: Renaissance painting of three lovers lamenting a broken glass sphere

The possibilities are endless.

How To Use DALL·E 2? – Getting Hands Dirty

Let’s dive a bit deeper and get familiar with DALL·E 2. We will see how to use it to generate the image we want, and how to manipulate and fine-tune the generated image.

How to Get Access to DALL·E 2?

In September 2022, OpenAI opened access to DALL·E 2, so joining a waitlist is no longer required. You simply need to sign up, and you are good to go.

Understand the UI

The UI of the website is simple and intuitive, with just a text box for your prompt and a “Generate” button.

Once you enter the text prompt and click the “Generate” button, four images will be generated with different visual variations.

Generating a Good Prompt

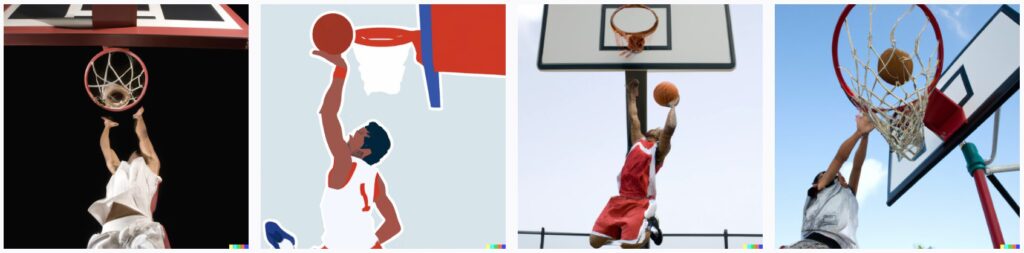

The quality of the image produced by the DALL·E 2 image generator depends directly on how well you phrase your request. Here are two examples.

Prompt: a basketball player dunking

Prompt: An expressive photograph of a basketball player dunking in hoop, depicted as an explosion of a nebula

To get what you want, be as detailed as possible in your prompts. This gives the model more information to work with. A well-detailed prompt is needed if we want to merge multiple visual concepts together.
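
One way to keep prompts consistently detailed is to assemble them from parts. The following is a minimal sketch, not an official tool: `build_prompt` and its parameters (`medium`, `details`, `style_tags`) are hypothetical names chosen for illustration. It reproduces the two basketball prompts above.

```python
def build_prompt(subject, medium=None, details=(), style_tags=()):
    """Join a subject with an optional medium, details, and style tags."""
    # Lead with the medium (e.g. "An expressive photograph of ...") when given.
    parts = [f"{medium} of {subject}" if medium else subject]
    parts.extend(details)      # extra visual concepts to merge in
    parts.extend(style_tags)   # e.g. "digital art", "4k"
    # Skip empty pieces so stray commas do not end up in the prompt.
    return ", ".join(p.strip() for p in parts if p and p.strip())


basic = build_prompt("a basketball player dunking")
detailed = build_prompt(
    "a basketball player dunking in hoop",
    medium="An expressive photograph",
    details=["depicted as an explosion of a nebula"],
)
print(basic)
print(detailed)
```

Keeping the subject, medium, and style as separate pieces makes it easy to vary one of them while holding the others fixed when comparing results.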

Inpainting

Once you have your generated art, suppose you want to edit some parts of the image without changing the overall visual composition and design. You can do that with the inpainting feature added to DALL·E 2.

For editing, you have to mask the area of the image you want to change and give it a prompt to generate a replacement image to be put in that place. All the previous rules of crafting a prompt still apply.
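
The role of the mask can be illustrated with a toy example. This sketch is only an illustration of the masking idea, not how DALL·E 2 blends pixels internally: the "images" are small grids of labels, `composite` is a hypothetical helper, and the `generated` grid stands in for model output.

```python
def composite(original, generated, mask):
    """Take generated pixels where the mask is True, original pixels elsewhere."""
    return [
        [gen if m else orig for orig, gen, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# 3x3 toy "image": each pixel is a label instead of an RGB value.
original = [
    ["sky", "sky", "sky"],
    ["sky", "cat", "sky"],
    ["grass", "grass", "grass"],
]
# Stand-in for model output for the prompt "a dog"; only masked pixels are used.
generated = [["dog"] * 3 for _ in range(3)]
# True marks the area the user erased for replacement.
mask = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]

edited = composite(original, generated, mask)
print(edited[1])  # ['sky', 'dog', 'sky']
```

Everything outside the mask is untouched, which is why the overall composition of the image survives the edit.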

Outpainting

Outpainting is another feature that OpenAI added later to its image editing tool. It is conceptually similar to inpainting: you specify an area, and the model generates new content and merges it with the existing image. The difference is that it works outside the existing image instead of editing a part inside it.

Think of it as reversing the crop process and extending the image further by adding new information.
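
The "reverse crop" idea can be sketched as placing the image on a larger canvas and marking the new border as the region to fill. This is a toy illustration under stated assumptions: `pad_canvas` is a hypothetical helper, pixels are plain numbers, and the actual filling would be done by the model.

```python
def pad_canvas(image, pad, fill=None):
    """Return (canvas, mask): the image centred on a larger canvas,
    and a mask that is True wherever new content is needed."""
    height, width = len(image), len(image[0])
    new_h, new_w = height + 2 * pad, width + 2 * pad
    canvas = [[fill] * new_w for _ in range(new_h)]
    mask = [[True] * new_w for _ in range(new_h)]
    for y in range(height):
        for x in range(width):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = False  # existing pixels are kept as-is
    return canvas, mask


image = [[1, 2], [3, 4]]
canvas, mask = pad_canvas(image, pad=1)
to_fill = sum(cell for row in mask for cell in row)
print(len(canvas), len(canvas[0]), to_fill)  # 4 4 12
```

Seen this way, outpainting is inpainting with the mask on the outside: the original pixels give the model the context it needs to extend the scene.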

Generate Image Variations

Multiple variations of existing images can also be generated.

The process of generating variation is even simpler than using inpainting. One has to simply upload the image and click ‘Generate Variations’.

How Does It Work?

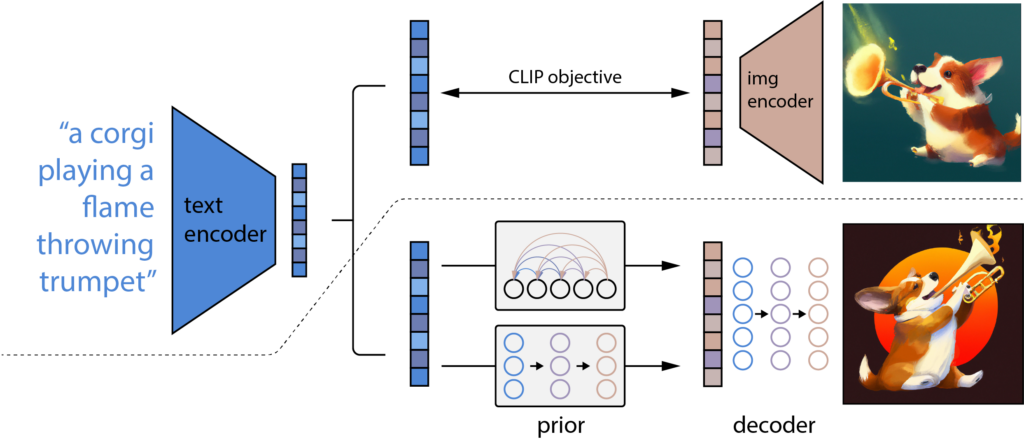

To understand how DALL·E 2 works, let’s look at its architecture and the concepts that make it possible. The architecture consists of three different models working together to achieve the desired result, namely:

- CLIP

- A Prior Neural Network

- A Decoder Neural Network

First, CLIP is a model that takes image-caption pairs and creates text/image embeddings.

Second, the Prior model takes a caption or a CLIP text embedding and generates CLIP image embeddings.

Third, the Decoder diffusion model (unCLIP) takes a CLIP image embedding and generates images.

The decoder is called unCLIP because it does the opposite process of the original CLIP model. Instead of generating an embedding from an image, it creates an original image from an embedding.
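
The flow between the three stages can be sketched as a chain of functions. The code below only mirrors the data flow described above: every function is a toy stand-in (a hash, a simple transform, a tiny grid), the names and `EMBED_DIM` are assumptions, and none of it resembles the real networks.

```python
import hashlib

EMBED_DIM = 8  # toy size; real CLIP embeddings are much larger


def clip_text_encoder(caption):
    """Stand-in for CLIP's text encoder: caption -> fixed-length vector."""
    digest = hashlib.sha256(caption.encode("utf-8")).digest()
    return [byte / 255 for byte in digest[:EMBED_DIM]]


def prior(text_embedding):
    """Stand-in for the prior: CLIP text embedding -> CLIP image embedding."""
    return [1.0 - value for value in text_embedding]


def decoder(image_embedding, size=4):
    """Stand-in for the diffusion decoder: image embedding -> pixel grid."""
    dim = len(image_embedding)
    return [
        [image_embedding[(x + y) % dim] for x in range(size)]
        for y in range(size)
    ]


def generate(caption):
    text_emb = clip_text_encoder(caption)   # stage 1: embed the caption
    image_emb = prior(text_emb)             # stage 2: predict an image embedding
    return decoder(image_emb)               # stage 3: render an image from it


image = generate("a bowl of soup that looks like a monster, knitted out of wool")
print(len(image), len(image[0]))  # 4 4
```

The point of the sketch is the interface between stages: the decoder never sees the caption text directly here, only the image embedding produced by the prior.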

The CLIP embedding encodes the semantic features of the image, such as people, animals, objects, style, colors, and background. This enables DALL·E 2 to generate a novel image that retains these characteristics while changing the non-essential features.
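
A common way to compare embeddings is cosine similarity: images that share semantic content should have embeddings that point in similar directions. The sketch below uses made-up three-dimensional vectors purely for illustration; they are not real CLIP outputs, and the variable names are assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings, for illustration only.
panda_photo = [0.9, 0.1, 0.2]
panda_variation = [0.8, 0.2, 0.25]   # same subject, small changes
sneaker_photo = [0.1, 0.9, 0.3]      # unrelated subject

sim_variation = cosine_similarity(panda_photo, panda_variation)
sim_unrelated = cosine_similarity(panda_photo, sneaker_photo)
print(sim_variation > sim_unrelated)  # True
```

A variation that keeps the subject and style stays close to the original in embedding space, while an unrelated image lands far away.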

API

Apart from the website, OpenAI also announced an API that can be used to interact with the DALL·E 2 model.

The API provides three methods:

- Create an image with a text prompt.

- Create edits to an existing image based on a new text prompt.

- Create variations of an existing image.
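
The three operations differ mainly in the inputs they need. The helper below is a hypothetical sketch that collects and checks those inputs as plain data; `image_request` is not part of any OpenAI library, and the size and count limits reflect our understanding of the DALL·E 2 API at the time of writing, so check the API reference before relying on them.

```python
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}  # assumed DALL·E 2 sizes


def image_request(operation, prompt=None, image_path=None, mask_path=None,
                  n=1, size="1024x1024"):
    """Build the parameters for one of the three image operations."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if not 1 <= n <= 10:  # assumed per-request limit
        raise ValueError("n must be between 1 and 10")

    params = {"n": n, "size": size}
    if operation == "generate":
        if not prompt:
            raise ValueError("generate needs a prompt")
        params["prompt"] = prompt
    elif operation == "edit":
        if not (prompt and image_path):
            raise ValueError("edit needs a prompt and an image")
        # The real API expects uploaded files; paths are used here for brevity.
        params.update(prompt=prompt, image=image_path)
        if mask_path:
            params["mask"] = mask_path
    elif operation == "variation":
        if not image_path:
            raise ValueError("variation needs an image")
        params["image"] = image_path
    else:
        raise ValueError(f"unknown operation: {operation}")
    return params


print(image_request("generate", prompt="a stained glass window depicting a robot"))
```

Only generation and edits take a prompt; variations are driven by the uploaded image alone.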

OpenAI also provides a Python library that makes it easier to integrate the capabilities of the DALL·E 2 model into your own app. Here’s a simple example of calling the API and generating an image using the Python library.

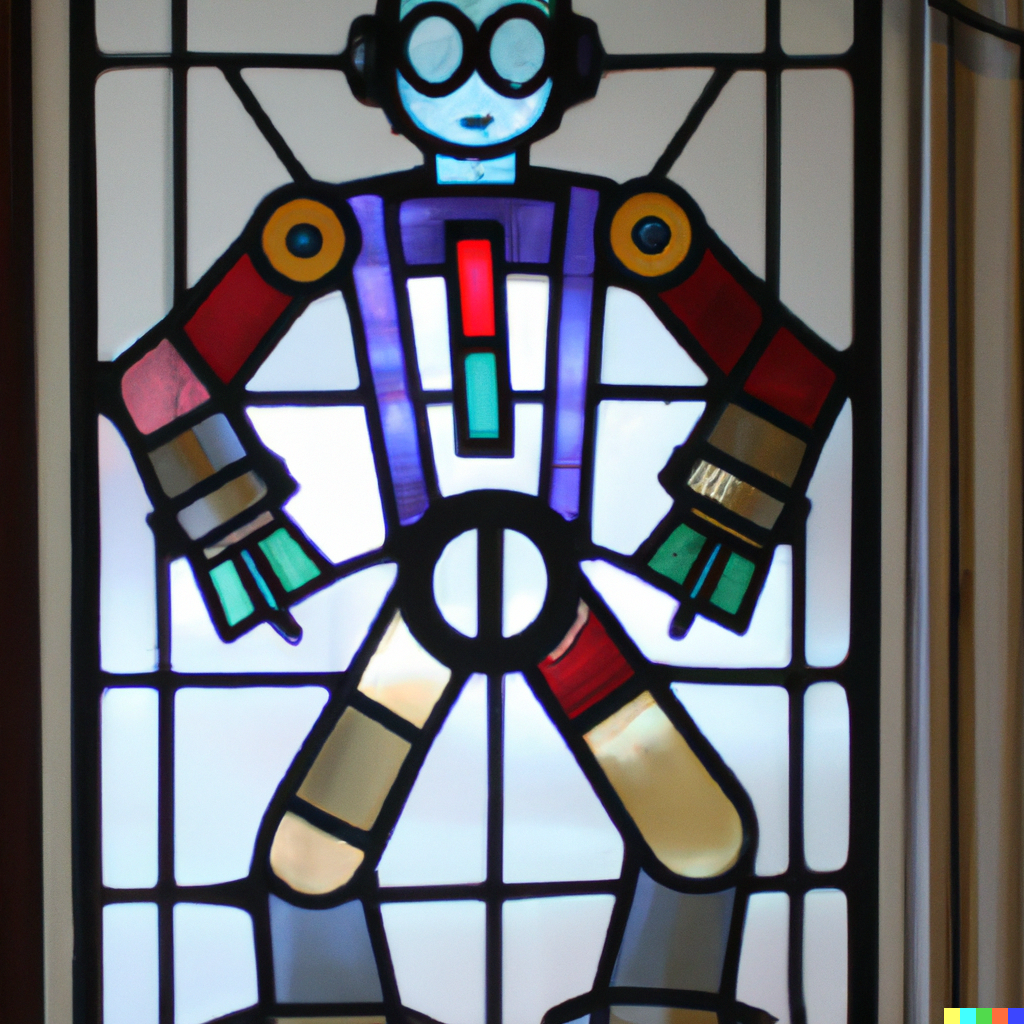

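```python
# Uses the legacy (pre-1.0) interface of the openai Python library.
# Assumes the OPENAI_API_KEY environment variable is set.
import openai

# Request one 1024x1024 image for the given text prompt.
response = openai.Image.create(
    prompt="a stained glass window depicting a robot",
    n=1,
    size="1024x1024",
)

# The response holds a URL for each generated image.
image_url = response['data'][0]['url']
```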


At the time of writing, the API is still in beta, so the model is subject to change, and you are limited to generating 50 images per minute.
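
If your app generates images in bulk, it can help to throttle requests on the client side so you stay under that limit. Below is a minimal sliding-window sketch, assuming one image per request; `RateLimiter` is a hypothetical helper, not part of the OpenAI library, and the injectable `clock` and `sleep` arguments exist only to make it easy to test.

```python
import time
from collections import deque


class RateLimiter:
    """Allow at most max_calls within any rolling window of `period` seconds."""

    def __init__(self, max_calls=50, period=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.sleep = sleep
        self.calls = deque()  # timestamps of recent calls, oldest first

    def wait(self):
        """Block until another call is allowed, then record it."""
        while True:
            now = self.clock()
            # Forget calls that have left the rolling window.
            while self.calls and now - self.calls[0] >= self.period:
                self.calls.popleft()
            if len(self.calls) < self.max_calls:
                self.calls.append(now)
                return
            # Sleep until the oldest call in the window expires.
            self.sleep(self.period - (now - self.calls[0]))


limiter = RateLimiter(max_calls=50, period=60.0)
limiter.wait()  # call this before each image request
```

Calling `limiter.wait()` before every request returns immediately for the first 50 calls in a minute and pauses only when the window is full.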

Limitations/Content Policy

As with any new technology, there are potential downsides that need to be addressed.

With DALL·E’s ability to create virtually any image one can imagine, there is a risk that people’s imagination might wander into some sinister domains. Keeping that in mind, OpenAI has restricted the use of its image generation model.

The guidelines put in place include not creating or sharing harmful, violent, sexually explicit, illegal, or misleading content and respecting the rights of others by not uploading images of people without their consent or images that the user does not have appropriate usage rights for.

DALL·E Mini (renamed as Craiyon)

Now you have seen what DALL·E can do. The problem is that DALL·E is a closed-source project: one can only access it through the website and API provided by OpenAI. Although the research paper is available, replicating the original model would require a large amount of resources to train and run, which is beyond the capacity of an average user.

What if someone wants to deploy DALL·E on their own system using fewer resources?

DALL·E Mini is another AI art generation tool, created to fulfill just that use case. It was released by the Craiyon team led by Boris Dayma. It is an open-source project that aims to replicate the results of the original DALL·E model while using only a fraction of its resources.

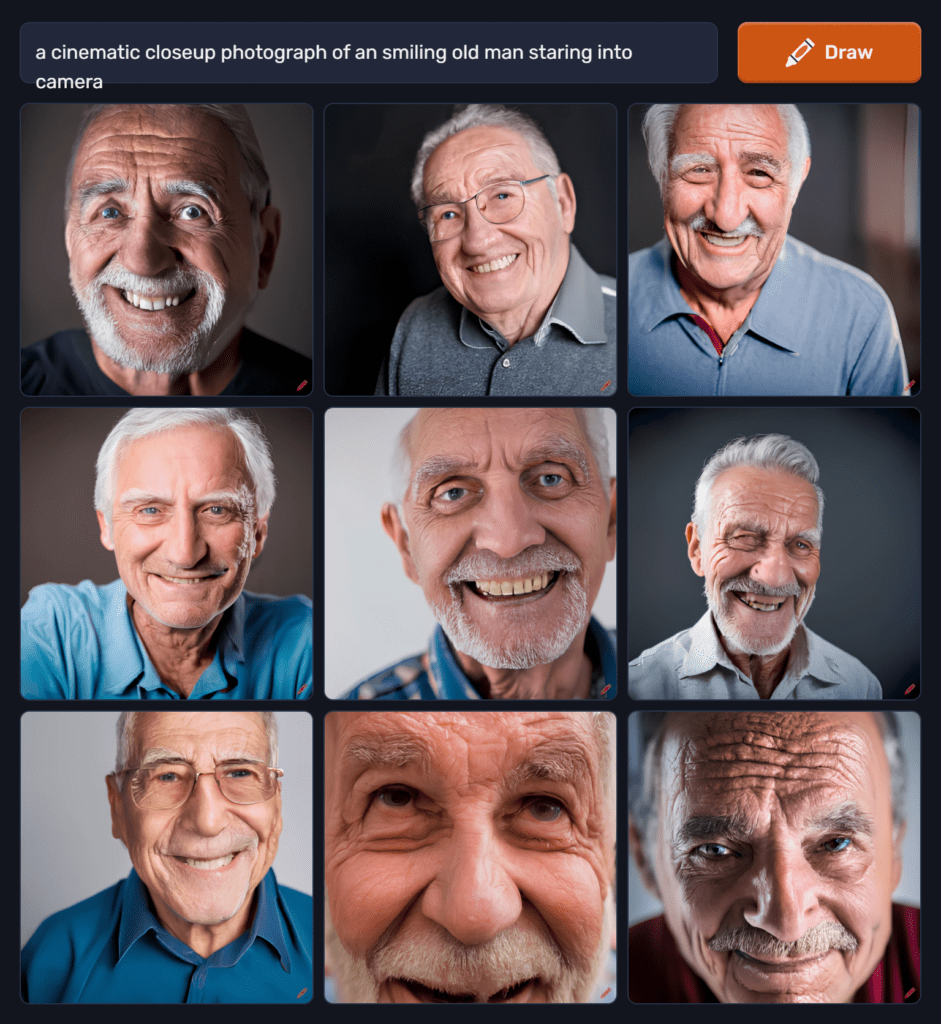

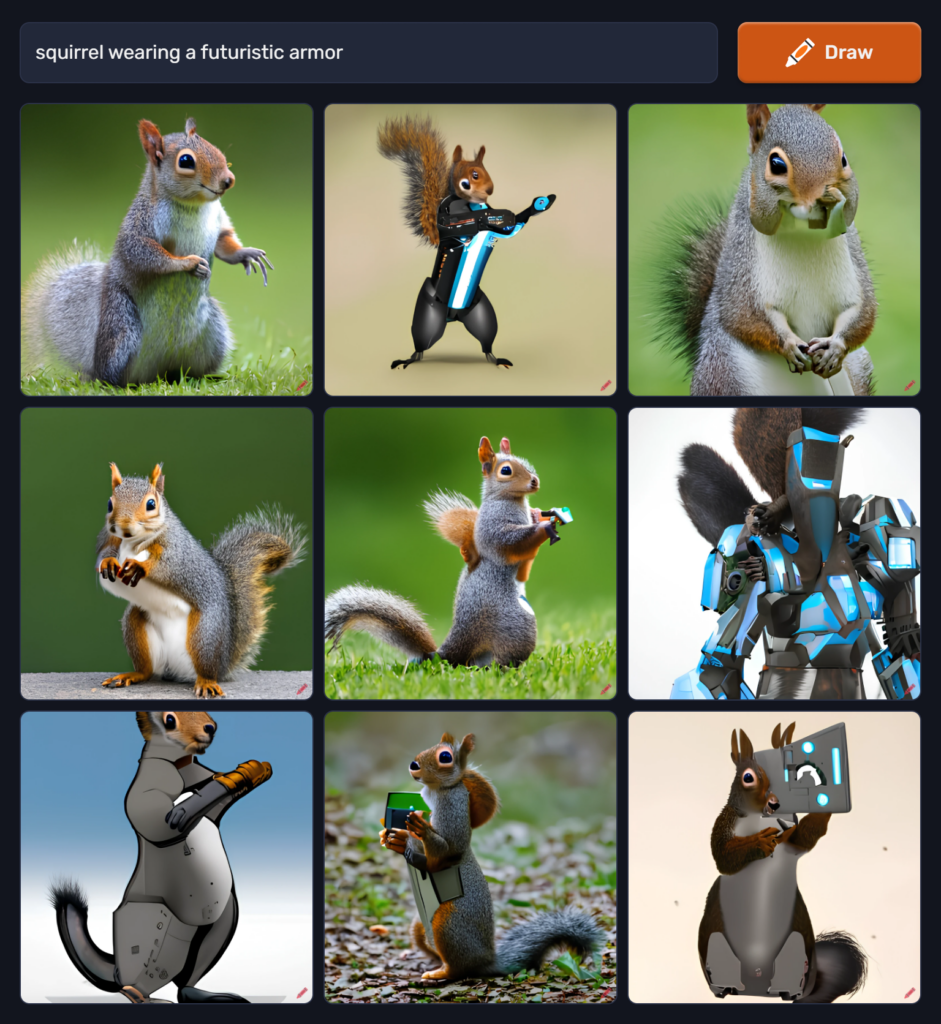

The model is available to deploy on your own system, and there is also a web interface to try it out. Here are a few images generated by the DALL·E Mini model.

The model often generates images that are quite abstract, lack intricate detail, and do not seem to make much sense. Occasionally, however, it produces an image that matches what you are looking for, which for such a lightweight model feels like hitting the jackpot. Because of this inconsistency, the model is not well suited to human faces or images that need a lot of detail; it is better suited to artwork, where imperfections are more tolerable.

Conclusion

There, now you know the ins and outs of the DALL·E 2 image generation model: how it works, what it can do, and where it fails. If you know how to use it properly, DALL·E 2 is one of the best generative models and can be used to make some pretty fun and interesting stuff.

If you are curious to know more about generative AI models, don’t miss out on other posts in this series.

- Introduction to Diffusion Models for Image Generation – A Comprehensive Guide

- Top 10 AI Art Generation Tools using Diffusion Models

References

- https://openai.com/dall-e-2/

- https://arxiv.org/abs/2204.06125

- https://openai.com/api/

- https://labs.openai.com/policies/content-policy

- https://github.com/borisdayma/dalle-mini
