Introduction

Taking good pictures in poor lighting conditions can seem like magic to non-photographers. It takes a combination of skill, experience and the right equipment to accomplish low light photography. Images captured in low light lack color and distinctive edges. They also suffer from poor visibility and unknown depth. These drawbacks make such images inappropriate for personal use or image processing or computer vision tasks. We will learn improving illumination in night time images.

For everyone not blessed with photography skills, we can enhance such images using image processing techniques. A method was presented by Shi et al. for this purpose in their paper, “Nighttime low illumination image enhancement with a single image using bright/dark channel prior.” This paper will act as the base of this post.

For the layman, the solution for low lighting is using flash, but you must have noticed, sometimes flash can result in unwanted effects like red-eye, glare, etc. As a bonus for our dear readers, we will discuss how to rectify pictures with inconvenient lighting and try to solve the limitations faced by this technique.

We will be using this image given below throughout our explanation. The image is taken from the paper cited above.

- Introduction

- Theory

- Framework of Improving illumination in night time images

- Further Improvements

- Limitations

- Results

- Summary

Theory

We aim to utilise a dual channel prior-based method for low illumination image enhancement with a single image.

Compared to using multiple images, image enhancement with a single image is simpler. Single image enhancement does not need additional assistant images or require exact point-to-point fusion between different images.

Many conventional image processing techniques such as the well-known histogram equalization-based methods, wavelet transform-based method, retinex-based methods can be used to get brighter images. However, they might lead to contrast over-enhancement or noise amplification.

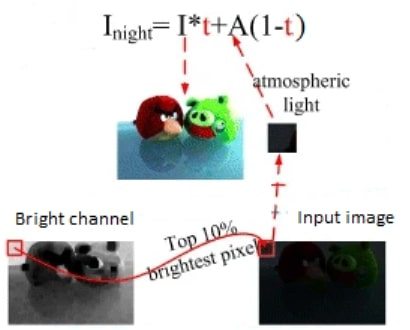

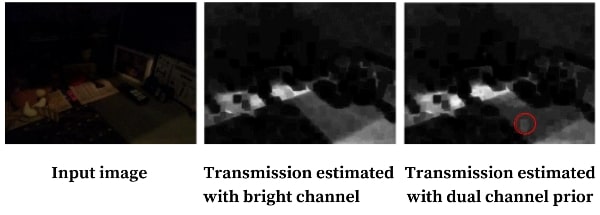

This is where the dual channel prior based solution comes in. An image prior is simply put, “prior information” of an image that you can use in your image processing problem. You would wonder why we use dual channel instead of utilising just the bright channel of a low light image since it would contain the maximum leftout information. Taking the dark channel into consideration removes block effects in some regions and helps see artefacts clearly as illustrated in the image below.

Framework of Improving Illumination in Night Time Images

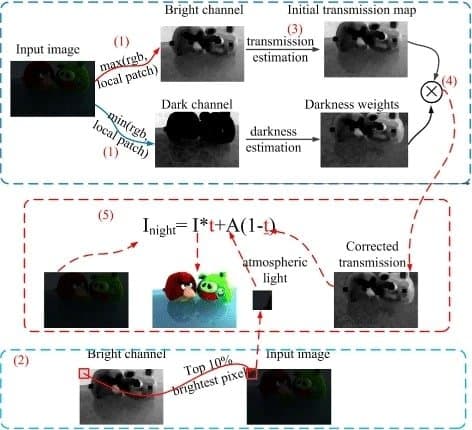

Before we dive into enhancing our images let us understand the steps involved. The flowchart below enlists the steps that we will be following in order to obtain an illuminated version of the night-time image.

This is done by first, obtaining the bright and dark channel images. These are just the maximum and minimum pixel values in the local patch of the original image, respectively. Next, we compute the global atmosphere light since that gives us the most information about the relatively brighter parts of the image.

We use the channels and atmospheric light value to obtain the respective transmission maps and take the darkness weights into consideration under special circumstances. We will discuss this in detail here.

Now we are all set.

From step 5 in the flow chart, note that the improved illumination image can be found by using the formula:

(1) ![\[I(x) = \frac{I_{night}(x) - A}{t(x)} + A \]](https://learnopencv.com/wp-content/ql-cache/quicklatex.com-bdde25db96e1946222cd0e152a231b3b_l3.png)

where I(x) is the enhanced image, Inight(x) is the original low-light image, A is the atmospheric light, and t(x) is the corrected transmission map.

Step 1: Obtaining the Bright and Dark channel Prior

The first step is to estimate the bright and dark channel priors. They represent the maximum and minimum intensity of pixels, respectively, in a local patch. This procedure can be imagined as a sliding convolutional window, helping us find the maximum or minimum value of all channels.

Estimating the dark channel prior

(2) ![\[I^{\text{dark}}(x) = \min_{c \in \left[r,g,b\right] }\left(\min_{y \in \Omega(x)} \left(I^{c}\left(y\right)\right)\right) \]](https://learnopencv.com/wp-content/ql-cache/quicklatex.com-a1c0d532d586b2f8e3621413f7b6ae8e_l3.png)

Estimating the bright channel prior

(3) ![\[I^{\text{bright}}(x) = \max_{c \in \left[r,g,b\right] }\left(\max_{y \in \Omega(x)} \left(I^{c}\left(y\right)\right)\right) \]](https://learnopencv.com/wp-content/ql-cache/quicklatex.com-62ba111849a596c2aa74f1ec8473f92d_l3.png)

where Ic is a color channel of I and Ω(x) is a local patch centered at x. y is a pixel in the local path Ω(x).

Let us dive into the code now!

Python

import cv2

import numpy as np

def get_illumination_channel(I, w):

M, N, _ = I.shape

# padding for channels

padded = np.pad(I, ((int(w/2), int(w/2)), (int(w/2), int(w/2)), (0, 0)), 'edge')

darkch = np.zeros((M, N))

brightch = np.zeros((M, N))

for i, j in np.ndindex(darkch.shape):

darkch[i, j] = np.min(padded[i:i + w, j:j + w, :]) # dark channel

brightch[i, j] = np.max(padded[i:i + w, j:j + w, :]) # bright channel

return darkch, brightch

We first import cv2 and NumPy and write the function to get the illumination channel. The image dimensions are stored in the variables M and N. Padding of half the kernel size is applied to the images to ensure their size remains the same. The dark channel is obtained using np.min to get the lowest pixel value in that block. Similarly, the bright channel is obtained by using np.max to get the highest pixel value in that block. We will need the value of the dark channel and the bright channel for further steps. So we return these values. A similar code is written for C++ and given below.

C++

std::pair<cv::Mat, cv::Mat> get_illumination_channel(cv::Mat I, float w) {

int N = I.size[0];

int M = I.size[1];

cv::Mat darkch = cv::Mat::zeros(cv::Size(M, N), CV_32FC1);

cv::Mat brightch = cv::Mat::zeros(cv::Size(M, N), CV_32FC1);

int padding = int(w/2);

// padding for channels

cv::Mat padded = cv::Mat::zeros(cv::Size(M + 2*padding, N + 2*padding), CV_32FC3);

for (int i=padding; i < padding + M; i++) {

for (int j=padding; j < padding + N; j++) {

padded.at<cv::Vec3f>(j, i).val[0] = (float)I.at<cv::Vec3b>(j-padding, i-padding).val[0]/255;

padded.at<cv::Vec3f>(j, i).val[1] = (float)I.at<cv::Vec3b>(j-padding, i-padding).val[1]/255;

padded.at<cv::Vec3f>(j, i).val[2] = (float)I.at<cv::Vec3b>(j-padding, i-padding).val[2]/255;

}

}

for (int i=0; i < darkch.size[1]; i++) {

int col_up, row_up;

col_up = int(i+w);

for (int j=0; j < darkch.size[0]; j++) {

double minVal, maxVal;

row_up = int(j+w);

cv::minMaxLoc(padded.colRange(i, col_up).rowRange(j, row_up), &minVal, &maxVal);

darkch.at<float>(j,i) = minVal; //dark channel

brightch.at<float>(j,i) = maxVal; //bright channel

}

}

return std::make_pair(darkch, brightch);

}

The dark and bright channels are obtained by initializing a matrix with zeroes and filling them with values from the image array, where CV_32FC1 defines the depth of each element and the number of channels.

Padding is applied to the images by half the kernel size to ensure their size remains the same. We iterate over the matrix to get the lowest pixel value in that block which is used to set the dark channel pixel value. Obtaining the highest pixel value in that block gives us the bright channel pixel value. cv::minMaxLoc is used to find the global minimum and maximum values in an array.

Step 2: Computing Global Atmosphere Lighting

The next step is to compute the global atmosphere lighting. It is computed using the bright channel obtained above by taking the mean of the top ten percent intensities. Ten percent of values are taken to ensure that a small anomaly does not affect it highly.

Python

def get_atmosphere(I, brightch, p=0.1):

M, N = brightch.shape

flatI = I.reshape(M*N, 3) # reshaping image array

flatbright = brightch.ravel() #flattening image array

searchidx = (-flatbright).argsort()[:int(M*N*p)] # sorting and slicing

A = np.mean(flatI.take(searchidx, axis=0), dtype=np.float64, axis=0)

return A

To achieve this via code, the image array is reshaped, flattened and sorted according to maximum intensity. The array is sliced to include only the top ten percent of pixels, and then the mean of these is taken.

C++

cv::Mat get_atmosphere(cv::Mat I, cv::Mat brightch, float p=0.1) {

int N = brightch.size[0];

int M = brightch.size[1];

// flattening and reshaping image array

cv::Mat flatI(cv::Size(1, N*M), CV_8UC3);

std::vector<std::pair<float, int>> flatBright;

for (int i=0; i < M; i++) {

for (int j=0; j < N; j++) {

int index = i*N + j;

flatI.at<cv::Vec3b>(index, 0).val[0] = I.at<cv::Vec3b>(j, i).val[0];

flatI.at<cv::Vec3b>(index, 0).val[1] = I.at<cv::Vec3b>(j, i).val[1];

flatI.at<cv::Vec3b>(index, 0).val[2] = I.at<cv::Vec3b>(j, i).val[2];

flatBright.push_back(std::make_pair(-brightch.at<float>(j, i), index));

}

}

// sorting and slicing the array

sort(flatBright.begin(), flatBright.end());

cv::Mat A = cv::Mat::zeros(cv::Size(1, 3), CV_32FC1);

for (int k=0; k < int(M*N*p); k++) {

int sindex = flatBright[k].second;

A.at<float>(0, 0) = A.at<float>(0, 0) + (float)flatI.at<cv::Vec3b>(sindex, 0).val[0];

A.at<float>(1, 0) = A.at<float>(1, 0) + (float)flatI.at<cv::Vec3b>(sindex, 0).val[1];

A.at<float>(2, 0) = A.at<float>(2, 0) + (float)flatI.at<cv::Vec3b>(sindex, 0).val[2];

}

A = A/int(M*N*p);

return A/255;

}

Step 3: Finding the Initial Transmission Map

The transmission map describes the portion of the light that is not scattered and reaches the camera. In this algorithm, it will be estimated from the bright channel prior using the following equation:

(4) ![]()

Ac is simply the maximum of a local patch of the atmospheric light.

Python

def get_initial_transmission(A, brightch):

A_c = np.max(A)

init_t = (brightch-A_c)/(1.-A_c) # finding initial transmission map

return (init_t - np.min(init_t))/(np.max(init_t) - np.min(init_t)) # normalized initial transmission map

In the code, the initial transmission map is calculated using the formula and then used to calculate the normalized initial transmission map.

C++

cv::Mat get_initial_transmission(cv::Mat A, cv::Mat brightch) {

double A_n, A_x, minVal, maxVal;

cv::minMaxLoc(A, &A_n, &A_x);

cv::Mat init_t(brightch.size(), CV_32FC1);

init_t = brightch.clone();

// finding initial transmission map

init_t = (init_t - A_x)/(1.0 - A_x);

cv::minMaxLoc(init_t, &minVal, &maxVal);

// normalized initial transmission map

init_t = (init_t - minVal)/(maxVal - minVal);

return init_t;

}

Step 4: Using Dark Channel to Estimate Corrected Transmission Map

A transmission map is also calculated from the dark channel prior, and the difference between the priors is calculated. This calculation is done to correct potentially erroneous transmission estimates attained from the bright channel prior.

Any pixel x with an Idifference channel of less than the set value of alpha (determined by an empirical experiment as 0.4) is in a dark object which makes its depth unreliable. This makes the transmission of pixel x unreliable. Hence the unreliable transmission can be corrected by taking the transmission maps’ product.

Python

def get_corrected_transmission(I, A, darkch, brightch, init_t, alpha, omega, w):

im = np.empty(I.shape, I.dtype);

for ind in range(0, 3):

im[:, :, ind] = I[:, :, ind] / A[ind] #divide pixel values by atmospheric light

dark_c, _ = get_illumination_channel(im, w) # dark channel transmission map

dark_t = 1 - omega*dark_c # corrected dark transmission map

corrected_t = init_t # initializing corrected transmission map with initial transmission map

diffch = brightch - darkch # difference between transmission maps

for i in range(diffch.shape[0]):

for j in range(diffch.shape[1]):

if(diffch[i, j] < alpha):

corrected_t[i, j] = dark_t[i, j] * init_t[i, j]

return np.abs(corrected_t)

The above code for Python and below for C++, does precisely this:

We use the get_illumination_channel function we created in the first code snippet to obtain the dark channel transmission map. The parameter omega, usually set to 0.75, is used to correct the initial transmission map. The corrected transmission map is initialized as the initial transmission map. Its value will remain the same as the initial transmission map if the difference between dark and bright channel is more than alpha i.e. 0.4. If the difference at any place is less than alpha, we take the transmission maps’ product as mentioned above.

C++

cv::Mat get_corrected_transmission(cv::Mat I, cv::Mat A, cv::Mat darkch, cv::Mat brightch, cv::Mat init_t, float alpha, float omega, int w) {

cv::Mat im3(I.size(), CV_32FC3);

//divide pixel values by atmospheric light

for (int i=0; i < I.size[1]; i++) {

for (int j=0; j < I.size[0]; j++) {

im3.at<cv::Vec3f>(j, i).val[0] = (float)I.at<cv::Vec3b>(j, i).val[0]/A.at<float>(0, 0);

im3.at<cv::Vec3f>(j, i).val[1] = (float)I.at<cv::Vec3b>(j, i).val[1]/A.at<float>(1, 0);

im3.at<cv::Vec3f>(j, i).val[2] = (float)I.at<cv::Vec3b>(j, i).val[2]/A.at<float>(2, 0);

}

}

cv::Mat dark_c, dark_t, diffch;

std::pair<cv::Mat, cv::Mat> illuminate_channels = get_illumination_channel(im3, w);

// dark channel transmission map

dark_c = illuminate_channels.first;

// corrected dark transmission map

dark_t = 1 - omega*dark_c;

cv::Mat corrected_t = init_t;

diffch = brightch - darkch; //difference between transmission maps

for (int i=0; i < diffch.size[1]; i++) {

for (int j=0; j < diffch.size[0]; j++) {

if (diffch.at<float>(j, i) < alpha) {

// initializing corrected transmission map with initial transmission map

corrected_t.at<float>(j, i) = abs(dark_t.at<float>(j, i)*init_t.at<float>(j, i));

}

}

}

return corrected_t;

}

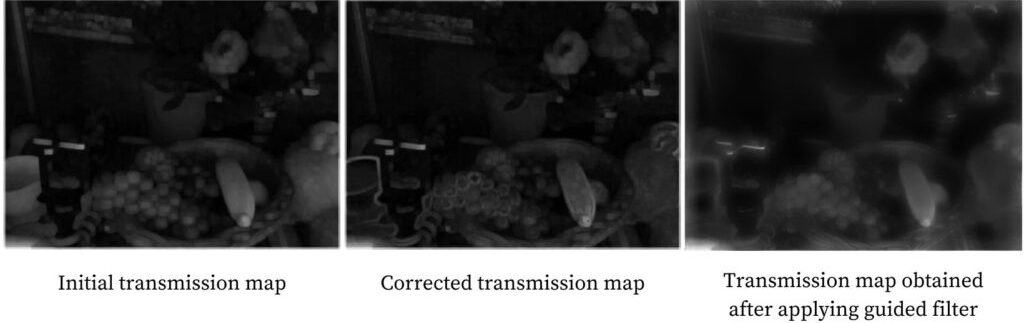

Step 5: Smoothing Transmission Map using Guided Filter

Let us take a look at the definition of the guided filter.

Guided image filtering is a neighborhood operation, like other filtering operations, but takes into account the statistics of a region in the corresponding spatial neighborhood in the guidance image when calculating the value of the output pixel.

In essence, it is an edge-preserving smoothing filter. I have used the implementation of this GitHub repository for it. This filter is applied to the corrected transmission map obtained above to get a more refined image.

Step 6: Calculating the Resultant Image

A transmission map and the atmospheric light value were required to get the enhanced image. Now that we have the required values, the first equation can be applied to get the result.

Python

def get_final_image(I, A, refined_t, tmin):

refined_t_broadcasted = np.broadcast_to(refined_t[:, :, None], (refined_t.shape[0], refined_t.shape[1], 3)) # duplicating the channel of 2D refined map to 3 channels

J = (I-A) / (np.where(refined_t_broadcasted < tmin, tmin, refined_t_broadcasted)) + A # finding result

return (J - np.min(J))/(np.max(J) - np.min(J)) # normalized image

First, the grayscale refined transformation map is converted to a grayscale image to ensure the number of channels in the original image and the transformation map are the same. Next, the output image is calculated using the equation. This image is then max-min normalized and returned from the function.

C++

cv::Mat get_final_image(cv::Mat I, cv::Mat A, cv::Mat refined_t, float tmin) {

cv::Mat J(I.size(), CV_32FC3);

for (int i=0; i < refined_t.size[1]; i++) {

for (int j=0; j < refined_t.size[0]; j++) {

float temp = refined_t.at<float>(j, i);

if (temp < tmin) {

temp = tmin;

}

// finding result

J.at<cv::Vec3f>(j, i).val[0] = (I.at<cv::Vec3f>(j, i).val[0] - A.at<float>(0,0))/temp + A.at<float>(0,0);

J.at<cv::Vec3f>(j, i).val[1] = (I.at<cv::Vec3f>(j, i).val[1] - A.at<float>(1,0))/temp + A.at<float>(1,0);

J.at<cv::Vec3f>(j, i).val[2] = (I.at<cv::Vec3f>(j, i).val[2] - A.at<float>(2,0))/temp + A.at<float>(2,0);

}

}

double minVal, maxVal;

cv::minMaxLoc(J, &minVal, &maxVal);

// normalized image

for (int i=0; i < J.size[1]; i++) {

for (int j=0; j < J.size[0]; j++) {

J.at<cv::Vec3f>(j, i).val[0] = (J.at<cv::Vec3f>(j, i).val[0] - minVal)/(maxVal - minVal);

J.at<cv::Vec3f>(j, i).val[1] = (J.at<cv::Vec3f>(j, i).val[1] - minVal)/(maxVal - minVal);

J.at<cv::Vec3f>(j, i).val[2] = (J.at<cv::Vec3f>(j, i).val[2] - minVal)/(maxVal - minVal);

}

}

return J;

}

Further Improvements

Although the image is full of color, it looks blurry and sharpening would improve the picture. We can utilize cv2.detailEnhance() for this task but this will increase noise. So we can use cv2.edgePreservingFilter() to limit it. However, this function will still induce some noise. Hence it is not ideal to do this if the images were noisy from the beginning.

img = cv2.detailEnhance(img, sigma_s=10, sigma_r=0.15)

img = cv2.edgePreservingFilter(img, flags=1, sigma_s=64, sigma_r=0.2)

For a deeper understanding of these techniques, refer to this article.

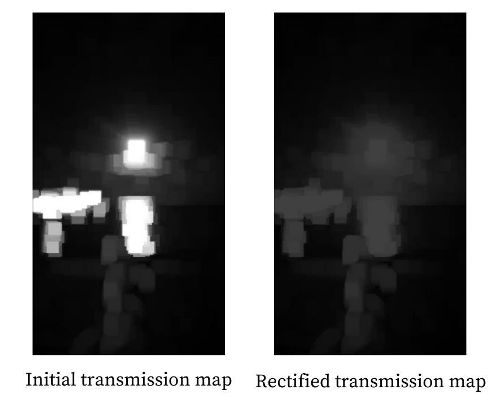

Limitations

The method does not perform well if there is any explicit light source in the images such as a lamp or a natural light source like the moon covering a significant portion of the image. Why is this a problem? Because such light sources will push up the value of the atmosphere intensity. As we were looking for the top 10% percent of the brightest pixels, this will cause those areas to overexpose.

This cause and effect is visualized in the image comparison set shown below.

To overcome this, let us analyze the initial transmission map made by the bright channel.

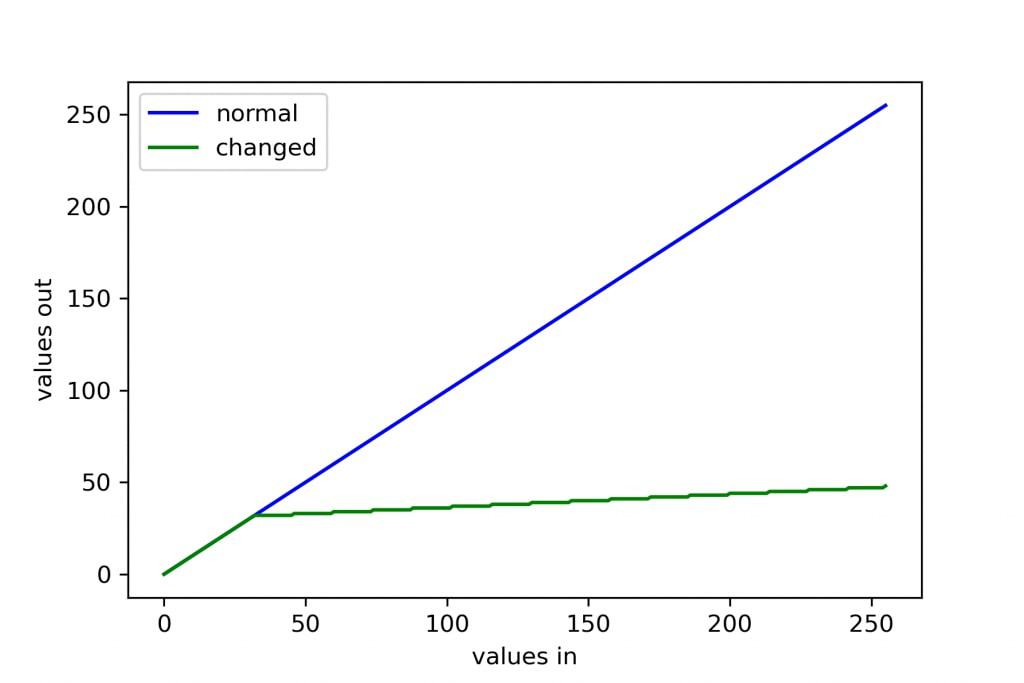

The task seems to reduce these intense spots of white, which are causing those areas to over-expose. This can be done by limiting the values from 255 to some minimal value.

Python

def reduce_init_t(init_t):

init_t = (init_t*255).astype(np.uint8)

xp = [0, 32, 255]

fp = [0, 32, 48]

x = np.arange(256) # creating array [0,...,255]

table = np.interp(x, xp, fp).astype('uint8') # interpreting fp according to xp in range of x

init_t = cv2.LUT(init_t, table) # lookup table

init_t = init_t.astype(np.float64)/255 # normalizing the transmission map

return init_t

To implement this with code, the transmission map is converted to the range of 0-255. A lookup table is then used to interpolate the points from the original values to a new range, which reduces the effect of high exposure.

C++

cv::Mat reduce_init_t(cv::Mat init_t) {

cv::Mat mod_init_t(init_t.size(), CV_8UC1);

for (int i=0; i < init_t.size[1]; i++) {

for (int j=0; j < init_t.size[0]; j++) {

mod_init_t.at<uchar>(j, i) = std::min((int)(init_t.at<float>(j, i)*255), 255);

}

}

int x[3] = {0, 32, 255};

int f[3] = {0, 32, 48};

// creating array [0,...,255]

cv::Mat table(cv::Size(1, 256), CV_8UC1);

//Linear Interpolation

int l = 0;

for (int k = 0; k < 256; k++) {

if (k > x[l+1]) {

l = l + 1;

}

float m = (float)(f[l+1] - f[l])/(x[l+1] - x[l]);

table.at<int>(k, 0) = (int)(f[l] + m*(k - x[l]));

}

//Lookup table

cv::LUT(mod_init_t, table, mod_init_t);

for (int i=0; i < init_t.size[1]; i++) {

for (int j=0; j < init_t.size[0]; j++) {

// normalizing the transmission map

init_t.at<float>(j, i) = (float)mod_init_t.at<uchar>(j, i)/255;

}

}

return init_t;

}

The graph below is a visual representation of how this tweak in the code would affect the pixels.

We can see the difference between the images obtained by enhancement using the method in the paper and the results obtained following the workaround we have just discussed.

Results

The final step is to create a function that combines all the techniques and passes it as an image.

Python

def dehaze(I, tmin=0.1, w=15, alpha=0.4, omega=0.75, p=0.1, eps=1e-3, reduce=False):

I = np.asarray(im, dtype=np.float64) # Convert the input to a float array.

I = I[:, :, :3] / 255

m, n, _ = I.shape

Idark, Ibright = get_illumination_channel(I, w)

A = get_atmosphere(I, Ibright, p)

init_t = get_initial_transmission(A, Ibright)

if reduce:

init_t = reduce_init_t(init_t)

corrected_t = get_corrected_transmission(I, A, Idark, Ibright, init_t, alpha, omega, w)

normI = (I - I.min()) / (I.max() - I.min())

refined_t = guided_filter(normI, corrected_t, w, eps) # applying guided filter

J_refined = get_final_image(I, A, refined_t, tmin)

enhanced = (J_refined*255).astype(np.uint8)

f_enhanced = cv2.detailEnhance(enhanced, sigma_s=10, sigma_r=0.15)

f_enhanced = cv2.edgePreservingFilter(f_enhanced, flags=1, sigma_s=64, sigma_r=0.2)

return f_enhanced

C++

int main() {

cv::Mat img = cv::imread("dark.png");

float tmin = 0.1;

int w = 15;

float alpha = 0.4;

float omega = 0.75;

float p = 0.1;

double eps = 1e-3;

bool reduce = false;

std::pair<cv::Mat, cv::Mat> illuminate_channels = get_illumination_channel(img, w);

cv::Mat Idark = illuminate_channels.first;

cv::Mat Ibright = illuminate_channels.second;

cv::Mat A = get_atmosphere(img, Ibright);

cv::Mat init_t = get_initial_transmission(A, Ibright);

if (reduce) {

init_t = reduce_init_t(init_t);

}

double minVal, maxVal;

// Convert the input to a float array

cv::Mat I(img.size(), CV_32FC3), normI;

for (int i=0; i < img.size[1]; i++) {

for (int j=0; j < img.size[0]; j++) {

I.at<cv::Vec3f>(j, i).val[0] = (float)img.at<cv::Vec3b>(j, i).val[0]/255;

I.at<cv::Vec3f>(j, i).val[1] = (float)img.at<cv::Vec3b>(j, i).val[1]/255;

I.at<cv::Vec3f>(j, i).val[2] = (float)img.at<cv::Vec3b>(j, i).val[2]/255;

}

}

cv::minMaxLoc(I, &minVal, &maxVal);

normI = (I - minVal)/(maxVal - minVal);

cv::Mat corrected_t = get_corrected_transmission(img, A, Idark, Ibright, init_t, alpha, omega, w);

cv::Mat refined_t(normI.size(), CV_32FC1);

// applying guided filter

refined_t = guidedFilter(normI, corrected_t, w, eps);

cv::Mat J_refined = get_final_image(I, A, refined_t, tmin);

cv::Mat enhanced(img.size(), CV_8UC3);

for (int i=0; i < img.size[1]; i++) {

for (int j=0; j < img.size[0]; j++) {

enhanced.at<cv::Vec3b>(j, i).val[0] = std::min((int)(J_refined.at<cv::Vec3f>(j, i).val[0]*255), 255);

enhanced.at<cv::Vec3b>(j, i).val[1] = std::min((int)(J_refined.at<cv::Vec3f>(j, i).val[1]*255), 255);

enhanced.at<cv::Vec3b>(j, i).val[2] = std::min((int)(J_refined.at<cv::Vec3f>(j, i).val[2]*255), 255);

}

}

cv::Mat f_enhanced;

cv::detailEnhance(enhanced, f_enhanced, 10, 0.15);

cv::edgePreservingFilter(f_enhanced, f_enhanced, 1, 64, 0.2);

cv::imshow("im", f_enhanced);

cv::waitKey(0);

return 0;

}

Take a look at the gif below showing some other images enhanced with this algorithm.

Summary

To sum it up, we started with understanding the problems associated with images taken in poor or low lighting conditions. We discussed step by step the method presented by Shi et al. to enhance such images. We also discussed further improvements and limitations of the technique presented in the paper.

The paper presented an excellent technique to increase the illumination of low-light images. However, it works only on those images with constant illumination throughout. As promised, we also explained a workaround to overcome the limitations for images with bright spots, such as a full moon or lamp within the image.

For future development of this method, we can try to control this reduction via a trackbar. The trackbar would help users play around to better understand the appropriate values for enhancement and set the optimum values needed for an individual image.

We hope you enjoyed the discussion and explanation. Do let us know your experience and results by leaving a comment.

100K+ Learners

Join Free OpenCV Bootcamp3 Hours of Learning