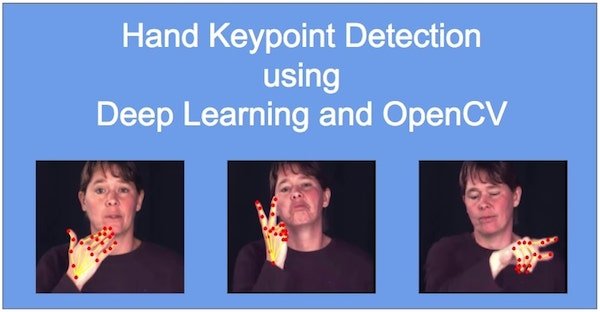

Hand keypoint detection is the process of finding the finger joints and the fingertips in a given image. It is similar to finding keypoints on the face (a.k.a. facial landmark detection) or the body (a.k.a. human body pose estimation), but different from hand detection, which treats the whole hand as a single object.

In our previous posts on pose estimation (Single Person and Multi-Person), we discussed how to use deep learning models in OpenCV to extract the body pose from an image or video.

The researchers at CMU Perceptual Computing Lab have also released models for keypoint detection of Hand and Face along with the body. The Hand Keypoint detector is based on this paper. We will take a quick look at the network architecture and then share code in C++ and Python for predicting hand keypoints using OpenCV.

1. Background

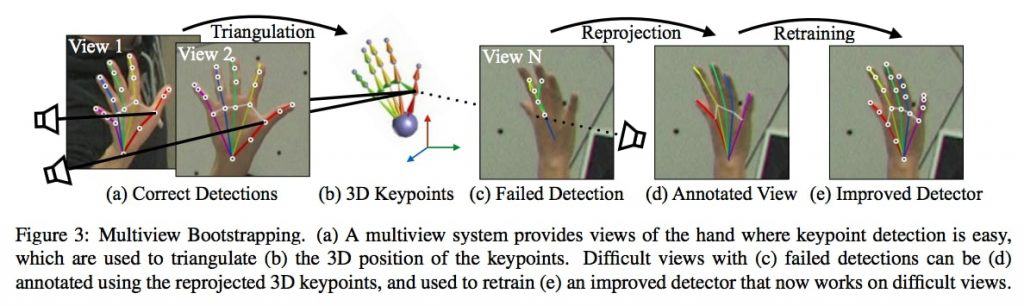

The authors start with a small set of labelled hand images and use a neural network (Convolutional Pose Machines, similar to the one used for body pose) to get rough estimates of the hand keypoints. They also have a large multi-view system of 31 HD cameras that captures images of the hand from different viewpoints.

They pass these images through the detector to get many rough keypoint predictions. Once the keypoints of the same hand have been detected in several views, they are triangulated to get the 3D location of each keypoint. The 3D locations are then reprojected from 3D to 2D to obtain robust keypoint predictions in every view. This is especially crucial for images where keypoints are difficult to predict. In this way they get a much improved detector in a few iterations.
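To make the triangulation and reprojection step more concrete, here is a minimal NumPy sketch of linear (DLT) triangulation of a single keypoint seen by several calibrated cameras. The camera matrices, the 3D test point and the helper names `project` and `triangulate` are all made up for illustration; this only sketches the geometry and is not the pipeline used in the paper.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X (shape (3,)) to pixel coordinates with a 3x4 camera matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(Ps, pts2d):
    """Linear (DLT) triangulation of one keypoint observed in two or more views."""
    rows = []
    for P, (u, v) in zip(Ps, pts2d):
        # Each view contributes two linear constraints on the homogeneous 3D point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

# Hypothetical calibration: three cameras sharing intrinsics K, shifted along x and y.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
cams = [
    K @ np.hstack([np.eye(3), np.zeros((3, 1))]),
    K @ np.hstack([np.eye(3), np.array([[-0.5], [0.0], [0.0]])]),
    K @ np.hstack([np.eye(3), np.array([[0.0], [-0.5], [0.0]])]),
]

X_true = np.array([0.1, -0.2, 2.0])            # made-up 3D keypoint
obs = [project(P, X_true) for P in cams]       # its 2D detection in each view
X_est = triangulate(cams, obs)                 # recovered 3D location
reproj = [project(P, X_est) for P in cams]     # reprojected 2D keypoints per view
print(X_est)
```

With noisy detections the reprojected points would not match the observations exactly, and the reprojected locations could serve as labels for views where the detector was unsure.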

In summary, they use keypoint detectors and multi-view images to come up with an improved detector. The detection architecture is similar to the one used for body pose. The main source of improvement is the use of multi-view images to enlarge the labelled set of images.

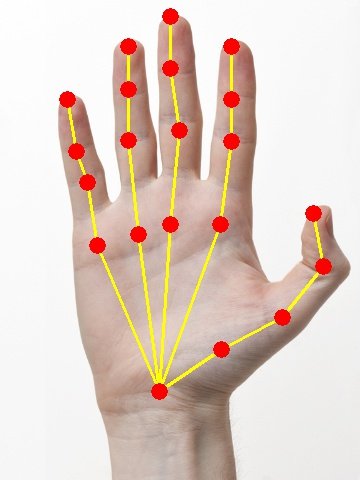

The model produces 22 keypoints. The hand has 21 points, while the 22nd point signifies the background. The points are numbered as shown in the accompanying figure.

Let us see how to use the model in OpenCV.

2. Code for Hand Keypoint Detection

Please download code using the link below to follow along with the tutorial.

2.1. Download model for Hand Keypoint

The first step is to download the model weights file. The config file is provided with the code. You can use the getModels.sh script to download the weights file to the hand/ folder: go to the code folder and run the following commands from the terminal.

sudo chmod a+x getModels.sh

./getModels.sh

You can also download the model from this link. Please put the model in the hand/ folder after downloading.

2.2. Load Model and Image

First, we load the image and the model into memory. Make sure you have downloaded the model and placed it in the folder specified by the protoFile and weightsFile variables.

C++

string protoFile = "hand/pose_deploy.prototxt";

string weightsFile = "hand/pose_iter_102000.caffemodel";

int nPoints = 22;

string imageFile = "hand.jpg";

Mat frame = imread(imageFile);

Net net = readNetFromCaffe(protoFile, weightsFile);

Python

protoFile = "hand/pose_deploy.prototxt"

weightsFile = "hand/pose_iter_102000.caffemodel"

nPoints = 22

frame = cv2.imread("hand.jpg")

net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)
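Since loading fails if either file is not where the variables say it is, it can help to check the paths first. The sketch below uses only the Python standard library; the helper name `missing_model_files` is an assumption for illustration and is not part of the tutorial code.

```python
from pathlib import Path

def missing_model_files(proto_file, weights_file):
    """Return the model files that do not exist on disk, as a list of path strings."""
    paths = (Path(proto_file), Path(weights_file))
    return [str(p) for p in paths if not p.is_file()]

missing = missing_model_files("hand/pose_deploy.prototxt",
                              "hand/pose_iter_102000.caffemodel")
if missing:
    print("Missing model files:", ", ".join(missing))
else:
    print("Model files found; ready to call cv2.dnn.readNetFromCaffe")
```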

2.3. Get Predictions

We convert the BGR image to a blob so that it can be fed to the network, where inWidth and inHeight are the input dimensions of the blob. Then we do a forward pass to get the predictions.

C++

Mat inpBlob = blobFromImage(frame, 1.0 / 255, Size(inWidth, inHeight), Scalar(0, 0, 0), false, false);

net.setInput(inpBlob);

Mat output = net.forward();

Python

inpBlob = cv2.dnn.blobFromImage(frame, 1.0 / 255, (inWidth, inHeight), (0, 0, 0), swapRB=False, crop=False)

net.setInput(inpBlob)

output = net.forward()
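The snippets above use inWidth and inHeight without defining them. One possible way to choose them, sketched below, is to fix the input height and scale the width by the aspect ratio of the frame so that the hand is not distorted. The helper name `network_input_size` and the default height of 368 are assumptions for illustration, not values stated in this post.

```python
def network_input_size(frame_width, frame_height, in_height=368):
    """Return (inWidth, inHeight), with the width scaled to keep the frame's aspect ratio."""
    aspect_ratio = frame_width / frame_height
    in_width = int(aspect_ratio * in_height)
    return in_width, in_height

# Example for a hypothetical 640x480 frame; with a real image you would pass
# frame.shape[1] (width) and frame.shape[0] (height) instead.
inWidth, inHeight = network_input_size(640, 480)
print(inWidth, inHeight)
```

For a 3-channel image, blobFromImage then produces a blob of shape (1, 3, inHeight, inWidth). A larger input height generally trades speed for finer probability maps.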

2.4. Show Detections

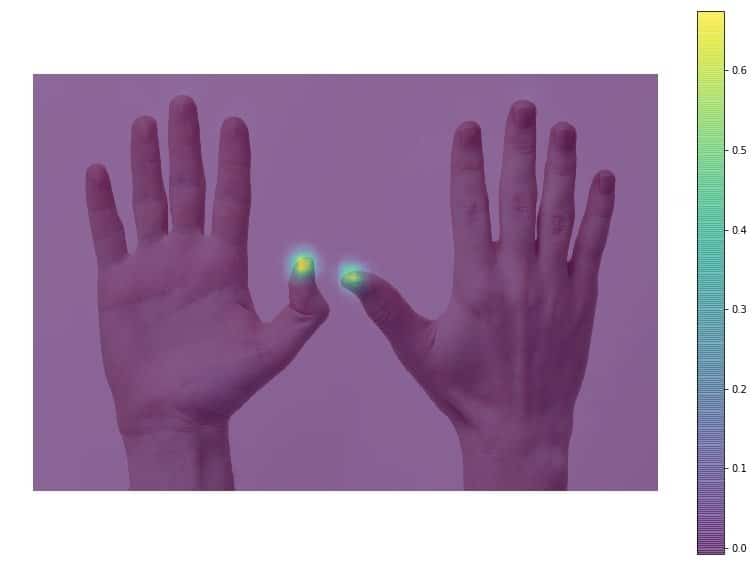

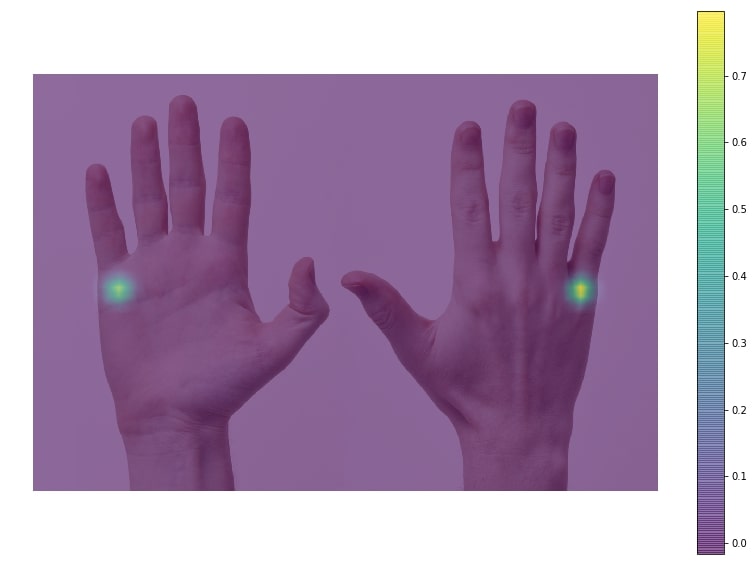

The output has 22 matrices, each of which is the probability map of one keypoint. The accompanying figures show the probability heatmaps, superimposed on the original image, for the keypoints at the tip of the thumb and the base of the little finger.

To find the exact keypoints, we first scale the probability map to the size of the original image. We then find the location of the keypoint by finding the maximum of the probability map. This is done using the minMaxLoc function in OpenCV. Finally, we draw the detected points along with their numbering on the image.

C++

// Find the position of the hand keypoints

// Height and width of each probability map in the network output

int H = output.size[2];

int W = output.size[3];

vector<Point> points(nPoints);

for (int n = 0; n < nPoints; n++)

{

    // Probability map of the corresponding keypoint

    Mat probMap(H, W, CV_32F, output.ptr(0, n));

    // Scale the map to the size of the original image

    resize(probMap, probMap, Size(frameWidth, frameHeight));

    Point maxLoc;

    double prob;

    minMaxLoc(probMap, 0, &prob, 0, &maxLoc);

    if (prob > thresh)

    {

        circle(frameCopy, cv::Point((int)maxLoc.x, (int)maxLoc.y), 8, Scalar(0,255,255), -1);

        cv::putText(frameCopy, cv::format("%d", n), cv::Point((int)maxLoc.x, (int)maxLoc.y), cv::FONT_HERSHEY_COMPLEX, 1, cv::Scalar(0, 0, 255), 2);

    }

    points[n] = maxLoc;

}

imshow("Output-Keypoints", frameCopy);

Python

points = []

for i in range(nPoints):

    # Probability map of the corresponding keypoint

    probMap = output[0, i, :, :]

    probMap = cv2.resize(probMap, (frameWidth, frameHeight))

    # Find the global maximum of the probMap

    minVal, prob, minLoc, point = cv2.minMaxLoc(probMap)

    if prob > threshold:

        cv2.circle(frameCopy, (int(point[0]), int(point[1])), 8, (0, 255, 255), thickness=-1, lineType=cv2.FILLED)

        cv2.putText(frameCopy, "{}".format(i), (int(point[0]), int(point[1])), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2, lineType=cv2.LINE_AA)

        # Add the point to the list if the probability is greater than the threshold

        points.append((int(point[0]), int(point[1])))

    else:

        points.append(None)

cv2.imshow('Output-Keypoints', frameCopy)
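As a variation on the loop above, the sketch below finds the peak on the low-resolution probability map and scales its coordinates to the frame, rather than resizing every map with cv2.resize. This is a swapped-in alternative and not what the tutorial code does; the helper name `keypoint_from_heatmap`, the threshold of 0.1 and the 46x46 test map are assumptions for illustration.

```python
import numpy as np

def keypoint_from_heatmap(prob_map, frame_width, frame_height, threshold=0.1):
    """Return (x, y) in frame pixels for the peak of prob_map, or None if it is too weak."""
    map_h, map_w = prob_map.shape
    # Row and column of the global maximum on the low-resolution map.
    row, col = np.unravel_index(np.argmax(prob_map), prob_map.shape)
    if prob_map[row, col] <= threshold:
        return None
    # Map the centre of the winning cell to frame coordinates.
    x = int((col + 0.5) * frame_width / map_w)
    y = int((row + 0.5) * frame_height / map_h)
    return x, y

# Synthetic probability map with a single confident peak (made-up data).
demo_map = np.zeros((46, 46), dtype=np.float32)
demo_map[10, 20] = 0.9
demo_point = keypoint_from_heatmap(demo_map, 460, 460)
print(demo_point)
```

Skipping the resize is cheaper, but the location is only as precise as the map resolution, whereas resizing first interpolates the map before taking the maximum.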

2.5. Draw Skeleton

We will use the detected points to get the skeleton formed by the keypoints and draw them on the image.

C++

int nPairs = sizeof(POSE_PAIRS)/sizeof(POSE_PAIRS[0]);

for (int n = 0; n < nPairs; n++)

{

    // lookup 2 connected body/hand parts

    Point2f partA = points[POSE_PAIRS[n][0]];

    Point2f partB = points[POSE_PAIRS[n][1]];

    if (partA.x<=0 || partA.y<=0 || partB.x<=0 || partB.y<=0)

        continue;

    line(frame, partA, partB, Scalar(0,255,255), 8);

    circle(frame, partA, 8, Scalar(0,0,255), -1);

    circle(frame, partB, 8, Scalar(0,0,255), -1);

}

imshow("Output-Skeleton", frame);

Python

# Draw Skeleton

for pair in POSE_PAIRS:

    partA = pair[0]

    partB = pair[1]

    if points[partA] and points[partB]:

        cv2.line(frame, points[partA], points[partB], (0, 255, 255), 2)

        cv2.circle(frame, points[partA], 8, (0, 0, 255), thickness=-1, lineType=cv2.FILLED)

cv2.imshow('Output-Skeleton', frame)
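The skeleton code relies on a POSE_PAIRS table that is not shown in this section. A plausible definition, assuming the usual OpenPose-style hand numbering (0 for the wrist, then four points per finger from thumb to little finger), is sketched below. Both the numbering and the helper `skeleton_segments` are assumptions for illustration; check them against the keypoint figure before relying on them.

```python
# Assumed keypoint numbering: 0 = wrist, thumb 1-4, index 5-8,
# middle 9-12, ring 13-16, little finger 17-20.
POSE_PAIRS = [[0, 1], [1, 2], [2, 3], [3, 4],
              [0, 5], [5, 6], [6, 7], [7, 8],
              [0, 9], [9, 10], [10, 11], [11, 12],
              [0, 13], [13, 14], [14, 15], [15, 16],
              [0, 17], [17, 18], [18, 19], [19, 20]]

def skeleton_segments(points, pairs=POSE_PAIRS):
    """Return the (pointA, pointB) segments whose two endpoints were both detected."""
    return [(points[a], points[b]) for a, b in pairs
            if points[a] is not None and points[b] is not None]

# 22 entries as in the Python loop above; undetected keypoints are None.
points = [(10 * i, 5 * i) for i in range(22)]  # made-up coordinates
points[4] = None                               # pretend the thumb tip was not detected
segments = skeleton_segments(points)
print(len(segments))
```

Each returned segment can be passed straight to cv2.line. The 22nd entry (background) never appears in a pair, so it is never drawn.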

Note that the code given here can detect only one hand at a time. You can extend it to detect multiple hands by using the probability maps together with some heuristics.
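One possible heuristic, sketched here with NumPy only, is to pick several well-separated peaks from each probability map instead of a single global maximum. The function name `find_peaks`, its default parameters and the synthetic two-blob map are assumptions for illustration. Grouping the peaks from all 21 maps into separate hands would need further logic that is not shown.

```python
import numpy as np

def find_peaks(prob_map, threshold=0.1, min_distance=10, max_peaks=2):
    """Greedily pick up to max_peaks maxima that lie at least min_distance pixels apart."""
    work = prob_map.astype(np.float32).copy()
    rows, cols = np.indices(work.shape)
    peaks = []
    while len(peaks) < max_peaks:
        r, c = np.unravel_index(np.argmax(work), work.shape)
        if work[r, c] <= threshold:
            break
        peaks.append((int(c), int(r)))  # (x, y) order, like cv2.minMaxLoc
        # Zero out the neighbourhood so the next argmax finds a different peak.
        work[(rows - r) ** 2 + (cols - c) ** 2 < min_distance ** 2] = 0.0
    return peaks

# Synthetic map with two blobs, standing in for one keypoint seen on two hands.
yy, xx = np.indices((60, 80))
demo = (0.9 * np.exp(-((xx - 20) ** 2 + (yy - 30) ** 2) / 20.0)
        + 0.7 * np.exp(-((xx - 60) ** 2 + (yy - 15) ** 2) / 20.0))
peaks = find_peaks(demo)
print(peaks)
```

The min_distance value controls how close two hands can be before their peaks merge, so it would need tuning for the image size in use.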

4. Applications

The model just discussed can be used in many practical applications such as:

- Gesture Recognition

- Sign language understanding for people with hearing or speech impairments

- Activity Recognition based on Hand

We would love to see readers build useful applications based on this post. Do comment!

