Imagine trying to teach a toddler a new skill, like stacking blocks to build a tower. You’d show them, maybe guide their little hands, and explain, “This one goes on top.” After a few tries, they start to get it. Now, picture doing that for a robot, not just for stacking blocks, but for a whole universe of tasks – picking up a specific fruit from a bowl, opening a microwave, or even handing you a tool. Teaching robots to perform diverse tasks in our ever-changing world has been a monumental challenge, often requiring painstaking, task-specific training for every new skill.

But what if robots could learn more like humans do? What if they could build on a foundational understanding of the world, language, and actions to pick up new skills much faster? This is where the magic of models like NVIDIA’s GR00T N1.5 comes into play. It’s like giving our robotic friends a head start, a sort of “common sense” that allows them to generalize and adapt in ways we’ve only dreamed of.

In this article, we will explore:

- What GR00T N1.5 is and why it’s a significant leap forward for humanoid robotics.

- The clever architectural improvements that boost its performance and efficiency.

- How NVIDIA is training these sophisticated VLA (Vision-Language-Action) models using diverse datasets, including innovative synthetic data.

- The impressive real-world capabilities and performance gains demonstrated by GR00T N1.5.

- A peek into how you can get started with the model and its codebase.

Now, that’s exciting, right? So, grab a cup of coffee, and let’s dive in!

- What’s All the Buzz About GR00T N1.5?

- Architectural Improvements in GR00T N1.5: The Brain Upgrade

- Training GR00T N1.5: The Making of a Robotic Polymath

- Key Performance Highlights of GR00T N1.5: Putting Knowledge into Action

- Getting Hands-On: The GR00T N1.5 Code Pipeline

- Quick Recap: GR00T N1.5 in a Nutshell

- Conclusion: The Dawn of More Human-Like Robots

- References

What’s All the Buzz About GR00T N1.5?

So, what exactly is this GR00T N1.5 we’re talking about? Think of it as a highly intelligent brain designed for humanoid robots. Developed by NVIDIA, GR00T N1.5 is an open foundation model specifically engineered to give robots the ability to understand and perform tasks in a more generalized way. It’s the successor to the earlier GR00T N1, bringing significant upgrades in performance and capabilities.

At its core, GR00T N1.5 is a VLA (Vision-Language-Action) model. Let’s break that down:

- Vision: It can “see” and interpret the world through cameras, much like we do with our eyes. This allows it to understand its surroundings, identify objects, and perceive the environment.

- Language: It can understand human language instructions. You can tell it, “pick up the red apple and place it on the plate,” and it can process that command.

- Action: Based on what it sees and the instructions it receives, it can generate the appropriate motor actions for the robot to perform the task.

Imagine telling your robot helper to “grab the screwdriver from the table.” A VLA model like GR00T N1.5 processes this by first visually identifying the screwdriver among other objects on the table (vision), understanding the command (language), and then calculating the precise movements needed to reach, grasp, and pick up the screwdriver (action).

This ability to take multimodal input – combining visual information with language commands – is crucial for creating robots that can operate effectively in diverse and dynamic human environments. GR00T N1.5 isn’t just about performing pre-programmed routines; it’s about reasoning, adapting, and learning, making it a cornerstone for the next generation of generalist humanoid robots. It’s trained on a massive and varied dataset, which includes real-world robot data, synthetically generated data from NVIDIA’s Isaac Sim, and even large-scale video data from the internet. This diverse training diet helps it learn robustly and generalize its skills to new situations, objects, and tasks, even those it hasn’t encountered directly during its initial training.
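Here is a minimal Python sketch that makes this multimodal input/output contract concrete. Everything in it is hypothetical: the `Observation` fields, the 16-step horizon, and `predict_action_chunk` are illustrative names, not the GR00T API. The "policy" also runs no model; it just holds the current joint positions so the shapes are visible.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    """One multimodal input to a VLA policy (hypothetical field names)."""
    camera_frames: List[List[float]]  # stand-in for image pixels or features
    joint_state: List[float]          # proprioceptive state of the robot
    instruction: str                  # natural-language command


def predict_action_chunk(obs: Observation, horizon: int = 16) -> List[List[float]]:
    """Placeholder policy: returns `horizon` future actions, one per control step.

    A real VLA model would encode the frames and the instruction, then decode
    motor commands. Here we hold the current joint positions to show the shapes.
    """
    if not obs.instruction.strip():
        raise ValueError("a language instruction is required")
    return [list(obs.joint_state) for _ in range(horizon)]


obs = Observation(
    camera_frames=[[0.0] * 4],
    joint_state=[0.1, -0.2, 0.3],
    instruction="grab the screwdriver from the table",
)
chunk = predict_action_chunk(obs)
print(len(chunk), len(chunk[0]))  # 16 actions, each with 3 joint targets
```

The key design point is the output: a short sequence of future actions (an "action chunk") rather than a single motor command.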

Architectural Improvements in GR00T N1.5: The Brain Upgrade

To truly appreciate what makes GR00T N1.5 so special, we need to peek under the hood and see how its “brain” – its architecture – has evolved from its predecessor, GR00T N1. Think of GR00T N1 as a clever student, and N1.5 as that same student after a summer of intense, focused learning and a few key upgrades to their study habits.

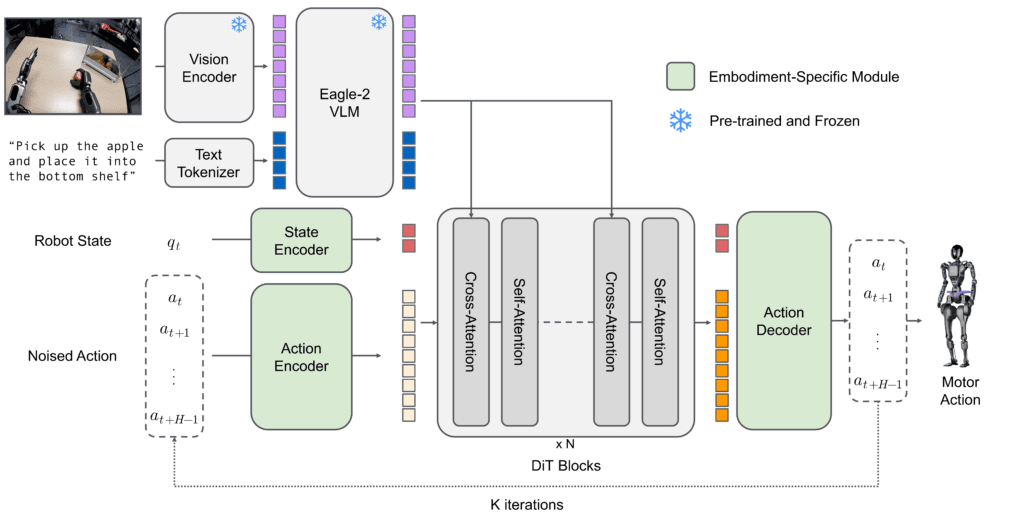

The original GR00T N1 already had a pretty sophisticated design, inspired by how humans process information. It featured a “dual-system” approach:

- System 2 (The Thinker): This was the reasoning part, powered by NVIDIA Eagle VLM. Its job was to look at the world (vision) and understand your instructions (language). Imagine this as the part of your brain that identifies an apple and understands, “I need to pick that up.”

- System 1 (The Doer): This was the action module, a Diffusion Transformer (DiT). It took the understanding from System 2 to figure out the actual motor commands to make the robot move fluidly and perform the task. This is like your brain signaling your arm and hand to reach out and grasp the apple.
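The sketch below shows how an action module like System 1 can turn noise into motor commands using flow-matching-style sampling. As we understand the GR00T N1 report, this is the training objective it describes for the DiT. The details here are illustrative assumptions, not GR00T's implementation: the four Euler steps, the 3-d action, and `oracle_velocity`. The last of these stands in for the trained DiT by returning the exact straight-line velocity toward a known target.

```python
import random
from typing import Callable, List

Vector = List[float]


def euler_denoise(
    velocity_fn: Callable[[Vector, float], Vector],
    dim: int,
    num_steps: int = 4,
    seed: int = 0,
) -> Vector:
    """Integrate a velocity field from noise (t=0) to an action (t=1)."""
    rng = random.Random(seed)
    action = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from pure noise
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        v = velocity_fn(action, t)
        action = [a + dt * vi for a, vi in zip(action, v)]  # one Euler step
    return action


# Toy stand-in for the DiT: the exact velocity of a straight-line path toward a
# fixed target action. A trained model would instead predict v from the noisy
# action, the timestep, the robot state, and the VLM's vision-language features.
TARGET = [0.5, -1.0, 0.25]


def oracle_velocity(action: Vector, t: float) -> Vector:
    return [(g - a) / (1.0 - t) for g, a in zip(TARGET, action)]


result = euler_denoise(oracle_velocity, dim=3)
print([round(x, 6) for x in result])  # lands on TARGET
```

With a perfect velocity field, a few Euler steps are enough to reach the target exactly. In practice, the quality of the generated action depends on how well the trained network approximates that field.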

Both of these systems were tightly coupled and trained jointly, end-to-end. Now, GR00T N1.5 takes this solid foundation and makes some really smart enhancements:

- Keeping the Language Whiz Intact (Frozen VLM): One of the most significant changes in N1.5 is that the core Vision-Language Model (the “thinker” part) is kept frozen during both the initial large-scale training (pretraining) and the later specialized training (finetuning). Why is this a big deal? Imagine an expert linguist who also needs to learn robotics. If you try to teach them both simultaneously from scratch, they might get confused, and their language skills could even degrade. By freezing the VLM, NVIDIA ensures that its powerful language understanding and visual interpretation capabilities, learned from vast amounts of data, are preserved. This helps the model generalize better to new tasks and instructions because its fundamental understanding of language and vision remains robust.

- Sharper Eyes and Better Understanding (Enhanced VLM Grounding): The VLM in N1.5 isn’t just frozen; it’s also an upgraded version, specifically based on NVIDIA’s Eagle 2.5, which has been fine-tuned for better “grounding.” Grounding, in this context, means connecting words and concepts to the real, physical world. For example, when the robot is told to “pick up the blue cup,” improved grounding helps it more accurately identify which object is the blue cup, especially if there are other objects around. The N1.5 VLM shows significantly better performance in linking language to visual elements compared to previous versions and even other comparable models.

- Smoother Connection Between Thinking and Doing (Simplified Adapter): Inside the VLM, an "adapter" acts as a bridge that translates the vision encoder's output into the embedding space of the language processing part (the Large Language Model, or LLM). In N1.5, this adapter has been streamlined. Its MLP (a small feed-forward neural network) is simpler, and layer normalization has been added to both the visual and text token embeddings fed into the LLM. This refinement helps visual information and text instructions integrate more effectively within the LLM, which contributes to the improved language following reported later in this article.

- Learning from Watching (FLARE Integration): This is a really cool one! GR00T N1.5 incorporates an objective called Future Latent Representation Alignment (FLARE). Instead of just learning from explicit robot demonstrations (where the robot is guided through every action), FLARE allows the model to learn effectively from human videos, like someone performing a task from their own point of view (ego-centric videos). It’s like the robot learning by watching YouTube tutorials, figuring out the underlying intent and action sequence even without direct robot data. This dramatically expands the kind of data the model can learn from.

- Dreaming Up New Skills (DreamGen Integration): To help GR00T N1.5 generalize to entirely new behaviors and tasks that might not even be in its teleoperation data, NVIDIA has integrated synthetic neural trajectories generated via a system called DreamGen. DreamGen uses video world models to create new, synthetic robot data. Think of it as the robot “dreaming” or imagining how to perform a new action based on what it already knows. This synthetic data, representing novel behaviors, is then fed back into the training process, significantly boosting the model’s ability to tackle tasks it has never been explicitly shown on a real robot.
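The simplified adapter from the list above can be sketched as "project, then normalize." This minimal version assumes a two-layer MLP with a ReLU. The layer sizes, weights, and activation are hypothetical, and it uses plain LayerNorm without learned scale and shift. Only the visual path is shown; the same `layer_norm` would apply to text token embeddings.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a token embedding to zero mean and unit variance (no affine params)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """y = W x + b, with W stored as a list of output rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def adapter(visual_token: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """Project a vision-encoder feature into the LLM embedding space, then normalize it."""
    hidden = [max(0.0, h) for h in linear(visual_token, w1, b1)]  # ReLU
    projected = linear(hidden, w2, b2)
    return layer_norm(projected)


# Toy weights: 2-d vision feature -> 3 hidden units -> 4-d "LLM" embedding.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0], [1.0, 1.0, 1.0]]
B2 = [0.0, 0.0, 0.0, 0.0]

token = adapter([0.5, 1.5], W1, B1, W2, B2)
print([round(v, 3) for v in token])
```

Normalizing the projected tokens keeps visual and text embeddings on a comparable scale when they enter the LLM, which is one plausible reason such a small change helps integration.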

These architectural improvements, working in concert, mean that GR00T N1.5 isn’t just a minor update. It’s a more data-efficient, better-generalizing, and more language-aware model. It’s particularly good in situations where you don’t have a ton of data for a new task (few-shot learning) or even no data at all (zero-shot learning for certain aspects), and it’s much better at following nuanced language commands.
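The FLARE objective from the list above can be illustrated with a toy alignment loss. The idea is to align the model's prediction of a *future* latent with the embedding of the frame that actually comes next. This sketch assumes a cosine-similarity loss and uses hand-written vectors in place of the real encoder and predictor. Treat it as an illustration of the idea, not FLARE's exact formulation.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def future_alignment_loss(predicted_future: Vector, actual_future: Vector) -> float:
    """1 - cosine similarity: 0 when the prediction points the same way as the target.

    Clamped at 0 to absorb floating-point rounding when the vectors are parallel.
    """
    return max(0.0, 1.0 - cosine_similarity(predicted_future, actual_future))


# The target comes from embedding a *later* video frame. No action labels are
# needed, which is why this kind of objective can use human egocentric video.
actual = [0.2, 0.9, -0.1]
good_guess = [0.4, 1.8, -0.2]  # same direction, different scale
bad_guess = [-0.9, 0.1, 0.4]

loss_good = future_alignment_loss(good_guess, actual)
loss_bad = future_alignment_loss(bad_guess, actual)
print(f"aligned: {loss_good:.4f}, misaligned: {loss_bad:.4f}")
```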

Training GR00T N1.5: The Making of a Robotic Polymath

So, we have this sophisticated architecture for GR00T N1.5. But how does it actually learn to become so capable? The training process is as crucial as the architecture itself, and NVIDIA has employed a clever strategy here, treating it like building a pyramid of knowledge.

Imagine you’re trying to become an expert in, say, cooking. You wouldn’t just start with Michelin-star recipes, right? You’d probably begin with basic knife skills (broad foundation), then learn various cooking techniques (more specific skills), and finally, master complex dishes (highly specialized expertise). GR00T N1.5’s training follows a similar “data pyramid” philosophy to overcome a big hurdle in robotics: the scarcity of real-world humanoid robot data. Unlike text or images, where the internet provides a virtually endless supply, high-quality data from robots performing tasks is expensive and time-consuming to collect.

Here’s how the data pyramid for GR00T N1.5 looks:

- The Broad Base (Web Data & Human Videos): At the very bottom, forming the largest layer, is internet-scale data. This includes:

- Web Data for VLM Pretraining: The Vision-Language Model (VLM), the “thinker” part of GR00T, gets its initial, broad understanding of language and vision from the vast amounts of text and images available on the web. This is what gives it its powerful general knowledge.

- Human Egocentric Videos: Remember FLARE? This is where it shines. The model learns from a diverse set of human videos in which people perform everyday tasks, filmed from their own perspective (for example, from datasets such as Ego4D). This helps the model understand human actions, object interactions, and common-sense physics, even without explicit robot action labels. For these action-free videos, "latent actions" are inferred instead: essentially, the model learns a kind of action codebook that represents common movements.

- The Middle Layer (Synthetic Data): This layer is all about boosting the dataset with artificially generated, yet highly valuable, information.

- Neural Trajectories from DreamGen: As we discussed in the architecture, DreamGen generates synthetic robot videos. These “neural trajectories” can depict the robot performing tasks it has never done in real life, or doing known tasks in new environments. These videos are then given “pseudo-action” labels (either latent actions or actions predicted by an Inverse Dynamics Model – IDM) and used for training. This is a massive force multiplier, allowing NVIDIA to expand their effective robot training data by roughly 10 times compared to just their collected real-robot data. It allows GR00T to learn a wider variety of skills and adapt to new scenarios. For example, if the real robot data mostly shows “pick up X and place on Y,” DreamGen can create videos of “push X,” “open Z,” etc., all based on the learned dynamics of the robot.

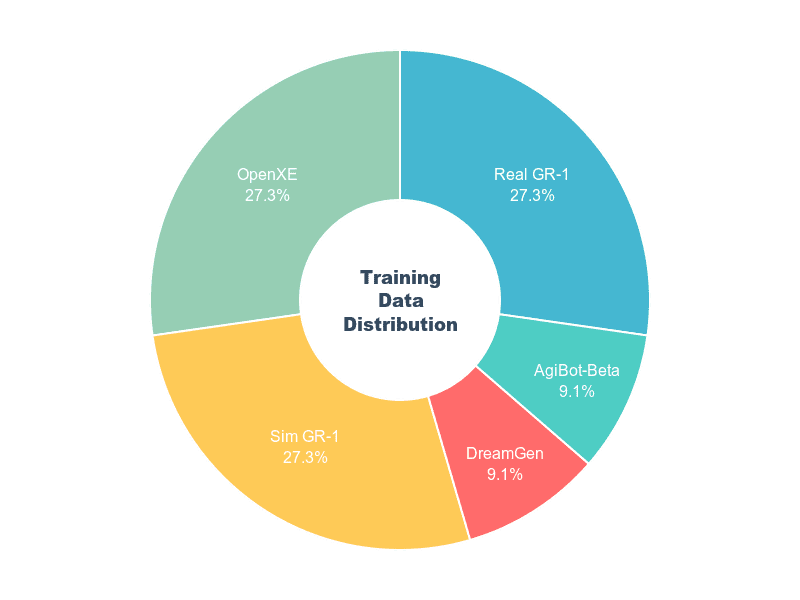

- Simulation Trajectories: Using physics simulators like NVIDIA Isaac Sim, additional synthetic data is generated. Systems like DexMimicGen can take a small set of human demonstrations and automatically expand them into a large dataset of the robot performing tasks in simulation. This is great for learning precise manipulation and can be generated much faster than real-world data collection. For instance, GR00T N1’s training included 780,000 simulation trajectories (equal to 6,500 hours of human demo data) generated in just 11 hours!

- The Peak (Real-World Robot Data): At the very top of the pyramid is the most specific and crucial data: real-robot trajectories. This is data collected from actual humanoid robots (like the Fourier GR-1 or Unitree G1) being teleoperated (controlled by a human) to perform various tasks. This data grounds the model in the complexities and nuances of the real world and the specific robot embodiment. While this data is the most valuable for fine-grained control, it’s also the scarcest.
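The middle and base layers above both depend on pseudo-action labels for action-free video. Such labels can come from latent actions or from an Inverse Dynamics Model (IDM), and the sketch below shows both routes in miniature. Everything in it is hypothetical: the 2-d codebook, the idea of summarizing each frame as a gripper position, and `toy_idm`. Real latent-action codebooks and IDMs are learned networks operating on pixels.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def quantize(latent: Vector, codebook: List[Vector]) -> int:
    """Latent-action route: snap a continuous latent to its nearest codebook entry."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((x - c) ** 2 for x, c in zip(latent, codebook[i])),
    )


def idm_label(
    frames: List[Vector],
    inverse_dynamics: Callable[[Vector, Vector], Vector],
) -> List[Tuple[Vector, Vector]]:
    """IDM route: predict the action between every pair of consecutive frames."""
    return [
        (frames[t], inverse_dynamics(frames[t], frames[t + 1]))
        for t in range(len(frames) - 1)
    ]


def toy_idm(current: Vector, nxt: Vector) -> Vector:
    """Toy IDM: treat each 'frame' as a 2-d gripper position and return the displacement.
    A learned IDM would map raw pixels from both frames to the robot's action space."""
    return [b - a for a, b in zip(current, nxt)]


# Hypothetical 2-d codebook; real latent-action codebooks are learned and larger.
CODEBOOK = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
latent_code = quantize([0.9, 0.2], CODEBOOK)  # latent inferred from a frame pair

# A short "generated video", already summarized as gripper positions.
video = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.1], [0.2, 0.3]]
labeled_pairs = idm_label(video, toy_idm)

print(latent_code, len(labeled_pairs))
```

Either way, the output is the same kind of (observation, action) pair that real teleoperation data provides. That is what lets these videos join the same training mixture.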

The Co-Training Strategy:

GR00T N1.5 is pre-trained end-to-end using this entire data pyramid. The model learns to process (annotated) video datasets, synthetic datasets, and real-robot trajectories by sampling training batches from this diverse mixture. This co-training strategy ensures that GR00T N1.5 develops broad visual and behavioral priors from the lower layers while being finely tuned for embodied, real-robot execution by the top layer.
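Sampling batches from a mixture can be sketched in a few lines. This version picks a data source for each example according to mixture weights. The weights and source names are hypothetical; this article does not state the actual GR00T N1.5 mixture ratios.

```python
import random
from collections import Counter
from typing import Dict, List


def sample_batch_sources(weights: Dict[str, float], batch_size: int, seed: int = 0) -> List[str]:
    """Pick which data source each example in a batch is drawn from."""
    rng = random.Random(seed)
    sources = list(weights)
    return rng.choices(sources, weights=[weights[s] for s in sources], k=batch_size)


# Hypothetical mixture weights, for illustration only.
MIXTURE = {
    "human_video": 0.3,
    "neural_trajectories": 0.2,
    "simulation": 0.2,
    "real_robot": 0.3,
}

batch = sample_batch_sources(MIXTURE, batch_size=1000)
print(Counter(batch))
```

Because every batch mixes sources, each gradient step sees broad priors and embodiment-specific data together, rather than training on them in separate phases.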

Fine-tuning for Specifics:

After this extensive pre-training, the model can be further fine-tuned on smaller, custom datasets for specific robot embodiments, tasks, or environments. During this phase, the language component of the VLM is kept frozen to retain its strong language understanding, while other parts of the model are adjusted. This allows researchers and developers to adapt the powerful base model to their unique needs with relatively little data. For example, the GR00T N1.5 model is available on Hugging Face, and there are scripts and notebooks to help users fine-tune it on their own data.
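Mechanically, "keeping part of the model frozen" means the optimizer never updates those weights. Here is a minimal illustration with plain SGD over named parameter groups. The group names, values, and learning rate are hypothetical. In PyTorch, the same effect is usually achieved by setting `requires_grad = False` on the frozen parameters.

```python
from typing import Dict, List


def sgd_step(
    params: Dict[str, List[float]],
    grads: Dict[str, List[float]],
    trainable: Dict[str, bool],
    lr: float = 0.1,
) -> Dict[str, List[float]]:
    """Apply one SGD update, skipping any parameter group marked as frozen."""
    updated = {}
    for name, values in params.items():
        if trainable.get(name, True):
            updated[name] = [w - lr * g for w, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)  # frozen: weights are left untouched
    return updated


params = {"vlm": [1.0, 2.0], "action_head": [0.5, -0.5]}
grads = {"vlm": [0.3, 0.3], "action_head": [1.0, -1.0]}
trainable = {"vlm": False, "action_head": True}  # freeze the VLM, train the rest

new_params = sgd_step(params, grads, trainable)
print(new_params)
```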

This comprehensive training approach, combining real, synthetic, and web-scale data, is what gives GR00T N1.5 its impressive ability to understand language, perceive the world, and act intelligently in a wide range of situations.

Key Performance Highlights of GR00T N1.5: Putting Knowledge into Action

We’ve talked about GR00T N1.5’s sophisticated architecture and comprehensive training. But the real question is: how well does it actually perform? The proof, as they say, is in the pudding, or in this case, in the robot’s ability to complete tasks successfully. GR00T N1.5 delivers impressive results, with significant improvements over its predecessor, GR00T N1, and strong capabilities in areas that matter for robotics.

Here are some of the standout performance improvements:

- Vastly Improved Language Following: This is a big one. One of the primary goals for a VLA model is to accurately follow language instructions. GR00T N1.5 shows a dramatic leap here. For instance, in tasks on the real GR-1 humanoid robot where it had to pick a specific fruit from two options and place it on a plate based on a language command, GR00T N1.5 achieved a language following rate of 93.3%. Compare that to GR00T N1’s 46.6% on the same tasks! This means N1.5 is much more reliable at understanding which object you want it to interact with and what you want it to do with it. This improvement is largely attributed to the frozen, enhanced VLM and the refined adapter architecture.

- Better Data Efficiency and Few-Shot Learning: Training robots often requires a lot of data. GR00T N1.5 demonstrates better performance even when data is scarce.

- Low-Data Regimes: In the RoboCasa simulation benchmark, when trained with only 30 demonstrations per task, GR00T N1.5 significantly outperformed N1 (47.5% vs. 17.4% success).

- Zero-Shot Generalization (for some aspects): Because N1.5’s pre-training mixture includes other simulated GR-1 tasks with the same embodiment, it shows better zero-shot performance on new Sim GR-1 tasks (43.9% vs. 39.6% for N1). It also reached a 15% success rate when picking and placing novel objects without any demonstrations of those specific objects, whereas GR00T N1 scored 0%.

- Real-World Data Efficiency: When post-trained on real-world tasks with the GR-1 humanoid using just 10% of the human teleoperation dataset, GR00T N1.5 performed almost as well as (and in some task categories better than) the older Diffusion Policy baseline trained on the full dataset. This highlights its ability to learn effectively from far less data, which is a huge advantage.

- Superior Generalization to Novel Objects and Environments:

- Novel Objects: GR00T N1.5 is better at manipulating objects it hasn’t seen during its pre-training. When further post-trained using FLARE on human videos that included these novel objects, its success rate jumped to 55.0% for those objects.

- Novel Behaviors (Thanks to DreamGen): The integration of DreamGen allows GR00T N1.5 to perform tasks involving new verbs (actions) that were not in its original teleoperation data. It achieved a 38.3% success rate across 12 new DreamGen-introduced tasks, compared to only 13.1% for GR00T N1, which mostly just repeated pick-and-place behaviors.

- Environment Generalization: DreamGen’s video world model, fine-tuned on data from only a single environment, could still generate realistic robot videos when prompted with initial frames from completely new environments. Policies trained solely on these neural trajectories achieved non-trivial success rates (28.5% on average for new behaviors in new environments). By contrast, the baseline GR00T N1, trained only on single-environment pick-and-place data, scored 0% in these new environments.

- Broader Robot Compatibility (New Embodiment Heads): GR00T N1.5 isn’t just for one type of robot control. It comes with added support for different robot configurations through EmbodimentTag:

- EmbodimentTag.OXE_DROID: Optimized for single-arm robots using end-effector (EEF) control (controlling the position and orientation of the robot’s “hand”).

- EmbodimentTag.AGIBOT_GENIE1: Built for humanoid robots with simpler grippers (as opposed to dexterous hands), still using joint space control.

This expands its usability beyond just joint space control for highly dexterous humanoids, making the powerful foundation model accessible to a wider range of robot platforms.

- Strong Performance on New Robots (e.g., Unitree G1): When post-trained with 1,000 teleoperation episodes on the Unitree G1 robot (a different humanoid), GR00T N1.5 achieved a 98.8% success rate on placing known fruits, compared to 44.0% for N1. It also showed an impressive 84.2% success rate when dealing with previously unseen objects on the G1.

These performance metrics clearly indicate that the architectural and data strategy enhancements in GR00T N1.5 have translated into a more capable, adaptable, and efficient foundation model for humanoid robotics. It’s better at understanding you, better at learning with less data, and better at taking its skills to new places and objects.

Getting Hands-On: The GR00T N1.5 Code Pipeline

Alright, we’ve talked about the “what” and “why” of GR00T N1.5. Now for the really exciting part: how can you start experimenting with it? NVIDIA has made GR00T N1.5 an open foundation model, and the code is available on GitHub. This section will walk you through the general procedure to get up and running, assuming you’re keen to dive in.

Think of this as your starter map for an adventure. The treasure? A powerful VLA model you can fine-tune and deploy!

Before You Begin: The Prerequisites

Every good quest has some requirements. For GR00T N1.5, you’ll generally need:

- Operating System: The code has been tested on Ubuntu 20.04 and 22.04.

- GPU Power: For fine-tuning, NVIDIA recommends GPUs like the H100, L40, RTX 4090, or A6000. For inference (just running the pre-trained model), an RTX 3090, RTX 4090, or A6000 should work. GPU availability will influence training times.

- CUDA: You’ll need CUDA version 12.4. This is crucial, especially for getting modules like flash-attn to work correctly. Instructions for installing CUDA are readily available on NVIDIA’s developer site.

- TensorRT (Optional but Recommended for Deployment): If you plan to deploy the model for optimized inference, especially on platforms like Jetson, installing TensorRT is a good idea.

- Python: Python 3.10 is the recommended version.

- System Dependencies: Make sure you have ffmpeg, libsm6, and libxext6 installed on your system. These are common libraries used for image and video processing.
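Before installing anything, you can sanity-check several of these prerequisites with a small standard-library script. `check_prerequisites` is a hypothetical helper, not part of the GR00T repository. It only checks that the tools and libraries are present: it does not verify the CUDA version or the GPU model. `nvidia-smi` and `nvcc` are used as rough proxies for a driver and a CUDA toolkit.

```python
import ctypes.util
import shutil
import sys
from typing import Dict


def check_prerequisites() -> Dict[str, bool]:
    """Report whether a few of the prerequisites listed above appear to be installed."""
    return {
        "python_3_10": sys.version_info[:2] == (3, 10),
        "ffmpeg": shutil.which("ffmpeg") is not None,
        "libsm6": ctypes.util.find_library("SM") is not None,
        "libxext6": ctypes.util.find_library("Xext") is not None,
        "nvidia_smi": shutil.which("nvidia-smi") is not None,  # proxy for an NVIDIA driver
        "nvcc": shutil.which("nvcc") is not None,  # proxy for a CUDA toolkit (version not checked)
    }


status = check_prerequisites()
for name, ok in status.items():
    print(f"{name:12s} {'OK' if ok else 'missing'}")
```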

Step 1: Clone the Repository

First things first, you need the code! Open your terminal and run:

```bash
git clone https://github.com/NVIDIA/Isaac-GR00T
cd Isaac-GR00T
```

This downloads the entire project to your local machine.

Step 2: Set Up Your Conda Environment

It’s always a good practice to create a dedicated environment for your projects to avoid conflicting dependencies. Conda is a great tool for this:

```bash
conda create -n gr00t python=3.10
conda activate gr00t
```

Step 3: Install the Dependencies

Now, install the necessary Python packages. The repository is packaged so that pip can install it in editable mode, along with its dependencies:

```bash
pip install --upgrade setuptools
pip install -e .[base]
pip install --no-build-isolation flash-attn==2.7.1.post4
```

The .[base] part installs the core dependencies. flash-attn is a specific library for efficient attention mechanisms, and installing the correct version is important. Remember that CUDA 12.4 is key here!

Step 4: Understanding the Data – LeRobot Compatibility

GR00T N1.5 uses a data schema compatible with Hugging Face’s LeRobot, extended with extra metadata for richer modality and annotation information. This means your robot demonstration data (videos, states, and actions) needs to be in this format.

```python
from gr00t.data.dataset import LeRobotSingleDataset, ModalityConfig
from gr00t.data.embodiment_tags import EmbodimentTag
from gr00t.experiment.data_config import DATA_CONFIG_MAP

# Get the data config for the GR-1 arms-only setup
data_config = DATA_CONFIG_MAP["fourier_gr1_arms_only"]

# Get the modality configs and transforms
modality_config = data_config.modality_config()
transforms = data_config.transform()

# LeRobotSingleDataset loads the data from the given dataset path
dataset = LeRobotSingleDataset(
    dataset_path="demo_data/robot_sim.PickNPlace",
    modality_configs=modality_config,
    transforms=None,  # we can choose not to apply any transforms here
    embodiment_tag=EmbodimentTag.GR1,  # the embodiment to use
)

# Access a single sample (returned as a dictionary)
print(dataset[5])
```

- Data Structure: Your dataset will likely consist of (video, state, action) triplets. The GitHub repository (getting_started/LeRobot_compatible_data_schema.md) provides a detailed explanation of this format. You’ll also find an example dataset in ./demo_data/robot_sim.PickNPlace.

- EmbodimentTag: The system uses EmbodimentTag to handle different robot types (e.g., EmbodimentTag.GR1 for the GR-1 humanoid).

- ModalityConfig: This defines the types of data used (e.g., video, joint states, end-effector poses, actions).

The repository includes Jupyter notebooks (./getting_started/0_load_dataset.ipynb) and Python scripts (./scripts/load_dataset.py) to help you understand how to load and process data in this format. You can try running the example script:

```bash
python scripts/load_dataset.py --dataset-path ./demo_data/robot_sim.PickNPlace
```
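When inspecting samples, it can help to group their keys by modality. This sketch assumes flat keys of the form `modality.name` (for example `state.left_arm`). The key names below are a hypothetical layout, so compare them with what `load_dataset.py` prints for your dataset. `group_by_modality` is an illustrative helper, not a repository function.

```python
from collections import defaultdict
from typing import Any, Dict, List


def group_by_modality(sample: Dict[str, Any]) -> Dict[str, List[str]]:
    """Group flat keys like 'state.left_arm' under their modality prefix ('state')."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for key in sample:
        modality, _, _ = key.partition(".")
        groups[modality].append(key)
    return dict(groups)


# Hypothetical sample layout; values are placeholders rather than real arrays.
sample = {
    "video.ego_view": "...",
    "state.left_arm": "...",
    "state.right_arm": "...",
    "action.left_arm": "...",
    "annotation.human.action.task_description": "...",
}

for modality, keys in group_by_modality(sample).items():
    print(modality, keys)
```

With the real pipeline, you would pass a loaded sample instead, for example `group_by_modality(dataset[5])`.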

Step 5: Running Inference (Trying Out the Pre-trained Model)

Want to see GR00T N1.5 in action without training it yourself yet? You can! The pre-trained model is hosted on Hugging Face (nvidia/GR00T-N1.5-3B).

PyTorch Inference: The getting_started/1_gr00t_inference.ipynb notebook shows how to load the pre-trained model and run inference on a sample from the dataset. In outline, you:

- Load your modality configurations and transforms.

- Load your dataset.

- Load the Gr00tPolicy from the specified Hugging Face model path.

- Pass a data sample to the policy to get an action.

```python
from gr00t.data.dataset import LeRobotSingleDataset
from gr00t.data.embodiment_tags import EmbodimentTag
from gr00t.experiment.data_config import DATA_CONFIG_MAP
from gr00t.model.policy import Gr00tPolicy

# 1. Load the modality config and transforms (same data config as in Step 4)
data_config = DATA_CONFIG_MAP["fourier_gr1_arms_only"]
modality_config = data_config.modality_config()
transforms = data_config.transform()

# 2. Load the dataset; transforms are left to the policy, which applies them itself
dataset = LeRobotSingleDataset(
    dataset_path="demo_data/robot_sim.PickNPlace",
    modality_configs=modality_config,
    transforms=None,
    embodiment_tag=EmbodimentTag.GR1,
)

# 3. Load the pre-trained model from Hugging Face
policy = Gr00tPolicy(
    model_path="nvidia/GR00T-N1.5-3B",
    modality_config=modality_config,
    modality_transform=transforms,
    embodiment_tag=EmbodimentTag.GR1,
    device="cuda",
)

# 4. Run inference on one sample; the result is a chunk of predicted future actions
action_chunk = policy.get_action(dataset[0])
```
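Because the policy predicts a chunk of future actions rather than a single command, a common way to use it on a robot is receding-horizon execution. You run the first few actions of each chunk, then query the policy again with a fresh observation. The sketch below shows that loop with stand-in callables. The 16-step chunk, the 8-step execution horizon, `fake_policy`, and `run_chunked_control` are all hypothetical; the repository's own robot clients may structure this differently.

```python
from typing import Callable, List, Sequence

Action = List[float]


def run_chunked_control(
    get_action_chunk: Callable[[int], Sequence[Action]],
    send_command: Callable[[Action], None],
    total_steps: int,
    exec_horizon: int = 8,
) -> int:
    """Receding-horizon execution: run the first `exec_horizon` actions of each
    predicted chunk, then query the policy again. Returns the number of queries."""
    queries = 0
    step = 0
    while step < total_steps:
        chunk = get_action_chunk(step)  # would wrap policy.get_action(latest_observation)
        queries += 1
        if not chunk:
            raise ValueError("policy returned an empty action chunk")
        for action in chunk[:exec_horizon]:
            if step >= total_steps:
                break
            send_command(action)
            step += 1
    return queries


# Hypothetical stand-ins: a "policy" that returns 16 actions per call and a
# "robot" that just records every command it receives.
def fake_policy(step: int) -> List[Action]:
    return [[float(step + i)] for i in range(16)]


sent: List[Action] = []
num_queries = run_chunked_control(fake_policy, sent.append, total_steps=40, exec_horizon=8)
print(num_queries, len(sent))
```

A shorter execution horizon reacts faster to new observations but calls the model more often. A longer one is cheaper but more open-loop.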

Inference Service: You can also run an inference service in server mode:

```bash
python scripts/inference_service.py --model-path nvidia/GR00T-N1.5-3B --server
```

Then, in a different terminal, send requests in client mode:

```bash
python scripts/inference_service.py --client
```

Step 6: Fine-Tuning the Model

This is where you can adapt the pre-trained GR00T N1.5 model to your own specific robot, tasks, or datasets.

- Get Your Data Ready: Ensure your custom dataset is in the LeRobot-compatible format mentioned in Step 4.

- Run the Fine-tuning Script: The scripts/gr00t_finetune.py script is your main tool. Run it with your dataset path:

```bash
python scripts/gr00t_finetune.py --dataset-path ./path_to_your_dataset --num-gpus 1
```

P.S. The getting_started folder contains all the interactive tutorial notebooks, which are worth working through as well.

Hugging Face has also published a detailed guide to fine-tuning GR00T N1.5 on the LeRobot SO-101 arm. We recommend checking it out if you have access to a high-end GPU.


This pipeline provides a comprehensive toolkit for exploring, using, and customizing GR00T N1.5. The Jupyter notebooks and scripts provided in the repository are invaluable resources for understanding each step in detail. Now let’s recap what we have learned so far!

Quick Recap: GR00T N1.5 in a Nutshell

We’ve covered a lot of ground exploring NVIDIA’s GR00T N1.5! Let’s quickly recap the main takeaways to reinforce what makes this model a game-changer for humanoid robotics:

- A Leap in Generalized Robot Learning: GR00T N1.5 is an open foundation VLA (Vision-Language-Action) model that significantly enhances a humanoid robot’s ability to understand multimodal instructions (vision and language) and perform a wide variety of tasks, moving beyond single-task programming.

- Smarter Architecture, Better Performance: Key architectural improvements over GR00T N1, such as a frozen and enhanced VLM (Vision-Language Model) for better language grounding, a simplified adapter, and the integration of FLARE (for learning from human videos) and DreamGen (for synthetic behavior generation), lead to superior language following, data efficiency, and generalization.

- Innovative Data Strategy: GR00T N1.5 is trained using a “data pyramid” that combines real-robot data, diverse synthetic data (including neural trajectories from DreamGen and simulation data), and broad web-scale/human video data. This approach allows it to learn robustly despite the scarcity of real-world robot demonstrations.

- Impressive Real-World Capabilities: The model demonstrates significant performance gains in following language commands (93.3% on GR-1 language tasks), generalizing to novel objects and behaviors (even those learned from synthetic data), and adapting to new robot embodiments (like the Unitree G1) with high success rates, often with limited fine-tuning data.

- Accessible and Adaptable: As an open model with code available on GitHub, GR00T N1.5 provides researchers and developers with a powerful tool and a clear pipeline for fine-tuning on custom datasets and deploying on various robot platforms, accelerating progress in the field.

These points highlight how GR00T N1.5 is pushing the boundaries of what’s possible, paving the way for more intelligent, adaptable, and versatile humanoid robots.

Conclusion: The Dawn of More Human-Like Robots

With NVIDIA’s GR00T N1.5, we’re taking a significant step closer to robots that pick up new skills the way people do. This isn’t just another incremental update; it’s a testament to how far we’ve come in building more generalized AI for physical systems. GR00T N1.5 combines a frozen, grounding-enhanced VLM with a Diffusion Transformer (DiT) action module. It trains on everything from real robot movements to synthetic “dreams” (thanks, DreamGen!) and human videos. Together, these equip humanoid robots with a more foundational, human-like understanding of the world.

Build your first robot, experiment with the model, and share some cool experiments with us!

See you in the next one, Bye 😀

References

GR00T N1.5 – An Improved Open Foundation Model for Generalist Humanoid Robots
