If you’ve ever watched two toddlers swap toys without an adult translating (“Truck!” … “Dino!” … trade accepted), you’ve glimpsed the vision behind Google’s A2A Protocol. We live in an age where artificial intelligence (AI) systems increasingly need to talk to each other. Whether they’re summarizing documents, generating images, or making recommendations, these AI “agents” must coordinate to get real work done. That’s where Google’s Agent2Agent Protocol comes into the picture. If you’ve ever wondered how we’ll wrangle a sprawling set of specialized AI models so they can communicate seamlessly, you’ll want to keep reading.

This blog post will serve as an A2A protocol overview, shedding light on the need for standardization in multi-agent AI environments and unveiling key aspects of Google’s A2A architecture. We’ll keep it friendly, but we’re also going to dive into some technical nuances.

Ready to peek under the hood? Let’s get started then!

- A Quick Story: AI Agents at Work

- Why We Need a Standardized Protocol

- A2A Protocol Overview: The Core Concept

- Google’s A2A Protocol vs. Traditional Approaches

- Using MCP with A2A for Scalable Agents

- Real-World Example: Candidate Sourcing

- Challenges and Future Possibilities

- Conclusion and Next Steps

- References

A Quick Story: AI Agents at Work

Let’s say you’re building a multi-agent system to summarize breaking news and publish digestible updates across multiple platforms. You’ve got a fleet of AI agents, each with its own specialty:

- Agent A (News Scraper + Search Tool): Scans multiple RSS feeds, indexed news APIs, and even open web content for trending headlines and stories. It performs semantic search and keyword extraction to detect breaking news.

- Agent B (Summarizer): Takes raw article content, strips out boilerplate, and uses a transformer-based model (like T5 or GPT) to generate concise summaries, optimized for mobile reading or Twitter threads.

- Agent C (Distribution Manager): Decides how to format and publish the summaries to various platforms like email newsletters, social media, or in-app notifications, based on audience segmentation and past engagement data.

Here’s the challenge: these agents are great at what they do, but they don’t naturally “speak the same language.”

Enter Google’s A2A Protocol.

With A2A, each agent follows a shared schema and messaging flow.

So instead of Agent A throwing raw data over the wall and hoping for the best:

- Agent A emits a `NewStoryDetected` message with fields like `title`, `source`, `content`, and `tags`.

- Agent B listens for this message type and knows exactly how to parse it, because all the agents share the same message schema. It replies with a `StorySummaryReady` event containing a human-readable summary, along with estimated reading time, tone analysis, and a compression score.

- Agent C subscribes to `StorySummaryReady` events and checks publishing rules. It responds with a `DistributionAction` event that triggers publishing across the correct channels, with minimal orchestration code.
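To make the flow concrete, here is a minimal Python sketch of the three message types as typed records, plus helpers that serialize each message to JSON and parse it back on the receiving side. The type names and fields come from the story above. The `kind` discriminator field, the helper names, and the toy summarizing and publishing rules are assumptions for illustration only. They are not part of the A2A specification or any official SDK.

```python
import json
from dataclasses import asdict, dataclass, field

# Hypothetical message schemas; field names follow the news-pipeline story.
@dataclass
class NewStoryDetected:
    title: str
    source: str
    content: str
    tags: list[str] = field(default_factory=list)

@dataclass
class StorySummaryReady:
    title: str
    summary: str
    reading_time_min: float
    tone: str
    compression_score: float

@dataclass
class DistributionAction:
    title: str
    channels: list[str]

# Maps the "kind" tag on the wire to the schema used to parse it (an assumed convention).
MESSAGE_TYPES = {cls.__name__: cls for cls in (NewStoryDetected, StorySummaryReady, DistributionAction)}

def to_json(msg) -> str:
    """Serialize a message, tagging it with its kind so receivers can dispatch on it."""
    return json.dumps({"kind": type(msg).__name__, **asdict(msg)})

def parse_message(raw: str):
    """Rebuild a typed message; an unknown kind raises KeyError, bad fields raise TypeError."""
    data = json.loads(raw)
    cls = MESSAGE_TYPES[data.pop("kind")]
    return cls(**data)

def summarizer(story: NewStoryDetected) -> StorySummaryReady:
    """Agent B. A naive first-20-words cut stands in for a real summarization model."""
    summary = " ".join(story.content.split()[:20])
    return StorySummaryReady(
        title=story.title,
        summary=summary,
        reading_time_min=round(len(summary.split()) / 200, 2),  # assumes ~200 words per minute
        tone="neutral",                                         # placeholder for tone analysis
        compression_score=len(summary) / max(len(story.content), 1),
    )

def distribution_manager(summary: StorySummaryReady) -> DistributionAction:
    """Agent C. Toy publishing rule: short summaries go to social, longer ones to email."""
    channels = ["social"] if len(summary.summary) <= 280 else ["email"]
    return DistributionAction(title=summary.title, channels=channels)

# Each hop crosses the wire as JSON and is parsed back into a typed message.
raw_story = to_json(NewStoryDetected("Rates hold", "example-wire",
                                     "The central bank kept rates unchanged today.", ["economy"]))
summary = parse_message(to_json(summarizer(parse_message(raw_story))))
action = parse_message(to_json(distribution_manager(summary)))
print(action)
```

Because every hop goes through `to_json` and `parse_message`, a malformed or unknown message fails immediately at the boundary instead of deep inside an agent's logic.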

Instead of custom APIs and glue code between every component, the A2A Protocol acts as the universal handshake—a shared language that keeps the entire pipeline clean, modular, and scalable.

Now, you can easily plug in a new Agent D that does fact-checking or Agent E that performs tone optimization for different audiences, and they can join the conversation seamlessly.

Why We Need a Standardized Protocol

The Challenges of Agent Communication

- Redundant Work: Every time you add a new AI agent, you need to figure out how it speaks to the rest of your ecosystem, leading to redundant or “copy-paste” integration code.

- Compatibility Headaches: Suppose your NLP model runs in Python and your knowledge graph is hosted in a Java-based microservice. Each might serialize and pass around data differently, making it hard for them to work together.

- Scaling Bottlenecks: As you incorporate more specialized agents (e.g., image recognition, forecasting, robotics), the number of integrations grows quickly. Non-standardized communication turns into a tangled mess, hurting your ability to innovate quickly.
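A quick back-of-the-envelope calculation shows why the complexity grows so fast. If every pair of agents needs its own bespoke glue code, n agents need n(n-1)/2 integrations. If every agent instead implements one shared protocol, you need only n adapters. The sketch below tabulates both counts. It assumes the worst case, where every agent must be able to talk to every other agent.

```python
def point_to_point_integrations(n: int) -> int:
    """Bespoke glue: one custom integration per unordered pair of agents."""
    return n * (n - 1) // 2

def shared_protocol_adapters(n: int) -> int:
    """Shared protocol: each agent implements the protocol exactly once."""
    return n

for n in (3, 5, 10, 20):
    print(f"{n:>2} agents: {point_to_point_integrations(n):>3} custom integrations "
          f"vs {shared_protocol_adapters(n):>2} protocol adapters")
```

At 20 agents, that works out to 190 custom integrations versus 20 adapters, and the gap keeps widening with every agent you add.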

The Vision of A2A

- A2A = Agent-to-Agent: A shared set of rules (like an API specification and underlying data model) that ensures every agent “talks” in a consistent way.

- Plug-and-Play: If your newly deployed agent follows the same A2A guidelines, it can instantly exchange messages with the rest of the system.

- Unified Monitoring & Security: Standard protocols often integrate security features (authentication, authorization) and logging so you can control who does what, where, and when.

A2A Protocol Overview: The Core Concept

So, what exactly is Google’s A2A Protocol? In short, it’s an open specification (Google published it along with sample code) that defines how AI agents communicate at the messaging level. Think of how web browsers talk to web servers via HTTP, but for AI agents specifically. In fact, A2A itself is built on top of HTTP.

Key Components of an A2A Protocol

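- Message Format: The structure of requests and responses. In A2A, these are JSON-RPC 2.0 payloads, and each message carries a role (“user” for the requesting side, “agent” for the responding side) plus one or more parts: plain text, files, or structured JSON data.

- Transport Mechanism: How messages travel between agents. A2A uses standard web protocols: HTTP(S) for requests, with Server-Sent Events (SSE) for streaming progress on long-running tasks. Other multi-agent systems use RPC frameworks such as gRPC or publish/subscribe protocols such as MQTT.

- Discovery: How an agent finds other agents. In A2A, each agent publishes an Agent Card, a JSON document (typically served from a well-known URL) that describes its name, endpoint, skills, and authentication requirements. This is loosely analogous to DNS on the internet or a service registry in the microservices world.

- Security and Authentication: Ensuring that only authorized agents can talk to each other and that sensitive data is encrypted in transit. A2A agents declare the authentication schemes they accept in their Agent Card.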
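Here is roughly what an A2A request looks like on the wire, built with nothing but the Python standard library. It uses the JSON-RPC 2.0 envelope (`jsonrpc`, `id`, `method`, `params`), and inside it an A2A message with a `role` and a list of `parts`. The method name `tasks/send`, the task `id` inside `params`, and the `type` tag on each part follow the early public draft of the spec. Later revisions renamed some of these (the send method, for instance, became `message/send`), so treat this as an illustration and check the current specification before relying on exact names. The helper function name is hypothetical.

```python
import json
import uuid
from typing import Optional

def build_a2a_send_request(text: str, data: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 request asking a remote agent to start a task.

    Names follow the early A2A draft ("tasks/send", parts tagged with "type");
    later spec revisions renamed some of them.
    """
    parts = [{"type": "text", "text": text}]
    if data is not None:
        # Structured payloads ride alongside the text as a separate data part.
        parts.append({"type": "data", "data": data})
    request = {
        "jsonrpc": "2.0",                   # JSON-RPC 2.0 version marker
        "id": 1,                            # lets the client match the response to this call
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),        # task ID generated by the client agent
            "message": {"role": "user", "parts": parts},
        },
    }
    return json.dumps(request, indent=2)

print(build_a2a_send_request(
    "Summarize this story for a mobile audience.",
    data={"title": "Rates hold", "tags": ["economy"]},
))
# A client agent would POST this body over HTTPS to the URL listed in the
# remote agent's Agent Card, then read back a JSON-RPC response.
```

Keeping the envelope generic (JSON-RPC) and the content typed (parts) is what lets any agent parse any other agent's messages without knowing its internals.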

Why Standardization Matters

Consider the early days of the internet. Without a universal protocol like TCP/IP or HTTP, every company might’ve built its own communication method (and we’d have a million “mini-internets” that don’t talk to each other). Similarly, if each AI agent has its own method of message exchange, we can’t truly harness multi-agent synergy.

Quick Review: What aspects of a protocol (message format, transport, or security) do you find most critical for your AI use cases?

Google’s A2A Protocol vs. Traditional Approaches

Traditional AI Integration

- API Explosion: Typically, you have a set of REST endpoints (or maybe GraphQL) for each AI service. Each endpoint has its own request/response structure.

- Coupling: Because your system is coded to each service’s custom interface, switching to a new model or adding an extra step in your workflow often breaks existing code.

Google’s A2A Architecture

By contrast, in Google’s A2A architecture, integration is not about custom endpoints but about adopting a unifying specification. Each agent implements the protocol’s interface. Conceptually, that interface covers three jobs (the names below are illustrative; the published spec covers the same ground with Agent Cards for advertising capabilities and task-based requests between agents):

- RegisterAgent: A way for an agent to say, “Hey, I’m here, and these are my capabilities.”

- PublishIntent: A way for an agent to broadcast an intent to other agents (e.g., “I have a summarized text that might need classification.”)

- SubscribeAndRespond: A way for an agent to listen for relevant broadcasts and respond in a standardized way.

This approach decouples your system from the nitty-gritty of each service. It’s reminiscent of how microservices use events and messaging to stay loosely coupled, but specialized for AI tasks.
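The RegisterAgent / PublishIntent / SubscribeAndRespond idea can be sketched as a tiny in-memory broker. This is a hypothetical, single-process toy meant only to show the decoupling: publishers never reference a specific agent, only an intent name, so a new agent (like the fact-checking Agent D from the news example) joins by registering and subscribing. The class and method names mirror the conceptual operations; they are not an A2A SDK API, and a real deployment would run agents as separate HTTP services.

```python
from collections import defaultdict, deque
from typing import Callable, Optional, Tuple

Message = Tuple[str, dict]                     # (intent name, payload)
Handler = Callable[[dict], Optional[Message]]  # a handler may emit one follow-up message

class Broker:
    """Toy in-process broker for the register / publish / subscribe-and-respond idea."""

    def __init__(self) -> None:
        self.agents: dict[str, list[str]] = {}                        # agent name -> capabilities
        self.subscribers: dict[str, list[Handler]] = defaultdict(list)  # intent -> handlers
        self.log: list[str] = []                                      # intents in delivery order

    def register_agent(self, name: str, capabilities: list[str]) -> None:
        """'RegisterAgent': announce an agent and what it can do."""
        self.agents[name] = capabilities

    def subscribe(self, intent: str, handler: Handler) -> None:
        """'SubscribeAndRespond': call handler whenever intent is published."""
        self.subscribers[intent].append(handler)

    def publish_intent(self, intent: str, payload: dict) -> None:
        """'PublishIntent': deliver a message, then any follow-ups, in FIFO order."""
        queue = deque([(intent, payload)])
        while queue:
            current, data = queue.popleft()
            self.log.append(current)
            for handler in self.subscribers[current]:
                follow_up = handler(data)
                if follow_up is not None:
                    queue.append(follow_up)

broker = Broker()
broker.register_agent("summarizer", ["summarize"])
broker.register_agent("distributor", ["publish"])
broker.subscribe("NewStoryDetected",
                 lambda d: ("StorySummaryReady", {"title": d["title"], "summary": d["content"][:40]}))
broker.subscribe("StorySummaryReady",
                 lambda d: ("DistributionAction", {"channels": ["email"], **d}))

# Plugging in Agent D touches no existing code: it just registers and subscribes.
fact_checks = []

def fact_checker(data: dict) -> None:
    fact_checks.append(data["title"])   # queue the story for verification; emits no follow-up

broker.register_agent("fact_checker", ["verify"])
broker.subscribe("NewStoryDetected", fact_checker)

broker.publish_intent("NewStoryDetected",
                      {"title": "Rates hold", "content": "The central bank kept rates unchanged."})
print(broker.log)     # ['NewStoryDetected', 'StorySummaryReady', 'DistributionAction']
print(fact_checks)    # ['Rates hold']
```

Note that neither the summarizer nor the distributor had to change when the fact-checker was added; that is the loose coupling the protocol is meant to buy you.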

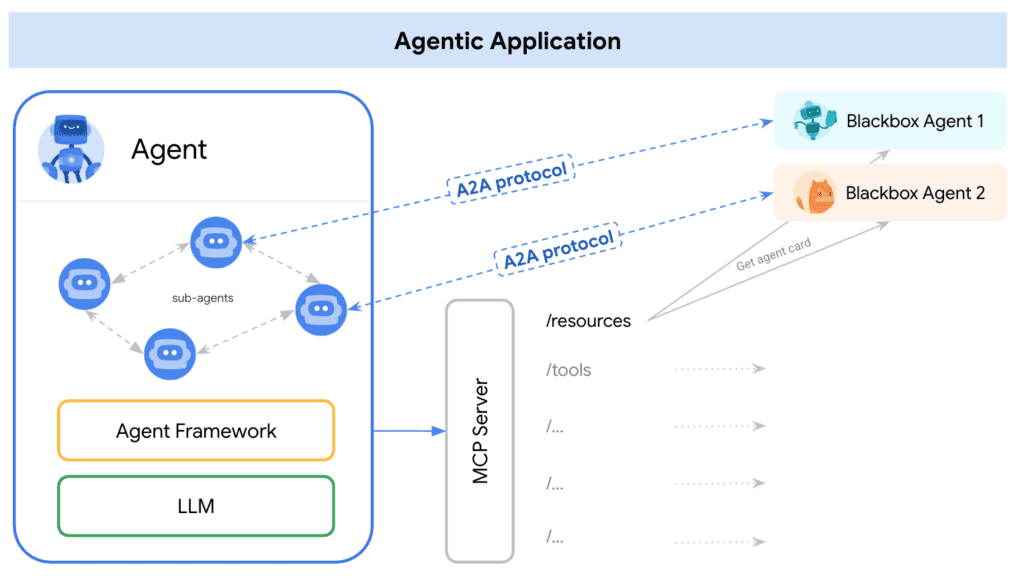

Using MCP with A2A for Scalable Agents

Now that we have a handle on Google’s A2A Protocol, you might be wondering: how does MCP (Model Context Protocol) fit into the picture?

Think about the whole ecosystem: all the agents communicate using the A2A protocol, which acts as their shared language. Each agent also connects to services like search engines, databases, and APIs to perform specific tasks, and to interact with these tools, individual agents use MCP. In this way, A2A is the language for agent-to-agent communication, while MCP is the language agents use to communicate with tools.

Distinct Roles of A2A and MCP

- A2A (Agent-to-Agent Protocol): Developed by Google, A2A focuses on enabling AI agents to discover, communicate, and collaborate with one another. It standardizes the way agents share tasks, negotiate capabilities, and coordinate actions across diverse environments.

- MCP (Model Context Protocol): Introduced by Anthropic, MCP provides a standardized method for AI models to interact with external tools, data sources, and systems. It simplifies the integration process, allowing AI agents to access necessary resources without bespoke connectors.

Synergistic Integration for Enhanced Functionality

While A2A facilitates inter-agent communication, MCP equips agents with the means to interact with external tools and data. By integrating MCP within the A2A framework, agents can not only coordinate among themselves but also access and utilize external resources effectively.

Example Scenario: Travel Planning Assistant

Consider a travel planning AI agent that needs to:

- Coordinate with Other Agents: Using A2A, the travel agent communicates with a flight booking agent and a hotel reservation agent to compile a comprehensive itinerary.

- Access External Data: When interpreting user preferences or ambiguous requests, the travel agent employs MCP to retrieve data from external sources, such as weather forecasts or local events, to provide contextually relevant suggestions.

In this scenario, A2A manages the orchestration among agents, while MCP handles the interaction with external data sources, resulting in a cohesive and efficient user experience.
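The division of labor in this scenario can be sketched in a few lines of Python. The planner delegates to peer agents through one interface (standing in for A2A requests) and fetches context through another (standing in for MCP tool calls). Everything here is hypothetical: `PeerAgents`, `Tools`, the agent and tool names, and the canned responses are placeholders, not real SDK calls. The point is the shape of the design: swapping the flight agent or the weather source touches only the corresponding implementation.

```python
from dataclasses import dataclass
from typing import Protocol

class PeerAgents(Protocol):
    """Stand-in for an A2A client: send a task to another agent and get its reply."""
    def ask(self, agent: str, request: dict) -> dict: ...

class Tools(Protocol):
    """Stand-in for an MCP client: call a named tool with arguments."""
    def call(self, tool: str, arguments: dict) -> dict: ...

@dataclass
class Itinerary:
    flight: str
    hotel: str
    notes: list[str]

def plan_trip(city: str, dates: str, peers: PeerAgents, tools: Tools) -> Itinerary:
    # Agent-to-agent (A2A role): delegate bookings to specialist agents.
    flight = peers.ask("flight_agent", {"destination": city, "dates": dates})
    hotel = peers.ask("hotel_agent", {"city": city, "dates": dates})
    # Agent-to-tool (MCP role): pull external context to tailor suggestions.
    weather = tools.call("weather_forecast", {"city": city, "dates": dates})
    events = tools.call("local_events", {"city": city, "dates": dates})
    notes = [f"Forecast: {weather['summary']}"]
    if weather.get("rain"):
        notes.append("Pack an umbrella.")
    notes += [f"Event: {name}" for name in events["events"]]
    return Itinerary(flight=flight["booking"], hotel=hotel["booking"], notes=notes)

# Canned stubs so the sketch runs without any network access or SDK.
class FakePeers:
    def ask(self, agent: str, request: dict) -> dict:
        place = request.get("destination", request.get("city"))
        return {"booking": f"{agent} confirmed for {place}"}

class FakeTools:
    def call(self, tool: str, arguments: dict) -> dict:
        if tool == "weather_forecast":
            return {"summary": "light rain", "rain": True}
        return {"events": ["Jazz night"]}

print(plan_trip("Lisbon", "2025-06-10/2025-06-14", FakePeers(), FakeTools()))
```

In a real system, `FakePeers` would be replaced by an A2A client that sends tasks to the flight and hotel agents, and `FakeTools` by an MCP client connected to the relevant tool servers; `plan_trip` itself would not need to change.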

Benefits of Combining A2A and MCP

- Security: Both protocols incorporate security measures, ensuring safe and authorized interactions among agents and with external systems.

- Modularity: Agents can be developed independently, focusing on specific tasks, and later integrated into the system using A2A.

- Scalability: New agents or tools can be added without overhauling the existing infrastructure, thanks to the standardized protocols.

- Flexibility: Agents can dynamically access a wide range of external resources through MCP, enhancing their decision-making capabilities.

Real-World Example: Candidate Sourcing

Google showcased a powerful example of candidate sourcing using multiple agents working seamlessly through the A2A protocol. The system included:

- A sourcing agent that shortlists profiles based on the provided job description.

- An interview scheduling agent that arranges interviews at preferred dates and time slots.

- A follow-up agent that sends interview details and updates about selected candidates.

Watching all these agents communicate with each other is truly something to marvel at.

Without a standardized approach, each agent might speak a different “language.” You’d be writing custom code to wire them up. With an A2A protocol:

- All data and messages pass through a known format—no guesswork.

- Each agent can discover the others’ capabilities automatically, much like looking them up in a registry (in A2A, by reading each agent’s Agent Card).

- If you need to add a new agent for more advanced forecasting, you simply register it. It then subscribes to the relevant events without rewriting your entire pipeline.
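In A2A, discovery works through Agent Cards: JSON documents in which each agent describes itself. The sketch below uses in-memory cards for the three hiring agents and finds agents by skill tag, the kind of lookup a registry or orchestrator might perform. The card fields used here (`name`, `description`, `url`, and `skills` with `id`, `name`, and `tags`) are modeled on the Agent Card format, but the values, the `example.com` URLs, and the `find_agents` helper are hypothetical. In practice, you would fetch each card over HTTP from the agent’s well-known URL rather than hard-coding it.

```python
import json

# Hypothetical Agent Cards for the hiring example (in practice, fetched over HTTP).
CARDS_JSON = """
[
  {"name": "Sourcing Agent", "description": "Shortlists candidate profiles",
   "url": "https://sourcing.example.com/a2a",
   "skills": [{"id": "shortlist", "name": "Shortlist candidates", "tags": ["sourcing", "resume"]}]},
  {"name": "Scheduling Agent", "description": "Books interview slots",
   "url": "https://scheduler.example.com/a2a",
   "skills": [{"id": "schedule", "name": "Schedule interviews", "tags": ["calendar", "interview"]}]},
  {"name": "Follow-up Agent", "description": "Sends interview details and updates",
   "url": "https://followup.example.com/a2a",
   "skills": [{"id": "notify", "name": "Notify candidates", "tags": ["email", "interview"]}]}
]
"""

def find_agents(cards: list[dict], tag: str) -> list[str]:
    """Return endpoint URLs of agents advertising at least one skill with the given tag."""
    return [
        card["url"]
        for card in cards
        if any(tag in skill.get("tags", []) for skill in card.get("skills", []))
    ]

cards = json.loads(CARDS_JSON)
print(find_agents(cards, "interview"))   # scheduling and follow-up agents both match

# Adding a new agent is just another card; nothing else in the pipeline changes.
cards.append({
    "name": "Forecasting Agent", "description": "Predicts hiring demand",
    "url": "https://forecast.example.com/a2a",
    "skills": [{"id": "forecast", "name": "Forecast demand", "tags": ["forecasting"]}],
})
print(find_agents(cards, "forecasting"))
```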

P.S. – In the next post, we’ll implement a simple multi-agent system ourselves. Don’t forget to subscribe so you don’t miss it!

Challenges and Future Possibilities

Potential Challenges

- Adoption Curve: To benefit from Google’s A2A architecture, you need multiple agents to follow the protocol. If some prefer their own way, you end up bridging them manually.

- Protocol Fragmentation: Protocol evolution can lead to version fragmentation—some agents might support “A2A v1,” others “A2A v2,” introducing compatibility issues.

- Governance: Who sets the standards for Google’s A2A protocol as it evolves? Will it become community-driven or remain controlled by a single entity? And how will it align with MCP’s governance?
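One common mitigation for version fragmentation is explicit version negotiation: each side advertises the protocol versions it supports, and both settle on the newest version they share, failing loudly if there is none. The sketch below is a generic, hypothetical helper (the function names and version strings are placeholders), not a mechanism defined by the A2A spec.

```python
from typing import Iterable, Optional

def parse_version(v: str) -> tuple[int, ...]:
    """Turn '1.10' into (1, 10) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in v.split("."))

def negotiate_version(ours: Iterable[str], theirs: Iterable[str]) -> Optional[str]:
    """Pick the highest protocol version both agents support, or None if they share none."""
    common = set(ours) & set(theirs)
    if not common:
        return None
    return max(common, key=parse_version)

print(negotiate_version(["1.0", "2.0", "2.1"], ["1.0", "2.0"]))   # 2.0
print(negotiate_version(["2.0"], ["1.0"]))                        # None: bridge or upgrade needed
```

Comparing parsed tuples rather than raw strings matters: as strings, "1.9" would sort above "1.10".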

Future Possibilities

- Industry-Wide Collaboration: If a critical mass of AI frameworks, cloud providers, and open-source projects adopt a standard A2A spec, we might see explosive growth in multi-agent solutions.

- Cross-Cloud Interoperability: The dream is that you can run some agents on Google Cloud, others on AWS, and still others on your local HPC cluster. If they all speak A2A to each other and use MCP to reach their tools and data, everyone stays in sync.

- Automated Agent Discovery: Advanced service registries could help new agents automatically find the best partner agents for a given task. A2A would provide the universal handshake, while MCP gives each agent standardized access to the tools and data it needs to do its part.

Conclusion and Next Steps

Google’s A2A Protocol isn’t just a technical curiosity; it’s potentially the next big leap in orchestrating complex, multi-agent AI systems. By providing a standardized way for agents to register, publish tasks, and securely respond to requests, it brings us one step closer to an AI ecosystem that functions more like a coherent team than a set of isolated tools. Paired with MCP for standardized access to tools and data, the potential for truly scalable and robust multi-agent systems only grows.

In practice, adopting Google’s A2A architecture requires embracing new patterns for messaging, security, and logging. However, the payoff can be enormous: agile, plug-and-play AI environments that scale without massive integration headaches.

References

Announcing the Agent2Agent Protocol (A2A), Google Developers Blog

A Visual Guide to Agent2Agent (A2A) Protocol
