In this tutorial, we will discuss the various Face Detection methods in OpenCV, Dlib, and Deep Learning and compare the methods quantitatively. We will share code in C++ and Python for the following Face Detectors:

- Haar Cascade Face Detector in OpenCV

- Deep Learning based Face Detector in OpenCV

- HoG Face Detector in Dlib

- Deep Learning based Face Detector in Dlib

We will not go into the theory of any of them and only discuss their usage. We will also share some rules of thumb on which model to prefer according to your application.

1. Haar Cascade Face Detector in OpenCV

The Haar Cascade-based Face Detector was the state of the art in Face Detection for many years after Viola and Jones introduced it in 2001. There have been many improvements in recent years. OpenCV has many Haar-based models, which can be found on GitHub.

Code

Please download the code from the link below. We have provided code snippets throughout the blog for better understanding. You will find C++ and Python files for each face detector and a separate file that compares all the methods together (run-all.py and run-all.cpp). We also share all the models required for running the code.

Python

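import cv2

faceCascade = cv2.CascadeClassifier('./haarcascade_frontalface_default.xml')
faces = faceCascade.detectMultiScale(frameGray)  # frameGray: grayscale input frame
for face in faces:
    # Each detection is (x, y, width, height) with (x, y) the top-left corner
    x1, y1, w, h = face
    x2 = x1 + w
    y2 = y1 + h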

C++

std::string faceCascadePath = "./haarcascade_frontalface_default.xml";
cv::CascadeClassifier faceCascade;
faceCascade.load(faceCascadePath);
std::vector<Rect> faces;
faceCascade.detectMultiScale(frameGray, faces);
for ( size_t i = 0; i < faces.size(); i++ )
{
    // Convert (x, y, width, height) to corner coordinates
    int x1 = faces[i].x;
    int y1 = faces[i].y;
    int x2 = faces[i].x + faces[i].width;
    int y2 = faces[i].y + faces[i].height;
}

The above code snippet loads the Haar cascade model file and applies it to a grayscale image. The output is a list containing the detected faces. Each list member is itself a list of 4 elements: the (x, y) coordinates of the top-left corner, followed by the width and height of the detected face.
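As a minimal sketch of working with that output, the helper below converts each (x, y, w, h) rectangle to corner coordinates and clips the result to the image bounds. The name `rects_to_corners` and the clipping step are illustrative assumptions and are not part of the downloadable code.

```python
def rects_to_corners(faces, frame_width, frame_height):
    """Convert (x, y, w, h) rectangles to clipped (x1, y1, x2, y2) boxes.

    `faces` is any iterable of 4-element sequences, such as the result of
    detectMultiScale. The helper name is hypothetical.
    """
    boxes = []
    for x, y, w, h in faces:
        # Clip each corner so the box stays inside the frame
        x1 = max(0, int(x))
        y1 = max(0, int(y))
        x2 = min(frame_width, int(x + w))
        y2 = min(frame_height, int(y + h))
        boxes.append((x1, y1, x2, y2))
    return boxes


if __name__ == "__main__":
    # Two made-up detections on a 640x480 frame; the second crosses the right edge
    print(rects_to_corners([(10, 20, 100, 100), (600, 50, 80, 80)], 640, 480))
```

Corner coordinates are the form used by the other three detectors in this post, so converting early makes the outputs easier to compare.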

Pros

- Works almost real-time on CPU

- Simple Architecture

- Detects faces at different scales

Cons

- The major drawback of this method is that it gives many false predictions.

- Doesn’t work on non-frontal images.

- It doesn’t work under occlusion.

2. DNN Face Detector in OpenCV

This model has been included in OpenCV since version 3.3. It is based on the Single Shot MultiBox Detector (SSD) and uses the ResNet-10 architecture as the backbone. The model was trained using images from the web, but the source is not disclosed. OpenCV provides 2 models for this face detector.

- Floating point 16 version of the original Caffe implementation ( 5.4 MB )

- 8 bit quantized version using TensorFlow ( 2.7 MB )

We have included both models along with the code.

Code

Python

DNN = "TF"
if DNN == "CAFFE":
    modelFile = "res10_300x300_ssd_iter_140000_fp16.caffemodel"
    configFile = "deploy.prototxt"
    net = cv2.dnn.readNetFromCaffe(configFile, modelFile)
else:
    modelFile = "opencv_face_detector_uint8.pb"
    configFile = "opencv_face_detector.pbtxt"
    net = cv2.dnn.readNetFromTensorflow(modelFile, configFile)

C++

const std::string caffeConfigFile = "./deploy.prototxt";
const std::string caffeWeightFile = "./res10_300x300_ssd_iter_140000_fp16.caffemodel";
const std::string tensorflowConfigFile = "./opencv_face_detector.pbtxt";
const std::string tensorflowWeightFile = "./opencv_face_detector_uint8.pb";
#ifdef CAFFE
Net net = cv::dnn::readNetFromCaffe(caffeConfigFile, caffeWeightFile);
#else
Net net = cv::dnn::readNetFromTensorflow(tensorflowWeightFile, tensorflowConfigFile);
#endif

We load the required model using the above code. If we want to use the floating point model of Caffe, we use the caffemodel and prototxt files. Otherwise, we use the quantized TensorFlow model. Also, note the difference in argument order: readNetFromCaffe takes the config file first and then the weights, while readNetFromTensorflow takes the weights first and then the config file.

Python

frameHeight, frameWidth = frameOpencvDnn.shape[:2]
blob = cv2.dnn.blobFromImage(frameOpencvDnn, 1.0, (300, 300), [104, 117, 123], False, False)
net.setInput(blob)
detections = net.forward()
bboxes = []
for i in range(detections.shape[2]):
    confidence = detections[0, 0, i, 2]
    if confidence > conf_threshold:
        # Coordinates are normalized, so scale them to the frame size
        x1 = int(detections[0, 0, i, 3] * frameWidth)
        y1 = int(detections[0, 0, i, 4] * frameHeight)
        x2 = int(detections[0, 0, i, 5] * frameWidth)
        y2 = int(detections[0, 0, i, 6] * frameHeight)
        bboxes.append([x1, y1, x2, y2])

C++

#ifdef CAFFE
cv::Mat inputBlob = cv::dnn::blobFromImage(frameOpenCVDNN, inScaleFactor, cv::Size(inWidth, inHeight), meanVal, false, false);
#else
cv::Mat inputBlob = cv::dnn::blobFromImage(frameOpenCVDNN, inScaleFactor, cv::Size(inWidth, inHeight), meanVal, true, false);
#endif
net.setInput(inputBlob, "data");
cv::Mat detection = net.forward("detection_out");
// View the 4-D output as a 2-D matrix with one row per detection
cv::Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());
for(int i = 0; i < detectionMat.rows; i++)
{
    float confidence = detectionMat.at<float>(i, 2);
    if(confidence > confidenceThreshold)
    {
        int x1 = static_cast<int>(detectionMat.at<float>(i, 3) * frameWidth);
        int y1 = static_cast<int>(detectionMat.at<float>(i, 4) * frameHeight);
        int x2 = static_cast<int>(detectionMat.at<float>(i, 5) * frameWidth);
        int y2 = static_cast<int>(detectionMat.at<float>(i, 6) * frameHeight);
        cv::rectangle(frameOpenCVDNN, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(0, 255, 0),2, 4);
    }
}

In the above code, the image is converted to a blob and passed through the network using the forward() function. The output detections is a 4-D matrix, where

- The third dimension iterates over the detected faces (i is the index of the face).

- The fourth dimension contains the score and the bounding box for each face. For example, detections[0,0,0,2] gives the confidence score for the first face, and detections[0,0,0,3:7] gives its bounding box as (x1, y1, x2, y2).

The output coordinates of the bounding box are normalized to the range [0, 1]. Thus, the x coordinates should be multiplied by the width and the y coordinates by the height of the original image to get the correct bounding box on the image.
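The indexing and scaling described above can be sketched as a small standalone function. It accepts anything indexable like the network output (a NumPy array or nested lists), so it can be tried without loading a model. The name `parse_detections` and the default threshold of 0.5 are assumptions made for illustration.

```python
def parse_detections(detections, frame_width, frame_height, conf_threshold=0.5):
    """Return (confidence, x1, y1, x2, y2) tuples in pixel coordinates.

    detections[0][0][i] is one detection row. Entry 2 is the confidence and
    entries 3 to 6 are the normalized box corners; entries 0 and 1 are unused here.
    """
    boxes = []
    for row in detections[0][0]:
        confidence = float(row[2])
        if confidence <= conf_threshold:
            continue  # skip weak detections
        # Scale normalized coordinates to the original frame size
        x1 = int(row[3] * frame_width)
        y1 = int(row[4] * frame_height)
        x2 = int(row[5] * frame_width)
        y2 = int(row[6] * frame_height)
        boxes.append((confidence, x1, y1, x2, y2))
    return boxes


if __name__ == "__main__":
    # Hand-written stand-in for net.forward(): one strong and one weak detection
    fake_output = [[[
        [0, 1, 0.9, 0.25, 0.5, 0.75, 1.0],
        [0, 1, 0.2, 0.0, 0.0, 0.1, 0.1],
    ]]]
    print(parse_detections(fake_output, 400, 200))
```

Only the first row passes the threshold, and its box is scaled by the 400×200 frame size.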

Pros

The method has the following merits:

- Most accurate of the four methods

- Runs in real time on CPU

- Works for different face orientations – up, down, left, right, side-face, etc.

- Works even under substantial occlusion

- Detects faces across various scales ( detects big as well as tiny faces )

The DNN-based detector overcomes all the drawbacks of the Haar cascade based detector without compromising on any benefit provided by Haar. We could not see any major drawback for this method except that it is slower than the Dlib HoG-based Face Detector discussed next.

3. HoG Face Detector in Dlib

This is a widely used face detection model based on Histogram of Oriented Gradients (HoG) features and an SVM. The model is built out of 5 HoG filters: front looking, left looking, right looking, front looking but rotated left, and front looking but rotated right. The model comes embedded in the header file itself.

The dataset used for training consists of 2825 images obtained from the LFW dataset and manually annotated by Davis King, the author of Dlib. It can be downloaded from here.

Code

Python

import dlib

hogFaceDetector = dlib.get_frontal_face_detector()
faceRects = hogFaceDetector(frameDlibHogSmall, 0)  # 0 = do not upscale the image
for faceRect in faceRects:
    x1 = faceRect.left()
    y1 = faceRect.top()
    x2 = faceRect.right()
    y2 = faceRect.bottom()

C++

frontal_face_detector hogFaceDetector = get_frontal_face_detector();
// Convert OpenCV image format to Dlib's image format
cv_image<bgr_pixel> dlibIm(frameDlibHogSmall);
// Detect faces in the image
std::vector<dlib::rectangle> faceRects = hogFaceDetector(dlibIm);
for ( size_t i = 0; i < faceRects.size(); i++ )
{
    int x1 = faceRects[i].left();
    int y1 = faceRects[i].top();
    int x2 = faceRects[i].right();
    int y2 = faceRects[i].bottom();
    cv::rectangle(frameDlibHog, Point(x1, y1), Point(x2, y2), Scalar(0,255,0), (int)(frameHeight/150.0), 4);
}

In the above code, we first load the face detector. Then we pass the image through the detector. In the Python call, the second argument is the number of times we want to upscale the image. The more you upscale, the better the chances of detecting smaller faces. However, upscaling the image will have a substantial impact on the computation speed. The output is a list of faces, each given by the (x, y) coordinates of its diagonal corners.
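The snippets run the detector on a resized frame (frameDlibHogSmall), while the C++ version draws on the full-size frame (frameDlibHog). The step that maps coordinates between the two is not shown here. A minimal sketch, assuming the small frame is a plain resized copy of the full frame, could look like this. The name `scale_box` is hypothetical.

```python
def scale_box(box, small_size, full_size):
    """Map an (x1, y1, x2, y2) box from a resized frame to the full-size frame.

    Both sizes are (width, height) tuples. Assumes the small frame is a
    resized copy of the full frame with no cropping or padding.
    """
    x1, y1, x2, y2 = box
    scale_x = full_size[0] / small_size[0]
    scale_y = full_size[1] / small_size[1]
    return (int(x1 * scale_x), int(y1 * scale_y),
            int(x2 * scale_x), int(y2 * scale_y))


if __name__ == "__main__":
    # A box found on a 400x300 frame, mapped back to the 1200x900 original
    print(scale_box((50, 40, 150, 140), (400, 300), (1200, 900)))
```

Detecting on a smaller frame keeps the detector fast, at the cost of making faces smaller, which matters for the minimum face size discussed in the cons below.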

Pros

- Fastest method on CPU

- Works very well for frontal and slightly non-frontal faces

- Lightweight model as compared to the other three.

- Works under small occlusion

Basically, this method works under most cases except a few, as discussed below.

Cons

- The major drawback is that it does not detect small faces, as it is trained for a minimum face size of 80×80. Thus, you need to ensure that the face size is more than that in your application. You can, however, train your own face detector for smaller-sized faces.

- The bounding box often excludes part of the forehead and even part of the chin sometimes.

- It does not work very well under substantial occlusion.

- It does not work for side faces and extreme non-frontal faces, like looking down or up.

4. Convolutional Neural Network – CNN Face Detector in Dlib

This method uses a Maximum-Margin Object Detector ( MMOD ) with CNN-based features. The training process for this method is very simple, and you don’t need a large amount of data to train a custom object detector. For more information on training, visit the Dlib C++ Library blog.

The model can be downloaded from the dlib-models repository.

It uses a dataset of 7220 images manually labeled by its author, Davis King, drawn from various datasets like ImageNet, PASCAL VOC, VGG, WIDER, and FaceScrub. The dataset can be downloaded from here.

Code

Python

import dlib

dnnFaceDetector = dlib.cnn_face_detection_model_v1("./mmod_human_face_detector.dat")
faceRects = dnnFaceDetector(frameDlibHogSmall, 0)
for faceRect in faceRects:
    # Each result wraps its bounding box in a .rect attribute
    x1 = faceRect.rect.left()
    y1 = faceRect.rect.top()
    x2 = faceRect.rect.right()
    y2 = faceRect.rect.bottom()

C++

String mmodModelPath = "./mmod_human_face_detector.dat";
net_type mmodFaceDetector;
deserialize(mmodModelPath) >> mmodFaceDetector;
// Convert OpenCV image format to Dlib's image format
cv_image<bgr_pixel> dlibIm(frameDlibMmodSmall);
matrix<rgb_pixel> dlibMatrix;
assign_image(dlibMatrix, dlibIm);
// Detect faces in the image
std::vector<dlib::mmod_rect> faceRects = mmodFaceDetector(dlibMatrix);
for ( size_t i = 0; i < faceRects.size(); i++ )
{
    int x1 = faceRects[i].rect.left();
    int y1 = faceRects[i].rect.top();
    int x2 = faceRects[i].rect.right();
    int y2 = faceRects[i].rect.bottom();
    cv::rectangle(frameDlibMmod, Point(x1, y1), Point(x2, y2), Scalar(0,255,0), (int)(frameHeight/150.0), 4);
}

The code is similar to the HoG detector except that, in this case, we load the CNN face detection model. Also, the coordinates are present inside a rect object.

Pros

- Works for different face orientations

- Robust to occlusion

- Works very fast on GPU

- Very easy training process

Cons

- Very slow on CPU

- It does not detect small faces as it is trained for a minimum face size of 80×80. Thus, you need to ensure that the face size is more than that in your application. You can, however, train your own face detector for smaller-sized faces.

- The bounding box is even smaller than that of the HoG detector.

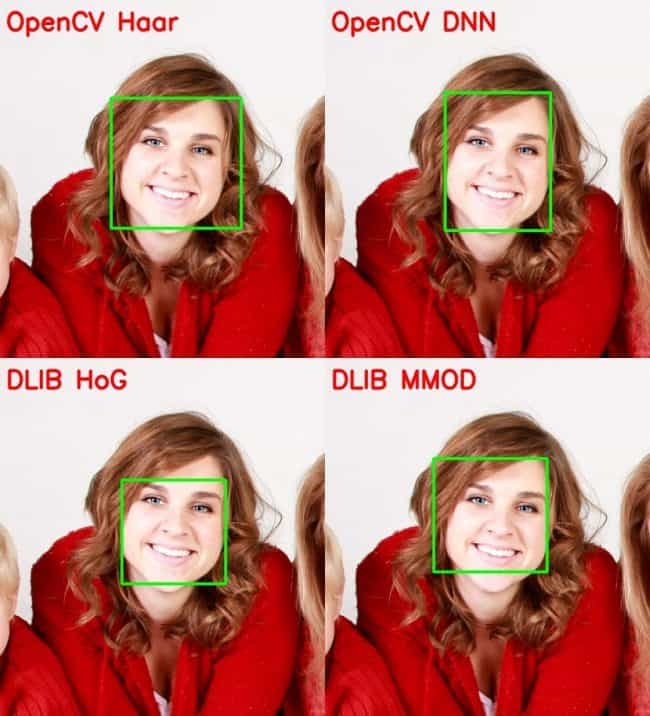

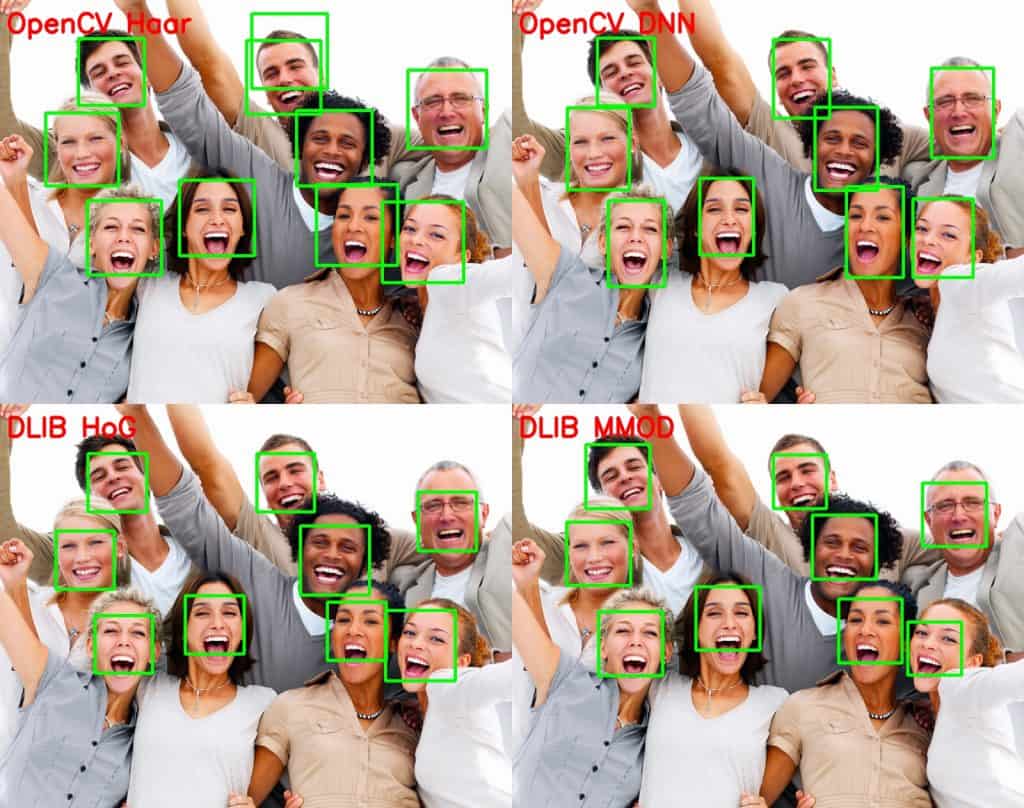

5. Accuracy Comparison

I tried to evaluate the 4 models on the FDDB dataset with the script used for evaluating the OpenCV-DNN model. However, the results were surprising: Dlib had worse numbers than Haar, although visually the Dlib outputs look much better. Given below are the Precision scores for the 4 methods.

Where,

AP_50 = Precision when the overlap between Ground Truth and predicted bounding box is at least 50% ( IoU = 50% )

AP_75 = Precision when the overlap between Ground Truth and predicted bounding box is at least 75% ( IoU = 75% )

AP_Small = Average Precision for small size faces ( Average of IoU = 50% to 95% )

AP_Medium = Average Precision for medium size faces ( Average of IoU = 50% to 95% )

AP_Large = Average Precision for large size faces ( Average of IoU = 50% to 95% )

mAP = Average precision across different IoU ( Average of IoU = 50% to 95% )
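All of these metrics rest on IoU (Intersection over Union), the overlap between a predicted box and a ground-truth box. The sketch below is a standard IoU computation for boxes in (x1, y1, x2, y2) form. It is not the FDDB evaluation script, and the example boxes are made up to show how a tight box can pass the 50% threshold and still fail the 75% one.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes do not overlap)
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    ground_truth = (0, 0, 100, 100)
    tight_box = (10, 20, 90, 90)  # made-up detection that clips forehead and chin
    overlap = iou(ground_truth, tight_box)
    print(overlap)          # 0.56
    print(overlap >= 0.5)   # True: counted as a match for AP_50
    print(overlap >= 0.75)  # False: counted as a miss for AP_75
```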

On closer inspection, I found that this evaluation is not fair for Dlib.

5.1. Evaluating accuracy the wrong way!

According to my analysis, the reasons for lower numbers for dlib are as follows :

Thus, the only relevant metric for a fair comparison between OpenCV and Dlib is AP_50 ( or even less than 50 since we mostly compare the number of detected faces ). However, this point should always be considered while using the Dlib Face detectors.

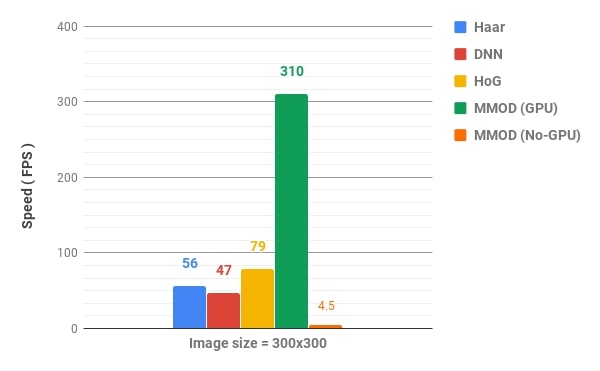

6. Speed Comparison

We used a 300×300 image for the comparison of the methods. The MMOD detector can be run on a GPU, but OpenCV did not support NVIDIA GPUs when these tests were run. So, we evaluate the OpenCV and HoG methods on CPU only and report results for MMOD on both GPU and CPU.

Hardware used

Processor : Intel Core i7 6850K – 6 Core

RAM : 32 GB

GPU : NVIDIA GTX 1080 Ti with 11 GB RAM

OS : Linux 16.04 LTS

Programming Language : Python

We run each method 10000 times on the given image, repeat this for 10 iterations, and average the time taken. Given below are the results.

As you can see, for an image of this size, all the methods perform in real time except MMOD. The MMOD detector is very fast on a GPU but very slow on a CPU.

It should also be noted that these numbers can be different on different systems.
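Since the numbers vary between systems, it can be worth re-measuring on your own hardware. The sketch below follows our reading of the procedure described above: call the detector many times per batch, repeat for several batches, and average. The name `average_runtime` and its parameters are hypothetical, and `fn` stands for any zero-argument callable that runs one detection.

```python
import time


def average_runtime(fn, runs_per_batch=10000, batches=10):
    """Return the average seconds per call of fn(), measured over several batches."""
    batch_means = []
    for _ in range(batches):
        start = time.perf_counter()
        for _ in range(runs_per_batch):
            fn()
        elapsed = time.perf_counter() - start
        batch_means.append(elapsed / runs_per_batch)
    return sum(batch_means) / len(batch_means)


if __name__ == "__main__":
    # Stand-in workload; replace with e.g. lambda: hogFaceDetector(frame, 0)
    seconds = average_runtime(lambda: sum(range(100)), runs_per_batch=1000, batches=3)
    print("average time per call: %.6f s" % seconds)
```

Frames per second is then roughly 1 divided by the returned value.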

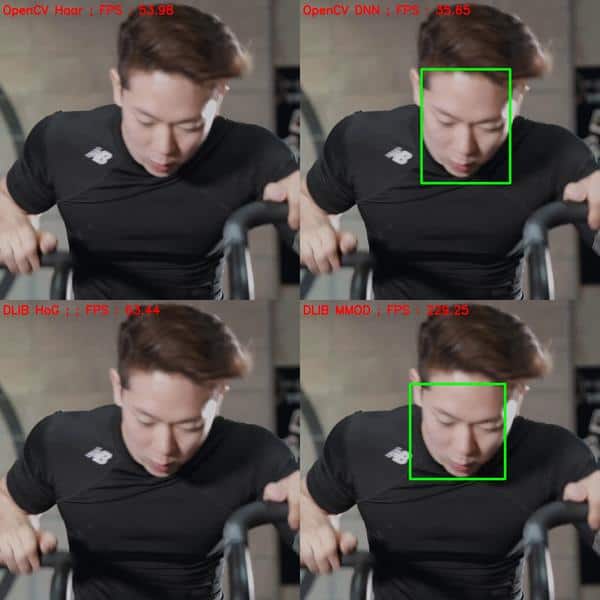

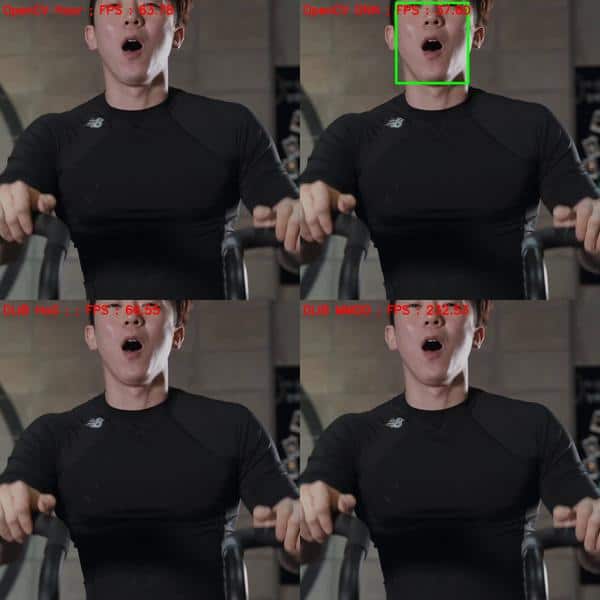

7. Comparison under various conditions

Apart from accuracy and speed, there are some other factors that help us decide which one to use. In this section, we will compare the methods based on various other factors which are also important.

7.1. Detection Across Scale

We will see an example where, in the same video, the person moves back and forth, making the face smaller and bigger. We notice that the OpenCV DNN (Deep Neural Network) detector detects all the faces, while Dlib detects only the larger ones. We also show the size of the detected face along with the bounding box.

It can be seen that the Dlib-based methods detect faces down to a size of about 70×70, below which they fail. As we discussed earlier, I think this is the major drawback of the Dlib-based methods. Since it is impossible to know the size of the face beforehand in most cases, we can get rid of this problem by upscaling the image, but then the speed advantage of Dlib over OpenCV-DNN goes away.
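To get a feel for why upscaling erases the speed advantage, here is a rough back-of-the-envelope sketch. It assumes that each upscaling step doubles the image width and height (worth verifying against the Dlib documentation) and uses the 80×80 training size quoted earlier as the minimum detectable face. Both function names are hypothetical.

```python
import math


def upsamples_needed(face_size, min_detectable=80):
    """Number of 2x upscaling steps needed before a face reaches min_detectable pixels."""
    if face_size >= min_detectable:
        return 0
    return math.ceil(math.log2(min_detectable / face_size))


def pixel_cost_factor(upsamples):
    """Growth in pixel count: each 2x upscale quadruples the number of pixels."""
    return 4 ** upsamples


if __name__ == "__main__":
    for size in (80, 40, 30, 20):
        steps = upsamples_needed(size)
        print(size, steps, pixel_cost_factor(steps))
```

Under these assumptions, a 20×20 face needs two upscaling steps, which means the detector has to scan 16 times as many pixels.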

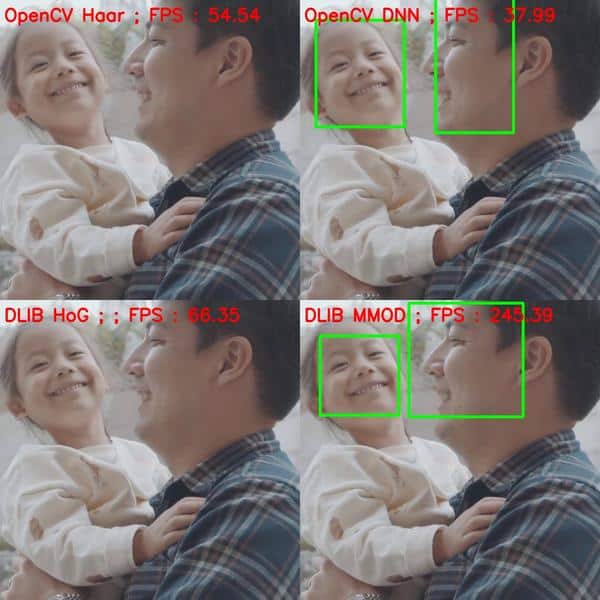

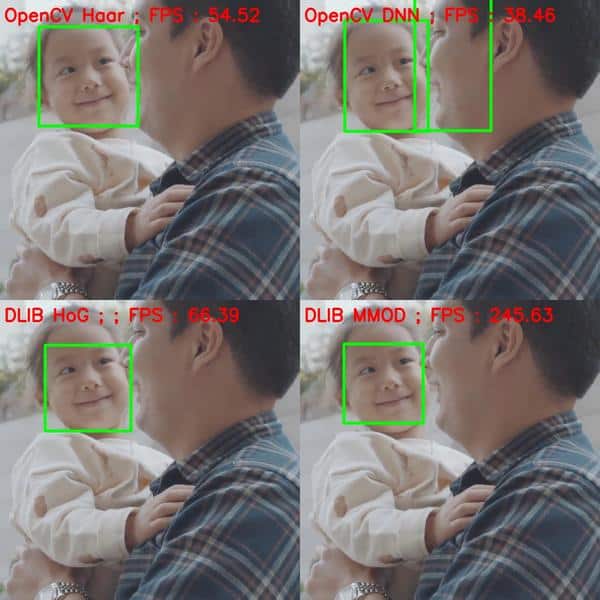

7.2. Non-frontal Face

Non-frontal faces can be looking towards the right, left, up, or down. Again, to be fair to Dlib, we ensure the face size is more than 80×80. Given below are some examples.

As expected, the Haar-based detector fails totally. The HoG-based detector does detect left- or right-looking faces ( since it was trained on them ) but is not as accurate as the DNN-based detectors of OpenCV and Dlib.

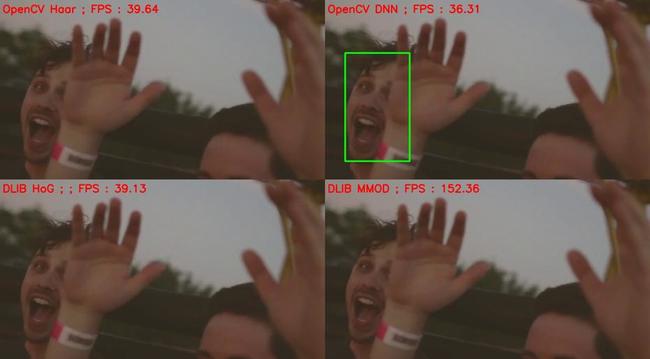

7.3. Occlusion

Let us see how well the methods perform under occlusion.

Again, the DNN methods outperform the other two, with OpenCV-DNN slightly better than Dlib-MMOD. This is mainly because the CNN features are much more robust than HoG or Haar features.

8. Conclusion

We discussed the pros and cons of each method in the respective sections. I recommend trying both OpenCV-DNN and HoG methods for your application and deciding accordingly. We share some tips to get started.

General Case

In most applications, we won’t know the face size in the image beforehand. Thus, it is better to use the OpenCV-DNN method, as it is pretty fast and very accurate, even for small faces. It also detects faces at various angles. We recommend using OpenCV-DNN in most cases.

For medium to large image sizes

Dlib HoG is the fastest method on the CPU, but it does not detect small faces ( < 70×70 ). So, if you know that your application will not be dealing with very small faces ( for example, a selfie app ), then the HoG-based Face Detector is a better option. Also, if you can use a GPU, then the MMOD face detector is the best option, as it is very fast on a GPU and provides detection at various angles.

High-resolution images

Feeding high-resolution images directly to these algorithms is impractical because of the computation time, so the image is usually scaled down first. The HoG / MMOD detectors might then fail because the faces become too small. On the other hand, the OpenCV-DNN method can be used for these images since it detects small faces.
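The rules of thumb above can be condensed into a small helper. This is only an illustrative summary of the recommendations in this section, not a substitute for testing on your own data. The function name `suggest_detector`, its parameters, and the 70-pixel cutoff taken from the text are assumptions.

```python
def suggest_detector(min_face_size=None, has_gpu=False, dlib_min_face=70):
    """Suggest a detector following the rules of thumb in this section.

    min_face_size is the smallest expected face side in pixels in the image
    actually passed to the detector (after any downscaling), or None if unknown.
    """
    # Unknown or small faces: OpenCV-DNN handles them best
    if min_face_size is None or min_face_size < dlib_min_face:
        return "OpenCV-DNN"
    # Faces are large enough for Dlib; pick by available hardware
    if has_gpu:
        return "Dlib MMOD"
    return "Dlib HoG"


if __name__ == "__main__":
    print(suggest_detector())                                 # OpenCV-DNN
    print(suggest_detector(min_face_size=150))                # Dlib HoG
    print(suggest_detector(min_face_size=150, has_gpu=True))  # Dlib MMOD
    print(suggest_detector(min_face_size=30, has_gpu=True))   # OpenCV-DNN
```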

Have any other suggestions? Please mention them in the comments, and we’ll update the post accordingly!

References

[FDDB Comparison code]

[Dlib Blog]

[dlib mmod python example]

[dlib mmod cpp example]

[OpenCV DNN Face detector]

[Haar Based Face Detector]
