1. Deep Learning Frameworks

Deep Learning is a branch of AI that uses Neural Networks for Machine Learning. In recent years, it has shown dramatic improvements over traditional machine learning methods, with applications in Computer Vision, Natural Language Processing, and Robotics, among many others. A very light introduction to Convolutional Neural Networks ( a type of Neural Network ) is covered in this article.

Deep Learning has been a household name among AI engineers since 2012, when Alex Krizhevsky and his team won the ImageNet challenge. ImageNet is a computer vision competition in which the computer is required to correctly classify the image of an object into one of 1000 categories. The objects include different types of animals, plants, instruments, furniture, and vehicles, to name a few.

This attracted a lot of attention from the computer vision community, and almost everyone started working on Neural Networks. At that time, however, there were not many tools available to get started in this new domain. The research community has since put a lot of effort into creating libraries that make it easy to work in this field. Some popular deep learning frameworks at present are TensorFlow, Theano, Caffe, PyTorch, CNTK, MXNet, Torch, deeplearning4j, and Caffe2, among many others.

Keras is a high-level API, written in Python and capable of running on top of TensorFlow, Theano, or CNTK. The deep learning libraries listed above are general-purpose and expose a large amount of functionality, which can be overwhelming for a beginner with limited knowledge of deep learning. Keras provides a simple and modular API to create and train Neural Networks, hiding most of the complicated details under the hood. This makes it easy to get started on your Deep Learning journey.

Once you get familiar with the main concepts and want to dig deeper and take control of the process, you may choose to work with any of the above frameworks.

2. Keras installation and configuration

As mentioned above, Keras is a high-level API that uses deep learning libraries like Theano or Tensorflow as the backend. These libraries, in turn, talk to the hardware via lower level libraries. For example, if you run the program on a CPU, Tensorflow or Theano use BLAS libraries. On the other hand, when you run on a GPU, they use CUDA and cuDNN libraries.

If you are setting up a new system, you might want to look at this article for installing the most common deep learning frameworks. We will mention only the Keras specific part here.

It is advisable to install everything in a virtual environment. If virtual environment support is not installed on the system, see step 5 of the above article.

We will install Theano and Tensorflow as backend libraries for Keras, along with some more libraries which are useful for working with data ( h5py ) and visualization ( pydot, graphviz and matplotlib ).

Create virtual environment

Create the virtual environment for Python 3.

mkvirtualenv virtual-py3 -p python3

# Activate the virtual environment

workon virtual-py3

Install libraries

pip install Theano

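# If using only CPU

pip install tensorflow

# If using GPU (older TensorFlow releases only; recent releases include GPU support in the tensorflow package above)

pip install tensorflow-gpu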

pip install h5py pydot matplotlib

Also install graphviz

# For Ubuntu

sudo apt-get install graphviz

# For macOS

brew install graphviz
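A quick way to confirm the installation is to check which of the packages above the active environment can see. The snippet below is a minimal sketch that uses only the Python standard library. The helper name `check_packages` is hypothetical, and the check only tells you whether each module can be located, not whether it works with your hardware.

```python
import importlib.util

# Import names of the packages installed in the steps above
PACKAGES = ["theano", "tensorflow", "h5py", "pydot", "matplotlib"]

def check_packages(names):
    """Return {name: True/False} depending on whether each module can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}

# Report the status of each package in the current environment
for name, found in check_packages(PACKAGES).items():
    print(f"{name:12s} {'found' if found else 'MISSING'}")
```

Run it inside the activated virtual environment; a package reported as MISSING was most likely installed into a different environment.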

Configure Keras

By default, Keras is configured to use TensorFlow as the backend since it is the most popular choice. However, if you want to change it to Theano, open the file ~/.keras/keras.json, which looks like this:

{

"epsilon": 1e-07,

"floatx": "float32",

"image_data_format": "channels_last",

"backend": "tensorflow"

}

and change it to

{

"epsilon": 1e-07,

"floatx": "float32",

"image_data_format": "channels_first",

"backend": "theano"

}
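If you prefer to make this change from a script rather than by hand, the edit can be sketched with the standard json module. This is only an illustration: the helper name `set_keras_backend` is hypothetical, and the example call is commented out so that the sketch does not touch your real configuration file.

```python
import json
from pathlib import Path

def set_keras_backend(config_path, backend, image_data_format):
    """Update the backend fields of a keras.json file and return the new config."""
    path = Path(config_path)
    # Start from the existing settings so that epsilon and floatx are preserved
    config = json.loads(path.read_text()) if path.exists() else {}
    config["backend"] = backend
    config["image_data_format"] = image_data_format
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=4))
    return config

# Example call (commented out on purpose):
# set_keras_backend(Path.home() / ".keras" / "keras.json", "theano", "channels_first")
```

Only the two fields that differ between the listings above are changed; the remaining entries are written back unchanged.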

3. Keras Workflow

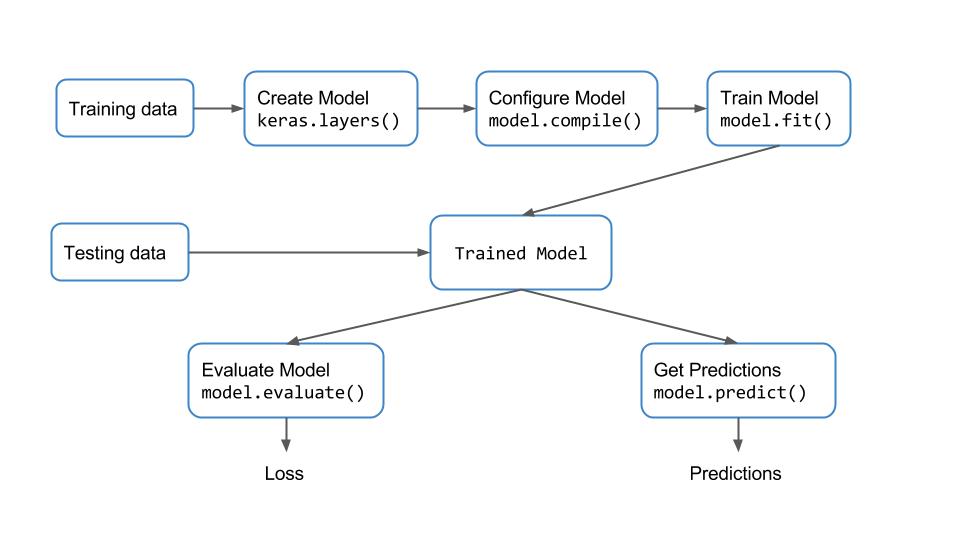

Keras provides a very simple workflow for training and evaluating the models. It is described with the following diagram.

We create the model and train it using the training data. Once the model is trained, we use it to perform inference on the test data. Let us look at the function of each of the blocks.

3.1. Keras Layers

Layers can be thought of as the building blocks of a Neural Network. They process the input data and produce different outputs, depending on the type of layer, which are then used by the layers which are connected to them. We will cover the details of every layer in future posts.

Keras provides a number of core layers which include

- Dense layers, also called fully connected layers, since each node in the input is connected to every node in the output,

- Activation layers, which apply activation functions like ReLU, tanh, and sigmoid, among others,

- Dropout layer – used for regularization during training,

- Flatten, Reshape, etc.

Apart from these core layers, some important layers are

- Convolution layers – used for performing convolution,

- Pooling layers – used for down sampling,

- Recurrent layers,

- Locally-connected, normalization, etc.

The following code snippet imports some of these layers.

from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D
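To make the idea of a layer more concrete, here is a minimal NumPy sketch of what a Dense layer followed by a ReLU activation computes. It is not how Keras implements these layers internally; the function names and the small example arrays are made up for illustration.

```python
import numpy as np

def dense(inputs, weights, bias):
    """Fully connected layer: every input feature contributes to every output unit."""
    return inputs @ weights + bias

def relu(values):
    """ReLU activation: negative values are clipped to zero."""
    return np.maximum(values, 0.0)

# Hypothetical example: a batch of 2 samples with 3 features, mapped to 2 units
inputs = np.array([[1.0, 2.0, 3.0],
                   [0.0, -1.0, 1.0]])
weights = np.array([[1.0, -1.0],
                    [0.0, 1.0],
                    [1.0, 0.0]])
bias = np.array([0.5, -2.0])

outputs = relu(dense(inputs, weights, bias))
print(outputs)  # [[4.5 0. ] [1.5 0. ]]
```

The weight matrix has one row per input feature and one column per output unit, which is why each input is connected to every output.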

3.2. Keras Models

Keras provides two ways to define a model:

- Sequential, used for stacking up layers. This is the most commonly used option.

- Functional API, used for designing complex model architectures like models with multiple outputs, shared layers, etc.
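As a rough illustration of the second option, the sketch below builds a small model with two outputs using the Functional API. The layer sizes and the output names (price, score) are hypothetical and chosen only for the example; it assumes a TensorFlow 2 installation.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical sizes: 13 input features, 8 hidden units, two scalar outputs
inputs = keras.Input(shape=(13,))
hidden = layers.Dense(8, activation="relu")(inputs)   # feeds both outputs
price = layers.Dense(1, name="price")(hidden)         # first output
score = layers.Dense(1, name="score")(hidden)         # second output

# A model is defined by its input and output tensors
model = keras.Model(inputs=inputs, outputs=[price, score])
model.summary()
```

In this style, each layer is called on the output of another layer, so the graph does not have to be a single linear stack as it is in a Sequential model.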

from tensorflow.keras.models import Sequential

For creating a Sequential model, we can either pass the list of layers as an argument to the constructor or add the layers sequentially using the model.add() function.

For example, the following two code snippets are equivalent. Each creates a model with a single dense layer with 10 outputs, where nFeatures is the number of input features.

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

model = Sequential([Dense(10, input_shape=(nFeatures,)),

Activation('linear')])

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

model = Sequential()

model.add(Dense(10, input_shape=(nFeatures,)))

model.add(Activation('linear'))

An important thing to note in the model definition is that we need to specify the input shape for the first layer. This is done in the above snippet using the input_shape parameter passed along with the first Dense layer. The input shapes of the remaining layers are inferred automatically by Keras from the output of the preceding layer.
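The reason only the first layer needs an explicit input shape can be sketched in plain Python: in a stack of Dense layers, the input size of each layer is simply the output size of the one before it. The helper below is hypothetical and only mimics this bookkeeping, including the parameter count of each layer (weights plus biases).

```python
def dense_stack_shapes(n_inputs, units_per_layer):
    """Return (inputs, outputs, parameters) for each Dense layer in a stack."""
    shapes = []
    current_inputs = n_inputs
    for units in units_per_layer:
        n_params = current_inputs * units + units   # weights + biases
        shapes.append((current_inputs, units, n_params))
        current_inputs = units                      # becomes the next layer's input size
    return shapes

# Hypothetical stack: 13 features -> 10 units -> 1 unit
for n_in, n_out, n_params in dense_stack_shapes(13, [10, 1]):
    print(f"inputs={n_in:3d}  outputs={n_out:3d}  params={n_params}")
```

For a single Dense layer with 13 inputs and 1 output, the same formula gives 13 weights and 1 bias, that is, 14 parameters.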

3.3. Configuring the training process

Once the model is ready, we need to configure the learning process. This means:

- Specifying an optimizer, which determines how the network weights are updated.

- Specifying the type of cost function or loss function.

- Specifying the metrics you want to evaluate during training and testing.

- Creating the model graph using the backend.

- Any other advanced configuration.

This is done in Keras using the model.compile() function. The code snippet shows the usage.

model.compile(optimizer='rmsprop', loss='mse', metrics=['mse', 'mae'])

The mandatory parameters to be specified are the optimizer and the loss function.

Optimizers

Keras provides a lot of optimizers to choose from, which include

- Stochastic Gradient Descent ( SGD ),

- Adam,

- RMSprop,

- AdaGrad,

- AdaDelta, etc.

RMSprop is a reasonable default optimizer for many problems, and it is the one used in the example later in this post.
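To give a feel for what an optimizer does, the sketch below compares a plain gradient descent step with a textbook RMSprop step on a toy one-dimensional loss. It is an illustration under simplifying assumptions: the toy loss, the learning rates, and the function names are made up, and the Keras implementation may differ in its details.

```python
import math

def sgd_step(weight, gradient, learning_rate):
    """Plain gradient descent: step against the gradient."""
    return weight - learning_rate * gradient

def rmsprop_step(weight, gradient, cache, learning_rate, rho=0.9, epsilon=1e-7):
    """RMSprop: scale the step by a running average of squared gradients."""
    cache = rho * cache + (1.0 - rho) * gradient ** 2
    weight = weight - learning_rate * gradient / (math.sqrt(cache) + epsilon)
    return weight, cache

def gradient(weight):
    """Gradient of the toy loss (weight - 3)^2, which has its minimum at 3."""
    return 2.0 * (weight - 3.0)

w_sgd = 0.0
w_rms, cache = 0.0, 0.0
for _ in range(2000):
    w_sgd = sgd_step(w_sgd, gradient(w_sgd), learning_rate=0.1)
    w_rms, cache = rmsprop_step(w_rms, gradient(w_rms), cache, learning_rate=0.01)

print(w_sgd, w_rms)  # both values end up close to 3
```

The difference is that RMSprop normalizes each step by the recent gradient magnitude, so the step size depends less on the raw scale of the gradient.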

Loss functions

In a supervised learning problem, we have to measure the error between the actual values and the predicted values. Different functions can be used to quantify this error; such a function is often called the loss function, cost function, or objective function. Which one to use depends on the problem. In general, we use

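- binary cross-entropy (binary_crossentropy in Keras) for a binary classification problem,

- categorical cross-entropy (categorical_crossentropy in Keras) for a multi-class classification problem,

- mean squared error (mean_squared_error or mse in Keras) for a regression problem, and so on.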
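The three losses listed above can be written out in a few lines of NumPy. This is a minimal sketch of the standard formulas, not the Keras implementation; the function names and the clipping constant eps are choices made for this example.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """y_true holds 0/1 labels, p_pred the predicted probability of class 1."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

def categorical_cross_entropy(y_true_one_hot, p_pred, eps=1e-7):
    """y_true_one_hot holds one-hot labels, p_pred one probability row per sample."""
    y = np.asarray(y_true_one_hot, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0)        # avoid log(0)
    return float(-np.mean(np.sum(y * np.log(p), axis=1)))

print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))                  # 2.0
print(binary_cross_entropy([1, 0], [0.9, 0.1]))                    # about 0.105
print(categorical_cross_entropy([[0, 1, 0]], [[0.1, 0.8, 0.1]]))   # about 0.223
```

In all three cases a lower value means that the predictions are closer to the targets.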

3.4. Training

Once the model is configured, we can start the training process. This can be done using the model.fit() function in Keras. The usage is described below.

model.fit(trainFeatures, trainLabels, batch_size=4, epochs = 100)

We just need to specify the training data, batch size, and number of epochs. Keras automatically figures out how to pass the data iteratively to the optimizer for the number of epochs specified. The rest of the information (optimizer, loss, and metrics) was already given to the model in the previous step through model.compile().
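What happens inside a training call can be sketched as two nested loops: one over epochs and one over mini-batches. The NumPy example below is a simplified illustration, not the Keras implementation. It uses plain gradient descent instead of RMSprop, fits a linear model to synthetic data, and the function name `fit_linear` and its default values are hypothetical.

```python
import numpy as np

def fit_linear(features, targets, batch_size=4, epochs=100, learning_rate=0.05, seed=0):
    """Mini-batch gradient descent on the mean squared error of a linear model."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = features.shape
    weights = np.zeros(n_features)
    bias = 0.0
    for _ in range(epochs):                      # one epoch = one pass over the data
        order = rng.permutation(n_samples)       # shuffle the samples every epoch
        for start in range(0, n_samples, batch_size):
            idx = order[start:start + batch_size]
            x_batch, y_batch = features[idx], targets[idx]
            error = x_batch @ weights + bias - y_batch
            # Gradients of the batch MSE with respect to weights and bias
            grad_w = 2.0 * x_batch.T @ error / len(idx)
            grad_b = 2.0 * error.mean()
            weights -= learning_rate * grad_w
            bias -= learning_rate * grad_b
    return weights, bias

# Synthetic data generated from y = 2x + 1
x = np.linspace(-1.0, 1.0, 40).reshape(-1, 1)
y = 2.0 * x[:, 0] + 1.0
weights, bias = fit_linear(x, y)
print(weights, bias)  # close to [2.] and 1.0
```

With 40 samples and a batch size of 4, each epoch performs 10 weight updates; a smaller batch size means more updates per epoch.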

3.5. Evaluating the model

Once the model is trained, we need to check its performance on unseen test data. This can be done in two ways in Keras.

- model.evaluate() – It computes the loss and the metrics specified in the model.compile() step. It takes both the test data and the labels as input and gives a quantitative measure of performance. It can also be used to perform cross-validation and further fine-tune the parameters to get the best model.

- model.predict() – It computes the output for the given test data. It is useful for checking the outputs qualitatively.

Now, let’s see how to use Keras models and layers to create a simple Neural Network.

4. Linear Regression Example

We will learn how to create a simple network with a single layer to perform linear regression. We will use the Boston Housing dataset available in Keras as an example. Each sample contains 13 attributes of houses at different locations around the Boston suburbs in the late 1970s. The targets are the median values of the houses at a location (in k$). Using the 13 features, we train a model that predicts the house prices for the test data.

4.1. Training

We use the Sequential model to create the network graph. Then we add a Dense layer with the number of inputs equal to the number of features in the data and a single output. We then follow the workflow explained in the previous section: we compile the model and train it using the fit() function. Finally, we use the model.summary() function to check the configuration of the model. All Keras datasets come with a load_data() function, which returns tuples of training and testing data as shown in the code.

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

from tensorflow.keras.datasets import boston_housing

(X_train, Y_train), (X_test, Y_test) = boston_housing.load_data()

nFeatures = X_train.shape[1]

model = Sequential()

model.add(Dense(1, input_shape=(nFeatures,), kernel_initializer='uniform'))

model.add(Activation('linear'))

model.compile(optimizer='rmsprop', loss='mse', metrics=['mse', 'mae'])

model.fit(X_train, Y_train, batch_size=4, epochs=1000)

model.summary()

The output of model.summary() is given below. It shows 14 parameters – 13 parameters for the weights and 1 for the bias.

_______________________________________________________

Layer (type) Output Shape Param #

=======================================================

dense_1 (Dense) (None, 1) 14

=======================================================

Total params: 14

Trainable params: 14

Non-trainable params: 0

4.2. Inference

After the model has been trained, we want to do inference on the test data. We can find the loss on the test data using the model.evaluate() function. We get the predictions on test data using the model.predict() function. Here we compare the ground truth values with the predictions from our model for the first 5 test samples.

model.evaluate(X_test, Y_test, verbose=True)

Y_pred = model.predict(X_test)

print(Y_test[:5])

print(Y_pred[:5,0])

The output is:

[ 7.2 18.8 19. 27. 22.2]

[ 7.2 18.26 21.38 29.28 23.72]

It can be seen that the predictions follow the ground truth values, but there are some errors in the predictions.
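The size of these errors can be quantified with the same metrics that were passed to model.compile(). The sketch below computes the mean absolute error and the mean squared error with NumPy for the five samples printed above only; the values over the full test set will differ.

```python
import numpy as np

# Ground truth and predictions for the first 5 test samples (in k$), copied from the output above
y_true = np.array([7.2, 18.8, 19.0, 27.0, 22.2])
y_pred = np.array([7.2, 18.26, 21.38, 29.28, 23.72])

errors = y_pred - y_true
mae = np.mean(np.abs(errors))   # mean absolute error
mse = np.mean(errors ** 2)      # mean squared error

print(f"MAE: {mae:.3f}, MSE: {mse:.3f}")  # MAE: 1.344, MSE: 2.693
```

On these five samples the predictions are off by about 1.3 k$ on average.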

References

https://www.tensorflow.org/

https://keras.io
