Correct body posture is key to a person's overall well-being. However, maintaining it can be difficult because we often forget about it. This blog post walks you through the steps required to build a solution for that. Recently, we had a lot of fun playing with body posture detection using MediaPipe Pose. It works like a charm!

- Body Posture Detection using MediaPipe Pose

- Application Objective

- Body Posture Detection and Analysis Application Workflow

- Prerequisites

- Body Posture Detection Code Explanation

Body Posture Detection using MediaPipe Pose

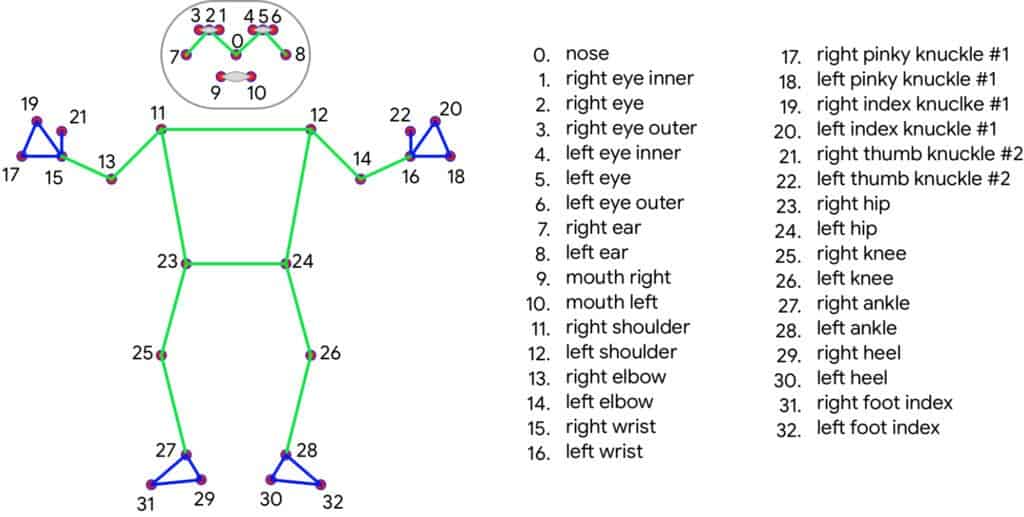

MediaPipe Pose is a high-fidelity body pose tracking solution that infers 33 3D landmarks and a background segmentation mask on the whole body from RGB frames (note that the input must be an RGB image frame). It uses the BlazePose[1] topology, a superset of the COCO[2], BlazeFace[3], and BlazePalm[4] topologies.

Application Objective – MediaPipe Body Tracking

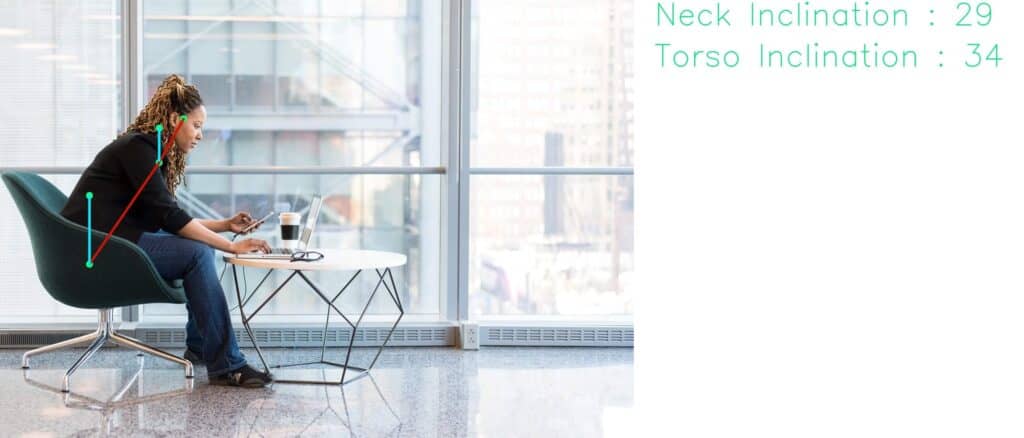

Our goal is to detect a person from a perfect side view and measure the inclination of the neck and torso with respect to a reference axis. By monitoring these inclination angles, we can flag the posture as bad whenever the person bends beyond a certain threshold angle.

Other features include measuring the time of a particular posture and the camera alignment. We must ensure that the camera looks at the proper side view. Hence we require the alignment feature.

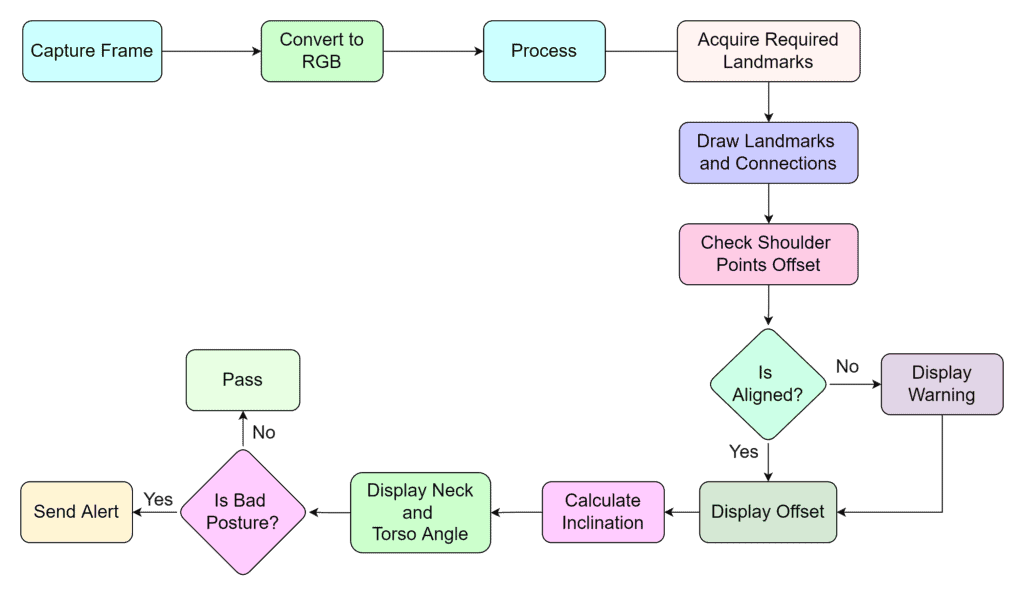

Body Posture Detection and Analysis Application Workflow

Prerequisites

OpenCV and MediaPipe are the primary packages that we will need. Use the requirements.txt file provided within the code folder to install the dependencies.

pip install -r requirements.txt

You will need a working knowledge of OpenCV Python to understand the code. New to OpenCV? Here is a list of specially crafted OpenCV tutorials for beginners.

Ensure that you go through the explanation once before running the script to avoid errors.

Body Posture Detection Code Explanation

1. Import Libraries

import cv2

import time

import math as m

import mediapipe as mp

2. Function to calculate offset distance

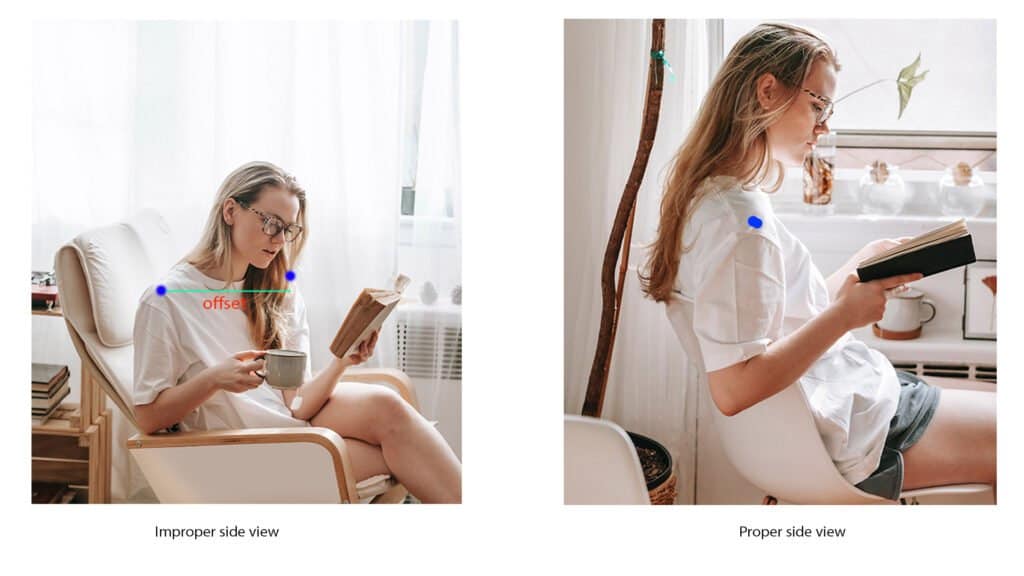

The setup requires the person to be in the proper side view. The function findDistance helps us determine the offset distance between two points. The points can be the hips, the eyes, or the shoulders.

These points have been selected as they are always more or less symmetric about the central axis of the human body. With this, we will incorporate the camera alignment feature in the script.


def findDistance(x1, y1, x2, y2):

    dist = m.sqrt((x2-x1)**2+(y2-y1)**2)

    return dist
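As a quick sanity check of the offset function, the sketch below re-declares findDistance so that it runs on its own and compares it with math.hypot from the standard library, which computes the same Euclidean distance. The sample pixel coordinates are made up for illustration.

```python
import math as m


def findDistance(x1, y1, x2, y2):
    # Euclidean distance between (x1, y1) and (x2, y2), as in the post.
    return m.sqrt((x2 - x1) ** 2 + (y2 - y1) ** 2)


# Hypothetical pixel coordinates of the left and right shoulder.
l_shldr = (310, 240)
r_shldr = (313, 244)

offset = findDistance(*l_shldr, *r_shldr)
# math.hypot gives the same result and can replace the manual formula.
same = m.isclose(offset, m.hypot(r_shldr[0] - l_shldr[0], r_shldr[1] - l_shldr[1]))
print(offset, same)
```

A small offset like this one means the two shoulders nearly overlap in the image, which is what a proper side view should produce.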

3. Function to Calculate the Body Posture Inclination

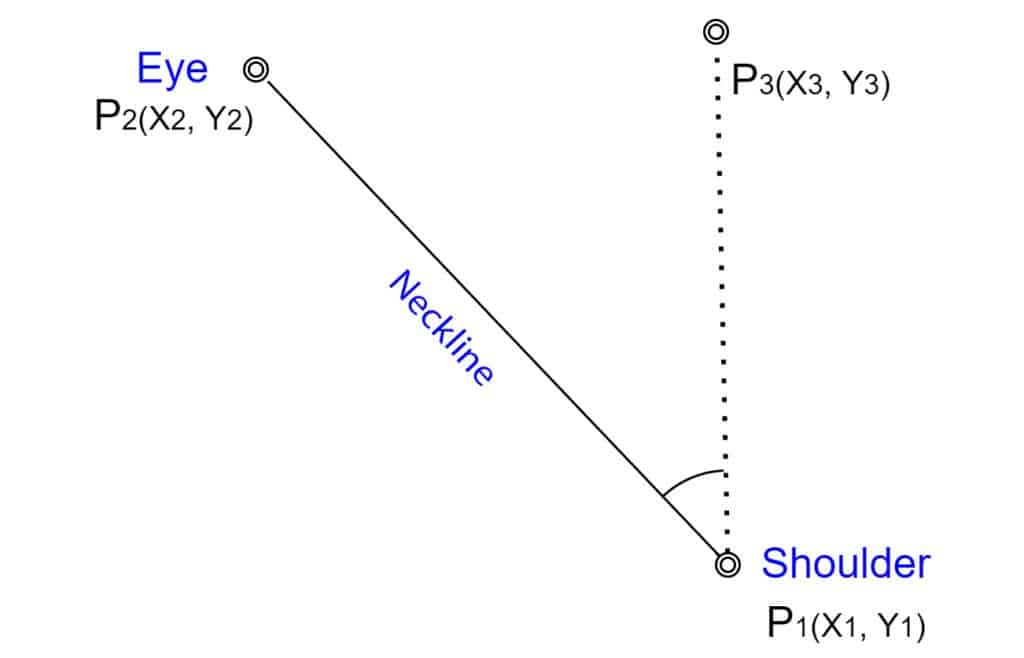

The angle is the primary determining factor for the posture. We use the angles that the neckline and the torso line make with the y-axis. The neckline connects the shoulder and the eye (the script below uses the ear landmark for this point). Here we take the shoulder as the pivot point.

Similarly, the torso line connects the hip and the shoulder, where the hip is considered a pivotal point.

Fig. Neckline inclination measurement

Taking the neckline as an example, we have the following points.

- P1(x1, y1): shoulder

- P2(x2, y2): eye

- P3(x3, y3): any point on the vertical axis passing through P1

Clearly, the x-coordinate of P3 is the same as that of P1. Since any value of y3 is valid, let's take y3 = 0 for simplicity.

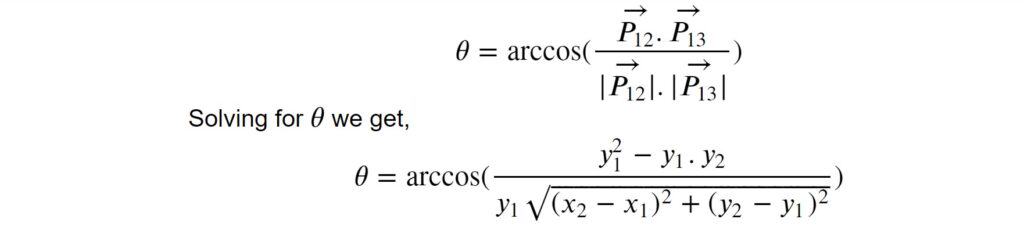

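We take the vector approach to find the inner angle formed by the three points. The angle between the two vectors P12 and P13 is the arccosine of their dot product divided by the product of their lengths, which is what the following function computes.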
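Written out with the points defined above and y3 = 0, the relation evaluated by the function below is the following. It assumes y1 > 0, so that the length of P13 is simply y1.

```latex
\vec{P_{12}} = (x_2 - x_1,\; y_2 - y_1), \qquad
\vec{P_{13}} = (x_3 - x_1,\; y_3 - y_1) = (0,\; -y_1)

\theta = \arccos\left(
  \frac{\vec{P_{12}} \cdot \vec{P_{13}}}{|\vec{P_{12}}|\,|\vec{P_{13}}|}
\right)
= \arccos\left(
  \frac{(y_2 - y_1)(-y_1)}{\sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}\;\, y_1}
\right)
```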

# Calculate angle.

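def findAngle(x1, y1, x2, y2):

    # Angle at P1 between the line P1-P2 and the vertical through P1 (P3 = (x1, 0)).

    theta = m.acos( (y2 -y1)*(-y1) / (m.sqrt(

        (x2 - x1)**2 + (y2 - y1)**2 ) * y1) )

    # Convert radians to degrees without truncating the conversion factor.

    degree = (180/m.pi)*theta

    return degree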
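The acos form above divides by y1 and by the length of P12, so it raises an error when the pivot point sits on the top row of the frame (y1 = 0) or when the two landmarks coincide. The following is a minimal sketch of an equivalent formulation with atan2 that avoids both cases. The name find_angle_safe is hypothetical and not part of the original script.

```python
import math


def find_angle_safe(x1, y1, x2, y2):
    """Angle in degrees between the line P1->P2 and the upward vertical through P1.

    Image coordinates are assumed, so y grows downwards and "up" is -y.
    """
    dx = x2 - x1
    dy = y2 - y1
    if dx == 0 and dy == 0:
        # Coincident points carry no direction; report zero inclination.
        return 0.0
    # atan2(|dx|, -dy) equals acos(-dy / |P12|) over the range 0 to 180 degrees.
    return math.degrees(math.atan2(abs(dx), -dy))


# Shoulder at (100, 200); ear straight above it, then leaning 45 degrees forward.
print(find_angle_safe(100, 200, 100, 100))
print(find_angle_safe(100, 200, 200, 100))
```

Because the horizontal difference enters through its absolute value, the result does not depend on whether the person faces left or right, which matches the behaviour of the acos version.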

4. Function to Send Poor Body Posture Alerts

Use this function to send alerts when bad posture is detected. We are leaving it empty for you to implement. Feel free to get creative and customize it at your convenience. For example, you can connect a Telegram bot for alerts, which is super easy (link in the reference section [6]). Or you can take it up a notch by creating an Android app.

# Called without arguments from the main loop.

def sendWarning():

    pass
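One possible shape for the alert hook is sketched below. It only prints to the console and suppresses repeats inside a cooldown window, so that a long slouch does not trigger an alert on every frame. The class name PostureAlerter, the cooldown_s parameter, and the injectable clock are all assumptions made for illustration; swap the print call for a Telegram or mobile notification as needed.

```python
import time


class PostureAlerter:
    """Rate-limited alert helper (hypothetical, not part of the original script)."""

    def __init__(self, cooldown_s=60.0, clock=time.monotonic):
        self.cooldown_s = cooldown_s
        self.clock = clock
        self._last_sent = None

    def send_warning(self, bad_time_s):
        """Emit an alert unless one was sent within the cooldown. Returns True if sent."""
        now = self.clock()
        if self._last_sent is not None and now - self._last_sent < self.cooldown_s:
            return False
        self._last_sent = now
        print(f"Bad posture for {bad_time_s:.0f}s, please sit upright.")
        return True


alerter = PostureAlerter(cooldown_s=60.0)
first = alerter.send_warning(181.0)   # sent
second = alerter.send_warning(181.1)  # suppressed by the cooldown
```

Passing the clock as a parameter keeps the helper easy to test, since a fake clock can stand in for real elapsed time.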

5. Initializations

Constants and objects are initialized here. The inline comments should make them self-explanatory.

# Initialize frame counters.

good_frames = 0

bad_frames = 0

# Font type.

font = cv2.FONT_HERSHEY_SIMPLEX

# Colors.

blue = (255, 127, 0)

red = (50, 50, 255)

green = (127, 255, 0)

dark_blue = (127, 20, 0)

light_green = (127, 233, 100)

yellow = (0, 255, 255)

pink = (255, 0, 255)

# Initialize mediapipe pose class.

mp_pose = mp.solutions.pose

pose = mp_pose.Pose()

Body Posture Detection Main Function

1. Create Video Capture and Video Writer Objects

For demonstration, we are using pre-recorded video samples. You would need to position the webcam to capture your side view in practice. In the following snippet, video capture and video writer objects are created.

As you can see, we acquire the video metadata from the capture object in order to create the video writer object. The snippet writes an mp4 file, so the codec is set to *'mp4v'; change the codec if you want a different output format. For a more intuitive guide on video writers and handling codecs, check out the article on OpenCV Video Writer.

# For webcam input replace file name with 0.

file_name = 'input.mp4'

cap = cv2.VideoCapture(file_name)

# Meta.

fps = int(cap.get(cv2.CAP_PROP_FPS))

width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

frame_size = (width, height)

fourcc = cv2.VideoWriter_fourcc(*'mp4v')

# Video writer.

video_output = cv2.VideoWriter('output.mp4', fourcc, fps, frame_size)

2. Body Posture Detection Main Loop

The configurable options of the Pose() solution do not require much tweaking. The default values are enough to detect pose landmarks. However, if we want the solution to generate a segmentation mask, the ENABLE_SEGMENTATION flag must be set to True. The following are some of the configurable options in the MediaPipe Pose solution.

- STATIC_IMAGE_MODE: It’s a boolean. If set to True, person detection runs for every input image. This is not necessary for videos, where detection runs once followed by landmark tracking. The default value is False.

- MODEL_COMPLEXITY: Default value is 1. It can be 0, 1, or 2. If higher complexity is chosen, the inference time increases.

- ENABLE_SEGMENTATION: If set to True, the solution generates a segmentation mask along with the pose landmarks. The default value is False.

- MIN_DETECTION_CONFIDENCE: Ranges over [0.0, 1.0]. As the name suggests, it is the minimum confidence value for the detection to be considered valid. The default value is 0.5.

- MIN_TRACKING_CONFIDENCE: Ranges over [0.0, 1.0]. It is the minimum confidence value for a landmark to be considered tracked. The default value is 0.5.
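For reference, the options above correspond to lower-case keyword arguments of mp_pose.Pose() in the Python API. The sketch below only builds the argument dictionary with the default values listed above, so it runs without MediaPipe installed. The variable name pose_options is our own.

```python
# Keyword arguments for mp_pose.Pose(), set to the defaults described above.
pose_options = {
    "static_image_mode": False,       # track landmarks across video frames
    "model_complexity": 1,            # 0, 1 or 2; higher is slower
    "enable_segmentation": False,     # set True to also get a segmentation mask
    "min_detection_confidence": 0.5,
    "min_tracking_confidence": 0.5,
}

# With MediaPipe imported as in the script, the options would be passed as:
# pose = mp_pose.Pose(**pose_options)
print(pose_options)
```

Keeping the options in one dictionary makes it easy to experiment, for example by raising model_complexity to 2 when accuracy matters more than speed.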

Usually, the default values do a good job, so we do not pass any arguments to mp_pose.Pose(). The following snippet, which runs once per frame inside the capture loop, processes the RGB frames from which we later extract the pose landmarks. Finally, we convert the image back to the OpenCV-friendly BGR color space.

# Capture frames.

success, image = cap.read()

if not success:

    print("Null.Frames")

    break

# Get fps.

fps = cap.get(cv2.CAP_PROP_FPS)

# Get height and width of the frame.

h, w = image.shape[:2]

# Convert the BGR image to RGB.

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Process the image.

keypoints = pose.process(image)

# Convert the image back to BGR.

image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

3. Acquire Body Posture Landmark Coordinates

The pose_landmarks attribute of the solution output object provides the landmarks’ normalized x and y coordinates. Hence, to get actual values, we need to multiply the outputs by the image’s width and height, respectively.

The landmarks ‘LEFT_SHOULDER’, ‘RIGHT_SHOULDER’, etc. are members of the PoseLandmark class. To acquire the normalized coordinates, we use the following syntax.

norm_coordinate = pose.process(image).pose_landmarks.landmark[mp.solutions.pose.PoseLandmark.<SPECIFIC_LANDMARK>].coordinate

The methods are shortened using representations as shown below.

# Use lm and lmPose as representative of the following methods.

lm = keypoints.pose_landmarks

lmPose = mp_pose.PoseLandmark

# Left shoulder.

l_shldr_x = int(lm.landmark[lmPose.LEFT_SHOULDER].x * w)

l_shldr_y = int(lm.landmark[lmPose.LEFT_SHOULDER].y * h)

# Right shoulder.

r_shldr_x = int(lm.landmark[lmPose.RIGHT_SHOULDER].x * w)

r_shldr_y = int(lm.landmark[lmPose.RIGHT_SHOULDER].y * h)

# Left ear.

l_ear_x = int(lm.landmark[lmPose.LEFT_EAR].x * w)

l_ear_y = int(lm.landmark[lmPose.LEFT_EAR].y * h)

# Left hip.

l_hip_x = int(lm.landmark[lmPose.LEFT_HIP].x * w)

l_hip_y = int(lm.landmark[lmPose.LEFT_HIP].y * h)
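The conversion used above can be wrapped in a small helper. The sketch below uses a stand-in landmark object so that it runs without MediaPipe; the helper name to_pixel and the FakeLandmark type are hypothetical. It also clamps the result to the frame, since normalized coordinates can fall outside [0, 1] when a body part is partly out of view. Note also that pose_landmarks is None when no person is detected, so checking for that before reading any landmark avoids an AttributeError.

```python
from collections import namedtuple

# Stand-in for a MediaPipe landmark, which exposes normalized x and y fields.
FakeLandmark = namedtuple("FakeLandmark", ["x", "y"])


def to_pixel(landmark, w, h):
    """Convert normalized landmark coordinates to integer pixel coordinates."""
    px = int(landmark.x * w)
    py = int(landmark.y * h)
    # Clamp to the frame so drawing calls always get in-frame coordinates.
    px = min(max(px, 0), w - 1)
    py = min(max(py, 0), h - 1)
    return px, py


print(to_pixel(FakeLandmark(0.5, 0.25), 640, 480))
print(to_pixel(FakeLandmark(1.2, -0.1), 640, 480))
```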

4. Align Camera

This step ensures that the camera captures the person's proper side view. We measure the distance between the left and right shoulder points. With correct alignment, the left and right points should almost coincide.

Note that the offset distance threshold is based on observations from a dataset with the same dimensions as the video samples. If you try higher-resolution samples, this value will change. It does not have to be very precise; you can set a threshold value based on your intuition.

Strictly speaking, the distance method is not the correct way to determine the alignment; it should be angle-based. We are using the distance method for simplicity.

# Calculate distance between left shoulder and right shoulder points.

offset = findDistance(l_shldr_x, l_shldr_y, r_shldr_x, r_shldr_y)

# Assist to align the camera to point at the side view of the person.

# The offset threshold (100 px in the condition below) is based on results obtained from analysis over 100 samples.

if offset < 100:

    cv2.putText(image, str(int(offset)) + ' Aligned', (w - 150, 30), font, 0.9, green, 2)

else:

    cv2.putText(image, str(int(offset)) + ' Not Aligned', (w - 150, 30), font, 0.9, red, 2)
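Because a pixel threshold depends on the video resolution, one option is to express the offset as a fraction of the frame width. This is a sketch of that idea only; the function name is_aligned and the 0.08 ratio are assumptions for illustration, not values from the original script.

```python
import math


def is_aligned(l_shldr, r_shldr, frame_width, max_ratio=0.08):
    """Return True when the shoulder offset is small relative to the frame width."""
    offset = math.hypot(r_shldr[0] - l_shldr[0], r_shldr[1] - l_shldr[1])
    return offset / frame_width < max_ratio


# The same 60 px offset, judged against two different frame widths.
print(is_aligned((300, 240), (360, 240), 1280))
print(is_aligned((300, 240), (360, 240), 640))
```

The ratio still needs tuning on real footage, but it no longer has to be re-tuned each time the capture resolution changes.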

5. Calculate Body Posture Inclination and Draw Landmarks

The inclination angles are obtained using the predefined function findAngle. Landmarks and their connections are drawn as shown below.

# Calculate angles.

neck_inclination = findAngle(l_shldr_x, l_shldr_y, l_ear_x, l_ear_y)

torso_inclination = findAngle(l_hip_x, l_hip_y, l_shldr_x, l_shldr_y)

# Draw landmarks.

cv2.circle(image, (l_shldr_x, l_shldr_y), 7, yellow, -1)

cv2.circle(image, (l_ear_x, l_ear_y), 7, yellow, -1)

# Let's take y - coordinate of P3 100px above x1, for display elegance.

# Although we are taking y = 0 while calculating angle between P1,P2,P3.

cv2.circle(image, (l_shldr_x, l_shldr_y - 100), 7, yellow, -1)

cv2.circle(image, (r_shldr_x, r_shldr_y), 7, pink, -1)

cv2.circle(image, (l_hip_x, l_hip_y), 7, yellow, -1)

# Similarly, here we are taking y - coordinate 100px above x1. Note that

# you can take any value for y, not necessarily 100 or 200 pixels.

cv2.circle(image, (l_hip_x, l_hip_y - 100), 7, yellow, -1)

# Put text, Posture and angle inclination.

# Text string for display.

angle_text_string = 'Neck : ' + str(int(neck_inclination)) + ' Torso : ' + str(int(torso_inclination))

6. Body Posture Detection Conditionals

Results are displayed according to whether the posture is good or bad. Again, the threshold angles are based on intuition, and you can set them as you need. With every detection, the frame counter for the detected posture is incremented and the counter for the other posture is reset to zero.

The time of a particular posture can be calculated by dividing the number of frames by fps. Check out fps measuring methods in our previous blog post.

# Determine whether good posture or bad posture.

# The threshold angles have been set based on intuition.

if neck_inclination < 40 and torso_inclination < 10:

    bad_frames = 0

    good_frames += 1

    cv2.putText(image, angle_text_string, (10, 30), font, 0.9, light_green, 2)

    cv2.putText(image, str(int(neck_inclination)), (l_shldr_x + 10, l_shldr_y), font, 0.9, light_green, 2)

    cv2.putText(image, str(int(torso_inclination)), (l_hip_x + 10, l_hip_y), font, 0.9, light_green, 2)

    # Join landmarks.

    cv2.line(image, (l_shldr_x, l_shldr_y), (l_ear_x, l_ear_y), green, 4)

    cv2.line(image, (l_shldr_x, l_shldr_y), (l_shldr_x, l_shldr_y - 100), green, 4)

    cv2.line(image, (l_hip_x, l_hip_y), (l_shldr_x, l_shldr_y), green, 4)

    cv2.line(image, (l_hip_x, l_hip_y), (l_hip_x, l_hip_y - 100), green, 4)

else:

    good_frames = 0

    bad_frames += 1

    cv2.putText(image, angle_text_string, (10, 30), font, 0.9, red, 2)

    cv2.putText(image, str(int(neck_inclination)), (l_shldr_x + 10, l_shldr_y), font, 0.9, red, 2)

    cv2.putText(image, str(int(torso_inclination)), (l_hip_x + 10, l_hip_y), font, 0.9, red, 2)

    # Join landmarks.

    cv2.line(image, (l_shldr_x, l_shldr_y), (l_ear_x, l_ear_y), red, 4)

    cv2.line(image, (l_shldr_x, l_shldr_y), (l_shldr_x, l_shldr_y - 100), red, 4)

    cv2.line(image, (l_hip_x, l_hip_y), (l_shldr_x, l_shldr_y), red, 4)

    cv2.line(image, (l_hip_x, l_hip_y), (l_hip_x, l_hip_y - 100), red, 4)

# Calculate the time of remaining in a particular posture.

good_time = (1 / fps) * good_frames

bad_time = (1 / fps) * bad_frames

# Pose time.

if good_time > 0:

    time_string_good = 'Good Posture Time : ' + str(round(good_time, 1)) + 's'

    cv2.putText(image, time_string_good, (10, h - 20), font, 0.9, green, 2)

else:

    time_string_bad = 'Bad Posture Time : ' + str(round(bad_time, 1)) + 's'

    cv2.putText(image, time_string_bad, (10, h - 20), font, 0.9, red, 2)

# If you stay in bad posture for more than 3 minutes (180s) send an alert.

if bad_time > 180:

    sendWarning()
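The frame-counting logic above can be summarised in a small helper, shown here as a sketch that runs without a camera. The class name PostureTimer is hypothetical. The thresholds of 40 and 10 degrees are the ones used in the script, and the fps guard is an added assumption for capture devices that report 0 fps, which would otherwise cause a division by zero.

```python
class PostureTimer:
    """Tracks how long the current posture has been held, in seconds."""

    def __init__(self, fps, neck_max=40, torso_max=10):
        # Some capture devices report 0 fps; fall back to a nominal 30.
        self.fps = fps if fps > 0 else 30
        self.neck_max = neck_max
        self.torso_max = torso_max
        self.good_frames = 0
        self.bad_frames = 0

    def update(self, neck_inclination, torso_inclination):
        """Count one frame and return (is_good, seconds_in_current_posture)."""
        good = neck_inclination < self.neck_max and torso_inclination < self.torso_max
        if good:
            self.bad_frames = 0
            self.good_frames += 1
            return True, self.good_frames / self.fps
        self.good_frames = 0
        self.bad_frames += 1
        return False, self.bad_frames / self.fps


timer = PostureTimer(fps=30)
for _ in range(60):
    # Sixty consecutive frames of a slouched posture at 30 fps.
    state = timer.update(neck_inclination=55, torso_inclination=12)
print(state)
```

As in the script, a single frame of the opposite posture resets the running counter, so the reported time always refers to the current uninterrupted stretch.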

MediaPipe Pose Conclusion

So that’s all about building a posture corrector application using MediaPipe Pose. In this post, we discussed how to use MediaPipe Pose to detect human body posture. You learned how to obtain pose landmarks, which options are configurable, and how to work with the outputs. We hope this blog post helped you understand the basics of MediaPipe Pose and gave you some new ideas for your next project.

Must Read Articles

Hang on, the journey doesn’t end here. After months of development, we have some new and exciting blog posts specially crafted for you!

1. Creating Snapchat/Instagram filters using Mediapipe

2. Gesture Control in Zoom Call using Mediapipe

3. Center Stage for Zoom Calls using MediaPipe

4. Drowsy Driver Detection using Mediapipe

5. Comparing Yolov7 and Mediapipe Pose Estimation models
