This article aims to build an AI fitness trainer that can help you perform squats correctly, whether you are a beginner or a pro. To achieve this, we can harness a deep-learning-based human pose estimation algorithm. Popular frameworks for human pose estimation include OpenPose, AlphaPose, YOLOv7, and MediaPipe. We have opted for MediaPipe’s Pose pipeline to estimate the human keypoints because of its very fast inference on CPU.

The application will also have provisions to perform squats in Beginner and Pro modes, along with appropriate feedback. Want to learn how Human Pose Estimation works? This great article on Human Pose Estimation using OpenCV will help you get started.

- Body Pose Estimation Using MediaPipe

- Intuition of Frontal and Side View for Posture Analysis

- Building an AI Fitness Trainer Using MediaPipe Pose to Analyze Squats

- State Diagram Explanation While Performing Squats

- Application Workflow for the AI Fitness Trainer

- Key Concepts While Designing The Application

- Test Cases in the AI Fitness Trainer Application

- Modes of Squats – Beginner vs. Pro

- Scope for Improvements

- Summary

Body Pose Estimation Using MediaPipe

MediaPipe Pose is an ML solution for high-fidelity body pose tracking. It infers 33 3D landmarks and a background segmentation mask on the whole body from RGB video frames using BlazePose, whose landmark topology is a superset of the COCO, BlazeFace, and BlazePalm topologies.

MediaPipe Pose uses a two-step detector-tracker pipeline, similar to the MediaPipe Hands and MediaPipe Face Mesh solutions. A detector first locates the person/pose region of interest (ROI) within the frame. The tracker then takes the ROI-cropped frame as input and predicts the pose landmarks and segmentation mask within the ROI.

The introductory tutorial on MediaPipe will help you learn more about the major components associated with the framework.

Intuition of Frontal and Side View for Posture Analysis

When designing an application to analyze fitness exercises, it helps to decide which calculations to perform based on how the person is viewed by the camera.

The frontal view gives access to both the left and right sides of the body, so we can use the slopes and angles of various landmark points, such as the angle between the knee-hip and knee-knee lines. Such information can help analyze exercises such as overhead presses, side planks, crunches, and curls.

The side view gives better estimates of inclinations with respect to the vertical or horizontal. Such information is useful for analyzing exercises such as deadlifts, push-ups, squats, and dips.

Since we are analyzing squats, where all the significant computations involve inclinations with respect to the vertical, we have opted for a side view.

To promote a healthy lifestyle, Romania has taken the initiative to offer free bus tickets to those who perform 20 squats. Check out this Instagram Post!

Building an AI Fitness Trainer Using MediaPipe Pose to Analyze Squats

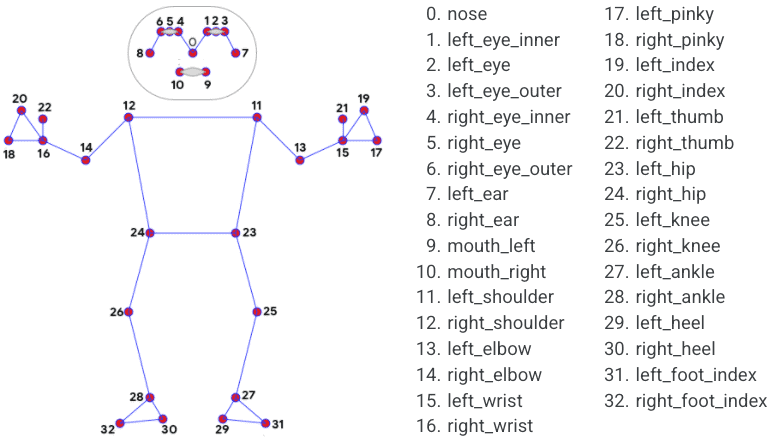

The landmarks that would be required for our application are depicted in the following image.

We will use the angles of the hip-knee, knee-ankle, and shoulder-hip lines with the vertical to calculate the states (explained in the subsequent sections) and to display the appropriate feedback messages. This is depicted in the image below.

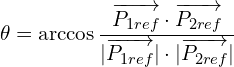

Additionally, we calculate the offset angle (the angle subtended at the nose by the two shoulders) and display a warning when the person is not maintaining a good side view.

We will also track periods of inactivity, after which the counters for proper and improper squats are reset.

The application also provides two modes, Beginner and Pro; one can choose either of them and start performing squats, whether they are a beginner or an expert.

Human Pose Estimation is one of the most exciting research areas in Computer Vision and has a wide range of applications. For example, we can use it to build a simple yet exciting application that detects poor sitting posture.

State Diagram Explanation While Performing Squats

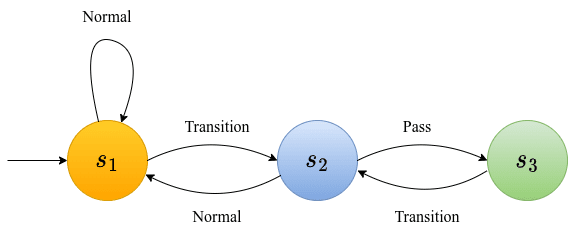

A state transition diagram explains the various states maintained when a squat is performed.

Note that all the states are calculated based on the angle between the hip-knee line and the vertical (for simplicity, we will shorten this phrase to "the angle between the knee and the vertical" from here on).

The following gif illustrates the phases of transitions.

We will deal with three states for our application: s1, s2, and s3.

- State s1: If the angle between the knee and the vertical is at most 32°, the person is in the Normal phase, and the state is s1. This is the state in which the counters for proper and improper squats are updated.

- State s2: If the angle between the knee and the vertical falls between 35° and 65°, it is in the Transition phase and subsequently goes to state s2.

- State s3: If the angle between the knee and the vertical lies within a specific range (say, between 75° and 95°), it is in the Pass phase and subsequently goes to state s3.
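The state mapping above can be sketched as a small lookup from the knee-vertical angle to a state. This is a minimal sketch, not the article's implementation: the dictionary layout and the helper name get_state are hypothetical, and the ranges are the example values quoted above. The text does not say how angles in the gaps between ranges (for example 33° to 34° or 66° to 74°) are handled, so this sketch assumes they map to no state (None).

```python
from typing import Dict, Optional, Tuple

# Example knee-vertical angle ranges in degrees, taken from the text.
# Hypothetical structure; real values depend on the Beginner/Pro mode.
STATE_THRESH: Dict[str, Tuple[float, float]] = {
    "s1": (0.0, 32.0),   # Normal phase
    "s2": (35.0, 65.0),  # Transition phase
    "s3": (75.0, 95.0),  # Pass phase
}


def get_state(knee_vertical_angle: float,
              thresholds: Dict[str, Tuple[float, float]] = STATE_THRESH) -> Optional[str]:
    """Map the hip-knee/vertical angle (degrees) to a squat state.

    Assumption: angles that fall in a gap between ranges, or beyond the
    last range, return None (no state for that frame).
    """
    for state, (low, high) in thresholds.items():
        if low <= knee_vertical_angle <= high:
            return state
    return None
```

For instance, an angle of 50° maps to "s2", while 70° falls in the gap between s2 and s3 and yields None.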

Finally, the complete state transition diagram is given below.

Note:

- All calculations related to feedback are computed for states s2 and s3.

- During our implementation, we maintain a list, state_sequence, which contains the series of states as the person goes from state s1 through s3 and back to s1. The maximum number of states in state_sequence is 3 ([s2, s3, s2]). This list determines whether a correct or an incorrect squat has been performed.

- Once we encounter state s1, we re-initialize state_sequence to an empty list for subsequent squat counts.
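The bookkeeping described in these notes can be sketched as a small class. This is a hedged sketch under stated assumptions, not the article's code: the class name SquatCounter and its update method are hypothetical. It assumes that s2 is recorded once on the way down and once on the way up, that s3 is recorded only after s2, and that a repetition counts as correct only when state_sequence is exactly [s2, s3, s2] and no severe feedback (4 or 5) occurred; any other sequence containing s2 counts as incorrect.

```python
from typing import List, Optional


class SquatCounter:
    """Hypothetical helper that tracks state_sequence and the two counters."""

    def __init__(self) -> None:
        self.state_sequence: List[str] = []
        self.correct = 0
        self.incorrect = 0
        self.incorrect_posture = False  # set if severe feedback occurs during a rep

    def update(self, current_state: Optional[str], severe_feedback: bool = False) -> None:
        # Remember whether any severe feedback was triggered during this repetition.
        self.incorrect_posture = self.incorrect_posture or severe_feedback

        if current_state == "s1":
            # Back to the Normal phase: decide how to count the finished repetition.
            if self.state_sequence == ["s2", "s3", "s2"] and not self.incorrect_posture:
                self.correct += 1
            elif "s2" in self.state_sequence:
                self.incorrect += 1
            # Re-initialize for the next squat.
            self.state_sequence = []
            self.incorrect_posture = False
        elif current_state == "s2":
            # Record s2 on the way down (empty list) and once more on the way up (after s3).
            if not self.state_sequence or (
                self.state_sequence[-1] == "s3" and self.state_sequence.count("s2") == 1
            ):
                self.state_sequence.append("s2")
        elif current_state == "s3":
            # Record s3 only once, and only after passing through s2.
            if self.state_sequence == ["s2"]:
                self.state_sequence.append("s3")
        # current_state None (no state for this frame) leaves everything unchanged.
```

Feeding the per-frame states s1, s2, s2, s3, s3, s2, s1 increments correct once, while s2 followed directly by s1 (the cyclic case) increments incorrect.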

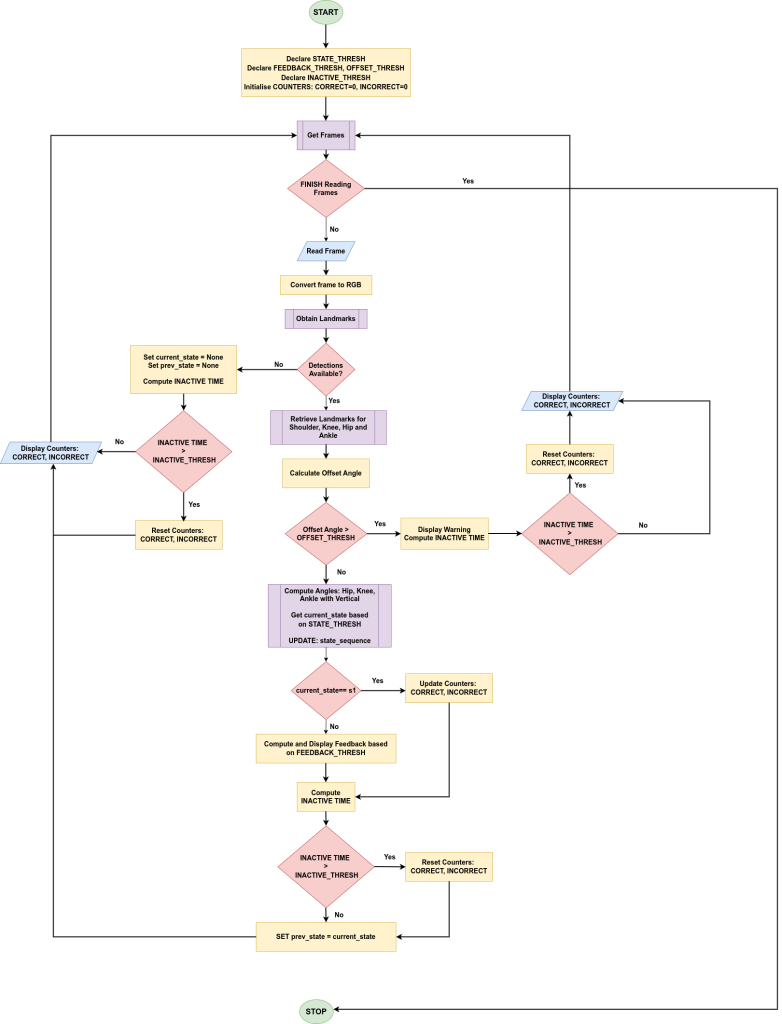

Application Workflow for the AI Fitness Trainer

The flowchart below describes our application workflow.

1. We first declare the following thresholds along with the two counters:
   - STATE_THRESH: A set of thresholds that determine the state each frame belongs to.
   - FEEDBACK_THRESH: A set of thresholds that determine which feedback messages are displayed.
   - OFFSET_THRESH: A threshold that determines whether the person is facing directly toward the camera.
   - INACTIVE_THRESH: A threshold that determines inactivity; once it is exceeded, the counters CORRECT and INCORRECT are reset.
   - Counters: CORRECT and INCORRECT, which count the number of proper and improper squats, respectively.

2. We read each frame from the webcam/video, pre-process it, and pass it through MediaPipe’s Pose solution.

3. We then retrieve the desired landmarks for the shoulders, nose, knee, hip, and ankle, provided the landmarks are detected; otherwise, we compute the INACTIVE TIME (in seconds) during which there are no detections.
   - If this INACTIVE TIME exceeds INACTIVE_THRESH, we reset the counters CORRECT and INCORRECT.

4. The offset angle (discussed in a later section) is calculated from the nose and shoulder coordinates.
   - If the offset angle exceeds OFFSET_THRESH, we display the appropriate warning and compute the INACTIVE TIME as discussed in Step 3.

5. When the offset angle is within OFFSET_THRESH, we calculate the following:
   - The angles of the shoulder-hip, hip-knee, and knee-ankle lines with the vertical.
   - The current_state of the frame, based on STATE_THRESH.
   - The list state_sequence (discussed in the previous section).

6. When the current state is s1, we update the counters CORRECT and INCORRECT based on the contents of state_sequence. Otherwise, we compute and display the feedback messages based on FEEDBACK_THRESH and compute the INACTIVE TIME.

7. We assign current_state to prev_state and proceed to fetch the subsequent frames.
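The landmark-retrieval step of this workflow can be sketched as converting MediaPipe's normalized landmarks into pixel coordinates. This is a minimal sketch, assuming the 33-landmark BlazePose indexing (nose 0, shoulders 11/12, hips 23/24, knees 25/26, ankles 27/28, matching the PoseLandmark enum in MediaPipe's Python Solutions API). The names LANDMARK_IDS and to_pixel_coords are hypothetical, and the sketch returns both sides; picking the side that faces the camera is left out.

```python
from typing import Dict, Tuple

# BlazePose landmark indices for the joints used in this application.
LANDMARK_IDS: Dict[str, int] = {
    "nose": 0,
    "left_shoulder": 11, "right_shoulder": 12,
    "left_hip": 23, "right_hip": 24,
    "left_knee": 25, "right_knee": 26,
    "left_ankle": 27, "right_ankle": 28,
}


def to_pixel_coords(landmarks, width: int, height: int) -> Dict[str, Tuple[int, int]]:
    """Convert normalized landmarks (objects with .x and .y in [0, 1]) to pixels.

    `landmarks` is assumed to be indexable by landmark id, such as the
    `landmark` field of MediaPipe's `pose_landmarks` result.
    """
    return {
        name: (int(landmarks[idx].x * width), int(landmarks[idx].y * height))
        for name, idx in LANDMARK_IDS.items()
    }
```

With MediaPipe's Solutions API, this would typically be called as to_pixel_coords(results.pose_landmarks.landmark, w, h) after results = pose.process(rgb_frame), and only when results.pose_landmarks is not None; otherwise the frame counts toward the INACTIVE TIME.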

Key Concepts While Designing The Application

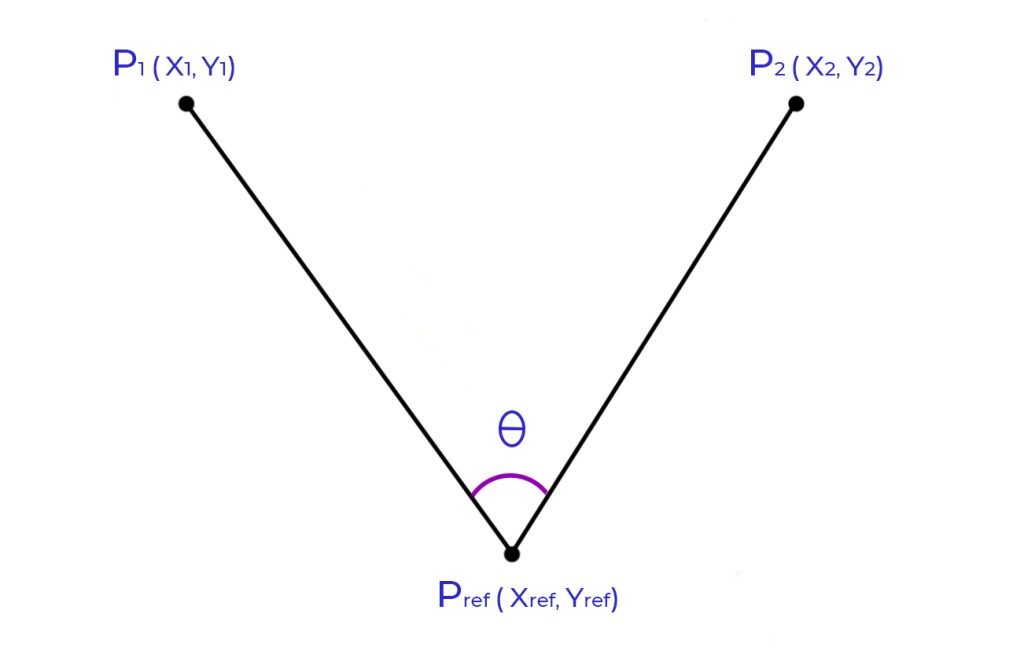

Angle Calculation

The angle between 3 points, with one being the reference point, is shown below.

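The equation for the angle θ at the reference point P_ref between the points P_1 and P_2 is given by:

$$\theta = \arccos\left(\frac{(P_1 - P_{ref}) \cdot (P_2 - P_{ref})}{\lVert P_1 - P_{ref} \rVert \, \lVert P_2 - P_{ref} \rVert}\right)$$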

For instance, to calculate the offset angle, we find the angle subtended at the nose by the two shoulders, using the nose coordinates as the reference point.

When the offset angle exceeds OFFSET_THRESH, we assume that the person is facing the camera, and an appropriate warning message is displayed.
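The three-point angle and the offset check can be sketched with NumPy using the dot-product form of the angle between two vectors. This is a sketch under assumptions: find_angle and is_facing_camera are hypothetical names, the points are assumed to be distinct pixel coordinates, and the OFFSET_THRESH value of 35° is a placeholder, since the article does not state it.

```python
import numpy as np

OFFSET_THRESH = 35.0  # hypothetical value in degrees; not given in the article


def find_angle(p1, p2, ref_pt) -> float:
    """Angle in degrees at ref_pt between the rays ref_pt->p1 and ref_pt->p2."""
    v1 = np.asarray(p1, dtype=float) - np.asarray(ref_pt, dtype=float)
    v2 = np.asarray(p2, dtype=float) - np.asarray(ref_pt, dtype=float)
    cos_theta = np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    # Clip to guard against floating-point values slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


def is_facing_camera(nose, left_shoulder, right_shoulder,
                     offset_thresh: float = OFFSET_THRESH) -> bool:
    """True when the offset angle (at the nose, between the shoulders) is too large."""
    offset_angle = find_angle(left_shoulder, right_shoulder, nose)
    return offset_angle > offset_thresh
```

In a side view the two shoulders nearly overlap in the image, so the offset angle is small; in a frontal view they spread apart and the angle grows, which triggers the warning.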

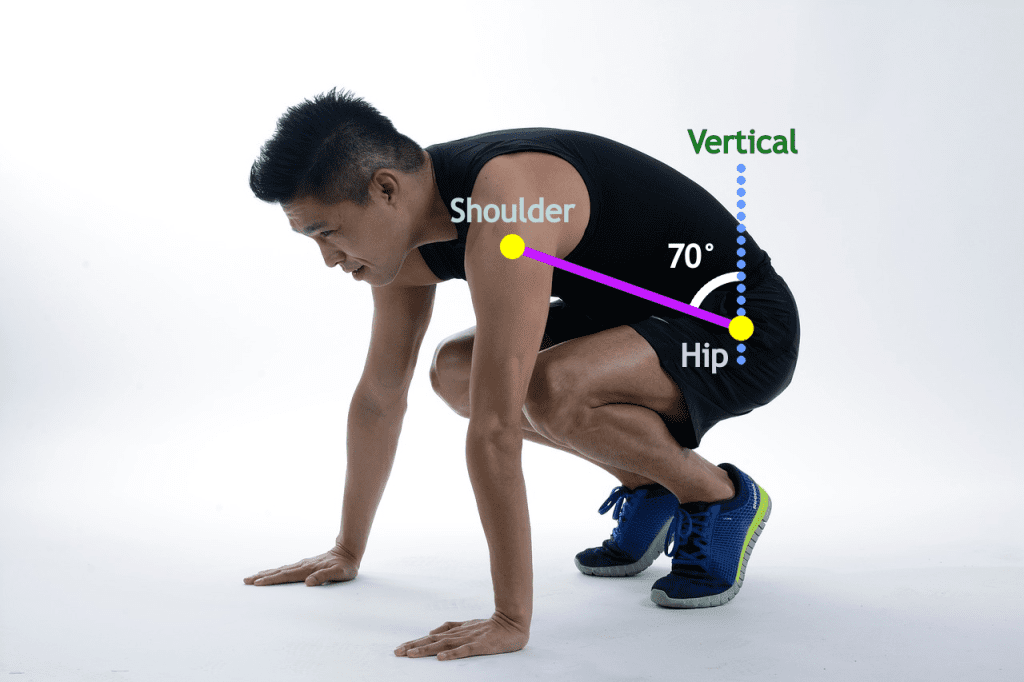

Similarly, the diagram below can be referred to when calculating the angle between the shoulder-hip line and the vertical.

Since the vertical passes through the hip, its x-coordinate is the same as that of the hip. Any y-coordinate on the vertical is valid, so for simplicity we take y = 0.
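The vertical construction above can be sketched as follows: the angle is measured at the lower joint, between the upper joint and the point (lower_x, 0) directly above it. This is a minimal sketch assuming image coordinates (origin at the top-left, y growing downward) and a lower joint with y > 0; the function name angle_with_vertical is hypothetical. The same helper would give the hip-vertical angle (shoulder over hip), the knee-vertical angle (hip over knee), and the ankle-vertical angle (knee over ankle).

```python
import numpy as np


def angle_with_vertical(top, bottom) -> float:
    """Angle in degrees between the line bottom->top and the vertical through bottom.

    The vertical reference point is (bottom_x, 0), i.e. directly above
    `bottom` in image coordinates, where y grows downward.
    """
    bottom = np.asarray(bottom, dtype=float)
    v_line = np.asarray(top, dtype=float) - bottom
    v_vert = np.array([bottom[0], 0.0]) - bottom
    cos_theta = np.dot(v_line, v_vert) / (np.linalg.norm(v_line) * np.linalg.norm(v_vert))
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))
```

A shoulder directly above the hip gives 0°, and a thigh lying horizontally (hip level with the knee) gives a knee-vertical angle of 90°.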

Feedback Actions for the AI Fitness Trainer Application

Our application shall provide five feedback messages while one performs a squat, namely:

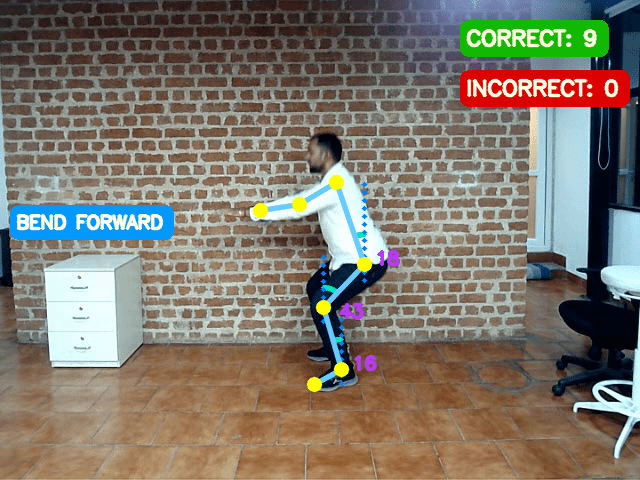

- Bend Forward

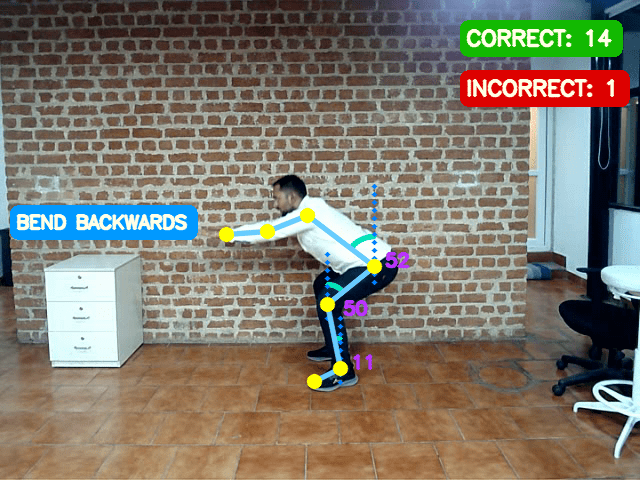

- Bend Backwards

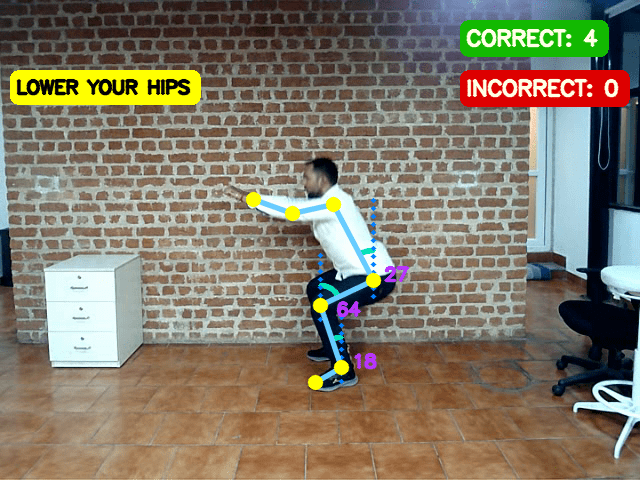

- Lower one’s hips

- Knee falling over toes

- Deep squats

- Feedback 1 is displayed when the hip-vertical angle (i.e., the angle between the shoulder-hip line and the vertical) falls below a threshold, for instance, 20°, as shown in the following figure.

- Feedback 2 is displayed when the hip-vertical angle rises above a threshold, for instance, 45°, as shown below.

- Feedback 3 is displayed when the angle between the hip-knee line and the vertical lies within a range, say between 50° and 80°, as shown below.

Note that feedback 3 should be displayed only during the transition from state s1 to s2, and not the reverse.

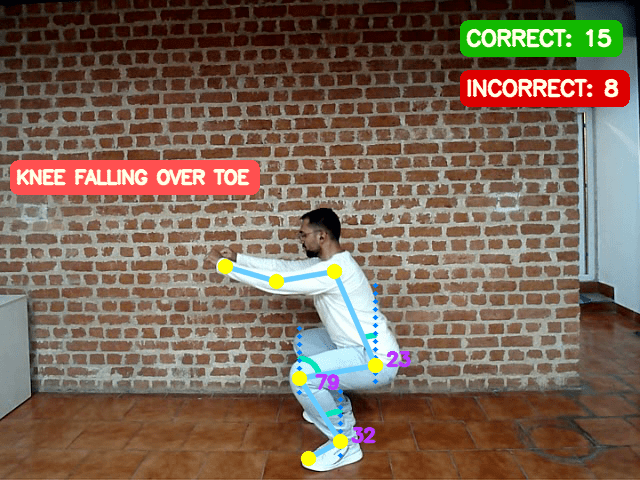

- Feedback 4 is displayed when the angle between the knee-ankle line and the vertical rises above a threshold, for instance, 30°, as shown.

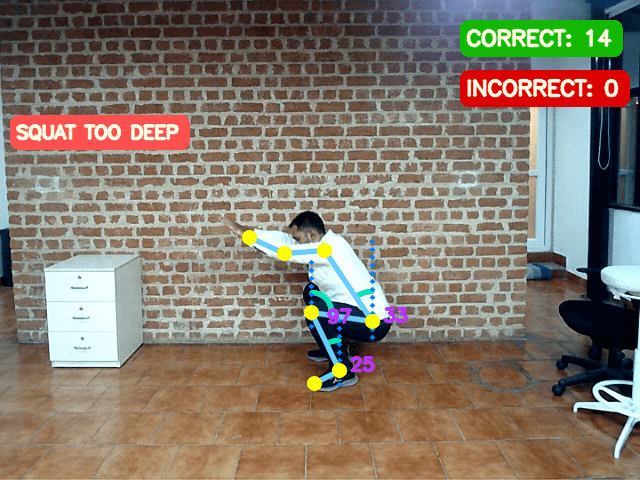

- Feedback 5 is displayed when the angle between the hip-knee line and the vertical passes through state s3 and exceeds a threshold, for instance, 95°.

Note:

- All thresholds have been set based on heuristics and subsequent experimentation. They also differ depending on whether Beginner or Pro mode is selected.

- Feedbacks 4 and 5 are considered severe and mark the squat posture as incorrect.
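The five feedback rules can be sketched as one function that runs for frames in state s2 or s3. This is a hedged sketch, not the article's code: the FEEDBACK_THRESH keys and the name get_feedback are hypothetical, and the numbers are the example thresholds quoted above. The rule that feedback 3 appears only from s1 to s2 is approximated by suppressing it once s3 has been reached; telling descent from ascent before s3 would also need the previous angle, which is omitted here.

```python
from typing import Dict, List, Sequence, Tuple

# Example thresholds in degrees, taken from the text; hypothetical layout.
FEEDBACK_THRESH: Dict[str, object] = {
    "hip_min": 20.0,                  # below -> "Bend Forward"
    "hip_max": 45.0,                  # above -> "Bend Backwards"
    "knee_lower_hips": (50.0, 80.0),  # inside -> "Lower one's hips"
    "knee_deep": 95.0,                # above -> "Deep squats" (severe)
    "ankle_max": 30.0,                # above -> "Knee falling over toes" (severe)
}


def get_feedback(hip_angle: float, knee_angle: float, ankle_angle: float,
                 state_sequence: Sequence[str],
                 thresh: Dict[str, object] = FEEDBACK_THRESH) -> Tuple[List[str], bool]:
    """Return (messages, severe) for a frame in state s2 or s3."""
    messages: List[str] = []
    if hip_angle < thresh["hip_min"]:
        messages.append("Bend Forward")
    elif hip_angle > thresh["hip_max"]:
        messages.append("Bend Backwards")

    low, high = thresh["knee_lower_hips"]
    # Assumption: only prompt to lower the hips before s3 has been reached.
    if low < knee_angle < high and "s3" not in state_sequence:
        messages.append("Lower one's hips")

    severe = False
    if knee_angle > thresh["knee_deep"]:
        messages.append("Deep squats")
        severe = True
    if ankle_angle > thresh["ankle_max"]:
        messages.append("Knee falling over toes")
        severe = True
    return messages, severe
```

The severe flag is what would mark the current repetition as incorrect when the person returns to state s1.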

Computing Inactive Times

As mentioned earlier, our application also resets all counters (for correct and incorrect squats) after a period of inactivity. Inactivity is detected when the person remains in the same situation for longer than a threshold T, measured in seconds. We have set T to 15 seconds.

There are three situations when our application encounters inactivity:

- The person faces directly toward the camera (i.e., offset angle > OFFSET_THRESH) for more than T seconds.

- The state of the person remains unchanged for more than T seconds.

- There are no detections for more than T seconds.
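The three inactivity situations can share one timer that restarts whenever the situation changes. This is a minimal sketch, assuming each frame is tagged with a hypothetical situation label (for example "no_detection", "facing_camera", or the current state); the class name InactivityTimer is hypothetical, and the clock is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, Hashable, Optional


class InactivityTimer:
    """Reports when the same situation has persisted longer than thresh_s seconds."""

    def __init__(self, thresh_s: float = 15.0,
                 clock: Callable[[], float] = time.perf_counter) -> None:
        self.thresh_s = thresh_s
        self.clock = clock
        self.situation: Optional[Hashable] = None
        self.start = clock()

    def update(self, situation: Hashable) -> bool:
        """Return True when the counters should be reset."""
        now = self.clock()
        if situation != self.situation:
            # Situation changed: restart the inactivity clock.
            self.situation = situation
            self.start = now
            return False
        if now - self.start > self.thresh_s:
            # Restart so the reset fires once per inactive period.
            self.start = now
            return True
        return False
```

In the main loop, each frame would call update with its situation label, and a True result would reset CORRECT and INCORRECT to zero.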

Test Cases in the AI Fitness Trainer Application

We will demonstrate a few examples that discriminate between perfect and imperfect squats.

Case 1: Perfect Squat is performed

Case 2: Incorrect Squats (with knee falling over toes)

Case 3: Incorrect Squats (cyclic from state s1 to s2 and again s1)

Case 4: Incorrect Squats (deep squats)

Case 5: Frontal View Warning Message

Modes of Squats – Beginner vs. Pro

As discussed earlier, the application can be operated in two modes: Beginner and Pro. As the names suggest, the Pro mode has stricter thresholds than the Beginner mode. We illustrate the differences with an example video.

Scope for Improvements

One of the critical aspects of performing a proper squat is ensuring that the knees do not collapse inward during the squat. This check is possible only when the person faces the camera, which in turn requires a good set of thresholds and the calculation of various angles across the torso.

Therefore, it is better to have multiple camera views at your disposal so that such aspects can be analyzed and refined in further experiments.

We have used MediaPipe’s Pose and leveraged OpenCV and NumPy to build a simple application to analyze squats. We can further improve it with more advanced techniques, such as building a Human Action Recognition system using a CNN-LSTM model trained on a standard dataset. We can also use wearable sensors such as Inertial Measurement Units (IMUs) and perform time-series analysis.

We have a detailed article that compares YOLOv7 and MediaPipe on Human Pose.

Summary

So far, we have showcased how to build a simple application to analyze squats using MediaPipe’s Pose solution. The critical components of the application include the following:

- We calculate the angles of the shoulder-hip, hip-knee, and knee-ankle lines with their corresponding verticals.

- We maintain various states to display appropriate feedback and to distinguish between proper and improper squats.

- We compute periods of inactivity, after which the respective counters are reset.

The application assumes that the person maintains a good side view relative to the camera. If one faces the camera directly, we display an appropriate warning message.

We hope this article gives you enough intuition to build a simple yet consequential application to analyze squats using Human Pose Estimation. We plan to incorporate more exercises in the future.


Must Read Articles

We have built a few exciting applications using MediaPipe. Do read them!

1. Creating Snapchat/Instagram filters using MediaPipe
2. Gesture Control in Zoom Call using MediaPipe
3. Center Stage for Zoom Calls using MediaPipe
4. Drowsy Driver Detection using MediaPipe
