Last year saw tremendous innovation in AI image generation. Diffusion models for image generation, and AI art generation in particular, have taken the world by storm. Beyond the AI and ML community, diffusion models have also found a large audience among digital artists. This has sparked another revolution in creativity and content creation and given rise to numerous AI art generation tools built on diffusion models.

With the huge number of AI art generation tools available today, it is difficult to find the right one for a particular use case. This article surveys the most prominent tools so that you can make an informed choice and generate the best art.

Let’s look at the spectrum of tools available for AI art generation. This includes:

- Official websites/tools by organizations.

- GitHub repositories and Hugging Face Spaces.

- Third-party websites that allow users to search and generate images.

By the end of this article, you will have enough knowledge to choose the right tool and start your journey with AI art generation using diffusion models.

- Official AI Art Generation Tools using Diffusion Models

- Open Source Projects as AI Art Generation Tools

- Websites That Use Diffusion Models as AI Art Generation Tools

- Where Can You Download Diffusion Models?

- Summary

Official AI Art Generation Tools using Diffusion Models

The real wave of AI art generation was started by four organizations. We can attribute much of what followed to these four:

- DALL-E 2 by OpenAI

- Imagen by Google

- Stable Diffusion by StabilityAI

- Midjourney by an organization with the same name

Except for Imagen, all of these models are publicly accessible in some form, whether through a web interface, a Discord bot, or a GitHub repository. Let’s dive into the details and see what each can do and how to use them.

1. DALL-E 2

OpenAI released DALL-E 2 in April 2022. In short, DALL-E 2 can generate realistic images and artwork from natural language prompts.

It has an accompanying research paper, too – Hierarchical Text-Conditional Image Generation with CLIP Latents.

To let the public get their hands on the model, OpenAI made DALL-E 2 accessible through OpenAI Labs.

Although we do not have access to the model itself, we can use the web interface to generate images of our choice. Here is an example.

DALL-E 2 can even generate images in a particular style. For example, with the same prompt as before, we can additionally inform DALL-E 2 to generate the image in the form of an oil painting.

You may experiment with your own prompts and generate images in other formats like watercolor or pencil drawing.

2. Midjourney

Unlike DALL-E 2, Midjourney does not yet offer a web interface for generating images. We can only interact with it using the Midjourney Discord bot.

After creating a Discord account, head over to any of the newbies channels, e.g. newbies-108. Here, you enter prompts using the chat box. First type /imagine, and a prompt box will appear where you can enter your prompt.

Midjourney has a more artistic diffusion model compared to the others. The images that it creates have a very fantasy-like, sci-fi feel. Nonetheless, it generates extremely beautiful images even with very short prompts.

For example, the following images have been created simply by using tiger as the prompt.

However, we can give longer prompts to Midjourney as well and generate even better images. Check out the one below.

On a much lighter note, here are a few images of Geoffrey Hinton, Yann LeCun, and Yoshua Bengio generated by Midjourney in Pixar style.

There are a lot of tools and commands within the Midjourney Discord bot that we can use to become even more productive. These will be the topic of future posts dedicated to Midjourney.

3. Stable Diffusion

Now we come to the most famous diffusion model for AI art generation: Stable Diffusion by Stability.ai. While its out-of-the-box results are not always the best, Stable Diffusion is hugely popular among the AI and artist communities. The reasons are quite simple:

- It is open source, with the pre-trained weight files available to everyone.

- Developers hacked their way into making the fine-tuning process cheap and accessible, so much so that today we can fine-tune the latest version of Stable Diffusion even on a consumer GPU with 6GB of VRAM.

The above two reasons also paved the way for different versions of Stable Diffusion to be trained on a variety of image styles.

But for now, let’s focus on DreamStudio, the official user interface (website) to generate images using Stable Diffusion.

The following is an example of the output after entering a prompt on the DreamStudio website.

As you may observe, the image is good but not great. Stable Diffusion gives the best results with long prompts. At first, it may seem like a disadvantage. But this actually allows us to have more artistic control over the image that we want to generate.

Now, the same prompt but with added art styles generates:

Notice the drastic difference in the image when the prompt provides more detail.

Do try to generate images with your own prompts and notice the difference between the short and descriptive ones.
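One way to make the short-versus-descriptive comparison systematic is to assemble prompts from a subject plus a list of style modifiers. The sketch below is only an illustration: `build_prompt` and the example subject and modifiers are hypothetical names chosen here, not part of DreamStudio or Stable Diffusion.

```python
def build_prompt(subject, modifiers=()):
    """Join a subject and optional style modifiers into one comma-separated prompt."""
    # Strip whitespace and drop empty entries so the prompt stays clean.
    parts = [subject.strip()] + [m.strip() for m in modifiers if m.strip()]
    return ", ".join(parts)


short_prompt = build_prompt("a lighthouse on a cliff")
detailed_prompt = build_prompt(
    "a lighthouse on a cliff",
    ["oil painting", "dramatic lighting", "highly detailed"],
)
print(short_prompt)
print(detailed_prompt)
```

Pasting both strings into the same tool, with all other settings unchanged, makes it easier to see what each added modifier contributes.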

Open Source Projects as AI Art Generation Tools

Following the success of diffusion models for image generation, the open source community has been busy making them accessible to everyone.

We have a host of open source projects and models based on Diffusion models today. And most of them use Stable Diffusion because of its open source nature.

Most of the tools we will discuss here are GitHub repositories or Hugging Face Spaces, which are accessible to everyone.

4. Stable Diffusion WebUI (AUTOMATIC1111)

The Stable Diffusion WebUI (AUTOMATIC1111/stable-diffusion-webui) is one of the most popular Stable Diffusion repositories on GitHub.

The project provides a web-based user interface for almost any Stable Diffusion model. We just need to clone the repository and put the appropriate model inside the models/Stable-diffusion folder.

Then we can launch the app from the terminal using the following command:

python launch.py

This will give us a localhost URL.

We can also generate a shareable link using the following command.

python launch.py --share

This is particularly helpful when executing the code in Google Colab or any other cloud environment.
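When working from a notebook, the setup steps above (placing a checkpoint in models/Stable-diffusion, then launching with or without --share) can be wrapped in two small helpers. This is a minimal sketch under stated assumptions: `install_checkpoint` and `launch_command` are hypothetical names introduced here, and the paths passed to them depend on where you cloned the repository and saved the model.

```python
import shutil
from pathlib import Path


def install_checkpoint(checkpoint_file, webui_dir):
    """Copy a downloaded checkpoint into the WebUI's models/Stable-diffusion folder."""
    target_dir = Path(webui_dir) / "models" / "Stable-diffusion"
    # Create the folder if it is missing, e.g. on a fresh clone.
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / Path(checkpoint_file).name
    shutil.copy2(checkpoint_file, target)
    return target


def launch_command(share=False):
    """Build the launch command; --share asks for a shareable link."""
    command = ["python", "launch.py"]
    if share:
        command.append("--share")
    return command
```

The resulting list could then be passed to `subprocess.run(launch_command(share=True), cwd=webui_dir)`, where `webui_dir` is the cloned repository folder.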

Opening the URL will pop up the following window.

We will use the Stable Diffusion 2.1 checkpoint to generate images here. The first thing that catches the eye is the txt2img tab and the large prompt box.

Let’s input a prompt and check the output with all other settings set to default.

Isn’t it a nice-looking image? Better still, we can improve it by increasing the number of sampling steps. This sets how many denoising iterations the sampler runs to turn the initial random noise into the final image.

Note: More steps will result in longer run times but generally produce improved results.

The result is much better this time. The image is more detailed and clearer as well.
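For readers working in code rather than the WebUI, the diffusers library exposes the same knob as the `num_inference_steps` argument of the pipeline call. The sketch below assumes a pipeline object `pipe` that has already been loaded (for example a `StableDiffusionPipeline`); the helper name `compare_steps` and the step counts are illustrative choices, not WebUI defaults.

```python
def compare_steps(pipe, prompt, step_counts=(20, 50)):
    """Generate one image per step count so the results can be compared."""
    images = {}
    for steps in step_counts:
        # num_inference_steps sets how many denoising iterations are run.
        result = pipe(prompt, num_inference_steps=steps)
        images[steps] = result.images[0]
    return images
```

Each call starts from fresh random noise, so the images may differ in composition as well as in detail unless a seed is fixed.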

That’s not all that Stable Diffusion WebUI has to offer. As you may already have seen, it has a bunch of other tabs as well. A few more functionalities of the WebUI are:

- Img2Img conversion for image inpainting and image outpainting.

- Support for image super-resolution.

- Control over the strength of the text prompt.

- Generating multiple images with different parameters using a single generation run.

- Support for InstructPix2Pix, which edits an existing image by following a text instruction.

- And much more…
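The idea of sweeping several parameters in a single run can be sketched outside the WebUI as well. The snippet below builds every combination of step count and prompt strength. The keys `num_inference_steps` and `guidance_scale` follow the argument names used by the diffusers pipelines, while `parameter_grid` and the specific values are assumptions made for illustration and do not reflect how the WebUI implements this feature.

```python
from itertools import product


def parameter_grid(step_counts, guidance_scales):
    """Return one settings dict per (steps, guidance scale) combination."""
    return [
        {"num_inference_steps": steps, "guidance_scale": scale}
        for steps, scale in product(step_counts, guidance_scales)
    ]


grid = parameter_grid([20, 40], [5.0, 7.5, 10.0])
print(len(grid))  # 2 step counts x 3 scales = 6 settings
```

Each dict could then be unpacked into a pipeline call such as `pipe(prompt, **settings)` to produce one image per combination.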

Are you intrigued, or perhaps overwhelmed, by the options available for generating images using Stable Diffusion? We are sure you are. We will be launching a course that aims to make you a master of AI art generation. If this piques your curiosity, wait no further and check out the Kickstarter “Mastering AI art generation”.

5. OpenJourney

OpenJourney (by PromptHero) is a modified version of Stable Diffusion 1.5. It has been trained on images generated by Midjourney v4 to make the outputs more artistic.

The easiest way to use OpenJourney is by using the diffusers library. We can easily do so in a Google Colab environment with a few lines of code.

But we need to include the words mdjrny-v4 style in the prompt to tell the model to generate images in Midjourney style.

We have some additional requirements as well. We need to install the diffusers and transformers libraries.

Here is a complete example for any Jupyter notebook environment.

!pip install diffusers transformers

from diffusers import StableDiffusionPipeline

import torch

# Load the OpenJourney weights from the Hugging Face Hub in half precision.

model_id = "prompthero/openjourney"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

# Move the pipeline to the GPU.

pipe = pipe.to("cuda")

# The trailing "mdjrny-v4 style" phrase asks for the Midjourney look.

prompt = "enraged warrior, monsterlike armor, living armor, full body portrait, organic armor, high detail, intricate detail, mdjrny-v4 style"

# Generate one image and save it to disk.

image = pipe(prompt).images[0]

image.save("./image.png")

The prompt was lengthy (indicative that it is still a Stable Diffusion model), but the output is outstanding. If you wish, you may play around and generate a few more images.
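Since the `mdjrny-v4 style` phrase is easy to forget when experimenting with many prompts, a small helper can add it automatically. This is a sketch only: `with_trigger` is a hypothetical name introduced here and is not part of diffusers or OpenJourney.

```python
TRIGGER = "mdjrny-v4 style"


def with_trigger(prompt, trigger=TRIGGER):
    """Append the style trigger phrase unless the prompt already contains it."""
    if trigger.lower() in prompt.lower():
        return prompt
    # Remove trailing spaces and commas before appending the phrase.
    return f"{prompt.rstrip(' ,')}, {trigger}"


print(with_trigger("a tiger in the snow"))
```

The returned string can be passed to the pipeline in place of a hand-written prompt.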

Websites That Use Diffusion Models as AI Art Generation Tools

Which websites support and use diffusion models for AI art? The websites we mention here let users either generate images using diffusion models or search for images generated using diffusion models.

6. Playgroundai

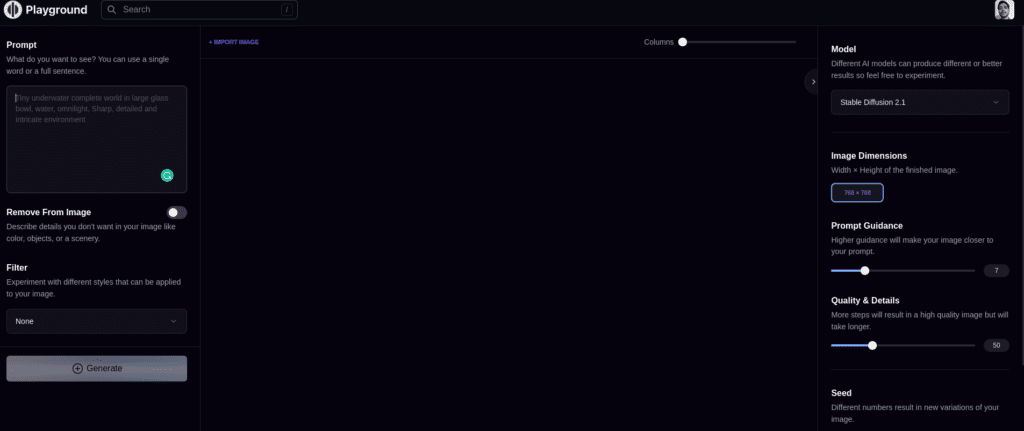

Playgroundai is a website that allows users to search and generate images using different diffusion-based models.

Users can generate 1000 images per day for free when using Stable Diffusion 1.5 and 2.1. They also have a paid tier that provides access to the DALL-E 2 model.

After creating an account on the site, you can click on the Create button in the top right corner. This will redirect you to the following page, where you can enter your prompt and generate images.

On this page, you can enter the prompt in the text box and click on the Generate button. Here is an example using Stable Diffusion 1.5 on the Playgroundai website.

You can also choose different options from the left tab. Some of them are:

- Switching between Stable Diffusion 1.5 and 2.1 (for the free tier)

- Changing the resolution of the image that you want to generate

- Number of images to generate

Playgroundai is a good website to start your journey with diffusion models for image generation. 1000 images per day in the free tier will allow you to play around and experiment as much as you want.

7. Prompthero

Prompthero is another website that allows us to browse through images and also generate images using different models: not just Stable Diffusion but also DALL-E, Midjourney, and Openjourney, among others.

Further, it even allows you to search images related to different topics such as food, architecture, anime, and much more.

Once you create an account on the website, you can click the Create button to generate images, after which you will be greeted with this page.

The controls may seem simple compared to Playgroundai, but the choice of models is vast here. We can choose between different versions of Stable Diffusion, Arcane Diffusion, Openjourney, and Modern Disney Diffusion to name a few.

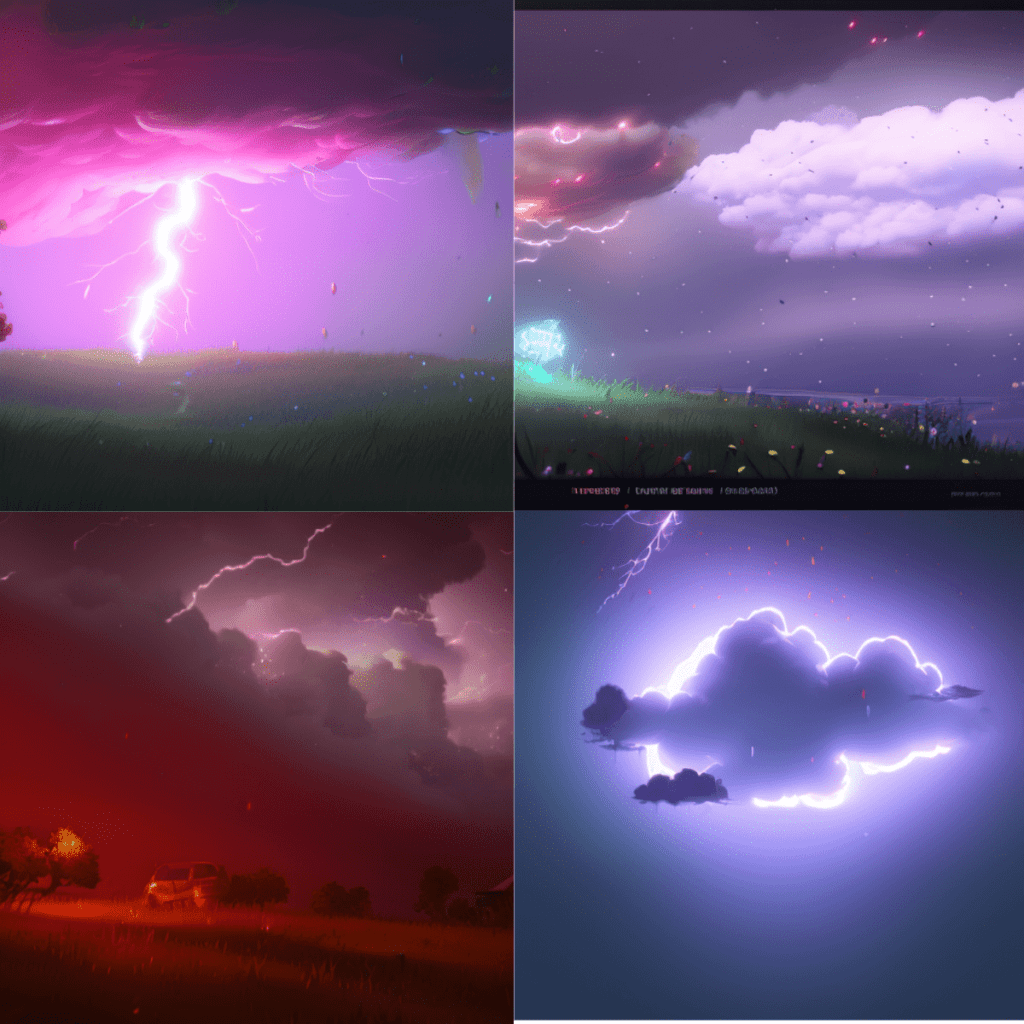

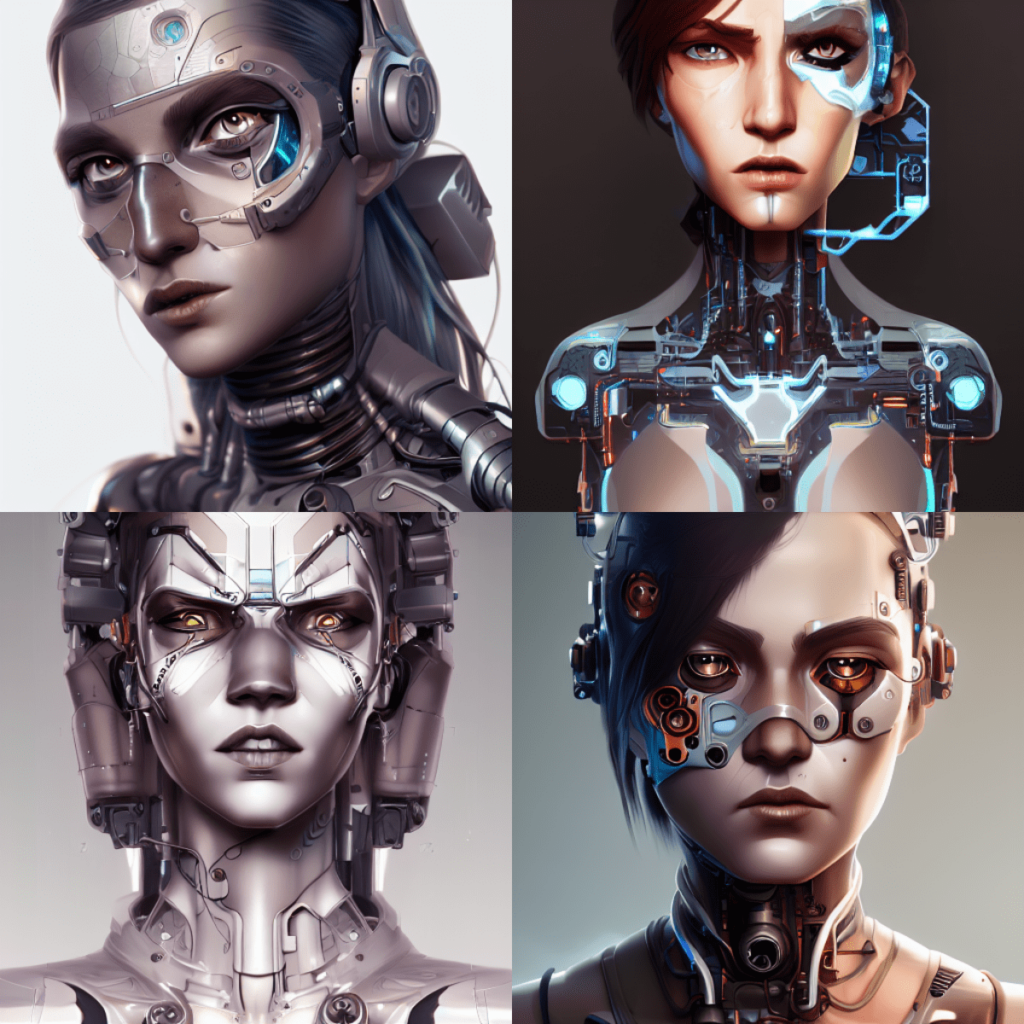

The following figure shows an image generated using the Arcane Diffusion model.

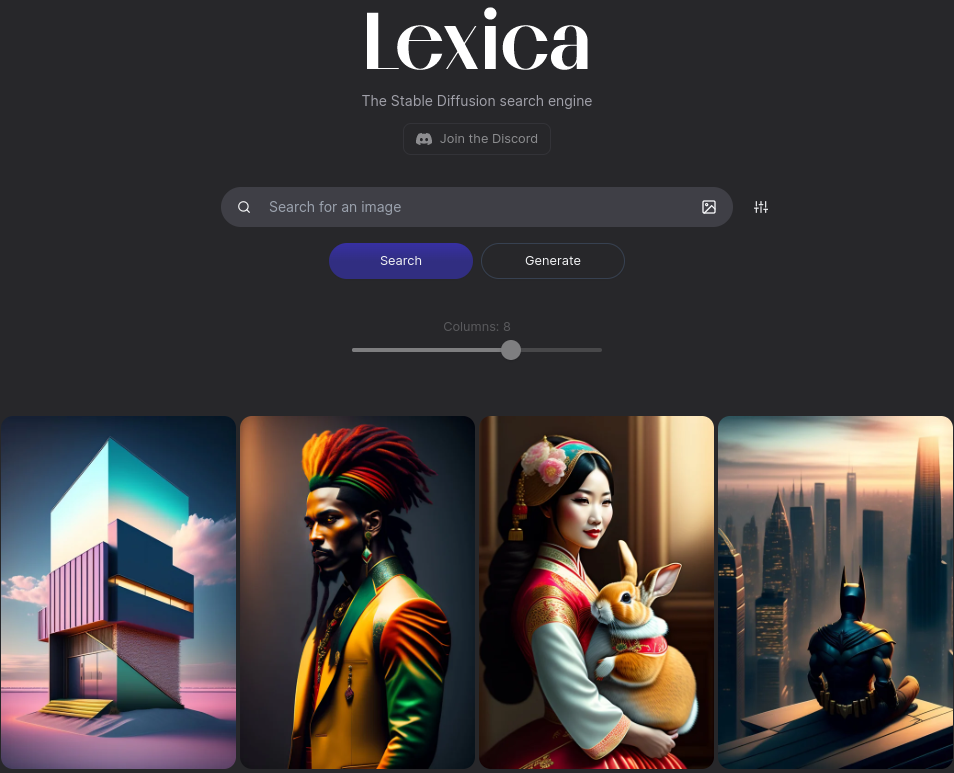

8. Lexica

Lexica.art is another website where we can search through and generate images using diffusion models.

As you see in the above figure, the images look quite different (artistic and colorful) compared to other diffusion models. This is because Lexica uses its own fine-tuned model called Lexica Aperture V2 for generating images.

Lexica provides a few useful tools to work with the images available on the website. For example, we can edit an image in the in-built editor, explore a particular image style, or even upload an image to search for similar images.

For now, we will focus on generating images on the website.

You may find the settings in the image generation tab limited when compared to the previous two options. But as you can see, the images come out amazingly beautiful.

Where Can You Download Diffusion Models?

In the previous sections, we discussed various websites and tools for generating images and AI art using diffusion models.

While using any of the above websites does not require downloading the pre-trained models separately, using a tool like stable-diffusion-webui does. This means that we need to know a few places (websites) that we can rely on to download the models.

Note: Most of the models here will be Stable Diffusion models or their variations as they are the only ones that are open source.

9. Hugging Face

The AI community has been hard at work since the first ever Stable Diffusion model came out. Along with the official repositories on Hugging Face, here are some other well known repositories that we can rely on to download the models.

CompVis

The stable-diffusion-v-1-4-original repository by CompVis provides the official Stable Diffusion 1.4 checkpoints.

These include sd-v1-4.ckpt (suitable for inference) and sd-v1-4-full-ema.ckpt (suitable for fine-tuning).

RunwayML

The stable-diffusion-v1-5 repository by RunwayML provides the Stable Diffusion 1.5 models.

Just like the CompVis one, we have v1-5-pruned-emaonly.ckpt and v1-5-pruned.ckpt. The former contains only the EMA weights and is suitable for inference, while the latter is suitable for fine-tuning.
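The checkpoint files named above can also be fetched programmatically with `hf_hub_download` from the huggingface_hub library. The sketch below rests on several assumptions: the repository ids are inferred from the repository names in the text and may have moved or been removed, some repositories may require accepting a license and logging in first, and `CHECKPOINTS` and `download_checkpoint` are hypothetical names. Following the upstream model cards, the EMA-only file is treated as the inference checkpoint.

```python
# (version, purpose) -> (assumed repository id, checkpoint file name)
CHECKPOINTS = {
    ("1.4", "inference"): ("CompVis/stable-diffusion-v-1-4-original", "sd-v1-4.ckpt"),
    ("1.4", "fine-tuning"): ("CompVis/stable-diffusion-v-1-4-original", "sd-v1-4-full-ema.ckpt"),
    ("1.5", "inference"): ("runwayml/stable-diffusion-v1-5", "v1-5-pruned-emaonly.ckpt"),
    ("1.5", "fine-tuning"): ("runwayml/stable-diffusion-v1-5", "v1-5-pruned.ckpt"),
}


def download_checkpoint(version, purpose):
    """Download the checkpoint for a version and purpose; returns the local path."""
    # Imported lazily so the lookup table works without the library installed.
    from huggingface_hub import hf_hub_download

    repo_id, filename = CHECKPOINTS[(version, purpose)]
    return hf_hub_download(repo_id=repo_id, filename=filename)
```

A call such as `download_checkpoint("1.4", "inference")` would return the path of the cached file, which can then be copied into the WebUI's models/Stable-diffusion folder.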

StabilityAI

StabilityAI (creator of Stable Diffusion) has two main repositories on Hugging Face to download the models. These include the Stable Diffusion 2 base model and the Stable Diffusion 2.1 model.

Stable Diffusion v2.1 has been fine-tuned using Stable Diffusion version 2 as the base model. Also, it has been trained on 768×768 resolution images instead of the default 512×512 images.

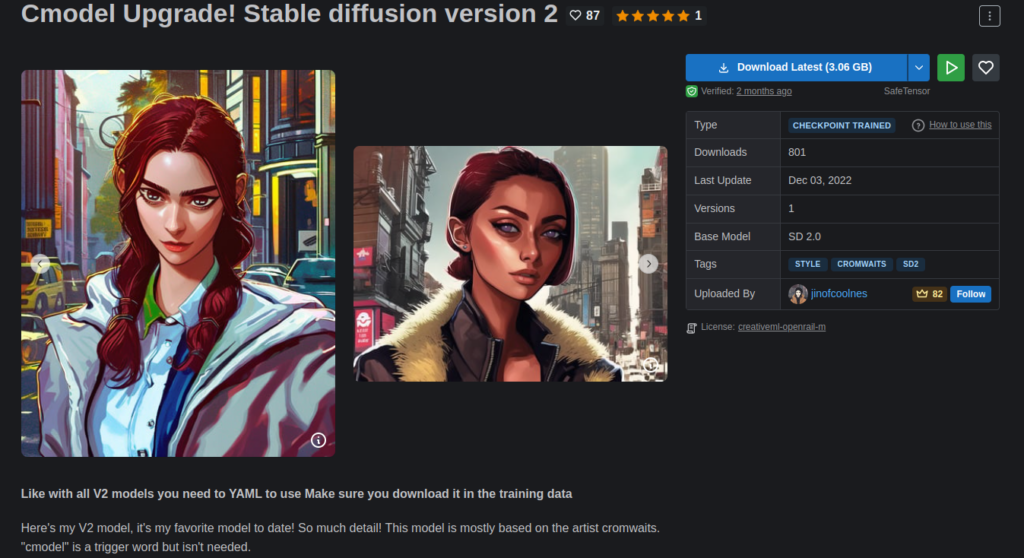

10. Civitai

Civitai is a unique website in terms of accessibility for diffusion models. Civitai mainly focuses on making the generated images and checkpoints accessible to the users.

It provides fine-tuned Stable Diffusion checkpoints for different art styles like Anime, Video Games, Celebrity, and many more.

As of writing this, Civitai is still under active development and some of the art styles may not contain any checkpoints. It may soon provide them as more people fine-tune the checkpoints and upload them on the site.

Bonus Website

Bing Image Creator

Bing recently integrated the OpenAI DALL-E model into Bing Image Creator, a website for generating images.

Although the website is still in Preview mode, after creating an account, we get 25 boosts (to generate images faster) using the DALL-E model.

In fact, it is pretty simple to use compared to the other websites on this list. We just have one model to deal with and a good prompt to generate images of our choice.

Let’s try to create a concept of a new shoe style using Bing Image Creator.

As this uses the DALL-E model, we get good image generations even with simple prompts.

Summary

We covered a lot in this article in terms of accessing Stable Diffusion models and websites. We started with discussing official models and websites, moved on to open-source repositories, and finished with accessing pretrained Stable Diffusion models.

Experimenting with these is a lot of fun, and fine-tuning the Stable Diffusion models on your own images and art style can generate interesting results. In fact, you can train your own models or generate your own art and upload them to websites like Civitai.

The current diffusion models are likely to become the tools of the future for image generation. If you are a content creator or digital artist, you can now greatly reduce the time from ideation to execution of a new concept. Rather than fearing the capabilities of these models, embracing their power and using them in the right way will reap far more benefits.

If you carry out your own experiments, let us know in the comment section. We would love to hear from you about it.