Picture this: Dr. Aris, a radiologist with a decade of experience, is staring at his screen. The stack of digital files, chest X-rays, and CT scans seems endless. Each image holds a story, a clue to a patient’s health, but the sheer volume is overwhelming. He sips his now-cold coffee, wishing he had a super-sharp assistant who could help him sort through the noise, highlight potential areas of concern, and even draft preliminary findings. It’s not about replacing his expertise but augmenting it, giving him more time to focus on the most complex cases that truly need his seasoned eye. This isn’t just Dr. Aris’s story; it’s a daily reality in clinics and hospitals worldwide. What if we could give our healthcare heroes that AI assistant they dream of? That’s where MedGemma comes into play!

Built on Google’s Gemma 3 models, MedGemma is a family of specialized models, including a Vision-Language Model (VLM), designed to understand and process the unique language and visuals of the medical world. It’s like a brilliant medical student, trained on vast amounts of clinical data, ready to assist with tasks from interpreting X-rays to answering complex medical questions.

In this article, we will explore:

- The DNA of MedGemma: We’ll look at what MedGemma is, its different versions, and how it inherits its intelligence from the robust Gemma 3 architecture.

- MedGemma in Action: We’ll uncover the exciting real-world applications, from analyzing pathology slides to helping doctors with clinical decisions.

- Bringing MedGemma to Life: We will walk through the code, step by step, to see how you can get started, and point you to resources for fine-tuning the model for your own specific tasks.

- Peeking at Performance: We’ll touch upon how the model performs and what makes it a powerful tool for developers.

Now, that’s exciting, right? So, grab a cup of coffee, and let’s dive in!

- Introduction to MedGemma

- The Architectural Blueprint: How MedGemma Thinks

- Real-World Impact: The Many Hats of MedGemma

- Code Pipeline

- Inference

- Try the Demo – MedGemma in Action

- Medical Disclaimer

- Quick Recap

- Conclusion

- References

Note: The primary goal of this blog post is to demonstrate the MedGemma model and its possible use cases. The examples serve strictly to illustrate these use cases on challenging medical data, using open-source models and data for research purposes. There is absolutely no intention to build or propose a functional AI radiologist, clinical expert, or medical assistant. Any use of terms like ‘AI assistant’ is purely metaphorical, employed only to create a relatable analogy and provide an intuitive understanding of the model’s behavior, not to suggest any real-world clinical capability.

Introduction to MedGemma

So, what exactly is this AI assistant we’ve been talking about? At its core, MedGemma is a specialized family of models derived from Google’s powerful and open Gemma 3. Think of Gemma 3 as a brilliant, versatile graduate who excels in many fields, from writing poetry to solving complex math problems. MedGemma is the same graduate who has gone on to medical school, immersing herself in clinical knowledge to become a specialist.

It comes in two primary variants, each tailored for different needs:

- MedGemma 4B: A 4-billion-parameter multimodal model. This is the version that can both see and read. It’s designed to understand medical images, like X-rays and pathology slides, alongside text.

- MedGemma 27B: A 27-billion-parameter text-only model. This is a deep linguistic expert, perfect for tasks that require an in-depth understanding of complex medical literature, patient records, and clinical notes.

MedGemma 4B is available in both pre-trained (suffix: -pt) and instruction-tuned (suffix: -it) versions. The instruction-tuned version is a better starting point for most applications, while the pre-trained version is available for those who want to experiment more deeply with the models. MedGemma 27B has been trained exclusively on medical text and optimized for inference-time computation, and it is only available as an instruction-tuned model.

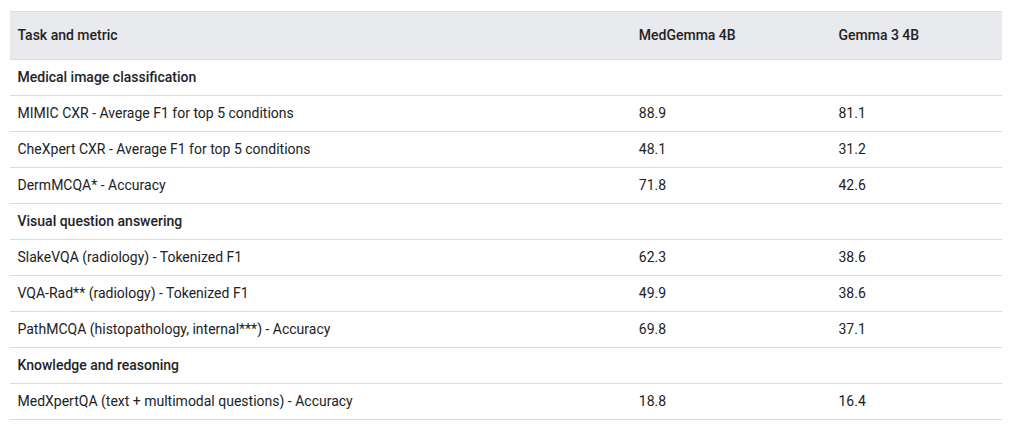

MedGemma variants have been evaluated on clinically relevant benchmarks (both multimodal and text-only) to illustrate their baseline performance. MedGemma 4B outperforms the base Gemma 3 4B model across all tested multimodal health benchmarks.

Also, MedGemma models outperform their respective base Gemma models across all tested text-only health benchmarks.

Now let’s move to the core architecture and explore how MedGemma works under the hood!


The Architectural Blueprint: How MedGemma Thinks

To understand MedGemma’s power, we need to examine its Gemma 3 foundation. This isn’t just another language model; it features clever architectural designs that make it highly efficient and capable.

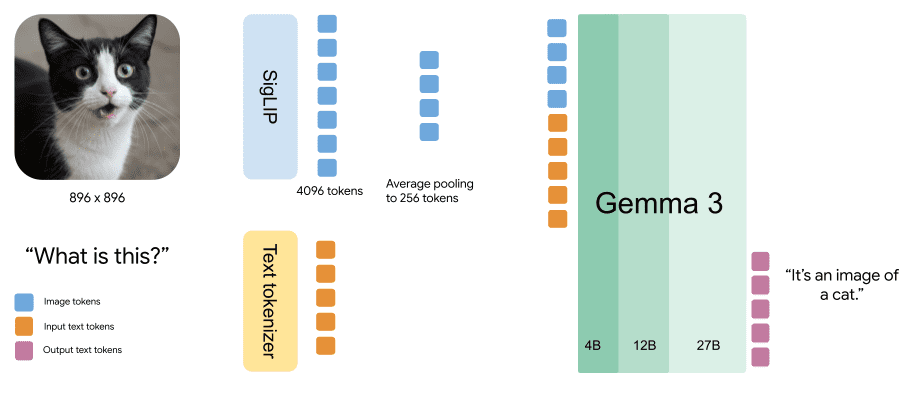

Specialized Vision: The Medically-Tuned SigLIP Encoder

The multimodal MedGemma 4B uses a SigLIP vision encoder. When you show it an image, the model doesn’t see pixels the way we do. Instead, the SigLIP encoder translates the image into a representation the language model understands: a sequence of “soft tokens.” What’s particularly special here is that this vision encoder was specifically pre-trained on a vast library of de-identified medical data, including chest X-rays, dermatology photos, and pathology slides.
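To make the idea of “soft tokens” concrete, here is a minimal NumPy sketch of the shape bookkeeping, assuming the Gemma 3 defaults of an 896×896 input image, 14×14-pixel patches, and 256 soft tokens per image. A random matrix stands in for the real SigLIP transformer (which we do not reproduce), and EMBED_DIM is a toy width chosen only for illustration. The final step average-pools the 64×64 patch grid down to a 16×16 grid, which is one way to condense the patches into a fixed budget of 256 tokens.

```python
import numpy as np

# Assumed Gemma 3 defaults; the real encoder is much wider than EMBED_DIM.
IMAGE_SIZE = 896   # input images are resized to 896x896
PATCH = 14         # each patch covers 14x14 pixels -> a 64x64 grid of patches
NUM_TOKENS = 256   # soft tokens per image -> a 16x16 grid
EMBED_DIM = 32     # toy embedding width for illustration


def patchify(image: np.ndarray, patch: int = PATCH) -> np.ndarray:
    """Split an (H, W, C) image into a grid of flattened, non-overlapping patches."""
    h, w, c = image.shape
    gh, gw = h // patch, w // patch
    patches = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return patches.reshape(gh, gw, patch * patch * c)


def to_soft_tokens(image: np.ndarray, proj: np.ndarray) -> np.ndarray:
    """Embed patches (random stand-in for SigLIP), then average-pool to NUM_TOKENS."""
    grid = patchify(image) @ proj                 # (64, 64, EMBED_DIM)
    side = int(NUM_TOKENS ** 0.5)                 # 16 tokens per side
    k = grid.shape[0] // side                     # pool 4x4 blocks of patches
    pooled = grid.reshape(side, k, side, k, -1).mean(axis=(1, 3))
    return pooled.reshape(NUM_TOKENS, -1)         # (256, EMBED_DIM)


rng = np.random.default_rng(0)
image = rng.random((IMAGE_SIZE, IMAGE_SIZE, 3))            # fake RGB image in [0, 1)
proj = rng.normal(size=(PATCH * PATCH * 3, EMBED_DIM))     # stand-in for the encoder
tokens = to_soft_tokens(image, proj)
print(tokens.shape)  # (256, 32)
```

However large the original X-ray is, the language model receives the same fixed number of image tokens, which keeps the cost of each image predictable.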

Furthermore, it can use a clever Pan & Scan (P&S) method at inference time. If an image is large or has an unusual aspect ratio, P&S splits it into smaller, non-overlapping crops and encodes each one, so the model can combine information across them. This helps preserve fine details, whether it’s a tiny anomaly on a large X-ray or text on a lab report.
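The crop logic can be sketched in a few lines of plain Python. This is a simplified illustration, not Gemma 3’s actual image processor: the thresholds (min_ratio, min_crop, max_crops) are illustrative assumptions, and in the real pipeline each crop would then be resized to the encoder’s input size and encoded separately.

```python
import math


def pan_and_scan_boxes(width, height, min_ratio=1.2, min_crop=256, max_crops=4):
    """Return (left, top, right, bottom) crop boxes along the image's long side.

    An empty list means "no extra crops": the image is close enough to square,
    or the crops would be too small to be useful.
    """
    long_side, short_side = max(width, height), min(width, height)
    if long_side / short_side < min_ratio:
        return []
    n = int(math.floor(long_side / short_side + 0.5))  # roughly match the aspect ratio
    n = min(n, long_side // min_crop)                   # avoid crops narrower than min_crop
    n = max(2, min(max_crops, n))                       # at least 2, at most max_crops
    crop = math.ceil(long_side / n)
    if min(crop, short_side) < min_crop:
        return []
    boxes = []
    for idx in range(n):
        start, end = idx * crop, min((idx + 1) * crop, long_side)
        if width >= height:                             # landscape: crop left to right
            boxes.append((start, 0, end, height))
        else:                                           # portrait: crop top to bottom
            boxes.append((0, start, width, end))
    return boxes


print(pan_and_scan_boxes(2000, 500))  # four 500x500 crops side by side
print(pan_and_scan_boxes(1000, 900))  # [] -> close to square, no crops
```

For a wide 2000×500 image this returns four side-by-side 500×500 boxes, while a near-square image returns an empty list and is encoded as a single view.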

A Decoder-Only Transformer Foundation

The models are built on a decoder-only transformer architecture, which is well suited to generative tasks like writing text or answering questions. But the real magic lies in the details.
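“Decoder-only” means every token may attend only to itself and the tokens before it. The minimal single-head NumPy sketch below shows that causal mask; the optional window argument additionally restricts each token to its most recent neighbors, which is the idea behind the local attention layers discussed next. Weight matrices and sizes are arbitrary placeholders, not MedGemma’s parameters.

```python
import numpy as np


def causal_self_attention(x, w_q, w_k, w_v, window=None):
    """Single-head causal self-attention over a (seq_len, d_model) input.

    If `window` is given, each token also ignores tokens more than
    `window - 1` positions in the past (sliding-window / local attention).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    n, d = q.shape
    scores = q @ k.T / np.sqrt(d)
    i = np.arange(n)[:, None]                 # query positions
    j = np.arange(n)[None, :]                 # key positions
    allowed = j <= i                          # causal: no looking at future tokens
    if window is not None:
        allowed = allowed & (i - j < window)  # local: only the most recent tokens
    scores = np.where(allowed, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)                  # masked positions become exactly 0
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(6, 8))                   # 6 tokens, model width 8
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = causal_self_attention(x, w_q, w_k, w_v)
print(np.round(weights, 2))                   # lower-triangular attention pattern
```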

Solving the Memory Puzzle with Efficient Attention

One of the biggest bottlenecks for LLMs and VLMs is managing long-term “memory.” In a standard transformer, every layer attends to every previous token, so the key-value (KV) cache, which stores the keys and values of all tokens seen so far, grows with the length of the input in every layer. When processing a lengthy document, like a complete patient history, this cache can take up a large share of GPU memory; this is known as the KV-cache problem. Think of it like trying to hold every detail of a 500-page novel in your mind at once to understand the current page: it’s exhausting and inefficient.

Gemma 3, and thus MedGemma, addresses the long-context challenge with a combination of Grouped-Query Attention (GQA) and a hybrid local-global attention architecture. GQA reduces memory usage by letting multiple query heads share a smaller number of key-value heads, which shrinks the KV cache with little impact on model quality. In addition, MedGemma mimics how a human reads: focusing deeply on local text (like a paragraph) while periodically considering the overall structure (like chapter headings). It does this with a 5:1 ratio of local-to-global attention layers: five local layers attend only to a short sliding window (1024 tokens), while one global layer in every six attends to the full context (up to 128K tokens). Because only the global layers must keep keys and values for the entire context, the model maintains global understanding while staying computationally efficient, making it practical to run on standard developer hardware.
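A back-of-the-envelope calculation shows why these two tricks matter. The sketch below estimates the KV-cache size for a single sequence. The configuration values (34 layers, 8 query heads, 4 key-value heads, head dimension 256, bfloat16 storage, a 1024-token local window, and every sixth layer global) are assumptions in the range of a 4B-class Gemma 3 model; check the model’s config.json for the exact numbers.

```python
def layer_types(n_layers, local_per_global=5):
    """Repeat [local] * local_per_global + [global]; e.g. every 6th layer is global."""
    period = local_per_global + 1
    return ["global" if (i + 1) % period == 0 else "local" for i in range(n_layers)]


def kv_cache_gib(seq_len, n_layers=34, n_kv_heads=4, head_dim=256,
                 bytes_per_value=2, window=None, local_per_global=5):
    """Approximate KV-cache size (GiB) for one sequence.

    window=None models full attention in every layer; otherwise local layers
    keep only the last `window` tokens while global layers keep all of them.
    """
    bytes_per_token_per_layer = 2 * n_kv_heads * head_dim * bytes_per_value  # keys + values
    if window is None:
        cached = [seq_len] * n_layers
    else:
        cached = [seq_len if kind == "global" else min(seq_len, window)
                  for kind in layer_types(n_layers, local_per_global)]
    return sum(cached) * bytes_per_token_per_layer / 2**30


CONTEXT = 128 * 1024  # a 128K-token context
# Plain multi-head attention: one KV head per query head (8 assumed here)
print(f"MHA, all global layers: {kv_cache_gib(CONTEXT, n_kv_heads=8):.2f} GiB")
print(f"GQA, all global layers: {kv_cache_gib(CONTEXT):.2f} GiB")
print(f"GQA + 5:1 local/global: {kv_cache_gib(CONTEXT, window=1024):.2f} GiB")
```

With these assumed values, the three lines come out to about 34 GiB, 17 GiB, and 2.6 GiB at a 128K-token context: GQA halves the cache, and the local layers shrink it much further because they only keep the last 1024 tokens.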

Real-World Impact: The Many Hats of MedGemma

Now for the exciting part: what can MedGemma actually do in a clinical setting? Its capabilities are broad, making it a versatile tool for developers building the next generation of medical AI applications.

Multimodal Medical Imaging Analysis

- Leveraging the 4B variant, MedGemma can interpret complex medical imagery in conjunction with textual prompts. In a pathology workflow, it can perform fine-grained classification of histopathology slides, distinguishing between tissue types such as “normal colon mucosa” and “colorectal adenocarcinoma epithelium.” Beyond simple classification, it can also act as a descriptive engine.

- For radiological assessments, it can analyze a chest X-ray and generate a structured preliminary report, such as: “Findings suggest an opacity in the left lower lung lobe, consistent with potential pneumonia. The cardiomediastinal silhouette and pulmonary vasculature are within normal limits.” Such a draft, reviewed and validated by an expert clinician, can help streamline the reporting workflow.

Advanced Clinical Text Understanding and Synthesis

- The 27B text-only variant is well suited to natural language understanding tasks involving complex medical text. For clinicians and researchers, it can serve as an advanced query engine, synthesizing what it has learned from medical literature. A query like, “What are the current evidence-based treatment guidelines for type 2 diabetes in patients with comorbid chronic kidney disease?” can return a concise summary that shortens manual review, although any guidelines or references it cites should be checked against the original sources.

- Furthermore, in clinical practice, it can parse lengthy electronic health records (EHRs) and condense them into succinct summaries that highlight key events, diagnoses, and treatments. This can improve the efficiency and safety of patient handoffs between shifts or care teams.

Intelligent Patient Intake and Triage

MedGemma can power websites or applications designed for initial patient engagement. Deployed in a patient portal or as a preliminary triage tool, it can guide individuals through a dynamic, context-aware series of questions based on their stated symptoms. For instance, a patient reporting a “cough” would be prompted for details such as its duration, its nature (e.g., dry or productive), and associated symptoms like fever or dyspnea. The primary benefit is the automated gathering of structured, clinically relevant preliminary data.

Augmented Clinical Decision Support Systems (CDSS)

While MedGemma is not a standalone diagnostic tool, its most profound potential may lie in its integration into larger Clinical Decision Support Systems. In this capacity, it acts as an analytical engine that processes longitudinal patient data from the EHR. By analyzing the latest lab results, imaging reports, and physicians’ notes together, such a system could flag potential risks requiring expert attention. It could also support trend analysis of vital signs or lab values, surfacing subtle deteriorations that might otherwise go unnoticed. Used as an augmentation tool, MedGemma can add a layer of safety and support for healthcare providers without replacing their judgment.
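For the pathology classification use case above, one practical pattern is to constrain the answer to a fixed list of labels and then map the model’s free-text reply back to one of them. The helpers below are a hypothetical sketch, not part of MedGemma or any library: build_classification_messages produces the same chat format used in the code section of this article, and parse_label returns None when the reply is ambiguous. Only two example labels are listed; a real dataset would have its own label set.

```python
import re

# Example labels from the use case above; replace with your own dataset's classes.
TISSUE_CLASSES = ["normal colon mucosa", "colorectal adenocarcinoma epithelium"]


def build_classification_messages(image, labels):
    """Hypothetical helper: ask for exactly one numbered option for `image`."""
    options = "\n".join(f"{i}: {label}" for i, label in enumerate(labels))
    prompt = ("Classify this histopathology image. Reply with the number of "
              f"exactly one option and nothing else.\n{options}")
    return [
        {"role": "system",
         "content": [{"type": "text", "text": "You are an expert pathologist."}]},
        {"role": "user",
         "content": [{"type": "text", "text": prompt},
                     {"type": "image", "image": image}]},
    ]


def parse_label(reply, labels):
    """Hypothetical helper: map a free-text reply to a label, or None if unclear."""
    number = re.search(r"\d+", reply)
    if number and int(number.group()) < len(labels):
        return labels[int(number.group())]
    matches = [label for label in labels if label.lower() in reply.lower()]
    return matches[0] if len(matches) == 1 else None


print(parse_label("1", TISSUE_CLASSES))             # colorectal adenocarcinoma epithelium
print(parse_label("Maybe both?", TISSUE_CLASSES))   # None
```

In practice you would pass the messages to the image-text-to-text pipeline, read output[0]["generated_text"][-1]["content"], and feed that string to parse_label.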

MedGemma provides the foundational blocks for these applications, offering developers a powerful, specialized, and efficient tool to start building with.

Code Pipeline

We’ve seen what MedGemma can do, so let’s get our hands dirty and see how it works in practice. This section will guide you through the code, from running your first simple query to the options for fine-tuning the model for a custom task. We’ll be using the Hugging Face ecosystem, which provides a fantastic set of tools that make working with large models like MedGemma surprisingly straightforward.

To make your journey even easier, all the Python scripts and implementation details we discuss are available for you to experiment with. Just hit the Download Code button to get everything you need to follow along.

1. Getting Started: Running Your First Inference

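Before we dive into the complexities of training, let’s start with something simple: asking the model a question. This is a great way to get a feel for how it behaves. The first step is always to set up our environment and install the necessary libraries. Note that MedGemma is a gated model on Hugging Face, so you need to accept its terms of use on the model page and authenticate (for example, with huggingface-cli login) before the weights can be downloaded.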

```bash
# In Colab/Jupyter, run this as "!pip install ..."
pip install --upgrade --quiet accelerate bitsandbytes transformers
```

Next, we need to choose which MedGemma variant we want to use. For this example, we’ll use the 4b-it (4 billion parameter, instruction-tuned) model, which is great for tasks involving both images and text. We’ll also use quantization to make the model lighter on our GPU memory.

```python
import torch
from transformers import BitsAndBytesConfig

model_variant = "4b-it"
model_id = f"google/medgemma-{model_variant}"

# Use 4-bit quantization to reduce memory usage
model_kwargs = dict(
    torch_dtype=torch.bfloat16,
    device_map="auto",
    quantization_config=BitsAndBytesConfig(load_in_4bit=True),
)
```

Now, let’s give the model a task. We’ll provide it with a chest X-ray and ask it to describe what it sees. We need to structure our input as a conversation, giving the model a “role” and a clear prompt. This helps the instruction-tuned model understand its task better.

```python
from PIL import Image
from IPython.display import display, Markdown

# The prompt we will give to the model
prompt = "Describe this X-ray"

# A sample image from Wikimedia Commons (downloaded with a notebook shell command)
image_url = "https://upload.wikimedia.org/wikipedia/commons/c/c8/Chest_Xray_PA_3-8-2010.png"
!wget -nc -q {image_url}
image = Image.open("Chest_Xray_PA_3-8-2010.png")

# We create a conversational structure for the input
messages = [
    {
        "role": "system",
        "content": [{"type": "text", "text": "You are an expert radiologist."}],
    },
    {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image", "image": image},
        ],
    },
]
```

With our input ready, we can now generate a response. The Hugging Face pipeline API is the easiest way to do this. It abstracts away all the complex steps of tokenization and decoding, giving you a clean output with just a few lines of code.

```python
from transformers import pipeline

# Create the pipeline for image-text-to-text generation
pipe = pipeline(
    "image-text-to-text",
    model=model_id,
    model_kwargs=model_kwargs,
)

# Run the inference
output = pipe(text=messages, max_new_tokens=300)
response = output[0]["generated_text"][-1]["content"]

# Display the output
display(Markdown(f"**[ MedGemma ]**\n\n{response}"))
```

And just like that, you’ve run your first inference with MedGemma! The model will provide a text-based description of the X-ray, demonstrating its multimodal understanding. This simple script is the starting point for building much more complex applications.

2. The Training Room: Fine-Tuning with QLoRA

Now for the fascinating part: teaching MedGemma a new skill. Both the 4B and 27B models can be fine-tuned. However, this requires a large amount of GPU VRAM (approx. 40 GB). If you have a Colab Pro account, go ahead and try the official Colab notebook. We may try fitting the process onto a consumer GPU with more aggressive memory savings in a future article. Stay tuned for that!
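Since QLoRA comes up throughout this article, here is a toy NumPy sketch of the idea behind it: the pretrained weight is frozen and stored in 4-bit form, and only a small low-rank update B·A is trained. Two simplifications are worth flagging: the symmetric absmax int4 quantizer is a stand-in for the NF4 data type used by QLoRA, and the rank and alpha values are arbitrary. Real fine-tuning would use libraries such as peft and bitsandbytes rather than code like this.

```python
import numpy as np


def quantize_int4(w):
    """Toy symmetric absmax quantization per output row to the 4-bit range [-8, 7].

    A stand-in for QLoRA's NF4 format; values are held in an int8 array for simplicity.
    """
    scale = np.abs(w).max(axis=1, keepdims=True) / 7.0
    scale[scale == 0] = 1.0                      # avoid dividing by zero for all-zero rows
    q = np.clip(np.round(w / scale), -8, 7).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    return q.astype(np.float32) * scale


class QLoRALinear:
    """Frozen 4-bit base weight plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank=8, alpha=16, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.q, self.scale = quantize_int4(weight)               # frozen base weight
        self.A = rng.normal(0.0, 0.01, size=(rank, in_dim)).astype(np.float32)
        self.B = np.zeros((out_dim, rank), dtype=np.float32)     # zero init: no change at start
        self.scaling = alpha / rank

    def __call__(self, x):
        base = x @ dequantize(self.q, self.scale).T              # dequantize on the fly
        return base + self.scaling * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        return self.A.size + self.B.size                        # only A and B are trained


rng = np.random.default_rng(0)
W = rng.normal(size=(1024, 1024)).astype(np.float32)
layer = QLoRALinear(W, rank=8, alpha=16)
print(f"trainable: {layer.trainable_params():,} of {W.size:,} weights")
```

Because B starts at zero, the adapted layer initially reproduces the quantized base layer exactly, and for a 1024×1024 layer with rank 8 only 16,384 of the roughly one million weights are trainable.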

PS: We have a detailed article on fine-tuning Gemma 3 on LaTeX-OCR. If you are interested in the fine-tuning part, give it a read.

Inference

In this article, we use the 4B instruction-tuned (4b-it) variant. Even this smaller MedGemma model needs roughly 8 GB of VRAM for its weights in bfloat16, so we tweaked the main pipeline to use the 4-bit quantized checkpoint released by Unsloth. We also built a simple Python web application to interact with the model through a cool UI. Let’s summarize the main changes we implemented.

```python
import torch
from transformers import pipeline

pipe = pipeline(
    "image-text-to-text",
    model="unsloth/medgemma-4b-it-bnb-4bit",
    torch_dtype=torch.bfloat16,
    device_map="auto",
)
```

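We replaced the default model with a 4-bit checkpoint quantized with bitsandbytes (bnb). Its quantization settings are stored in the checkpoint’s config, so we no longer pass a BitsAndBytesConfig. Loading a bnb-quantized model relies on the Accelerate library for device placement, so we set the device_map parameter to “auto” and let Accelerate decide how to place the weights in the available VRAM.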
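A quick way to see why the 4-bit checkpoint matters is to estimate the memory taken by the weights alone. This sketch assumes a round 4 billion parameters and ignores activations, the KV cache, and quantization overhead (4-bit checkpoints typically keep some layers in higher precision), so real usage will be somewhat higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 4e9  # a round "4B"; the exact parameter count differs slightly
for dtype, bits in [("float32", 32), ("bfloat16", 16), ("4-bit", 4)]:
    print(f"{dtype:>8}: ~{weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

This works out to about 16 GB in float32, 8 GB in bfloat16, and 2 GB at 4 bits, which is why the quantized checkpoint leaves headroom on an 8 GB GPU while the bfloat16 weights alone would fill it.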

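```python
import time


def analyze(messages, max_new_tokens=1024):
    """Illustrative name: generate the analysis with `pipe`, then replay it character by character."""
    # Generate analysis
    output = pipe(text=messages, max_new_tokens=max_new_tokens)
    full_response = output[0]["generated_text"][-1]["content"]

    partial_message = ""
    for char in full_response:
        partial_message += char
        time.sleep(0.01)  # Add a small delay to make the typing visible
        yield partial_message
```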

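After getting the model’s response, we added a simple hack to mimic real-time text streaming: starting from an empty string, we append one character at a time and yield the partial text, using time.sleep(...) to create a typing effect. The full response has already been generated at this point, so this only simulates streaming.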
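For true token-by-token streaming, Hugging Face transformers provides TextIteratorStreamer: you pass it as the streamer argument to model.generate() running in a background thread and iterate over it in the main thread. The sketch below shows that producer/consumer pattern in plain Python so it runs without a GPU; fake_generate is a hypothetical stand-in for the model, and you would replace it with the real generation call.

```python
import queue
import threading
import time

_DONE = object()  # sentinel that marks the end of generation


def fake_generate(prompt, on_token):
    """Hypothetical stand-in for model.generate(): emits tokens one at a time."""
    for token in ["This ", "is ", "a ", "streamed ", "reply."]:
        time.sleep(0.01)  # pretend each token takes time to compute
        on_token(token)


def stream_response(prompt, generate=fake_generate):
    """Yield the growing response while `generate` runs in a background thread."""
    tokens = queue.Queue()

    def worker():
        try:
            generate(prompt, tokens.put)
        finally:
            tokens.put(_DONE)  # always unblock the consumer, even on errors

    threading.Thread(target=worker, daemon=True).start()
    partial = ""
    while (token := tokens.get()) is not _DONE:
        partial += token
        yield partial


for text in stream_response("Describe this X-ray"):
    print(text)
```

Unlike the character replay, the UI here updates while generation is still running, so the first words appear as soon as the model produces them.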

The application code is available via the Download Code button to make it easy for you to get started. Now, let’s run the inference and see some application use cases.

As you can observe, MedGemma performed well in these examples. However, we haven’t verified these outputs with a medical professional. This model should only be used under proper medical expert supervision, as it was developed for research and to support medical professionals.

Try the Demo – MedGemma in Action

PS – We are using an Nvidia GeForce RTX 3070 Ti Laptop GPU to run the inference. Now let’s summarize what we have covered so far!

Medical Disclaimer

This article is for educational and research purposes only. It should not be used as a substitute for professional medical diagnosis or treatment. Always consult qualified healthcare professionals for medical advice.

Quick Recap

We’ve covered a lot of ground, so let’s pause and summarize the journey with a few key takeaways. This is the essence of what makes MedGemma such a groundbreaking tool.

- A Specialist, Not a Generalist: MedGemma is more than a general AI; it’s a specialist built on Google’s robust Gemma 3 architecture and meticulously trained on a vast amount of medical data, making it fluent in the language of healthcare.

- Two Tools for the Job: It comes in two powerful variants to suit different clinical needs: a 4B multimodal model that can both see (interpret images like X-rays) and read, and a 27B text-only reasoning model perfect for deep dives into medical literature and reports.

- Efficient by Design: The architecture is built for efficiency. With its clever mix of local and global attention, MedGemma can understand long clinical documents without needing a supercomputer. Its custom SigLIP vision encoder, pre-trained on medical images, gives it a sharp eye for clinical details.

- Fine-Tuning Made Accessible: You don’t need a massive research lab to customize MedGemma. Thanks to techniques like QLoRA (Quantization + Low-Rank Adaptation) and the Hugging Face ecosystem, you can train a highly specialized model for your specific use case with far fewer resources than full fine-tuning requires, although, as noted above, a GPU with around 40 GB of VRAM is still recommended.

- Versatile Clinical Applications: Its capabilities provide a strong foundation for a wide array of healthcare solutions. The 4B model is a powerful starting point for image-centric tasks across radiology, digital pathology, dermatology, and ophthalmology, useful for classification and generating interpretations. The 27B model excels at text comprehension and clinical reasoning, enabling applications in patient summarization, triage, Q&A, and decision support.

- Play with the Model: All the notebooks and Python scripts are available via the Download Code button.

Conclusion

MedGemma provides the essential building blocks, offering a specialized, efficient, and medically tuned foundation derived from the powerful Gemma 3 architecture. It’s not about replacing clinicians but about building powerful, specialized co-pilots that can handle the data deluge, allowing our healthcare heroes to operate at the top of their game. Whether it’s interpreting radiological scans, summarizing patient histories, or augmenting clinical decisions, the tools to begin innovating are at your fingertips.

References

MedGemma Release page

MedGemma Model Card

Gemma 3 Technical Report

Gemma explained: What’s new in Gemma 3