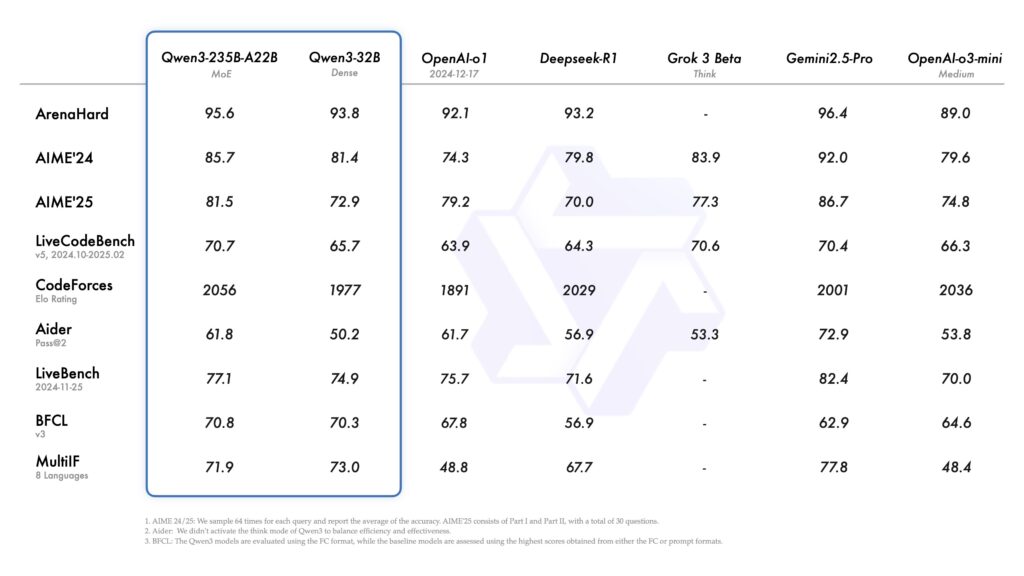

Alibaba Cloud just released Qwen3, the latest model family in the popular Qwen series. According to the Qwen team, it achieves results competitive with other top-tier thinking LLMs such as DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro.

Unlike traditional language models that spit out flat text, Qwen3 is a series of thinking LLMs, built to reason. It can switch between a deliberate, chain-of-thought Thinking Mode for tough problems and a lightning-fast Non-Thinking Mode for everyday tasks. You no longer need two different models; a single model handles both. How cool is that! Under the hood, the family includes Mixture-of-Experts (MoE) variants, massive multilingual coverage (119 languages and dialects!), and a think switch (/think & /no_think) with a thinking budget, so you stay in control of cost and latency.

In this article, we will explore:

- Current Trends in Reasoning LLMs

- What Is LLM Reasoning?

- Qwen3 Architecture

- How to use it locally

- Finetuning for Math Reasoning

Now, that’s exciting, right? So, grab a cup of coffee, and let’s dive in!

Current Trends in Reasoning LLMs

A couple of years ago, every AI headline bragged about “the first trillion-parameter model.” Bigger felt better, until we all noticed that a bloated network isn’t automatically a smarter one. In 2025, the picture has changed: what matters is an LLM that can think and give smarter, more accurate replies.

1. From Scale to Thoughtfulness

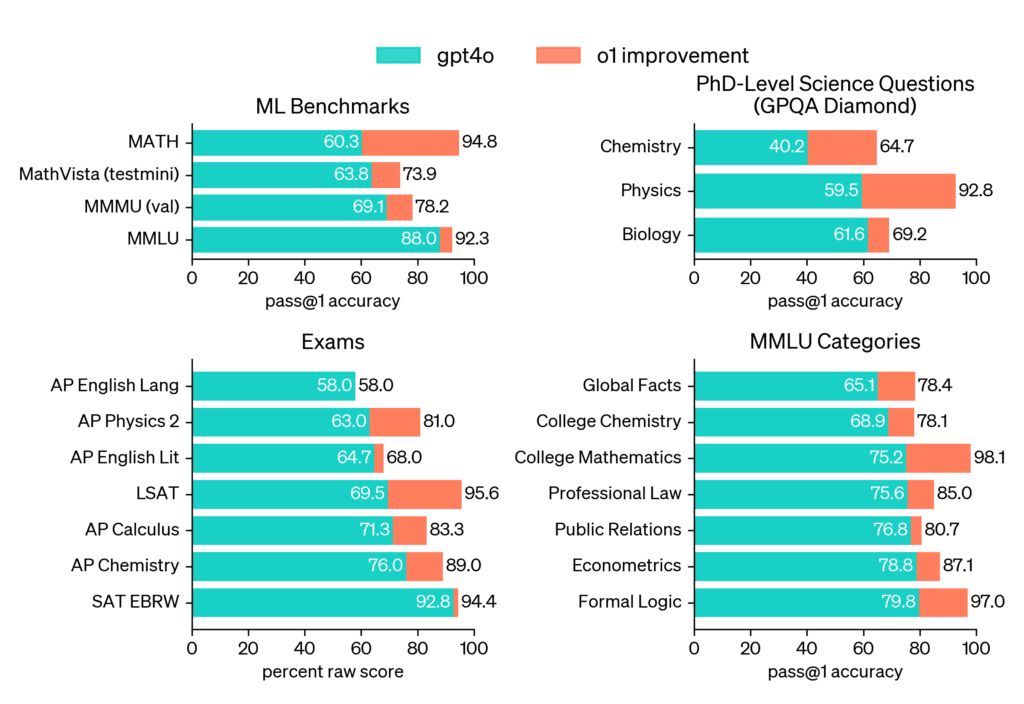

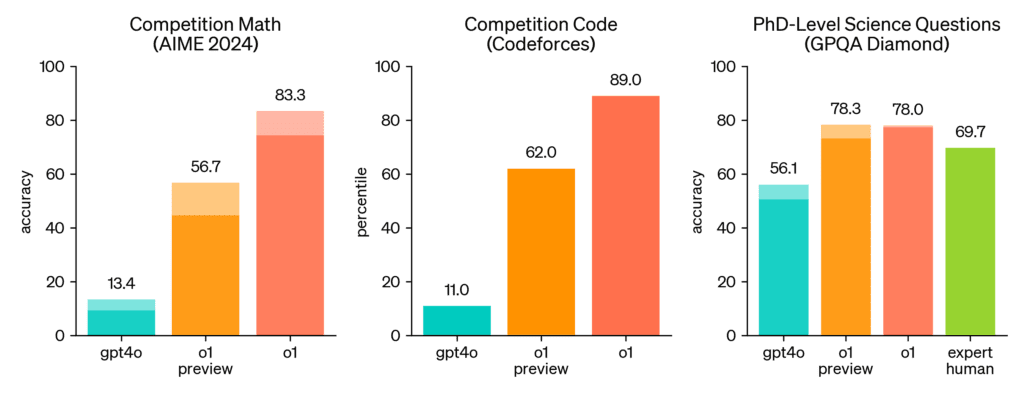

OpenAI’s o-series (o1, o3) kicked off the wave by showing that a model trained to reason before it answers can beat GPT-4o on hard problems. o1’s 78.0 % on the tricky GPQA-Diamond benchmark and o3’s 2727 Codeforces Elo rating made it crystal clear: thoughtful beats sheer bulk when problems get hairy.

2. Compute When You Need It

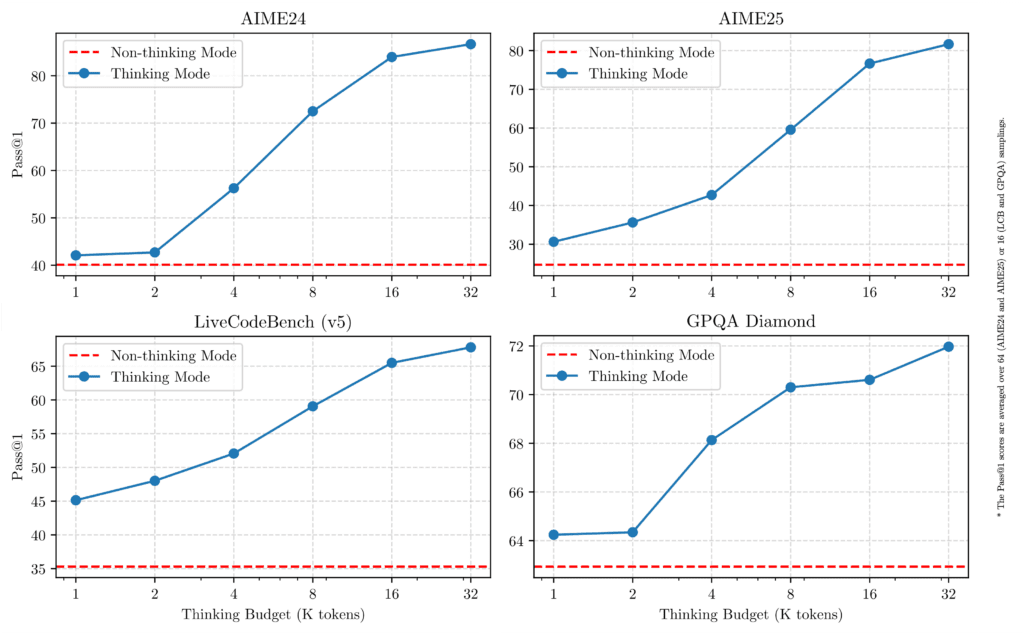

Modern deployments increasingly adopt inference-time compute scaling. Instead of spending the same effort on every prompt, the system spends extra compute (longer reasoning traces, several sampled candidates, or search) only when a problem needs it. Models like o3 and DeepSeek-R1 are used for complex reasoning tasks that call for long chain-of-thought (CoT) traces, while models like GPT-4o and Gemini are used for quick, general-purpose QA that needs less compute and fast inference. The beauty of Qwen3 is that it merges both capabilities into one model: its /think toggle and adjustable thinking budget enable fine-grained cost-accuracy trade-offs without retraining.

3. New Benchmarks, New Champions

As modelling techniques strengthen, evaluation sets have grown more rigorous. Benchmarks like LiveCodeBench (fresh competitive-programming problems) and AIME’25 (competition mathematics) probe multi-step reasoning that cannot be solved by recall alone. According to the Qwen team, Qwen3-235B-A22B posts leading or competitive scores on several of these suites, showing that a mixture-of-experts (MoE) model can deliver state-of-the-art reasoning while activating only a fraction of its total parameters.

That brings us back to Qwen3, an open-source model that bundles all these trends into it!


What Is LLM Reasoning? How Does an LLM “Think”?

When people say a model like Qwen3, DeepSeek-R1, OpenAI o-series, or Claude Opus “reasons,” they do not mean it spins up a symbolic logic engine. Under the hood, it is still the same Transformer architecture: layers of linear algebra predicting the next token.

What changes, through clever training and run-time tricks, is how that prediction process is steered so the model can work out an answer instead of reciting one.

Think of an LLM as a student solving a math problem. A regular model tries to jump straight to the answer. A reasoning model first does scratch work, checks itself, and then gives the answer. That scratch work is what we call the model’s chain of thought (CoT).

Two Kinds of “Thinking”

| Where it happens | What the model does | Why it helps |
|---|---|---|
| Inside (hidden) | Tries a few ideas, drops the bad ones, keeps the best. | Fewer silly mistakes. |
| Outside (shown to you) | Writes the steps between special tags like <think> ... </think>. | You can see and trust the logic, or feed each step to other tools. |

Simple picture: It’s like showing your long-division steps instead of only the final number.

Tiny Example

Question: “A train goes 60 mph for 3 hours. How far is that?”

Fast mode:

```
180 miles
```

Thinking mode:

```
<think>
distance = speed × time = 60 × 3 = 180
</think>

So the train travels 180 miles.
```

Same answer, but the second one shows the work.
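If you consume the output in code, you usually want the reasoning and the final answer separately. Here is a minimal sketch, assuming the reply starts with a single `<think>...</think>` block as in the example above. The helper name `split_thinking` is our own and not part of any library.

```python
import re

# Matches an optional <think>...</think> block at the start of a reply.
# re.DOTALL lets "." span the newlines inside multi-line reasoning.
THINK_RE = re.compile(r"^\s*<think>(.*?)</think>\s*", re.DOTALL)


def split_thinking(reply: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is "" when there is no think block."""
    match = THINK_RE.match(reply)
    if match is None:
        return "", reply.strip()
    reasoning = match.group(1).strip()
    answer = reply[match.end():].strip()
    return reasoning, answer


fast = "180 miles"
thinking = "<think>\ndistance = speed × time = 60 × 3 = 180\n</think>\n\nSo the train travels 180 miles."

print(split_thinking(fast))      # ('', '180 miles')
print(split_thinking(thinking))  # ('distance = speed × time = 60 × 3 = 180', 'So the train travels 180 miles.')
```

An empty block such as `<think>\n\n</think>` is also handled and simply yields an empty reasoning string.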

How do models learn to think?

- Supervised chain-of-thought (CoT) fine-tuning: we feed the model thousands of problems paired with full step-by-step solutions.

- Reinforcement learning (RL) with structured rewards: instead of humans scoring each answer, scripts run the code against tests, check the arithmetic, or validate the JSON format. Correct logic earns a higher reward; sloppy logic is penalised.

- Distillation or “strong-to-weak” transfer: a giant teacher model creates CoT answers, and smaller students learn to mimic them, keeping most of the reasoning power at a fraction of the size.

- Self-play and reflection: some systems, reportedly including the OpenAI o-series, run multiple hidden attempts, vote on the best answer, or use an internal critic to spot contradictions.
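The “vote on the best answer” idea can be illustrated with the publicly described self-consistency technique: sample several answers and keep the most common one. This is a generic sketch, not how any particular vendor implements it; the sampled answers below are made up and the normalisation is deliberately crude.

```python
from collections import Counter


def normalise(answer: str) -> str:
    """Crude normalisation so '180 miles' and ' 180 Miles.' count as the same vote."""
    return answer.strip().lower().rstrip(".")


def majority_vote(answers: list[str]) -> tuple[str, float]:
    """Return the most common normalised answer and the share of votes it received."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalise(a) for a in answers)
    best, votes = counts.most_common(1)[0]
    return best, votes / len(answers)


# Five hypothetical sampled answers to the train question
samples = ["180 miles", "180 miles.", "160 miles", " 180 Miles", "180 miles"]
print(majority_vote(samples))  # ('180 miles', 0.8)
```

The vote share doubles as a rough confidence signal: a low share suggests the model is unsure and may deserve a longer thinking budget.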

Through these signals, the network’s weights encode patterns like “multiply speed by time before giving distance” or “declare a variable before using it.”

When to use the thinking mode

Good times to turn it on:

- Multi-step math or logic puzzles

- Big code changes that need careful reasoning

- Lessons where you want the model to explain why

Times to leave it off:

- Quick facts (“Capital of France?”)

- Short summaries or translations

- Simple yes/no or sentiment checks

PS: Use thinking mode when the extra clarity is worth the extra time.

Introduction to Qwen3 – Inside the “Thinking Expert”

Qwen3 packs a lot of new tech, but none of it is magic. Below is a plain-spoken walk-through of the main ideas, from RoPE tweaks to Mixture-of-Experts (MoE) routing, grounded in the official technical report.

The Qwen3 series comes with two MoE models: Qwen3-235B-A22B, a large model with 235 billion total parameters and 22 billion activated parameters, and Qwen3-30B-A3B, a smaller MoE model with 30 billion total parameters and 3 billion activated parameters. In addition, six dense models are released with open weights: Qwen3-32B, Qwen3-14B, Qwen3-8B, Qwen3-4B, Qwen3-1.7B, and Qwen3-0.6B, all under the Apache 2.0 license.

1. A Family of Models, One Philosophy

| Model | Architecture | Active Params | Context Window | Best Fit |
|---|---|---|---|---|
| 0.6 B · 1.7 B · 4 B | Dense | same as total | 32 K | Edge apps, lightweight RAG |
| 8 B · 14 B · 32 B | Dense | same as total | 128 K | Production chat, coding |
| 30B-A3B | MoE | 3 B | 128 K | Cost-sensitive inference |
| 235B-A22B | MoE | 22 B | 128 K | SOTA research, heavy agents |

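Active parameters are the ones that actually turn on per token. The 235 B flagship “feels” huge on a résumé, but each token only pays the compute of about 22 B parameters. All 235 B weights still have to be loaded in memory, though, so MoE saves compute, not storage.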
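A quick back-of-the-envelope check of what “active” buys you. The sketch assumes the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per generated token; that rule is our assumption, not a figure from the Qwen report, and parameter counts are the nominal ones from the table above.

```python
# Rule of thumb (assumption): ~2 FLOPs per *active* parameter per generated token.
MODELS = {
    # name: (total params, active params), nominal values from the table above
    "Qwen3-30B-A3B": (30e9, 3e9),
    "Qwen3-235B-A22B": (235e9, 22e9),
    "Qwen3-32B (dense)": (32e9, 32e9),
}


def per_token_gflops(active_params: float) -> float:
    """Approximate forward-pass cost per token, in GFLOPs."""
    return 2 * active_params / 1e9


for name, (total, active) in MODELS.items():
    share = active / total
    print(f"{name:18s} active share {share:6.1%}  ~{per_token_gflops(active):,.0f} GFLOPs/token")
```

By this estimate the 235B-A22B model touches about 9.4 % of its weights per token, which is why its per-token compute sits closer to a 22 B dense model than to a 235 B one.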

2. Hybrid Thinking

- Thinking Mode: writes out a full <think> chain of thought before the final answer. The model reasons step by step, spending more tokens (and time) in exchange for higher accuracy on hard problems.

- Fast Mode: skips the reasoning steps and replies almost instantly. The model gives a direct answer, which suits simpler queries where latency is critical.

Enable globally (enable_thinking=True/False) or per turn (/think, /no_think). The ThinkFollow test confirms Qwen3 hits 99 % accuracy in mode-switching after Stage-4 RL.
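With Hugging Face transformers, the global switch is the `enable_thinking` argument passed to `tokenizer.apply_chat_template`, as shown on the Qwen3 model cards. The per-turn switch is just text in the user message, so it works with any client. The helper below is a hypothetical convenience function (not part of any library) that appends the soft switch to the latest user turn without mutating the original history.

```python
from copy import deepcopy


def with_mode(messages: list[dict], thinking: bool) -> list[dict]:
    """Return a copy of `messages` with /think or /no_think appended to the last user turn."""
    tag = "/think" if thinking else "/no_think"
    out = deepcopy(messages)  # leave the caller's history untouched
    for msg in reversed(out):
        if msg["role"] == "user":
            msg["content"] = f"{msg['content'].rstrip()} {tag}"
            return out
    raise ValueError("no user message to tag")


history = [{"role": "user", "content": "What is 17 * 23?"}]
print(with_mode(history, thinking=False)[-1]["content"])  # What is 17 * 23? /no_think
```

The returned list can be passed straight to a chat API such as `ollama.chat(model=..., messages=...)`.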

3. What’s Inside Qwen3

| Piece | What it is | Why it helps | Where it shows up |
|---|---|---|---|
| RoPE with ABF | Rotary Positional Embedding tells the model which token is first, second, etc. ABF (Adjusted Base Frequency) raises RoPE’s base frequency, which lengthens the “ruler,” like swapping a 10 m tape for a 1 km one. | Lets context stretch from 4 K to 32 K tokens during pre-training. | Long-context Stage 3 pre-training. |
| YARN | A scaling trick that re-maps positions on the fly. | Quadruples context to 128 K at inference while keeping weights fixed. | All 8B+ models. |
| Dual-Chunk Attention (DCA) | Splits a very long sequence into chunks and re-maps the relative positions used inside and across chunks, so they stay within the range seen in training. | Lets the model attend over much longer inputs than it was trained on, without retraining. | Used together with YARN for long-context inference. |
| MoE with 128 Experts / 8 Active | Think of 128 mini-brains; only 8 wake up per token. A global-batch load-balancing loss encourages them to specialise. | Large-model capacity at a per-token compute close to a dense model of the active size (3 B or 22 B). | 30B-A3B, 235B-A22B. |
| GQA & QK-Norm | Grouped-Query Attention lets several query heads share one set of keys/values; QK-Norm normalises queries and keys before attention scores are computed. | Smaller KV cache, faster attention, and more stable training. | All models. |
| SwiGLU + RMSNorm | Gated activation and normalisation layers carried over from earlier Qwen models. | Better gradient flow and stable training. | Everywhere. |

RoPE (Rotary Positional Embedding) encodes token order by rotating pairs of query and key dimensions by position-dependent angles; Qwen3 applies ABF, raising the rotation base so positional information stays accurate out to 32 K tokens. YARN (Yet another RoPE extensioN method) then rescales those rotation frequencies at inference time, essentially compressing the position coordinates, so the very same weights stretch to 128 K tokens without retraining.
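To make the RoPE idea concrete, here is a toy sketch in plain Python. It shows that rotated query-key scores depend only on relative distance, and that a larger base slows the slowest rotation. The base of 1,000,000 is our assumption for an ABF-style setting (check the technical report for the exact value), and step 3 is plain linear position interpolation, a simplification rather than YaRN, which treats each frequency band differently.

```python
import math


def rope_inv_freq(dim: int, base: float) -> list[float]:
    """Rotation speed theta_i = base ** (-2i / dim) for each of the dim/2 pairs."""
    return [base ** (-2 * i / dim) for i in range(dim // 2)]


def rotate(vec: list[float], pos: float, inv_freq: list[float]) -> list[float]:
    """Rotate each (x, y) pair of `vec` by the angle pos * theta_i."""
    out = []
    for i, theta in enumerate(inv_freq):
        x, y = vec[2 * i], vec[2 * i + 1]
        c, s = math.cos(pos * theta), math.sin(pos * theta)
        out += [x * c - y * s, x * s + y * c]
    return out


def score(q, k, q_pos, k_pos, inv_freq) -> float:
    """Unscaled attention logit between a rotated query and a rotated key."""
    return sum(a * b for a, b in zip(rotate(q, q_pos, inv_freq), rotate(k, k_pos, inv_freq)))


dim = 8  # toy head size; real attention heads are much wider
q = [0.3, -1.2, 0.5, 0.9, -0.4, 0.1, 0.7, -0.6]
k = [1.0, 0.2, -0.3, 0.8, 0.5, -0.9, 0.2, 0.4]

classic = rope_inv_freq(dim, base=10_000)
abf = rope_inv_freq(dim, base=1_000_000)  # assumed ABF-style base, for illustration

# 1) The logit depends only on the distance between positions (7 in both calls).
print(math.isclose(score(q, k, 10, 3, abf), score(q, k, 1010, 1003, abf), abs_tol=1e-9))  # True

# 2) A bigger base slows the slowest-rotating pair, so one full turn spans more tokens.
for name, inv in [("base 1e4", classic), ("base 1e6", abf)]:
    print(name, "longest wavelength ≈", round(2 * math.pi / inv[-1]), "tokens")

# 3) Plain linear position interpolation (a simplification, not full YaRN):
#    dividing positions by 4 maps a 128 K position back into the 32 K range.
scale = 4
print(131_072 / scale)  # 32768.0
```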

Dual-Chunk Attention (DCA) complements this: it splits an ultra-long sequence into chunks and re-maps the relative positions used for attention within a chunk, between neighbouring chunks, and across distant chunks, so no query-key distance exceeds what the model saw in training. Together with YARN, this lets Qwen3 handle inputs about four times longer than its pre-training context.

Finally, Mixture-of-Experts (MoE) layers route each token’s hidden state to the eight most relevant specialists among 128 expert blocks, so Qwen3’s flagship model holds 235 B total parameters yet activates only ~22 B per token, delivering large-model accuracy at a midsize inference budget.
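The routing step can be sketched in a few lines of plain Python: score every expert, keep the top 8 of 128, renormalise their gate weights, and sum only those experts’ outputs. This is a toy illustration, not Qwen3’s implementation. The experts are stand-in functions and the router weights are random; renormalising the top-k weights is one common design choice and an assumption here.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(hidden: list[float], router_weights: list[list[float]]) -> list[float]:
    """One score per expert: dot product of the hidden state with that expert's router row."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in router_weights]


def route(logits: list[float], k: int = 8) -> list[tuple[int, float]]:
    """Keep the top-k experts and renormalise their gate weights so they sum to 1."""
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_layer(hidden, router_weights, experts, k: int = 8) -> list[float]:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = [0.0] * len(hidden)
    for idx, weight in route(router_logits(hidden, router_weights), k):
        out = [o + weight * e for o, e in zip(out, experts[idx](hidden))]
    return out


NUM_EXPERTS, TOP_K, HIDDEN = 128, 8, 4

# Toy experts: expert j multiplies its input by j (real experts are small FFNs).
experts = [lambda h, s=j: [s * x for x in h] for j in range(NUM_EXPERTS)]

random.seed(0)
router_weights = [[random.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

chosen = route(router_logits(token, router_weights), TOP_K)
print("experts used:", [i for i, _ in chosen])
print("gate weights sum to", round(sum(w for _, w in chosen), 6))  # 1.0
print("output:", moe_layer(token, router_weights, experts, TOP_K))
```

Only 8 of the 128 expert functions are called per token, which is where the compute savings come from.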

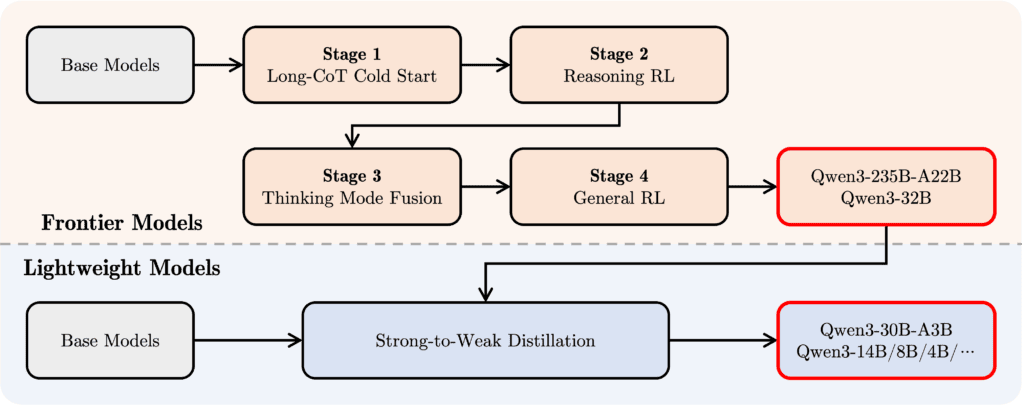

4. Four-Step Training

Stage 1 – Long-CoT Cold-Start

The freshly pre-trained model is first fine-tuned on roughly 300,000 hand-picked chain-of-thought (CoT) examples that span maths proofs, coding puzzles, logic games, and multi-hop QA. Each sample shows the full reasoning trace wrapped in <think></think> tags, so the model learns not just the right answers but the shape of a good explanation. At this point, we are still in classic supervised learning, with no reward model involved yet.

Stage 2 – Reasoning Reinforcement Learning

Next comes a reinforcement-learning loop. After every generation, the system checks two things:

- Accuracy: a compiler or calculator verifies the final answer for code and math tasks.

- Format: an LLM judge confirms the <think> block is present and well-formed.

Good behaviour earns a higher reward; sloppy logic or missing tags gets penalised. This is where the model starts self-checking: it learns that neat, correct reasoning means more reward.
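A rule-based reward in the spirit of this stage can be sketched as an accuracy check plus a format check. This is a hypothetical illustration, not Qwen’s reward code: we replace the compiler or calculator with an exact match on a `\boxed{...}` answer, replace the LLM judge with a regex, and pick the weights arbitrarily.

```python
import re

# Hypothetical rule-based reward: format check + exact-match accuracy check.
THINK_BLOCK = re.compile(r"^\s*<think>(.+?)</think>(.*)$", re.DOTALL)
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def format_reward(reply: str) -> float:
    """1.0 if the reply opens with a non-empty <think> block followed by an answer."""
    m = THINK_BLOCK.match(reply)
    return 1.0 if m and m.group(1).strip() and m.group(2).strip() else 0.0


def accuracy_reward(reply: str, reference: str) -> float:
    """1.0 if the last boxed answer after the think block equals the reference."""
    m = THINK_BLOCK.match(reply)
    answer_part = m.group(2) if m else reply
    boxed = BOXED.findall(answer_part)
    return 1.0 if boxed and boxed[-1].strip() == reference.strip() else 0.0


def reward(reply: str, reference: str, w_acc: float = 1.0, w_fmt: float = 0.2) -> float:
    """Weighted sum; the weights are illustrative assumptions."""
    return w_acc * accuracy_reward(reply, reference) + w_fmt * format_reward(reply)


good = "<think>8, 4, 2, 6 repeats; 999 mod 4 = 3.</think> The last digit is \\boxed{2}."
no_think = "The last digit is \\boxed{2}."
wrong = "<think>Guessing.</think> \\boxed{8}"

for name, reply in [("good", good), ("no_think", no_think), ("wrong", wrong)]:
    print(name, reward(reply, "2"))
```

A correct, well-formatted reply scores highest, a correct reply without reasoning scores less, and well-formatted but wrong reasoning earns only the small format bonus.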

Stage 3 – Thinking Mode Fusion

So far, the model always prints its chain of thought. To give users a speed dial, engineers mix two data sources: the long-CoT set from Stage 1, plus a classic instruction-tuning corpus that contains only short answers. By mixing the two during fine-tuning, the very same checkpoint learns to notice a flag (enable_thinking, or the prompt tags /think and /no_think) and decide whether to reason out loud or answer directly. In practice, that means deep analysis when you want it and tweet-length replies when you don’t.
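Here is one way such fused training samples could be laid out. This is a hypothetical sketch: it assumes, as we understand the technical report and Qwen3’s public chat template, that non-thinking answers keep an empty think block so both modes share one output format. The helper names `make_sample` and `fuse` are our own.

```python
import random
from typing import Optional


def make_sample(question: str, answer: str, reasoning: Optional[str]) -> list[dict]:
    """Build one chat-format training sample in thinking or non-thinking style."""
    if reasoning is None:
        # Short-answer sample: /no_think flag plus an empty think block (assumed format).
        user = f"{question} /no_think"
        assistant = f"<think>\n\n</think>\n\n{answer}"
    else:
        # Long-CoT sample: /think flag plus the full reasoning trace.
        user = f"{question} /think"
        assistant = f"<think>\n{reasoning}\n</think>\n\n{answer}"
    return [{"role": "user", "content": user}, {"role": "assistant", "content": assistant}]


def fuse(cot_data, short_data, seed: int = 0) -> list[list[dict]]:
    """Mix (question, reasoning, answer) and (question, answer) tuples into one shuffled set."""
    samples = [make_sample(q, a, r) for q, r, a in cot_data]
    samples += [make_sample(q, a, None) for q, a in short_data]
    random.Random(seed).shuffle(samples)
    return samples


cot = [("A train goes 60 mph for 3 hours. How far?", "distance = 60 × 3 = 180", "180 miles")]
short = [("Capital of France?", "Paris")]
for sample in fuse(cot, short):
    print(sample[0]["content"], "->", repr(sample[1]["content"]))
```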

Stage 4 – General RL

A final reinforcement-learning pass sweeps across more than twenty tasks covering helpfulness, safety, JSON-format compliance, multilingual QA, and tool calling. Extra rewards, like a consistency check, stop the model from drifting between languages in long answers. The result is a steadier tone, safer content, and, importantly, a ThinkFollow score near 99 %; the model almost never forgets which mode you asked for.

Strong-to-Weak Distillation

Once the flagship MoE checkpoints are polished, they act as teachers. Their fully worked solutions are distilled into the smaller, dense models (0.6 – 32 B), letting even laptop-friendly versions inherit core reasoning skills while needing only about one-tenth of the GPU hours of the full four-stage pipeline.

5. Real-World Upgrades You Feel

- 32 B dense beats the older 72 B on GSM8K and LiveCodeBench while running cheaper.

- 30B-A3B (3 B active) matches 32 B dense reasoning with a 5 × lower GPU bill.

- 235B-A22B tops open-weight leaderboards yet activates only about 60 % as many parameters per token as DeepSeek-R1 (22 B vs 37 B).

- Multilingual support jumps from 29 to 119 languages, keeping accuracy above 90 %.

The Qwen series also includes Qwen2.5-Omni, a multimodal model that handles text, images, audio, and video. We have a detailed article on it; please read it if you want to learn more about multimodal and vision-language models.

Now let’s dive into the code!

Qwen3 Code Pipeline – Ollama

To run the models locally, we will use Ollama, a tool that runs models on your own machine with a single command or a few lines of code. On Linux, install Ollama by running this command in your terminal (macOS and Windows users can download the installer from the Ollama website):

```bash
curl -fsSL https://ollama.com/install.sh | sh
```

Now you are all set; yes, it’s this simple. To run any Qwen3 model, use this command, replacing <tag> with the variant you want (for example 0.6b, 4b, 8b, 14b, or 32b):

```bash
ollama run qwen3:<tag>
```

As we know, Qwen3 can switch between think and no-think mode seamlessly. We need to pass a simple flag /think or /no_think after our input prompt to toggle between the modes.

```bash
echo "Give me a short introduction of OpenCV University. /think" | ollama run qwen3:8b
```

You can also use it via the Python API. For that, we need to install the pip package:

```bash
pip install ollama
```

Then, run your Qwen model with a simple Python script:

```python
import ollama

# Send one user turn; "/no_think" asks Qwen3 to skip the reasoning block.
response = ollama.chat(
    model="qwen3:8b",
    messages=[
        {"role": "user", "content": "Give me a short introduction of OpenCV. /no_think"},
    ],
)

print(response["message"]["content"])
```

PS: Ollama also runs a local API server (by default at http://localhost:11434) that exposes its native REST API as well as OpenAI-compatible endpoints under /v1, allowing programmatic interaction.
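If you would rather not install the `ollama` package, the native REST endpoint can be called with the standard library alone. This sketch assumes Ollama’s `/api/chat` endpoint at the default address with `"stream": false`, which returns a single JSON object whose `message.content` holds the reply. The helper names are our own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address


def build_chat_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's native /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request (needs a running Ollama server) and return the reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=300) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["message"]["content"]
```

With the server running, `chat("qwen3:8b", "Give me a short introduction of OpenCV. /no_think")` returns the same text as the `ollama.chat` example.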

Now, let’s make some inferences with the model.

PS: You will need reasonable hardware: about 8 GB of GPU memory (VRAM) for the 8B model, and considerably more (32 GB or beyond) for the larger models.
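A rough way to size your hardware is to multiply the parameter count by the bits stored per weight. The sketch below estimates weight memory only (no KV cache or runtime overhead), and the ~4.5 bits per weight for 4-bit quantised models is an assumed average that includes scaling factors. MoE models need memory for all experts, not just the active ones.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """GB (1e9 bytes) needed just to hold the weights; excludes KV cache and overhead."""
    return params_billion * bits_per_weight / 8


# Nominal sizes; MoE models count ALL experts, since every expert must be loaded.
SIZES = [("Qwen3-8B", 8), ("Qwen3-14B", 14), ("Qwen3-32B", 32), ("Qwen3-30B-A3B", 30), ("Qwen3-235B-A22B", 235)]

for name, params in SIZES:
    bf16 = weight_memory_gb(params, 16)  # unquantised 16-bit weights
    q4 = weight_memory_gb(params, 4.5)   # ~4-bit quantisation incl. scales (assumed average)
    print(f"{name:16s} BF16 ≈ {bf16:6.1f} GB   4-bit ≈ {q4:6.1f} GB")
```

By this estimate, 4-bit weights for the 8B model take about 4.5 GB, leaving headroom on an 8 GB card for the KV cache, while the 32B model needs roughly 18 GB.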

Qwen3 Inference – Ollama

All the models are available on Hugging Face, and the Qwen team has also deployed them on Qwen Chat, so you can try them there too. We go with Ollama because our main focus is running the models locally.

We run the inference on an NVIDIA GeForce RTX 3070 Ti Laptop GPU (8 GB VRAM), using the Qwen3-8B model.

Let’s try this simple prompt:

Give me a short introduction of OpenCV University. /think

This is the output we get from the model:

You can observe the thinking: how the model first thinks about OpenCV and then connects the thought with OpenCV University. This is very close to how we reason for any new question that comes to our mind.

Now let’s clear some physics doubts:

Prompt:

explain about wave-particle duality /no_think

And the second prompt:

then in string theory how this duality is valid, how a string can be a wave or a paricle, what do you think ? /think

And this is the output:

Now, let’s try something with math.

Prompt:

What is the last digit of 8^999 /think

The output:
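The correct answer here is 2, and you can verify whatever the model reports with a few lines of Python. The last digit of powers of 8 cycles with period 4, so only 999 mod 4 matters; Python’s built-in three-argument `pow` confirms it directly.

```python
# The last digit of 8**n cycles with period 4: 8, 4, 2, 6, 8, 4, ...
cycle = [pow(8, n, 10) for n in range(1, 5)]
print(cycle)               # [8, 4, 2, 6]

n = 999
print(n % 4)               # 3, so we need the third entry of the cycle
print(cycle[(n - 1) % 4])  # 2
print(pow(8, n, 10))       # 2 (direct modular exponentiation agrees)
```

This is exactly the kind of scratch work the thinking mode is expected to write inside its <think> block.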

We also made a simple Gradio app, which you can run locally with:

```bash
pip install gradio
python app.py
```

You can pull any other Qwen3 model available on Ollama with:

```bash
ollama pull qwen3:4b
```

You can chat with any Qwen3 model. Our 8 GB GPU handles models up to Qwen3-8B, so we ran all the experiments with it to keep things budget-friendly and accessible to all.

The Download Code folder contains all the code, scripts, and notebooks. Let’s recap what we have learned so far.

Quick Recap

1. Strategic Shift – Reasoning over Raw Scale

The 2025 leaderboard now rewards the quality of logical trace rather than sheer parameter count. Qwen3 demonstrates that activating only 22 B parameters can surpass larger dense rivals on GPQA-Diamond, Math-RoB, and other reasoning suites.

2. Dual-Mode Execution – Thinking vs Fast

A single checkpoint handles both workloads:

- Thinking Mode (/think, or enable_thinking=True) delivers audited, step-by-step solutions.

- Fast Mode (/no_think, or enable_thinking=False) returns concise answers with minimal latency.

3. Architectural Innovations that Matter

- ABF-RoPE + YARN keeps positional semantics intact to 128 K tokens.

- Dual-Chunk Attention re-maps relative positions so very long inputs stay within the positional range seen in training.

- A 128-expert MoE (8 active) supplies large-model accuracy at a mid-tier GPU cost, critical for on-prem or edge deployment.

4. Ollama Coding – One-Command Local Inference

```bash
ollama run qwen3:8b
```

This single command serves Qwen3 through Ollama’s local REST API (which also offers OpenAI-compatible endpoints), so you can call it from the Python client or the Gradio demo and plug it into existing tooling. The scripts are in the Download Code bundle.

5. Cost-Aware Fine-Tuning & Distillation

“Strong-to-weak” distillation transfers much of the flagship’s reasoning ability to dense checkpoints as small as 0.6 B, at roughly one-tenth of the training compute. The supplied CoT fine-tuning can further refine custom math or code domains.

Conclusion

Large-scale language models are no longer judged by how many parameters they boast but by how effectively they reason under real-world constraints of latency, cost, and transparency. Qwen3 encapsulates this shift: a single Apache-2.0 model family that stretches context windows to 128 K tokens, routes each token through just the specialists it needs, and gives practitioners a dependable switch between audited chain-of-thought and rapid-fire replies within one model.

Set it up locally, play with it, and let us know if you find any cool use cases. See you in the next one. Bye 😀

References

Qwen3: Think Deeper, Act Faster

Qwen3 Technical Report

Understanding Reasoning LLMs
