The field of computer vision has been fueled by remarkable progress in self-supervised learning. At the forefront of this revolution is DINOv2, a cutting-edge self-supervised vision transformer developed by Meta AI. This blog post delves into the intricacies of DINOv2, exploring its architecture, training methodology, advancements over its predecessor DINO, and its potential to reshape the landscape of computer vision.

Traditional deep learning models for computer vision often rely on massive amounts of labeled data, which can be expensive and time-consuming to acquire. Self-supervised learning offers a compelling alternative by enabling models to learn from unlabeled data, leveraging the inherent structure and patterns within the data itself. This paradigm shift has unlocked unprecedented opportunities for training powerful vision models without the constraints of manual annotations.

- DINOv2: Architecture and Training

- Data Pre-processing and Usage in DINOv2

- Code Example

- DINOv2 vs. DINO: A Comparative Analysis

- Unlocking the Potential: Applications of DINOv2

- Conclusion

- References

DINOv2: Architecture and Training

DINOv2, whose name comes from DINO (self-DIstillation with NO labels), epitomizes the power of self-supervised learning. It leverages a student-teacher framework, where a student network learns to match the outputs of a momentum teacher network. Both networks are vision transformers (ViTs), architectures well suited to capturing long-range dependencies in images.
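The teacher is never trained by backpropagation; its weights follow the student’s through an exponential moving average (EMA). The sketch below shows that update in NumPy, with a dict of arrays standing in for a network’s parameters. The cosine schedule that raises the momentum from 0.996 to 1 follows the original DINO paper; treat those exact values as an assumption for DINOv2, and note that the helper names are hypothetical.

```python
import numpy as np


def ema_update(teacher, student, momentum):
    """Update teacher parameters in place as an EMA of the student's.

    teacher, student: dicts mapping parameter names to NumPy arrays
    (a stand-in for a network's state dict). A momentum close to 1 means
    the teacher changes slowly and gives the student stable targets.
    """
    for name, s_param in student.items():
        teacher[name] = momentum * teacher[name] + (1.0 - momentum) * s_param
    return teacher


def cosine_momentum(step, total_steps, base=0.996, final=1.0):
    """Cosine schedule that raises the momentum from `base` to `final`."""
    return final - (final - base) * (np.cos(np.pi * step / total_steps) + 1) / 2


# Toy usage: one "weight" matrix per network.
student = {"w": np.ones((2, 2))}
teacher = {"w": np.zeros((2, 2))}
m = cosine_momentum(step=0, total_steps=100)  # 0.996 at the first step
ema_update(teacher, student, m)
print(teacher["w"][0, 0])  # ~0.004: the teacher moved only slightly
```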

Key Innovations that set DINOv2 apart

- Fully Self-Supervised Training: Like DINO, DINOv2 learns entirely from unlabeled images. Rather than relying on a single objective, it combines DINO’s image-level self-distillation loss with a masked, patch-level objective borrowed from iBOT, which helps make the recipe more robust and scalable. The momentum teacher, inherited from DINO, plays a crucial role in stabilizing training by providing consistent and reliable targets for the student to learn from. The teacher’s weights are updated as an exponential moving average of the student’s weights, ensuring a smooth and stable learning process.

- Training at Scale: Harnessing the Power of Data: DINOv2 is trained on a curated dataset of 142 million images (LVD-142M), roughly a hundred times larger than the ImageNet-1k training set (about 1.3 million images) that DINO used. Training at this scale enables the model to learn richer, more generalizable visual representations, and the diversity of the data contributes significantly to DINOv2’s performance.

- Refined Self-Distillation Framework: The student learns to match the teacher’s output distribution. Centering and sharpening of the teacher’s output, inherited from DINO, keep this process from collapsing: centering subtracts a running mean from the teacher’s outputs so that no single dimension dominates, while sharpening applies a low softmax temperature so that the teacher’s distribution is more peaked. DINOv2 refines the recipe further, for example by replacing DINO’s running-mean centering with Sinkhorn-Knopp centering, and these refinements lead to more robust and informative feature representations.

- FlashAttention for Enhanced Efficiency: DINOv2 uses its own implementation of FlashAttention, an IO-aware way of computing exact self-attention. It produces the same result as standard attention but avoids materializing the full attention matrix in GPU memory, which reduces memory use and speeds up training, allowing larger models, batches, and image resolutions. This efficiency boost is crucial for scaling self-supervised learning to larger datasets and models.
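The student-teacher objective with centering and sharpening described in this list can be sketched in NumPy as below. It follows the original DINO formulation (one view per network; student temperature 0.1, teacher temperature 0.04, and center momentum 0.9, all taken from the DINO paper), not DINOv2’s exact loss, which additionally uses Sinkhorn-Knopp centering and a patch-level term. The function names are hypothetical.

```python
import numpy as np


def log_softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def softmax(x, axis=-1):
    return np.exp(log_softmax(x, axis=axis))


def dino_style_loss(student_logits, teacher_logits, center,
                    student_temp=0.1, teacher_temp=0.04, center_momentum=0.9):
    """Cross-entropy from the centered, sharpened teacher to the student.

    student_logits, teacher_logits: (batch, K) outputs for two views of the
    same images. center: (K,) running mean of teacher logits.
    Returns (loss, updated_center).
    """
    # Centering (subtract the running mean) + sharpening (low temperature).
    teacher_probs = softmax((teacher_logits - center) / teacher_temp)
    student_log_probs = log_softmax(student_logits / student_temp)
    # The teacher output is a fixed target: in a real framework it would be
    # detached so that no gradient flows into the teacher.
    loss = -(teacher_probs * student_log_probs).sum(axis=-1).mean()
    # Update the center as an EMA of the batch-mean teacher logits.
    batch_center = teacher_logits.mean(axis=0)
    new_center = center_momentum * center + (1 - center_momentum) * batch_center
    return loss, new_center


rng = np.random.default_rng(0)
student_out, teacher_out = rng.normal(size=(8, 16)), rng.normal(size=(8, 16))
loss, center = dino_style_loss(student_out, teacher_out, center=np.zeros(16))
```

Lowering the teacher temperature is what "sharpening" means in practice: the same logits divided by 0.04 give a much more peaked distribution than at temperature 1.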

Let’s look at the implementation details that contribute most to DINOv2’s performance. The paper highlights several techniques that gave significantly better results than DINO:

- Training with Very Large Batches:

- Why it matters: Training with massive batches allows the model to learn from a more diverse set of examples simultaneously, leading to better generalization and faster convergence.

- How DINOv2 achieves it: The implementation uses PyTorch’s Fully-Sharded Data Parallel (FSDP), which shards the model’s parameters, gradients, and optimizer states across GPUs while each GPU processes its own slice of the batch. The memory this frees up makes it practical to train billion-parameter models with very large batches, and careful implementation choices keep this large-batch training from hurting performance.

- Stable Optimization at Large Batch Sizes:

- The challenge: Large batch training can sometimes lead to convergence issues due to the sharpness of the loss landscape.

- DINOv2’s solution: Like DINO, DINOv2 is optimized with AdamW rather than plain SGD, and it relies on several techniques to keep training stable:

- Gradient Clipping: Limits the magnitude of gradients to prevent exploding gradients.

- Momentum Teacher Schedule: The teacher’s EMA momentum is increased toward 1 over the course of training with a cosine schedule, as in DINO, so the student’s targets change more and more slowly as training converges.

- Weight Averaging:

- Boosting Performance: Averaging weights from different checkpoints during training smooths out the training trajectory and often leads to improved performance.

- DINOv2’s approach: Employs an exponential moving average (EMA) of model weights, effectively accumulating knowledge from past iterations. This results in a more robust final model.

- Feature Normalization:

- Preventing Feature Collapse: In self-supervised learning, a common issue is feature collapse, where the model outputs the same representation for all inputs.

- DINOv2’s strategy: Rather than relying on BatchNorm, DINOv2 replaces DINO’s running-mean centering of the teacher output with Sinkhorn-Knopp centering and adds a KoLeo regularizer, which encourages the features within a batch to spread out evenly. Both help prevent collapse and promote more diverse feature representations. Furthermore, no gradients flow back into the momentum teacher, which helps keep its representations stable.

- Hardware and Software optimizations:

- Optimizing for speed and scale: Beyond algorithmic improvements, DINOv2 utilizes several hardware and software optimizations to facilitate large-scale training:

- Mixed precision training (FP16): Reduces memory footprint and speeds up training.

- Efficient data loading: Optimizes data pipeline for minimal latency.

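- Custom kernels and memory-saving tricks: DINOv2 relies on its own FlashAttention implementation and on sequence packing, in which the large global crops and small local crops are concatenated into one sequence with a block-diagonal attention mask so they can be processed in a single forward pass. It also uses an efficient form of stochastic depth that skips the computation of dropped residual branches instead of masking their outputs.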

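Two of the ideas in the list above, clipping gradients by their global norm and guarding against feature collapse, are easy to sketch without a deep learning framework. The NumPy code below is illustrative only: clip_grad_norm mirrors the behavior of PyTorch’s torch.nn.utils.clip_grad_norm_, while embedding_spread is a hypothetical diagnostic, not something the DINOv2 paper describes. The max_norm value is your own choice (DINO’s default was 3.0).

```python
import numpy as np


def clip_grad_norm(grads, max_norm):
    """Scale all gradients together so their global L2 norm is <= max_norm.

    grads: list of float NumPy arrays, one per parameter tensor; they are
    modified in place. Returns the global norm measured before clipping.
    """
    total_norm = np.sqrt(sum(float((g ** 2).sum()) for g in grads))
    if total_norm > max_norm:
        scale = max_norm / (total_norm + 1e-6)
        for g in grads:
            g *= scale  # same direction, smaller magnitude
    return total_norm


def embedding_spread(features):
    """Mean per-dimension std of L2-normalized features across a batch.

    A value near 0 means every input maps to (almost) the same vector,
    i.e. the representation has collapsed.
    """
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    return float(z.std(axis=0).mean())


grads = [np.full(3, 3.0), np.full(4, 4.0)]
norm_before = clip_grad_norm(grads, max_norm=1.0)  # sqrt(91), about 9.54
```

Clipping rescales every gradient by the same factor, so the update direction is preserved and only its size is capped.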

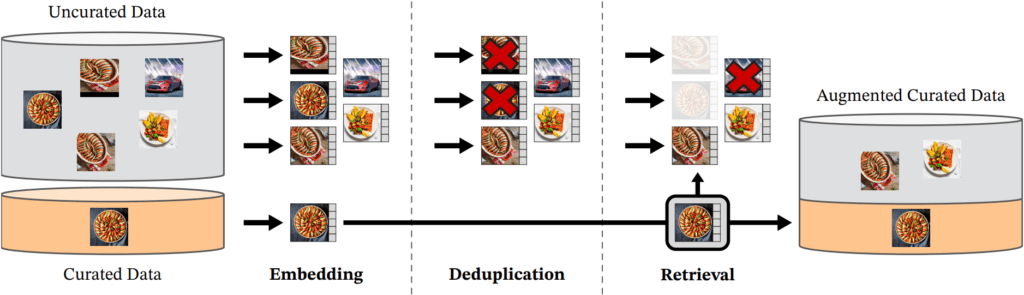

Data Pre-processing in DINOv2

DINOv2’s success hinges significantly on the quality and scale of its training data. The authors curated a massive dataset of 142 million images, emphasizing diversity and real-world representation. This dataset was assembled from various sources, including publicly available datasets and web-crawled images.

Key aspects of their data handling include:

- Scale: The sheer size of the dataset (142 million images) allows the model to learn more robust and generalizable features.

- Diversity: The dataset encompasses a wide variety of scenes, objects, and viewpoints, crucial for learning representations applicable across different tasks.

- Cleaning: The authors employed rigorous filtering techniques to remove low-quality images, duplicates, and those with potentially sensitive content. This ensures the integrity and reliability of the training data.

- Pre-processing: During most of pretraining, images are cropped to 224×224 pixels, and the resolution is raised to 518×518 for a short period at the end of training. Pixel values are normalized with the standard ImageNet statistics (mean and standard deviation). As in DINO, each image yields two large global crops and several smaller local crops, and augmentations such as random cropping, flipping, and color jittering further enhance the model’s robustness to variations in the input data.
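The normalization arithmetic and the simplest augmentations from the pre-processing step can be sketched in NumPy. This is a hypothetical, simplified stand-in for a torchvision pipeline: it uses a fixed-size random crop instead of a random resized crop and omits color jittering, blur, and multi-crop.

```python
import numpy as np

# Standard ImageNet channel statistics (RGB, on the [0, 1] scale)
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])


def augment_and_normalize(image, crop_size, rng):
    """Random crop + random horizontal flip + ImageNet normalization.

    image: uint8 array of shape (H, W, 3) with H, W >= crop_size.
    Returns a float32 array of shape (3, crop_size, crop_size), i.e. the
    channels-first layout PyTorch models expect.
    """
    h, w, _ = image.shape
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    crop = image[top:top + crop_size, left:left + crop_size]
    if rng.random() < 0.5:
        crop = crop[:, ::-1]                  # flip along the width axis
    x = crop.astype(np.float32) / 255.0       # scale pixels to [0, 1]
    x = (x - IMAGENET_MEAN) / IMAGENET_STD    # per-channel standardization
    return x.transpose(2, 0, 1).astype(np.float32)


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(256, 300, 3), dtype=np.uint8)
out = augment_and_normalize(img, 224, rng)
```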

The emphasis on data quality, diversity, and scale, combined with thoughtful pre-processing techniques, contributes significantly to DINOv2’s exceptional performance and its ability to learn generic visual features applicable to a wide range of downstream tasks. The careful curation and preparation of the training data highlight the importance of data in self-supervised learning for computer vision.
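One piece of this curation, removing near-duplicate images, can be illustrated with image embeddings and cosine similarity. The sketch below is a simplified, hypothetical version: the greedy strategy and the 0.95 threshold are assumptions, and the DINOv2 authors’ actual pipeline relies on a dedicated copy-detection model and large-scale similarity search rather than this quadratic loop.

```python
import numpy as np


def deduplicate(embeddings, threshold=0.95):
    """Greedy near-duplicate removal by cosine similarity.

    embeddings: (N, D) array, one row per image. An image is kept only if
    its cosine similarity to every image kept so far is below `threshold`.
    Returns the indices of the kept images. O(N^2): fine for a demo, but
    web-scale data needs an approximate nearest-neighbor index.
    """
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    kept = []
    for i in range(len(z)):
        if not kept or np.max(z[kept] @ z[i]) < threshold:
            kept.append(i)
    return kept


emb = np.array([[1.0, 0.0], [0.99, 0.01], [0.0, 1.0]])
kept = deduplicate(emb)  # image 1 is almost identical to image 0
```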

Code Example

While training DINOv2 from scratch demands substantial computational resources, leveraging its pre-trained weights for feature extraction is readily accessible. Here’s an extended code example demonstrating how to extract features using PyTorch and the timm library:

Import Necessary Libraries

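Disable fused attention before creating the model: fused kernels compute attention in a single operation, so there is no explicit softmax output for the attention extractor used later to capture, and extraction fails with errors. After importing the necessary libraries, we load the pre-trained DINOv2 model and define the image preprocessing steps.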

```python
import torch
import torch.nn as nn
import timm
from PIL import Image
import numpy as np
import matplotlib.pyplot as plt
from timm.utils import AttentionExtract
from torchvision import transforms
from timm.data import create_transform
from typing import Dict
from torch.nn import functional as F

# Disable fused attention *before* creating the model so that each block
# computes an explicit softmax that AttentionExtract can capture.
timm.layers.set_fused_attn(False)

# Load the pre-trained DINOv2 model (ViT-S/14 trained on LVD-142M)
model = timm.create_model('vit_small_patch14_dinov2.lvd142m', pretrained=True)
model.eval()

# Load the image from disk (replace the path with your own file)
image = Image.open("your_input_image_path").convert("RGB")

# Define the transformation: 518 px matches this model's default input size
transform = transforms.Compose([
    transforms.Resize(518),
    transforms.CenterCrop(518),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])

# Apply the transformation and add a batch dimension
img_tensor = transform(image).unsqueeze(0)

# Inference only: no gradients needed
with torch.no_grad():
    output = model(img_tensor)  # (batch_size, num_features) shaped tensor
```

Function to Process Image for Attention

The process_image() function:

- Dynamically creates the proper input transforms from the model’s config (pretrained_cfg).

- Preprocesses the input image.

- Extracts multi-layer attention maps using the extractor.

```python
def process_image(
    image: Image.Image,
    model: torch.nn.Module,
    extractor: AttentionExtract,
) -> Dict[str, torch.Tensor]:
    """Preprocess the input image and return its attention maps."""
    # Build the transform that matches the model's pretrained config
    config = model.pretrained_cfg
    transform = create_transform(
        input_size=config['input_size'],        # e.g. (3, 518, 518) for this model
        crop_pct=config['crop_pct'],            # fraction of the image kept by the center crop
        mean=config['mean'],
        std=config['std'],
        interpolation=config['interpolation'],  # resize interpolation used in pretraining
        is_training=False,                      # evaluation transforms: no augmentation
    )

    # Preprocess the image and add a batch dimension
    tensor = transform(image).unsqueeze(0)

    # Extract the attention maps (inference only, so no gradients)
    with torch.no_grad():
        attention_maps = extractor(tensor)
    return attention_maps
```

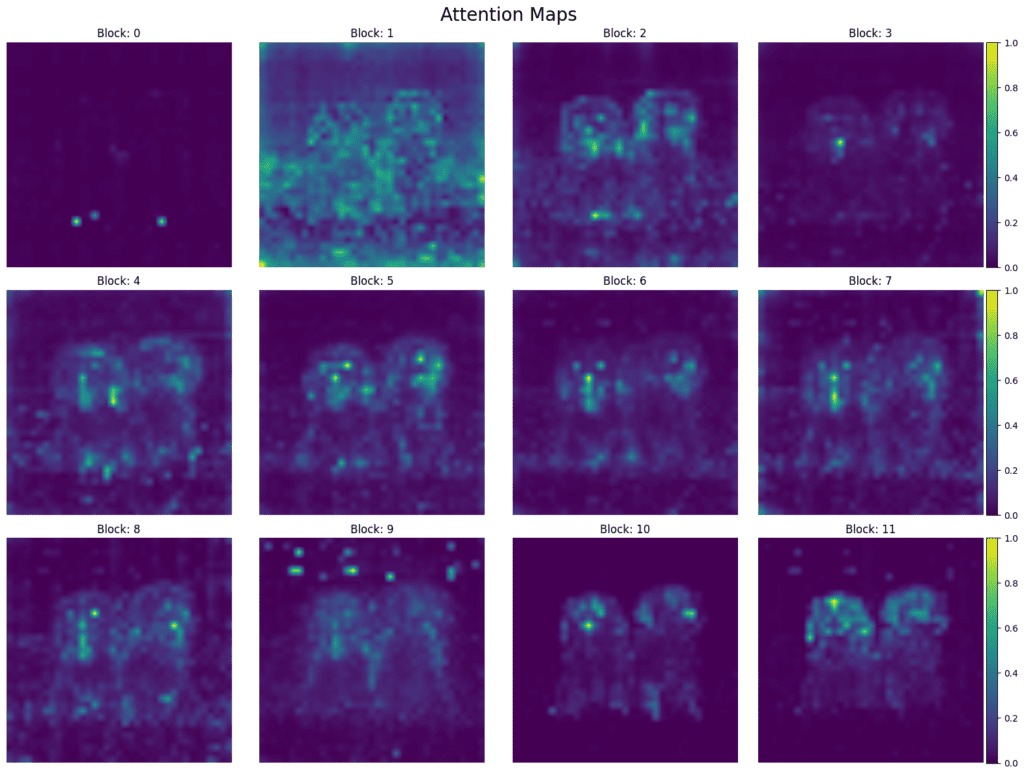

Extract Attention Maps

Pass the image through process_image() to get a dictionary of attention maps from each Transformer block.

```python
extractor = AttentionExtract(model, method='fx')  # captures each block's attention softmax
attention_maps = process_image(image, model, extractor)
print(attention_maps.keys())
# Output:
# dict_keys(['blocks.0.attn.softmax', 'blocks.1.attn.softmax', 'blocks.2.attn.softmax', 'blocks.3.attn.softmax', 'blocks.4.attn.softmax', 'blocks.5.attn.softmax', 'blocks.6.attn.softmax', 'blocks.7.attn.softmax', 'blocks.8.attn.softmax', 'blocks.9.attn.softmax', 'blocks.10.attn.softmax', 'blocks.11.attn.softmax'])
```

Iterate Through Each Block’s Attention Map

For each layer:

- Remove the batch dimension.

- Drop the attention paid to prefix tokens such as [CLS].

- Fuse heads by averaging across them (head_fusion = "mean").

- Keep only the attention from the class token.

- Reshape the attention into a 2D grid.

- Interpolate the attention map to the input image size for better visualization.

- Normalize the attention values to the range [0, 1].

- Display each map on its respective subplot.

```python
from mpl_toolkits.axes_grid1 import make_axes_locatable

num_prefix_tokens = model.num_prefix_tokens  # 1 for this model: the [CLS] token
head_fusion = "mean"                         # how to combine attention heads

fig, axes = plt.subplots(3, 4, figsize=(16, 12))  # 3x4 grid, one cell per block
fig.suptitle("Attention Maps", fontsize=20)
axes = axes.flatten()  # Flatten the 2D array of subplots into a 1D array

for i, (layer_name, attn_map) in enumerate(attention_maps.items()):
    attn_map = attn_map[0]  # Remove batch dim -> [num_heads, num_tokens, num_tokens]
    attn_map = attn_map[:, :, num_prefix_tokens:]  # Drop attention paid to prefix tokens

    if head_fusion == "mean":
        attn_map = attn_map.mean(0)  # Average heads -> [num_tokens, num_patches]
    else:
        raise ValueError(f"Invalid head fusion method: {head_fusion}")

    # Keep the class token's attention (row 0) over all patches
    attn_map = attn_map[0]

    # Reshape to a 2D grid: the patch count is a perfect square
    grid_size = int(attn_map.shape[0] ** 0.5)
    attn_map = attn_map.reshape(grid_size, grid_size)

    attn_map = attn_map.unsqueeze(0).unsqueeze(0)  # -> [1, 1, H, W] for F.interpolate
    # Upsample to the input resolution with bilinear smoothing. Comment out the
    # next line to view the raw, blocky patch-level attention instead.
    attn_map = F.interpolate(attn_map, size=(img_tensor.shape[2], img_tensor.shape[3]),
                             mode='bilinear', align_corners=False)
    attn_map = attn_map.squeeze().detach().cpu().numpy()

    # Normalize the attention map to [0, 1]
    attn_map = (attn_map - attn_map.min()) / (attn_map.max() - attn_map.min())

    ax = axes[i]
    im = ax.imshow(attn_map, cmap='viridis')
    ax.set_title(f'Block: {i}')
    ax.axis('off')

    # Add a colorbar only to the last subplot in each row
    if (i + 1) % 4 == 0:
        divider = make_axes_locatable(ax)
        cax = divider.append_axes("right", size="5%", pad=0.05)
        fig.colorbar(im, cax=cax)

plt.tight_layout()
plt.show()
```
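In the visualization loop, the reshape step assumes that, once the prefix tokens are dropped, the remaining patch tokens form a square grid. The arithmetic behind that assumption is sketched below; vit_token_layout is a hypothetical helper, and a single [CLS] prefix token is assumed, as in the ViT-S/14 model loaded earlier.

```python
def vit_token_layout(image_size, patch_size, num_prefix_tokens=1):
    """Return (grid side, number of patch tokens, total sequence length)
    for a square image split into non-overlapping square patches."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    side = image_size // patch_size   # patches along each edge
    num_patches = side * side         # tokens left after dropping prefix tokens
    return side, num_patches, num_patches + num_prefix_tokens


# ViT-S/14 at 518 px with a single [CLS] prefix token
print(vit_token_layout(518, 14))  # (37, 1369, 1370)
```

DINOv2 variants that add register tokens have more prefix tokens, so it is safer to read the count from the model’s num_prefix_tokens attribute than to hard-code 1.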

Code Explanation:

The code loads a pre-trained DINOv2 ViT-S/14 model from timm, computes a global feature vector for the image, and then visualizes the class token’s attention in every Transformer block, showing which parts of the image the model focuses on at each depth. Install the necessary libraries before running it: pip install torch torchvision timm pillow numpy matplotlib.

DINOv2 vs. DINO: A Comparative Analysis

DINOv2 builds upon the foundation laid by DINO, introducing crucial improvements that significantly enhance its performance and capabilities:

| Feature | DINO | DINOv2 |
|---|---|---|
| Training Data | Smaller (ImageNet-1k, about 1.3M images) | Massively larger (142M curated images, LVD-142M) |
| Self-Supervision | Fully self-supervised: image-level self-distillation | Fully self-supervised: image-level self-distillation plus a masked patch-level (iBOT) objective |
| Training Stability | Momentum teacher with centering and sharpening | Momentum teacher, plus Sinkhorn-Knopp centering and a KoLeo regularizer |
| Efficiency | Lower | Higher due to FlashAttention, sequence packing, and FSDP |
| Performance | Lower | Significantly improved |
| Feature Representations | Less robust | More robust and generalizable |
| Applicability | Limited by training constraints | Broader applicability due to improved training and performance |
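The efficiency row credits FlashAttention. The idea that lets it compute exact attention without storing the full attention matrix is an "online" softmax evaluated over blocks of keys. The NumPy sketch below shows only that arithmetic and checks it against standard attention; the real kernel fuses these steps in fast on-chip GPU memory, so this code is not faster, and the function names are hypothetical.

```python
import numpy as np


def naive_attention(q, k, v):
    """Standard attention: materializes the full (n_q, n_k) score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def tiled_attention(q, k, v, block_size=4):
    """Exact attention computed one key/value block at a time.

    Keeps a running row max `m`, a running softmax denominator `l`, and an
    unnormalized output `acc`, rescaling them whenever a new block raises
    the max. Only an (n_q, block_size) score tile exists at any moment.
    """
    n_q, d = q.shape
    m = np.full((n_q, 1), -np.inf)
    l = np.zeros((n_q, 1))
    acc = np.zeros((n_q, v.shape[-1]))
    for start in range(0, k.shape[0], block_size):
        kb, vb = k[start:start + block_size], v[start:start + block_size]
        s = q @ kb.T / np.sqrt(d)
        m_new = np.maximum(m, s.max(axis=-1, keepdims=True))
        scale = np.exp(m - m_new)   # rescale earlier partial sums (0 on first block)
        p = np.exp(s - m_new)
        l = l * scale + p.sum(axis=-1, keepdims=True)
        acc = acc * scale + p @ vb
        m = m_new
    return acc / l


rng = np.random.default_rng(0)
q, k, v = rng.normal(size=(5, 8)), rng.normal(size=(10, 8)), rng.normal(size=(10, 3))
same = np.allclose(naive_attention(q, k, v), tiled_attention(q, k, v))
```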

Readers who want an in-depth look at the DINO architecture, as well as its implementation for a road segmentation task, can visit our other blog post here.

Unlocking the Potential: Applications of DINOv2

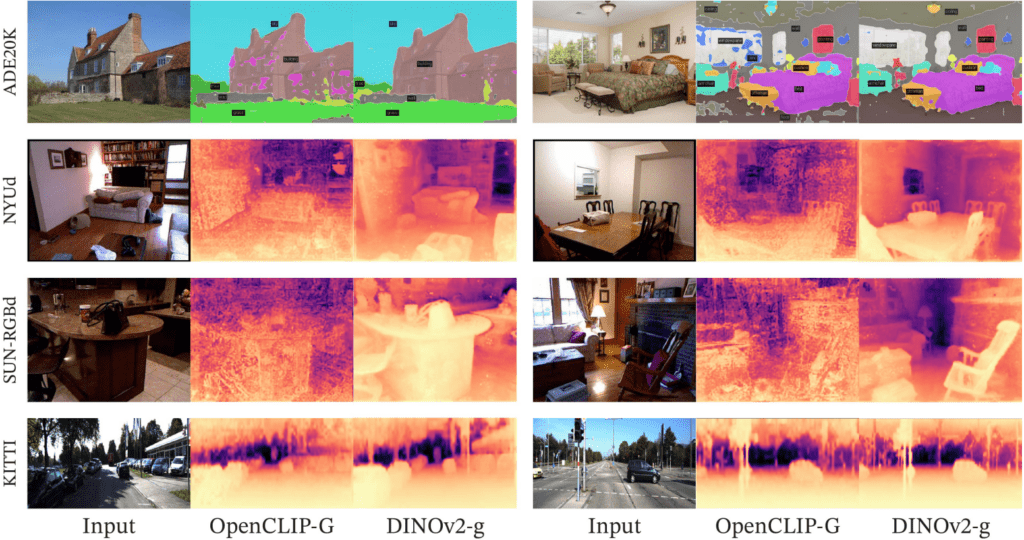

NYUd, SUN RGB-D, and KITTI with a linear probe on frozen OpenCLIP-G and DINOv2-g features

DINOv2’s ability to learn powerful and generic visual representations paves the way for a wide spectrum of applications:

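- Image Classification: Frozen DINOv2 features with only a linear classifier on top achieve strong results on ImageNet and a range of fine-grained classification benchmarks, competitive with or better than weakly supervised models such as OpenCLIP.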

- Object Detection and Segmentation: Enables precise and efficient object detection and segmentation, crucial for applications like autonomous driving and medical image analysis.

- Image Retrieval: Facilitates robust and scalable image retrieval systems, allowing for efficient searching of large image databases.

- Depth Estimation: Demonstrates promising results in monocular depth estimation, inferring depth information from a single image.

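- Few-Shot and Nearest-Neighbor Recognition: DINOv2 has no text encoder, so on its own it cannot perform CLIP-style zero-shot classification. Its generic features, however, let new categories be recognized from a handful of labeled examples (for example, with a k-NN or linear classifier on frozen features), opening doors to novel applications in robotics and image understanding.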

- Self-supervised Segmentation: DINOv2 features can be used to generate high-quality segmentation masks without explicit supervision. This capability simplifies the process of object segmentation and allows for the creation of pixel-level annotations for unlabeled images.
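Image retrieval and nearest-neighbor recognition with frozen DINOv2 features usually come down to comparing embeddings, such as the pooled output returned by the model in the code example, by cosine similarity. Below is a minimal NumPy sketch, assuming the gallery embeddings were computed beforehand; cosine_top_k is a hypothetical helper, and a large gallery would call for an approximate nearest-neighbor index instead of brute-force search.

```python
import numpy as np


def cosine_top_k(query, gallery, k=3):
    """Return indices and scores of the k gallery rows most similar to query.

    query: (D,) embedding; gallery: (N, D) embeddings computed offline.
    Both are L2-normalized so that a dot product equals cosine similarity.
    """
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    scores = g @ q
    top = np.argsort(-scores)[:k]  # highest similarity first
    return top, scores[top]


gallery = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
idx, sims = cosine_top_k(np.array([1.0, 0.1]), gallery, k=2)
```

For k-NN classification, the labels of the returned neighbors can be combined by a (possibly similarity-weighted) vote.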

Conclusion

DINOv2 signifies a major advancement in self-supervised learning for computer vision. Its ability to learn powerful visual representations from vast unlabeled data, combined with improved efficiency, establishes it as a key model for diverse applications. DINOv2’s accessibility through libraries like timm empowers widespread innovation in the field. The future of computer vision is intrinsically linked to self-supervised learning, and DINOv2 exemplifies this transformative power.