ColPali multimodal RAG offers a novel approach for efficient retrieval of elements such as images, tables, charts, and text by treating each page as an image. This method takes advantage of Vision Language Models (VLMs) to understand intricate details in complex documents like financial reports, legal contracts, and technical documents.

In documents like these, the accuracy of facts and figures is paramount, as they can directly influence the decisions of investors and stakeholders. Traditional retrieval systems may struggle with these elements, which makes ColPali a strong contender for a production-ready retrieval solution.

We will explore and test this through a demanding industrial use case by building a multimodal RAG application with ColPali and Gemini on finance reports. Specifically, we will examine how to analyze a 10-Q quarterly report, a critical financial document filed by companies with the U.S. Securities and Exchange Commission (SEC).

This article primarily discusses:

- Challenges in Processing Unstructured Elements

- Why is ColPali needed?

- Composition of ColPali and how does it work for Multimodal RAG?

- Building a financial report analysis application using ColPali and Gemini

- ViDoRe Benchmark

Individuals and companies looking to enhance their document analysis capabilities with RAG will find this read particularly useful. As the GenAI space evolves rapidly, for firms seeking reliable solutions over mere hype, ColPali is definitely worth exploring.

This is the fourth article in our series of blogs on LLMs and RAG.

- Deciphering LLMs: From Transformers to Quantization

- Fine Tuning LLMs with PEFT

- RAG with LLMs

- Multimodal RAG with ColPali and Gemini

Challenges in Processing Unstructured Elements

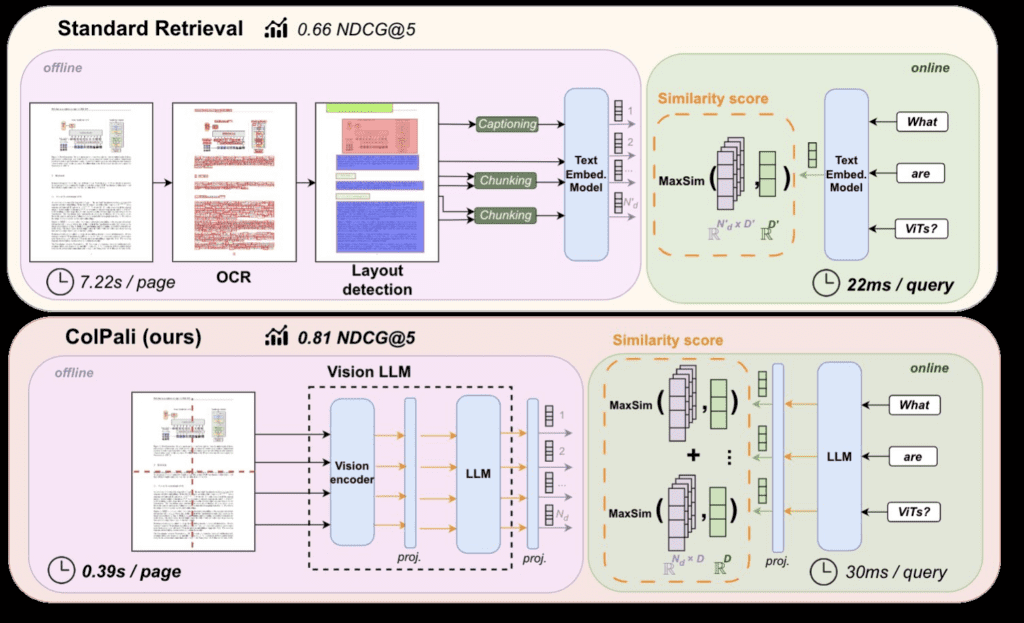

In practical industrial settings, the main performance bottleneck for efficient document retrieval is not in embedding model performance but in the prior data ingestion pipeline.

– ColPali Paper 2024

Consider the case where you need to index a PDF of a financial report containing unstructured elements such as tables, images, graphs, and charts for multimodal Retrieval Augmented Generation. Unlike structured text, these elements require modern retrieval systems to run several steps to ensure high-quality retrieval. OCR models extract the text, and layout detectors like YOLOX segment the document into element types such as tables, charts, and figures. The result is a raw list of elements: narrative text, tables, titles, figure captions, images, headers, footers, graphs, and so on. Tools like Unstructured.io use models such as tesseract-ocr, microsoft/table-transformer-structure-recognition (TATR), and YOLOX internally for table and layout detection.

Using a multimodal LLM like Gemini, text summaries or figure captions of the images and tables are generated, and their embeddings are stored in vector DBs such as Qdrant, Weaviate, or Pinecone.

During the retrieval stage, a dense embedding with a fixed output dimension is used to encode the user query, and results are retrieved based on nearest-neighbor distance or cosine similarity. However, the quality of the retrieval can be inconsistent and often requires a lot of manual inspection. Curating document chunks with a suitable chunk size and chunk overlap also plays a crucial role in extracting coherent information about each element.
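To make the chunk size and chunk overlap trade-off concrete, here is a minimal sketch of a sliding-window chunker. The helper name `chunk_text` and the word-based splitting are illustrative assumptions, not part of Unstructured or any other tool mentioned here; real pipelines usually chunk by tokens or by layout element.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word windows of `chunk_size` that share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the current window already reached the end of the text
    return chunks


# Hypothetical 12-word sample, just to show how neighbouring chunks overlap.
sample = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_text(sample, chunk_size=5, overlap=2):
    print(chunk)
```

A larger overlap keeps more shared context between neighbouring chunks at the cost of more chunks to embed and store, which is one reason this step tends to need manual tuning.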

The shortcomings of current text-centric systems include:

- Complex data extraction and ingestion pipeline with OCR, chart and layout detection.

- High latency and the need for extensive manual data curation in pre- and post-processing.

- Often poor retrieval results due to loss of context and lack of interaction between elements on a page, as each element type is extracted and indexed separately.

You can access the experimental notebook in which we tried a workflow similar to the one above, using the Unstructured tool and Gemini, by clicking the “Download Code” button.

In our experiments, we got average to poor results, particularly when retrieving table elements, even when searching with the exact keywords from table titles. Retrieval quality can likely be improved, particularly by preparing better table and image summaries before ingestion. However, this requires further experimentation.

Why is ColPali needed?

Instead of trying to transform human documents into something LLMs understand, we should make LLMs understand documents the way humans do.

– Source: Langchain community

As humans, we are naturally visual creatures, often interacting with our environment through visual cues and interpreting information more effectively through images, tables, and diagrams. There’s a saying, “A picture is worth a thousand words” and in the context of Vision Language Models (VLMs), a picture can indeed be worth thousands of words in terms of the data and insights it can convey.

Documents are visually rich structures that convey information through text, as well as tables, figures, page layouts, or fonts.

– ColPali Paper 2024

In a similar fashion, ColPali enables VLMs like PaliGemma 3B to process this rich information as more than just text. Rather than breaking documents down into isolated components, ColPali lets the model interpret the entire page as an image, maintaining the underlying context and keeping the layout intact. As a result, the paper reports that ColPali outperforms existing state-of-the-art PDF retrieval pipelines and is end-to-end trainable, which makes it stand out in the RAG and search engine arena.

Composition of ColPali

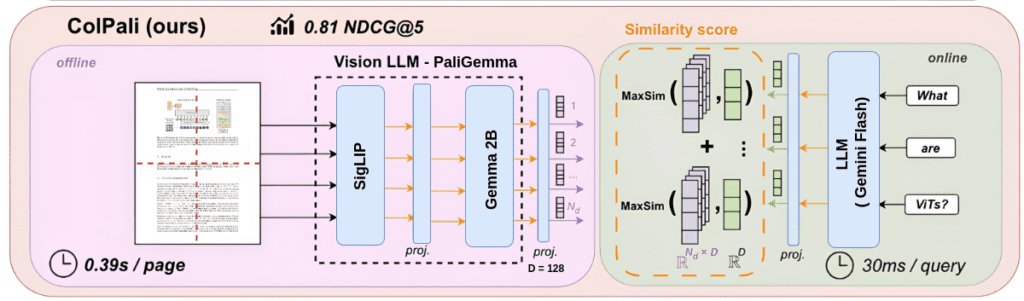

ColPali, developed by Manuel Faysse et al., combines ColBERT’s late interaction mechanism with PaliGemma, a vision LLM, to efficiently retrieve elements from a document by treating each page as an image.

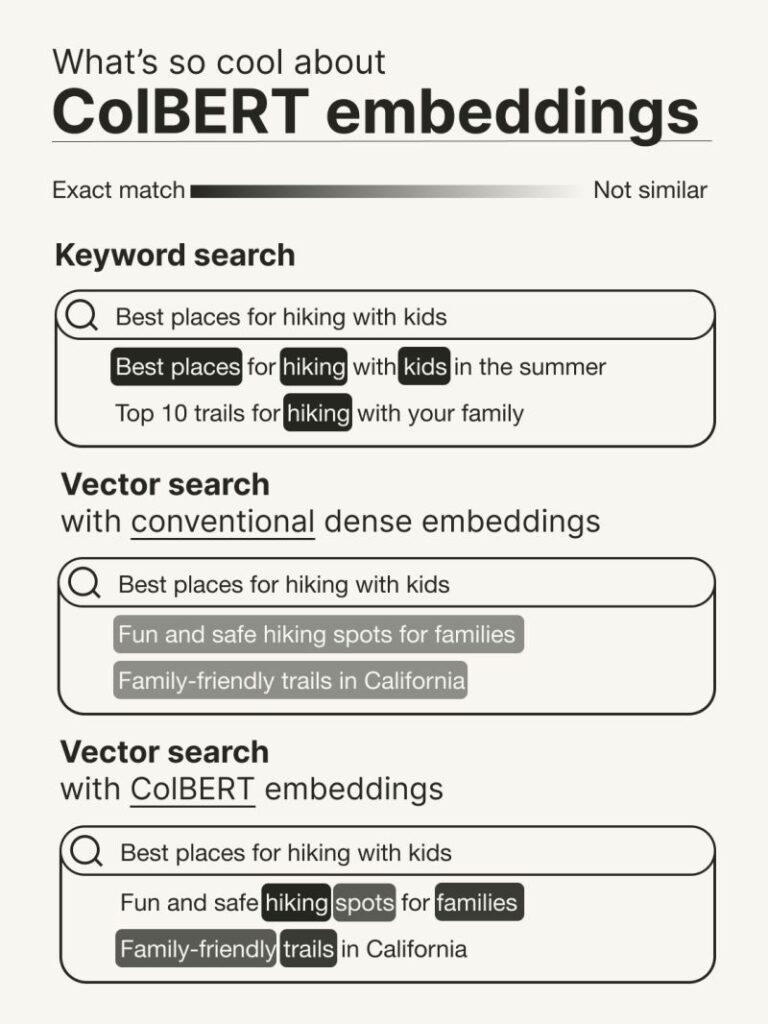

ColBERT

But what is ColBERT? ColBERT is a ranking model based on contextualized late interaction over BERT. It differs from traditional models, in which a query is compressed into a single vector, potentially losing semantically rich information. In ColBERT, every token in the query interacts with all the document embeddings through late interaction, preserving the granular details of the query.

Source: Victoria Slocum at Weaviate

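Mathematically, the late interaction operator $LI(q, d)$ sums, over all query vectors $E_q^{(i)}$, the maximum dot product with the document embedding vectors $E_d^{(j)}$:

$$LI(q, d) = \sum_{i \in [1, N_q]} \max_{j \in [1, N_d]} \langle E_q^{(i)} \mid E_d^{(j)} \rangle$$

where $N_q$ and $N_d$ are the number of query and document vectors, respectively.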
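The late interaction operator can be sketched in a few lines of plain Python. This is only an illustration on made-up 2-D vectors; the function names are hypothetical, and real implementations compute the same sum of maxima with batched tensor operations.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def late_interaction_score(query_vecs: List[Vector], doc_vecs: List[Vector]) -> float:
    """LI(q, d): for each query vector take its best-matching document vector, then sum."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


# Toy 2-D embeddings: two query tokens, two pages with three patch vectors each.
query = [[1.0, 0.0], [0.0, 1.0]]
page_a = [[0.9, 0.1], [0.2, 0.8], [0.0, 0.0]]
page_b = [[0.5, 0.5], [0.4, 0.1], [0.1, 0.3]]

scores = {
    "page_a": late_interaction_score(query, page_a),
    "page_b": late_interaction_score(query, page_b),
}
best_page = max(scores, key=scores.get)
print(scores, best_page)
```

Here `page_a` wins because each query token finds one patch that matches it closely, even though no single patch matches the whole query.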

ColBERT achieves a high Mean Reciprocal Rank (MRR@10) in retrieval, comparable to highly performant BERT-based models, but with reduced computation cost and lower latency. ColBERT-based retrieval can be made even more efficient by first using a single embedding to retrieve a set of candidate chunks (fast lookup) and then applying late interaction only to those candidates (rerank), effectively reducing the cost.

Source: Leonie Monigatti
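The fast lookup plus rerank idea can be sketched as follows. This is a toy illustration under stated assumptions: mean pooling is used as the single-vector representation, and all names (`mean_pool`, `two_stage_search`, the documents) are hypothetical rather than taken from ColBERT or any vector DB.

```python
from typing import Dict, List

Matrix = List[List[float]]  # one row per token/patch embedding


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def mean_pool(vecs: Matrix) -> List[float]:
    """Collapse a bag of embeddings into a single vector (assumed pooling choice)."""
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def maxsim(query: Matrix, doc: Matrix) -> float:
    """Late-interaction score: sum over query vectors of the best document match."""
    return sum(max(dot(q, d) for d in doc) for q in query)


def two_stage_search(query: Matrix, docs: Dict[str, Matrix], n_candidates: int = 2) -> List[str]:
    # Stage 1 (fast lookup): rank every document by one pooled-vector dot product.
    pooled_q = mean_pool(query)
    coarse = sorted(docs, key=lambda name: dot(pooled_q, mean_pool(docs[name])), reverse=True)
    candidates = coarse[:n_candidates]
    # Stage 2 (rerank): run the costlier late interaction only on the shortlist.
    return sorted(candidates, key=lambda name: maxsim(query, docs[name]), reverse=True)


docs = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0]],
    "doc_b": [[0.6, 0.6], [0.6, 0.6]],
    "doc_c": [[-1.0, 0.0], [0.0, -1.0]],
}
query = [[1.0, 0.0], [0.0, 1.0]]
print(two_stage_search(query, docs))
```

In this toy data the pooled lookup ranks `doc_b` first, while the rerank promotes `doc_a`, whose individual vectors match the query tokens better. In a real system the pooled document vectors would be precomputed and stored in an approximate nearest-neighbor index.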

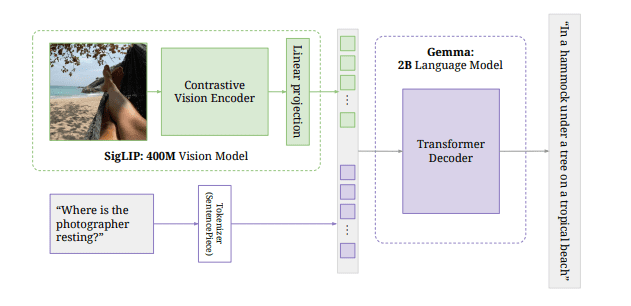

PaliGemma

On the other hand, PaliGemma is a VLM composed of a 400M-parameter SigLIP vision encoder with Gemma 2B as the language model.

Note: SigLIP is a successor to CLIP that uses a pairwise sigmoid loss instead of the softmax-based contrastive loss. To learn more about training a CLIP-like model from scratch, bookmark our article for a later read.

PaliGemma is a lightweight VLM with robust performance across a wide range of tasks like short video captioning, visual question answering, text reading, object detection, and object segmentation. PaliGemma is a single-turn VLM and is not suited for conversation.

How does ColPali Work?

The ColPali pipeline can be broken into two main phases:

1. Offline Indexing

This is a one-time, computationally intensive process in which the documents are preprocessed, encoded, and indexed into a retrievable format. Because it happens before any query arrives, it is referred to as an offline process.

- Each document page is rendered as an image and split into patches, which are flattened and fed into the SigLIP vision encoder. A page ends up as 1030 vectors, each of 128 dimensions after the final projection described below.

- An intermediate projection layer between SigLIP and Gemma 2B projects the image tokens into a shared embedding space.

- These image tokens are then passed into the Gemma 2B decoder to generate contextualized representations of them.

- An additional projection layer maps the output embeddings of the Gemma 2B language model into a lower-dimensional (D = 128) vector space, similar to the approach in the ColBERT paper, to create lightweight bag-of-embedding representations.

- These embeddings are then indexed either locally or in vector DBs that natively support ColBERT-style embeddings, such as Vespa or LintDB.

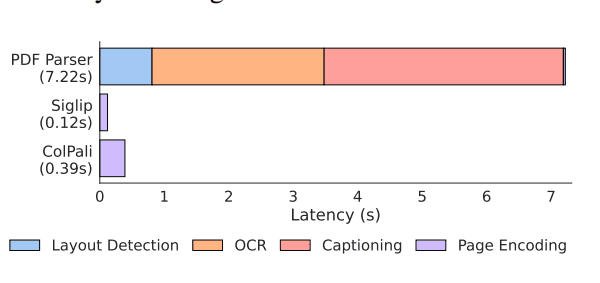

The paper reports that a typical PDF parsing pipeline takes about 7.22 s per page during offline indexing, while ColPali takes just 0.37 s.

2. Online Querying

Querying is an online phase, so inference must be fast and responsive for a good user experience. When a search query is submitted:

- The query is encoded by the language model on the fly.

- A late interaction mechanism similar to the ColBERT ranking model (Khattab and Zaharia, 2020) computes the maximum similarity score between the query embeddings and the pre-indexed document embeddings.

- Finally, ColPali returns the top k most similar results (as images), which can then be fed into any multimodal LLM like Gemini along with the user query to get an interpretable response about the table, text, or figures.

During the online querying phase, a standard retriever with the BGE embedding model takes about 22 ms to encode 15 tokens, while ColPali is slightly slower at around 30 ms per query.

So far, we have covered the nitty-gritty details of ColPali’s internal workings. Using this knowledge, let’s build a RAG application for companies’ 10-Q reports. At a high level, our application workflow looks as follows.

Building a Financial Report Analysis App using ColPali and Gemini

Code Walkthrough

Installing Dependencies:

- colpali-engine and mteb – for running the ColPali engine and benchmarking with MTEB.

- transformers – to load and work with pre-trained transformer models.

- bitsandbytes – enables efficient loading of models with 8-bit and 4-bit quantization.

- einops – for flexible tensor manipulation, to simplify operations on complex data structures.

- google-generativeai – provides access to Gemini models via the API from Google AI Studio.

- pdf2image – to convert PDF pages into a list of images.

!pip install colpali-engine==0.2.2 -q

!pip install -U bitsandbytes -q

!pip install mteb transformers tqdm typer seaborn -q

!pip install pdf2image einops google-generativeai gradio -q

We will need to also install poppler-utils, an essential package for manipulating PDF files and converting them to other formats.

# Run in terminal

sudo apt install poppler-utils

Import Dependencies

Next, we will import the necessary dependencies:

- AutoProcessor: from the transformers library, used to automatically load the processor that preprocesses data for the model.

- ColPali: from the paligemma_colbert_architecture module; it combines the PaliGemma VLM with ColBERT’s late interaction mechanism.

- CustomEvaluator: from colpali_engine; it scores queries against pages and helps evaluate retrieval performance.

- process_images, process_queries: utility functions that transform images and queries into the inputs expected by the ColPali model.

import os

from typing import List, Tuple

import gradio as gr

import torch

from pdf2image import convert_from_path

from PIL import Image

from torch.utils.data import DataLoader

from tqdm import tqdm

from transformers import AutoProcessor, BitsAndBytesConfig

from colpali_engine.interpretability.vit_configs import VIT_CONFIG

from colpali_engine.models.paligemma_colbert_architecture import ColPali

from colpali_engine.trainer.retrieval_evaluator import CustomEvaluator

from colpali_engine.utils.colpali_processing_utils import (

process_images,

process_queries,

)

Load Model from Hugging Face 🤗

We will set our Hugging Face access token as an environment variable to access the PaliGemma model, as it is a gated model. For this we will use google/paligemma-3b-mix-448, trained on a mixture of downstream tasks like segmentation, OCR, VQA, captioning, etc.

Let’s load the fine-tuned LoRA adapter on top of PaliGemma-3B. In bfloat16 precision the model occupies around 6 GB of vRAM; the bitsandbytes config below additionally loads the weights in 4-bit NF4 to reduce memory use.

model_name = "vidore/colpali" # specify the adapter model name

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

# bnb_4bit_compute_dtype=torch.bfloat16

)

retrieval_model = ColPali.from_pretrained(

"google/paligemma-3b-mix-448",

torch_dtype=torch.float16, # dtype for the non-quantized layers

device_map="cuda", # place the model on the GPU

quantization_config=bnb_config,

).eval() # switch to inference mode

vit_config = VIT_CONFIG["google/paligemma-3b-mix-448"]

retrieval_model.load_adapter(model_name)

paligemma_processor = AutoProcessor.from_pretrained(model_name)

device = retrieval_model.device

Let’s download some quarterly financial PDF files from the official websites of U.S. tech firms, which we will use later.

DATA_FOLDER = "data" # must match the output paths used by wget below

os.makedirs(DATA_FOLDER,exist_ok = True)

!wget -nc https://www.apple.com/newsroom/pdfs/fy2024-q1/FY24_Q1_Consolidated_Financial_Statements.pdf -O "data/apple-2024.pdf" -q

!wget -nc https://abc.xyz/assets/9c/12/c198d05b4f7aba1e9487ba1c8b79/goog-10-q-q1-2024.pdf -O "data/google-alphabet-2024.pdf" -q

!wget -nc https://digitalassets.tesla.com/tesla-contents/image/upload/IR/TSLA-Q4-2023-Update.pdf -O "data/tesla-2023-q4.pdf" -q

!wget -nc https://s201.q4cdn.com/141608511/files/doc_financials/2024/q1/ecefb2b2-efcb-45f3-b72b-212d90fcd873.pdf -O "data/nvidia-2024-q1.pdf" -q

1. Offline Indexing

The following index() function handles offline indexing: it takes a set of PDF files, converts their pages into a list of images with pdf2image.convert_from_path, and encodes those images into embeddings.

To process large sets of page images efficiently, we feed them to the model through a plain PyTorch DataLoader. The snippet below uses a batch size of 1, while the Gradio version later uses 4; larger batches improve throughput at the cost of more GPU memory.

To produce input compatible with the ColPali vision model (SigLIP), we use the ColPali AutoProcessor to resize images to 448×448, normalize them, and convert them into tensors. ColPali then encodes the image patches into embeddings, which are unbound into one tensor per page and offloaded to the CPU. The model runs in inference mode without gradient computation (torch.no_grad()). Including model loading, the indexing phase demands 10.5 GB of vRAM in Colab.

# Function to index the PDF documents (get the embeddings of each page)
def index(files: List[str]) -> Tuple[List[torch.Tensor], List[Image.Image]]:
    images = []
    document_embeddings = []

    # Convert PDF pages to images
    for file in files:
        print(f"Indexing now: {file}")
        images.extend(convert_from_path(file))

    # Create DataLoader for image batches
    dataloader = DataLoader(
        images,
        batch_size=1,
        shuffle=False,
        collate_fn=lambda x: process_images(paligemma_processor, x),
    )

    # Process each batch and obtain one embedding tensor per page
    for batch in dataloader:
        with torch.no_grad():
            batch = {key: value.to(device) for key, value in batch.items()}
            embeddings = retrieval_model(**batch)
        document_embeddings.extend(list(torch.unbind(embeddings.to("cpu"))))

    # Report how much CPU memory the embeddings occupy
    total_memory = sum(
        embedding.element_size() * embedding.nelement()
        for embedding in document_embeddings
    )
    print(f"Total Embedding Memory (CPU): {total_memory / 1024 ** 2} MB")

    # Rough size of the raw page images (3 bytes per pixel for RGB)
    total_image_memory = sum(image.width * image.height * 3 for image in images)
    print(f"Total Image Memory: {total_image_memory / (1024 ** 2)} MB")

    # Return document embeddings and page images
    return document_embeddings, images

pdf_files = [os.path.join(DATA_FOLDER, file) for file in os.listdir(DATA_FOLDER) if file.lower().endswith('.pdf')]

document_embeddings, images = index(pdf_files)

2. Online Querying

In the online querying phase, search queries are prepared with process_queries() and encoded by the language model (Gemma 2B) into embeddings. As in the indexing phase, the resulting embeddings are unbound and stored in a list of query embeddings.

process_queries() takes the paligemma_processor object, the query, and a dummy 448×448 image of white pixels as a placeholder. VLMs like PaliGemma are designed and trained to take both a query and an image simultaneously. Even though we are only interested in passing a text query, a blank placeholder image is passed along to meet the model’s input structure, ensuring compatibility and preventing errors during inference.

To find the most relevant document page, the query embeddings and the pre-computed document embeddings are compared using a CustomEvaluator.

In the CustomEvaluator() class, the is_multi_vector=True parameter indicates that each query and page is represented by multiple vectors, i.e. ColBERT-style embeddings scored with the late interaction mechanism.

retrieve_top_document() then returns the page image with the maximum score, together with its index in the images list.

def retrieve_top_document(
    query: str,
    document_embeddings: List[torch.Tensor],
    document_images: List[Image.Image],
) -> Tuple[Image.Image, int]:
    # Create a blank placeholder image (the processor expects an image input)
    placeholder_image = Image.new("RGB", (448, 448), (255, 255, 255))

    with torch.no_grad():
        # Process the query to obtain embeddings
        query_batch = process_queries(paligemma_processor, [query], placeholder_image)
        query_batch = {key: value.to(device) for key, value in query_batch.items()}
        query_embeddings_tensor = retrieval_model(**query_batch)
        query_embeddings = list(torch.unbind(query_embeddings_tensor.to("cpu")))

    # Score the query against every page with late interaction
    evaluator = CustomEvaluator(is_multi_vector=True)
    similarity_scores = evaluator.evaluate(query_embeddings, document_embeddings)

    # Identify the index of the highest scoring page
    best_index = int(similarity_scores.argmax(axis=1).item())

    # Return the best matching page image and its index
    return document_images[best_index], best_index
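The function above keeps only the single best page. If you want to pass several pages to the LLM, the per-page scores can be ranked instead. The sketch below assumes the scores for one query are available as a flat list of floats (for example, the first row of the similarity scores converted to a list); the helper name `top_k_pages` and the score values are made up for illustration.

```python
import heapq
from typing import List, Tuple


def top_k_pages(scores: List[float], k: int = 3) -> List[Tuple[int, float]]:
    """Return (page_index, score) pairs for the k highest-scoring pages, best first."""
    return heapq.nlargest(k, enumerate(scores), key=lambda pair: pair[1])


page_scores = [11.2, 17.9, 9.4, 16.3, 12.0]  # made-up late-interaction scores
for page_index, score in top_k_pages(page_scores, k=3):
    print(page_index, score)
```

Keep in mind that every extra page sent to the multimodal LLM adds image tokens and API cost, so a small k is usually a sensible starting point.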

Configuring Gemini LLM API

To interpret complex financial tables and image elements like these, we will use one of the most versatile multimodal models, Gemini Flash from Google AI Studio. By signing in, developers get 1500 API calls per day for Gemini Flash and Gemini 1.0 Pro. We also get access to text embedding models, which would be part of a typical RAG pipeline.

We will set configs like temperature, top_p, top_k, and the maximum number of generated tokens. You can adjust these configurations according to the needs and nature of the task.

import google.generativeai as genai

generation_config = {

"temperature": 0.0,

"top_p": 0.95,

"top_k": 64,

"max_output_tokens": 1024,

"response_mime_type": "text/plain",

}

genai.configure(api_key=gemini_api_key) # gemini_api_key: your Google AI Studio API key, defined beforehand

model = genai.GenerativeModel(model_name="gemini-1.5-flash" , generation_config=generation_config)

model

Now, everything is set to use Gemini-Flash via API

genai.GenerativeModel(

model_name='models/gemini-1.5-flash',

generation_config={'temperature': 0.0, 'top_p': 0.95, 'top_k': 64,

'max_output_tokens': 1024, 'response_mime_type': 'text/plain'},

safety_settings={}, tools=None, system_instruction=None,

cached_content=None

)

Perform Multimodal RAG with ColPali

The get_answer( ) function takes in a prompt and the best image retrieved by ColPali, which is then passed to Gemini Flash to generate a response.

def get_answer(prompt: str, image: Image.Image):
    # Send the prompt and the retrieved page image to Gemini in a single request
    response = model.generate_content([prompt, image])
    return response.text

Finally, we define a utility function that calls retrieve_top_document() to get the best image and its corresponding index in the images list, and then asks Gemini to interpret it.

def answer_query(query: str, prompt: str):
    # Retrieve the most relevant page based on the query
    best_image, best_index = retrieve_top_document(
        query=query,
        document_embeddings=document_embeddings,
        document_images=images,
    )
    # Generate an answer from the retrieved page with Gemini 1.5 Flash
    # (alternative: answer = phi_vision(prompt, best_image))
    answer = f"Gemini Response\n: {get_answer(prompt, best_image)}"
    return answer, best_image, best_index

Let’s put it all together. We will provide a prompt to Gemini Flash for proper interpretation about the best retrieved image. For better analysis and interpretation, we will need to set detailed instructions in the prompt.

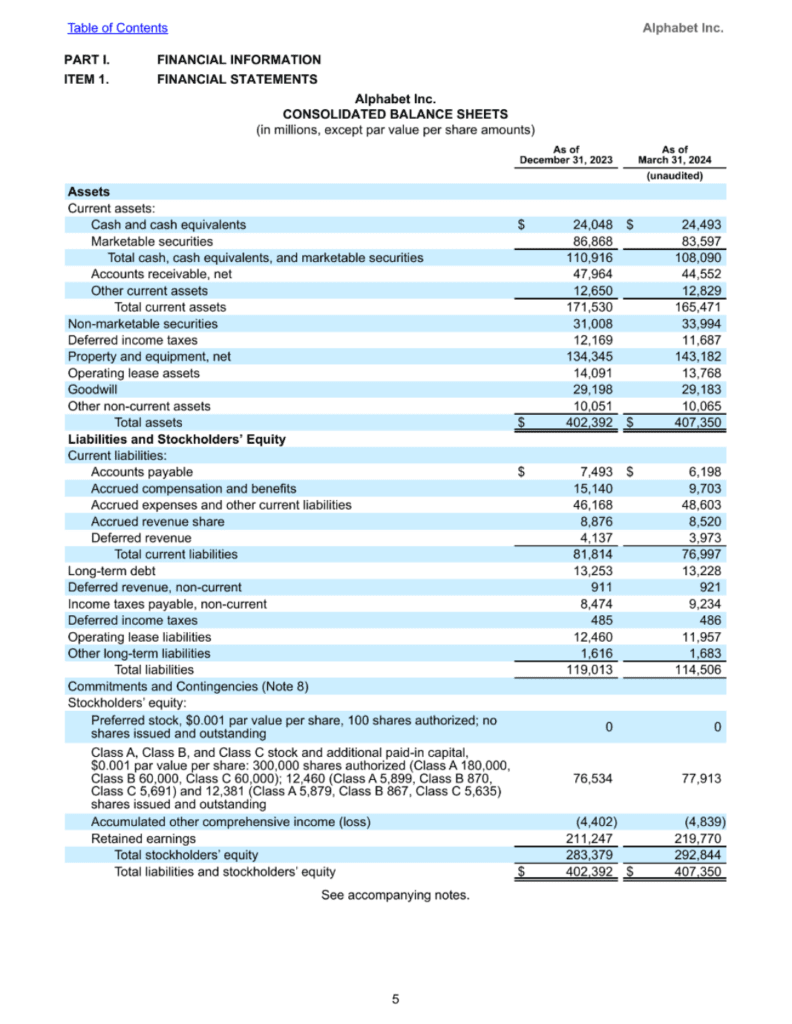

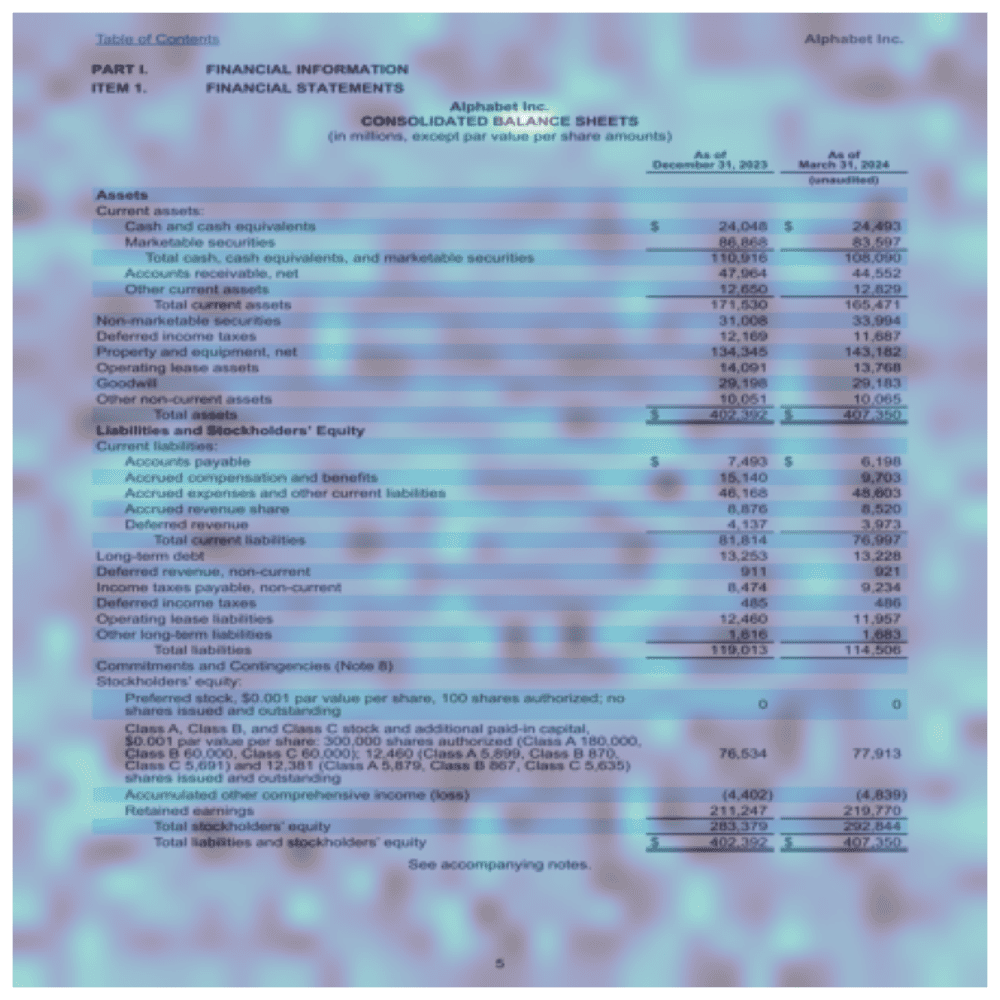

search_query = "Alphabet Inc. Balance Sheets"

prompt = "What is shown in this image, explain it? Format the answer in neat 200 words summary"

answer, best_image,best_index = answer_query(search_query, prompt)

retrieved_idx = best_index

print(answer)

best_image

Most relevant image index is `7`

Gemini Response

The image shows the consolidated balance sheets of Alphabet Inc.

for the periods ending December 31, 2023, and March 31, 2024.

The balance sheet is divided into three sections: assets, liabilities,

and stockholders' equity.The assets section includes current assets,

non-current assets, and total assets. The liabilities section includes

current liabilities, long-term debt, and total liabilities. The

stockholders equity section includes preferred stock, common stock,

accumulated other comprehensive income (loss), retained earnings,

and total stockholders' equity. The balance sheet shows that

Alphabet Inc. had total assets of $402,392 million as of December 31,

2023, and $407,350 million as of March 31, 2024. The company had

total liabilities of $119,013 million as of December 31, 2023, and

$114,506 million as of March 31, 2024. The company had total

stockholders' equity of $283,379 million as of December 31, 2023,

and $292,844 million as of March 31, 2024.

Gradio App

To build a Gradio interface, we reuse indexing and search functions similar to index() and retrieve_top_document() discussed earlier. We add Gradio blocks to upload multiple files and convert them to embeddings. The subsequent steps are as before: by submitting a search query, we obtain a descriptive summary of the retrieved image from Gemini.

# Load model

import getpass

model_name = "vidore/colpali"

hf_token = getpass.getpass("Enter HF access token: ")

os.environ["HF_TOKEN"] = hf_token

model = ColPali.from_pretrained(

"google/paligemma-3b-mix-448",

torch_dtype=torch.bfloat16,

device_map="cuda",

token=hf_token,

).eval()

model.load_adapter(model_name)

processor = AutoProcessor.from_pretrained(model_name, token=hf_token)

device = model.device

def index(file, ds):

images = []

for f in file:

images.extend(convert_from_path(f))

# run inference - docs

dataloader = DataLoader(

images,

batch_size=4,

shuffle=False,

collate_fn=lambda x: process_images(processor, x),

)

for batch_doc in tqdm(dataloader):

with torch.no_grad():

batch_doc = {k: v.to(device) for k, v in batch_doc.items()}

embeddings_doc = model(**batch_doc)

ds.extend(list(torch.unbind(embeddings_doc.to("cpu"))))

return f"Uploaded and converted {len(images)} pages", ds, images

def search(query: str, ds, images):

qs = []

with torch.no_grad():

batch_query = process_queries(processor, [query], mock_image)

batch_query = {k: v.to(device) for k, v in batch_query.items()}

embeddings_query = model(**batch_query)

qs.extend(list(torch.unbind(embeddings_query.to("cpu"))))

# run evaluation

retriever_evaluator = CustomEvaluator(is_multi_vector=True)

scores = retriever_evaluator.evaluate(qs, ds)

best_page = int(scores.argmax(axis=1).item())

return f"The most relevant page is {best_page}", images[best_page]

COLORS = ["#4285f4", "#db4437", "#f4b400", "#0f9d58", "#e48ef1"]

mock_image = Image.new("RGB", (448, 448), (255, 255, 255))

with gr.Blocks() as demo:

gr.Markdown(

"# ColPali: Efficient Document Retrieval with Vision Language Models 📚🔍"

)

gr.Markdown("## 1️⃣ Upload PDFs")

file = gr.File(file_types=["pdf"], file_count="multiple")

gr.Markdown("## 2️⃣ Index the PDFs and upload")

convert_button = gr.Button("🔄 Convert and upload")

message = gr.Textbox("Files not yet uploaded")

embeds = gr.State(value=[])

imgs = gr.State(value=[])

# Define the actions for conversion

convert_button.click(index, inputs=[file, embeds], outputs=[message, embeds, imgs])

gr.Markdown("## 3️⃣ Search")

query = gr.Textbox(placeholder="Enter your query to match", lines=150)

search_button = gr.Button("🔍 Search")

gr.Markdown("## 4️⃣ ColPali Retrieval")

message2 = gr.Textbox("Most relevant image is...")

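output_img = gr.Image()

output_text = gr.Textbox(label="Gemini Response")

# Gemini model used for answering; `model` above now refers to ColPali, so a separate name is needed

gemini_flash = genai.GenerativeModel(model_name="gemini-1.5-flash", generation_config=generation_config)

def get_answer(prompt: str, image: Image.Image):

response = gemini_flash.generate_content([prompt, image])

return response.text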

# Function to combine retrieval and LLM call

def search_with_llm(

query,

ds,

images,

prompt="What is shown in this image, analyse and provide some interpretation? Format the answer in a neat 500 words summary.",

):

# Step 1: Search the best image based on query

search_message, best_image = search(query, ds, images)

# Step 2: Generate an answer using LLM

answer = get_answer(prompt, best_image)

return search_message, best_image, answer

# Action for search button

search_button.click(

search_with_llm,

inputs=[query, embeds, imgs],

outputs=[message2, output_img, output_text],

)

if __name__ == "__main__":

demo.queue(max_size=10).launch(debug=True, share=True)

Gradio Demo – Multimodal RAG with ColPali and Gemini

Additional Testing

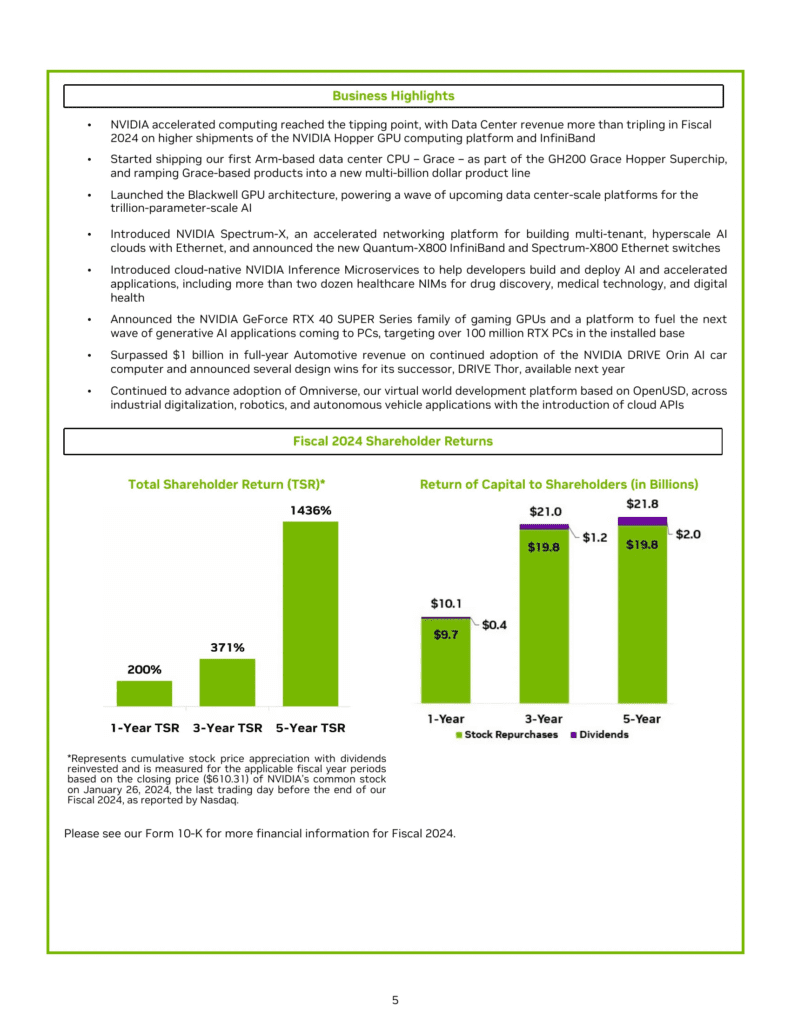

Type 1: Chart Interpretation

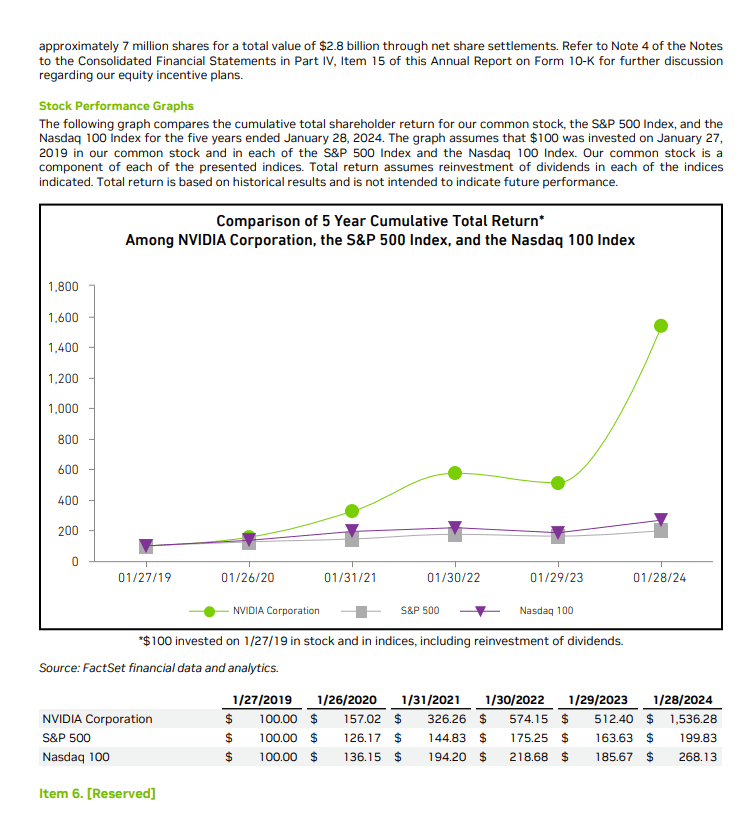

Search Query: Nvidia Fiscal Shareholder Returns

ColPali Retrieved Image

Gemini Response

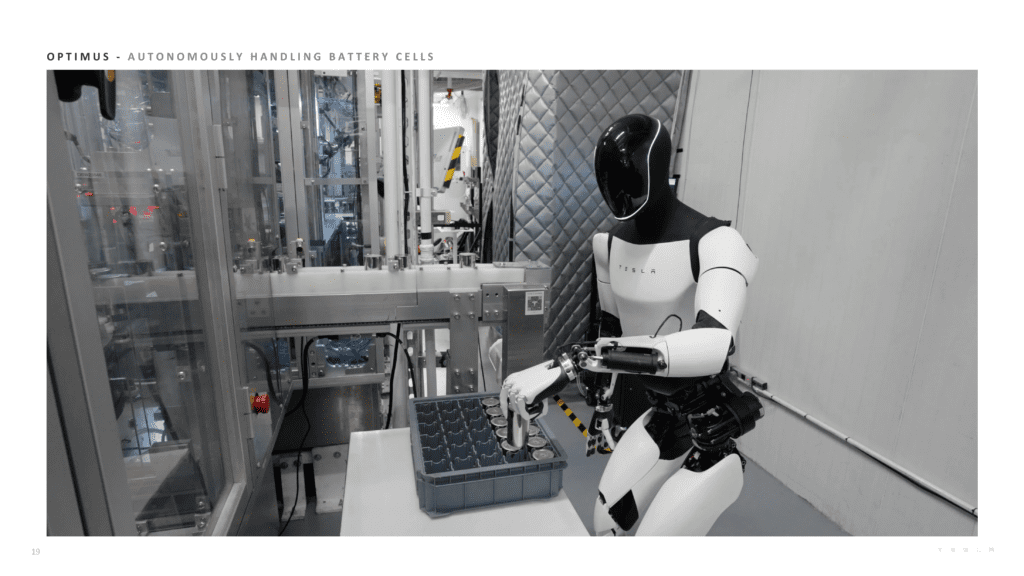

Type 2: Image Interpretation

Search Query: Tesla Optimus

ColPali Retrieved Image

Gemini Response

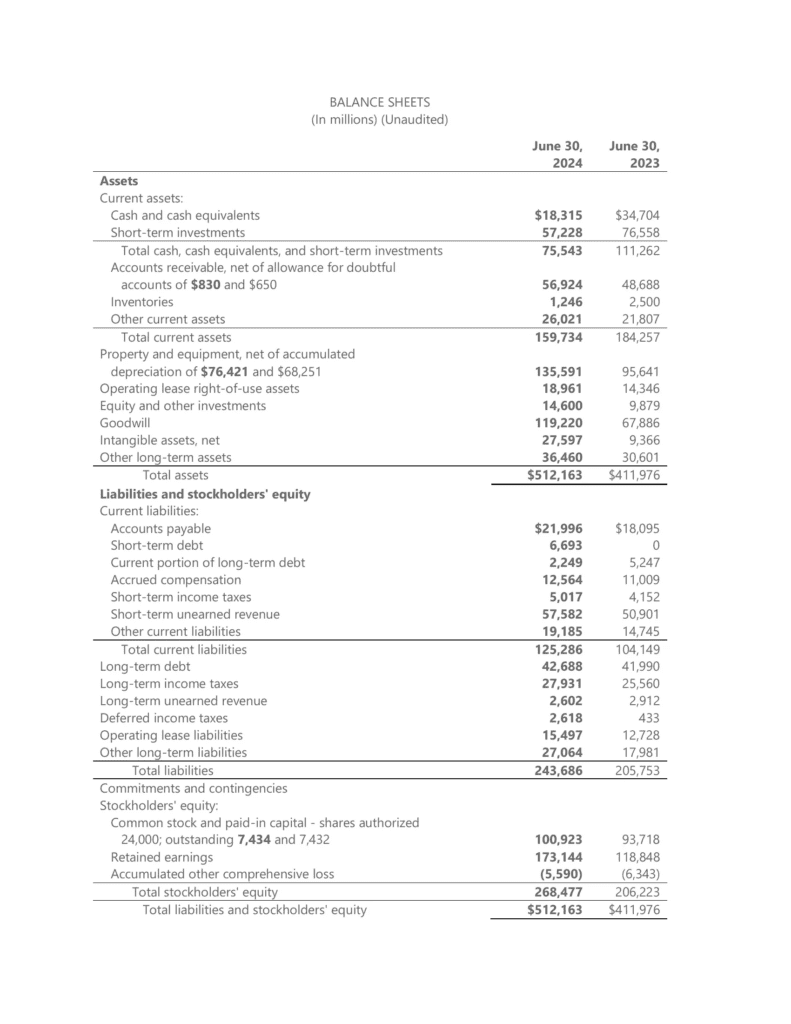

Type 3: Table Interpretation

Search Query: Describe Microsoft Balance Sheet

ColPali Retrieved Image

Gemini Response

Type 4: Graph Interpretation

Search Query: Nvidia’s Comparison of 5 Year Cumulative Total Return

ColPali Retrieved Image

Gemini Response

ColPali Training Details From Paper

The training dataset comprises 127,460 query-page pairs, part of which are pseudo-questions generated by Claude 3.5 Sonnet. The training configuration includes a paged_adamw_8bit optimizer, a learning rate of 5e-5 with linear decay and 2.5% warm-up steps, and a batch size of 32. The LoRA configuration of the language model uses α = 32 and r = 32. Additionally, query augmentation is applied: 5 <unused0> tokens are appended to the query tokens, serving as placeholders to be learnt during training. This helps the model focus on the most relevant terms by prioritizing which parts of the query are important.
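As a rough illustration of the learning-rate schedule described here, the sketch below ramps linearly to the peak rate over the first 2.5% of steps and then decays linearly to zero. The function name, the decay-to-zero endpoint, and the total step count are assumptions made for the example; the paper’s exact scheduler implementation may differ.

```python
def linear_warmup_decay_lr(step: int, total_steps: int,
                           peak_lr: float = 5e-5, warmup_ratio: float = 0.025) -> float:
    """Learning rate at `step`: linear ramp to peak_lr, then linear decay to zero."""
    warmup_steps = max(1, round(total_steps * warmup_ratio))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


total = 4000  # hypothetical number of optimizer steps
for step in (0, 50, 100, 2050, 4000):
    print(step, linear_warmup_decay_lr(step, total))
```

With these assumed numbers the warm-up lasts 100 steps, the peak of 5e-5 is reached at step 100, and the rate is back to half the peak midway through the decay.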

ColPali encodes each page directly as an image, consuming about 256 KB of memory per page. The paper also presents other models such as ColIdefics2, which pairs Mistral-7B as the decoder with SigLIP as the vision encoder.
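The per-page figure can be sanity-checked with simple arithmetic. The sketch assumes 1030 vectors per page with 128 dimensions each (the numbers quoted in this article) stored as 16-bit values; the helper name and the 1,000-page corpus are illustrative.

```python
def page_embedding_bytes(n_vectors: int = 1030, dim: int = 128, bytes_per_value: int = 2) -> int:
    """Storage for one page's multi-vector embedding (2 bytes per value for bfloat16)."""
    return n_vectors * dim * bytes_per_value


per_page = page_embedding_bytes()
print(f"{per_page} bytes = {per_page / 1024:.1f} KiB per page")
print(f"{per_page * 1000 / 1024 ** 2:.1f} MiB for a 1,000-page corpus")
```

Under these assumptions a page needs 263,680 bytes, or about 257.5 KiB, which is in line with the roughly 256 KB quoted above.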

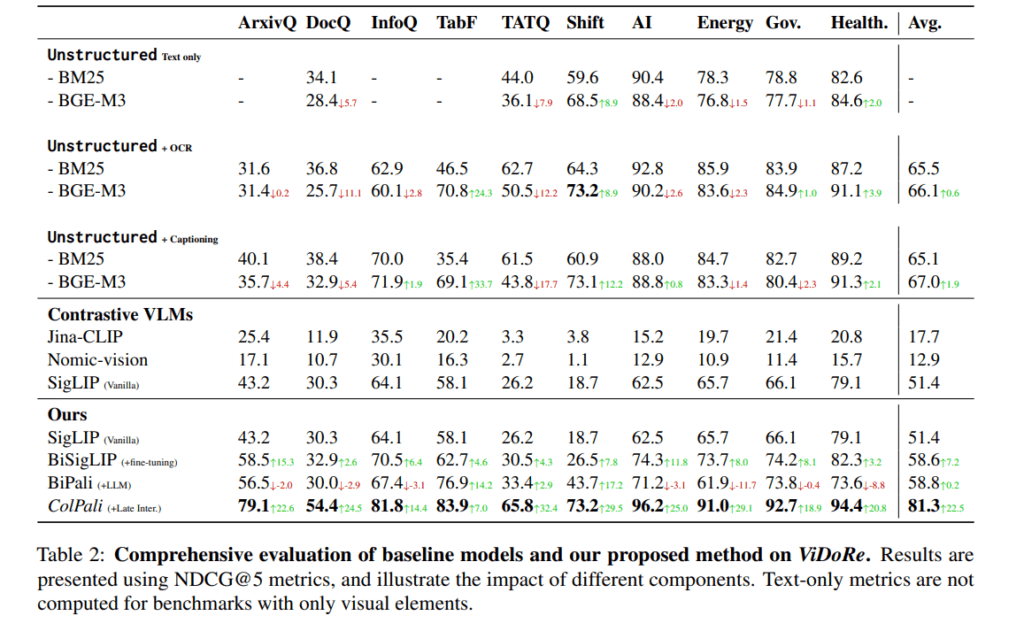

Visual Document Retrieval Benchmark – ViDoRe

ViDoRe is a benchmark that evaluates document retrieval methods by considering both textual and visual document features. It is composed of various page-level retrieval tasks across modalities: text, figures, infographics, tables, etc. To create this benchmark, the authors collected publicly available PDF documents and generated queries using Claude 3.5 Sonnet, producing a set of page-question-answer triplets. An important evaluation metric in this benchmark is NDCG@5 (Normalized Discounted Cumulative Gain at rank 5), which measures the relevance of the top 5 retrieved results; a higher score means more relevant retrieval.

Apart from this, ColPali also performs well on non-English documents, which is attributed to the multilingual data present in the pre-training corpus of Gemma 2B.
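To show what NDCG@5 rewards, here is a small self-contained sketch. It uses the linear-gain form of DCG, which coincides with the exponential-gain form for the binary relevance labels used in the example; the function names are illustrative and this is not the benchmark’s own evaluation code.

```python
import math
from typing import List


def dcg_at_k(relevances: List[float], k: int) -> float:
    """Discounted cumulative gain of the first k results (rank 1 has no discount)."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances[:k], start=1))


def ndcg_at_k(relevances: List[float], k: int = 5) -> float:
    """DCG normalised by the DCG of the ideal (descending-relevance) ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0


# One relevant page per query, as in page-level retrieval: 1 marks the gold page.
print(ndcg_at_k([1, 0, 0, 0, 0]))     # gold page ranked first
print(ndcg_at_k([0, 0, 1, 0, 0]))     # gold page ranked third
print(ndcg_at_k([0, 0, 0, 0, 0, 1]))  # gold page outside the top 5
```

The score is 1.0 when the gold page is ranked first, drops to 0.5 when it is ranked third, and is 0.0 when it falls outside the top 5.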

Limitations of ColPali

- ColPali is evaluated mainly on English and French documents.

- The paper discusses throughput and latency mainly for PDF-type documents, while non-PDF documents remain largely unexplored.

- As part of the training query pairs are synthetically generated by Claude 3.5 Sonnet, there might be some bias in the generated queries.

- Only a limited number of vector DBs natively support ColBERT embeddings, which might be an overhead if you’re planning to add ColPali to your existing RAG application. However, community discussions around vector DB providers like Weaviate suggest that there are workarounds for retrieving ColBERT-style embeddings.

- ColPali saves and retrieves the entire page instead of specific chunks. This demands additional tokens and API costs to process the entire page.

- The most relevant information for the search query might be distributed in small chunks across several pages, or sit on any random page within the document. Therefore, retrieving just the relevant chunks can sometimes be a better solution.

A vision retriever pipeline shouldn’t be dependent on being able to parse the text from the documents as it defeats the purpose. Another way to reduce search space would be to flatten and pad the query vectors (25×128) and perform vector search on doc vectors (1030×128). This method a hack to capture the pages that might contain patches similar to the query. After all, both use the same projection layers to map inputs to 128-dim. In my experiments, this method worked quite well, reducing the search space without having to capture doc texts.

– Source: Ayush Chaurasia, LanceDB

Trivia

Visualize Heatmaps of our Query from ColPali

Search Query: Alphabet Balance Sheet

from colpali_engine.interpretability.vit_configs import VIT_CONFIG

from colpali_engine.interpretability.plot_utils import plot_attention_heatmap

from colpali_engine.interpretability.gen_interpretability_plots import (

generate_interpretability_plots,

)

from colpali_engine.interpretability.processor import ColPaliProcessor

from dataclasses import asdict

import matplotlib.pyplot as plt

from einops import rearrange

from colpali_engine.interpretability.torch_utils import (

normalize_attention_map_per_query_token,

)

retrieved_image = images[retrieved_idx]

colpali_processor = ColPaliProcessor(paligemma_processor)

# Resize the image to square

input_image_square = retrieved_image.resize(

(vit_config.resolution, vit_config.resolution)

)

# scale_image(image, 256)

plt.imshow(retrieved_image)

plt.axis("off")

print("Image size: ", retrieved_image.size)

input_text_processed = colpali_processor.process_text(search_query).to(device)

input_image_processed = colpali_processor.process_image(

retrieved_image, add_special_prompt=True

).to(device)

with torch.no_grad():

output_text = retrieval_model.forward(**asdict(input_text_processed)) # type: ignore

with torch.no_grad():

output_image = retrieval_model.forward(**asdict(input_image_processed)) # type: ignore

# Remove the memory tokens

output_image = output_image[

:, : colpali_processor.processor.image_seq_length, :

] # (1, n_patch_x * n_patch_y, hidden_dim)

output_image = rearrange(

output_image,

"b (h w) c -> b h w c",

h=vit_config.n_patch_per_dim,

w=vit_config.n_patch_per_dim,

)

attention_map = torch.einsum("bnk,bijk->bnij", output_text, output_image)

# Normalize the attention map (all values should be between 0 and 1)

attention_map_normalized = normalize_attention_map_per_query_token(attention_map)

# attention_map_normalized.shape

# Use this cell output to choose a token

idx = {

idx: val

for idx, val in enumerate(colpali_processor.tokenizer.tokenize(search_query))

}

print(" Tokens Idx: ", idx)

token_idx = len(idx) - 1

print(

f"Attention Token Chosen: {colpali_processor.batch_decode(input_text_processed.input_ids[:, token_idx])}"

)

attention_map_image = Image.fromarray(

(attention_map_normalized[0, token_idx, :, :].cpu().numpy() * 255).astype("uint8")

).resize(input_image_square.size, Image.Resampling.BICUBIC)

fig, ax = plot_attention_heatmap(

input_image_square,

patch_size=vit_config.patch_size,

image_resolution=vit_config.resolution,

attention_map=attention_map_normalized[0, token_idx, :, :],

)

os.makedirs("activations", exist_ok=True)

fig.savefig("activations/heatmap")

Search Query Alphabet Balance Sheet

Key Takeaways

- Instead of commercial models like Gemini or Claude, you can also host a smaller VLM like Phi-Vision or Qwen-2B in Colab to query over a set of private documents or sensitive data.

- Recent improvements and wrappers around ColPali, such as Byaldi, a RAGatouille-style library from AnswerDotAI, are promising and bring it closer to production-ready serving with an easy-to-use retrieval pipeline.

Conclusion

The results from ColPali are impressive in handling multimodal data. We tested it hands-on with one of the most demanding applications in the GenAI space: chatting with documents from domains like finance, law, and regulation.

ColPali is a big leap in the multimodal generative AI space and improves document retrieval quality by a wide margin. Kudos to the team at Illuin Technology for releasing it as an open-source project under the MIT license.

References

- ColPali: Efficient Document Retrieval with Vision Language Model

- ColPali Github

- ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT

- PyVespa

- Asif Qamar ColPali Session on Support Vectors

- Prompt Engineering – Youtube

- GoPenAI
