As vehicular technology evolves at a rapid pace, the push towards safer, smarter, and more efficient driving has been at the forefront of automotive innovation. Advanced Driver Assistance Systems (ADAS) are central to this shift: a set of technologies and features integrated into modern vehicles to enhance driver safety, improve the driving experience, and assist with various driving tasks. ADAS uses cameras, radar, and other sensors to monitor the vehicle’s surroundings, collect data, and provide real-time feedback to the driver.

The roots of ADAS can be traced back to the early stages of automotive safety enhancements, such as the introduction of Anti-lock Braking Systems (ABS) in the late 1970s. However, the true emergence of ADAS as we understand it today began in the 2000s, with the integration of radar, cameras, and ultrasonic sensors.

Need for ADAS

Several factors make the case for ADAS today:

Road Safety Statistics

- According to the World Health Organization (WHO), approximately 1.35 million people die in road traffic accidents yearly, making it the eighth leading cause of death globally.

- Road traffic crashes cost most countries approximately 3% of their GDP. This figure accounts not only for immediate medical costs but also for long-term impacts on productivity and rehabilitation costs.

ADAS can play a significant role in reducing these numbers. By integrating features like automatic emergency braking, lane departure warnings, and adaptive cruise control, the likelihood of collisions can be decreased.

Changing Urban Landscape

- With more people migrating to cities, traffic density in urban areas is set to increase sharply. This surge will be accompanied by new infrastructural developments and changing traffic patterns, creating more complex driving conditions.

- In many cities, road infrastructure hasn’t kept pace with the rate of urbanization. Narrow roads, inadequate signage, and unpredictable traffic patterns pose challenges to even the most experienced drivers.

- To combat these challenges, ADAS tools like traffic sign recognition, pedestrian detection, and parking assistance can prove invaluable.

Environmental Considerations

- Road vehicles are responsible for significant global CO2 emissions, which play a major role in climate change. Inefficient driving patterns, like rapid acceleration and deceleration, can exacerbate these emissions.

- In urban areas, frequent stop-and-go traffic leads to excessive fuel consumption and increased emissions. This not only has environmental repercussions but also has direct health implications due to the degradation of air quality.

- Systems like adaptive cruise control can modulate speed efficiently, leading to smoother driving patterns and reduced fuel consumption.

Different levels of ADAS

Driving automation is commonly described in six levels, from 0 to 5 (the scheme defined in SAE J3016), according to how much of the driving task the vehicle takes over:

Level 0 [Manual]:

At this level, vehicles have no automation capabilities. The responsibility for control and decision-making rests entirely with the human driver.

Level 1 [Driver Assistance]:

This level offers limited assistance, either with steering or with acceleration / braking. Examples include adaptive cruise control and lane-keep assistance, but the driver remains largely in control. Lane detection is a crucial aspect of driver assistance.

Level 2 [Partial Automation]:

Vehicles at this level can manage both steering and acceleration/braking in certain conditions. However, the driver must always be alert and ready to take over, making sure to supervise the vehicle’s actions closely.

Level 3 [Conditional Automation]:

Here, the vehicle can make dynamic decisions using AI components. It can handle some driving tasks but will prompt the driver to intervene when it encounters a scenario it can’t navigate.

Level 4 [High Automation]:

These vehicles can operate autonomously in both urban and dedicated highway settings, but only within the conditions they were designed for. Outside those conditions, human intervention might still be necessary.

Level 5 [Full Automation]:

At the ultimate level of automation, vehicles don’t even need steering wheels or pedals. They’re designed to handle all driving tasks in every condition without any human input other than the final destination, which can also be given by voice.
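The division of responsibility across these levels can be captured in a small lookup. The following is a minimal Python sketch; the class and function names are illustrative assumptions rather than part of any standard API, and the rules encode only what the level descriptions above state.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """The six automation levels described above."""
    MANUAL = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: AutomationLevel) -> bool:
    """Levels 0 to 2: the human drives or supervises continuously."""
    return level <= AutomationLevel.PARTIAL_AUTOMATION


def driver_may_be_prompted(level: AutomationLevel) -> bool:
    """Level 3: the vehicle drives but can ask the driver to take over."""
    return level == AutomationLevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in AutomationLevel:
        print(level.value, level.name, driver_must_supervise(level))
```

Using an `IntEnum` keeps the levels ordered, so a rule such as "Level 2 and below" becomes a plain comparison.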

What are the core components of ADAS?

Long-Range Radar – Continental ARS640

Highlights

- Type: Long-Range

- Dimensions: 137 x 90 x 40 mm

- Weight: ~500 grams

- Measurement Parameters: Range, Doppler, Azimuth, and Elevation

- Operating Temperature Range: -40° to +85° Celsius

- Supply Voltage: 12 volts (power dissipation: ~23 watts)

The ARS640 Long-Range Radar is a cutting-edge automotive radar system designed to support highly automated driving scenarios, ranging from Level 3 to Level 5 autonomous systems. It boasts a compact form factor with dimensions of 137 x 90 x 40 mm (excluding the connector) and a weight of approximately 500 grams. With an impressive range of 300 meters, it enables precise and direct measurement of four critical dimensions: range, doppler, azimuth, and elevation.

This radar system offers outstanding angular accuracy, with deviations of just ±0.1° in both azimuth and elevation, ensuring precise object detection and tracking. Its update cycle of 60 milliseconds enhances real-time awareness, while its operating temperature range of -40° to +85° Celsius ensures robust performance in diverse environmental conditions. It dissipates around 23 watts at a nominal supply voltage of 12 volts.
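To see what the four measured dimensions give a downstream system, the sketch below converts one detection into Cartesian coordinates and estimates how a ±0.1° angular error translates into position error at range. It is an illustration only: the `RadarDetection` type, the axis convention (x forward, y left, z up), and the Doppler sign convention are assumptions, not the ARS640 output format.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One hypothetical detection: range, Doppler, azimuth, elevation."""
    range_m: float
    radial_speed_mps: float  # Doppler; negative = approaching (assumed)
    azimuth_deg: float       # positive to the left of boresight (assumed)
    elevation_deg: float     # positive above the horizontal plane (assumed)


def to_cartesian(det: RadarDetection) -> tuple[float, float, float]:
    """Convert spherical radar measurements to x (forward), y (left), z (up)."""
    az = math.radians(det.azimuth_deg)
    el = math.radians(det.elevation_deg)
    horizontal_m = det.range_m * math.cos(el)
    return (
        horizontal_m * math.cos(az),
        horizontal_m * math.sin(az),
        det.range_m * math.sin(el),
    )


def cross_range_error_m(range_m: float, angular_accuracy_deg: float = 0.1) -> float:
    """Sideways position error caused by a given angular error at a given range."""
    return range_m * math.tan(math.radians(angular_accuracy_deg))


if __name__ == "__main__":
    print(to_cartesian(RadarDetection(150.0, -8.0, 2.0, 0.5)))
    print(cross_range_error_m(300.0))
```

Under these assumptions, a 0.1° error at the full 300 m range corresponds to roughly half a metre sideways, which is small relative to a typical lane width.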

There are multiple applications for long-range radar in ADAS. Let’s explore a few:

Adaptive Cruise Control (ACC)

ACC is an advanced driver assistance system that automatically adjusts a vehicle’s speed to maintain a safe following distance from the vehicle ahead. Using long-range radar and camera-based sensors, ACC continuously monitors the traffic environment and responds to changes in the flow. When a slower-moving vehicle is detected in the same lane, it decelerates the car to match. Once the road ahead is clear, the system accelerates back to the preset speed, balancing maintained speed against safety.
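The behaviour described above can be sketched as a simple controller that takes the more cautious of two requests: "reach the preset speed" and "keep a safe gap". This is a minimal illustration assuming a constant-time-gap policy; the function name, gains, and limits are hypothetical values chosen for readability, not parameters of any production ACC.

```python
from typing import Optional


def acc_acceleration(
    ego_speed_mps: float,
    set_speed_mps: float,
    gap_m: Optional[float] = None,         # None = no lead vehicle detected
    lead_speed_mps: Optional[float] = None,
    time_gap_s: float = 1.8,               # assumed following time gap
    standstill_m: float = 5.0,             # assumed gap when stopped
    k_gap: float = 0.2,                    # hypothetical gains
    k_speed: float = 0.6,
    k_cruise: float = 0.4,
    a_min: float = -3.0,                   # assumed comfort limits, m/s^2
    a_max: float = 1.5,
) -> float:
    """Return a commanded acceleration in m/s^2."""
    # Request that pulls the car toward the driver's preset speed.
    cruise = k_cruise * (set_speed_mps - ego_speed_mps)
    if gap_m is None or lead_speed_mps is None:
        accel = cruise
    else:
        # Desired gap grows with speed (constant time gap policy).
        desired_gap_m = standstill_m + time_gap_s * ego_speed_mps
        follow = (k_gap * (gap_m - desired_gap_m)
                  + k_speed * (lead_speed_mps - ego_speed_mps))
        accel = min(cruise, follow)  # the more cautious request wins
    return max(a_min, min(a_max, accel))


if __name__ == "__main__":
    print(acc_acceleration(25.0, 30.0))              # clear road
    print(acc_acceleration(30.0, 30.0, 30.0, 25.0))  # slower car close ahead
```

Taking the minimum of the two requests means the car never accelerates past the preset speed to close a gap, and never ignores a slow lead vehicle in order to hold the preset speed.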

Short / Medium Range Radar – Continental SRR520

Highlights

- Type: Short-Range

- Dimensions: 83 x 68 x 22 mm

- Weight: ~135 grams

- Additional Features: Object list management, blind spot warning, lane change assist (Type IIIc), rear-cross traffic alert with braking, front-cross traffic alert with braking, etc

- Operating Temperature Range: -40°C to +85°C

- Supply Voltage: 12 volts (power dissipation: 4.2 watts)

The SRR520 short range radar is a compact and highly capable radar system designed for automotive applications. With dimensions of 83 x 68 x 22 mm and a mass of approximately 135 grams (without connectors), it is a lightweight and space-efficient solution. Operating in the 76-77 GHz frequency range, it offers an impressive range of 100 meters at a 0° angle, along with a wide field of view, featuring ± 90° detection and ± 75° measurement.

This radar system has an update cycle of 50 milliseconds and a speed measurement accuracy of ± 0.07 kilometers per hour. It operates reliably in extreme temperatures ranging from -40°C to +85°C while dissipating 4.2 watts of power at a 12V supply voltage. Its advanced features include object list management, blind spot warning, lane change assist (Type IIIc), rear cross traffic alert with braking, front cross traffic alert with braking, rear pre-crash sensing, occupant safe exit support, and avoidance of lateral collisions.

There are multiple applications for short-medium range radar in ADAS. Let’s explore a few:

Cross Traffic Alert (CTA)

The Cross Traffic Alert (CTA) system becomes active when the driver shifts the vehicle into reverse. It employs short-to-medium range radar sensors, typically mounted on the rear corners of the vehicle, to scan for approaching vehicles from either side. If an approaching vehicle is detected, the system alerts the driver through visual, audible, or haptic warnings. These visual alerts often manifest in the side mirrors or on the vehicle’s infotainment screen. Based on this alert, the driver can stop, continue reversing cautiously, or wait for the cross traffic to clear.
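A rough sketch of this alert logic is shown below. It assumes the radar already reports how far a crossing vehicle is from the car's reversing path and how fast it is closing; the function names and the two time thresholds are hypothetical, and haptic feedback is left out for brevity.

```python
import math


def time_to_arrival_s(lateral_distance_m: float, closing_speed_mps: float) -> float:
    """Time until a crossing vehicle reaches the path behind the car."""
    if closing_speed_mps <= 0.0:
        return math.inf  # stationary or moving away
    return lateral_distance_m / closing_speed_mps


def cta_warnings(
    in_reverse: bool,
    lateral_distance_m: float,
    closing_speed_mps: float,
    visual_s: float = 4.0,   # assumed threshold for the visual alert
    audible_s: float = 2.5,  # assumed threshold for the audible alert
) -> list[str]:
    """Return the warnings to raise, escalating as the target gets closer."""
    if not in_reverse:
        return []  # the system is only active in reverse gear
    tta = time_to_arrival_s(lateral_distance_m, closing_speed_mps)
    warnings = []
    if tta <= visual_s:
        warnings.append("visual")
    if tta <= audible_s:
        warnings.append("audible")
    return warnings


if __name__ == "__main__":
    print(cta_warnings(True, 30.0, 10.0))
    print(cta_warnings(True, 20.0, 10.0))
```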

Rear Collision Warning (RCW)

It uses a fusion of multiple rear cameras with radar technology to inform the driver if another vehicle is approaching from either direction, when the vehicle is moving in reverse or backing out of a parking space.

LiDAR: Light Detection and Ranging – InnovizTwo

Highlights

- Type: 3D Point-cloud Estimation

- Angular Resolution: 0.05°x0.05°

- Range of Frame Rate: 10, 15, 20 FPS

- Detection Range: 0.3 meters to 300 meters

- Maximum Field of View: 120°x43°

The InnovizTwo LiDAR system boasts impressive technical specifications, including a maximum angular resolution of 0.05°x0.05° and a configurable frame rate of 10, 15, or 20 FPS, making it a versatile and high-performance sensor for autonomous vehicles. With a detection range spanning from 0.3 meters to an impressive 300 meters, it excels in capturing objects at varying distances. Its expansive 120°x43° maximum field of view ensures comprehensive coverage of the vehicle’s surroundings.
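These figures can be turned into two quick estimates: how many samples a frame could contain, and how far apart neighbouring samples land at a given distance. The sketch below assumes the finest resolution applies across the entire maximum field of view, which the specifications do not state, so the first number should be read as an upper bound. The function names are illustrative.

```python
import math


def points_per_frame(h_fov_deg: float, v_fov_deg: float,
                     h_res_deg: float, v_res_deg: float) -> int:
    """Upper bound on samples per frame for a uniform angular grid."""
    columns = round(h_fov_deg / h_res_deg)
    rows = round(v_fov_deg / v_res_deg)
    return columns * rows


def point_spacing_m(distance_m: float, resolution_deg: float) -> float:
    """Distance between neighbouring samples on a surface at a given range."""
    return distance_m * math.tan(math.radians(resolution_deg))


if __name__ == "__main__":
    per_frame = points_per_frame(120.0, 43.0, 0.05, 0.05)
    print(per_frame)                       # 2400 columns x 860 rows
    print(per_frame * 20)                  # samples per second at 20 FPS
    print(point_spacing_m(300.0, 0.05))    # spacing at the maximum range
```

Under that assumption a frame holds about 2.06 million samples, and neighbouring samples are roughly 0.26 m apart at 300 meters, which indicates how many returns a distant object is likely to produce.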

InnovizTwo’s standout features include its ability to dynamically focus on four individually-controlled regions of interest within a limited FOV, enhancing visibility without compromising bandwidth, resolution, or frame rate. The system also excels at handling complex scenarios, recording multiple reflections per pixel and effectively processing laser pulses that encounter rain droplets, snowflakes, or multiple objects in their path.

Its scanning pattern is characterized by contiguous pixels, which eliminates data gaps, a property that is crucial for building a safe autonomous vehicle perception system. This design ensures the system’s capability to detect even small collision-relevant objects and pedestrians on the road surface. A vertical FOV offers high resolution evenly distributed across all regions, in contrast to other sensors that may prioritize the center and lose data towards the edges. This design provides more comprehensive data and accommodates mounting tolerances and varying driving conditions.

There are multiple applications for LiDAR in ADAS. Let’s explore a few:

Emergency Braking (EB)

It utilizes advanced sensor technologies, including radar, LiDAR, and high-resolution cameras, to continuously scan the vehicle’s forward environment. It employs sophisticated algorithms to process this data in real time, determining potential collision risks based on the relative speed and trajectory of detected objects. In situations where a potential frontal collision is imminent, and the driver fails to respond, the system automatically activates the braking mechanism, optimizing brake pressure to mitigate impact severity or, if possible, prevent the collision altogether.
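One simple way to express "collision risk based on relative speed" is the constant deceleration needed to stop closing before the gap runs out, a = v² / (2d). The sketch below uses that as its decision metric. It is a simplification that ignores reaction and actuation delays, and the function names and both thresholds are hypothetical rather than taken from any real system.

```python
import math


def required_deceleration_mps2(gap_m: float, closing_speed_mps: float) -> float:
    """Constant deceleration needed to cancel the closing speed within the gap."""
    if closing_speed_mps <= 0.0:
        return 0.0  # not closing on the object
    if gap_m <= 0.0:
        return math.inf
    return closing_speed_mps ** 2 / (2.0 * gap_m)


def aeb_action(
    gap_m: float,
    closing_speed_mps: float,
    warn_mps2: float = 3.0,   # assumed: warn the driver above this demand
    brake_mps2: float = 6.0,  # assumed: brake automatically above this demand
) -> str:
    """Return 'none', 'warn', or 'brake' for the current situation."""
    demand = required_deceleration_mps2(gap_m, closing_speed_mps)
    if demand >= brake_mps2:
        return "brake"
    if demand >= warn_mps2:
        return "warn"
    return "none"


if __name__ == "__main__":
    for gap in (20.0, 10.0, 5.0):
        print(gap, aeb_action(gap, 10.0))
```

Warning first and braking only when the demanded deceleration becomes severe mirrors the sequence in the text, where the system intervenes if the driver fails to respond.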

Pedestrian Detection (PD)

PD uses LiDAR, which involves emitting laser beams and analyzing the reflected signals to identify and locate pedestrians in real-time. By capturing the 3D spatial information of a scene, LiDAR systems provide high-resolution data, enabling precise distance measurements and accurate differentiation of pedestrians from other objects in various lighting conditions.
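The distance measurement itself follows from timing the reflected light. Assuming pulsed time-of-flight ranging, the distance is half the round-trip time multiplied by the speed of light. The helper names below are illustrative, and the sketch says nothing about how a particular sensor implements the timing.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting object; the pulse travels there and back."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Time a pulse needs to reach an object and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_MPS


if __name__ == "__main__":
    print(round_trip_time_s(300.0))  # about two microseconds
    print(tof_distance_m(2e-6))
```

A target at 300 meters returns its echo after about two microseconds, which is why the timing electronics need to resolve very short intervals to deliver precise distances.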

Collision Avoidance (CA)

LiDAR technology plays a pivotal role in Collision Avoidance systems, offering high-resolution, 3D mapping of surroundings in real-time. By emitting laser beams and analyzing the reflected signals, LiDAR provides accurate distance measurements, enabling vehicles to detect obstacles and respond swiftly, even in congested traffic scenarios. This advanced sensing enhances the vehicle’s safety and decision-making capabilities in complex environments.

Cameras – Continental MFC500

Highlights

- Type: Mono-Vision

- Dimensions: 88 x 70 x 38 mm

- Weight: ~200 grams

- Additional Features: Emergency braking, traffic sign assist, traffic jam assist, adaptive cruise control, continuous lane centering, etc.

- Operating Temperature Range: -40°C to +85°C

- Power Consumption: ~7 watts

The MFC500 Mono camera is a compact and lightweight automotive vision system. Measuring 88 x 70 x 38 mm and weighing less than 200 g, it offers an unobtrusive form factor for seamless vehicle integration. The camera has an effective field of view with horizontal coverage of up to 125° and vertical coverage of up to 60°, enabling comprehensive vision and perception capabilities for various driving scenarios. Operating within an extreme temperature range from -40° to +95° Celsius, it ensures reliable performance in harsh environmental conditions.

With a low power dissipation of less than 7 watts and a 12V supply voltage, the MFC500 is energy-efficient and compatible with automotive power systems. Its feature set aligns with key safety and regulatory standards, including support for General Safety Regulation (GSR 2.0) and EU-NCAP requirements. The camera offers a range of advanced driver assistance features, including emergency braking for vehicles, pedestrians, and cyclists, emergency lane keeping, speed limit information with automatic limitation, traffic sign assist, event data recording, adaptive cruise control with stop&go functionality and continuous lane centering assist. It also supports standard headlight systems up to matrix beams, enhancing nighttime visibility and safety. The MFC500 Mono camera is a powerful and versatile component for modern automotive safety and automation systems.

There are multiple applications for pure vision in ADAS. Let’s explore a few:

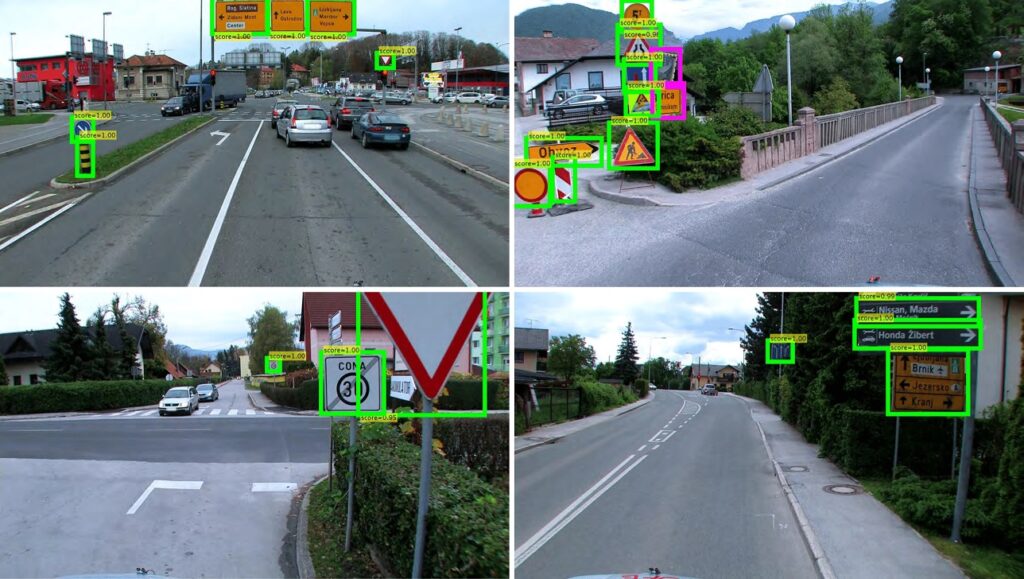

Traffic Sign Recognition (TSR)

Utilizing high-resolution cameras, these systems employ advanced image processing and deep learning algorithms to identify and interpret traffic signs and signals. Pure vision-based TSR provides real-time feedback by analyzing visual data, ensuring drivers or autonomous systems adhere to road regulations. The effectiveness of such systems is enhanced by adaptive lighting conditions and the capability to distinguish between diverse sign types, colors, and shapes.

Lane Departure Warning (LDW)

It provides dynamic alerts to the driver if the vehicle is drifting out of a specific lane on the road. LDW continuously monitors road lane markings to detect unintentional lane shifts. This technology, reliant on high-resolution cameras, processes the road’s visual data in real-time to ensure the vehicle remains within its designated path, enhancing road safety and assisting drivers in maintaining proper lane discipline.
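Once the camera pipeline has estimated where the car sits in its lane, the warning decision can be sketched with a time-to-line-crossing check. Everything here is an assumption for illustration: the function name, the default widths, the one-second threshold, and the use of the turn indicator as the signal that a lane change is intentional.

```python
def lane_departure_warning(
    lateral_offset_m: float,       # car centre relative to lane centre, + = left
    lateral_speed_mps: float,      # sideways drift speed, + = toward the left
    indicator_on: bool = False,
    lane_width_m: float = 3.5,     # assumed lane width
    vehicle_width_m: float = 1.8,  # assumed vehicle width
    tlc_threshold_s: float = 1.0,  # assumed warning threshold
) -> bool:
    """Return True if the driver should be warned about an unintended drift."""
    if indicator_on:
        return False  # treat a signalled lane change as intentional
    # Free space between the side of the car and the lane marking when centred.
    margin_m = (lane_width_m - vehicle_width_m) / 2.0
    if lateral_speed_mps > 0.0:
        distance_m = margin_m - lateral_offset_m  # drifting toward the left line
    elif lateral_speed_mps < 0.0:
        distance_m = margin_m + lateral_offset_m  # drifting toward the right line
    else:
        return abs(lateral_offset_m) >= margin_m  # not drifting: warn if on a line
    if distance_m <= 0.0:
        return True  # already touching or over the marking
    return distance_m / abs(lateral_speed_mps) < tlc_threshold_s


if __name__ == "__main__":
    print(lane_departure_warning(0.5, 0.5))
    print(lane_departure_warning(0.5, 0.5, indicator_on=True))
```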

Surround View (SV)

SV is a critical component of advanced driver assistance systems, enhancing driver safety and awareness by offering a comprehensive 180 to 360-degree view of the vehicle’s surroundings. This technology relies on the fusion of data from ultrawide-angle cameras strategically positioned on the vehicle to eliminate blind spots and provide a clear, real-time visual representation of the environment.

ADAS in Action: How Does ADAS work?

The first automobile manufacturer that comes to mind when someone says ‘Self-Driving Car’ is Tesla. Its ‘Autopilot’ feature relies heavily on artificial intelligence and deep learning techniques to process and interpret the data from sensors. Some of the software components and techniques involved include:

Neural Networks

Tesla uses deep neural networks for image and data analysis. Convolutional Neural Networks (CNNs) are used to recognize and track objects, such as other vehicles, pedestrians, and lane markings.

Sensor Fusion

The system combines data from cameras, ultrasonic sensors, and radar to create a more comprehensive and accurate understanding of the vehicle’s surroundings. This sensor fusion is crucial for safe autonomous driving.
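As a generic illustration of why combining sensors helps, the sketch below fuses independent distance estimates by inverse-variance weighting, so that the more precise sensor counts for more. This is a textbook technique shown under simplifying assumptions (independent, unbiased measurements with known variances); it is not a description of Tesla's actual pipeline, and the function name is hypothetical.

```python
def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse (value, variance) pairs into one (value, variance) estimate."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    radar = (50.0, 0.25)   # hypothetical radar range and variance, in m and m^2
    camera = (54.0, 1.0)   # hypothetical camera range and variance
    print(fuse_estimates([radar, camera]))
```

The fused variance is always smaller than that of the best single input, which is the quantitative sense in which fusion yields a "more accurate understanding" of the surroundings.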

Mapping and Localization

Tesla vehicles use high-definition maps to help with localization. GPS data is combined with information from sensors to position the vehicle on the road precisely.
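Combining GPS with on-board sensors is often done with a Kalman filter. The sketch below is a one-dimensional version that tracks position along the road: it predicts forward using measured speed, then corrects with a GPS fix. It is a generic illustration with hypothetical names and noise values, not the localization method of any particular vehicle.

```python
def predict(position_m: float, variance: float, speed_mps: float,
            dt_s: float, process_variance: float) -> tuple[float, float]:
    """Move the estimate forward using speed; uncertainty grows."""
    return position_m + speed_mps * dt_s, variance + process_variance


def update(position_m: float, variance: float,
           gps_position_m: float, gps_variance: float) -> tuple[float, float]:
    """Correct the estimate with a GPS fix; uncertainty shrinks."""
    gain = variance / (variance + gps_variance)
    corrected = position_m + gain * (gps_position_m - position_m)
    return corrected, (1.0 - gain) * variance


if __name__ == "__main__":
    pos, var = 0.0, 1.0
    # Hypothetical GPS fixes arriving every 0.1 s while driving at 10 m/s.
    for gps_fix in (1.2, 1.9, 3.1):
        pos, var = predict(pos, var, speed_mps=10.0, dt_s=0.1, process_variance=0.5)
        pos, var = update(pos, var, gps_fix, gps_variance=1.5)
        print(pos, var)
```

The gain decides how much to trust each source: when the prediction is uncertain the GPS fix dominates, and when GPS is noisy the motion-based prediction does.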

Control Algorithms

Sophisticated control algorithms are used to make driving decisions, such as maintaining the vehicle within lanes, changing lanes when necessary, and navigating complex traffic scenarios.
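As a very small example of a lane-keeping control law, the sketch below computes a steering command proportional to the lateral offset and heading error, clipped to a maximum angle. Real systems use far more elaborate controllers; the gains, the limit, the sign conventions, and the function name are all assumptions made for illustration.

```python
def lane_keep_steering(
    lateral_offset_m: float,     # + = car is left of the lane centre (assumed)
    heading_error_rad: float,    # + = car points left of the lane direction
    k_offset: float = 0.1,       # hypothetical gain, rad per metre
    k_heading: float = 0.8,      # hypothetical gain, rad per rad
    max_steer_rad: float = 0.5,  # assumed steering limit
) -> float:
    """Return a steering angle in radians, + = steer left."""
    # Steer against both errors so the car returns to the lane centre.
    steer = -(k_offset * lateral_offset_m + k_heading * heading_error_rad)
    return max(-max_steer_rad, min(max_steer_rad, steer))


if __name__ == "__main__":
    print(lane_keep_steering(1.0, 0.0))    # left of centre: steer right
    print(lane_keep_steering(-0.5, 0.05))  # right of centre, pointing left
```

Including the heading term damps the response: the command eases off once the car is already pointing back toward the lane centre, instead of overshooting it.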

What is The Future of Advanced Driver Assistance Systems?

ADAS continues to evolve, and several trends are likely to shape where it goes next.

Increased Automation

While Level 2 automation (e.g., Tesla’s Autopilot) was common in 2021, Level 3 and Level 4 systems, which require less driver intervention, are expected to become more prevalent.

Better Sensor Technology

The future of ADAS will likely involve the integration of more advanced sensors, higher-resolution cameras, and improved LiDAR and radar systems to provide a more accurate and comprehensive view of the vehicle’s surroundings.

User Experience and Human-Machine Interaction

The design of user interfaces and the interaction between humans and AI-driven vehicles will be critical to ADAS development. Natural language processing (NLP) and computer vision will play a role in creating more intuitive and user-friendly interfaces.

Environmental Impact

There will be a growing emphasis on reducing the environmental impact of transportation. ADAS may play a role in optimizing driving patterns for fuel efficiency and reducing emissions.

Conclusions

Advanced Driver Assistance Systems, built on industry-leading computer vision technology, represent a pivotal technological frontier in the automotive industry. Leveraging cutting-edge developments in artificial intelligence, deep learning, and sensor technology, ADAS has moved beyond conventional driver assistance into a sophisticated suite of tools designed to enhance safety, automation, and user experience. The integration of high-resolution cameras, LiDAR, radar, and ultrasonic sensors, combined with the ever-advancing capabilities of neural networks and reinforcement learning algorithms, empowers ADAS to navigate complex real-world driving scenarios with unprecedented precision.

At this point, we’re evolving well beyond the scope of driver assistance and into the domain of autonomous driving. But no matter how far our solutions advance, they’ll all owe their origins to the building blocks of our lifesaving core ADAS technology.

References

- World Health Organization Statistics: https://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries

- Continental ARS640: https://www.continental-automotive.com/en-gl/Passenger-Cars/Autonomous-Mobility/Enablers/Radars/Long-Range-Radar/ARS640

- Continental SRR520: https://www.continental-automotive.com/en-gl/Passenger-Cars/Autonomous-Mobility/Enablers/Radars/Short-Range-Radar/SRR520

- Continental MFC500: https://www.continental-automotive.com/en-gl/Passenger-Cars/Autonomous-Mobility/Enablers/Cameras/Mono-Camera

- InnovizTwo LiDAR: https://innoviz.tech/innoviztwo