Real-time object detection has become essential for many practical applications, and the YOLO (You Only Look Once) series has long been a state-of-the-art model family, offering a robust balance between speed and accuracy. Until now, the inefficiency of attention mechanisms has hindered their adoption in high-speed systems like YOLO. YOLOv12 aims to change this by integrating attention mechanisms into the YOLO framework.

YOLOv12 combines the fast inference speeds of CNN-based models with the enhanced performance that attention mechanisms bring.

- What’s new in YOLOv12?

- Architecture Overview

- Benchmarks

- Limitation

- Inference experiments

- Conclusion

- Key Takeaways

- References


What’s new in YOLOv12?

Most object detection architectures have traditionally relied on Convolutional Neural Networks (CNNs) due to the inefficiency of attention mechanisms, which struggle with quadratic computational complexity and inefficient memory access operations. As a result, CNN-based models generally outperform attention-based systems in YOLO frameworks, where high inference speed is critical.

YOLOv12 seeks to overcome these limitations by incorporating three key improvements:

Area Attention Module (A2):

- YOLOv12 introduces a simple yet efficient Area Attention module (A2), which divides the feature map into segments to reduce the computational complexity of traditional attention while preserving a large receptive field. This lets the model keep a wide field of view while improving speed and efficiency.

Residual Efficient Layer Aggregation Networks (R-ELAN):

- YOLOv12 leverages R-ELAN to address optimization challenges introduced by attention mechanisms. R-ELAN improves on the previous ELAN architecture with:

- Block-level residual connections and scaling techniques to ensure stable training.

- A redesigned feature aggregation method that improves both performance and efficiency.

Architectural Improvements:

- Flash Attention: The integration of Flash Attention addresses the memory access bottleneck of attention mechanisms, optimizing memory operations and enhancing speed.

- Removal of Positional Encoding: By eliminating positional encoding, YOLOv12 streamlines the model, making it both faster and cleaner without sacrificing performance.

- Adjusted MLP Ratio: The expansion ratio of the Multi-Layer Perceptron (MLP) is reduced from 4 to 1.2 to balance the computational load between attention and feed-forward networks, improving efficiency.

- Reduced Block Depth: By decreasing the number of stacked blocks in the architecture, YOLOv12 simplifies the optimization process and enhances inference speed.

- Convolution Operators: YOLOv12 makes extensive use of convolution operations to leverage their computational efficiency, further improving performance and reducing latency.

Architecture Overview of YOLOv12

This section introduces the YOLOv12 framework from the perspective of network architecture. We now elaborate on the three key improvements introduced in the previous section: the Area Attention module, the Residual Efficient Layer Aggregation Network (R-ELAN) module, and the improvements to the vanilla attention mechanism.

Area Attention Module

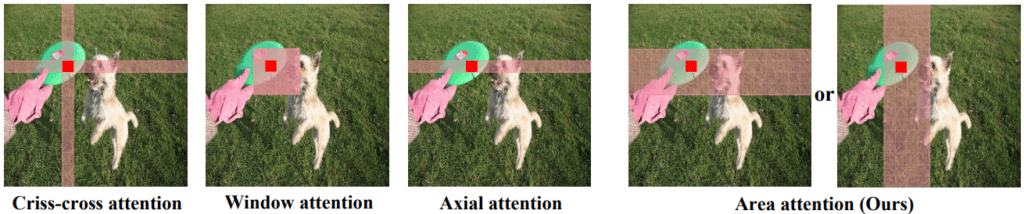

To address the computational cost of vanilla attention, earlier work turned to local attention mechanisms such as shifted window, criss-cross, and axial attention. These methods reduce complexity by transforming global attention into local attention, but they suffer in speed and accuracy, in part because of the reduced receptive field.

- Proposed Solution: YOLOv12 introduces a simple yet efficient Area Attention module. It divides a feature map of resolution (H, W) into L segments of size (H/L, W) or (H, W/L). Rather than using explicit window partitioning, it needs only a simple reshape operation.

- Benefits: With L = 4 segments, the receptive field shrinks to 1/4 of the original but remains larger than in other local attention methods. The computational cost of attention drops from 2n²hd to (n²hd)/2, making the model more efficient with only a small impact on accuracy.
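
The saving can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes n is the number of tokens (H × W), h the number of attention heads, and d the per-head dimension, and all function names are made up for this example. It also hints at why a reshape is enough: in a row-major flattened feature map, each horizontal stripe of H/L rows is already a contiguous run of tokens.

```python
def attention_cost(n: int, h: int, d: int) -> int:
    """Approximate cost of vanilla self-attention over n tokens: 2 * n^2 * h * d."""
    return 2 * n * n * h * d


def area_attention_cost(n: int, h: int, d: int, num_areas: int = 4) -> int:
    """Cost when the n tokens are split into equal areas that attend independently."""
    if n % num_areas != 0:
        raise ValueError("n must be divisible by num_areas")
    tokens_per_area = n // num_areas
    return num_areas * attention_cost(tokens_per_area, h, d)


def split_into_areas(height: int, width: int, num_areas: int = 4) -> list:
    """Group row-major token indices of an H x W map into stripes of H/L rows."""
    if height % num_areas != 0:
        raise ValueError("height must be divisible by num_areas")
    tokens = list(range(height * width))
    area_size = (height // num_areas) * width
    # Each stripe is a contiguous slice, so no explicit window partitioning is needed.
    return [tokens[i:i + area_size] for i in range(0, len(tokens), area_size)]


# Hypothetical sizes: a 20 x 20 feature map, 8 heads, head dimension 32.
full = attention_cost(n=400, h=8, d=32)
area = area_attention_cost(n=400, h=8, d=32)  # 4 areas by default
print(full, area, full // area)  # 81920000 20480000 4
```

With four areas the cost is a quarter of the full-attention cost, which matches the drop from 2n²hd to (n²hd)/2 described above.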

Residual Efficient Layer Aggregation Networks (R-ELAN)

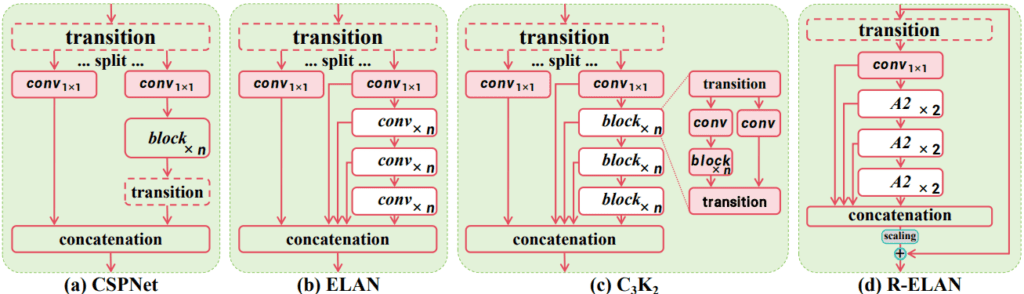

ELAN Overview:

Efficient Layer Aggregation Networks (ELAN) were used in earlier YOLO models to improve feature aggregation. ELAN works by:

- Splitting the output of a 1×1 convolution layer.

- Processing these splits through multiple modules.

- Concatenating the outputs before applying another 1×1 convolution to align the final dimensions.

Issues with ELAN:

- Gradient blocking: Causes instability due to a lack of residual connections from input to output.

- Optimization challenges: The attention mechanism and architecture can lead to convergence problems, with L- and X-scale models failing to converge or remaining unstable, even with Adam or AdamW optimizers.

Proposed Solution – R-ELAN:

- Residual connections: Introduces residual shortcuts from input to output with a scaling factor (default 0.01) to improve stability.

- Layer scaling analogy: Similar to layer scaling used in deep vision transformers but avoids the slowdown which results from applying the layer scaling to every area attention module.
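
As a rough illustration of the scaled shortcut, the sketch below uses plain Python lists instead of tensors. It assumes the scaling factor multiplies the block's output before it is added back to the input, in the spirit of layer scaling; the function name and the toy block are hypothetical and are not taken from the YOLOv12 code.

```python
def scaled_residual(x, block, scale=0.01):
    """Return x + scale * block(x), element-wise, for a list of floats.

    Assumption: the scale is applied to the block branch, not to the shortcut.
    """
    y = block(x)
    return [xi + scale * yi for xi, yi in zip(x, y)]


def noisy_block(values):
    """Toy stand-in for an attention block whose output is 100x too large."""
    return [100.0 * v for v in values]


x = [1.0, 2.0, -0.5]
out = scaled_residual(x, noisy_block)
# The small scale keeps the block's contribution on the same order as the input.
print(out)
```

Because the block branch starts with a small weight, the output stays close to the identity path at first, which is one intuition for why this kind of shortcut can stabilize training.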

New Aggregation Approach:

- Modified design: Instead of splitting the output after the transition layer, the new approach adjusts the channel dimensions and creates a single feature map.

- Bottleneck structure: Processes the feature map through subsequent blocks before concatenation, forming a more efficient aggregation method.

Architectural Improvements in YOLOv12

- Flash Attention: YOLOv12 leverages Flash Attention, which minimizes memory access overhead. This addresses the main memory bottleneck of attention, closing the speed gap with CNNs.

- MLP Ratio Adjustment: The feed-forward network expansion ratio is reduced from the usual 4 (in Transformers) to about 1.2 in YOLOv12. This prevents the MLP from dominating runtime and thus improves overall efficiency.

- Removal of Positional Encoding: YOLOv12 omits explicit positional encodings in its attention layers. This makes the model “fast and clean” with no loss in detection performance.

- Reduction of Stacked Blocks: Recent YOLO backbones stacked three attention/CNN blocks in the last stage; YOLOv12 instead uses only a single R-ELAN block in that stage. Fewer sequential blocks ease optimization and improve inference speed, especially in deeper models.

- Convolution Operators: The architecture uses convolutions with batch norm instead of linear layers with layer norm, in order to fully exploit the efficiency of convolution operators.
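
To get a feel for the MLP ratio change, the sketch below counts weights in a two-layer feed-forward block and compares them with the attention projections. It is a back-of-the-envelope estimate under stated assumptions: biases are ignored, the hidden width is rounded down with `int()`, the attention block is assumed to have four dim × dim projections (query, key, value, output), and the channel width of 256 is a hypothetical value. The actual YOLOv12 layers may differ in all of these details.

```python
def mlp_params(dim: int, ratio: float) -> int:
    """Weights in a two-layer MLP: dim -> hidden -> dim, biases ignored."""
    hidden = int(dim * ratio)  # assumed rounding; real implementations may differ
    return 2 * dim * hidden


def attention_params(dim: int) -> int:
    """Weights in the query, key, value and output projections, biases ignored."""
    return 4 * dim * dim


dim = 256  # hypothetical channel width
print(attention_params(dim))   # 262144
print(mlp_params(dim, 4.0))    # 524288
print(mlp_params(dim, 1.2))    # 157184
```

Under these assumptions, a ratio of 4 gives the MLP twice as many weights as the attention projections, while a ratio of 1.2 brings it below them, which shifts more of the compute budget toward attention.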

Benchmarks of YOLOv12

Dataset: All models were evaluated on the MS COCO 2017 object detection benchmark.

YOLOv12-N Performance: The smallest model, YOLOv12-N, achieves 40.6% mAP, higher than both YOLOv10-N (38.5%) and YOLOv11-N (39.4%), while maintaining similar inference latency.

YOLOv12-S vs. RT-DETR: The YOLOv12-S model also outperforms RT-DETR models. Notably, it runs about 42% faster than the RT-DETR-R18 model, while using only ~36% of the computation and ~45% of the parameters of RT-DETR-R18.

Each YOLOv12 model (N through X) yields better mAP at comparable or lower latency than similarly sized models from YOLOv8, YOLOv9, YOLOv10, YOLOv11, etc. This advantage holds from small models to the largest, demonstrating the scalability of YOLOv12’s improvements.

Limitation of YOLOv12

A current limitation of YOLOv12 is its reliance on FlashAttention for optimal speed. FlashAttention is only supported on relatively modern GPU architectures (NVIDIA Turing, Ampere, Ada Lovelace, or Hopper families) such as Tesla T4, RTX 20/30/40-series, A100, H100, etc.

This means older GPUs that lack these architectures cannot fully benefit from YOLOv12’s optimized attention implementation. Users on unsupported hardware would have to fall back to standard attention kernels, losing some speed advantage.
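
A quick way to check whether a GPU belongs to one of these families is to look at its CUDA compute capability. The sketch below is a hedged example: the mapping from compute capability to architecture name is based on NVIDIA's public tables and is an assumption here, not something taken from the YOLOv12 authors, and the helper names are hypothetical. Architectures not mentioned above are deliberately left out.

```python
# Assumed compute capabilities for the architectures named in the text.
FLASH_ATTENTION_ARCHS = {
    (7, 5): "Turing",        # e.g. T4, RTX 20 series
    (8, 0): "Ampere",        # e.g. A100, A30
    (8, 6): "Ampere",        # e.g. RTX 30 series, A40
    (8, 9): "Ada Lovelace",  # e.g. RTX 40 series
    (9, 0): "Hopper",        # e.g. H100
}


def flash_attention_arch(major: int, minor: int):
    """Return the architecture name for a compute capability, or None if unlisted."""
    return FLASH_ATTENTION_ARCHS.get((major, minor))


def current_gpu_arch():
    """Look up the first CUDA device through PyTorch, if both are available."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    major, minor = torch.cuda.get_device_capability(0)
    return flash_attention_arch(major, minor)


print(flash_attention_arch(7, 5))  # Turing
print(flash_attention_arch(6, 1))  # None (a Pascal-class capability, not listed)
```

Calling `current_gpu_arch()` on a machine with PyTorch and a CUDA device returns the matching name, or `None` when the GPU is not in the table.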

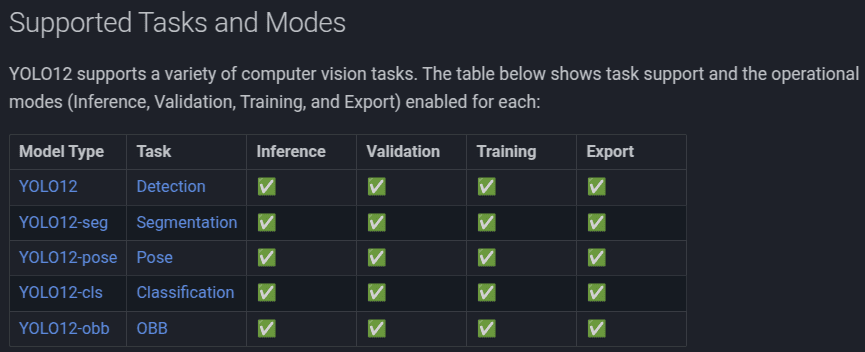

How to use YOLOv12 from Ultralytics?

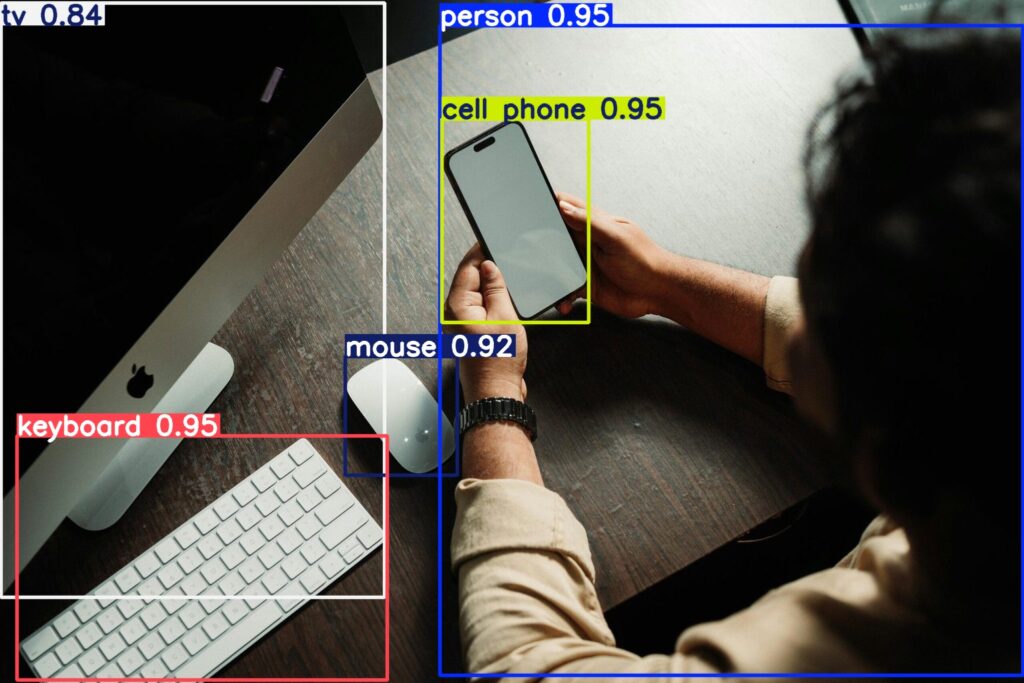

Ultralytics lists the tasks that YOLO12 supports, as shown in the image above.

The Ultralytics YOLO12 implementation, by default, does not require Flash Attention. However, Flash Attention can be optionally compiled and used with YOLO12. To compile Flash Attention, one of the following NVIDIA GPUs is needed:

- Hopper GPUs (e.g., H100/H200)

- Turing GPUs (e.g., T4, Quadro RTX series)

- Ampere GPUs (e.g., RTX 30 series, A30/A40/A100)

- Ada Lovelace GPUs (e.g., RTX 40 series)

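Let’s look at a simple implementation of YOLO12 using the Ultralytics pipeline:

```python
# Notebook cell: install the Ultralytics package.
!pip install -q ultralytics

from ultralytics import YOLO

# Load the pretrained medium-sized YOLO12 weights.
model = YOLO("yolo12m.pt")

# Placeholder path; point this at your own image.
img_path = "path/to/image.jpg"

# Keep detections with confidence >= 0.5 and save the annotated image.
results = model(img_path, save=True, conf=0.5)
```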

To experiment further and get hands-on experience with the Ultralytics pipeline, you can download the accompanying notebook, which also includes the procedure for setting up Flash Attention.

Inference experiments

Conclusion

The architecture of YOLOv12 is a significant step forward in real-time object detection. By incorporating Area Attention, R-ELAN, and architectural improvements such as Flash Attention, MLP ratio adjustment, and the removal of positional encoding, YOLOv12 offers a model that is faster, more efficient, and more accurate than its predecessors.

It sets a new standard for the efficient use of attention mechanisms in high-speed vision systems.

Key Takeaways on YOLOv12

- Architectural changes to incorporate attention mechanism

- Area Attention module: Provides much faster inference with minimal accuracy loss.

- R-ELAN module: Ensures that even very deep or large attention-based models converge reliably.

- Flash Attention integration: Reduces the memory access overhead of attention.

- It achieves state-of-the-art detection accuracy while also delivering lower latency.

References

- YOLOv12

- Special thanks to Muhammad Rizwan Munawar for providing the comparison results between YOLOv12 and YOLO11.

- Github repo of YOLOv12: https://github.com/sunsmarterjie/yolov12/tree/49854964f68b9a49eadd95eb2aaf4a838ba72143
