Long videos are brutal for today’s Large Vision-Language Models (LVLMs). A 30-60 minute clip contains thousands of frames, multiple speakers, on-screen text, and objects that appear, disappear, and reappear across scenes. Push too many frames into the model and you blow past the context window; push too few and you miss the moment that actually answers the question. The usual fixes have their own costs: long-context LVLMs are compute-hungry and show diminishing returns, while GPT-based agent pipelines are accurate but slow and costly. Video-RAG avoids both pain points, delivering strong, scalable long-video understanding with minimal overhead.

Video-RAG takes a third path. Instead of forcing the model to “see everything,” it retrieves only what matters. It uses open-source tools to extract auxiliary text from the video, then feeds that text, along with a small set of frames, into any LVLM. The payoff is consistent accuracy gains on long-video benchmarks such as Video-MME, MLVU, and LongVideoBench. The cost is only about 1.9K tokens of auxiliary text and modest latency/VRAM overhead.

By the end, we’ll understand:

- The core intuition behind replacing visual tokens with visually aligned text.

- The three-phase pipeline (Query Decouple → Auxiliary Text Generation & Retrieval → Integration & Generation).

- The exact tools (EasyOCR, Whisper, CLIP, APE, Contriever, FAISS) and how they fit together.

- The knobs that matter in practice (frame count, retrieval thresholds, modality ablations) and how to set them.

- How to replicate results and implement the method in our own stack.

- What is Video-RAG

- The Video-RAG Pipeline (end-to-end)

- Phase 0 (context): What the baseline does

- Query Decouple – tell the system what to fetch (and why)

- Auxiliary Text Generation & Retrieval – build small, smart evidence stores

- Integration & Generation – answer once, with grounded evidence

- Components and Tools (at a glance)

- When to rely on which modality

- Implement Video-RAG

- Tuning & trade-offs (defaults that work)

- Conclusion

- References

1. What is Video-RAG?

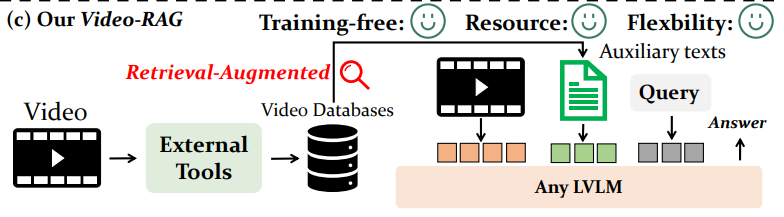

Video-RAG is a training-free, plug-and-play pipeline that improves long-video understanding. It gives any Large Vision-Language Model (LVLM) access to concise, query-relevant auxiliary text that is visually aligned with the video and extracted from it with open-source tools. Instead of forcing the LVLM to ingest hundreds of frames (and blow the context window), Video-RAG replaces many of those visual tokens with compact text that points at the right evidence.

Core idea in one sentence

Retrieve the words the video already contains – spoken phrases (ASR), on-screen text (OCR), and object facts (DET: counts, locations, relations) – and feed those words, plus a few key frames, to your LVLM in a single pass.

1.1 Key Properties of Video-RAG

- Training-free: no finetuning the LVLM; we run a pipeline around it.

- Model-agnostic: works with Long-LLaVA, LLaVA-Video, Qwen-VL, etc.

- Single-turn & efficient: one retrieval step + one LVLM forward pass.

- Visually aligned: every snippet is tied to frames/timestamps or boxes.

- Open-source stack: EasyOCR, Whisper, CLIP, APE (detector), Contriever, FAISS.

- Proven uplift: consistent gains on Video-MME/MLVU/LongVideoBench; best setting in the paper adds ~1.9K aux tokens and ~5–11 s latency with ~8 GB extra VRAM.

1.2 What does “visually aligned auxiliary text” mean?

Video-RAG builds three per-video stores before you ask a question:

| Modality | What it captures | How it’s built | Why it helps |
|---|---|---|---|
| OCR | On-screen text | EasyOCR → Contriever → FAISS | Names, signs, and UI labels that the LVLM can misread from pixels. |
| ASR | Spoken content | Whisper → Contriever → FAISS | Narration, events, and causal links that are not visible in frames. |
| DET | Objects & geometry | CLIP-gated keyframes → APE detector → scene-graph text (counts, locations, relations) | Makes quantities and positions explicit, reducing hallucinations. |

Scene-graph text is crucial: detections are rewritten as human-readable facts like

- “books: 2; table: 1” (counts),

- “table-1 at room center; books on table-1” (locations),

- “cup-3 left-of kettle-1” (relations).
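To make the serialization concrete, here is a minimal Python sketch. It turns detections into counts, coarse locations, and left-of relations, and it emits only the fact types that the plan's TYPE field asks for. This is our own illustration, not code from the Video-RAG repo. The following are all assumptions:

- the (label, box) input format with normalized (x1, y1, x2, y2) boxes,
- the 3×3 location grid,
- instance ids such as cup-1,
- the helper names scene_graph_text and location_name.

Relations such as "on" would also need vertical-overlap checks, which are omitted here.

```python
from collections import Counter


def location_name(box):
    """Map a normalized (x1, y1, x2, y2) box to a coarse 3x3 grid cell name (assumed scheme)."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    col = ["left", "center", "right"][min(int(cx * 3), 2)]
    row = ["top", "middle", "bottom"][min(int(cy * 3), 2)]
    return "center" if (row, col) == ("middle", "center") else f"{row}-{col}"


def scene_graph_text(detections, types=("num", "loc", "rel")):
    """Serialize detections [(label, box), ...] into short scene-graph facts.

    Only the fact types listed in `types` (the plan's TYPE field) are emitted:
    "num" -> counts, "loc" -> coarse locations, "rel" -> left-of relations.
    """
    # Name each instance "label-k" in detection order, e.g. "cup-1", "cup-2".
    seen = Counter()
    named = []
    for label, box in detections:
        seen[label] += 1
        named.append((f"{label}-{seen[label]}", box))

    facts = []
    if "num" in types:
        counts = Counter(label for label, _ in detections)
        facts.append("; ".join(f"{label}: {n}" for label, n in counts.items()))
    if "loc" in types:
        facts.append("; ".join(f"{name} at {location_name(box)}" for name, box in named))
    if "rel" in types:
        rels = []
        for i, (a, box_a) in enumerate(named):
            for b, box_b in named[i + 1:]:
                # Horizontal relation only: compare box centers along x.
                xa = (box_a[0] + box_a[2]) / 2
                xb = (box_b[0] + box_b[2]) / 2
                if xa < xb:
                    rels.append(f"{a} left-of {b}")
                elif xa > xb:
                    rels.append(f"{b} left-of {a}")
        facts.append("; ".join(rels))
    # Drop empty fact strings (e.g., no detections at all).
    return [f for f in facts if f]


if __name__ == "__main__":
    dets = [("cup", (0.1, 0.4, 0.2, 0.5)),
            ("kettle", (0.4, 0.4, 0.6, 0.6)),
            ("cup", (0.7, 0.1, 0.8, 0.2))]
    for fact in scene_graph_text(dets):
        print(fact)
```

For the three example detections, the function returns three facts:

- "cup: 2; kettle: 1"
- "cup-1 at middle-left; kettle-1 at center; cup-2 at top-right"
- "cup-1 left-of kettle-1; cup-1 left-of cup-2; kettle-1 left-of cup-2"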

1.3 What Video-RAG is not

- Not a replacement for video encoders or frame sampling – it augments them.

- Not a multi-turn planner – one retrieval step, one generation step.

- Not a full transcript dump – it filters aggressively to stay within context.

A quick, concrete example

Question: “Which decoration appears most on the Christmas tree?”

Plan: ASR: null, DET: ["apples","candles","berries"], TYPE: ["num"]

DET scene-graph counts: apples: 9; candles: 3; berries: 5

Answer: “Apples.” (grounded, explainable, and reproducible from the stored DET text)

2. The Video-RAG Pipeline (end-to-end)

2.1 Phase 0 (context): What the baseline does

A vanilla LVLM samples a handful of frames, encodes them, and answers in a single pass:

$$O = \mathrm{LVLM}(F_v, Q)$$

Here Q is the question, F_v are the visual features of the sampled frames F (from a CLIP-like encoder), and O is the generated answer. This breaks down on long videos because the right evidence may be off-frame (spoken words, tiny labels, objects outside the sampled set) or too far back in time.
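Uniform sampling shows why a fixed frame budget struggles. It is a common choice for the frame sampler; we assume it here, though other samplers exist. The hypothetical helper below picks the middle frame of each of N equal segments.

For a 30-minute clip at 30 fps (54,000 frames) and N = 32, consecutive samples are about 56 seconds apart. A short on-screen label or a brief action can easily fall between them.

```python
def uniform_frame_indices(total_frames: int, num_frames: int = 32) -> list[int]:
    """Pick `num_frames` indices spread evenly over a video of `total_frames` frames.

    Each index is the middle frame of one of `num_frames` equal-length segments.
    (Uniform sampling is an assumption for illustration, not the paper's sampler.)
    """
    if total_frames <= 0 or num_frames <= 0:
        return []
    num_frames = min(num_frames, total_frames)  # cannot sample more frames than exist
    segment = total_frames / num_frames
    return [int(segment * i + segment / 2) for i in range(num_frames)]


if __name__ == "__main__":
    idx = uniform_frame_indices(54_000, 32)        # 30 min at 30 fps
    gap_seconds = (idx[1] - idx[0]) / 30
    print(len(idx), round(gap_seconds, 1))         # 32 frames, ~56 s apart
```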

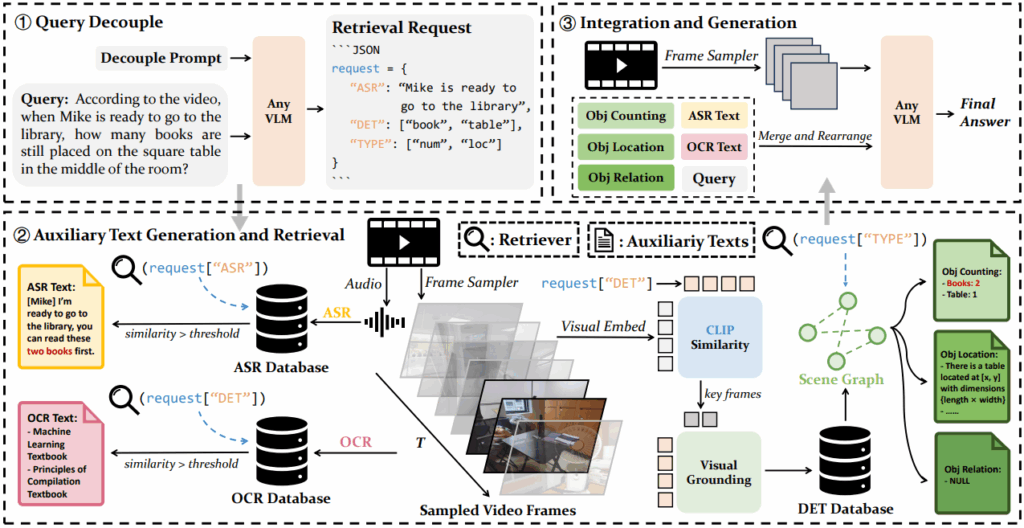

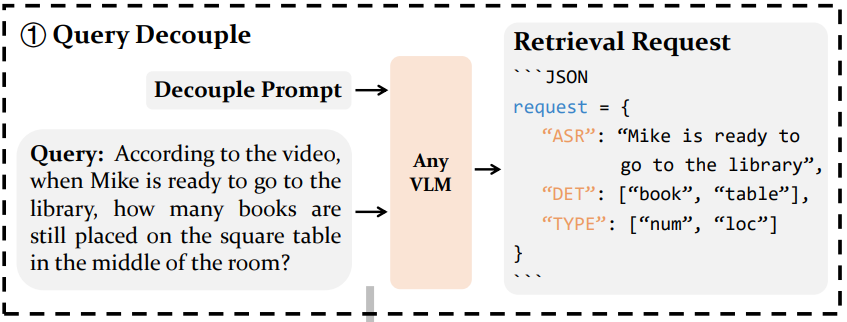

2.2 Query Decouple – tell the system what to fetch (and why)

Why it exists

Long videos contain far more text/audio/objects than you can feed to an LVLM. If you don’t narrow the search, retrieval returns redundant chunks, and you blow your token budget. Query decoupling converts a fuzzy question into a precise retrieval plan.

How it helps

It prevents running heavy tools (ASR, detection) everywhere and focuses retrieval on what matters for the current question.

What it does

We prompt the LVLM (text-only) to emit a compact, JSON-like spec:

- R_asr: what to listen for (a short clause).
- R_det: concrete objects to look for (nouns).
- R_type: which facts are needed, from ["num", "loc", "rel"] (counts, locations, relations).

Example (counting on a table):

```json
{
  "ASR": "when Mike is ready to go to the library",
  "DET": ["book", "table"],
  "TYPE": ["num", "loc"]
}
```
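In practice, the LVLM may wrap the spec in extra text, or return null or a bare string where a list is expected. A defensive parser like the sketch below keeps the rest of the pipeline simple. The RetrievalPlan class and parse_plan function are our own hypothetical names; the ASR/DET/TYPE keys come from the spec above.

A null ASR field becomes None, meaning "skip the ASR store". Unknown TYPE values are dropped.

```python
import json
import re
from dataclasses import dataclass, field
from typing import List, Optional

ALLOWED_TYPES = {"num", "loc", "rel"}


@dataclass
class RetrievalPlan:
    asr: Optional[str] = None                       # what to listen for (None = skip ASR)
    det: List[str] = field(default_factory=list)    # concrete object nouns for DET
    types: List[str] = field(default_factory=list)  # subset of {"num", "loc", "rel"}


def _as_list(value):
    """Accept a list, a single string, or null from the LVLM output."""
    if isinstance(value, str):
        return [value]
    if isinstance(value, (list, tuple)):
        return list(value)
    return []


def parse_plan(llm_output: str) -> RetrievalPlan:
    """Parse the LVLM's JSON-like retrieval spec, tolerating text around it."""
    # Grab everything from the first "{" to the last "}" (the JSON object).
    match = re.search(r"\{.*\}", llm_output, flags=re.DOTALL)
    if match is None:
        return RetrievalPlan()
    try:
        raw = json.loads(match.group(0))
    except json.JSONDecodeError:
        return RetrievalPlan()
    asr = raw.get("ASR")
    return RetrievalPlan(
        asr=asr.strip() if isinstance(asr, str) and asr.strip() else None,
        det=[d.strip() for d in _as_list(raw.get("DET")) if isinstance(d, str) and d.strip()],
        types=[t for t in _as_list(raw.get("TYPE")) if isinstance(t, str) and t in ALLOWED_TYPES],
    )
```

Falling back to an empty plan on malformed output is a deliberate choice: the pipeline can then answer from frames alone instead of crashing.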

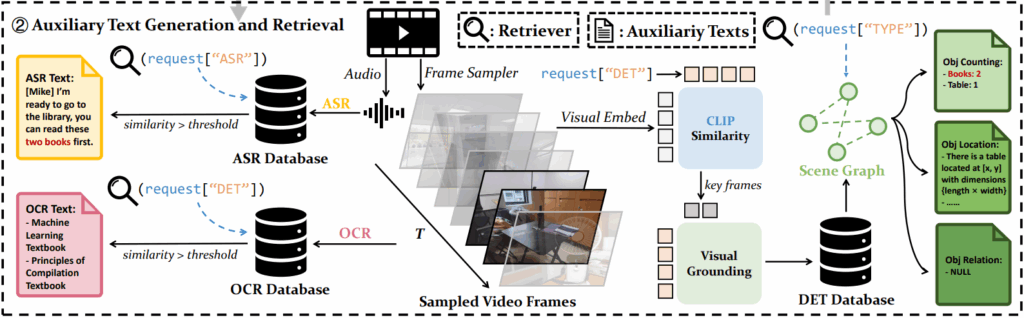

2.3 Auxiliary Text Generation & Retrieval – build small, smart evidence stores

Why it exists

The video already contains the words your LVLM needs – spoken phrases, on-screen text, and object facts – just not in a form the model can efficiently use. This phase extracts those words and indexes them for targeted lookup.

We build three per-video stores in parallel (once per video), then retrieve only the parts relevant to R.

- OCR store – “read” the video

- Why: Names, jersey numbers, street signs, UI labels—models often mangle these from pixels.

- Retrieve: search by similarity to the concatenated plan+query (below).

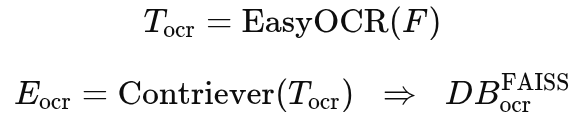

- Build:

- ASR store – “listen” to the video

- Why: Speech carries timeline and causality (who did what, when) – often invisible in frames.

- Build:

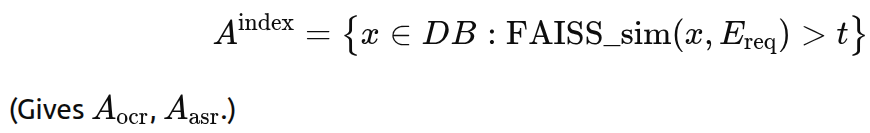
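Both text stores start the same way: timestamped text is grouped into sentence-level chunks before embedding. The sketch below makes these assumptions:

- ASR segments are shaped like the "segments" list returned by openai-whisper's transcribe(), i.e. dicts with start, end, and text.
- For OCR, you could build the same structure by joining the strings that EasyOCR's readtext() returns for a frame and using that frame's timestamp as start and end.

The chunk_segments function, the Chunk class, and the 40-word cap are hypothetical choices, not taken from the Video-RAG code.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    start: float  # seconds
    end: float    # seconds
    text: str


def chunk_segments(segments, max_words=40):
    """Group timestamped segments into sentence-level chunks.

    `segments` is a list of dicts with "start", "end", and "text" keys.
    A chunk is closed when its latest text ends a sentence (. ! ?) or when it
    reaches `max_words` words, so chunks stay small enough for sharp retrieval.
    """
    chunks, buf, start = [], [], None
    for seg in segments:
        text = seg["text"].strip()
        if not text:
            continue
        if start is None:
            start = seg["start"]
        buf.append(text)
        end = seg["end"]
        n_words = sum(len(t.split()) for t in buf)
        if re.search(r"[.!?]$", text) or n_words >= max_words:
            chunks.append(Chunk(start, end, " ".join(buf)))
            buf, start = [], None
    if buf:  # flush a trailing, unterminated sentence
        chunks.append(Chunk(start, end, " ".join(buf)))
    return chunks
```

Keeping start/end on every chunk is what makes the text “visually aligned”: each retrieved snippet can be traced back to a moment in the video.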

- DET store – “understand geometry”

- Why: Counts, locations, and relations are where LVLMs hallucinate most. Making these explicit stabilizes answers.

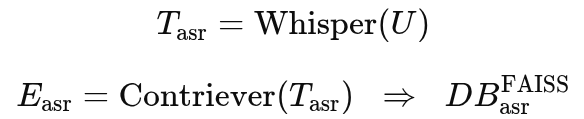

- Keyframe gating (save compute, raise precision): Run detection only on keyframes.

- Open-vocabulary detection + grounding: Store categories + boxes as text.

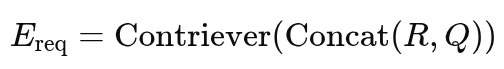

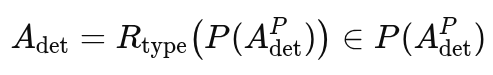
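Once you have a frame-by-object similarity matrix, the gating step reduces to a threshold test. This sketch assumes the scores were already computed with CLIP, for example as cosine similarity between normalized image embeddings and text embeddings of prompts built from R_det. select_keyframes and its optional max_keyframes cap are our own hypothetical additions. The default of 0.3 mirrors the paper's setting, but whether it transfers depends on how your scores are scaled.

```python
def select_keyframes(similarity, threshold=0.3, max_keyframes=None):
    """CLIP-style keyframe gating.

    similarity[i][j] is the image-text score between sampled frame i and
    requested object prompt j. A frame is kept if its best score over the
    requested objects reaches `threshold`. If `max_keyframes` is set, only the
    highest-scoring frames are kept. Indices are returned in temporal order.
    """
    best = [(max(row), i) for i, row in enumerate(similarity) if row]
    kept = [(score, i) for score, i in best if score >= threshold]
    if max_keyframes is not None:
        kept = sorted(kept, reverse=True)[:max_keyframes]
    return sorted(i for _, i in kept)
```

Only the returned frames are passed to the detector, which is where the compute saving comes from.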

- Retrieve only what matters (all stores)

- Build a single request embedding for OCR/ASR.

- Keep chunks with similarity above the threshold. Gives Aocr, Aasr.

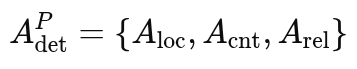

- DET → scene-graph text (so LVLM doesn’t have to “infer” geometry). Scene-Graph Text is a compact, natural-language serialization of a simple scene graph built from object detections on selected keyframes. Instead of handing the LVLM raw boxes or lots of pixels, Video-RAG converts detections into short, readable facts

- Filter by requested info type(s).
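The OCR/ASR retrieval step is a single embed-and-threshold pass. To keep this sketch dependency-free, two stand-ins are used:

- a bag-of-words cosine similarity stands in for Contriever embeddings;
- a linear scan stands in for the FAISS index.

With real Contriever vectors, you would L2-normalize them and search a faiss.IndexFlatIP, whose inner product then equals cosine similarity. The names embed, cosine, and retrieve are hypothetical. Run the function once per store with the same request to get A_ocr and A_asr.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in for Contriever: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse Counter vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks, question, plan_terms, threshold=0.3):
    """Return (score, chunk) pairs with similarity >= threshold, best first.

    The request is the question concatenated with the plan terms (e.g. R_asr),
    embedded once and compared against every chunk in the store.
    """
    request = embed(" ".join([question, *plan_terms]))
    scored = [(cosine(request, embed(c)), c) for c in chunks]
    return sorted([(s, c) for s, c in scored if s >= threshold], reverse=True)
```

Consider the request "When does Mike go to the library?" plus the ASR clause from the plan. A chunk like "Mike is ready to go to the library now." scores about 0.85 and is kept. "The weather is sunny today." scores about 0.24 and falls below t = 0.3.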

Strategy tips

- Threshold t is our sparsity knob. Paper’s sweet spot: t≈0.3 for both FAISS (OCR/ASR) and CLIP keyframes (DET).

- Lower t → more recall but more tokens.

- Higher t → leaner context but risk missing key evidence.

- Chunking matters. Use sentence/phrase-level chunks for OCR/ASR; too large → retrieval is blunt; too small → fragmentation.

- DET scope. Keep prompts concrete and avoid a synonym explosion; CLIP gating already covers some variation.

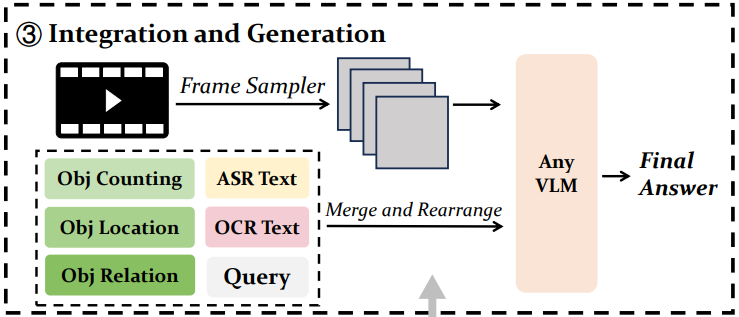

2.4 Integration & Generation – answer once, with grounded evidence

Why it exists

Even perfect retrieval can fail if you hand the LVLM a bag of disjoint facts. Ordering and fusing the evidence with a few frames gives the model a coherent, time-aligned story.

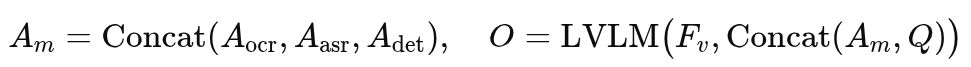

What it does

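Merge the auxiliary texts (OCR, ASR, DET) chronologically and deduplicate near-duplicates. Then concatenate them with the question and pass the result to the LVLM together with a small set of frames:

$$O = \mathrm{LVLM}\big(F_v, \mathrm{Concat}(A_{ocr}, A_{asr}, A_{det}, Q)\big)$$

Here A_ocr, A_asr, and A_det are the retrieved OCR, ASR, and DET texts.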
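Below is a minimal sketch of the merge step. It assumes each retrieved snippet carries a timestamp in seconds and a source tag. Near-duplicates, such as the same caption read by OCR on consecutive frames, are detected with word-level Jaccard similarity. That is a simple heuristic chosen for illustration. The 0.8 cutoff, the prompt template, and the helper names merge_aux and build_prompt are hypothetical. The frames themselves go to the LVLM separately, through its visual input.

```python
import re


def _words(text):
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Word-level Jaccard similarity between two strings."""
    wa, wb = _words(a), _words(b)
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)


def merge_aux(items, dup_threshold=0.8):
    """Merge (timestamp_s, source, text) items chronologically, dropping near-duplicates.

    An item is dropped if its Jaccard similarity to any already kept item
    reaches `dup_threshold`.
    """
    kept = []
    for ts, source, text in sorted(items, key=lambda x: x[0]):
        if any(jaccard(text, k[2]) >= dup_threshold for k in kept):
            continue
        kept.append((ts, source, text))
    return kept


def build_prompt(question, items):
    """Concatenate merged auxiliary text with the question (frames are passed separately)."""
    lines = [f"[{ts:.1f}s][{source}] {text}" for ts, source, text in merge_aux(items)]
    return "Auxiliary information:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
```

Timestamp prefixes let the model relate an ASR line to a DET fact that occurs around the same moment. This is the "coherent, time-aligned story" the section describes.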

2.5 Components and Tools (at a glance)

| Component | What it extracts | Tooling in paper | Indexing / notes |
|---|---|---|---|
| OCR | On-screen text | EasyOCR | FAISS (Contriever embeddings) |
| ASR | Spoken content | Whisper | FAISS (Contriever embeddings) |
| DET | Objects, boxes | APE (open-vocab detector) | Stored as NL + scene-graph (counts, locations, relations) |
| Keyframe gating | Frames likely containing the requested objects | CLIP image–text similarity | Threshold t ≈ 0.3 |
| Embeddings | Textual retrieval | Contriever | FAISS IndexFlatIP |
| LVLMs | Any video-capable model | e.g., Long-LLaVA, LLaVA-Video, Qwen-VL | No fine-tuning required |

2.6 When to rely on which modality

| Situation | Use this first | Why |
|---|---|---|
| Counts, positions, “left of”, “on top of” | DET (scene-graph) | Turns geometry into explicit text; the LVLM doesn’t have to infer it. |
| Who said what, causality across scenes | ASR | Speech anchors events in time; critical for temporal reasoning. |
| Names, IDs, signs, labels, UIs | OCR | Turns pixels into exact strings; reduces hallucinated entities. |
| Mixed questions (e.g., “When X happens, how many Y on Z?”) | ASR + DET | Use ASR to find the moment and DET to count/locate at that moment. |

3. Implement Video-RAG

3.1 Compute & Storage Requirements (practical setup)

Working with Google SigLIP, Facebook Contriever, LLaVA-Video 7B, OpenAI Whisper, and OpenAI CLIP (ViT) means downloading several large model checkpoints and keeping intermediate data (audio, frames, embeddings) on disk. In practice, plan for substantial storage and a GPU with ample VRAM. This matters most if you want smooth experimentation without constantly switching precision or unloading models.

Recommended way to run

If you’re keen to implement Video-RAG end-to-end, we recommend a managed GPU workspace such as RunPod or Lightning Studios. Both let you pick:

- GPU/VRAM to match your budget (e.g., 24 GB L4/A10G for lean builds; 40 GB A100 for faster, FP16-heavy runs).

- An OS template with Python 3.10 (our repo and modified files assume 3.10).

- Sufficient persistent disk so you don’t re-download models between sessions.

3.2 Implementation Scope & Reproducibility of Video-RAG (No-APE Build)

Why we diverged from the official repo

During implementation, we discovered that both the Video-RAG add-on for LLaVA-NeXT and the APE open-vocabulary detector have fallen behind current dependency stacks. In particular, strict/pinned versions triggered incompatibilities (Torch/vision/pycocotools, CUDA wheels, spaCy models, etc.).

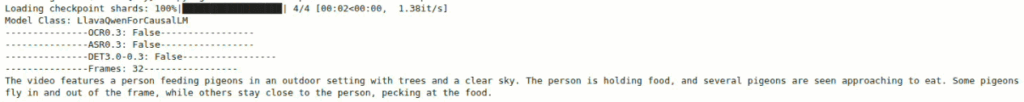

To move forward, we commented out and relaxed several packages in requirements.txt and verified the pipeline end-to-end. This brings the OCR + ASR branches of Video-RAG online reliably. However, because of unresolved APE dependencies, we disable the DET branch (open-vocabulary detection) in our build.

Our model stack for this blog

- Backbone: LLaVA-Video 7B (Qwen2 language model, 32K context window)

- Training sources: LLaVA-Video-178K and LLaVA-OneVision

- RAG modalities enabled: ASR + OCR (DET off)

- Retrieval: Contriever embeddings + FAISS (IndexFlatIP)

- Key defaults: frame sampler ~32 frames; similarity threshold t ≈ 0.3 for retrieval

What’s provided

We include a small patch bundle with modified files that drop into the official LLaVA-NeXT repo:

- requirements.txt (relaxed pins; removes conflicting packages)
- vidrag_pipeline.py (integrated pipeline with DET disabled by default)
- /LLaVA-NeXT/llava/model/builder.py

Click the Download Code button below, unzip, and replace the corresponding files in your local LLaVA-NeXT checkout. Then follow the steps below.

3.3 Inferencing via Video-RAG Pipeline

Step-by-step setup (clean, reproducible)

Clone the LLaVA-NeXT GitHub repository:

```bash
git clone https://github.com/LLaVA-VL/LLaVA-NeXT
cd LLaVA-NeXT
```

If you are not using Lightning Studios or RunPod, create a separate Conda environment with Python 3.10:

```bash
conda create -n llava python=3.10 -y
conda activate llava
```

Install the required packages:

```bash
pip install -e ".[train]"
pip install spacy faiss-cpu easyocr ffmpeg-python
pip install torch==2.1.2 torchaudio numpy
python -m spacy download en_core_web_sm
```

To resolve the spaCy dependency issues, pin these versions:

```bash
pip install spacy==3.8.0
pip install transformers==4.39.0
```

From the official Video-RAG repository (https://github.com/Leon1207/Video-RAG-master), download all the files under the tools directory. Place them in a sub-directory with the same name (tools), which you create manually inside the LLaVA-NeXT directory.

Replace files with our bundle: unzip the download and copy/overwrite the following files in the LLaVA-NeXT directory (all three are included in the bundle):

- requirements.txt
- vidrag_pipeline.py (at the root of LLaVA-NeXT)
- /LLaVA-NeXT/llava/model/builder.py

Install the remaining packages from the patched requirements file:

```bash
pip install -r requirements.txt
```

Our pipeline disables the DET branch by default, so the USE_DET/use_ape flag is set to False. If you want to run Video-RAG on a video file that includes audio, you will have to comment out the corresponding code snippets in vidrag_pipeline.py.

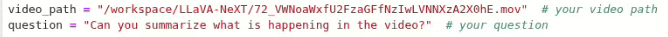

Upload your input video and set the correct path to it in vidrag_pipeline.py. To ask your own questions, modify the question variable and, if required, the response_pmt_0 variable, which passes all of the questions to the LVLM in JSON format. Likewise, take care to pass either question or retrieve_pmt_0 to the llava_inference function defined in vidrag_pipeline.py to get the desired output.

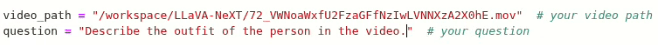

Run the pipeline:

```bash
python vidrag_pipeline.py
```

3.4 Video-RAG Inferencing Code Execution Flow

3.5 Inference Results


4. Tuning & trade-offs (defaults that work)

- Thresholds: start at t=0.3 (both FAISS & CLIP).

- Frames: 32. Only increase if the latency budget allows.

- Aux tokens target: ~1.9K (lean and accurate).

- Latency/VRAM add-on: ~5-11 s and ~8 GB (setup-dependent).

- Modality impact: adding all three (ASR+OCR+DET) is most robust; ASR often gives the single biggest jump; DET stabilizes counts/locations.
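Retrieval at t = 0.3 may still return more text than your budget allows. In that case, a greedy cap keeps the highest-scoring chunks until the auxiliary-token target (about 1.9K here) is reached. In this hypothetical fit_budget helper, token counts default to a whitespace word count, which only approximates the LVLM tokenizer. With a Hugging Face tokenizer you could pass something like lambda s: len(tokenizer(s).input_ids) instead.

```python
def fit_budget(scored_chunks, max_tokens=1900, count_tokens=lambda s: len(s.split())):
    """Keep the highest-scoring chunks whose combined token count fits the budget.

    scored_chunks: list of (score, text). `count_tokens` defaults to a
    whitespace word count, a rough stand-in for the LVLM tokenizer.
    Returns the kept texts in their input order.
    """
    order = sorted(range(len(scored_chunks)), key=lambda i: scored_chunks[i][0], reverse=True)
    kept, used = set(), 0
    for i in order:
        cost = count_tokens(scored_chunks[i][1])
        if used + cost <= max_tokens:  # skip chunks that would overflow the budget
            kept.add(i)
            used += cost
    return [scored_chunks[i][1] for i in sorted(kept)]
```

Returning chunks in their input order, rather than score order, means a chronologically sorted input stays chronological after trimming.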

5. Conclusion

Video-RAG gives long-video systems a practical superpower: it retrieves the right words from the video and uses them to guide the model, instead of brute-forcing more frames into a limited context window. In this blog, we implemented that idea in a clean, reproducible way, focusing on the OCR + ASR branches (no APE) and integrating the pipeline with LLaVA-Video-7B (Qwen2, 32K). The result is a training-free, single-pass workflow that is easy to run, explainable, and cost-aware while still delivering meaningful accuracy gains on narrative, identity, and timeline questions.

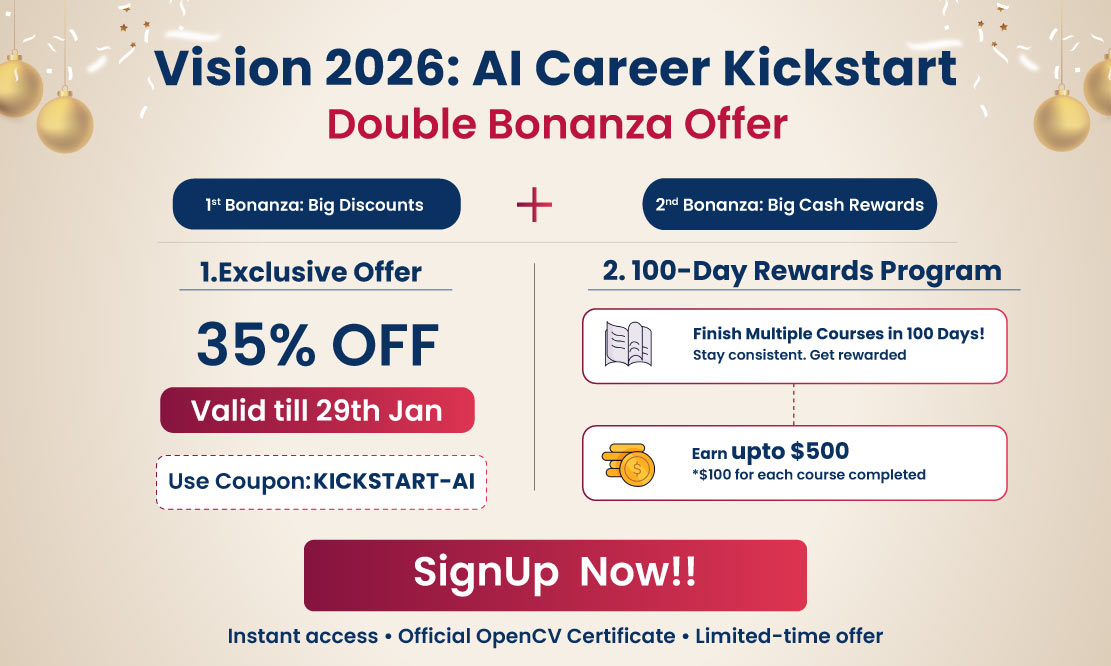
