In this article, you will learn how to build Python-based gesture-controlled applications using AI. We will guide you all the way with step-by-step instructions. I’m sure you will have loads of fun and learn many useful concepts following the tutorial.

Specifically, you will learn the following:

- How to train a custom Hand Detector with Dlib.

- How to cleverly automate the data collection & annotation step with image processing so we don’t have to label anything.

- How to convert normal PC applications like Games and Video Players to be controlled via hand gestures.

Here’s a demo of what we’ll be building in this Tutorial:

Excited yet? If so, keep reading.

Most of you are probably familiar with the dlib library, a popular computer vision library mostly used for landmark detection. If you’re a long-time user of Dlib then you’d know that this library is much more than that.

Dlib contains many interesting application-specific algorithms. For example, it contains methods for facial recognition, tracking, landmark detection, and others. Of course, landmark detection itself can be used to create a variety of other applications like face morphing, emotion recognition, facial manipulation, etc. You can already find plenty of examples of these online, so today I’m going to show you a lesser-known but really interesting ability of Dlib: I’m going to show you step by step how to train a custom Object Detector with Dlib.

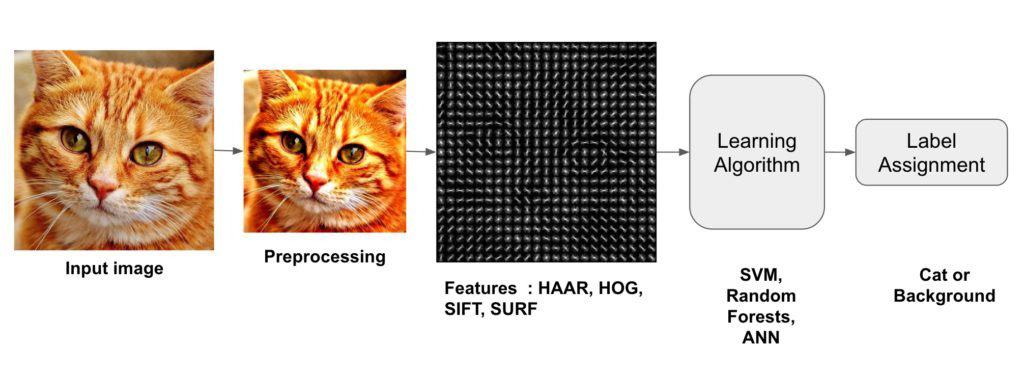

Dlib contains a HOG + SVM based detection pipeline.

Note: OpenCV also contains a HOG + SVM detection pipeline, but personally speaking I find the dlib implementation a lot cleaner, although the OpenCV version gives you a lot more control over different parameters.

What is HOG and SVM?

HOG, or Histogram of Oriented Gradients, is a type of feature descriptor.

What is a feature descriptor?

Feature descriptors are vectors (arrays of numbers). These vectors may look ordinary to you, but for a computer they encode useful information about the image. You can think of a feature descriptor as a representation of an image (or an image patch) that consists of useful information about the image’s content.

For example, a good feature descriptor of a person on a blue background is pretty similar to a feature descriptor of that person on a different background. So with these descriptors you can match images containing the same contents; this way you can do classification, cluster similar images, and do other things.

Before the rise of Deep Learning, we used feature descriptors to grab useful information from the image. (They are still used today when Deep Learning is not an option.)

Now HOG is one of the most powerful feature descriptors out there. Satya has written a great explanation of the details of the HOG feature descriptor; you can read it here.
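To make the idea of a gradient-orientation histogram concrete, here is a minimal pure-Python sketch. It is only an illustration of the core idea, not the HOG variant that Dlib implements: real HOG also splits the image into cells, normalizes over blocks, and interpolates between bins. The function name `orientation_histogram` and the toy patches are assumptions made for this example.

```python
import math

def orientation_histogram(patch, bins=9):
    """Return an L2-normalized histogram of unsigned gradient orientations
    for a 2D grayscale patch given as a list of rows."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    rows, cols = len(patch), len(patch[0])
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            # Central differences approximate the image gradient
            gx = patch[y][x + 1] - patch[y][x - 1]
            gy = patch[y + 1][x] - patch[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Unsigned orientation in [0, 180) degrees
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    norm = math.sqrt(sum(v * v for v in hist)) or 1.0
    return [v / norm for v in hist]

# A vertical edge: dark on the left, bright on the right
edge = [[0, 0, 0, 200, 200, 200] for _ in range(6)]
# The same edge with every pixel 50 levels brighter
brighter_edge = [[v + 50 for v in row] for row in edge]

descriptor = orientation_histogram(edge)
brighter_descriptor = orientation_histogram(brighter_edge)
```

Both patches produce the same descriptor, because a uniform brightness change does not alter the gradients. This is the kind of invariance that makes descriptors more useful than raw pixels.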

With feature descriptors, you can get useful vectors, but you still need a machine learning model to make sense of that vector and give you a prediction. This is where the SVM, or support vector machine, comes in.

SVM is a really strong ML classifier. You can read more about this algorithm here.

So if DL is not an option, then HOG + SVM is one of the strongest classical machine learning approaches you have.

This is what our approach will look like:

So by using HOG as the feature descriptor and SVM as our learning algorithm, we have got ourselves a robust ML image classifier.

But wait! Weren’t we going to make an Object Detector which also outputs the location (bounding box coordinates) of the detected class?

Yes, and it’s pretty easy to convert this classifier to a detector. All you need to use is a Sliding Window. If you don’t know already, a sliding window is exactly what the name suggests: a window that slides over the whole image. You can think of it as a kernel or a filter going over the image. Take a look at the illustration below, in which the window goes over the image from left to right. The window moves by a stride (an amount we set); after it reaches the end of the row, it moves down by a stride amount and moves back to the start of the row.
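The sliding window described above can be sketched as a small generator. This is an illustrative helper, not part of Dlib; the name `sliding_windows` and the frame and window sizes used in the example are assumptions.

```python
def sliding_windows(image_width, image_height, window_width, window_height, stride):
    """Yield (x1, y1, x2, y2) for every window position,
    scanning left to right and then top to bottom."""
    for y in range(0, image_height - window_height + 1, stride):
        for x in range(0, image_width - window_width + 1, stride):
            yield (x, y, x + window_width, y + window_height)

# Example: a 190x190 window moving over a 640x480 frame with a stride of 80
boxes = list(sliding_windows(640, 480, 190, 190, stride=80))
print(len(boxes), boxes[0], boxes[-1])
```

A classifier would be run on the image patch under each of these boxes; the boxes that are classified as the target object become the detections.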

There’s one more thing you need to do to make it a complete detector: add image pyramids. They make your detector scale-invariant by allowing your sliding window to detect your target object at different sizes. You can learn more about image pyramids here.
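As a rough sketch of the idea, the helper below lists the image sizes an image pyramid would contain. The function name `pyramid_sizes`, the scale of 2.0, and the minimum size are assumptions chosen for illustration; Dlib uses its own pyramid settings internally.

```python
def pyramid_sizes(width, height, scale=2.0, min_size=(80, 80)):
    """Return the (width, height) of each pyramid level, largest first.
    Levels stop once the image would be smaller than min_size."""
    sizes = []
    while width >= min_size[0] and height >= min_size[1]:
        sizes.append((width, height))
        width, height = int(width / scale), int(height / scale)
    return sizes

levels = pyramid_sizes(640, 480, scale=2.0, min_size=(80, 80))
print(levels)
```

The same fixed-size window is slid over every level, so a window on a smaller level covers a larger part of the original scene. That is how a single window size can find hands that are close to or far from the camera.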

Why use this approach over a Deep Learning based Detector?

This is a valid question, since you’ll probably end up with a better detector with DL-based approaches. The major benefit here is that with this approach you’ll end up training the detector in a few seconds, using just your CPU and a few data samples.

If you have seen the demo then you would know that our intention in this tutorial is two-fold, so we can split this tutorial into two parts.

- Part 1: Training a Custom Hand Detector with DLIB

- Part 2: Integrating Gesture controls with Applications.

Let’s start with the code for the first part.

Part 1: Training a Custom Hand Detector with DLIB

This part can be split into the following steps:

- Step 1: Data Generation & Automatic Annotation.

- Step 2: Preprocessing Data.

- Step 3: Display Images (Optional)

- Step 4: Train the Detector.

- Step 5: Save & Evaluate the Detector.

- Step 6: Test the Trained Detector on Live Webcam.

- Step 7: How to do Multi-Object Detection (Optional)

Let’s start by importing the required libraries.

# Import Libraries
import dlib
import glob
import cv2
import os
import sys
import time
import numpy as np
import matplotlib.pyplot as plt
import pyautogui as pyg
import shutil

Step 1: Data Generation & Automatic Annotation.

Normally when you’re training a hand detector you’re going to need several images of the hand, and then you’ll need to annotate them, meaning you’ll have to draw bounding boxes over the hand in each image.

So now you have two options:

Option 1: Annotate Images Manually

Record a video of yourself and in the video wave your hand, move it around, rotate a bit, and so on but don’t deform your hand (palm should face the camera each time). After the recording, split the video into images and then download an annotation tool. You can install labelimg (a popular annotation tool) by just doing: pip install labelimg. After that, you have to annotate each image with a bounding box. Now depending upon the number of images this would probably take some hours.

Option 2: Automate the Annotation Process

A smarter way to go about it is to automate this annotation process while you’re collecting training images.

How are we going to do that?

Well, all you need to do is use a sliding window. I’ve already explained what a sliding window is above.

Now what we’re going to do is put our hand inside the window, and whenever the window moves we will move our hand with it. As the window moves, we save the image, and the window box becomes our annotated box.

This way we will automate the annotation process. How cool is that?

The script below does just that: it saves the images in the folder named train_images_h and appends the window box coordinates to a text file (boxes_h.txt).

# If cleanup is True then all previous images and annotations will be deleted.
# If False then the new images and annotations will be appended to the previous ones.
cleanup = True

# Set the window to a normal one so we can adjust it
cv2.namedWindow('frame', cv2.WINDOW_NORMAL)

# Resize the window and move it to the top-left corner
# This is done so we're ready for capturing the images.
cv2.resizeWindow('frame', 1920, 1080)
cv2.moveWindow('frame', 0, 0)

# Initialize webcam (cv2.CAP_DSHOW selects the DirectShow backend on Windows)
cap = cv2.VideoCapture(0, cv2.CAP_DSHOW)

# Initialize sliding window's x1, y1
x1, y1 = 0, 0

# These will be the width and height of the sliding window.
window_width = 190
window_height = 190

# We will save an image every 3rd frame
# This is done so we don't have lots of duplicate images
skip_frames = 3
frame_gap = 0

# This is the directory where our images will be stored
# Make sure to change both names if you're saving a different Detector
directory = 'train_images_h'
box_file = 'boxes_h.txt'

# Initialize the counter to 0; it also names the saved images
counter = 0

# If cleanup is True then delete all images and bounding_box annotations.
if cleanup:
    # Delete the images directory if it exists
    if os.path.exists(directory):
        shutil.rmtree(directory)

    # Clear up all previous bounding boxes
    open(box_file, 'w').close()

elif os.path.exists(box_file):
    # If cleanup is False then we must append the new boxes to the old ones
    with open(box_file, 'r') as text_file:
        box_content = text_file.read()

    # Continue from one past the highest saved index so no image is overwritten
    if box_content:
        counter = int(box_content.split(':')[-2].split(',')[-1]) + 1

# Open up this text file or create it if it does not exist
fr = open(box_file, 'a')

# Create our image directory if it does not exist.
if not os.path.exists(directory):
    os.mkdir(directory)

# Frames waited so far before recording the current row
initial_wait = 0

# Start the loop for the sliding window
while True:
    # Start reading from camera
    ret, frame = cap.read()
    if not ret:
        break

    # Invert the image laterally to get the mirror reflection.
    frame = cv2.flip(frame, 1)

    # Make a copy of the original frame
    orig = frame.copy()

    # Wait for the first 60 frames so that you can place your hand correctly
    if initial_wait > 60:
        # Increment frame_gap by 1.
        frame_gap += 1

        # Move the window to the right by some amount in each iteration.
        if x1 + window_width < frame.shape[1]:
            x1 += 4
            time.sleep(0.1)

        # frame.shape[0] is the frame height; only move down if the next row still fits
        elif y1 + 80 + window_height <= frame.shape[0]:
            # If the sliding window has reached the end of the row then move down by some amount.
            # Also start the window from the start of the row
            y1 += 80
            x1 = 0

            # Setting frame_gap and initial_wait to 0.
            # This is done so that the user has the time to place the hand correctly
            # in the next row before an image is saved.
            frame_gap = 0
            initial_wait = 0

        # Break the loop if we have gone over the whole screen.
        else:
            break

    else:
        initial_wait += 1

    # Save the image every nth frame.
    if frame_gap == skip_frames:
        # Set the image name equal to the counter value
        img_name = str(counter) + '.png'

        # Save the image in the defined directory
        img_full_name = os.path.join(directory, img_name)
        cv2.imwrite(img_full_name, orig)

        # Save the bounding box coordinates in the text file.
        fr.write('{}:({},{},{},{}),'.format(counter, x1, y1, x1+window_width, y1+window_height))

        # Increment the counter
        counter += 1

        # Set the frame_gap back to 0.
        frame_gap = 0

    # Draw the sliding window
    cv2.rectangle(frame, (x1, y1), (x1+window_width, y1+window_height), (0, 255, 0), 3)

    # Display the frame
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) == ord('q'):
        break

# Release camera and close the file and window
cap.release()
cv2.destroyAllWindows()
fr.close()

Note: The code above is structured so that, with cleanup set to False, running it a second time appends the new images to the previous ones. This is done so you can gather more samples at different places with different backgrounds and end up with a diverse dataset. You can choose to delete all previous images by setting the cleanup variable to True.

Before we move forward, you should also know that the detector we’re training is not a full-fledged hand detector; it’s actually a palm detector. This is because a HOG + SVM model is not robust enough to capture the deformation of objects like a hand. If we were training a deep learning based detector then this wouldn’t be much of an issue, but for this case make sure you’re not collecting images of deformed hands with different variations; make sure the palm is facing the camera.
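When appending to an existing dataset, the next image has to use an index one past the highest index already recorded, otherwise the last saved image would be overwritten. Here is a minimal sketch of that bookkeeping, assuming the `index:(x1,y1,x2,y2),` text format written by the script above; `next_image_index` is a hypothetical helper, not something the tutorial defines.

```python
import ast

def next_image_index(box_text):
    """Return the index the next saved image should use,
    given the current contents of the annotation file."""
    if not box_text.strip():
        # Nothing recorded yet, so start from zero
        return 0
    # The file content is a comma-separated run of `index:(x1,y1,x2,y2)` pairs,
    # so wrapping it in braces gives a valid dictionary literal.
    boxes = ast.literal_eval('{' + box_text + '}')
    return max(boxes) + 1

print(next_image_index(''))
print(next_image_index('0:(0,0,190,190),1:(4,0,194,190),'))
```

Using `ast.literal_eval` here only accepts plain literals, so it is a safer choice than `eval` for text read from a file.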

Step 2: Preprocessing Data.

Before you start training you just need to load and preprocess the data (images and labels) slightly so they are in the required format.

First, we will extract all the image names from the images directory. Then we will use the indexes of those images to extract their associated bounding box. The bounding box will be converted to a dlib rectangle format and then the image and its box will be stored together in a dictionary in the format: index: (image, bounding_box) ... At the time of training, we will separate the images from bounding_boxes, for now, we’ll keep them together.

Note: You could get away with just reading all images and labels directly from their locations into a list, but it’s bad practice: if you delete a single image from its directory after recording, the images and labels would no longer line up. Ideally, you should get rid of any image in the train_images_h directory before training if you feel it’s not right.

# ast.literal_eval safely parses the saved annotations
import ast

# In this dictionary our images and annotations will be stored.
data = {}

# Get the indexes of all images.
image_indexes = [int(img_name.split('.')[0]) for img_name in os.listdir(directory)]

# Shuffle the indexes to have random train/test split later on.
np.random.shuffle(image_indexes)

# Open and read the content of the boxes file
with open(box_file, 'r') as f:
    box_content = f.read()

# Convert the bounding boxes to a dictionary in the format `index: (x1,y1,x2,y2)` ...
box_dict = ast.literal_eval('{' + box_content + '}')

# Loop over all indexes
for index in image_indexes:
    # Read the image into memory
    img = cv2.imread(os.path.join(directory, str(index) + '.png'))

    # Read the associated bounding_box
    bounding_box = box_dict[index]

    # Convert the bounding box to dlib format
    x1, y1, x2, y2 = bounding_box
    dlib_box = [dlib.rectangle(left=x1, top=y1, right=x2, bottom=y2)]

    # Store the image and the box together
    data[index] = (img, dlib_box)

Let’s also check the total number of images and boxes present.

print('Number of Images and Boxes Present: {}'.format(len(data)))

Number of Images and Boxes Present: 148

Step 3: Display Images (Optional)

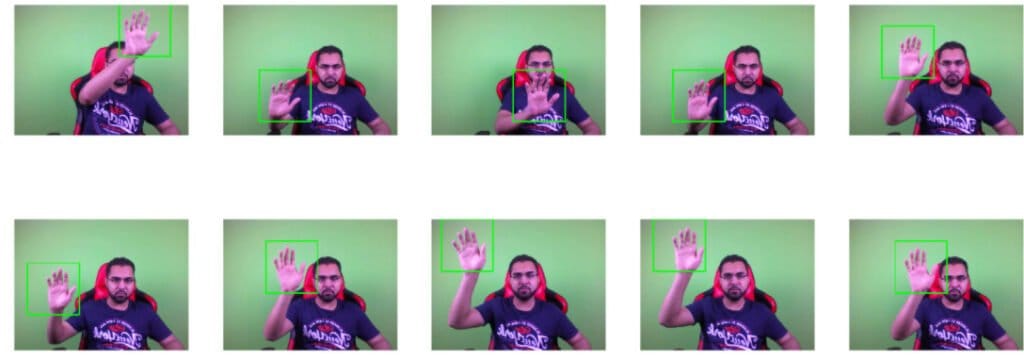

You can optionally choose to display images along with their bounding boxes; this way you can check whether the boxes were drawn properly.

no_of_samples = 10

# Take a subset of the stored image indexes.
# The keys are already in random order because the indexes were shuffled in Step 2.
sample_indexes = list(data.keys())[:no_of_samples]

cols = 5

# Given the number of samples to display, what's the number of rows required.
rows = int(np.ceil(len(sample_indexes) / cols))

# Set the figure size
plt.figure(figsize=(cols*cols, rows*cols))

# Loop over each sample
for i, index in enumerate(sample_indexes):
    # Extract the bounding box coordinates
    d_box = data[index][1][0]
    left, top, right, bottom = d_box.left(), d_box.top(), d_box.right(), d_box.bottom()

    # Copy the image so the rectangle is not drawn onto the training data
    image = data[index][0].copy()

    # Draw a rectangle on the annotated hand
    cv2.rectangle(image, (left, top), (right, bottom), (0, 255, 0), 3)

    # Display the image (reverse the channels from BGR to RGB for matplotlib)
    plt.subplot(rows, cols, i+1)
    plt.imshow(image[:, :, ::-1])
    plt.axis('off')

Step 4: Train the Detector.

You can start training the detector by calling dlib.train_simple_object_detector and passing in a list of images and a list of associated dlib rectangles. First, we will extract the images and bounding box rectangles from our dictionary and then pass them to the training function.

Before you start training you can also specify some training options.

# This is the percentage of data we will use to train
# The rest will be used for testing
percent = 0.8

# How many examples make 80%.
split = int(len(data) * percent)

# Separate the images and bounding boxes into different lists.
images = [tuple_value[0] for tuple_value in data.values()]
bounding_boxes = [tuple_value[1] for tuple_value in data.values()]

# Initialize object detector options
options = dlib.simple_object_detector_training_options()

# I'm disabling the horizontal flipping, because it confuses the detector if you're training on few examples
# By doing this the detector will only detect left or right hand (whichever you trained on).
options.add_left_right_image_flips = False

# Set the C parameter of SVM equal to 5
# A bigger C encourages the model to better fit the training data, it can lead to overfitting.
# So set an optimal C value via trial and error.
options.C = 5

# Note the start time before training.
st = time.time()

# You can start the training now
detector = dlib.train_simple_object_detector(images[:split], bounding_boxes[:split], options)

# Print the total time taken to train the detector
print('Training Completed, Total Time taken: {:.2f} seconds'.format(time.time() - st))

Training Completed, Total Time taken: 22.48 seconds

Step 5: Save & Evaluate the Detector

Save The Trained Detector

You should now save the detector so you don’t have to train it again the next time you want to use it. The extension of the model is .svm as in Support Vector Machine.

file_name = 'Hand_Detector.svm'

detector.save(file_name)

Check the HOG Descriptor:

You can even check out the final HOG descriptor with the code below; the descriptor should look something like the target object. After running this code a window will pop up.

win_det = dlib.image_window()

win_det.set_image(detector)

Check Training Metrics

You can call dlib.test_simple_object_detector() to test your model on training data.

print("Training Metrics: {}".format(dlib.test_simple_object_detector(images[:split], bounding_boxes[:split], detector)))

Training Metrics: precision: 0.991379, recall: 0.974576, average precision: 0.974576

Check Testing Metrics:

Similarly, we can also check the testing metrics by using the remaining 20% of the data

print("Testing Metrics: {}".format(dlib.test_simple_object_detector(images[split:], bounding_boxes[split:], detector)))

Testing Metrics: precision: 1, recall: 0.933333, average precision: 0.933333

Train the Final Detector

We trained the model on 80% of the data. If you’re satisfied with the metrics above then you can now retrain the detector on 100% of the data.

detector = dlib.train_simple_object_detector(images, bounding_boxes, options)

detector.save(file_name)

One thing you may have noted is that the precision of the model is pretty high. This is really good, since we don’t want any false positives when we try to gesture-control games.
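To see why high precision means few false positives, here is a small sketch of how precision and recall are computed from detection counts. The helper `precision_recall` is hypothetical, and the counts are chosen for illustration: they are consistent with the test metrics reported above but are not taken from the original run.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) computed from raw detection counts."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    # Precision: what fraction of reported detections were real hands
    precision = true_positives / predicted if predicted else 0.0
    # Recall: what fraction of the real hands were found
    recall = true_positives / actual if actual else 0.0
    return precision, recall

# Illustrative counts: 28 hands found, no false alarms, 2 hands missed
precision, recall = precision_recall(28, 0, 2)
print('precision: {:.6f}, recall: {:.6f}'.format(precision, recall))
```

A precision of 1 means every reported detection was a real hand, which matters here because a false detection would press a key the user never asked for.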

Step 6: Test the Trained Detector on Live Webcam.

Finally let’s test our detector by doing inference with it. You can load the detector by calling detector = dlib.simple_object_detector(file_name). After loading the detector you can pass in a frame by doing detector(frame), and it will return the bounding box location of the hand if it was detected.

# file_name = 'Hand_Detector.svm'

# Load our trained detector
detector = dlib.simple_object_detector(file_name)

# Set the window name
cv2.namedWindow('frame', cv2.WINDOW_NORMAL)

# Initialize webcam
cap = cv2.VideoCapture(0, cv2.CAP_DSHOW)

# Setting the downscaling size, for faster detection
# If you're not getting any detections then you can set this to 1
scale_factor = 2.0

# Initially the size of the hand and its center x point will be 0
size, center_x = 0, 0

# Initialize these variables for calculating FPS
fps = 0
frame_counter = 0
start_time = time.time()

# Set the while loop
while True:
    # Read frame by frame
    ret, frame = cap.read()
    if not ret:
        break

    # Laterally flip the frame
    frame = cv2.flip(frame, 1)

    # Calculate the Average FPS
    frame_counter += 1
    fps = frame_counter / (time.time() - start_time)

    # Create a clean copy of the frame
    copy = frame.copy()

    # Downsize the frame.
    new_width = int(frame.shape[1]/scale_factor)
    new_height = int(frame.shape[0]/scale_factor)
    resized_frame = cv2.resize(copy, (new_width, new_height))

    # Detect with detector
    detections = detector(resized_frame)

    # Loop for each detection.
    for detection in detections:
        # Since we downscaled the image we need to rescale the coordinates according to the original image.
        x1 = int(detection.left() * scale_factor)
        y1 = int(detection.top() * scale_factor)
        x2 = int(detection.right() * scale_factor)
        y2 = int(detection.bottom() * scale_factor)

        # Draw the bounding box
        cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
        cv2.putText(frame, 'Hand Detected', (x1, y2+20), cv2.FONT_HERSHEY_COMPLEX, 0.6, (0, 0, 255), 2)

        # Calculate size of the hand.
        size = int((x2 - x1) * (y2 - y1))

        # Extract the center of the hand on x-axis.
        center_x = int(x1 + (x2 - x1) / 2)

    # Display FPS and size of hand
    cv2.putText(frame, 'FPS: {:.2f}'.format(fps), (20, 20), cv2.FONT_HERSHEY_COMPLEX, 0.6, (0, 0, 255), 2)

    # This information is useful for when you'll be building hand gesture applications
    cv2.putText(frame, 'Center: {}'.format(center_x), (540, 20), cv2.FONT_HERSHEY_COMPLEX, 0.5, (233, 100, 25))
    cv2.putText(frame, 'size: {}'.format(size), (540, 40), cv2.FONT_HERSHEY_COMPLEX, 0.5, (233, 100, 25))

    # Display the image
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the webcam and destroy all windows
cap.release()
cv2.destroyAllWindows()

If you noticed above I actually downsized the frame by a factor of 2 before passing it to the detector.

Why did I do that?

Well, remember that our HOG + SVM is actually a classifier; the things that allow it to become a detector are sliding windows and image pyramids. We have already seen that the bigger an image is, the more time a sliding window takes to go over its rows and columns, so it’s safe to say that our detector will run faster if the frame is small.

This is the reason we are downsizing the frames. If you downsize too much, though, the detector’s accuracy will be affected, so you will need to figure out the right scaling factor. In my case, a factor of 2.0, which halves the width and height of the frame, gives the best balance of speed and accuracy.

After you have resized the image and performed detection, you must then rescale the detected coordinates back to the original image.
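The rescaling step, along with the size and center values the later scripts rely on, can be sketched with plain tuples. The helper names `rescale_box`, `box_center_x`, and `box_area` are assumptions made for this example; the scripts in this tutorial do the same arithmetic inline.

```python
def rescale_box(box, scale_factor):
    """Map (left, top, right, bottom) from the downsized frame
    back to the coordinates of the original frame."""
    left, top, right, bottom = box
    return (int(left * scale_factor), int(top * scale_factor),
            int(right * scale_factor), int(bottom * scale_factor))

def box_center_x(box):
    """Return the x coordinate of the box center."""
    left, _, right, _ = box
    return left + (right - left) // 2

def box_area(box):
    """Return the area of the box in pixels."""
    left, top, right, bottom = box
    return (right - left) * (bottom - top)

# A detection found on the half-size frame
small_box = (100, 60, 180, 140)
full_box = rescale_box(small_box, 2.0)
print(full_box, box_center_x(full_box), box_area(full_box))
```

Note that the center is the left edge plus half the width; computing it as `x2 - x1 // 2` would give a different, incorrect value because of operator precedence.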

Step 7: Using Multiple Object Detectors Together

Now if you wanted to train a detector on multiple classes and not just hands then, unfortunately, you can’t do that with a single detector. The only way is to train multiple detectors and run them simultaneously. This, of course, will significantly reduce your speed.

There is a silver lining here, which is that dlib comes with a function called dlib.fhog_object_detector.run_multiple() which allows you to run multiple object detectors simultaneously in an efficient way. Of course, the more detectors you add, the slower your code gets. This method also gives you confidence scores for each detection.

Here’s an example of me using two detectors: one trained on my hand and one on my face.

# Set the window to normal
cv2.namedWindow('frame', cv2.WINDOW_NORMAL)

# Initialize webcam
cap = cv2.VideoCapture(0, cv2.CAP_DSHOW)

# Setting the downscaling size, for faster detection
# If you're not getting any detections then you can set this to 1
scale_factor = 2.0

# Initially the size of the hand and its center x point will be 0
size, center_x = 0, 0

# Initialize these variables for calculating FPS
fps = 0
frame_counter = 0
start_time = time.time()

# Load up all the detectors using this function.
hand_detector = dlib.fhog_object_detector("Hand_Detector.svm")
head_detector = dlib.fhog_object_detector("Head_Detector.svm")

# Now insert all detectors in a list
detectors = [hand_detector, head_detector]

# Create a list of detector names in the same order
names = ['Hand Detected', 'Head Detected']

# Set the while loop
while True:
    # Read frame by frame
    ret, frame = cap.read()
    if not ret:
        break

    # Laterally flip the frame
    frame = cv2.flip(frame, 1)

    # Calculate the Average FPS
    frame_counter += 1
    fps = frame_counter / (time.time() - start_time)

    # Create a clean copy of the frame
    copy = frame.copy()

    # Downsize the frame.
    new_width = int(frame.shape[1]/scale_factor)
    new_height = int(frame.shape[0]/scale_factor)
    resized_frame = cv2.resize(copy, (new_width, new_height))

    # Perform the detection
    # Besides boxes you will also get confidence scores and IDs of the detectors
    detections, confidences, detector_idxs = dlib.fhog_object_detector.run_multiple(
        detectors, resized_frame, upsample_num_times=1)

    # Loop for each detected box
    for i in range(len(detections)):
        # Since we downscaled the image we need to rescale the coordinates according to the original image.
        x1 = int(detections[i].left() * scale_factor)
        y1 = int(detections[i].top() * scale_factor)
        x2 = int(detections[i].right() * scale_factor)
        y2 = int(detections[i].bottom() * scale_factor)

        # Draw the bounding box with confidence scores and the names of the detector
        # Note: the confidence is the detector's score, not a probability;
        # it is multiplied by 100 here only for display.
        cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
        cv2.putText(frame, '{}: {:.2f}%'.format(names[detector_idxs[i]], confidences[i]*100), (x1, y2+20),
                    cv2.FONT_HERSHEY_COMPLEX, 0.6, (0, 0, 255), 2)

        # Calculate size of the detected object.
        size = int((x2 - x1) * (y2 - y1))

        # Extract the center of the detected object on x-axis.
        center_x = int(x1 + (x2 - x1) / 2)

    # Display FPS and size of hand
    cv2.putText(frame, 'FPS: {:.2f}'.format(fps), (20, 20), cv2.FONT_HERSHEY_COMPLEX, 0.6, (0, 0, 255), 2)

    # This information is useful for when you'll be building hand gesture applications
    cv2.putText(frame, 'Center: {}'.format(center_x), (540, 20), cv2.FONT_HERSHEY_COMPLEX, 0.5, (233, 100, 25))
    cv2.putText(frame, 'size: {}'.format(size), (540, 40), cv2.FONT_HERSHEY_COMPLEX, 0.5, (233, 100, 25))

    # Display the image
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the webcam and destroy all windows
cap.release()
cv2.destroyAllWindows()

Part 2: Integrating Gesture controls with Applications.

Now that we have learned how to train a single and multi Object Detector, let’s move onto the fun part where we automate a game and a video player via hand gestures.

For this tutorial I’ll be controlling the following two applications:

First, I’ll control the VLC media player to pause/play or move forward/backward in the video. Then, using the same code, I’ll control a clone of the popular Temple Run game.

Of course, feel free to control other applications too.

So how are we going to accomplish this?

Well, it’s pretty simple: we are going to use the pyautogui library. This library allows you to control keyboard buttons and the mouse cursor programmatically. You can learn more about this library here.

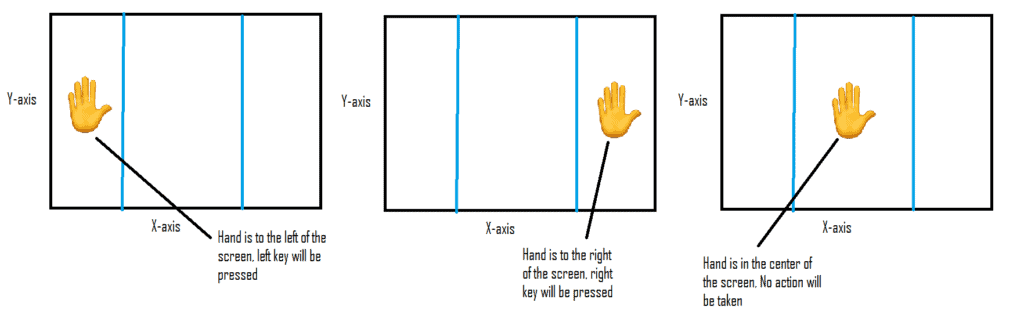

So now we’ll make a program in which pyautogui presses the left arrow key when we move our hand to the left of the screen and the right arrow key when we move it to the right.

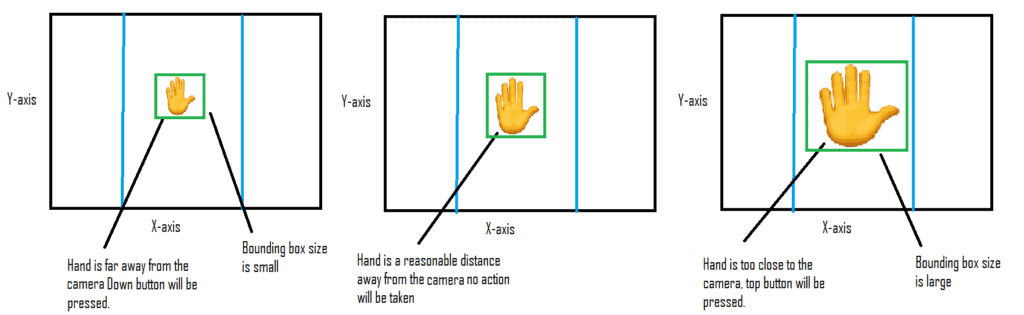

Similarly, if our hand is closer to the screen we’ll want to press the Up button, and if the hand is further from the screen we’ll want to press the Down button.

This can easily be accomplished by measuring the size of the bounding box of the hand, as the size increases or decreases when the hand moves closer to or farther from the camera.

Let’s start with the code

Tuning Our Distance Thresholds:

Before we start controlling the game, we need to visualize how the buttons will be triggered, and if our defined thresholds are correct. This script draws lines between thresholds and displays the buttons that should be pressed. If these default thresholds don’t work for you then change them, especially size_up_th and size_down_th.
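The decision logic itself is small enough to sketch as a pure function, which makes it easy to try out threshold values without a webcam. The function name `classify_gesture` is an assumption for this example; the default threshold values and the order of the checks follow the script in this section, and the function assumes a hand was detected.

```python
def classify_gesture(center_x, size, left=160, right=480,
                     size_up_th=80000, size_down_th=25000):
    """Map the hand's x center and bounding box area to a gesture label.
    Left/right checks come first, so they win over up/down."""
    if center_x > right:
        return 'Right'
    if center_x < left:
        return 'Left'
    if size > size_up_th:
        return 'Up'
    if size < size_down_th:
        return 'Down'
    return 'Neutral'

# A few sample (center_x, size) readings
for reading in [(500, 40000), (100, 40000), (320, 90000), (320, 20000), (320, 40000)]:
    print(reading, classify_gesture(*reading))
```

Because the horizontal checks run first, a hand that is both far to the right and very close to the camera is reported as 'Right' rather than 'Up'.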

# Set these thresholds accordingly.

# If hand size is larger than this then the up key is triggered
size_up_th = 80000

# If hand size is smaller than this then the down key is triggered
size_down_th = 25000

# If the center_x location is less than this then the left key is triggered
left = 160

# If the center_x location is greater than this then the right key is triggered
right = 480

# Load our trained detector
detector = dlib.simple_object_detector('Hand_Detector.svm')

# Set the window to normal
cv2.namedWindow('frame', cv2.WINDOW_NORMAL)

# Initialize webcam
cap = cv2.VideoCapture(0, cv2.CAP_DSHOW)

# Setting the downscaling size, for faster detection
# If you're not getting any detections then you can set this to 1
scale_factor = 2.0

# Initially the size of the hand and its center x point will be 0
size, center_x = 0, 0

# Initialize these variables for calculating FPS
fps = 0
frame_counter = 0
start_time = time.time()

# Set the while loop
while True:
    # Read frame by frame
    ret, frame = cap.read()
    if not ret:
        break

    # Laterally flip the frame
    frame = cv2.flip(frame, 1)

    # Calculate the Average FPS
    frame_counter += 1
    fps = frame_counter / (time.time() - start_time)

    # Create a clean copy of the frame
    copy = frame.copy()

    # Downsize the frame.
    new_width = int(frame.shape[1]/scale_factor)
    new_height = int(frame.shape[0]/scale_factor)
    resized_frame = cv2.resize(copy, (new_width, new_height))

    # Detect with detector
    detections = detector(resized_frame)

    # Set default values
    text = 'No Hand Detected'
    center_x = 0
    size = 0

    # Loop for each detection.
    for detection in detections:
        # Since we downscaled the image we need to rescale the coordinates according to the original image.
        x1 = int(detection.left() * scale_factor)
        y1 = int(detection.top() * scale_factor)
        x2 = int(detection.right() * scale_factor)
        y2 = int(detection.bottom() * scale_factor)

        # Calculate size of the hand.
        size = int((x2 - x1) * (y2 - y1))

        # Extract the center of the hand on x-axis.
        center_x = int(x1 + (x2 - x1) / 2)

        # Draw the bounding box of the detected hand
        cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)

        # Now based on the size or center_x location set the required text
        if center_x > right:
            text = 'Right'
        elif center_x < left:
            text = 'Left'
        elif size > size_up_th:
            text = 'Up'
        elif size < size_down_th:
            text = 'Down'
        else:
            text = 'Neutral'

    # Now we should draw lines for left/right threshold
    cv2.line(frame, (left, 0), (left, frame.shape[0]), (25, 25, 255), 2)
    cv2.line(frame, (right, 0), (right, frame.shape[0]), (25, 25, 255), 2)

    # Display center_x value and size.
    cv2.putText(frame, 'Center: {}'.format(center_x), (500, 20), cv2.FONT_HERSHEY_COMPLEX, 0.6, (233, 100, 25), 1)
    cv2.putText(frame, 'size: {}'.format(size), (500, 40), cv2.FONT_HERSHEY_COMPLEX, 0.6, (233, 100, 25))

    # Finally display the text showing which key should be triggered
    cv2.putText(frame, text, (220, 30), cv2.FONT_HERSHEY_COMPLEX, 0.7, (33, 100, 185), 2)

    # Display the image
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the webcam and destroy all windows
cap.release()
cv2.destroyAllWindows()

Make sure you’re satisfied with the above results and the keys are triggered correctly based on the location of your hand; if not, change the thresholds. If you’re having trouble detecting the hand, then you have to train the detector again with more examples.

Now that we have configured our thresholds we will make a script that will press the required button based on those thresholds.

Main Function

This is our main script, which will control the keyboard keys based on the hand movement. With this script I’ve controlled both the Temple Run game and the VLC media player.

When controlling the media player I set the variable player = True.
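In player mode the script below uses a status flag so that holding your hand in one position sends a key only once. A simplified sketch of that idea, with no pyautogui calls, is shown here. The function `keys_to_send` and the list-of-gestures input are assumptions made for illustration, and the sketch assumes the flag is re-armed only when no hand is detected, as the script's comments describe.

```python
def keys_to_send(gestures):
    """Given one gesture per frame (None means no hand was detected),
    return the keys a player-mode loop would actually send."""
    sent = []
    status = False  # True once a key has been sent for the current hand
    for gesture in gestures:
        if gesture is None:
            # The hand left the frame, so allow the next key press
            status = False
        elif gesture != 'neutral' and not status:
            sent.append(gesture)
            status = True
    return sent

frames = ['right', 'right', 'right', None, 'right', 'neutral', 'left']
print(keys_to_send(frames))
```

Without the flag, the three consecutive 'right' frames would skip the video forward three times instead of once.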

# Load our trained detector

detector = dlib.simple_object_detector('Hand_Detector.svm')

# Initialize webcam

cap = cv2.VideoCapture(0, cv2.CAP_DSHOW)

# Setting the downscaling size, for faster detection

# If you're not getting any detections then you can set this to 1

scale_factor = 2.0

# Initially the size of the hand and its center x point will be 0

size, center_x = 0,0

# Initialize these variables for calculating FPS

fps = 0

frame_counter = 0

start_time = time.time()

# Set Player = True in order to use this script for the VLC video player

player = True

# This variable is True when we press a key and False when there is no detection.

# It's only used in the video Player

status = False

# We're recording the whole screen to view it later

screen_width, screen_height = tuple(pyg.size())

out = cv2.VideoWriter(r'videorecord.mp4', cv2.VideoWriter_fourcc(*'XVID'), 15.0, (screen_width,screen_height ))

# Set the while loop
while True:
    try:
        # Read frame by frame
        ret, frame = cap.read()
        if not ret:
            break

        # Laterally flip the frame
        frame = cv2.flip(frame, 1)

        # Calculate the average FPS
        frame_counter += 1
        fps = frame_counter / (time.time() - start_time)

        # Create a clean copy of the frame
        copy = frame.copy()

        # Downsize the frame
        new_width = int(frame.shape[1] / scale_factor)
        new_height = int(frame.shape[0] / scale_factor)
        resized_frame = cv2.resize(copy, (new_width, new_height))

        # Detect with detector
        detections = detector(resized_frame)

        # Key will initially be None
        key = None

        if len(detections) > 0:
            # Grab the first detection
            detection = detections[0]

            # Since we downscaled the image, we need to rescale the coordinates to the original image
            x1 = int(detection.left() * scale_factor)
            y1 = int(detection.top() * scale_factor)
            x2 = int(detection.right() * scale_factor)
            y2 = int(detection.bottom() * scale_factor)

            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
            cv2.putText(frame, 'Hand Detected', (x1, y2 + 20), cv2.FONT_HERSHEY_COMPLEX, 0.6, (0, 0, 255), 2)

            # Calculate size of the hand
            size = int((x2 - x1) * (y2 - y1))

            # Extract the center of the hand on the x-axis
            center_x = int(x1 + (x2 - x1) / 2)

            # Press the required button based on center_x location and size
            # The behavior of the keys differs depending on whether we're controlling a game or a video player
            # The status variable makes sure we do not double press the key in case of a video player
            if center_x > right:
                key = 'right'
                if player and not status:
                    pyg.hotkey('ctrl', 'right')
                    status = True

            elif center_x < left:
                key = 'left'
                if player and not status:
                    pyg.hotkey('ctrl', 'left')
                    status = True

            elif size > size_up_th:
                key = 'up'
                if player and not status:
                    pyg.press('space')
                    status = True

            elif size < size_down_th:
                key = 'down'

            # If we're playing a game, press the required key
            if key is not None and not player:
                pyg.press(key)

        # If there wasn't a detection then the status is made False
        else:
            status = False

        # Capture the screen
        image = pyg.screenshot()

        # Convert to BGR, numpy array (OpenCV image format)
        img = cv2.cvtColor(np.array(image), cv2.COLOR_RGB2BGR)

        # Resize the camera frame and attach it to the top-left corner of the screen capture
        resized_frame = cv2.resize(frame, (0, 0), fx=0.6, fy=0.6)
        h = resized_frame.shape[0]
        w = resized_frame.shape[1]
        img[0:h, 0:w] = resized_frame

        # Save the video frame
        out.write(img)

        #time.sleep(0.2)

    except KeyboardInterrupt:
        print('Releasing the Camera and exiting since the program was stopped')
        cap.release()
        out.release()
        sys.exit()
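The role of the status flag in the script above can be isolated in a small sketch: once a key has been sent, nothing more is sent until the hand disappears from the frame. The class name `OneShotKeySender` and the injected `send` callback are hypothetical names used for this illustration (in the real script the callback would be a pyautogui call), and unlike the script it treats every key the same way.

```python
class OneShotKeySender:
    """Send at most one key per continuous run of detections."""

    def __init__(self, send):
        self.send = send      # callable that actually presses the key
        self.status = False   # True once a key was sent for the current run

    def update(self, detected, key=None):
        """Process one frame and return the key that was sent, or None."""
        if not detected:
            # No hand in the frame: re-arm so the next gesture can fire
            self.status = False
            return None
        if key is not None and not self.status:
            self.send(key)
            self.status = True
            return key
        return None


# Simulated frames: (hand detected?, key suggested by the thresholds)
sent = []
sender = OneShotKeySender(sent.append)
frames = [(True, 'right'), (True, 'right'), (True, 'left'),
          (False, None), (True, 'left')]
for detected, key in frames:
    sender.update(detected, key)
```

In this simulated run only the first 'right' and the final 'left' are sent, because the hand has to leave the frame before another key can fire. That is why a single gesture does not skip the video several times in a row.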

If you noticed above, I’m also saving the output as a video recording. Before saving each frame, I attach the camera output to the top-left corner of the screen capture. This is done just for the demo and does not affect the detector’s results.

Now let’s run this script on the Temple Run game. Make sure to set player = False. I’ve included the link to this game in the Source Code.

One thing I will admit is that playing Temple Run with this simple approach was hard. This is because some games are really time-sensitive, and hand gestures just can’t match the effectiveness of pressing keys in rapid succession with your fingers. Plus, your hand gets tired really fast. The only advantage I see is that it looks cool.

Summary:

So today we learned how easy it is to use dlib to train a simple Object Detector, and we also learned how to automate the tiresome data collection and annotation process. The sliding window technique that I used is just one of many that you can come up with once you know enough image processing. If you want to fully master image processing and computer vision fundamentals, then OpenCV’s CV 1 Course is a must-take.

One drawback of the data collection and annotation method I used is that the final detector overfits to the training background, so it performs poorly on a different background. If you want a background-agnostic model, you should run the data generation script multiple times on different backgrounds. Make sure to set the clear_images variable to False after the first run.
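To make the effect of that flag concrete, here is a minimal sketch of how a data generation run could either wipe or extend an existing image folder. The helper name `prepare_image_dir`, the `.png` extension, and the assumption that images are numbered consecutively from 0 are all hypothetical; the actual data generation script may handle this differently.

```python
import os
import shutil


def prepare_image_dir(directory, clear_images):
    """Prepare the output folder and return the index for the next image.

    Assumes images are saved as 0.png, 1.png, ... (an illustrative
    convention, not necessarily the one used by the tutorial's script).
    """
    if clear_images and os.path.isdir(directory):
        # Start from scratch: delete everything collected so far
        shutil.rmtree(directory)
    os.makedirs(directory, exist_ok=True)

    # Count the images that are already there so new ones get fresh names
    existing = [f for f in os.listdir(directory) if f.lower().endswith('.png')]
    return len(existing)


# First background:   counter = prepare_image_dir('training_images', True)
# Later backgrounds:  counter = prepare_image_dir('training_images', False)
```

With the flag set to False, every additional run appends to the same folder, so the detector ends up trained on hands in front of several backgrounds.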

We also learned how to use multiple detectors efficiently with dlib and how to gesture-control some applications. Now you can get really creative and control all sorts of other applications or games. I would really love to see what you guys can do with this 🙂
