Introduction

In the ever-evolving landscape of artificial intelligence, staying updated with the latest breakthroughs is paramount. September 2023 was a testament to the AI community's relentless pursuit of knowledge, with researchers pushing the boundaries of what’s possible. In this article, we cover the top 5 AI papers of September 2023. In July and August, we also covered many exciting papers and projects.

As we dive into the top 5 AI papers of September 2023, it’s essential to understand not just their novelty but their potential impact on your work and the broader AI ecosystem. Here is a brief overview of the top 5 papers of the last month:

- Vision Transformers Reimagined: The first paper delves into the realm of vision transformers, exploring the potential of replacing traditional softmax with ReLU. If you’ve been working with vision transformers or are intrigued by their capabilities, this paper might offer a fresh perspective on optimizing their performance.

- Summarization Perfected with GPT-4: Summarization has always been a challenging task that requires balancing detail and brevity. The second paper introduces a prompting approach for GPT-4 that could change how we generate and judge summaries. For content creators and NLP enthusiasts, this could be a game-changer.

- Efficiency in Vision Applications: The third paper emphasizes making Vision Transformers more efficient, especially for resource-constrained applications. If you’re looking to deploy AI models on mobile or other limited-resource platforms, this research might hold the key to achieving optimal performance without compromising efficiency.

- Optimization through Language Models: The fourth paper showcases the power of large language models in optimization tasks. If you’re grappling with real-world applications where traditional optimization methods fall short, this approach might offer a novel solution, making complex tasks more intuitive.

- Memory Management in LLM Serving: The final paper addresses a critical challenge in serving large language models: efficient memory management. For those involved in deploying and serving AI models, this research could pave the way for more efficient and scalable systems.

Now, let’s dive deep into each paper.

- Paper 1: Replacing softmax with ReLU in Vision Transformers

- Paper 2: From Sparse to Dense: GPT-4 Summarization with Chain of Density Prompting

- Paper 3: Mobile V-MoEs: Scaling Down Vision Transformers via Sparse Mixture-of-Experts

- Paper 4: Large Language Models as Optimizers

- Paper 5: Efficient Memory Management for Large Language Model Serving with PagedAttention

- Summary

Paper 1: Replacing softmax with ReLU in Vision Transformers

Overview:

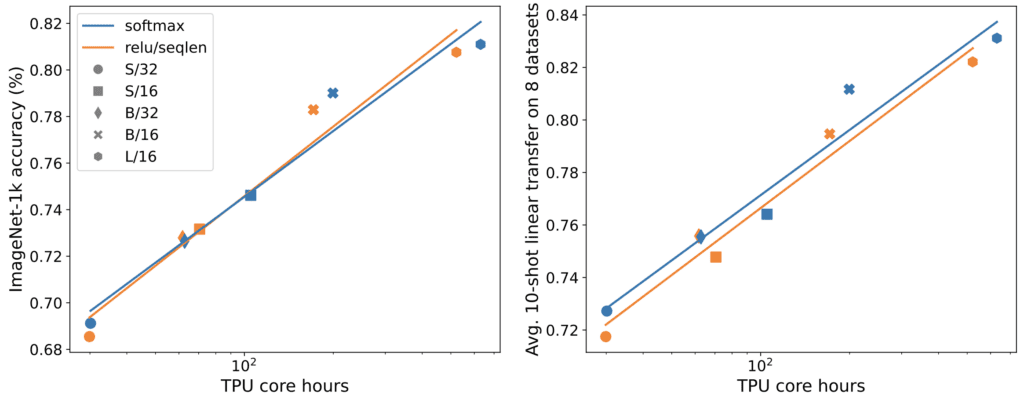

The paper discusses the replacement of the attention softmax with a pointwise activation such as ReLU in the context of vision transformers. The authors find that the degradation observed in previous research when replacing softmax with ReLU is mitigated when dividing by sequence length. The study indicates that ReLU-attention can approach or match the performance of softmax-attention in terms of scaling behavior as a function of compute.

Problem Addressed:

The traditional transformer architecture includes a softmax operation in the attention mechanism, which produces a probability distribution over tokens. The softmax operation is computationally costly because it requires an exponential calculation and a sum over the sequence length, which makes parallelization challenging.

Methodology and Key Findings:

- The authors explore point-wise alternatives to the softmax operation.

- They observe that attention with ReLU divided by sequence length can approach or match traditional softmax attention in terms of scaling behavior as a function of compute for vision transformers.

- Experiments were conducted on ImageNet-21k using various vision transformer models.

- The results indicate that ReLU-attention matches the scaling trends for softmax attention for ImageNet-21k training.

- The best results were typically achieved when the attention weights were scaled by L^(-α), where L is the sequence length, with the exponent α close to 1.

- The presence of a gate in the attention mechanism did not remove the need for sequence length scaling.
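
To make the idea above concrete, here is a minimal single-head sketch that contrasts softmax attention with ReLU attention scaled by L^(-α). It is written in plain Python for readability and is not the authors' implementation: the function names, the `alpha` parameter, and the choice to keep the usual 1/√d scaling of the logits are our assumptions.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_attention(q, k, v):
    """Standard attention: each row of weights is normalized over all keys."""
    d = len(q[0])
    weights = []
    for row in matmul(q, transpose(k)):
        scaled = [s / math.sqrt(d) for s in row]
        peak = max(scaled)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scaled]
        total = sum(exps)  # this sum couples every entry in the row
        weights.append([e / total for e in exps])
    return matmul(weights, v)


def relu_attention(q, k, v, alpha=1.0):
    """Pointwise attention: ReLU on the logits, then a constant L^(-alpha) factor."""
    d = len(q[0])
    seq_len = len(k)
    scale = seq_len ** (-alpha)  # assumption: alpha=1.0 means "divide by L"
    weights = [
        [max(s / math.sqrt(d), 0.0) * scale for s in row]  # no row-wise sum needed
        for row in matmul(q, transpose(k))
    ]
    return matmul(weights, v)
```

Note that the ReLU weights in each row do not have to sum to 1, and every weight is computed independently of the others in its row. The softmax version has to compute a row-wise sum before any weight is known.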

Novel Ideas:

- The introduction of ReLU-attention, which can be parallelized over the sequence length dimension with fewer gather operations than traditional attention.

- The exploration of the effect of sequence length scaling for various point-wise alternatives to softmax.

Implications:

- The findings present new opportunities for parallelization in vision transformers.

- Replacing softmax with ReLU-attention can approach or match softmax performance in the settings studied, offering potential benefits in terms of computational efficiency.

- The study leaves open questions, such as exactly why the factor L⁻¹ improves performance, and whether there is a better activation function that was not explored.

Links

Read the paper here: Replacing softmax with ReLU in Vision Transformers

The authors haven’t made the code publicly available, but this paper is not difficult to implement.

Paper 2: From Sparse to Dense: GPT-4 Summarization with Chain of Density Prompting

Overview:

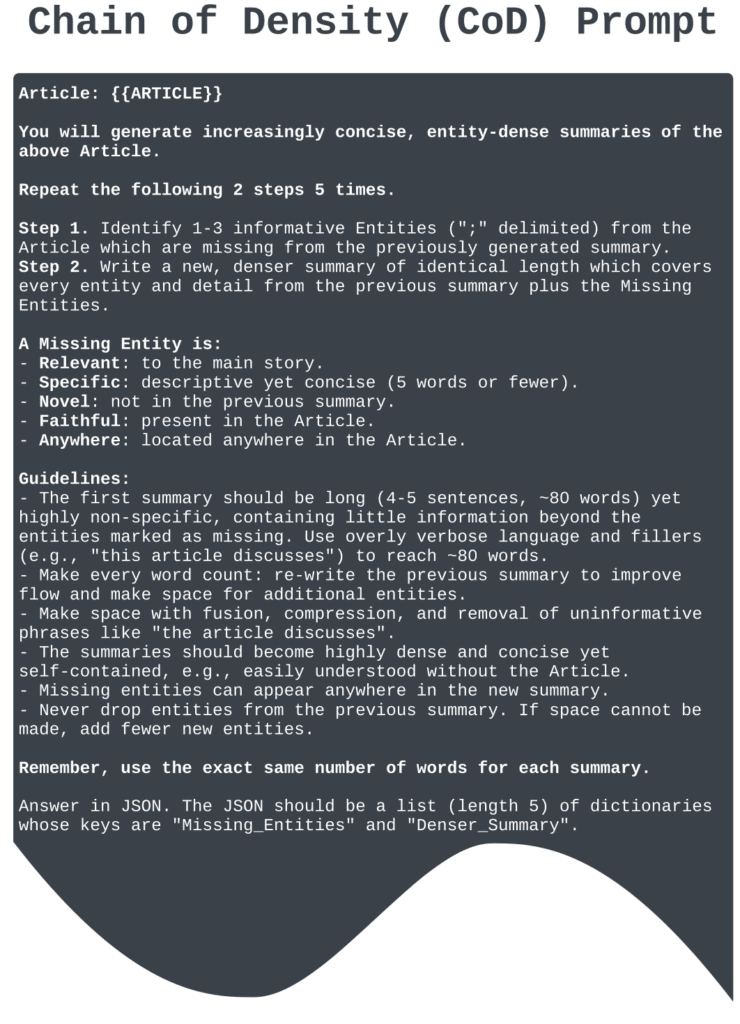

This paper builds on earlier prompt engineering techniques such as chain of thought and tree of thought, and proposes “Chain of Density” (CoD) prompting to generate increasingly dense GPT-4 summaries. It addresses the challenge of selecting the right amount of information for a summary.

Problem Addressed:

The primary challenge addressed is determining the optimal information density for a summary. A summary should be detailed and entity-centric without being overly dense and hard to follow. The paper seeks to understand the tradeoff between informativeness and readability in summaries.

Methodology and Key Findings:

- The authors introduce the “Chain of Density” (CoD) prompt, where GPT-4 generates an initial entity-sparse summary and then iteratively incorporates missing salient entities without increasing the length.

- CoD summaries are found to be more abstractive, exhibit more fusion, and have less lead bias than GPT-4 summaries generated by a vanilla prompt.

- A human preference study was conducted on 100 CNN DailyMail articles. The results showed that humans prefer GPT-4 summaries that are denser than those generated by a vanilla prompt and almost as dense as human-written summaries.

- The CoD method involves generating a set of summaries with varying levels of information density while controlling for length. An initial summary is generated and made increasingly entity dense by adding unique salient entities from the source text without increasing the length.
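
The iterative densification described above can be sketched as a simple loop. This is an illustrative outline only: the prompt wording, the `chain_of_density` and `build_prompt` names, the `llm` callable (any function that maps a prompt string to a completion string), and the word-count check are all our assumptions, and the paper's exact prompt is not reproduced here.

```python
from typing import Callable, List, Optional


def build_prompt(article: str, previous: Optional[str], max_words: int) -> str:
    """Build the prompt for one step (hypothetical wording, not the paper's)."""
    if previous is None:
        return (
            f"Write an entity-sparse summary of the article in at most "
            f"{max_words} words.\n\nArticle:\n{article}"
        )
    return (
        f"Identify 1-3 salient entities from the article that are missing from "
        f"the summary, then rewrite the summary to include them without "
        f"exceeding {max_words} words.\n\nArticle:\n{article}\n\n"
        f"Summary:\n{previous}"
    )


def chain_of_density(
    article: str,
    llm: Callable[[str], str],
    steps: int = 5,
    max_words: int = 80,
) -> List[str]:
    """Return one summary per step, each intended to be denser than the last."""
    summaries: List[str] = []
    previous: Optional[str] = None
    for _ in range(steps):
        candidate = llm(build_prompt(article, previous, max_words))
        # Keep the length fixed: if a rewrite overshoots the budget,
        # fall back to the previous summary instead of accepting it.
        if previous is not None and len(candidate.split()) > max_words:
            candidate = previous
        summaries.append(candidate)
        previous = candidate
    return summaries
```

Because the length budget stays constant, each rewrite has to compress or fuse existing content to make room for new entities, which is where the added abstraction comes from.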

Novel Ideas:

- The Chain of Density (CoD) prompting is a novel approach to generating summaries with controlled information density.

- The CoD method involves iterative re-writing of summaries to make space for additional entities, leading to increased abstraction, fusion, and compression.

Implications:

- The findings suggest that there’s a balance to be struck between informativeness and readability in summaries.

- The CoD method provides a way to generate summaries that are both detailed and concise, potentially improving the quality of automatic summarization.

- The paper contributes a prompt-based iterative method for making summaries increasingly entity dense, along with a dataset of annotated CoD summaries available on Hugging Face.

Paper 3: Mobile V-MoEs: Scaling Down Vision Transformers via Sparse Mixture-of-Experts

Overview:

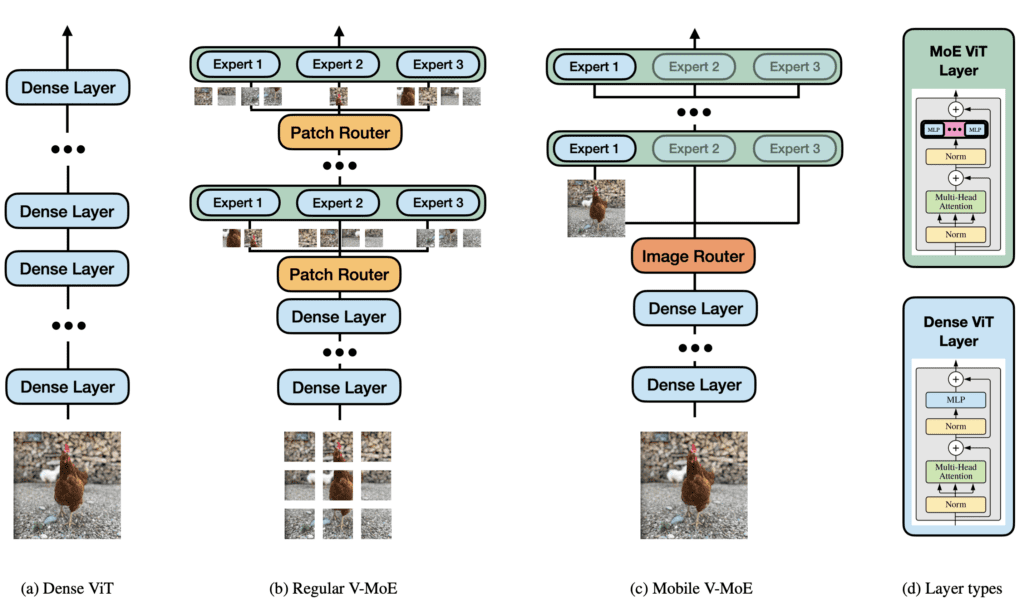

The paper introduces a method to scale down Vision Transformers (ViTs) using sparse Mixture-of-Experts (MoEs) models. This approach aims to make ViTs more suitable for resource-constrained vision applications.

Problem Addressed:

The paper addresses the challenge of balancing performance and efficiency in neural networks, especially in environments with limited computational resources. Traditional dense models activate all parameters for each input, whereas sparse MoEs activate only a subset, making them more efficient.

Methodology and Key Findings:

- The authors propose a mobile-friendly sparse MoE design where entire images, rather than individual patches, are routed to the experts.

- A stable MoE training procedure is introduced that uses super-class information to guide the router.

- Empirical results show that sparse Mobile Vision MoEs (V-MoEs) offer a better trade-off between performance and efficiency than dense ViTs. For instance, the Mobile V-MoE outperforms its dense counterpart by 3.39% on ImageNet-1k for the ViT-Tiny model.
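
Per-image routing, as described above, can be illustrated with a small sketch. Everything here is a simplification under stated assumptions: the router input is taken to be the mean of the patch embeddings (the article does not say how it is computed), experts are plain Python callables, and the names `route_image` and `moe_layer` are ours. The super-class guided training procedure is not shown.

```python
import math


def route_image(patches, router_weights, k=1):
    """Pick the top-k experts for a whole image (not per patch).

    patches: list of patch embeddings (lists of floats)
    router_weights: one weight vector per expert
    Returns a list of (expert_index, gate_probability) pairs.
    """
    dim = len(patches[0])
    # Assumption: summarize the image by mean-pooling its patch embeddings.
    pooled = [sum(p[i] for p in patches) / len(patches) for i in range(dim)]
    logits = [sum(w * x for w, x in zip(row, pooled)) for row in router_weights]
    peak = max(logits)
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [(i, probs[i]) for i in top]


def moe_layer(patches, router_weights, experts, k=1):
    """Apply the selected experts to every patch of the image."""
    selected = route_image(patches, router_weights, k)
    norm = sum(gate for _, gate in selected)  # renormalize over the chosen experts
    outputs = []
    for patch in patches:
        mixed = [0.0] * len(patch)
        for index, gate in selected:
            expert_out = experts[index](patch)
            mixed = [m + (gate / norm) * y for m, y in zip(mixed, expert_out)]
        outputs.append(mixed)
    return outputs
```

Since the routing decision is made once per image, only the chosen experts are evaluated for that image, rather than potentially different experts for every patch.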

Novel Ideas:

- Introduction of a simplified MoE design where a single router assigns entire images to the experts.

- Development of a training procedure where expert imbalance is avoided by using semantic super-classes to guide the router training.

Implications:

Sparse MoEs can enhance the performance-efficiency trade-off compared to dense ViTs, making them more suitable for resource-constrained applications. The findings suggest potential applications in mobile-friendly models like MobileNets or ViT-CNN hybrids and other vision tasks like object detection.

Paper 4: Large Language Models as Optimizers

Overview:

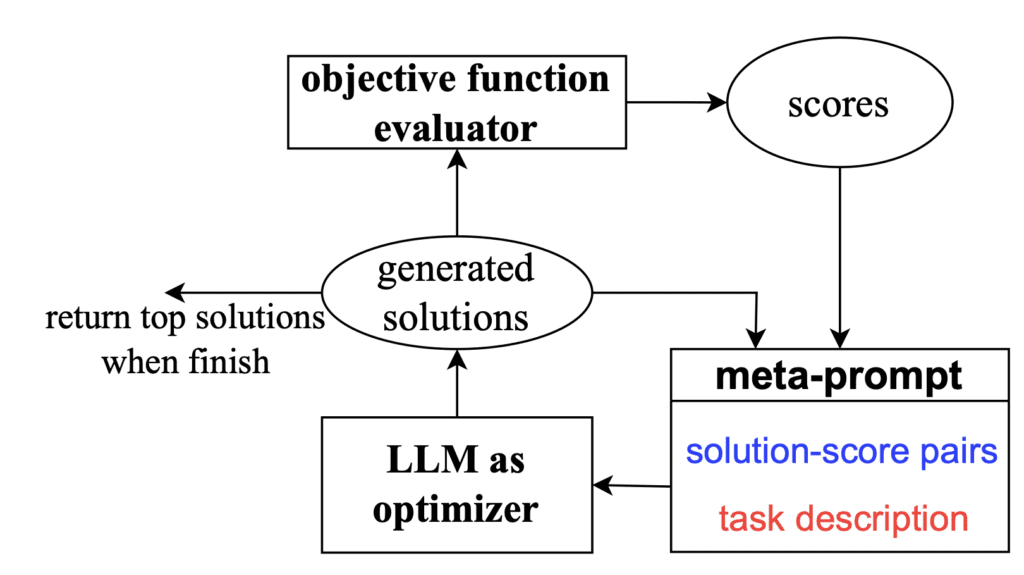

The paper introduces an approach called Optimization by PROmpting (OPRO) that leverages large language models (LLMs) as optimizers. The optimization task is described in natural language, and the LLM generates new solutions based on the prompt containing previously generated solutions and their values.

Problem Addressed:

The paper addresses the challenge of optimization in real-world applications, especially when gradients are absent. Traditional derivative-based algorithms have limitations in such scenarios. The authors aim to utilize the capabilities of LLMs to perform optimization tasks described in natural language.

Methodology and Key Findings:

- The proposed OPRO approach involves describing the optimization task in natural language and instructing the LLM to iteratively generate new solutions based on the problem description and previously found solutions.

- Because the LLM understands natural language, the task can be specified in plain text, offering a new way to optimize without formally defining the problem.

- The authors first demonstrate the potential of LLMs for optimization using case studies on linear regression and the traveling salesman problem. They show that LLMs can find good-quality solutions through prompting.

- The paper also explores the ability of LLMs to optimize prompts. The goal is to find a prompt that maximizes task accuracy. The authors show that optimizing the prompt for accuracy on a small training set can achieve high performance on the test set.
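
The loop described above can be sketched in a few lines. This is a schematic outline rather than the paper's implementation: `build_meta_prompt`, `opro`, the prompt wording, the `top_k` cutoff, and the `propose` callable (a stand-in for an LLM call that reads the meta-prompt and returns a new candidate) are all assumptions made for illustration.

```python
def build_meta_prompt(task, trajectory, top_k=5):
    """Combine the task description with the best past solutions and their scores."""
    # Assumption: show only the top_k solutions, worst first and best last.
    best = sorted(trajectory, key=lambda pair: pair[1])[-top_k:]
    lines = [task, "", "Previous solutions and scores (higher is better):"]
    lines += [f"solution: {solution!r}  score: {value:.3f}" for solution, value in best]
    lines.append("Propose a new solution that scores higher.")
    return "\n".join(lines)


def opro(task, score, propose, initial, steps=10):
    """Iteratively ask `propose` for new solutions and keep a scored trajectory.

    score: maps a solution to a number (higher is better)
    propose: maps a meta-prompt string to a new candidate solution
    """
    trajectory = [(solution, score(solution)) for solution in initial]
    for _ in range(steps):
        candidate = propose(build_meta_prompt(task, trajectory))
        trajectory.append((candidate, score(candidate)))
    # Return the best (solution, score) pair seen so far.
    return max(trajectory, key=lambda pair: pair[1])
```

For prompt optimization, a solution would be an instruction string and `score` would measure task accuracy on a small training set. The optimizer itself needs no gradients, only the scored history in the meta-prompt.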

Novel Ideas:

- The concept of using LLMs as optimizers by describing the optimization task in natural language is novel.

- The OPRO approach introduces the idea of a meta-prompt, which contains the optimization problem description and the optimization trajectory (past solutions paired with their optimization scores).

- The paper presents a unique way of leveraging the optimization trajectory, enabling the LLM to gradually generate new prompts that improve task accuracy.

Implications:

- The OPRO approach opens up new possibilities for optimization in various domains without the need for formal problem definitions.

- LLMs can be used to quickly adapt to different tasks by changing the problem description in the prompt, making the optimization process more flexible and adaptable.

- The findings suggest that LLMs can match or even surpass hand-designed heuristic algorithms in certain optimization tasks.

Paper 5: Efficient Memory Management for Large Language Model Serving with PagedAttention

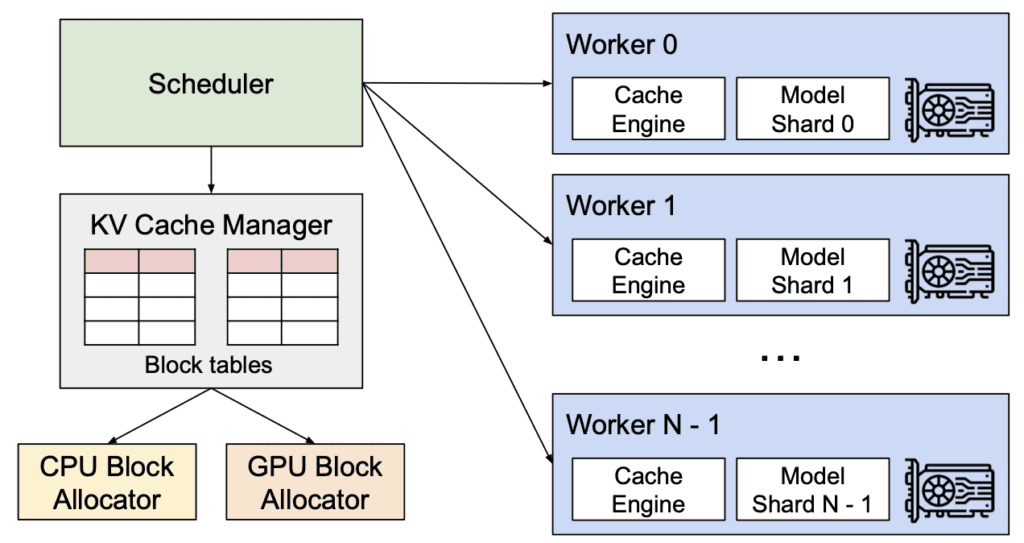

Figure 5. vLLM system overview

Overview:

The paper presents a method for efficient memory management when serving large language models (LLMs). The authors introduce PagedAttention, an attention algorithm inspired by classical virtual memory and paging techniques used in operating systems. They also introduce vLLM, an LLM serving system built on top of PagedAttention.

Problem Addressed:

Serving large language models requires batching many requests simultaneously. However, existing systems face challenges due to the dynamic growth and shrinkage of the key-value cache (KV cache) memory for each request. Inefficient management of this memory can lead to fragmentation and redundant duplication, which limits batch size.

Methodology and Key Findings:

- The authors propose PagedAttention, which divides the request’s KV cache into blocks. These blocks can contain the attention keys and values of a fixed number of tokens. Unlike traditional methods where blocks for the KV cache are stored in contiguous space, PagedAttention allows for non-contiguous storage. This design reduces internal fragmentation and eliminates external fragmentation.

- The vLLM system, built on PagedAttention, achieves near-zero waste in KV cache memory. It uses block-level memory management and preemptive request scheduling. Evaluations show that vLLM improves the throughput of popular LLMs by 2-4x compared to state-of-the-art systems without affecting model accuracy.
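
The block-based bookkeeping described above can be illustrated with a toy allocator. This sketch only tracks which physical block holds each token's keys and values; it does not compute attention, and it is not vLLM's API. The class name `BlockAllocator`, its methods, and the error raised when memory runs out are hypothetical.

```python
class BlockAllocator:
    """Toy KV cache manager: fixed-size blocks plus a per-request block table."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical block ids
        self.block_tables = {}  # request id -> list of physical block ids
        self.num_tokens = {}    # request id -> tokens stored so far

    def append_token(self, request_id):
        """Reserve a slot for one new token; return (physical_block, offset)."""
        table = self.block_tables.setdefault(request_id, [])
        count = self.num_tokens.get(request_id, 0)
        # Allocate a new block only when every allocated slot is in use.
        if count == len(table) * self.block_size:
            if not self.free_blocks:
                # A real serving system would preempt or swap out a request here.
                raise MemoryError("no free KV cache blocks")
            table.append(self.free_blocks.pop())
        self.num_tokens[request_id] = count + 1
        return table[count // self.block_size], count % self.block_size

    def free(self, request_id):
        """Return all blocks of a finished request to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(request_id, []))
        self.num_tokens.pop(request_id, None)
```

Because a request's logical blocks map to arbitrary physical blocks, interleaved requests never need a contiguous region, and the only unused space is the tail of each request's last block (fewer than `block_size` slots).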

Novel Ideas:

- PagedAttention is a novel attention algorithm inspired by virtual memory and paging in operating systems. It divides the KV cache into blocks and allows for non-contiguous storage, addressing memory fragmentation issues.

- vLLM is a new LLM serving system that efficiently manages memory, achieving near-zero waste in KV cache memory.

Implications:

Efficient memory management is crucial for serving large language models, especially when batching multiple requests. The introduction of PagedAttention and vLLM can lead to significant improvements in throughput and reduced memory wastage. This can have a profound impact on the efficiency and cost-effectiveness of deploying large language models in real-world applications.

Summary

Here is a quick one-line summary of the five papers we have covered this month.

- Vision Transformers Reimagined: Dive into a fresh perspective on optimizing vision transformers by replacing softmax with ReLU.

- Summarization Perfected with GPT-4: Discover a revolutionary approach to generating summaries, potentially changing the game for content creators and NLP enthusiasts.

- Efficiency in Vision Applications: Unlock the potential of deploying AI models on resource-constrained platforms without compromising performance.

- Optimization through Language Models: Explore a novel solution to real-world optimization challenges, making them more intuitive with the power of large language models.

- Memory Management in LLM Serving: Address the critical challenge of efficient memory management in AI model serving, paving the way for scalable systems.

Stay tuned and join us next month as we delve into October’s top 5 groundbreaking AI papers. Your journey to staying updated with the latest AI research continues with us.
