Introduction

Welcome to our latest series of blog posts about artificial intelligence (AI) and machine learning (ML)! Whether you’re a beginner dipping your toes into the AI pool for the first time or a junior engineer looking to stay updated on the latest trends, this blog post is for you.

AI and ML are not just buzzwords; they are powerful tools that are reshaping the way we live, work, and interact with the world. From recommending your next favorite song on Spotify to predicting weather patterns, AI and ML are at the heart of many technologies we use daily. They have the potential to revolutionize industries, solve complex problems, and make our lives a bit easier in the process.

But how do we keep up with this rapidly evolving field? One way is to explore the latest research papers, where cutting-edge ideas are born and the future of AI is being shaped. That’s why, starting now, we will sift through the vast sea of AI research each month to bring you the top 5 papers that are making waves in the AI community. We will cover not only computer vision but also language models, robotics, reinforcement learning, and more, to give you a 360° view of the entire field.

For July 2023, we’ve selected five papers that stand out for their innovative approaches, practical applications, and potential to influence the field. They cover a range of topics, from open chat models and faster attention on GPUs to data science education, efficient object detection, and more robust convolutional networks.

Our aim is not to overwhelm you with complex technical jargon but rather to highlight the key ideas and findings in a way that’s accessible and relevant to you. We’ll delve into what these papers are about, why they matter, and, most importantly, what they could mean for the future of AI and ML.

So, whether you’re looking to apply AI in your next project or simply curious about where this exciting field is headed, read on! The future of AI awaits.

- Paper 1: Llama 2: Open Foundation and Fine-Tuned Chat Models

- Paper 2: FlashAttention-2

- Paper 3: What Should Data Science Education Do with Large Language Models?

- Paper 4: Less is More – Focus Attention for Efficient DETR

- Paper 5: Hardwiring ViT Patch Selectivity into CNNs using Patch Mixing

- Conclusion

Paper 1: Llama 2: Open Foundation and Fine-Tuned Chat Models

- Overview: This paper, authored by Meta researchers, introduces Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The fine-tuned models, called Llama 2-Chat, are optimized for dialogue and are chat-ready out of the box, so they can be used as chatbots much like ChatGPT. The authors claim that their models outperform open-source chat models on most of the benchmarks they tested and, based on their human evaluations for helpfulness and safety, may be a suitable substitute for closed-source models. They provide a detailed description of their approach to fine-tuning and safety improvements of Llama 2-Chat to enable the community to build on their work and contribute to the responsible development of LLMs.

- Problem Addressed: The paper addresses the challenge of developing large language models that are not only effective in dialogue use cases but also safe and responsible. Much of the excitement around Llama 2 comes from the fact that the model weights are freely available and can be run locally. In fact, Andrej Karpathy has written llama2.c, his own Llama 2 inference implementation in pure C that runs on a CPU.
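Whether a model can run locally depends largely on how much memory its weights need. The sketch below is a rough, weights-only estimate, assuming memory is simply parameter count times bytes per parameter; it ignores activations, the KV cache, and runtime overhead, and the helper name `weight_memory_gb` is our own. The sizes 7B, 13B, and 70B are the released Llama 2 variants.

```python
# Rough, weights-only memory estimate for running an LLM locally.
# Assumption: memory ~= parameter count * bytes per parameter. Activations,
# the KV cache, and framework overhead are ignored, so real usage is higher.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for size in (7e9, 13e9, 70e9):  # Llama 2 model sizes
    estimates = ", ".join(
        f"{dtype}: {weight_memory_gb(size, dtype):.1f} GB" for dtype in BYTES_PER_PARAM
    )
    print(f"{size / 1e9:.0f}B params -> {estimates}")
```

For example, the 7B model needs about 14 GB for its weights in fp16 but about 3.5 GB at 4 bits, which is one reason quantization is common for local inference.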

- Methodology and Key Findings: The authors developed Llama 2, a family of pretrained and fine-tuned LLMs, and Llama 2-Chat, a fine-tuned version of Llama 2 optimized for dialogue use cases. The models were trained on a new mix of publicly available data, and the authors worked to improve their safety through safety-specific data annotation and tuning, red-teaming, and iterative evaluations. The models generally perform better than existing open-source models and appear to be on par with some of the closed-source models, at least on the human evaluations performed by the authors.

- Implications: The development and open release of Llama 2 and Llama 2-Chat represent significant contributions to the field of AI. By making these models available to the public, the authors are enabling further research and development in this area, potentially leading to more advanced and safer AI systems. However, the authors also caution that developers should perform safety testing and tuning tailored to their specific applications of the model.

- Key Quote: “In this work, we develop and release Llama 2, a family of pretrained and fine-tuned LLMs, Llama 2 and Llama 2-Chat, at scales up to 70B parameters. On the series of helpfulness and safety benchmarks we tested, Llama 2-Chat models generally perform better than existing open-source models. They also appear to be on par with some of the closed-source models, at least on the human evaluations we performed.”

Paper 2: FlashAttention-2

- Overview: The paper “FlashAttention-2: Faster Attention with Better Parallelism and Work Partitioning” by Tri Dao presents FlashAttention-2, an improved version of the FlashAttention algorithm. The paper was published on July 18, 2023, and focuses on using NVIDIA GPUs efficiently to compute multi-head self-attention.

- Problem Addressed: The paper addresses the challenge of scaling Transformers to longer sequence lengths, a major focus in recent years. The attention layer is the main bottleneck in scaling to longer sequences, because its runtime and memory grow quadratically with sequence length. The original FlashAttention brought significant memory savings (linear instead of quadratic in sequence length) and runtime speedups without approximation, but it was still much slower than optimized matrix-multiply (GEMM) operations, reaching only 25-40% of the theoretical maximum FLOPs/s.
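To see why the quadratic term hurts, note that a naive attention implementation materializes one seq_len × seq_len score matrix per head. The helper below (our own, assuming fp16 scores at 2 bytes each) computes that matrix's size for a single head and a single sequence; FlashAttention avoids writing this full matrix to GPU high-bandwidth memory.

```python
def attention_scores_bytes(seq_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed for one head's seq_len x seq_len attention score matrix."""
    return seq_len * seq_len * bytes_per_elem


for n in (1024, 2048, 4096, 8192, 16384):
    mib = attention_scores_bytes(n) / 2**20
    print(f"seq_len={n:>6}: {mib:7.0f} MiB per head, per sequence")
```

Doubling the sequence length quadruples the memory: 2 MiB at 1,024 tokens grows to 512 MiB at 16,384 tokens, before multiplying by the number of heads and the batch size.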

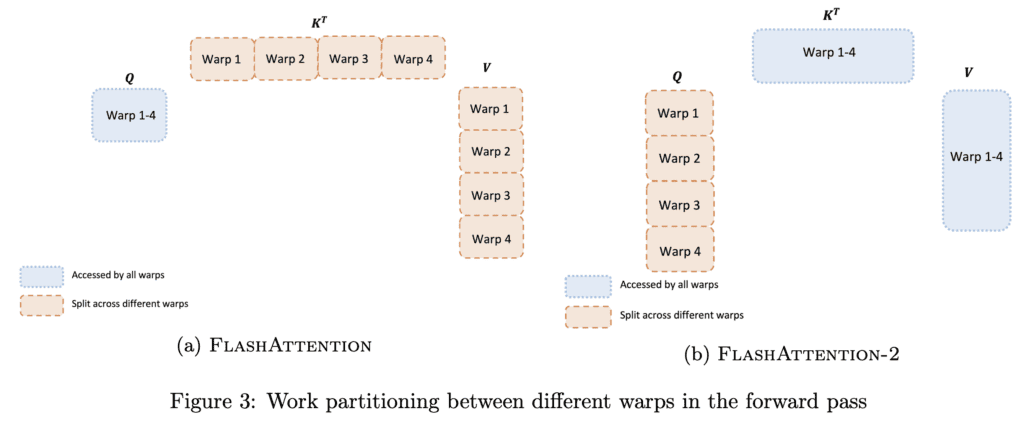

- Methodology and Key Findings: FlashAttention-2 introduces better work partitioning to address the inefficiencies of the original algorithm. The paper proposes three main improvements:

- Tweaking the algorithm to reduce the number of non-matmul FLOPs, which run much more slowly on GPUs than the matrix multiplies handled by Tensor Cores.

- Parallelizing the attention computation, even for a single head, across different thread blocks to increase occupancy.

- Distributing the work between warps within each thread block to reduce communication through shared memory.

These improvements yield a roughly 2x speedup over FlashAttention, reaching 50-73% of the theoretical maximum FLOPs/s on an A100 and getting close to the efficiency of GEMM operations.
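The trick that makes FlashAttention-style kernels possible is computing softmax attention block by block with an “online” softmax, so the full score matrix is never stored. The pure-Python sketch below shows the idea for a single query vector; it is not the paper's CUDA kernel, and the function names are our own. Like FlashAttention-2, it keeps an un-normalized output and divides by the softmax denominator only once at the end, one of the tweaks the paper uses to cut non-matmul FLOPs.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def naive_attention(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Reference version: compute every score, softmax them, weight the values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def tiled_attention(q: Vector, keys: List[Vector], values: List[Vector],
                    block_size: int = 2) -> Vector:
    """Same result, but keys/values are visited one block at a time."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf        # largest score seen so far
    running_sum = 0.0              # sum of exp(score - running_max) so far
    acc = [0.0] * len(values[0])   # un-normalized weighted sum of values
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) * scale for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale earlier statistics so they are relative to the new max.
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    # Normalize once at the end instead of after every block.
    return [a / running_sum for a in acc]


q = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.3, 0.3]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
print(naive_attention(q, keys, values))
print(tiled_attention(q, keys, values, block_size=2))
```

Only one block of keys and values is needed at a time, which is what lets real kernels keep their working set in fast on-chip SRAM.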

- Implications: The improvements in FlashAttention-2 have significant implications for training GPT-style models. The paper shows empirically that, when used end-to-end to train these models, FlashAttention-2 reaches a training speed of up to 225 TFLOPs/s per A100 GPU, corresponding to 72% model FLOPs utilization.
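Model FLOPs utilization (MFU) is achieved training throughput divided by the hardware's theoretical peak. Assuming the A100's dense FP16/BF16 Tensor Core peak of 312 TFLOPs/s from NVIDIA's specifications, the 72% figure checks out; the helper name is our own.

```python
# Peak dense FP16/BF16 Tensor Core throughput of an A100, per NVIDIA's spec sheet.
A100_PEAK_TFLOPS = 312.0


def model_flops_utilization(achieved_tflops: float,
                            peak_tflops: float = A100_PEAK_TFLOPS) -> float:
    """Fraction of the hardware's theoretical peak that training actually achieves."""
    return achieved_tflops / peak_tflops


print(f"MFU at 225 TFLOPs/s: {model_flops_utilization(225.0):.0%}")  # prints "MFU at 225 TFLOPs/s: 72%"
```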

- Key Quote: “We propose FlashAttention-2, with better work partitioning to address these issues. In particular, we (1) tweak the algorithm to reduce the number of non-matmul FLOPs (2) parallelize the attention computation, even for a single head, across different thread blocks to increase occupancy, and (3) within each thread block, distribute the work between warps to reduce communication through shared memory. These yield around 2x speedup compared to FlashAttention, reaching 50-73% of the theoretical maximum FLOPs/s on A100 and getting close to the efficiency of GEMM operations.”

Paper 3: What Should Data Science Education Do with Large Language Models?

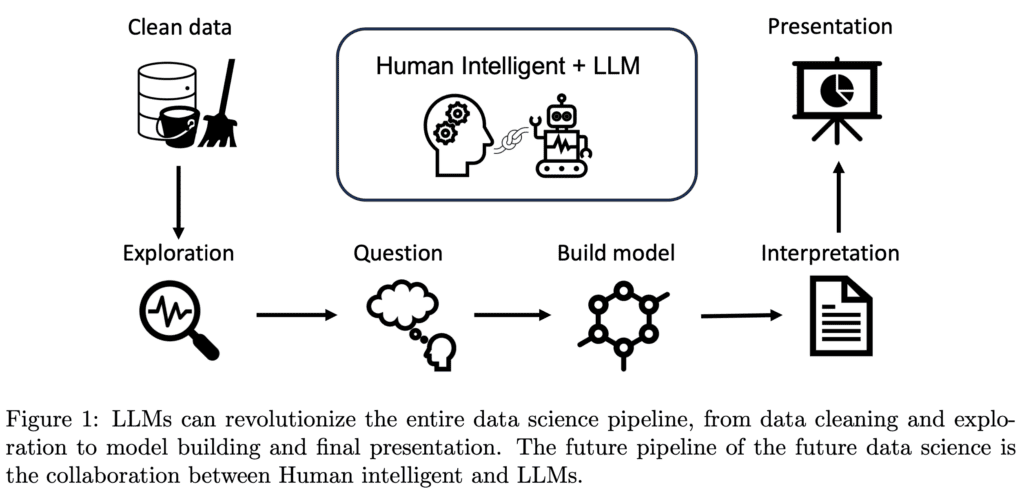

- Overview: This paper discusses the impact of Large Language Models (LLMs) like ChatGPT on data science and statistics. The authors argue that LLMs are transforming the responsibilities of data scientists, shifting their focus from hands-on coding and conducting standard analyses to assessing and managing analyses performed by these automated AIs. They also discuss the implications of this shift for data science education.

- Problem Addressed: The paper addresses the challenge of adapting data science education to the rapid advances in AI, particularly the development of LLMs. The authors argue that the rise of LLMs necessitates a shift in both the content and methods of data science education.

- Methodology and Key Findings: The authors discuss the capabilities of LLMs in streamlining complex processes such as data cleaning, model building, interpretation, and report writing. They illustrate this with concrete data science case studies using LLMs. They also discuss the need for data science education to place greater emphasis on cultivating diverse skillsets among students, such as LLM-informed creativity, critical thinking, AI-guided programming, and interdisciplinary knowledge.

- Implications: The rise of LLMs has significant implications for data science and data science education. As LLMs take over more routine tasks, data scientists can focus on higher-level work, much like a software engineer moving into a product manager role. This shift calls for a change in data science education, with greater emphasis on strategic planning, coordinating resources, and overseeing the overall product life cycle.

- Key Quote: “We argue that LLMs are transforming the responsibilities of data scientists, shifting their focus from hands-on coding, data-wrangling and conducting standard analyses to assessing and managing analyses performed by these automated AIs. This evolution of roles is reminiscent of the transition from a software engineer to a product manager, where strategic planning, coordinating resources, and overseeing the overall product life cycle supersede the task of writing code.” (Page 1)

Paper 4: Less is More – Focus Attention for Efficient DETR

- Overview: This paper introduces Focus-DETR, a more efficient DETR-like (DEtection TRansformer) object detector. The authors propose focusing the model's attention on more informative tokens, aiming to strike a better balance between computational efficiency and accuracy.

- Problem Addressed: The paper addresses computational inefficiency in DETR-like models, which treat all tokens equally and therefore spend redundant computation on uninformative ones. Previous sparsification strategies have tried to reduce this burden by focusing on a subset of informative tokens, but these methods often rely on unreliable model statistics and can hurt detection performance.

- Methodology and Key Findings: The authors propose Focus-DETR, which reconstructs the encoder with dual attention, including a token scoring mechanism that considers both the localization and the category semantic information of objects from multi-scale feature maps. This lets the model discard background queries efficiently and enhance the semantic interaction of the fine-grained object queries based on their scores. Compared with state-of-the-art sparse DETR-like detectors under the same setting, Focus-DETR achieves comparable complexity while improving accuracy (50.4 AP on COCO, +2.2 AP).
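The core “score, then keep only the top tokens” idea can be sketched in a few lines. This is a hypothetical simplification, not Focus-DETR's architecture: the scores are given as inputs (the paper learns them from localization and category signals on multi-scale feature maps), and `update` stands in for the encoder's attention layers. Because only the kept tokens are updated, the encoder's work scales with the number of kept tokens rather than with all tokens.

```python
from typing import Callable, List, Sequence

Token = List[float]


def select_top_tokens(scores: Sequence[float], keep_ratio: float) -> List[int]:
    """Indices of the highest-scoring tokens, returned in their original order."""
    k = max(1, round(len(scores) * keep_ratio))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


def sparse_update(tokens: List[Token], scores: Sequence[float], keep_ratio: float,
                  update: Callable[[List[Token]], List[Token]]) -> List[Token]:
    """Run `update` only on the kept (foreground) tokens; others pass through unchanged."""
    keep = select_top_tokens(scores, keep_ratio)
    updated = update([tokens[i] for i in keep])
    out = [list(t) for t in tokens]
    for i, new_token in zip(keep, updated):
        out[i] = new_token
    return out


def double(ts: List[Token]) -> List[Token]:
    """Hypothetical stand-in for an encoder layer."""
    return [[2 * x for x in t] for t in ts]


# Hypothetical example: six 1-D tokens with made-up foreground scores.
tokens = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
scores = [0.9, 0.1, 0.8, 0.05, 0.7, 0.2]
print(sparse_update(tokens, scores, keep_ratio=0.5, update=double))
# prints [[2.0], [2.0], [6.0], [4.0], [10.0], [6.0]]
```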

- Implications: The development of Focus-DETR represents a significant contribution to the field of object detection. By focusing attention on more informative tokens, the model can achieve better performance while maintaining computational efficiency. This approach could potentially lead to more advanced and efficient object detection models.

- Key Quote: “We propose Focus-DETR, which focuses the self-attention mechanism on more informative tokens for a better trade-off between computation efficiency and model accuracy. Specifically, we reconstruct the encoder with dual attention, which includes a token scoring mechanism that considers both localization and category semantic information of the objects from multi-scale feature maps.”

Paper 5: Hardwiring ViT Patch Selectivity into CNNs using Patch Mixing

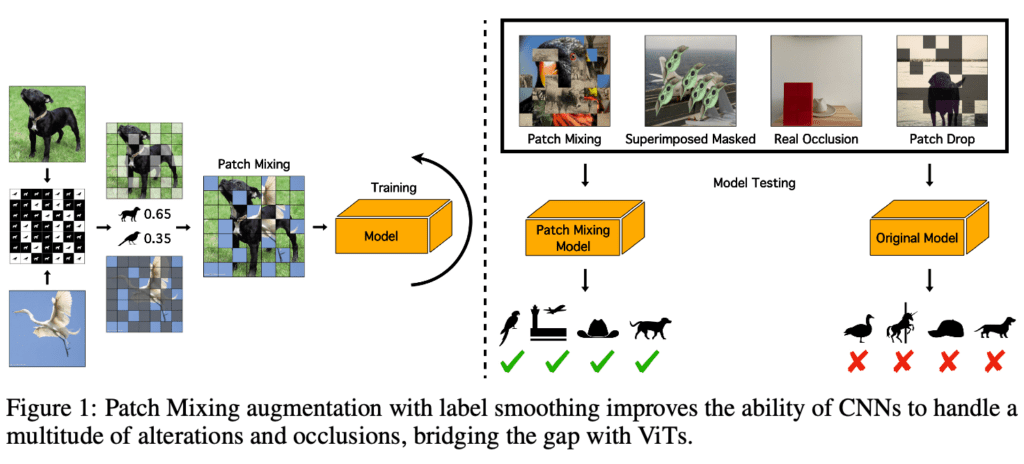

- Overview: This paper explores the concept of patch selectivity in Vision Transformers (ViTs) and Convolutional Neural Networks (CNNs). The authors propose a new data augmentation method, Patch Mixing, to improve the ability of CNNs to handle occlusions and out-of-context information.

- Problem Addressed: The paper addresses the challenge of handling occlusions and out-of-context information in CNNs. The authors argue that ViTs are naturally more robust than CNNs when out-of-context information is added to an image, a property they term “patch selectivity”. The goal is to give CNNs this ability.

- Methodology and Key Findings: The authors propose Patch Mixing, a data augmentation method in which patches from other images are inserted into training images and the ground-truth labels are interpolated. They find that CNNs trained with Patch Mixing become better at ignoring out-of-context information and more robust to occlusions. Interestingly, ViTs neither improve nor degrade when trained with Patch Mixing, suggesting that patch selectivity is a natural capability of ViTs.
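A minimal sketch of Patch Mixing-style augmentation follows. It is our own simplification under stated assumptions: images are small grayscale grids, each chosen patch is copied from the same location in a second image, and the one-hot labels are interpolated in proportion to the fraction of patches replaced (similar in spirit to CutMix). The paper's exact patch sampling and mixing schedule may differ, and `patch_mix` is a hypothetical name.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # grayscale H x W image, kept tiny for illustration


def patch_mix(img_a: Image, label_a: List[float], img_b: Image, label_b: List[float],
              patch: int, mix_ratio: float, rng: random.Random) -> Tuple[Image, List[float]]:
    """Paste a fraction of img_b's patches into img_a and interpolate the labels."""
    h, w = len(img_a), len(img_a[0])
    if h % patch or w % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    grid = [(r, c) for r in range(h // patch) for c in range(w // patch)]
    n_mix = round(len(grid) * mix_ratio)
    mixed = [row[:] for row in img_a]  # copy so img_a is left untouched
    for gr, gc in rng.sample(grid, n_mix):
        for y in range(gr * patch, (gr + 1) * patch):
            for x in range(gc * patch, (gc + 1) * patch):
                mixed[y][x] = img_b[y][x]
    frac = n_mix / len(grid)  # share of the image now coming from img_b
    label = [(1 - frac) * a + frac * b for a, b in zip(label_a, label_b)]
    return mixed, label


rng = random.Random(0)
zeros = [[0.0] * 4 for _ in range(4)]  # stand-in "class 0" image
ones = [[1.0] * 4 for _ in range(4)]   # stand-in "class 1" image
mixed, label = patch_mix(zeros, [1.0, 0.0], ones, [0.0, 1.0], patch=2, mix_ratio=0.5, rng=rng)
print(label)  # prints [0.5, 0.5]
```

Interpolating the label by area tells the network that the pasted patches are real but partial evidence, which is what pushes a CNN to weigh out-of-context regions appropriately rather than letting them dominate.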

- Implications: The findings of this paper have significant implications for training CNNs. With Patch Mixing, CNNs can learn to ignore out-of-context information, making them more robust to occlusions. This could lead to more robust CNN models.

- Key Quote: “We find that ViTs do not improve nor degrade when trained using Patch Mixing, but CNNs acquire new capabilities to ignore out-of-context information and improve on occlusion benchmarks, leaving us to conclude that this training method is a way of simulating in CNNs the abilities that ViTs already possess.”

Conclusion

In this blog post, we’ve delved into the top five AI papers of July 2023, each contributing unique insights and advancements to the field of artificial intelligence and machine learning. Innovation around large language models continues at a strong pace, and as Paper 3 outlines, it is even reshaping data science education. Efficiency also remains a central theme, from faster attention on GPUs to sparser object detectors. Finally, the rivalry between convolutions and self-attention in computer vision remains active, with researchers now using ViTs to improve CNNs.

As AI continues to evolve and shape our world, it’s more important than ever to stay informed and continue learning about this transformative technology. We hope that these summaries have sparked your interest and deepened your understanding of the latest advancements in AI. Don’t forget to join us next month as we explore the top 5 AI papers of August 2023. We look forward to continuing this journey of discovery with you!