The AI community generously shares code, model architectures, and even models trained on large datasets. We are standing on the shoulders of giants, which is one reason the industry is adopting AI so widely.

When we start a computer vision project, we first find models that partially solve our problem.

Let’s say you want to build a security application that looks for humans in restricted areas. First, check whether a publicly available pedestrian detection model works for you out of the box. If it does, you do not need to train a new model. If not, experimenting with publicly available models will give you an idea of which architecture to choose for fine-tuning or transfer learning.
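A quick way to decide whether a public model works "out of the box" is to run it on a small set of your own labeled images and measure precision and recall. The sketch below is pure Python and is only illustrative: it assumes boxes are `(x1, y1, x2, y2)` pixel tuples, that each prediction carries a confidence score, and that a 0.5 IoU threshold (a common convention, not a requirement) counts as a match. Getting the predictions out of a particular pedestrian detector is left out.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_counts(
    predictions: Sequence[Tuple[Box, float]],
    ground_truth: Sequence[Box],
    iou_threshold: float = 0.5,
) -> Tuple[int, int, int]:
    """Greedily match predictions (highest score first) to unmatched ground-truth boxes.

    Returns (true positives, false positives, false negatives) for one image.
    """
    matched = [False] * len(ground_truth)
    true_pos = 0
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_idx, best_iou = -1, iou_threshold
        for i, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if not matched[i] and overlap >= best_iou:
                best_idx, best_iou = i, overlap
        if best_idx >= 0:
            matched[best_idx] = True
            true_pos += 1
    return true_pos, len(predictions) - true_pos, len(ground_truth) - true_pos


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall from summed counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Summing the per-image counts from `match_counts` over your whole test set before calling `precision_recall` gives dataset-level numbers; low recall on your own footage is a sign that the model needs fine-tuning.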

Today, we will learn about free resources for computer vision, machine learning, and AI models.

- Papers With Code

- ModelZoo.co

- Open Model Zoo

- TensorFlow Model Garden

- TensorFlow Hub

- MediaPipe

- Awesome CoreML Models

- Jetson Zoo

- PINTO Model Zoo

- ONNX Model Zoo

- Bonus: Modelplace.AI

1. Papers With Code

Source: paperswithcode.com/static/logo.png

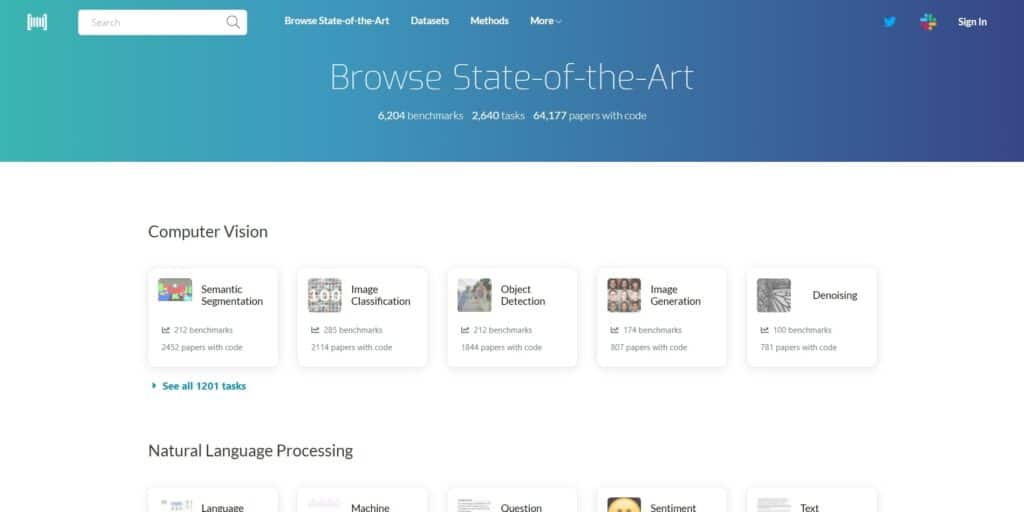

The mission of Papers With Code is to create a free and open resource with Machine Learning papers, code, datasets, methods, and evaluation tables.

The platform is updated regularly with the latest papers and resources in computer vision and other subfields of AI.

Source: paperswithcode.com/sota

1.1 Use filters to narrow search results

With an extensive catalog of machine learning tasks and hundreds of research papers and code implementations, there is a sea of information to sift through. Fortunately, the platform's filtering features make it easy to narrow down the results.

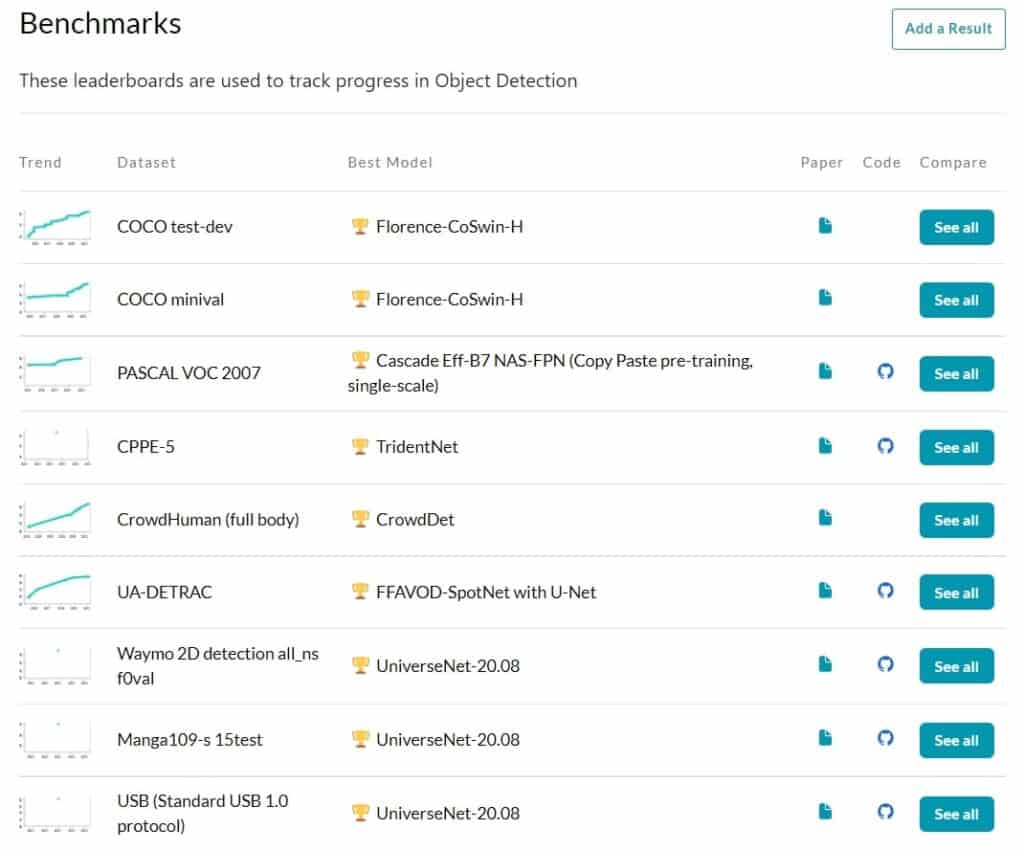

1.2 Benchmarks

Source: paperswithcode.com/task/object-detection

For a given task, you can view the state-of-the-art models that perform best on that task's popular benchmarks. You can also sort the submissions by how many stars they have received from the community.

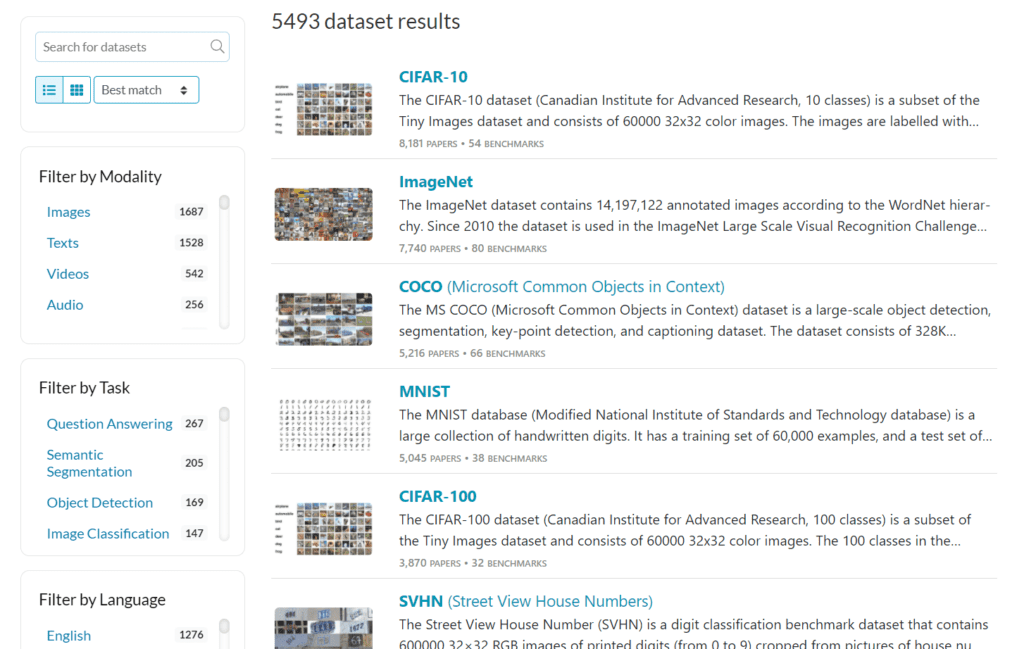

1.3 Datasets

Papers With Code also collects popular public datasets in one place.

Source: paperswithcode.com/datasets

Using Papers With Code, you can compare a large number of solutions to find the one that might suit you.

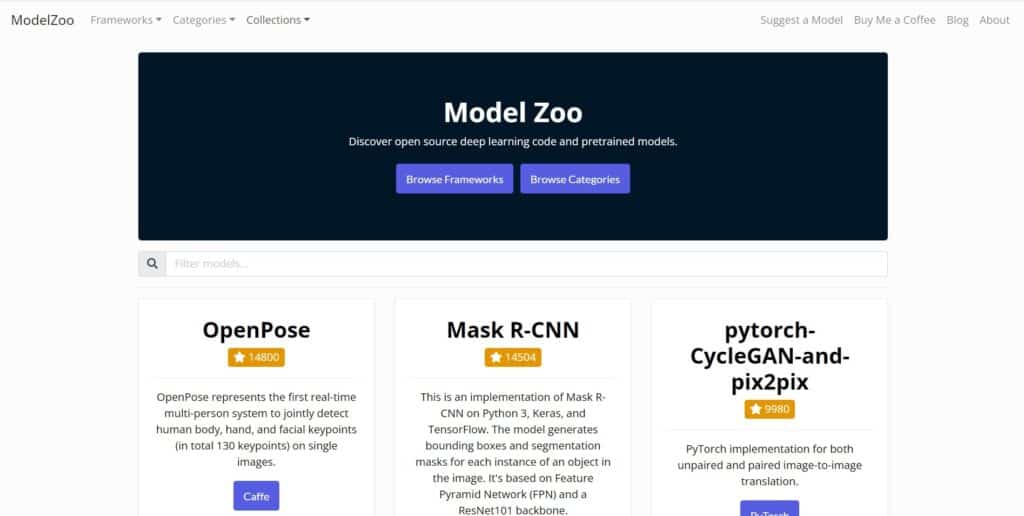

2. ModelZoo.co

Source: modelzoo.co

Created by Jing Yu Koh, a research engineer at Google, Model Zoo curates and provides a platform for deep learning researchers to quickly find pre-trained models for various platforms and tasks.

The site is regularly updated and lets you filter models by the machine learning framework you plan to use or by the category of the task at hand.

The two most useful categories for us are shown below.

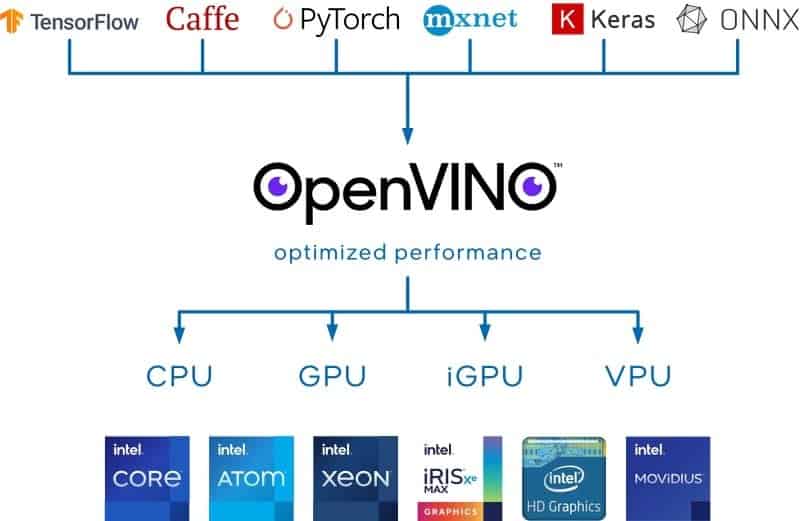

3. Open Model Zoo

Source: docs.openvino.ai/latest/_static/images/ov_chart.png

Open Model Zoo for the OpenVINO™ toolkit provides a wide variety of free, pre-trained deep learning models that are optimized to run fast on Intel CPUs, GPUs, and VPUs.

This repository contains over 200 neural network models for tasks including object detection, classification, image segmentation, handwriting recognition, text to speech, pose estimation, and others.

There are two kinds of models.

- Intel’s Pre-Trained Models: The team at Intel has trained these models and optimized them to run with OpenVINO. Check out the documentation to learn about model accuracy and performance.

- Public Pre-Trained Models: These are models created by the AI community and can be easily converted to OpenVINO format using OpenVINO Model Optimizer. Check out the documentation for model speed and accuracy.

Always check the device support page to ensure the model is compatible with the device you want to run it on. Check out our series on OpenVINO to learn the ins and outs.

The Open Model Zoo also includes many demo applications, along with instructions for running them. You can use these applications as templates for building your own.

You can also use the Model Analyzer to get more insight into a model, such as its memory consumption, sparsity, and GFLOPs. Keep in mind that the Model Analyzer works only with models in Intermediate Representation (IR) format.
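To make metrics such as GFLOPs and sparsity concrete, here is a rough hand calculation for a single 2D convolution layer. This is a pure-Python sketch, not the Model Analyzer itself, and it assumes one common convention (one multiply-accumulate counted as two FLOPs, bias additions ignored); tools differ on these conventions, so their numbers may not match exactly.

```python
from typing import Iterable, Tuple


def conv2d_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one axis (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_cost(h_in: int, w_in: int, c_in: int, c_out: int, kernel: int,
                stride: int = 1, padding: int = 0, groups: int = 1) -> Tuple[int, int, float]:
    """Return (weights, multiply-accumulates, GFLOPs) for one Conv2D layer without bias."""
    h_out = conv2d_output_size(h_in, kernel, stride, padding)
    w_out = conv2d_output_size(w_in, kernel, stride, padding)
    params = c_out * (c_in // groups) * kernel * kernel
    macs = params * h_out * w_out  # every weight is used once per output position
    gflops = 2 * macs / 1e9  # convention: 1 MAC = 1 multiply + 1 add
    return params, macs, gflops


def sparsity(weights: Iterable[float]) -> float:
    """Fraction of weights that are exactly zero."""
    values = list(weights)
    return sum(1 for w in values if w == 0) / len(values) if values else 0.0
```

For example, a 7×7, stride-2, padding-3 convolution from 3 to 64 channels on a 224×224 input (the shape of ResNet's first layer) works out to 9,408 weights and 118,013,952 MACs, or about 0.24 GFLOPs under this convention.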

If you are just beginning with the OpenVINO toolkit, it is worth checking out the openvinotoolkit/openvino_notebooks repo. It contains ready-to-run Jupyter notebooks that help you get up to speed with the OpenVINO toolkit and the models available in the zoo.

The Open Model Zoo also provides tools for downloading, converting, and quantizing models.
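As a minimal sketch of the download-and-convert workflow, the snippet below shells out to the `omz_downloader` and `omz_converter` command-line tools (shipped with the `openvino-dev` Python package in the 2022-era releases) and then loads an IR model with the OpenVINO Python runtime. The default directory names and the FP16 precision are placeholders chosen for illustration, and you pass in the path of the `.xml` file the tools actually produce rather than relying on a fixed layout.

```python
import subprocess
from typing import List, Tuple


def omz_commands(model_name: str, download_dir: str = "omz_models",
                 ir_dir: str = "omz_ir", precision: str = "FP16") -> Tuple[List[str], List[str]]:
    """Build the download and conversion command lines for one Open Model Zoo model."""
    download = ["omz_downloader", "--name", model_name, "--output_dir", download_dir]
    convert = ["omz_converter", "--name", model_name, "--download_dir", download_dir,
               "--output_dir", ir_dir, "--precisions", precision]
    return download, convert


def fetch_model(model_name: str, convert: bool = True, **kwargs) -> None:
    """Run the tools. Intel pre-trained models already ship as IR, so pass convert=False for them."""
    download_cmd, convert_cmd = omz_commands(model_name, **kwargs)
    subprocess.run(download_cmd, check=True)
    if convert:  # public models must be converted to IR first
        subprocess.run(convert_cmd, check=True)


def load_ir(xml_path: str, device: str = "CPU"):
    """Read an IR model (.xml + .bin) and compile it for the given device."""
    try:
        from openvino import Core  # newer releases
    except ImportError:
        from openvino.runtime import Core  # 2022.x releases
    core = Core()
    print("Available devices:", core.available_devices)
    model = core.read_model(xml_path)
    return core.compile_model(model, device)
```

The compiled model can then be called directly, for example `compiled([input_array])[compiled.output(0)]`, to get the first output. Printing `available_devices` is a quick complement to the device support page.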

4. TensorFlow Model Garden

Source: camo.githubusercontent.com

TensorFlow is an end-to-end open-source platform for Machine Learning and arguably the most popular ML framework.

The TensorFlow Model Garden is a repository containing many state-of-the-art (SOTA) models. There are three kinds of models.

- Official: The models in this collection are maintained, tested, and kept up to date with the latest TensorFlow API.

- Research: The models in this collection may use TensorFlow 1 or 2 and are maintained by researchers.

- Community: This is a collection of links to models maintained by the community.

The repository aims to provide the building blocks for training your own models: you can start from the provided default configurations and datasets, or fine-tune the available model checkpoints.

To help you reproduce the training results, training logs are also provided for the available models.

TensorFlow users can treat the provided models as references when training their own models, or fine-tune them from the available checkpoints.

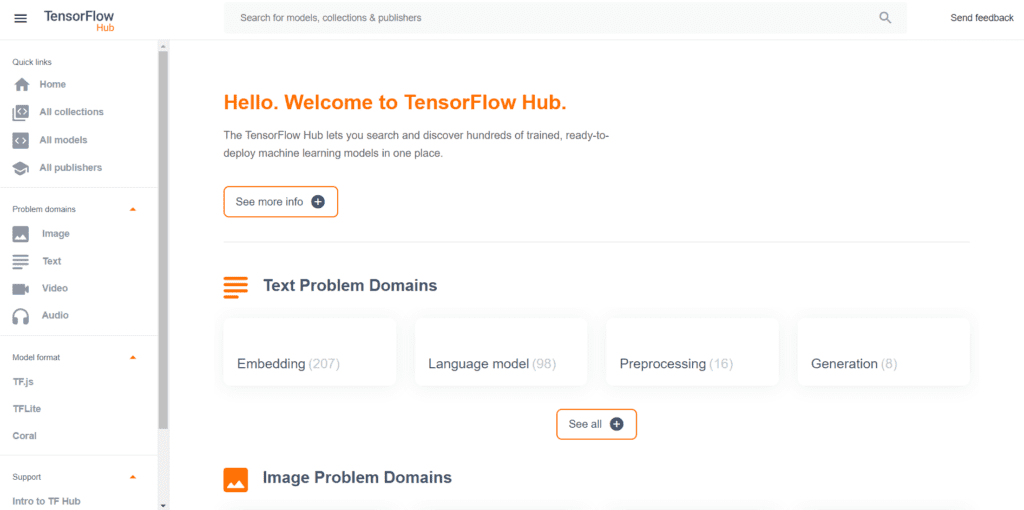

5. TensorFlow Hub

Source: www.gstatic.com/aihub/tfhub/tensorflow_hub_logo_fullcolor

TensorFlow Hub is a space where you can browse trained models and datasets from across the TensorFlow ecosystem, covering tasks such as image classification, text embeddings, audio, and video action recognition.

Source: tfhub.dev

Unlike TensorFlow Model Garden, the models available on TensorFlow Hub are ready-to-use and intended to be used as black boxes with set inputs and outputs. If you can find a model that suits your needs, you can use it!

You can discover and download hundreds of trained, ready-to-deploy machine learning models published by reputable sources.

Model pages provide deployment details such as the input and output formats, the dataset used, and expected performance metrics, to help you choose the best model for your task. Some models also come with example notebooks and interactive web experiences that make working with them a breeze.

Models are commonly available in SavedModel, TFLite, or TF.js format. SavedModels can be used directly in code through the tensorflow_hub library, which lets you download and use models from TensorFlow Hub in your TensorFlow program with a minimum amount of code.
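Here is a minimal sketch of that workflow with `tensorflow_hub.load`. The handle below is an assumption chosen for illustration (a MobileNetV2 ImageNet classifier), as are its preprocessing conventions: 224×224 RGB input scaled to [0, 1] and 1001 output logits with index 0 reserved for "background". Check the model's page for the conventions of whatever model you actually pick.

```python
# Assumed handle and conventions; verify them on the model's TF Hub page.
MODEL_HANDLE = "https://tfhub.dev/google/imagenet/mobilenet_v2_100_224/classification/5"
INPUT_SIZE = (224, 224)


def hub_to_imagenet_index(class_id: int) -> int:
    """Map a 1001-way output index (background at 0) to a 0-999 ImageNet class index."""
    if not 1 <= class_id <= 1000:
        raise ValueError(f"class_id {class_id} is background or out of range")
    return class_id - 1


def classify(image_path: str, handle: str = MODEL_HANDLE) -> int:
    """Return the top 1001-way class id for one image file."""
    # Imported lazily so the helper above works without TensorFlow installed.
    import tensorflow as tf
    import tensorflow_hub as hub

    model = hub.load(handle)  # downloads and caches the SavedModel on first use
    image = tf.image.decode_image(
        tf.io.read_file(image_path), channels=3, expand_animations=False
    )
    image = tf.image.resize(image, INPUT_SIZE) / 255.0  # float32 in [0, 1]
    logits = model(image[tf.newaxis, ...])  # add a batch dimension
    return int(tf.argmax(logits, axis=-1)[0])
```

The model is treated as a black box: the only code you write is the preprocessing that matches its documented input format and the interpretation of its output.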

TensorFlow Hub is open to community contributions, so the collection is likely to keep growing, putting more models at our disposal.

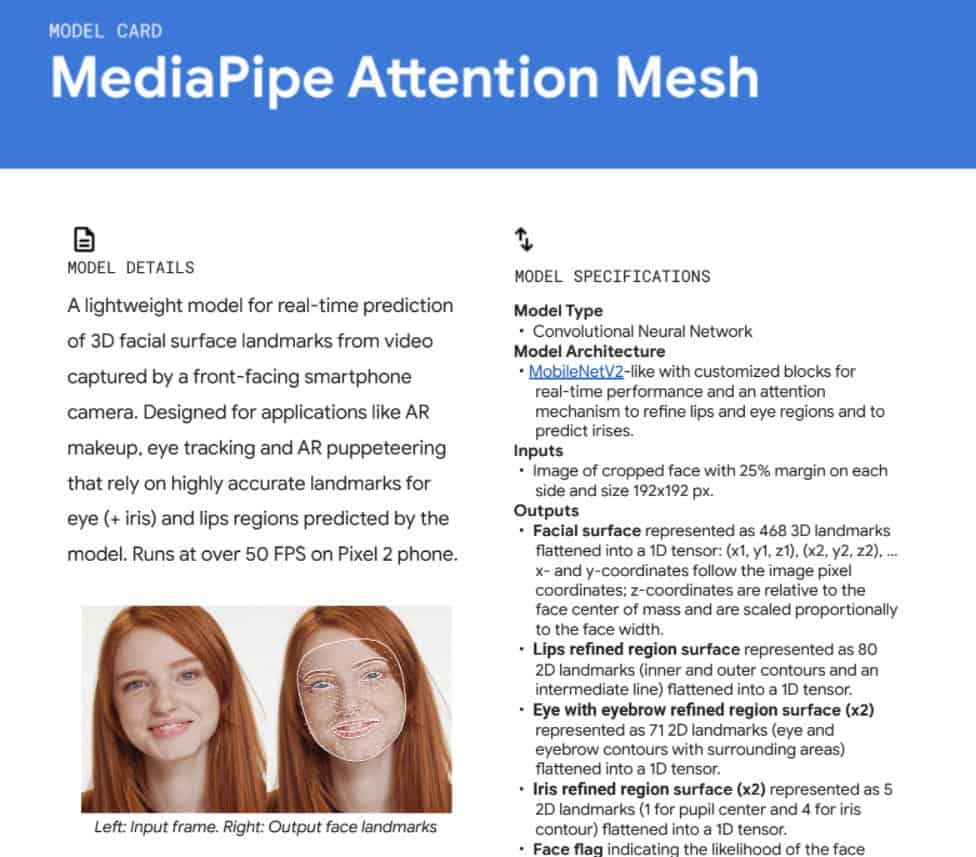

6. MediaPipe Models

Source: mediapipe.dev/assets/img/brand

MediaPipe is an open-source, cross-platform Machine Learning framework developed by Google researchers. It provides customizable ML solutions.

Although the MediaPipe project was still in the alpha stage when this article was written, its solutions had already been deployed in many everyday applications, such as Google's Motion Stills and YouTube's privacy blur feature.

On top of being lightweight and fast, MediaPipe is cross-platform. The idea is to build an ML pipeline once and deploy it on different platforms and devices with reproducible results.

It supports Python, C++, JavaScript, Android, and iOS.

The MediaPipe Models collection provides ready-to-use perception models for different tasks.

Source: google.github.io/mediapipe/

Popular solutions include Face Mesh (face landmarks), Pose Detection, Hair Segmentation, and KNIFT (feature matching).
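As a rough sketch of how little code a MediaPipe solution needs, the snippet below runs face detection through the legacy `mp.solutions` Python API (newer releases also offer the MediaPipe Tasks API) and converts each detection's relative bounding box to pixel coordinates. It assumes the `mediapipe` and `opencv-python` packages are installed; `model_selection=0` picks the short-range model and the 0.5 confidence threshold is an arbitrary choice.

```python
from typing import List, Tuple

PixelBox = Tuple[int, int, int, int]  # (x1, y1, x2, y2)


def to_pixel_box(rel_box: Tuple[float, float, float, float],
                 image_width: int, image_height: int) -> PixelBox:
    """Convert a relative (xmin, ymin, width, height) box to clamped pixel corners."""
    xmin, ymin, w, h = rel_box
    x1 = max(0, int(xmin * image_width))
    y1 = max(0, int(ymin * image_height))
    x2 = min(image_width, int((xmin + w) * image_width))
    y2 = min(image_height, int((ymin + h) * image_height))
    return x1, y1, x2, y2


def detect_faces(image_path: str) -> List[PixelBox]:
    """Return pixel boxes for the faces MediaPipe finds in one image."""
    # Imported lazily so to_pixel_box works without these packages.
    import cv2
    import mediapipe as mp

    image_bgr = cv2.imread(image_path)
    if image_bgr is None:
        raise FileNotFoundError(image_path)
    height, width = image_bgr.shape[:2]
    boxes = []
    with mp.solutions.face_detection.FaceDetection(
        model_selection=0, min_detection_confidence=0.5
    ) as detector:
        # MediaPipe expects RGB input; OpenCV loads images as BGR.
        results = detector.process(cv2.cvtColor(image_bgr, cv2.COLOR_BGR2RGB))
        for detection in results.detections or []:
            rb = detection.location_data.relative_bounding_box
            boxes.append(to_pixel_box((rb.xmin, rb.ymin, rb.width, rb.height), width, height))
    return boxes
```

Clamping matters because MediaPipe's relative boxes can extend slightly past the image border for faces near the edge.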

Each model comes with a model card that documents its details.

Source: drive.google.com/file/d/1tV7EJb3XgMS7FwOErTgLU1ZocYyNmwlf/preview

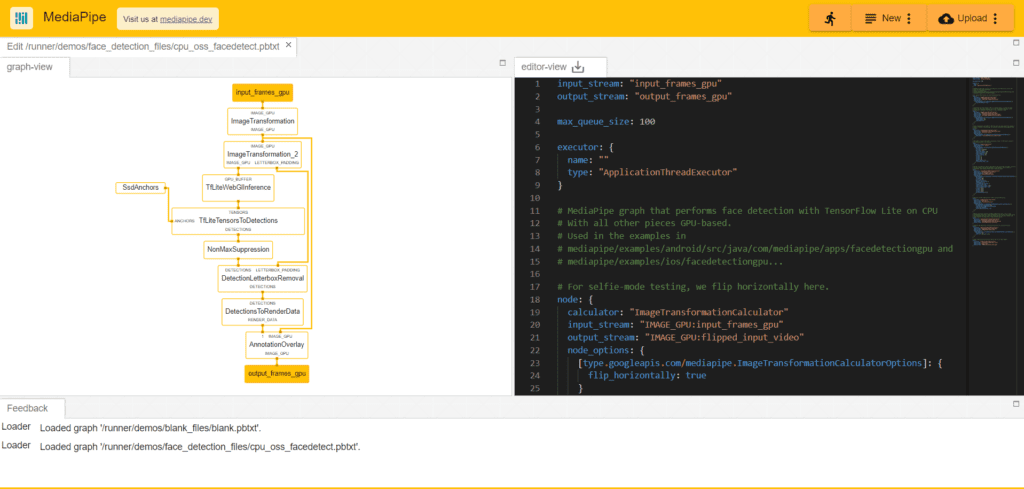

MediaPipe also provides an online visualizer that helps you understand the overall behavior of its machine learning inference pipelines. Below is the graph structure of the Face Detection pipeline.

Source: viz.mediapipe.dev/demo/face_detection

With its speed and broad hardware compatibility, MediaPipe can be a good fit for many real-time vision applications.

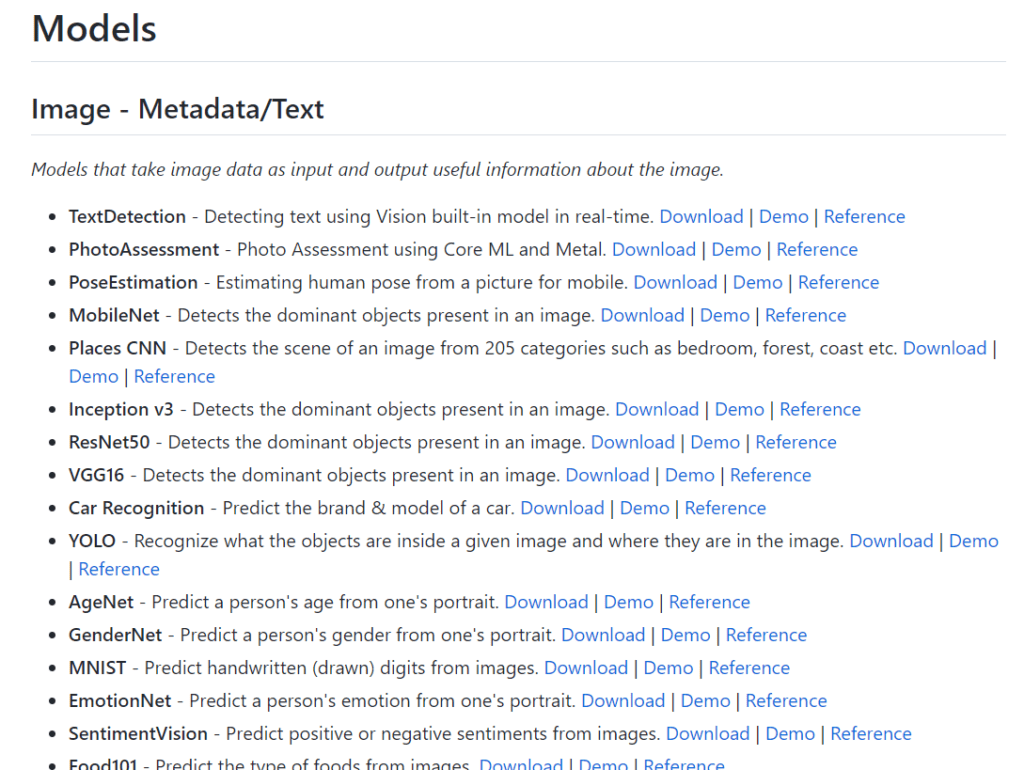

7. Awesome CoreML Models

Source: github.com/likedan/Awesome-CoreML-Models/raw/master/images/coreml.png

Apple’s CoreML library allows iOS, macOS, tvOS, and watchOS developers to create fun and exciting applications that harness the power of AI. It has been available since iOS 11 and is used in many applications in one form or another.

Source: github.com/likedan/Awesome-CoreML-Models

The Awesome-CoreML-Models repository collects machine learning models that Apple’s CoreML library supports. The models are already in the CoreML format, so a CoreML-based application can use them without any conversion.

Source: docs-assets.developer.apple.com/published/cc3b628bee/rendered2x-1638462885.png

Beyond the models in this repository, models in a number of other formats can be converted to the CoreML format. Supported source formats include TensorFlow, Caffe, Keras, XGBoost, scikit-learn, MXNet, LibSVM, and Torch7, although which converters are available depends on the tool and version you use.
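For TensorFlow and PyTorch models, Apple's `coremltools` package provides a unified `ct.convert` entry point. The sketch below assumes an in-memory `tf.keras` model and coremltools 5 or later. The one detail it encodes is that models converted to the newer ML Program type must be saved as a `.mlpackage`, while older "neuralnetwork" models are saved as a `.mlmodel` file; the `stem` argument is a hypothetical output name.

```python
# ML Program models are saved as a .mlpackage directory; older
# "neuralnetwork" models are saved as a single .mlmodel file.
_EXTENSIONS = {"mlprogram": ".mlpackage", "neuralnetwork": ".mlmodel"}


def coreml_path(stem: str, convert_to: str = "mlprogram") -> str:
    """Return the output path with the extension CoreML expects for this model type."""
    try:
        return stem + _EXTENSIONS[convert_to]
    except KeyError:
        raise ValueError(f"unsupported convert_to: {convert_to!r}") from None


def convert_keras_model(keras_model, stem: str, convert_to: str = "mlprogram") -> str:
    """Convert an in-memory tf.keras model to CoreML and save it."""
    import coremltools as ct  # lazy import so coreml_path works without coremltools

    mlmodel = ct.convert(keras_model, convert_to=convert_to)  # source framework is auto-detected
    path = coreml_path(stem, convert_to)
    mlmodel.save(path)
    return path
```

For a PyTorch model you would instead pass a traced module together with an `inputs=[ct.TensorType(shape=...)]` description, since a traced graph does not record its input shapes.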

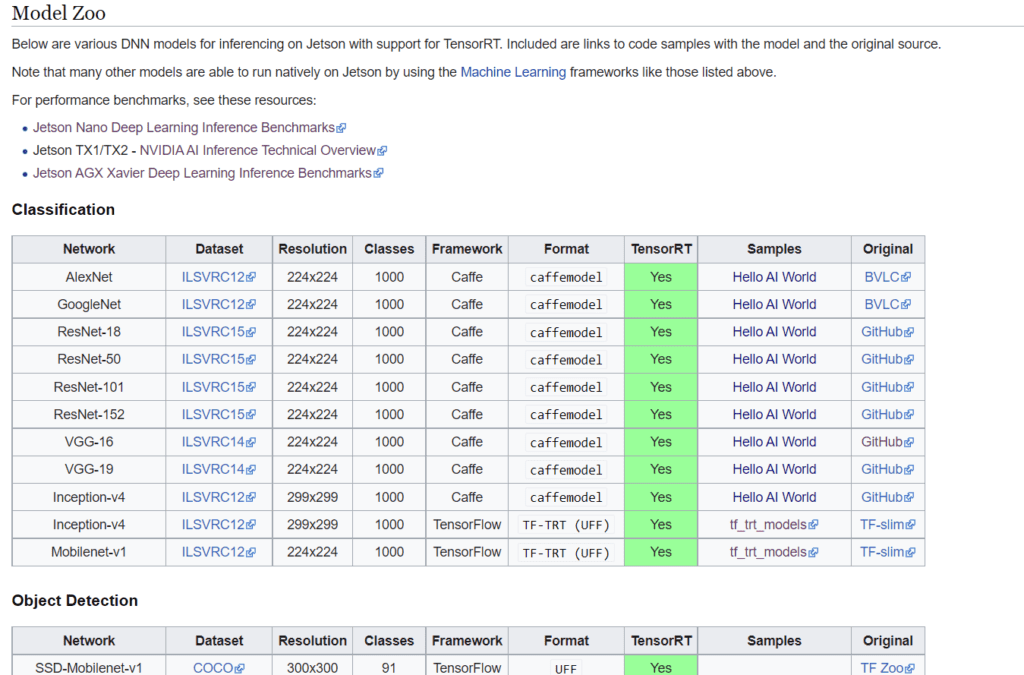

8. Jetson Zoo

Source: www.nvidia.com/en-in/autonomous-machines/embedded-systems/

Nvidia's Jetson embedded computing boards are a popular choice in the embedded community for deploying AI applications. Jetson boards can run various advanced networks, including full native versions of popular ML frameworks such as TensorFlow, PyTorch, Caffe/Caffe2, Keras, and MXNet.

The Jetson Model Zoo lists various DNN models for inference on Nvidia Jetson, with TensorRT support. For each model, it links to code samples and to the model's original source.

Source: www.elinux.org/Jetson_Zoo#Model_Zoo

The model zoo also provides inference benchmarks for different models on Nvidia Jetson, compared with other popular embedded development boards such as the Raspberry Pi, Intel Neural Compute Stick, and Google Edge TPU Dev Board.
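Published benchmarks are a starting point, but it is worth measuring on your own board and configuration. Below is a minimal, framework-agnostic timing harness in pure Python: it warms up, then times repeated single-stream calls and reports mean and median latency plus throughput. It assumes `infer` is any zero-argument callable that runs one inference; with asynchronous GPU runtimes, make sure that callable blocks until the outputs are ready, otherwise you are timing only the launch.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 10, runs: int = 100) -> Dict[str, float]:
    """Time repeated calls of `infer` and report latency (ms) and throughput (FPS)."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    for _ in range(warmup):  # let caches, clocks, and lazy initialization settle
        infer()
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        latencies.append(time.perf_counter() - start)
    mean_s = statistics.mean(latencies)
    return {
        "mean_ms": mean_s * 1e3,
        "median_ms": statistics.median(latencies) * 1e3,
        "fps": 1.0 / mean_s if mean_s > 0 else float("inf"),
    }
```

Results on embedded boards also depend on the power mode and clock settings, so record those alongside the numbers when comparing against published benchmarks.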

Source: developer.nvidia.com/embedded/jetson-nano-dl-inference-benchmarks

Since Jetson is one of the most powerful embedded platforms, the Jetson Model Zoo is a good resource for deploying embedded AI applications, especially ones targeting Jetson hardware.

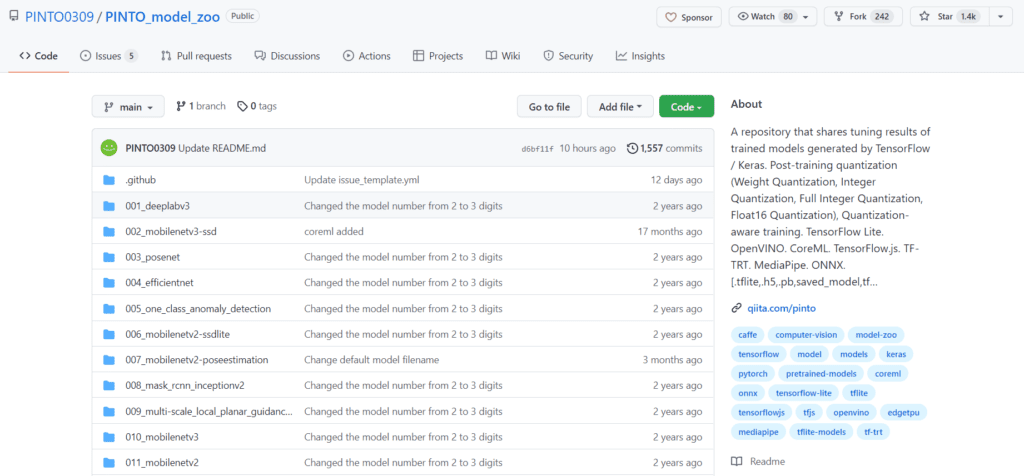

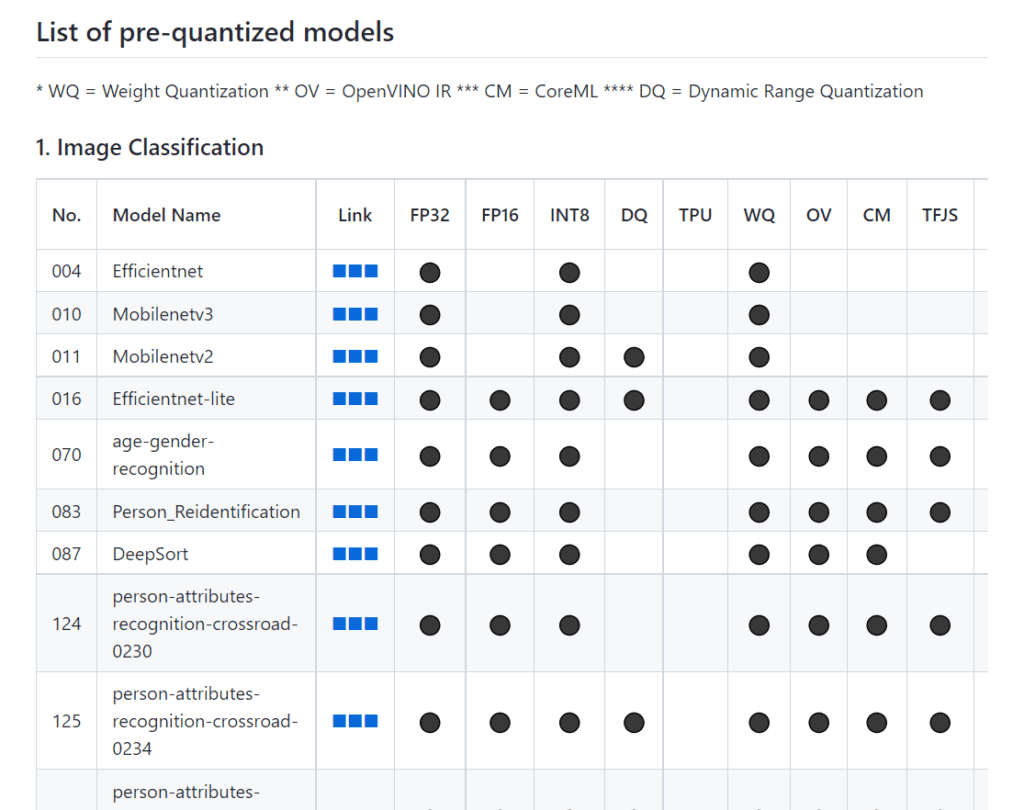

9. PINTO Model Zoo

Source: user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png

The PINTO Model Zoo was created by Katsuya Hyodo, a programmer and member of the Intel Software Innovator Program. The repository shares the tuning results of trained models generated with TensorFlow.

Source: drive.google.com/file/d/1qpGnsizlYr_a5HEiBye8HQRpNwvO43Dd/view?usp=sharing

At the time of writing, the zoo contains 252 optimized models covering a wide range of machine learning domains.

The provided models have been optimized using various techniques like Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization) and Quantization-aware training.
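To illustrate what integer quantization does, here is a pure-Python sketch of per-tensor affine (asymmetric) int8 quantization: each float $x$ is mapped to $q = \mathrm{clamp}(\mathrm{round}(x / s) + z, -128, 127)$ with a scale $s$ and zero point $z$, and recovered approximately as $(q - z)\,s$. This is the general scheme only, not the exact implementation behind the PINTO models; TensorFlow Lite, for example, quantizes weights symmetrically per channel.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # signed int8 range


def quantize_params(values: Sequence[float]) -> Tuple[float, int]:
    """Pick a scale and zero point so that [min, max] (widened to include 0.0) maps onto int8."""
    lo = min(min(values), 0.0)  # including 0.0 makes zero exactly representable
    hi = max(max(values), 0.0)
    if hi == lo:  # every value is zero
        return 1.0, 0
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = int(round(QMIN - lo / scale))
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """q = clamp(round(x / scale) + zero_point, QMIN, QMAX)."""
    return [max(QMIN, min(QMAX, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats: x is roughly (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]
```

Each value now takes one byte instead of four, and the round-trip error per value is on the order of the scale. Keeping zero exactly representable matters because padding and ReLU outputs produce many exact zeros.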

Source: github.com/PINTO0309/PINTO_model_zoo

Models are available for many platforms, including TensorFlow Lite, OpenVINO, CoreML, TensorFlow.js, TF-TRT, MediaPipe, and ONNX. The PINTO Model Zoo is the go-to resource if you want optimized versions of popular ML models.

10. ONNX Model Zoo

Source: github.com/onnx/models/blob/master/resource/images/ONNX_logo_main.png

The ONNX Model Zoo is a collection of pre-trained, state-of-the-art models in the ONNX format, contributed by community members.

Open Neural Network Exchange (ONNX) is an open standard format for representing machine learning models. It offers interoperability, letting you use your preferred framework with your chosen inference engine. You can think of it as a common language that lets all the popular ML frameworks talk to each other.

The zoo contains models for many tasks across different domains:

Vision

- Image Classification

- Object Detection & Image Segmentation

- Body, Face & Gesture Analysis

- Image Manipulation

Language

- Machine Comprehension

- Machine Translation

- Language Modelling

Other

- Visual Question Answering & Dialog

- Speech & Audio Processing

- Other interesting models


Accompanying each model are Jupyter notebooks for model training and running inference with the trained model. The notebooks are written in Python and include links to the training dataset and references to the original paper that describes the model architecture.
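Outside the notebooks, running one of these models can be as short as the sketch below, which uses the `onnxruntime` package. It assumes an image classifier with a single input and whose first output has shape (1, num_classes) and contains logits, and an input array already preprocessed the way the model's README describes. If your model already outputs probabilities, skip the softmax.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], k: int = 5) -> List[Tuple[int, float]]:
    """Return the k most probable (class_index, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]


def classify_onnx(model_path: str, input_array, k: int = 5) -> List[Tuple[int, float]]:
    """Run an ONNX classifier on CPU; `input_array` must match the model's input shape and dtype."""
    import onnxruntime as ort  # lazy import so the helpers above work without it

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name
    outputs = session.run(None, {input_name: input_array})  # None = return all outputs
    logits = outputs[0][0]  # first output, first item in the batch
    return top_k([float(x) for x in logits], k)
```

Because the model file is framework-neutral, the same few lines work whether the model was originally trained in PyTorch, TensorFlow, or another framework.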

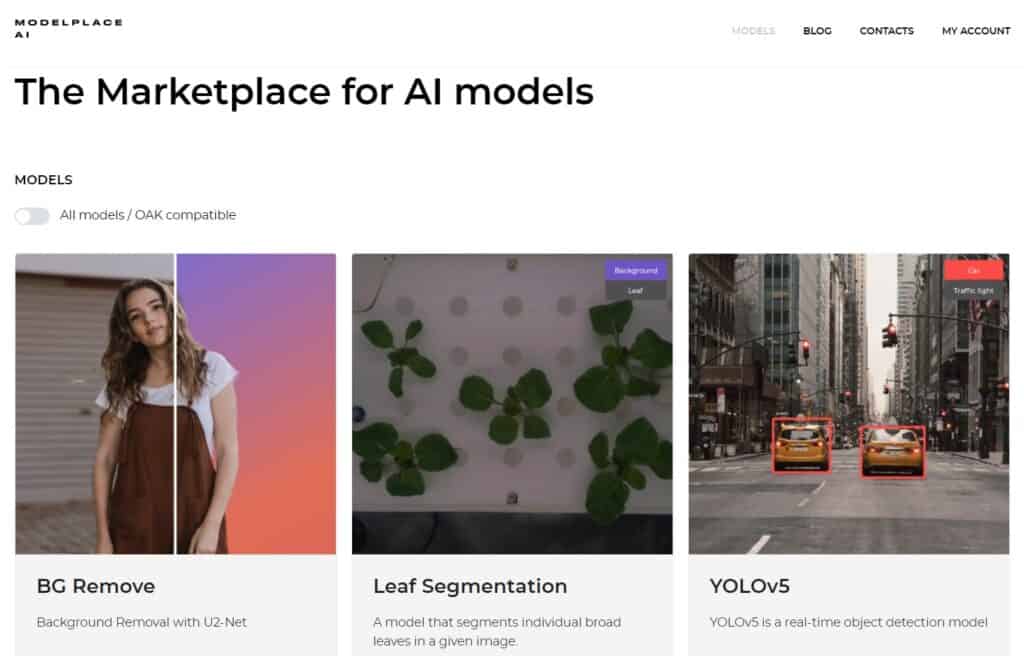

11. BONUS: Modelplace.AI

Modelplace.AI is a marketplace for machine learning models and a platform for the community to share their custom-trained models. It has a growing collection of models for various Computer Vision tasks, be it Classification, Object Detection, Pose Estimation, Segmentation, or Text Detection.

Source: modelplace.ai/models

11.1 Web Interface for trying models without downloading

One significant factor that sets Modelplace.AI apart from the other model repositories is that you can use its web interface to try out a model with your own images. You can also compare models that perform similar tasks against one another on standard benchmarks.

Source: learnopencv.com/wp-content/uploads/2021/10/demo-1.gif

11.2 Cloud API

Modelplace.AI provides a convenient Cloud API you can use in your desktop, mobile, or edge application.

11.3 Import model as a Python wheel

Alternatively, you can choose to download the optimized version of that model depending on the platform you want to deploy it on. For the Python environment, they package the models as a Python wheel file so you can get the model up and running swiftly with only a few lines of code.

To know more about Modelplace.AI, check out our blog post, where we detail its features and how you can use it.

Conclusion

Whenever you start a machine learning application, use these resources to check whether a similar model already exists that you can use or build upon. Starting from an existing model instead of training your own from scratch can speed up both development and deployment.
