Batch Normalization

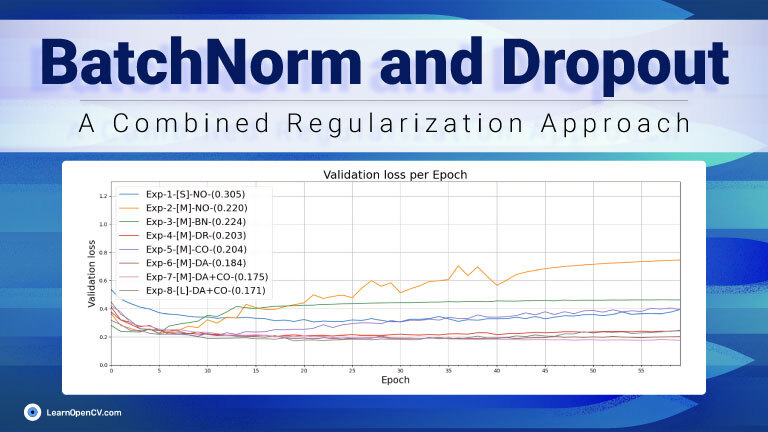

In deep learning, Batch Normalization (BatchNorm) and Dropout are two powerful regularization techniques used to improve model performance, prevent overfitting, and speed up convergence. While both have their individual

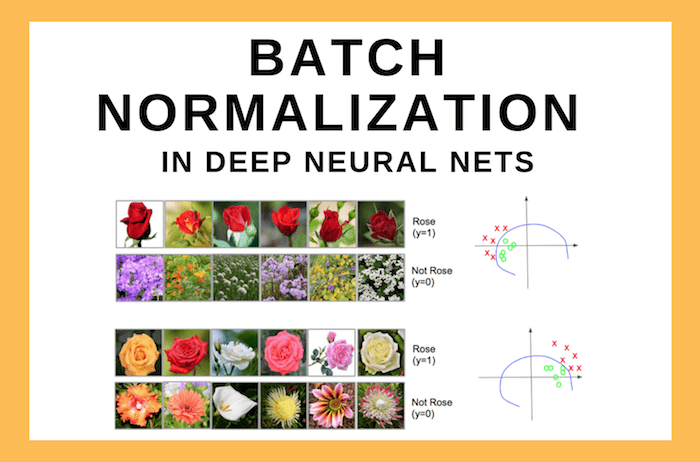

In this post, we will learn what Batch Normalization is, why it is needed, how it works, and how to implement it using Keras. Batch Normalization was first introduced by