Tracking is one of the most important components in object detection when it comes to real-world applications. Applications like real-time surveillance and autonomous driving systems cannot reach their full potential without tracking. Be it persons or vehicles, object tracking plays a major role. However, testing many detection models and re-identification models is cumbersome. To address this, we are going to streamline the process of real-time tracking using Deep SORT with Torchvision detectors.

In this article, we will create a small codebase that will allow us to test any object detection model from Torchvision. We will couple that with a real-time Deep SORT library that will give us access to a range of Re-ID models. In addition, we will analyze the FPS and the tracking results, both qualitatively and quantitatively, for different combinations of detectors and Re-ID models.

What Are Re-ID Models and Why Do We Need Them?

Before we dive into the coding part, let’s discuss re-identification models (Re-ID for short).

Re-ID models help us track the same object in different frames with the same ID. In most cases, Re-ID models are deep learning based and are very good at extracting features from images and frames. Re-ID models are pretrained on re-identification datasets. During the training process, they learn how the same person may look from different angles and in different lighting conditions. After training, we can use the weights for real-time re-identification of a person in video frames.

But what if we want to track something other than persons?

Although it is recommended to use a person re-identification model when tracking persons, we can use any large pretrained model for other objects. Suppose we want to track and re-identify cars in video frames, but we do not have a Re-ID model trained on cars. In that case, we can use an ImageNet pretrained model. Since the model is already trained on millions of images, it will be able to extract useful features of cars as well.

Similarly, we can also use foundation image models (e.g. CLIP ResNet50) for Re-ID. We are going to use such models in this article.

When using a Re-ID model with an object detection model, the process becomes two-stage. Although single-stage trackers, which do detection, tracking, and re-identification, are becoming common, we still have use cases for separate Re-ID models.

Why Do We Need Re-ID Models?

Re-ID models have a lot of advantages, especially in multi-camera settings where security and accuracy are top priorities.

Multi-camera setting: When using a multi-camera setting for tracking persons, a separate Re-ID model can become very helpful. It can recognize the movement and features of the same person across cameras. Eventually, we can assign the same ID to the same person even if they appear on different cameras.

If we take a look at the above example, we can see that the same person is assigned the same ID across cameras. Even though the model takes a few frames to capture the features of the persons and assign the IDs, it eventually does so.

Association across occlusions: There can be occlusions when persons or vehicles move about in the video frames. If a person gets occluded behind an object for a few frames and emerges again, then a Re-ID model can associate the same ID as before occlusion.

Across lighting conditions: Re-ID models also help when there is a change in lighting conditions. If the detector fails in low light conditions and is able to detect the person again after a few frames, then the Re-ID model can associate with the previous ID.
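The re-association described in the points above relies on comparing appearance embeddings. As a minimal sketch (not the deep-sort-realtime implementation), the snippet below assigns a new detection to the stored track whose embedding has the smallest cosine distance. The function names, the toy 3-dimensional vectors, and the 0.2 distance threshold are illustrative assumptions. Deep SORT also combines appearance with motion cues from a Kalman filter, which is left out here.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def match_track(embedding, gallery, max_distance=0.2):
    """Return the ID of the closest stored track, or None if none is close enough."""
    best_id, best_dist = None, max_distance
    for track_id, stored in gallery.items():
        dist = cosine_distance(embedding, stored)
        if dist < best_dist:
            best_id, best_dist = track_id, dist
    return best_id


# Hypothetical embeddings stored for two tracks before an occlusion.
gallery = {1: [0.9, 0.1, 0.0], 2: [0.0, 0.2, 0.9]}

reappeared = [0.8, 0.2, 0.1]  # Looks similar to track 1.
stranger = [0.1, 0.9, 0.1]    # Looks like neither stored track.

print(match_track(reappeared, gallery))
print(match_track(stranger, gallery))
```

A detection that matches no stored embedding within the threshold would start a new track with a new ID.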

Real Time Deep SORT Setup

To use different detection models from Torchvision along with Deep SORT, we need to install a few libraries.

The most important of them all is the deep-sort-realtime library. It gives us access to the Deep SORT algorithm through API calls. Along with that, it has the option to choose from several Re-ID models which have been pretrained on huge foundation datasets like ImageNet. These models include a lot of OpenAI CLIP image models and torchreid models as well.

Before carrying out the following steps, please ensure that you have installed PyTorch along with CUDA.

To install the deep-sort-realtime library, execute the following command in the environment of choice:

pip install deep-sort-realtime

This gives us access to the Deep SORT algorithm and one built-in mobilenet Re-ID embedder.

But if we want to access the OpenAI CLIP Re-ID and torchreid embedders, then we need to perform additional steps.

To use the CLIP embedders, we will install the OpenAI CLIP library using the following command:

pip install git+https://github.com/openai/CLIP.git

This allows us to use several CLIP ResNet and Vision Transformer models as embedders.

The final steps involve installing the torchreid library in case we want to use its embedders as Re-ID models. However, please note that the library provides Re-ID models specifically trained for person re-identification. Skip this step if you don’t plan to use the torchreid embedder.

First, we need to clone the repository and make it the current working directory. You can clone it in a directory other than the project directory.

git clone https://github.com/KaiyangZhou/deep-person-reid.git

cd deep-person-reid/

Next, check the requirements.txt file and install the dependencies as per your need. Once that is done, install the library in development mode.

python setup.py develop

Having completed all the installation steps, we can move on to the coding part.

Code for Real Time Deep SORT using Torchvision

Here we will handle the preprocessing, detection, and post-processing of the tracking outputs.

The deep-sort-realtime library will handle the tracking details internally. We aim to create a modular codebase for the rapid prototyping of several detection and Re-ID models.

The two main Python files that we need are deep_sort_tracking.py and utils.py. A third file, coco_classes.py, contains a list of all the COCO dataset classes. Its contents are as follows:

COCO_91_CLASSES = [
    '__background__',
    'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',
    'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A', 'stop sign',
    'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
    'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack', 'umbrella', 'N/A', 'N/A',
    'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',
    'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket',
    'bottle', 'N/A', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl',
    'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',
    'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table',
    'N/A', 'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
    'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A', 'book',
    'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush'
]

This will be used for mapping the class indices and the class names.
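As a small illustration of that mapping, the sketch below looks up class indices from class names. It uses only the first nine entries of the list above, and the class_indices helper is a hypothetical name that is not part of the project files.

```python
# First nine entries of COCO_91_CLASSES, copied from the list above.
COCO_EXCERPT = [
    '__background__',
    'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',
    'train', 'truck'
]


def class_indices(names, classes=COCO_EXCERPT):
    """Return the index of each class name in the class list."""
    return [classes.index(name) for name in names]


print(class_indices(['person', 'car']))
print(class_indices(['car', 'truck']))
```

The returned indices are the values that the detector outputs as labels for those classes.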

Deep SORT Tracking Code

The deep_sort_tracking.py is the executable script that we will run from the command line.

It handles the detection model, the Re-ID models, and the classes we want to track.

The code will clarify this further. Let’s begin with the import statements and the argument parsers.

import torch
import torchvision
import cv2
import os
import time
import argparse
import numpy as np

from torchvision.transforms import ToTensor
from deep_sort_realtime.deepsort_tracker import DeepSort
from utils import convert_detections, annotate
from coco_classes import COCO_91_CLASSES

parser = argparse.ArgumentParser()
parser.add_argument(
    '--input',
    default='input/mvmhat_1_1.mp4',
    help='path to input video',
)
parser.add_argument(
    '--imgsz',
    default=None,
    help='image resize, 640 will resize images to 640x640',
    type=int
)
parser.add_argument(
    '--model',
    default='fasterrcnn_resnet50_fpn_v2',
    help='model name',
    choices=[
        'fasterrcnn_resnet50_fpn_v2',
        'fasterrcnn_resnet50_fpn',
        'fasterrcnn_mobilenet_v3_large_fpn',
        'fasterrcnn_mobilenet_v3_large_320_fpn',
        'fcos_resnet50_fpn',
        'ssd300_vgg16',
        'ssdlite320_mobilenet_v3_large',
        'retinanet_resnet50_fpn',
        'retinanet_resnet50_fpn_v2'
    ]
)
parser.add_argument(
    '--threshold',
    default=0.8,
    help='score threshold to filter out detections',
    type=float
)
parser.add_argument(
    '--embedder',
    default='mobilenet',
    help='type of feature extractor to use',
    choices=[
        "mobilenet",
        "torchreid",
        "clip_RN50",
        "clip_RN101",
        "clip_RN50x4",
        "clip_RN50x16",
        "clip_ViT-B/32",
        "clip_ViT-B/16"
    ]
)
parser.add_argument(
    '--show',
    action='store_true',
    help='visualize results in real-time on screen'
)
parser.add_argument(
    '--cls',
    nargs='+',
    default=[1],
    help='which classes to track',
    type=int
)
args = parser.parse_args()

We import the DeepSort tracker class from the deep_sort_realtime package that we will later use to initialize the tracker. We also import the convert_detections and annotate functions from the utils module. For now, we need not go into the details of these two functions. Let’s discuss them while writing the code for the utils.py file.

Here is a description of all the command line arguments that we defined above:

--input: The path to the input video file.

--imgsz: This accepts a single integer indicating the square shape to which the image should be resized.

--model: This is the Torchvision model name. We can choose from any of the object detection models listed in the choices above.

--threshold: The score threshold below which all the detections will be discarded.

--embedder: The Re-ID embedder model that we want to use.

--show: A boolean argument indicating whether we want to visualize the output in real-time or not.

--cls: This accepts the class indices that we want to track. By default, it tracks only persons. If we want to track persons and bicycles, we should provide --cls 1 2.
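To see how a few of these flags behave, here is a minimal standalone sketch that rebuilds only the --cls, --threshold, and --show arguments and parses a hand-written argument list instead of the real command line. The trimmed-down parser is for illustration only and is not a replacement for the script's parser.

```python
import argparse

parser = argparse.ArgumentParser()
# nargs='+' collects one or more values into a list of ints.
parser.add_argument('--cls', nargs='+', default=[1], type=int)
parser.add_argument('--threshold', default=0.8, type=float)
# store_true makes the flag False unless it is passed.
parser.add_argument('--show', action='store_true')

# No flags given: the defaults apply.
defaults = parser.parse_args([])
# Equivalent to passing: --cls 1 2 --threshold 0.7 --show
custom = parser.parse_args(['--cls', '1', '2', '--threshold', '0.7', '--show'])

print(defaults.cls, defaults.threshold, defaults.show)
print(custom.cls, custom.threshold, custom.show)
```

Because --cls uses nargs='+', the class indices are separated by spaces on the command line, not by commas.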

Next, we will set the seed, define the output directory and print the information regarding the experiment.

np.random.seed(42)

OUT_DIR = 'outputs'

os.makedirs(OUT_DIR, exist_ok=True)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

COLORS = np.random.randint(0, 255, size=(len(COCO_91_CLASSES), 3))

print(f"Tracking: {[COCO_91_CLASSES[idx] for idx in args.cls]}")

print(f"Detector: {args.model}")

print(f"Re-ID embedder: {args.embedder}")

Going further, we need to load the detection model, Re-ID model, and the video file.

# Load model.
model = getattr(torchvision.models.detection, args.model)(weights='DEFAULT')
# Set model to evaluation mode.
model.eval().to(device)

# Initialize a Deep SORT tracker object.
tracker = DeepSort(max_age=30, embedder=args.embedder)

VIDEO_PATH = args.input
cap = cv2.VideoCapture(VIDEO_PATH)
frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
frame_fps = int(cap.get(cv2.CAP_PROP_FPS))
frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))

# File name of the input video without its directory and extension.
save_name = os.path.splitext(os.path.basename(VIDEO_PATH))[0]
# Embedder names such as 'clip_ViT-B/32' contain a slash, which would be
# treated as a directory separator in the output path, so replace it.
embedder_name = args.embedder.replace('/', '_')
# Define codec and create VideoWriter object.
out = cv2.VideoWriter(
    f"{OUT_DIR}/{save_name}_{args.model}_{embedder_name}.mp4",
    cv2.VideoWriter_fourcc(*'mp4v'), frame_fps,
    (frame_width, frame_height)
)

frame_count = 0  # To count total frames.
total_fps = 0  # To get the final frames per second.

As you may see, we are also defining the video information that we use to define the output file’s name. The frame_count and total_fps will help us keep track of the number of frames iterated over and the FPS at which inference is happening.
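One detail worth checking when building the output file name: embedder names such as clip_ViT-B/32 contain a forward slash, which a file system treats as a directory separator. The following sketch shows one way to build a safe output path. The build_output_path helper and its underscore replacement are assumptions made for illustration.

```python
import os


def build_output_path(video_path, model, embedder, out_dir='outputs'):
    """Build an output video path from the input name, detector, and embedder."""
    # Strip the directory and the extension from the input video path.
    stem = os.path.splitext(os.path.basename(video_path))[0]
    # Replace the slash so that the name stays a single file name.
    safe_embedder = embedder.replace('/', '_')
    return os.path.join(out_dir, f"{stem}_{model}_{safe_embedder}.mp4")


path = build_output_path('input/mvmhat_1_1.mp4', 'fcos_resnet50_fpn', 'clip_ViT-B/32')
print(path)
```

With this, every combination of detector and embedder ends up as a distinct file directly inside the output directory.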

The final part of this code file includes a large while block for iterating over the video frames and carrying out the detection & tracking inference.

while cap.isOpened():
    # Read a frame
    ret, frame = cap.read()
    if ret:
        if args.imgsz is not None:
            resized_frame = cv2.resize(
                cv2.cvtColor(frame, cv2.COLOR_BGR2RGB),
                (args.imgsz, args.imgsz)
            )
        else:
            resized_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        # Convert frame to tensor and send it to device (cpu or cuda).
        frame_tensor = ToTensor()(resized_frame).to(device)

        start_time = time.time()
        # Feed frame to model and get detections.
        det_start_time = time.time()
        with torch.no_grad():
            detections = model([frame_tensor])[0]
        det_end_time = time.time()

        det_fps = 1 / (det_end_time - det_start_time)

        # Convert detections to Deep SORT format.
        detections = convert_detections(detections, args.threshold, args.cls)

        # Update tracker with detections.
        track_start_time = time.time()
        tracks = tracker.update_tracks(detections, frame=frame)
        track_end_time = time.time()
        track_fps = 1 / (track_end_time - track_start_time)

        end_time = time.time()
        fps = 1 / (end_time - start_time)
        # Add `fps` to `total_fps`.
        total_fps += fps
        # Increment frame count.
        frame_count += 1

        print(f"Frame {frame_count}/{frames}",
              f"Detection FPS: {det_fps:.1f},",
              f"Tracking FPS: {track_fps:.1f}, Total FPS: {fps:.1f}")
        # Draw bounding boxes and labels on frame.
        if len(tracks) > 0:
            frame = annotate(
                tracks,
                frame,
                resized_frame,
                frame_width,
                frame_height,
                COLORS
            )
        cv2.putText(
            frame,
            f"FPS: {fps:.1f}",
            (int(20), int(40)),
            fontFace=cv2.FONT_HERSHEY_SIMPLEX,
            fontScale=1,
            color=(0, 0, 255),
            thickness=2,
            lineType=cv2.LINE_AA
        )
        # Save the annotated frame to the output video.
        out.write(frame)
        if args.show:
            # Display the output frame.
            cv2.imshow("Output", frame)
            # Press q to quit.
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    else:
        break

# Release resources.
cap.release()
out.release()
cv2.destroyAllWindows()

After preprocessing each frame, we pass the tensor through the detection model to obtain the detections. The detections need to be converted to the format that the tracker expects before we pass them on. We call the convert_detections() function for this. Along with the detections, the detection threshold and class indices are also passed to it.

After we get the detections in the proper format, we call the update_tracks() method of the tracker object.

In the end, we annotate the frame with the bounding boxes, the track IDs, and the FPS, write it to the output video, and optionally show it on the screen. Along with that, we also print the detection FPS, the tracking FPS, and the total FPS on the terminal.
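The script accumulates the per-frame FPS in total_fps, so dividing it by frame_count gives the mean of the per-frame values. As a rough sketch with made-up frame times, the snippet below compares that number with the overall throughput (frames divided by total time). The summarize_fps helper is a hypothetical name, and the two figures can differ noticeably when a few frames are slow.

```python
def summarize_fps(frame_times):
    """Return (mean of per-frame FPS, overall throughput) for a list of frame times in seconds."""
    per_frame_fps = [1.0 / t for t in frame_times]
    mean_of_fps = sum(per_frame_fps) / len(per_frame_fps)
    throughput = len(frame_times) / sum(frame_times)
    return mean_of_fps, throughput


# Two fast frames and one slow frame (made-up values, in seconds).
mean_of_fps, throughput = summarize_fps([0.1, 0.1, 0.4])
print(f"Mean of per-frame FPS: {mean_of_fps:.2f}")
print(f"Overall throughput: {throughput:.2f}")
```

For these values the mean of the per-frame FPS is about 7.5, while the throughput is about 5 frames per second, so it helps to state which of the two is being reported.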

This is all we need for the main script. But a few important things happen in the utils.py file that we will analyze next.

Utility Scripts for Detection and Annotation

There are two functions in the utils.py file. Let’s start with the imports and the convert_detections() function.

import cv2
import numpy as np

# Define a function to convert detections to Deep SORT format.
def convert_detections(detections, threshold, classes):
    # Get the bounding boxes, labels and scores from the detections dictionary.
    boxes = detections["boxes"].cpu().numpy()
    labels = detections["labels"].cpu().numpy()
    scores = detections["scores"].cpu().numpy()
    # Keep only the classes that we want to track.
    lbl_mask = np.isin(labels, classes)
    scores = scores[lbl_mask]
    # Filter out low confidence scores among the remaining detections.
    mask = scores > threshold
    boxes = boxes[lbl_mask][mask]
    scores = scores[mask]
    labels = labels[lbl_mask][mask]

    # Convert boxes from [x1, y1, x2, y2] to [x, y, w, h] format.
    final_boxes = []
    for i, box in enumerate(boxes):
        # Append ([x, y, w, h], score, label_string).
        final_boxes.append(
            (
                [box[0], box[1], box[2] - box[0], box[3] - box[1]],
                scores[i],
                str(labels[i])
            )
        )

    return final_boxes

The convert_detections() function accepts the output from the model and returns only the boxes, scores, and labels of the classes that we want to track. For each object, the tracker library expects a tuple containing the bounding box in x, y, w, h format, the score, and the label index as a string. We store these tuples in the final_boxes list and return it at the end.
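To make the conversion concrete, here is a simplified sketch that applies the same filtering and box conversion to plain Python lists instead of Torchvision tensors. The to_deepsort_format name and the sample values are made up for illustration.

```python
def to_deepsort_format(boxes, labels, scores, threshold, classes):
    """Filter detections and convert [x1, y1, x2, y2] boxes to ([x, y, w, h], score, label)."""
    results = []
    for (x1, y1, x2, y2), label, score in zip(boxes, labels, scores):
        # Keep only the wanted classes with a score above the threshold.
        if label in classes and score > threshold:
            results.append(([x1, y1, x2 - x1, y2 - y1], score, str(label)))
    return results


# Three made-up detections: a confident person, a car, and a low-score person.
boxes = [[10, 20, 110, 220], [50, 60, 90, 100], [0, 0, 30, 40]]
labels = [1, 3, 1]
scores = [0.95, 0.99, 0.40]

converted = to_deepsort_format(boxes, labels, scores, threshold=0.8, classes=[1])
print(converted)
```

Only the first detection survives: the car is not in the requested classes, and the second person falls below the score threshold.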

The annotate() function accepts the tracker output and the frame information.

# Function for bounding box and ID annotation.
def annotate(tracks, frame, resized_frame, frame_width, frame_height, colors):
    for track in tracks:
        if not track.is_confirmed():
            continue
        track_id = track.track_id
        track_class = track.det_class
        x1, y1, x2, y2 = track.to_ltrb()
        p1 = (int(x1/resized_frame.shape[1]*frame_width), int(y1/resized_frame.shape[0]*frame_height))
        p2 = (int(x2/resized_frame.shape[1]*frame_width), int(y2/resized_frame.shape[0]*frame_height))
        # Annotate boxes.
        color = colors[int(track_class)]
        cv2.rectangle(
            frame,
            p1,
            p2,
            color=(int(color[0]), int(color[1]), int(color[2])),
            thickness=2
        )
        # Annotate ID.
        cv2.putText(
            frame, f"ID: {track_id}",
            (p1[0], p1[1] - 10),
            cv2.FONT_HERSHEY_SIMPLEX,
            0.5,
            (0, 255, 0),
            2,
            lineType=cv2.LINE_AA
        )
    return frame

It extracts the object tracking id using the track_id attribute and the class label using the det_class attribute. Then we annotate the frame with the bounding box and ID and return it.
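The p1 and p2 computations rescale the box corners from the resized frame back to the original frame size. The sketch below isolates that step with example numbers. The scale_point helper is a hypothetical name, and the shape tuple follows the (height, width, channels) layout of an OpenCV image.

```python
def scale_point(x, y, resized_shape, frame_width, frame_height):
    """Map a point from resized-frame coordinates to original-frame coordinates."""
    resized_h, resized_w = resized_shape[:2]
    return int(x / resized_w * frame_width), int(y / resized_h * frame_height)


# A point in a 640x640 resized frame, mapped back to a 1920x1080 video frame.
p1 = scale_point(320, 160, (640, 640, 3), 1920, 1080)
print(p1)
```

Here the point at half the width and a quarter of the height of the resized frame maps to (960, 270) in the original frame. When no resizing is applied, the resized shape equals the frame size and the point is unchanged.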

This is all we need for the coding part. In the next section, we will conduct several Deep SORT tracking experiments using Torchvision models and analyze the results.

Deep SORT Tracking using Torchvision Detection Model – Experiments

Note: All inference experiments were run on a laptop with GTX 1060 GPU, 8th generation i7 CPU, and 16 GB of RAM.

Let’s run the first Deep SORT inference using the default Torchvision detection model and Re-ID embedder.

python deep_sort_tracking.py --input input/video_traffic_1.mp4 --show

The above command will run the script with the Faster RCNN ResNet50 FPN V2 model along with MobileNet Re-ID embedding model. Also, it will only track persons by default.

Here is the video result.

The results are good even with an average of 2.5 FPS. The model can correctly track the persons. Notably, the Faster RCNN model is robust enough to detect even the person inside the car in the final few frames.

But can we make the inference even faster? Yes, we can use the Faster RCNN MobileNetV3 model, which is a lightweight detector. We can couple that with the MobileNet Re-ID model to get good results at a higher speed.

python deep_sort_tracking.py --input input/video_traffic_1.mp4 --model fasterrcnn_mobilenet_v3_large_fpn --embedder mobilenet --cls 1 3 --show

This time we provide --cls 1 3, which corresponds to the class indices of person and car in the COCO dataset.

The Deep SORT tracking runs at almost 8 FPS. This is mostly because of the Faster RCNN MobileNetV3 model. The results are also good. All the cars are getting detected and there is less switching between the IDs.

Next, we will use the OpenAI CLIP ResNet50 embedder as the Re-ID model and the Torchvision RetinaNet detector. Here we use a much more dense traffic scene where we will track cars and trucks.

python deep_sort_tracking.py --input input/video_traffic_2.mp4 --model retinanet_resnet50_fpn_v2 --embedder clip_RN50 --cls 3 8 --show --threshold 0.7

The results are not bad. The detector is able to detect almost all the cars and trucks, and the Deep SORT tracker is tracking almost all of them. However, there are a few ID switches as well. One interesting thing to note is that the detector sometimes detects faraway trucks as cars. The class gets corrected as the truck comes nearer, but the ID does not switch. This shows another benefit of using a Re-ID model.

For the final experiment, we will use the torchreid library in a very challenging setting. By default, the torchreid embedder uses osnet_ain_x1_0, which is a pretrained person Re-ID model. Along with that, we will use the RetinaNet detection model.

python deep_sort_tracking.py --input input/mvmhat_1_1.mp4 --model retinanet_resnet50_fpn_v2 --embedder torchreid --cls 1 --show --threshold 0.7

Although the FPS is a bit lower because of the RetinaNet model, the results are very good. Despite multiple persons crossing each other, we only see two ID switches.

Conclusion

In this article, we created a simple codebase to combine different Torchvision detection models with Re-ID models to carry out Deep SORT tracking. The results were not perfect, but trying out different combinations of Re-ID embedders and object detectors can prove useful. Such a solution can be taken further for tracking traffic in real-time using lightweight detectors which are trained only on vehicles.

Let us know in the comment section what application you are trying to build using this.

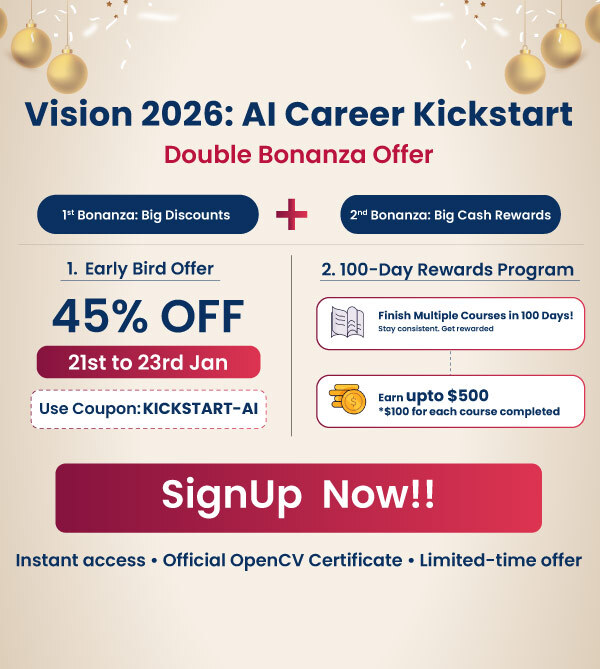

100K+ Learners

Join Free OpenCV Bootcamp3 Hours of Learning