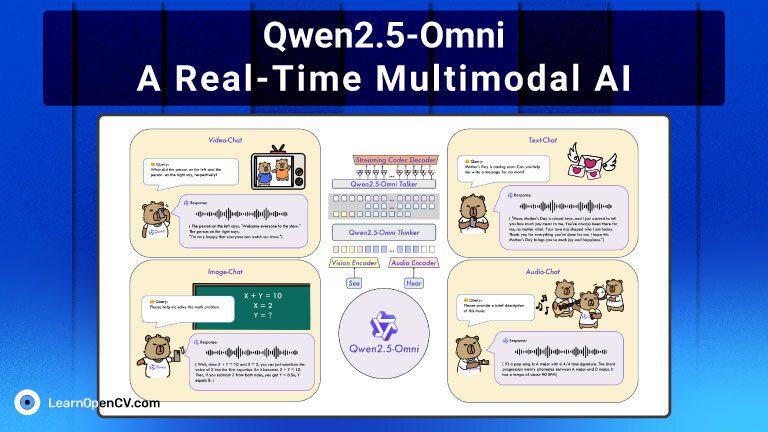

Qwen2.5-Omni is an end-to-end multimodal foundation model developed by the Qwen team at Alibaba. It is designed to perceive text, images, audio, and video and to respond with both text and speech, all in a unified and streaming manner. With a series of innovations across its architecture, training pipeline, and real-time capabilities, Qwen2.5-Omni stands out as one of the most comprehensive multimodal models to date.

This blog post presents a detailed walkthrough of the model based on the official technical report, structured section-by-section to help you grasp how this next-generation AI assistant works, its innovations, and its real-world capabilities.

- Qwen2.5-Omni

- Qwen2.5-Omni’s Architecture Overview

- Unified Qwen2.5-Omni’s Multimodal Perception

- Qwen2.5-Omni’s Output Generation

- Qwen2.5-Omni’s Designs for Streaming Outputs

- Pre-training Qwen2.5-Omni

- Post-training Qwen2.5-Omni

- Conclusion

- References

Qwen2.5-Omni

Qwen2.5-Omni is a unified multimodal model built to perceive plain text, audio signals, static images, and videos, and to generate responses in real time. It is designed with a streaming-first mindset, making it well suited to real-world applications like AI assistants, voice interfaces, and smart agents.

Unlike modular approaches that stitch together separate models, Qwen2.5-Omni is trained end-to-end. This means the model natively understands how to coordinate different input modalities and generate both text and speech as output — synchronously and coherently.

Qwen2.5-Omni’s Architecture Overview

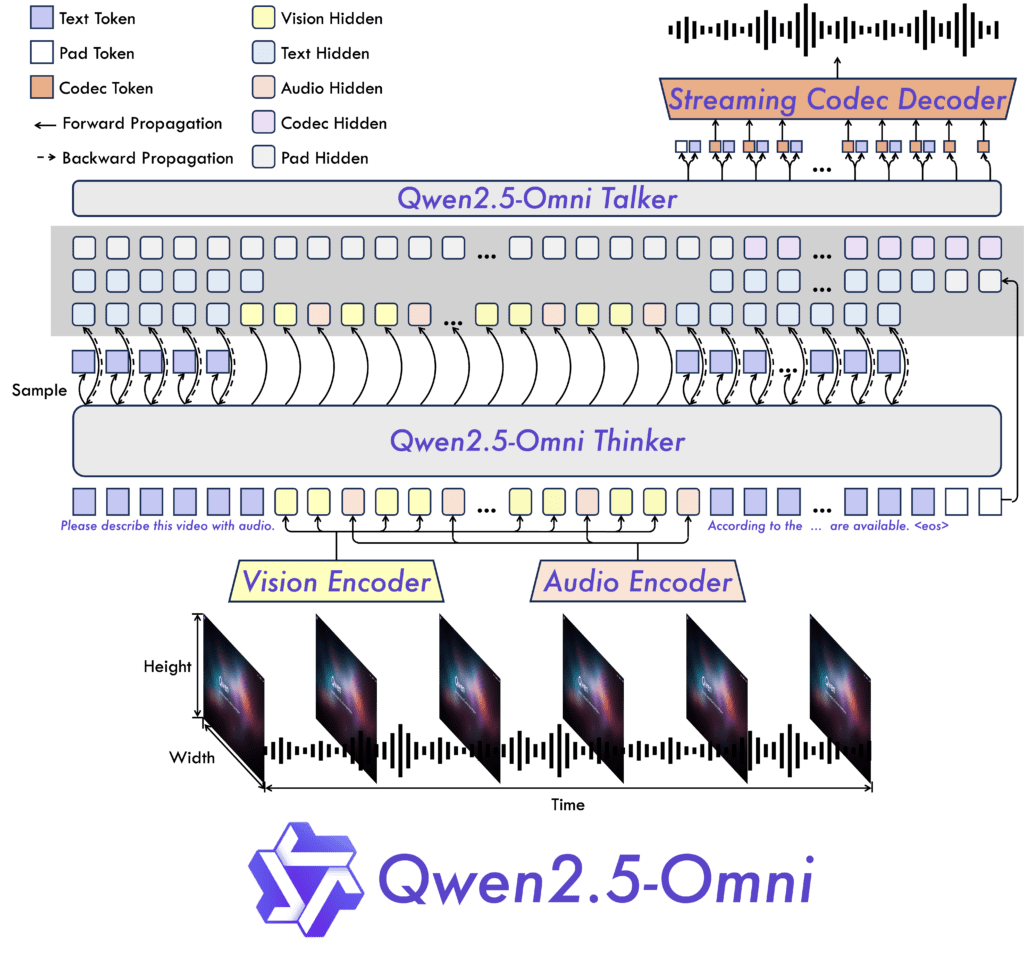

At the core of Qwen2.5-Omni lies the Thinker-Talker framework, but the overall architecture consists of four major components:

- Multimodal encoders: audio and vision encoders for perception

- Thinker: a large language model (LLM) that handles reasoning, comprehension, and text generation

- Talker: a streaming speech generation module that converts Thinker's semantic representations and text tokens into realistic speech

- Streaming mechanisms: designs that minimize latency and memory usage in real-time scenarios

This design mimics human behavior. The brain (Thinker) decides what to say, and the vocal system (Talker) expresses it. Separating responsibilities allows both parts to specialize.

Unified Qwen2.5-Omni’s Multimodal Perception

Qwen2.5-Omni is capable of consuming and interpreting the following modalities:

- Text: Tokenized with a byte-level BPE vocabulary (151K tokens)

- Audio: Resampled to 16 kHz and converted into a 128-channel mel-spectrogram using a 25 ms window and 10 ms hop, then passed through an encoder derived from Whisper-large-v3. Each output frame corresponds to ~40 ms of audio.

- Images and videos: Processed by a Vision Transformer (ViT)-based encoder (~675M parameters) trained on both image and video data. Videos are sampled at a dynamic frame rate to align with audio timing, and static images are treated as two identical frames for consistency.

- Mixed: Inputs such as video+audio or text+image are jointly processed
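The audio numbers above fit together with a little arithmetic. The sketch below uses only the values stated in this section (16 kHz, 25 ms window, 10 ms hop, ~40 ms per encoder frame) and ignores padding and edge frames; the helper name `audio_frame_counts` is hypothetical and not part of any Qwen API.

```python
# Sketch of the audio front-end arithmetic described above (not Qwen's code).
SAMPLE_RATE_HZ = 16_000   # audio is resampled to 16 kHz
WINDOW_MS = 25            # mel-spectrogram analysis window
HOP_MS = 10               # mel-spectrogram hop
ENCODER_FRAME_MS = 40     # each encoder output frame covers ~40 ms

def audio_frame_counts(duration_s: float) -> dict:
    """Approximate sample, mel-frame and encoder-frame counts (padding ignored)."""
    duration_ms = round(duration_s * 1000)
    return {
        "samples": round(duration_s * SAMPLE_RATE_HZ),
        "samples_per_window": SAMPLE_RATE_HZ * WINDOW_MS // 1000,
        "samples_per_hop": SAMPLE_RATE_HZ * HOP_MS // 1000,
        "mel_frames": duration_ms // HOP_MS,               # one mel frame per 10 ms hop
        "encoder_frames": duration_ms // ENCODER_FRAME_MS,  # one frame per ~40 ms
        "downsampling": ENCODER_FRAME_MS // HOP_MS,         # mel frames per encoder frame
    }

# For a 2-second clip: 200 mel frames and 50 encoder frames.
print(audio_frame_counts(2.0))
```

In other words, the encoder emits roughly one frame for every four mel frames, and that 40 ms frame is the time unit TMRoPE builds on.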

Temporal Alignment for Videos using TMRoPE in Qwen2.5-Omni

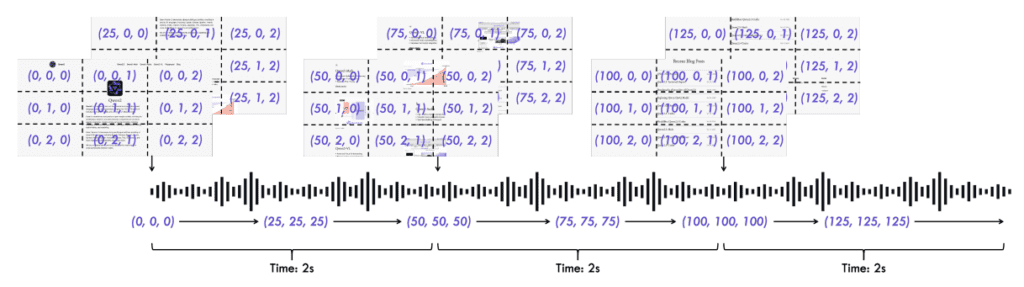

To synchronize time-sensitive audio and video inputs, Qwen2.5-Omni introduces TMRoPE (Time-aligned Multimodal Rotary Position Embedding) built upon a previous idea: M-RoPE (Multimodal RoPE).

- The positional embedding is decomposed into three components: temporal, height, and width.

- Text has no spatial or temporal structure, only sequential order, so all three components share the same position ID. This makes TMRoPE equivalent to standard 1D RoPE for text, as in traditional transformers.

- For images, each image is static, so the temporal ID is the same for all of its tokens, while each token gets height and width IDs from its position in the image. This preserves spatial structure.

- For audio, each encoder frame covers 40 ms of sound and gets one temporal ID, so the ID increases by 1 every 40 ms. Audio has no height or width layout; only time matters.

- For video with audio, the video is treated as a sequence of image frames: each frame gets its own temporal ID, and the tokens inside a frame get height and width IDs as for images. Each 40 ms audio frame gets one temporal ID as before. Crucially, audio and video temporal IDs are aligned on a shared 40 ms unit.
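A minimal sketch of how (temporal, height, width) position IDs could be assigned per modality under the rules above. It is not the official implementation: the function names, the `start` offset argument, and flooring frame timestamps onto the 40 ms grid are assumptions made for illustration.

```python
from typing import List, Tuple

PosID = Tuple[int, int, int]  # (temporal, height, width)
UNIT_MS = 40  # one temporal ID per 40 ms

def text_ids(num_tokens: int, start: int) -> List[PosID]:
    # Text: all three components share one ID, which reduces to ordinary 1D RoPE.
    return [(start + i,) * 3 for i in range(num_tokens)]

def image_ids(grid_h: int, grid_w: int, start: int, t: int = 0) -> List[PosID]:
    # Image or single video frame: fixed temporal ID, height/width IDs from the token's grid cell.
    return [(start + t, start + h, start + w) for h in range(grid_h) for w in range(grid_w)]

def audio_ids(num_frames: int, start: int) -> List[PosID]:
    # Audio: one temporal ID per 40 ms encoder frame; no spatial layout.
    return [(start + i,) * 3 for i in range(num_frames)]

def video_ids(frame_times_ms: List[float], grid_h: int, grid_w: int, start: int) -> List[PosID]:
    # Video: each frame's temporal ID comes from its timestamp on the 40 ms grid,
    # so it lines up with audio_ids regardless of the frame rate.
    ids: List[PosID] = []
    for t_ms in frame_times_ms:
        ids.extend(image_ids(grid_h, grid_w, start, t=int(t_ms // UNIT_MS)))
    return ids

print(text_ids(3, start=0))      # [(0, 0, 0), (1, 1, 1), (2, 2, 2)]
print(image_ids(2, 2, start=3))  # [(3, 3, 3), (3, 3, 4), (3, 4, 3), (3, 4, 4)]
print(video_ids([0.0, 80.0], 1, 2, start=10))
# [(10, 10, 10), (10, 10, 11), (12, 10, 10), (12, 10, 11)]
```

The second video frame, shown at 80 ms, gets temporal ID 12, the same temporal ID that `audio_ids(3, start=10)` gives the third 40 ms audio frame, which is the alignment the shared 40 ms unit provides.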

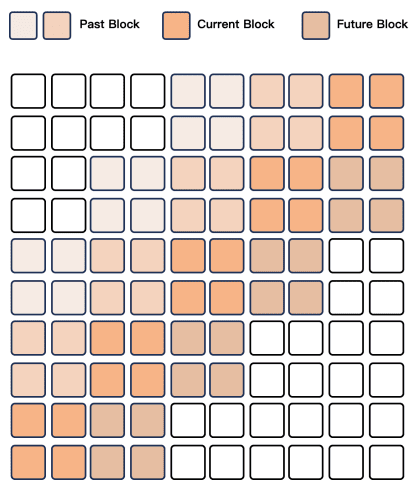

- Tokens from all modalities are merged into one sequence and arranged with a time-interleaving algorithm in 2-second chunks, enabling real-time perception and processing.

Time Interleaving used in Qwen2.5-Omni

Once all tokens (from video, audio, etc.) are embedded and labeled with TMRoPE, they’re arranged in a special order that mixes them based on time. For every 2-second segment, the model puts visual tokens first, followed by audio tokens. This is called time-interleaving.
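A toy version of this ordering, assuming each token carries a timestamp in seconds. Real inputs have many tokens per video frame and per audio segment; here each token is a single hypothetical label, and `time_interleave` is an illustrative name, not an API from the model's code.

```python
from typing import List, Tuple

Token = Tuple[str, float]  # (label, timestamp in seconds)

def time_interleave(video: List[Token], audio: List[Token], chunk_s: float = 2.0) -> List[Token]:
    """Arrange tokens chunk by chunk: a chunk's video tokens first, then its audio tokens."""
    def chunk(tok: Token) -> int:
        return int(tok[1] // chunk_s)

    last_chunk = max((chunk(t) for t in video + audio), default=-1)
    ordered: List[Token] = []
    for c in range(last_chunk + 1):
        ordered.extend(t for t in video if chunk(t) == c)  # visual tokens of chunk c
        ordered.extend(t for t in audio if chunk(t) == c)  # then audio tokens of chunk c
    return ordered

video = [("v0", 0.0), ("v1", 1.0), ("v2", 2.0), ("v3", 3.0)]
audio = [("a0", 0.0), ("a1", 1.0), ("a2", 2.0), ("a3", 3.0)]
print([label for label, _ in time_interleave(video, audio)])
# ['v0', 'v1', 'a0', 'a1', 'v2', 'v3', 'a2', 'a3']
```

Because each 2-second chunk is complete on its own, the model can start processing the first chunk while later ones are still arriving.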

Handling Variable Frame Rates

Real-world videos may have different or non-uniform frame rates (e.g., 24 fps, 30 fps, or variable). TMRoPE sets temporal IDs from actual timestamps, so each 40 ms always corresponds to one unit of temporal position, regardless of the frame rate. This keeps video and audio accurately aligned in time.
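A small sketch of timestamp-based temporal IDs, assuming timestamps are floored onto the 40 ms grid (the exact rounding rule is an assumption, and the helper names are hypothetical). A frame shown one second into a 24 fps clip and one shown one second into a 30 fps clip receive the same ID, even though their frame indices differ.

```python
from typing import List

UNIT_MS = 40.0  # one temporal position ID per 40 ms, as described above

def frame_timestamps_ms(fps: float, num_frames: int) -> List[float]:
    # Constant-rate video: frame i is shown at i / fps seconds.
    return [(i * 1000.0) / fps for i in range(num_frames)]

def temporal_ids(timestamps_ms: List[float]) -> List[int]:
    # The ID depends only on wall-clock time, never on the frame index.
    return [int(t // UNIT_MS) for t in timestamps_ms]

print(temporal_ids(frame_timestamps_ms(24, 25))[-1])  # frame 24 at 1000 ms -> 25
print(temporal_ids(frame_timestamps_ms(30, 31))[-1])  # frame 30 at 1000 ms -> 25
print(temporal_ids([0.0, 33.0, 95.0, 410.0]))          # irregular timestamps -> [0, 0, 2, 10]
```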

Position Numbering Across Modalities

To make sure that the position IDs are non-overlapping across modalities, position numbering for each modality is initialized by incrementing the maximum position ID of the preceding modality by one. This helps the attention mechanism differentiate inputs from different modalities while still reasoning over them jointly.
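The offset rule can be sketched by chaining segments: each new segment starts one past the largest ID, in any of the three components, used by the previous segment. Only text and image segments are handled, using the per-modality rules described earlier in this section; the function name and segment format are assumptions for illustration.

```python
from typing import List, Tuple, Union

PosID = Tuple[int, int, int]  # (temporal, height, width)

def build_position_ids(segments: List[Tuple[str, Union[int, Tuple[int, int]]]]) -> List[PosID]:
    """segments: ("text", num_tokens) or ("image", (grid_h, grid_w)), in sequence order."""
    ids: List[PosID] = []
    start = 0
    for kind, spec in segments:
        if kind == "text":
            seg = [(start + i,) * 3 for i in range(spec)]
        elif kind == "image":
            grid_h, grid_w = spec
            seg = [(start, start + h, start + w) for h in range(grid_h) for w in range(grid_w)]
        else:
            raise ValueError(f"unsupported modality: {kind}")
        ids.extend(seg)
        if seg:
            # The next modality begins one past the maximum ID used by this one.
            start = max(max(p) for p in seg) + 1
    return ids

ids = build_position_ids([("text", 2), ("image", (2, 3)), ("text", 2)])
print(ids[:2])   # [(0, 0, 0), (1, 1, 1)]
print(ids[2:8])  # image tokens: temporal 2, heights 2-3, widths 2-4
print(ids[8:])   # [(5, 5, 5), (6, 6, 6)]
```

The trailing text starts at 5 rather than 3: the image's width IDs reach 4, so the next segment begins after them and never reuses an ID already taken by the image.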

Qwen2.5-Omni’s Output Generation

Text Generation by Qwen2.5-Omni’s Thinker

Whenever the model needs to produce text (answering a question, describing a scene, or transcribing speech), that responsibility falls on the Thinker. Qwen2.5-Omni's Thinker uses tried-and-true LLM techniques to generate text that is:

- Context-aware, thanks to autoregression: text is generated one token at a time, and each new token depends on all previously generated tokens.

- Diverse, via top-p sampling.

- Natural-sounding, with repetition control.

- Consistent with the ChatML dialogue format used in interactive applications.

For every step, the model assigns a probability to every token in its vocabulary (over 150,000 tokens in Qwen). It then samples from this distribution, selecting the next token based on likelihood. This approach is common to almost all modern LLMs.
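A minimal sketch of one decoding step combining a repetition penalty with top-p (nucleus) sampling, over a toy four-token vocabulary instead of Qwen's ~151K tokens. The penalty uses the divide-if-positive, multiply-if-negative rule of Hugging Face's `repetition_penalty` option; whether Qwen2.5-Omni's Thinker uses exactly this rule, and the values `top_p=0.9` and `penalty=1.3`, are assumptions for illustration.

```python
import math
import random
from typing import Dict, List

def apply_repetition_penalty(logits: Dict[str, float], history: List[str],
                             penalty: float) -> Dict[str, float]:
    # Make tokens that were already generated less likely.
    out = dict(logits)
    for tok in set(history):
        if tok in out:
            out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out

def top_p_sample(logits: Dict[str, float], top_p: float, rng: random.Random) -> str:
    # Softmax over the vocabulary (shifted by the max logit for numerical stability).
    m = max(logits.values())
    exp = {t: math.exp(v - m) for t, v in logits.items()}
    z = sum(exp.values())
    ranked = sorted(((p / z, t) for t, p in exp.items()), reverse=True)
    # Keep the smallest set of most likely tokens whose cumulative probability reaches top_p.
    nucleus, cum = [], 0.0
    for p, t in ranked:
        nucleus.append((p, t))
        cum += p
        if cum >= top_p:
            break
    # Sample from the nucleus in proportion to the kept probabilities.
    r = rng.random() * sum(p for p, _ in nucleus)
    for p, t in nucleus:
        r -= p
        if r <= 0:
            return t
    return nucleus[-1][1]

rng = random.Random(0)
logits = {"the": 3.0, "cat": 2.5, "sat": 1.0, "mat": 0.5}
logits = apply_repetition_penalty(logits, history=["the"], penalty=1.3)
print(top_p_sample(logits, top_p=0.9, rng=rng))
```

Lower `top_p` values shrink the nucleus toward greedy decoding, while a penalty above 1 pushes the model away from tokens it has already produced.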

High-Quality Speech Generation by Qwen2.5-Omni’s Talker

Talker, the speech generation module, transforms semantic intent into expressive and fluent speech. As the Thinker generates its response token by token, it shares two types of information with Talker:

- High-level semantic representations from Thinker. These contain rich semantic meaning, emotion, and intent.

- Discrete tokens from Thinker for phonetic clarity. Tokens clarify exact pronunciation and structure.

In streaming mode, Talker starts speaking before the full sentence is available, yet to sound natural it still has to predict an appropriate tone for text it has not seen yet. The high-level embeddings from Thinker carry this emotional and stylistic information, which lets Talker adjust pitch, rhythm, and tone in real time.
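The streaming hand-off can be pictured as a producer/consumer pipeline. In this toy sketch, Python generators stand in for both modules: `thinker` and `talker` are hypothetical placeholders that do no real modelling, the "hidden state" is a dummy vector, and the speech "codes" are just labels. What matters is the ordering: Talker emits codes for the first token before Thinker has produced the second.

```python
from typing import Iterator, List, Tuple

def thinker(words: List[str], log: List[str]) -> Iterator[Tuple[str, List[float]]]:
    """Hypothetical Thinker: yields each text token with a placeholder hidden state."""
    for i, word in enumerate(words):
        log.append(f"thinker:{word}")
        hidden = [float(i), float(len(word))]  # stand-in for a high-level representation
        yield word, hidden

def talker(stream: Iterator[Tuple[str, List[float]]], log: List[str],
           codes_per_token: int = 2) -> Iterator[str]:
    """Hypothetical Talker: emits speech-code placeholders as soon as each token arrives."""
    for word, hidden in stream:
        for k in range(codes_per_token):
            # A real Talker would condition on both the token and the hidden state.
            code = f"{word}/{k}@{hidden[0]:.0f}"
            log.append(f"talker:{code}")
            yield code

log: List[str] = []
speech = list(talker(thinker(["hi", "there"], log), log))
print(log)
# ['thinker:hi', 'talker:hi/0@0', 'talker:hi/1@0', 'thinker:there', 'talker:there/0@1', 'talker:there/1@1']
```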

Talker's outputs are tokens from qwen-tts-tokenizer, a custom speech codec whose tokens can be decoded into waveforms in a streaming fashion by a causal decoder. Traditional TTS systems often need precise timing (this word starts at 0.6 s, ends at 1.2 s, etc.). Qwen2.5-Omni doesn't: Talker is trained end-to-end on text and audio without word-level or timestamp-level alignment.

Qwen2.5-Omni’s Designs for Streaming Outputs

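Initial latency in streaming interactions has several sources, including processing the multimodal input, producing the first speech token, and converting the first speech segment into audio. The Qwen2.5-Omni paper addresses them with two kinds of optimization: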

- Algorithmic – like streaming encoders, sliding-window attention, Thinker-Talker parallelism.

- Architectural – like lightweight vision/audio encoders and fast decoders.

The goal is to minimize startup delay in a real-world system that must hear or see something, understand it, and start talking back immediately.

Let’s go through the optimizations –

Qwen2.5-Omni Prefilling Support

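Prefilling is the phase in which the model processes its input, populating the attention key/value cache, before it starts generating output. For streaming models, it helps if this can happen incrementally as input arrives rather than only after the whole input is available.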

- Audio encoder processes in 2-second blocks with temporal block-wise attention instead of all at once. It allows the system to start inference early, without waiting for the full input to arrive.
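Block-wise attention can be expressed as a mask in which each frame attends only to frames in its own block. This is a minimal sketch assuming a plain block-diagonal mask; the function name and toy sizes are hypothetical, and the real number of frames per 2-second block depends on the frame rate inside the encoder.

```python
from typing import List

def block_attention_mask(num_frames: int, frames_per_block: int) -> List[List[bool]]:
    """mask[i][j] is True if frame i may attend to frame j: only frames in the same block."""
    return [
        [i // frames_per_block == j // frames_per_block for j in range(num_frames)]
        for i in range(num_frames)
    ]

# Toy example: 6 frames, 3 frames per block (real blocks would span 2 seconds of audio).
for row in block_attention_mask(6, 3):
    print("".join("x" if allowed else "." for allowed in row))
# xxx...
# xxx...
# xxx...
# ...xxx
# ...xxx
# ...xxx
```

Since no frame looks outside its block, each block can be encoded and prefilled as soon as its 2 seconds of audio have arrived.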

Two optimizations for the vision encoder:

- Flash Attention: An optimized algorithm that speeds up self-attention while reducing memory use.

- 2×2 Token Merging: a lightweight MLP merges each 2×2 grid of adjacent visual tokens into one, cutting the number of visual tokens by a factor of four and effectively downsampling the visual input.

The vision encoder splits images into 14×14-pixel patches, similar to a standard ViT. This patch size allows images of different resolutions to be packed into a single sequence.
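Combining the 14-pixel patch size with the 2×2 merge gives a quick estimate of how many visual tokens an image costs. The sketch assumes image sides are already multiples of 28 pixels (14-pixel patches times the 2×2 merge) and looks at a single frame; the helper names are hypothetical, and the MLP projection that follows the merge is left out.

```python
from typing import List, Tuple

PATCH = 14  # patch size in pixels, as stated above

def vision_token_count(height: int, width: int) -> Tuple[int, int]:
    """Return (patches, tokens after 2x2 merging) for one image.
    Assumes height and width are multiples of 28 pixels."""
    grid_h, grid_w = height // PATCH, width // PATCH
    return grid_h * grid_w, (grid_h // 2) * (grid_w // 2)

def merge_2x2(grid: List[List[List[float]]]) -> List[List[List[float]]]:
    """Concatenate the features of each 2x2 neighbourhood of patch tokens.
    In the model, an MLP would then project each concatenated vector; omitted here."""
    merged = []
    for r in range(0, len(grid), 2):
        row = []
        for c in range(0, len(grid[0]), 2):
            row.append(grid[r][c] + grid[r][c + 1] + grid[r + 1][c] + grid[r + 1][c + 1])
        merged.append(row)
    return merged

print(vision_token_count(224, 224))  # (256, 64)
print(vision_token_count(448, 672))  # (1536, 384)
grid = [[[0.0], [1.0], [2.0], [3.0]], [[4.0], [5.0], [6.0], [7.0]]]
print(merge_2x2(grid))  # [[[0.0, 1.0, 4.0, 5.0], [2.0, 3.0, 6.0, 7.0]]]
```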

Qwen2.5-Omni Streaming Codec Generation

Streaming speech generation uses:

- A Flow-Matching DiT model to generate mel-spectrograms from audio tokens. Flow matching is a generative method that learns to transform one distribution into another; here it converts discrete speech tokens (codes) into mel-spectrograms (time-frequency representations of audio).

- A modified BigVGAN vocoder to convert spectrograms into waveforms. Once the mel-spectrogram is generated, it is fed into the vocoder to produce raw audio. BigVGAN, a GAN-based neural vocoder, is known for realistic, high-quality waveform generation.

To keep enough context in low-latency settings, the DiT uses block-wise sliding-window attention with a receptive field of 4 blocks: the current block, 2 lookback blocks, and 1 lookahead block.
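The 4-block receptive field corresponds to a banded block mask. A minimal sketch, with a hypothetical helper name, where each row is a query block and each column a key block:

```python
from typing import List

def dit_block_mask(num_blocks: int, lookback: int = 2, lookahead: int = 1) -> List[List[bool]]:
    """mask[i][j] is True if block i may attend to block j (sliding window over blocks)."""
    return [[i - lookback <= j <= i + lookahead for j in range(num_blocks)]
            for i in range(num_blocks)]

for row in dit_block_mask(6):
    print("".join("x" if ok else "." for ok in row))
# xx....
# xxx...
# xxxx..
# .xxxx.
# ..xxxx
# ...xxx
```

The single lookahead block bounds the extra delay: block i can be rendered as soon as the tokens for block i + 1 exist, rather than waiting for the whole utterance.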

Pre-training Qwen2.5-Omni

The model is pre-trained in three carefully designed stages, each with a specific objective:

Stage 1: Encoder Alignment

The language model (LLM) is kept frozen (no training). Only the vision and audio encoders are trained at this point. The goal is to align the encoders’ outputs with the semantic space of the LLM. This helps the LLM later process multimodal inputs without being disturbed by unaligned embeddings.

Stage 2: Full Multimodal Training

Now, the entire model is trainable: LLM, audio encoder, and vision encoder. The training data is more diverse and includes image-text, video-text, audio-text, video-audio, etc. This phase encourages deep integration across modalities and tasks.

In this stage, Qwen2.5-Omni is trained on:

- 800B image/video tokens

- 300B audio tokens

- 100B video-audio tokens

In this stage, hierarchical tags have been replaced with natural language prompts to improve generalization.

Stage 3: Long-Sequence Support

- The maximum sequence length is increased from 8,192 to 32,768 tokens.

- Long audio and video data are added to the training mix.

- Together, these enable understanding of long conversations, audio recordings, and video segments.

Model Initialization from Existing Backbones

To accelerate and stabilize training, Qwen2.5-Omni uses –

- LLM → from Qwen2.5

- Vision encoder → from Qwen2.5-VL

- Audio encoder → from Whisper-large-v3 (a strong open-source speech model)

Starting from these pretrained components gives the model a solid foundation instead of training from scratch.

The two encoders are trained separately against the frozen LLM. Each first trains its adapter and only then the encoder itself, which aligns multimodal features with the LLM gradually and safely.

Post-training Qwen2.5-Omni

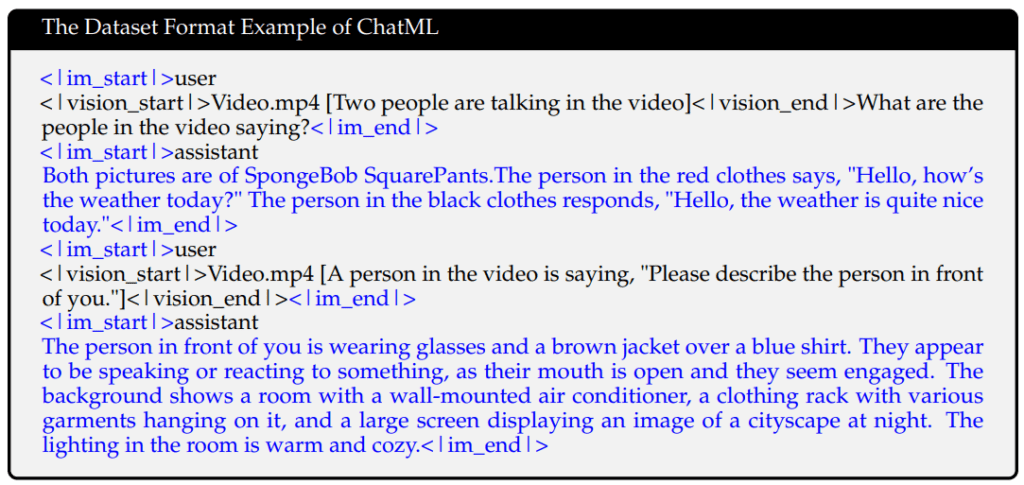

During post-training, the Thinker is instruction-tuned on data in ChatML format:

- Structure: each turn is wrapped in <|im_start|> and <|im_end|> tokens and tagged with a system, user, or assistant role

- Supports multi-turn conversations

- Supports text-only, audio-based, visual, and mixed-modality conversations
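Qwen's chat models serialize ChatML conversations by wrapping each turn in `<|im_start|>` and `<|im_end|>` tokens. The hypothetical, text-only helper below shows that layout. Real Qwen2.5-Omni prompts also contain special placeholder tokens for audio, image, and video content, so in practice you would rely on the model's own processor or tokenizer chat template (for example Hugging Face's `apply_chat_template`) rather than building strings by hand.

```python
from typing import Dict, List

def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render text-only messages in ChatML; optionally open an assistant turn for generation."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")  # the model continues from here
    return "".join(parts)

prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Describe the sound in this clip."},
])
print(prompt)
```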

Using this format helps the Thinker learn structured dialogue and maintain consistency across modalities and turns.

Qwen2.5-Omni Talker’s Post-training Strategy

Talker, the component responsible for streaming voice output, is trained in three carefully designed stages:

Stage 1: Speech Continuation

This stage resembles standard next-token prediction, applied to speech tokens: Talker learns to continue speech naturally, preserving the context, tone, and rhythm of the preceding tokens. We can think of it as learning how to keep talking coherently, just like a human speaker does.

Stage 2: Stability via DPO

DPO (Direct Preference Optimization) is a preference-optimization method that serves as a simpler alternative to reward-model-based reinforcement learning. Instead of only maximizing likelihood, it directly teaches the model to prefer high-quality outputs over poor ones. The model is shown pairs of speech responses (better vs. worse) for the same input and learns to prefer the better-sounding one, judged by a lower word error rate (WER) and fewer punctuation-pause errors.
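For reference, the standard DPO objective (Rafailov et al., 2023) for a preferred response y_w and a rejected response y_l is -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), where π_ref is a frozen reference copy of the model. The sketch below computes it for one pair from summed sequence log-probabilities. The numbers and β = 0.1 are illustrative; for Talker the log-probabilities would come from its speech-token sequences, and the actual hyperparameters are not given in this post.

```python
import math

def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def dpo_loss(policy_logp_w: float, policy_logp_l: float,
             ref_logp_w: float, ref_logp_l: float, beta: float = 0.1) -> float:
    """DPO loss for one (preferred w, rejected l) pair, from summed sequence log-probs."""
    # How much more the policy favours w over l, relative to the frozen reference model.
    margin = beta * ((policy_logp_w - ref_logp_w) - (policy_logp_l - ref_logp_l))
    return softplus(-margin)  # equals -log(sigmoid(margin))

print(dpo_loss(-10.0, -10.0, -10.0, -10.0))  # no preference yet: log(2), about 0.693
print(dpo_loss(-5.0, -12.0, -8.0, -10.0))    # policy already prefers w: smaller loss
```

Minimizing this loss raises the likelihood of the lower-WER response relative to the reference model while lowering that of the worse one, without training a separate reward model.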

Stage 3: Multi-Speaker Instruction Tuning

Now Talker is trained to sound like different speakers and to adapt tone, emotion, prosody, and style based on instructions or context. This gives users or downstream tasks the ability to control how the speech sounds.

Timbre disentanglement is also used to decouple voice from semantic content. Timbre is the characteristic quality of a voice; separating it from the text's meaning keeps the choice of voice independent of what is being said, so any speaker voice can be paired with any content.

Preference feedback based on WER and punctuation errors also steers Talker away from low-quality outputs.

Once the base Talker is trained, a final fine-tuning step teaches it to speak like specific individuals, improving the smoothness and realism of the voice.

So this was all about Qwen2.5-Omni.

Conclusion

Qwen2.5-Omni represents a significant step forward in the quest for general-purpose, real-time, multimodal AI assistants. As the world moves toward multimodal computing, Qwen2.5-Omni provides a glimpse into the future: an AI that can see, hear, understand, and speak all at once. By combining a powerful architecture (Thinker-Talker), a deeply integrated training pipeline, and careful design choices such as TMRoPE and chunked streaming, the model can perform across a broad array of real-world scenarios.

Qwen2.5-Omni is not just another multimodal model. It’s a well-engineered streaming-first AI assistant that understands and responds across text, speech, vision, and video, in real time, with natural voice, long-sequence reasoning, and multi-speaker expressiveness.