Let’s play rock, paper, scissors.

Think of your move, and I’ll make mine below this line in 1…2…and 3.

I choose ROCK.

Well? …who won? It doesn’t matter, because you probably glanced at the word “ROCK” before thinking about a move, or maybe you didn’t pay any heed to my feeble attempt at playing rock, paper, scissors with you in a blog post.

So why am I making these miserable attempts to play this game with you in text?

Let’s just say that a couple of months into lockdown, you run out of fun ideas. To be honest, I desperately need to socialize and do something fun.

Ideally, I would love to play games with some good friends, …or just friends…or anyone who is willing to play.

Now I’m tired of video games. I want something old-fashioned, something involving another intelligent being, ideally a human. But because of the lockdown, we’re a bit short on those for close-proximity activities. So what’s the next best thing?

AI, of course. So why not build an AI that will play with me whenever I want?

Now, I don’t just want to make a dumb AI bot that picks randomly between rock, paper, and scissors; I also don’t want to use any keyboard or mouse input. I just want to play the old-fashioned way.

Luckily, since I’m a computer vision engineer, I can actually train an AI that:

A) learns to recognize my hand signs

B) then plays its own random move.

To be clear, the AI’s own move is still random, so technically the AI is still dumb…and why not? The goal is for ME TO WIN…and to have fun, of course.

Jokes aside, when the opponent moves at random, winning at rock, paper, scissors comes down to chance. There is no strategy involved, so I can’t build an AI that wins on purpose. The only intelligence our system has is in the visual recognition of my hand signs.
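The “no strategy” point can be made concrete with a small, self-contained simulation. This is not part of the original project. It assumes the computer picks uniformly at random, as ours does, and compares two players: one who always shows rock, and one who also picks at random. The names `outcome` and `simulate` are purely illustrative.

```python
import random
from collections import Counter

MOVES = ("rock", "paper", "scissor")


def outcome(user, computer):
    """Return 'User', 'Computer' or 'Tie' for one round."""
    # In the order rock -> paper -> scissor, each move beats the one before it
    # (cyclically), so the index difference modulo 3 decides the round.
    diff = (MOVES.index(user) - MOVES.index(computer)) % 3
    return ("Tie", "User", "Computer")[diff]


def simulate(user_strategy, rounds=30_000, seed=0):
    """Play `rounds` games against a uniformly random computer and return
    the fraction of rounds that ended in each outcome."""
    rng = random.Random(seed)
    tally = Counter(outcome(user_strategy(rng), rng.choice(MOVES)) for _ in range(rounds))
    return {k: tally[k] / rounds for k in ("User", "Computer", "Tie")}


def always_rock(rng):
    return "rock"


def random_player(rng):
    return rng.choice(MOVES)


for name, strategy in [("always rock", always_rock), ("random", random_player)]:
    print(name, {k: round(v, 3) for k, v in simulate(strategy).items()})
```

Both strategies win, lose, and tie roughly a third of the rounds each. That leaves recognizing the hand sign as the only interesting job for the AI.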

So how does this work:

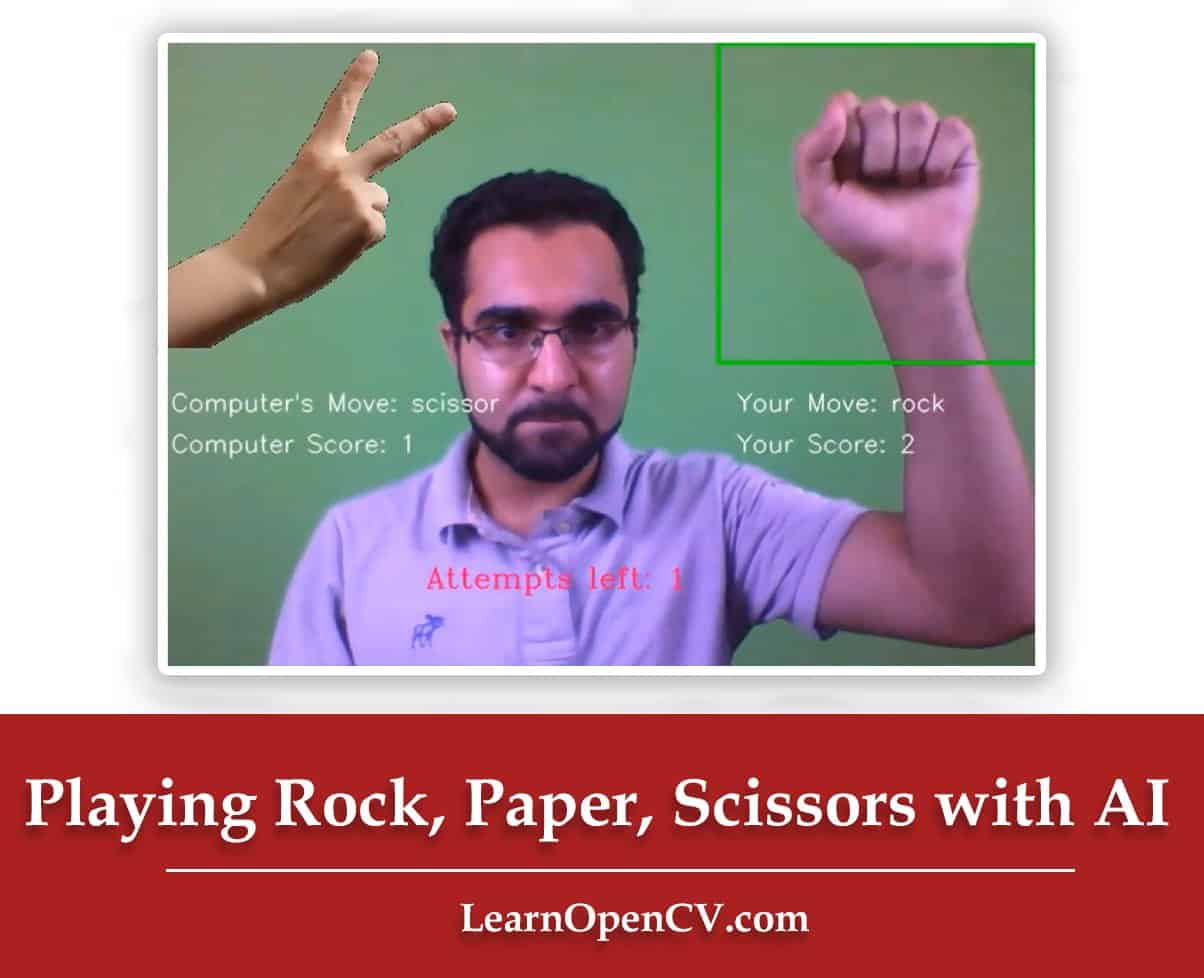

It’s pretty simple. I’ve fine-tuned a NASNetMobile model to recognize my hand sign when my hand is inside the box. Whenever the model recognizes a sign, the AI randomly generates its own move, and the winner of that round is decided. The application lets you choose how many rounds to play: best of 5, best of 10, or any other number.

Project Structure:

Here’s a breakdown of our application in steps.

- Step 1: Gather data for the rock, paper, scissor classes.

- Step 2: (Optional) Visualize the Data.

- Step 3: Preprocess Data and Split it.

- Step 4: Prepare Our Model for Transfer Learning.

- Step 5: Train Our Model.

- Step 6: Check the accuracy and loss graphs & save the model.

- Step 7: Test on Live Webcam Feed.

- Step 8: Create the Final Application.

So let’s start with the code.

Start by making the required imports.

You should have TensorFlow 2.2, OpenCV 4.x, and scikit-learn 0.23.x installed on your system.

```python
import os
import cv2
import numpy as np
import matplotlib.pyplot as plt
import time
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.models import Model, load_model
from tensorflow.keras.layers import Dense, MaxPool2D, Dropout, Flatten, Conv2D, GlobalAveragePooling2D, Activation
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder
from random import choice, shuffle
from scipy import stats as st
from collections import deque
```

Step 1: Gather Data for the Rock, Paper, Scissor Classes:

Now we will create a function that collects images of our hands. It takes num_samples as an argument and records that many images for each class.

When the function launches, place your hand inside the ROI box drawn on the screen. The crop saved from inside the box is 224×224, which is exactly the input size of the model we are going to use, so no resizing is needed.
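The size arithmetic is easy to check in isolation. The hypothetical helper `roi_bounds` below reproduces the slicing used in `gather_data`: a 234-pixel box in the top-right corner, cropped 5 pixels inside each edge. The 640-pixel frame width is only an assumption, since the real width is read from the camera.

```python
def roi_bounds(frame_width, box_size=234, margin=5):
    """Return (y0, y1, x0, x1) slice bounds of the capture ROI: a
    box_size x box_size square in the top-right corner, shrunk by
    `margin` pixels on every side so the drawn border stays out of the crop."""
    y0, y1 = margin, box_size - margin
    x0, x1 = frame_width - box_size + margin, frame_width - margin
    return y0, y1, x0, x1


# Assumes a 640-pixel-wide frame; the real width comes from the camera.
y0, y1, x0, x1 = roi_bounds(640)
print((y0, y1, x0, x1), "->", (y1 - y0, x1 - x0))   # (5, 229, 411, 635) -> (224, 224)
```

The crop stays 224×224 for any frame at least 234 pixels wide; only the horizontal offset changes.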

```python
def gather_data(num_samples):

    global rock, paper, scissor, nothing

    # Initialize the camera
    cap = cv2.VideoCapture(0)

    # trigger tells us when to start recording
    trigger = False

    # counter keeps count of the number of samples collected
    counter = 0

    # This is the ROI size; saved images are (box_size - 10) x (box_size - 10) = 224 x 224
    box_size = 234

    # Get the width of the frame from the camera properties
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

    # Make a resizable window
    cv2.namedWindow("Collecting images", cv2.WINDOW_NORMAL)

    while True:

        # Read frame by frame
        ret, frame = cap.read()

        # Break the loop if there is trouble reading the frame
        # (checked before flipping, since frame is None on failure)
        if not ret:
            break

        # Flip the frame laterally
        frame = cv2.flip(frame, 1)

        # If counter equals the number of samples, reset the trigger and the counter
        if counter == num_samples:
            trigger = not trigger
            counter = 0

        # Draw the ROI for capturing samples
        cv2.rectangle(frame, (width - box_size, 0), (width, box_size), (0, 250, 150), 2)

        # If trigger is True, capture samples
        if trigger:

            # Grab only the selected ROI, 5 px inside the drawn border.
            # .copy() keeps just the 224x224 crop, not a view that holds the whole frame in memory.
            roi = frame[5: box_size - 5, width - box_size + 5: width - 5].copy()

            # Append the ROI and class name to the global list named after the selected class
            globals()[class_name].append([roi, class_name])

            # Increment the counter
            counter += 1

            # Text for the counter
            text = "Collected Samples of {}: {}".format(class_name, counter)

        else:
            text = "Press 'r' to collect rock samples, 'p' for paper, 's' for scissor and 'n' for nothing"

        # Show the counter on the image
        cv2.putText(frame, text, (3, 350), cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 0, 255), 1, cv2.LINE_AA)

        # Display the window
        cv2.imshow("Collecting images", frame)

        # Wait 1 ms for a key press
        k = cv2.waitKey(1)

        # 'r': toggle the trigger to capture rock samples and start a fresh rock list
        if k == ord('r'):
            trigger = not trigger
            class_name = 'rock'
            rock = []

        # 'p': same for paper
        elif k == ord('p'):
            trigger = not trigger
            class_name = 'paper'
            paper = []

        # 's': same for scissor
        elif k == ord('s'):
            trigger = not trigger
            class_name = 'scissor'
            scissor = []

        # 'n': same for nothing
        elif k == ord('n'):
            trigger = not trigger
            class_name = 'nothing'
            nothing = []

        # Exit if the user presses 'q'
        elif k == ord('q'):
            break

    # Release the camera and destroy the window
    cap.release()
    cv2.destroyAllWindows()
```

Now you can launch the function. Let’s collect 100 samples for each class.

```python
no_of_samples = 100
gather_data(no_of_samples)
```

Now press a key to record each class. For example, press ‘r’ to record rock samples, ‘n’ to record nothing samples, and so on. Press ‘q’ to quit once all classes are recorded.

If you haven’t figured it out already: in addition to the three classes “rock”, “paper” and “scissor”, we also have a fourth class called “nothing”. Even when you’re not playing a move and your hand is out of the box, the model will still predict one of its classes. So we also train it on the empty box, so that it learns that no hand in the box means “nothing”.

Ideally, the data for the “nothing” class should be a bunch of random images, but to keep the code simple we just record a static scene 100 times. This is not the best approach: you can now only test the model at the same location where you recorded the images, otherwise it will give incorrect results. I talk more about improvements at the end of this post.

Note: While recording images, add as much variation as possible: move your hand closer and farther away, shift it around, rotate it, slightly change the shape of your hand, etc.

It’s worth noting that we’re purposely keeping the images in memory rather than saving them to disk. This lets us train faster by avoiding the I/O latency of loading images from disk.

You won’t always be able to hold images in RAM, especially with a big dataset. Luckily, we only have 400 images of 224×224, so my RAM can handle them easily, though we still need to be careful.
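A quick back-of-the-envelope calculation shows how much RAM this dataset needs. It assumes each sample is stored as its own 224×224×3 array. A NumPy slice that still references the full webcam frame would keep the whole frame alive and cost more.

```python
# Back-of-the-envelope RAM estimate for the in-memory dataset
num_images = 400                 # 4 classes x 100 samples
h, w, c = 224, 224, 3            # ROI height, width and BGR channels

bytes_uint8 = num_images * h * w * c   # raw crops as returned by OpenCV (1 byte per value)
bytes_float32 = bytes_uint8 * 4        # if you cast to float32 instead
bytes_float64 = bytes_uint8 * 8        # after np.array(images, dtype="float") in Step 3

for name, n in [("uint8", bytes_uint8), ("float32", bytes_float32), ("float64", bytes_float64)]:
    print(f"{name:>7}: {n / 1e6:6.1f} MB")
```

That works out to about 60 MB for the raw crops and about 482 MB once Step 3 converts them to float64. train_test_split also returns copies of the arrays, so peak usage during preprocessing is higher still.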

Step 2: (Optional) Visualize the Data:

Since we are not saving the images to disk, we should at least visualize them here to make sure we collected the data correctly.

As the images are sampled randomly, you can run this cell multiple times and see different images each time. This will give you a sense of the variation in your data for each class.

```python
# Set the figure size
plt.figure(figsize=[30, 20])

# Set the rows and columns
rows, cols = 4, 8

# Iterate over each class
for class_index, each_list in enumerate([rock, paper, scissor, nothing]):

    # Get 8 random indexes, since we will be showing 8 examples of each class
    r = np.random.randint(len(each_list), size=8)

    # Plot the examples; [:, :, ::-1] converts OpenCV's BGR to RGB for matplotlib
    for i, example_index in enumerate(r, 1):
        plt.subplot(rows, cols, class_index * cols + i)
        plt.imshow(each_list[example_index][0][:, :, ::-1])
        plt.axis('off')

plt.show()
```

Step 3: Preprocess Data and Split it:

In this step, we will combine all the images and labels in a single list and then preprocess them as required by the network. After preprocessing is done we will split them into train and test sets.

Note: If you’re wondering why I didn’t combine all these images during data collection, the reason is that this way you can re-record samples of a single class just by pressing that class’s key in the data collection script. Had I combined all the images up front, changing a single class would have required re-collecting samples for all the other classes too.

```python
# Combine the labels of all classes together
labels = [tupl[1] for tupl in rock] + [tupl[1] for tupl in paper] + [tupl[1] for tupl in scissor] + [tupl[1] for tupl in nothing]

# Combine the images of all classes together
images = [tupl[0] for tupl in rock] + [tupl[0] for tupl in paper] + [tupl[0] for tupl in scissor] + [tupl[0] for tupl in nothing]

# Normalize the images by dividing by 255, so they are in the range 0-1. This helps training.
images = np.array(images, dtype="float") / 255.0

# Print out the total number of images and labels
print('Total images: {} , Total Labels: {}'.format(len(images), len(labels)))

# Create an encoder object
encoder = LabelEncoder()

# Convert labels to integers, i.e. nothing = 0, paper = 1, rock = 2, scissor = 3 (mapping is in alphabetical order)
Int_labels = encoder.fit_transform(labels)

# Convert the integer labels into one-hot format, i.e. 0 = [1,0,0,0] etc.
one_hot_labels = to_categorical(Int_labels, 4)

# Split the data: 75% for training and 25% for testing
(trainX, testX, trainY, testY) = train_test_split(images, one_hot_labels, test_size=0.25, random_state=50)

# Empty memory from RAM
images = []

# This can free up even more RAM, but be careful: you won't be able to change the split % after this.
# rock, paper, scissor, nothing = [], [], [], []
```

Total images: 400 , Total Labels: 400

Notice how we free up RAM by emptying the images list. You can free up even more memory by emptying the individual class lists. I’ve commented that line out, because once those lists are empty you can no longer change the train/test split or re-record samples for a single class.
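Here is a dependency-free sketch of what `LabelEncoder` and `to_categorical` produce in this step, run on a handful of example labels. The variable names are illustrative. LabelEncoder numbers the sorted unique class names, which is why the `label_names` list used later must stay in alphabetical order.

```python
# Example labels in the same format as the ones collected above
sample_labels = ["rock", "paper", "scissor", "nothing", "rock"]

# LabelEncoder assigns consecutive integers to the sorted unique class names
classes = sorted(set(sample_labels))               # ['nothing', 'paper', 'rock', 'scissor']
to_index = {name: i for i, name in enumerate(classes)}
int_labels = [to_index[name] for name in sample_labels]

# to_categorical turns each integer into a one-hot row of length num_classes
num_classes = len(classes)
one_hot_rows = [[1.0 if j == i else 0.0 for j in range(num_classes)] for i in int_labels]

print(classes)
print(int_labels)        # [2, 1, 3, 0, 2]
print(one_hot_rows[0])   # [0.0, 0.0, 1.0, 0.0]
```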

Step 4: Prepare Our Model for Transfer Learning:

It’s time to set up our model. I went here to find a model to fine-tune, looking for the best balance of speed and accuracy, and finally decided on NASNetMobile.

Note: I probably would have chosen an EfficientNet variant, but that would have required either tf-nightly (which contains the latest models and updates of TensorFlow but is not stable) or TensorFlow Hub (a large library of reusable machine learning modules), and I don’t want to complicate this post.

In this section, we load NASNetMobile without its head. The original head was trained to predict the 1,000 ImageNet classes, while we only need to predict 4 classes.

We’re doing transfer learning, so we first add a few layers on top of the base model to create a custom head. Its final layer has one unit per class, which in our case means 4. Don’t put too many dense layers in the head, as they make the model heavy; I used just a single dense layer with 712 units. I’ve also added Global Average Pooling and a dropout layer to help avoid overfitting.

```python
# This is the input size our model accepts
image_size = 224

# Load the pre-trained NASNetMobile model without its head by setting include_top=False
N_mobile = tf.keras.applications.NASNetMobile(input_shape=(image_size, image_size, 3), include_top=False, weights='imagenet')

# Freeze the whole base model
N_mobile.trainable = False

# Add our own custom head, starting from NASNetMobile's output feature maps
x = N_mobile.output

# Convert to a single-dimensional vector with Global Average Pooling.
# We could also use Flatten()(x), but GAP is more effective: it reduces params and controls overfitting.
x = GlobalAveragePooling2D()(x)

# Add a dense layer with 712 units
x = Dense(712, activation='relu')(x)

# Drop out 40% of the activations, which helps reduce overfitting
x = Dropout(0.40)(x)

# The final layer has 4 output units (number of units = number of classes) with a softmax activation
preds = Dense(4, activation='softmax')(x)

# Construct the full model
model = Model(inputs=N_mobile.input, outputs=preds)

# Check the number of layers in the final model
print("Number of Layers in Model: {}".format(len(model.layers)))
```

Number of Layers in Model: 773

Note that we froze the base model (N_mobile.trainable = False) before attaching the head, so only the new head layers will be trained. The frozen base acts as a feature extractor, and its pretrained features make the dense layer’s job a lot easier.
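Here is the head’s size in numbers, along with a comparison against the Flatten alternative mentioned in the code comment. The count assumes NASNetMobile’s final feature map is 7×7×1056 for a 224×224 input. I believe that is what the Keras model produces, but treat it as an assumption and check it with `N_mobile.output_shape`.

```python
# Assumed NASNetMobile output feature map for a 224 x 224 input
fh, fw, channels = 7, 7, 1056
hidden, num_classes = 712, 4


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


# GAP and Dropout have no parameters, so only the two Dense layers count
gap_head = dense_params(channels, hidden) + dense_params(hidden, num_classes)
flatten_head = dense_params(fh * fw * channels, hidden) + dense_params(hidden, num_classes)

print(f"GAP head:     {gap_head:,} trainable parameters")
print(f"Flatten head: {flatten_head:,} trainable parameters")
```

Under this assumption, the GAP head has about 0.76 million trainable parameters. A Flatten head would need roughly 36.8 million, almost all of them in the first dense layer. If the assumption holds, the trainable-parameter total reported by `model.summary()` should match the GAP figure.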

Step 5: Train Our Model:

Alright, before I compile and train the model, I want to use data augmentation to apply random transformations to the images. We recorded just 100 images per class, so augmenting them is a good idea. Data augmentation combined with transfer learning goes a long way toward making up for the small number of training examples.

```python
# Adding transformations that I know would help; feel free to add more.
# I'm using horizontal_flip=False; if you aren't sure which hand you will be using, set it to True.
augment = ImageDataGenerator(
    rotation_range=30,
    zoom_range=0.25,
    width_shift_range=0.10,
    height_shift_range=0.10,
    shear_range=0.10,
    horizontal_flip=False,
    fill_mode="nearest"
)
```
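It helps to know what these numbers mean in pixels and degrees. The sketch below draws one random set of transform parameters the way I understand `ImageDataGenerator` interprets these settings. It is an approximation for illustration, not Keras code, and `sample_transform` is a hypothetical name.

```python
import random


def sample_transform(rng, size=224, rotation_range=30, zoom_range=0.25,
                     width_shift_range=0.10, height_shift_range=0.10,
                     shear_range=0.10):
    """Draw one set of random transform parameters for a size x size image."""
    return {
        # Rotation angle in degrees, uniform in [-rotation_range, rotation_range]
        "rotation_deg": rng.uniform(-rotation_range, rotation_range),
        # Float shift ranges below 1 are fractions of the image size
        "shift_x_px": rng.uniform(-width_shift_range, width_shift_range) * size,
        "shift_y_px": rng.uniform(-height_shift_range, height_shift_range) * size,
        # Shear is specified in degrees
        "shear_deg": rng.uniform(-shear_range, shear_range),
        # A float zoom_range z means zoom factors in [1 - z, 1 + z], drawn per axis
        "zoom_x": rng.uniform(1 - zoom_range, 1 + zoom_range),
        "zoom_y": rng.uniform(1 - zoom_range, 1 + zoom_range),
    }


rng = random.Random(42)
for _ in range(3):
    print({k: round(v, 2) for k, v in sample_transform(rng).items()})
```

Keras documents `shear_range` as an angle in degrees. So `shear_range=0.10` shears by at most a tenth of a degree, which is practically no shear at all; a visible shear would need a value of several degrees.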

Now we compile the model using the Adam optimizer with a small learning rate (0.0001). Since this is a multi-class problem with one-hot encoded labels, we use the categorical_crossentropy loss. The only metric we care about right now is accuracy.

```python
model.compile(optimizer=Adam(learning_rate=0.0001), loss='categorical_crossentropy', metrics=['accuracy'])
```

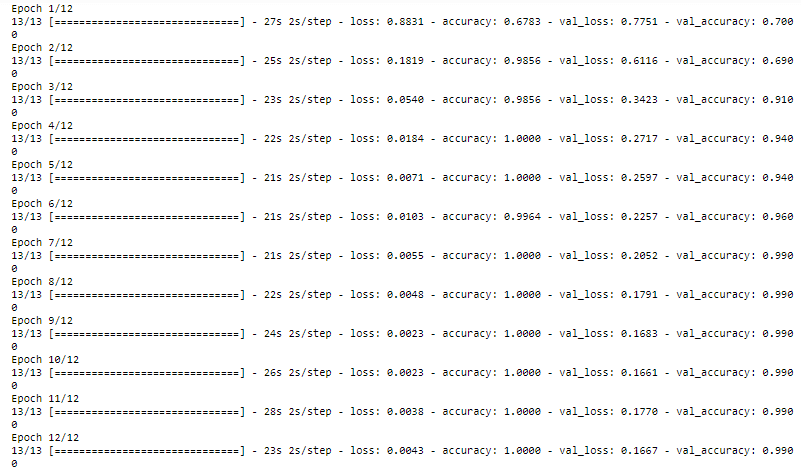
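Here is a tiny worked example of the categorical cross-entropy loss on a single sample. With one-hot labels, the loss reduces to minus the log of the probability the model assigns to the true class. The `eps` clipping imitates how Keras keeps probabilities away from exactly 0 and 1. This is a sketch, not Keras’ implementation, and Keras additionally averages the loss over the batch.

```python
import math


def categorical_crossentropy(one_hot, probs, eps=1e-7):
    """Cross-entropy for a single sample: -sum(y * log(p)), with p clipped to [eps, 1 - eps]."""
    return -sum(y * math.log(min(max(p, eps), 1 - eps)) for y, p in zip(one_hot, probs))


# Class order: nothing, paper, rock, scissor. The true class is 'rock'.
y_true = [0, 0, 1, 0]
confident = [0.02, 0.03, 0.90, 0.05]
unsure = [0.25, 0.25, 0.30, 0.20]

print(categorical_crossentropy(y_true, confident))   # equals -ln(0.90)
print(categorical_crossentropy(y_true, unsure))      # equals -ln(0.30)
```

Only the probability of the true class matters. The confident prediction is penalized far less than the hesitant one, even though both pick the right class.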

Let’s start training for 15 epochs with a batch size of 20. You can change the batch size if you want, but since all the data is in RAM, be careful not to crash the kernel.

```python
# Set the batch size according to your system
epochs = 15
batchsize = 20

# Start training
history = model.fit(x=augment.flow(trainX, trainY, batch_size=batchsize),
                    validation_data=(testX, testY),
                    steps_per_epoch=len(trainX) // batchsize,
                    epochs=epochs)

# Use model.fit_generator instead if TF version < 2.2
# (its first argument is the generator itself, not x=)
# history = model.fit_generator(augment.flow(trainX, trainY, batch_size=batchsize),
#                               validation_data=(testX, testY),
#                               steps_per_epoch=len(trainX) // batchsize, epochs=epochs)
```

Note: Use model.fit_generator instead of model.fit if you’re using a TF version older than 2.2.
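The numbers behind this training run can be checked with a few lines of arithmetic. This sketch assumes the 400 collected images and the `test_size=0.25` split from Step 3. It relies on the fact that scikit-learn’s `train_test_split` rounds a fractional test size up.

```python
import math

n_total = 400          # 4 classes x 100 samples
test_fraction = 0.25   # test_size used in Step 3
batch = 20             # batchsize used above

# With a float test_size, train_test_split sizes the test set as ceil(fraction * n)
n_test = math.ceil(n_total * test_fraction)
n_train = n_total - n_test

# steps_per_epoch as passed to fit(): whole batches per pass over the training set
steps = n_train // batch

print(n_train, n_test, steps)                              # 300 100 15
print("weight updates over 15 epochs:", steps * 15)        # 225
```

The augmentation generator loops forever, so `steps_per_epoch` is what defines an epoch here: 15 batches of 20 augmented images. The 15 epochs therefore amount to 225 weight updates.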

Since the loss was still decreasing, you could train for more epochs. You could even fine-tune further by unfreezing a few layers of the base model and retraining. I’m already getting excellent accuracy, though, so I won’t bother for now.

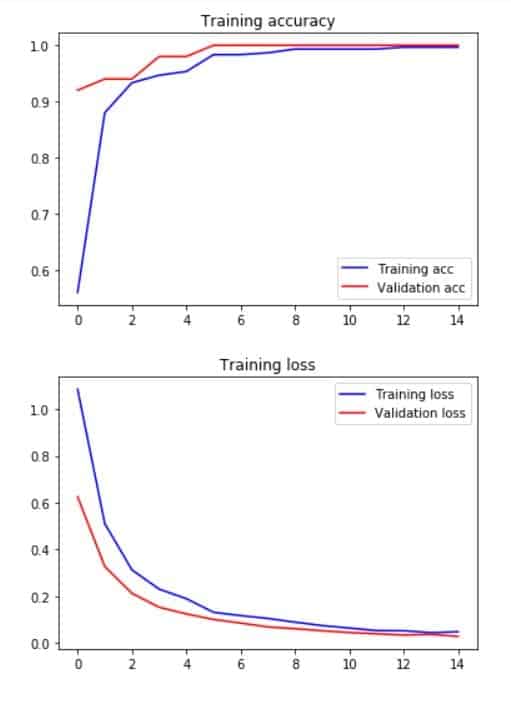

Step 6: Check the Accuracy and Loss Graphs & Save the Model:

From the last epoch, I can see that I’m getting extremely good accuracy. Let’s plot the accuracy and loss curves.

```python
# Plot the accuracy and loss curves
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

# Use a separate name so the `epochs` setting above isn't overwritten
epochs_range = range(len(acc))

plt.plot(epochs_range, acc, 'b', label='Training acc')
plt.plot(epochs_range, val_acc, 'r', label='Validation acc')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()

plt.plot(epochs_range, loss, 'b', label='Training loss')
plt.plot(epochs_range, val_loss, 'r', label='Validation loss')
plt.title('Training and validation loss')
plt.legend()

plt.show()
```

Those are some really nice curves; just look at how well they converge with only 100 examples per class.

Now you should save your model, so you won’t have to gather images and train the model the next time you run the notebook.

```python
model.save("rps4.h5")
```

And here’s how you load the model:

```python
model = load_model("rps4.h5")
```

Step 7: Test on Live Webcam Feed:

Finally, let’s test our model on a live video feed, applying the same preprocessing we used during training. As you can see from the video below this cell, I’m getting really good results.

```python
# This list maps probabilities to class names; label names are in alphabetical order.
label_names = ['nothing', 'paper', 'rock', 'scissor']

cap = cv2.VideoCapture(0)
box_size = 234
width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

cv2.namedWindow("Rock Paper Scissors", cv2.WINDOW_NORMAL)

while True:

    ret, frame = cap.read()
    if not ret:
        break

    frame = cv2.flip(frame, 1)

    cv2.rectangle(frame, (width - box_size, 0), (width, box_size), (0, 250, 150), 2)

    roi = frame[5: box_size - 5, width - box_size + 5: width - 5]

    # Normalize the image like we did in preprocessing, add a batch dimension and convert to float64
    roi = np.array([roi]).astype('float64') / 255.0

    # Get the model's prediction
    pred = model.predict(roi)

    # Get the index of the target class
    target_index = np.argmax(pred[0])

    # Get the probability of the target class
    prob = np.max(pred[0])

    # Show results
    cv2.putText(frame, "prediction: {} {:.2f}%".format(label_names[target_index], prob * 100),
                (10, 40), cv2.FONT_HERSHEY_SIMPLEX, 0.90, (0, 0, 255), 2, cv2.LINE_AA)

    cv2.imshow("Rock Paper Scissors", frame)

    k = cv2.waitKey(1)
    if k == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

Step 8: Create the Final Application:

It’s time for the finale. We will now take our trained model and build a full application around it, using three simple helper functions.

First, we need a function that takes two moves, one by the user and one by the computer, and finds out who won that round. This function is pretty simple, as you can see below.

```python
def findout_winner(user_move, computer_move):

    # All logic below is self-explanatory
    if user_move == computer_move:
        return "Tie"

    elif user_move == "rock" and computer_move == "scissor":
        return "User"

    elif user_move == "rock" and computer_move == "paper":
        return "Computer"

    elif user_move == "scissor" and computer_move == "rock":
        return "Computer"

    elif user_move == "scissor" and computer_move == "paper":
        return "User"

    elif user_move == "paper" and computer_move == "rock":
        return "User"

    elif user_move == "paper" and computer_move == "scissor":
        return "Computer"
```

Let’s test this function.

```python
user_move = 'paper'
computer_move = choice(['rock', 'paper', 'scissor'])
winner = findout_winner(user_move, computer_move)
print("User Selected '{}' and computer selected '{}' , winner is: '{}' ".format(user_move, computer_move, winner))
```

User Selected 'paper' and computer selected 'paper' , winner is: 'Tie'

Let’s do another one.

```python
user_move = 'paper'
computer_move = choice(['rock', 'paper', 'scissor'])
winner = findout_winner(user_move, computer_move)
print("User Selected '{}' and computer selected '{}' , winner is: '{}' ".format(user_move, computer_move, winner))
```

User Selected 'paper' and computer selected 'rock' , winner is: 'User'
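The chain of `elif` statements in `findout_winner` can also be written as a lookup table, which is shorter and harder to get wrong. `findout_winner_table` and `BEATS` are hypothetical names for this sketch. Like the original function, it returns `"Tie"` for identical moves and `None` when exactly one side is `nothing`.

```python
# Each move maps to the move it defeats
BEATS = {"rock": "scissor", "paper": "rock", "scissor": "paper"}


def findout_winner_table(user_move, computer_move):
    """Table-driven equivalent of findout_winner."""
    if user_move == computer_move:
        return "Tie"
    if BEATS.get(user_move) == computer_move:
        return "User"
    if BEATS.get(computer_move) == user_move:
        return "Computer"
    # Anything else (e.g. 'nothing' against a real move) is not a valid round
    return None


for u in BEATS:
    for c in BEATS:
        print(f"{u:>7} vs {c:<7} -> {findout_winner_table(u, c)}")
```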

What we just built is a dumb AI that can play rock, paper, scissors with us. But as I mentioned, I don’t want to use the keyboard or mouse to make a move, and this is where our classifier comes in.

Here’s another function. It decides the overall winner by comparing the user’s and the computer’s scores, shows the result, and asks whether to play again.

```python
def show_winner(user_score, computer_score):

    # Pick the result image (these image files are assets expected in the images/ folder)
    if user_score > computer_score:
        img = cv2.imread("images/youwin.jpg")

    elif user_score < computer_score:
        img = cv2.imread("images/comwins.jpg")

    else:
        img = cv2.imread("images/draw.jpg")

    cv2.putText(img, "Press 'ENTER' to play again, else exit",
                (150, 530), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 0, 255), 3, cv2.LINE_AA)

    cv2.imshow("Rock Paper Scissors", img)

    # Wait indefinitely for a key press
    k = cv2.waitKey(0)

    # Return True if the user pressed 'ENTER' (key code 13), otherwise False
    return k == 13
```

Finally, this function overlays a transparent image of rock, paper, or scissor, depending on the move the computer played. This is entirely optional, but it does make the application look more alive.

```python
def display_computer_move(computer_move_name, frame):

    # Load the icon together with its alpha channel. cv2.IMREAD_UNCHANGED keeps the 4th channel;
    # the flag 1 (cv2.IMREAD_COLOR) would drop it and leave only 3 BGR channels.
    icon = cv2.imread("images/{}.png".format(computer_move_name), cv2.IMREAD_UNCHANGED)
    icon = cv2.resize(icon, (224, 224))

    # This is the portion of the frame we are going to replace with the icon image
    roi = frame[0:224, 0:224]

    # Get a binary mask from the transparent image; the 4th channel is the alpha channel
    mask = icon[:, :, -1]

    # Make the mask completely binary (black & white)
    mask = cv2.threshold(mask, 1, 255, cv2.THRESH_BINARY)[1]

    # Keep the normal BGR part of the icon
    icon_bgr = icon[:, :, :3]

    # Combine the foreground of the icon with the background of the ROI
    img1_bg = cv2.bitwise_and(roi, roi, mask=cv2.bitwise_not(mask))
    img2_fg = cv2.bitwise_and(icon_bgr, icon_bgr, mask=mask)
    combined = cv2.add(img1_bg, img2_fg)

    frame[0:224, 0:224] = combined

    return frame
```
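The mask-and-add trick in `display_computer_move` can be expressed directly in NumPy, which also makes it easy to switch to smooth alpha blending. Both functions below are illustrative sketches with hypothetical names. They assume a BGRA icon (what `cv2.imread` returns with `cv2.IMREAD_UNCHANGED` for a PNG that has an alpha channel) that fits inside the frame at the given position.

```python
import numpy as np


def overlay_hard(frame, icon_bgra, x=0, y=0):
    """Paste a BGRA icon onto a BGR frame in place. Pixels whose alpha is
    above 1 are treated as fully opaque, mirroring the threshold used in
    display_computer_move."""
    h, w = icon_bgra.shape[:2]
    region = frame[y:y + h, x:x + w]              # a view, so edits reach `frame`
    opaque = icon_bgra[:, :, 3] > 1               # boolean mask from the alpha channel
    region[opaque] = icon_bgra[:, :, :3][opaque]
    return frame


def overlay_blend(frame, icon_bgra, x=0, y=0):
    """Alpha-blend a BGRA icon onto a BGR frame in place, so semi-transparent
    edges mix with the background instead of being cut off."""
    h, w = icon_bgra.shape[:2]
    region = frame[y:y + h, x:x + w].astype(np.float32)
    alpha = icon_bgra[:, :, 3:4].astype(np.float32) / 255.0   # shape (h, w, 1) for broadcasting
    blended = alpha * icon_bgra[:, :, :3] + (1.0 - alpha) * region
    frame[y:y + h, x:x + w] = np.clip(np.rint(blended), 0, 255).astype(np.uint8)
    return frame
```

The hard version matches what the tutorial’s function does. The blended version avoids jagged edges around anti-aliased icons at the cost of a little float arithmetic per frame.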

Final Application:

We now have all the components, so we can write the final script and make our game.

```python
from collections import Counter

cap = cv2.VideoCapture(0)
box_size = 234
width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

# Specify the number of attempts you want. This means best of 5.
attempts = 5

# Initially the moves will be 'nothing'
computer_move_name = "nothing"
final_user_move = "nothing"

label_names = ['nothing', 'paper', 'rock', 'scissor']

# All scores are 0 at the start
computer_score, user_score = 0, 0

# The default color of the bounding box is blue (BGR)
rect_color = (255, 0, 0)

# This variable remembers whether the hand is inside the box or not
hand_inside = False

# After every round we decrease total_attempts by 1
total_attempts = attempts

# We will only consider predictions with confidence above this threshold
confidence_threshold = 0.70

# Instead of acting on a single prediction, we take the mode of the last 5 predictions
# stored in a deque, so an occasional false positive is easily ignored
smooth_factor = 5

# The initial deque has 'nothing' repeated smooth_factor times
de = deque(['nothing'] * smooth_factor, maxlen=smooth_factor)

cv2.namedWindow("Rock Paper Scissors", cv2.WINDOW_NORMAL)

while True:

    ret, frame = cap.read()
    if not ret:
        break

    frame = cv2.flip(frame, 1)

    # Extract the region of the image within the user rectangle and normalize it
    roi = frame[5: box_size - 5, width - box_size + 5: width - 5]
    roi = np.array([roi]).astype('float64') / 255.0

    # Predict the move made
    pred = model.predict(roi)

    # Get the index, class name and confidence of the predicted class
    move_code = np.argmax(pred[0])
    user_move = label_names[move_code]
    prob = np.max(pred[0])

    # Make sure the probability is above our defined threshold
    if prob >= confidence_threshold:

        # Add the move to the deque from the left
        de.appendleft(user_move)

        # Get the mode, i.e. the class that occurred most frequently in the last 5 moves.
        # Counter is used because recent SciPy releases no longer accept string
        # labels in scipy.stats.mode.
        final_user_move = Counter(de).most_common(1)[0][0]

        # If the move is not 'nothing' and hand_inside is False, play a round.
        # hand_inside stops us from scoring the same hand on every frame,
        # so the user has to take their hand out of the box before each new round.
        if final_user_move != "nothing" and not hand_inside:

            hand_inside = True

            # Get the computer's move and then the winner
            computer_move_name = choice(['rock', 'paper', 'scissor'])
            winner = findout_winner(final_user_move, computer_move_name)

            # Display the computer's move
            display_computer_move(computer_move_name, frame)

            # Subtract one attempt
            total_attempts -= 1

            # The winner of the round gets a point; the rectangle color shows who won
            if winner == "Computer":
                computer_score += 1
                rect_color = (0, 0, 255)

            elif winner == "User":
                user_score += 1
                rect_color = (0, 250, 0)

            elif winner == "Tie":
                rect_color = (255, 250, 255)

            # If all the attempts are used up, find out the overall winner
            if total_attempts == 0:

                play_again = show_winner(user_score, computer_score)

                # If the user pressed Enter, restart the game by re-initializing the variables
                if play_again:
                    user_score, computer_score, total_attempts = 0, 0, attempts

                # Otherwise quit the program
                else:
                    break

        # Keep displaying the computer's move while the hand stays inside the box
        elif final_user_move != "nothing" and hand_inside:
            display_computer_move(computer_move_name, frame)

        # If the class is 'nothing', the hand has left the box
        elif final_user_move == 'nothing':
            hand_inside = False
            rect_color = (255, 0, 0)

    # This is where all the annotation happens
    cv2.putText(frame, "Your Move: " + final_user_move,
                (420, 270), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 1, cv2.LINE_AA)

    cv2.putText(frame, "Computer's Move: " + computer_move_name,
                (2, 270), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 1, cv2.LINE_AA)

    cv2.putText(frame, "Your Score: " + str(user_score),
                (420, 300), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 1, cv2.LINE_AA)

    cv2.putText(frame, "Computer Score: " + str(computer_score),
                (2, 300), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 1, cv2.LINE_AA)

    cv2.putText(frame, "Attempts left: {}".format(total_attempts), (190, 400), cv2.FONT_HERSHEY_COMPLEX, 0.7,
                (100, 2, 255), 1, cv2.LINE_AA)

    cv2.rectangle(frame, (width - box_size, 0), (width, box_size), rect_color, 2)

    # Display the image
    cv2.imshow("Rock Paper Scissors", frame)

    # Exit if 'q' is pressed
    k = cv2.waitKey(10)
    if k == ord('q'):
        break

# Release the camera and destroy all windows
cap.release()
cv2.destroyAllWindows()
```

Notice that even though we trained a strong classifier, we still added some checks to avoid false predictions. A) We only considered predictions above a confidence threshold (70%). B) Instead of acting on a single prediction, we took the most frequent class among the last 5 predictions, stored in a deque. Together, these checks make the system much more robust.
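The three safeguards in the final loop can be packaged into one small class that is easy to test without a webcam: the confidence threshold, the majority vote over the last 5 predictions, and the `hand_inside` edge trigger. `MoveDebouncer` is a hypothetical name. It uses `collections.Counter` for the vote; when labels tie, `most_common` returns the one seen most recently, because the newest label sits at the left of the deque.

```python
from collections import Counter, deque


class MoveDebouncer:
    """Threshold + majority vote + edge trigger for per-frame predictions."""

    def __init__(self, confidence_threshold=0.70, smooth_factor=5):
        self.threshold = confidence_threshold
        self.history = deque(["nothing"] * smooth_factor, maxlen=smooth_factor)
        self.current = "nothing"
        self.hand_inside = False

    def update(self, label, prob):
        """Feed one frame's prediction; return a move the first time a hand
        is confirmed in the box, otherwise None."""
        if prob < self.threshold:
            return None                          # ignore low-confidence frames
        self.history.appendleft(label)
        self.current = Counter(self.history).most_common(1)[0][0]
        if self.current == "nothing":
            self.hand_inside = False             # box is empty again: re-arm
            return None
        if not self.hand_inside:
            self.hand_inside = True              # rising edge: commit this move once
            return self.current
        return None                              # hand still inside: already counted


det = MoveDebouncer()
stream = ([("rock", 0.95)] * 4 + [("paper", 0.40)]
          + [("nothing", 0.99)] * 5 + [("paper", 0.90)] * 3)
events = [det.update(label, prob) for label, prob in stream]
print([e for e in events if e is not None])   # ['rock', 'paper']
```

In this sample stream, the first rock frames are outvoted by the initial “nothing” entries, so rock is committed on the third frame, and only once. The low-confidence paper frame is ignored, and paper is only committed after the box has emptied again.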

Important Considerations and Tips for Improvement:

In this post, we learned to generate custom data right inside the notebook. I used it to collect images of hands, but you can use the same approach for other things.

If you’re planning a medium or large dataset, save the images to disk rather than keeping them in RAM.

A clear limitation of this application is that it only works reliably in the place where you recorded the training images.

There are multiple solutions around this:

- You can drop the nothing class and, at prediction time, use background subtraction to tell whether there is an object (a hand) inside the ROI box. This is easy and effective, but it can be thrown off by changing lighting conditions.

- Instead of recording a single static scene for the nothing class, you can record many different random images.
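Here is a minimal sketch of the first idea. It assumes you store one frame of the empty box as a background reference. A pixel counts as changed if it differs from the reference by more than a threshold in any channel, and a hand is reported when enough pixels change. The function name and both thresholds are illustrative and would need tuning. OpenCV’s `cv2.absdiff` computes the same absolute difference, and `cv2.createBackgroundSubtractorMOG2` provides an adaptive background model that can cope better with gradual lighting changes.

```python
import numpy as np


def hand_present(roi_bgr, background_bgr, diff_threshold=25, area_fraction=0.05):
    """Return True if enough ROI pixels differ from a stored empty-box frame.
    diff_threshold (0-255) and area_fraction are illustrative defaults."""
    # Use a signed type so the subtraction can't wrap around like uint8 would
    diff = np.abs(roi_bgr.astype(np.int16) - background_bgr.astype(np.int16))
    changed = diff.max(axis=2) > diff_threshold   # pixel changed in any channel
    return bool(changed.mean() > area_fraction)
```

In the game loop, a call like this could replace the “nothing” prediction: only run the classifier when `hand_present` returns True.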

Another important point: rock, paper, and scissor are predicted correctly most of the time, but even a correctly trained model shows a clear drop in accuracy for these classes when you test it in a different place.

The way to solve this is to record samples of rock, paper, and scissors against different backgrounds.

Two smart ways to go about doing this are:

- Hold a USB camera or phone camera just above your hand and move it around while recording a video, then split the video into frames later. Make sure to avoid motion blur.

- During training, use background subtraction to extract the hand, then composite it onto random background images. For best results, make sure the segmentation is clean.

Lastly, deep learning will no doubt give you the best results for this problem, but you can also solve it with classical approaches. For example, take a look at this hand gesture recognition based calculator I built a while back using just shape analysis.

I hope you all enjoyed this tutorial. If you have any questions feel free to ask them in the comments.
