Consider this: you’re in the middle of your work when suddenly the internet stops working because of a router issue, and you’re told it will be fixed in a few hours.

So now you have a couple of hours to kill! What would you do?

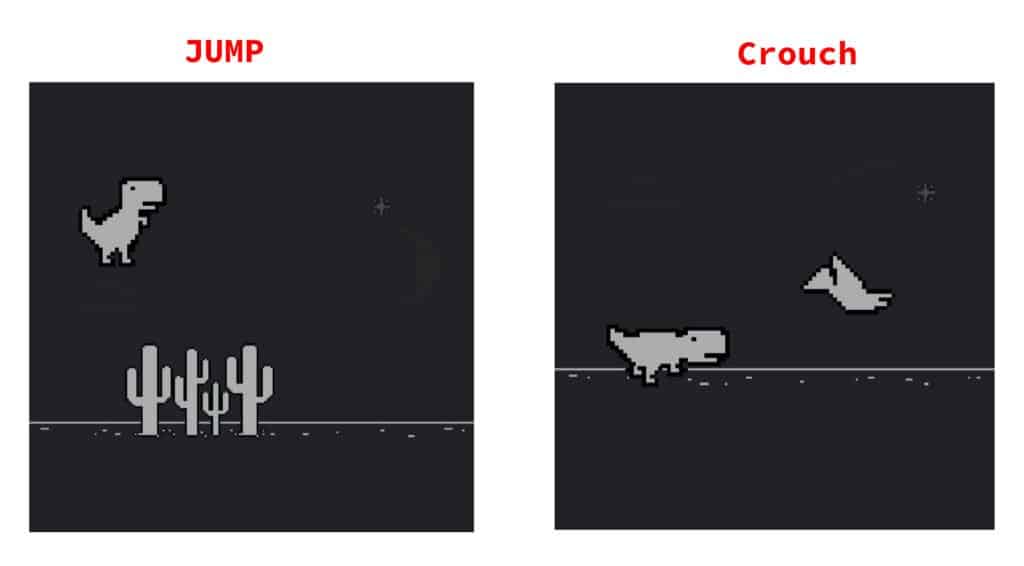

Like most people, you might find yourself playing the infamous Chrome Dino game that appears in Chrome when the internet is not working. See the image below.

But what if you can’t afford to spend hours playing that game, and on top of that you’re a computer vision engineer like me who wants to put that time to more productive use?

Something like that did happen to me, and what I ended up doing was creating an OpenCV-based augmented reality application that used my facial expressions to control the Dino, so I didn’t even have to touch the keyboard or mouse while playing the game.

How cool is that?

Here’s a video demo of me playing the game:

If you still think that was a waste of time, you may be right; but if not, let me walk you through the exact step-by-step process I used to create this application.

Here’s the best part: you can use the techniques you learn in this post to control other games too. The only condition is that the game you’re trying to control must be simple enough.

Also, this is not the first time I’ve made a Human-Computer Interaction (HCI) based game; check out this post where I control a game with hand gestures.

So How Does It Work?

Chrome’s Dino game is one of the simplest games you might have ever played. The only two controls you need to worry about are making the Dino jump or crouch to avoid obstacles. Normally, you would press the space bar or the down arrow key on the keyboard to do that.

So all we need to do is press those keys programmatically, which we can easily do with the pyautogui library; it lets us control the keyboard from Python.

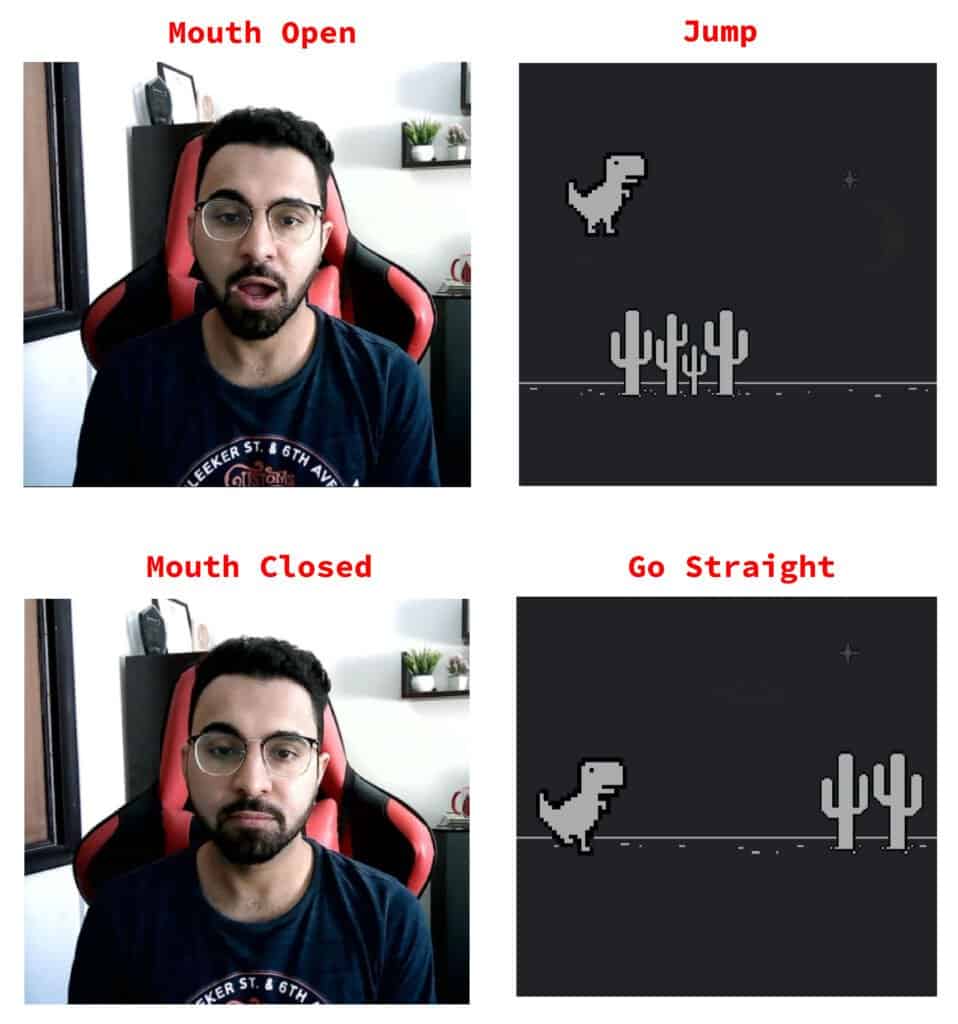

Alright, now we just need to trigger those controls based on certain actions I make. For this post, I’ve decided to control the Dino using my face.

I want the Dino to jump when I open my mouth. I can do this by detecting the facial landmarks around my lips to determine whether my mouth is open or closed, which the dlib library makes easy. I’ll go into more detail on this when implementing it in code.

Now the only thing left is making the Dino crouch, which we can do in several ways. Using landmarks, we could implement blink detection to control crouching. But as the game speeds up, blinking is not a feasible control: you would hit obstacles while your eyes are closed.

Another option is to use landmark-based smile detection to crouch, but that is also a bad idea. Not only would the thresholds be harder to tune, but when you’re rapidly switching between opening/closing your mouth and smiling, believe me, it’s not a pretty look; compared to that, Mr. Bean’s expressions would probably look more appealing.

And I’m talking about these expressions.

So, what’s the solution?

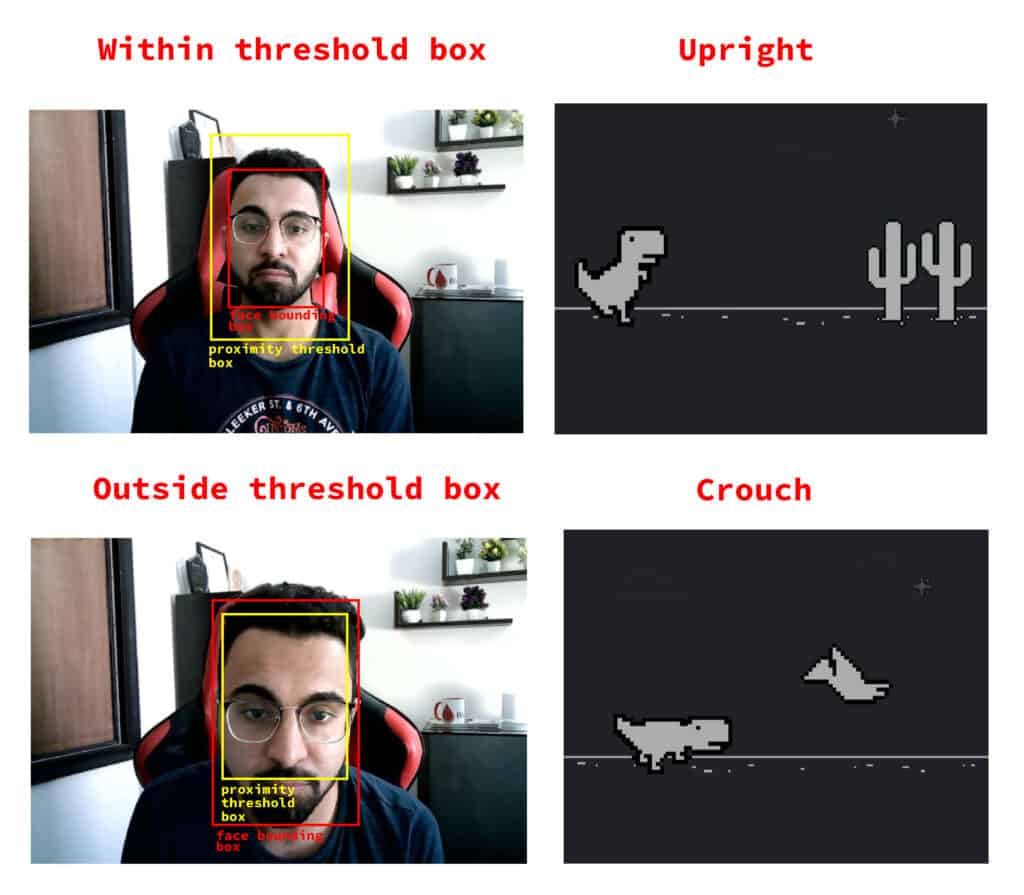

How about we monitor the size of the detected face instead? The closer the face is to the camera, the bigger the detected face, and when it is big enough the Dino should crouch. This way we’re controlling the Dino by measuring the proximity between the detected face and the camera, so you can make the Dino crouch by moving your face closer to the camera and back again. I’ll go into more detail on this later, but for now, here are the steps we will perform in this tutorial.

Outline:

- Step 1: Real-time Face Detection

- Step 2: Find the landmarks for the detected face

- Step 3: Build the Jump Control mechanism for the Dino

- Step 4: Build the Crouch Control mechanism

- Step 5: Perform Calibration

- Step 6: Keyboard Automation with PyAutoGUI

- Step 7: Build the Final Application

Alright, let’s start with the code.

First, import the required libraries:

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt
from math import hypot
import pyautogui
import dlib
```

Step 1: Face Detection

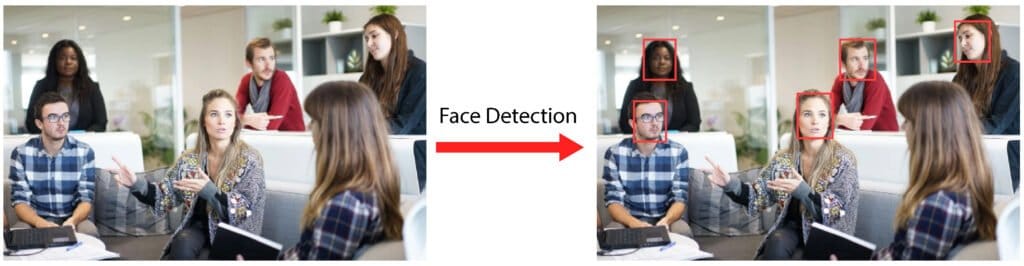

To detect facial landmarks, we don’t need to search the whole image, only the face region, so the first thing we need to do is apply face detection.

Now, we could use OpenCV’s Haar cascades or Dlib’s HOG-based face detector, but instead I’m going to use a more robust deep learning-based SSD face detector with OpenCV’s DNN module.

If you’re interested in learning more about how SSD-based face detectors work then you can read this paper (Liu et al., 2015).

Initialize The DNN Module:

The SSD face detector provided by OpenCV is a Caffe model, and you will need two files to run inference with it: a .prototxt file that defines the model architecture and a .caffemodel file that contains the pre-trained weights for the layers in that architecture. Both files are available in the download folder.

Let’s initialize the DNN module with these files.

```python
# Path to the weights file
model_weights = "model/res10_300x300_ssd_iter_140000_fp16.caffemodel"

# Path to the architecture file
model_arch = "model/deploy.prototxt.txt"

# Load the Caffe model
net = cv2.dnn.readNetFromCaffe(model_arch, model_weights)
```

Create A Face Detection Function:

Now we will create a function called face_detector() that takes an image as input and detects the faces in it. Since the Dino has to be controlled by a single input at a time, only one of the detected faces can be used, so the function returns the bounding box of the detection with the highest confidence.

We will also need to do some preprocessing before we can pass our image to the model. These steps are:

- Resizing the image to 300×300

- Subtracting the mean values (104, 177, 123) from the B, G, R channels

- Formatting the array as a 4D tensor (a blob)

Fortunately, all of these steps are taken care of by the DNN module’s cv2.dnn.blobFromImage() function.
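To see what the mean subtraction and 4D formatting amount to, here is a minimal NumPy sketch. It assumes the image is already 300×300 (the resize is left to OpenCV) and mirrors blobFromImage() with scalefactor 1.0 and the defaults of recent OpenCV versions (swapRB=False, crop=False); blob_from_resized_image() is a hypothetical name, not an OpenCV function.

```python
import numpy as np


def blob_from_resized_image(image, mean=(104.0, 177.0, 123.0), scale=1.0):
    """Sketch of blobFromImage() for an image that is already 300x300x3 BGR.

    Hypothetical helper: subtracts the per-channel mean, applies the scale
    factor, and reorders HWC -> NCHW with a batch axis of size 1.
    """
    img = image.astype(np.float32)
    # Subtract the per-channel mean (same B, G, R order as the image)
    img = img - np.array(mean, dtype=np.float32)
    # Apply the scale factor (1.0 here, so values are unchanged)
    img = img * scale
    # HWC -> CHW, then add the batch axis -> shape (1, 3, H, W)
    return img.transpose(2, 0, 1)[np.newaxis, ...]


img = np.full((300, 300, 3), 200, dtype=np.uint8)
blob = blob_from_resized_image(img)
print(blob.shape)  # (1, 3, 300, 300)
```

If OpenCV is installed, this result should match cv2.dnn.blobFromImage(img, 1.0, (300, 300), (104.0, 177.0, 123.0)) for a 300×300 input, since no resizing is needed in that case.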

After preprocessing, we can feed the processed image to the network and then post-process the results. The model returns a 4-dimensional array whose shape in our case is (1, 1, 200, 7): there are 200 detections, and for each one the array holds the confidence score along with the 4 bounding-box coordinates scaled down to the 0-1 range. Since we are only interested in a single face, we extract the detection with the highest confidence.

Finally, the true coordinates of the bounding box can be retrieved by multiplying the scaled-down coordinates by the width and height of the original image (before resizing).
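To make this post-processing concrete without loading the model, here is a sketch on a synthetic array with the same (1, 1, N, 7) layout, where each row holds [image_id, class_id, confidence, x1, y1, x2, y2]. The function name best_detection() and the detection values are made up for illustration.

```python
import numpy as np


def best_detection(detections, width, height, threshold=0.7):
    """Return (x1, y1, x2, y2, confidence) of the most confident detection,
    or None if its confidence does not exceed the threshold.

    Hypothetical helper; `detections` is assumed to have shape (1, 1, N, 7)
    with box coordinates normalized to the 0-1 range.
    """
    rows = detections[0, 0]             # shape (N, 7)
    i = int(np.argmax(rows[:, 2]))      # row with the highest confidence
    confidence = float(rows[i, 2])
    if confidence <= threshold:
        return None
    # Scale the normalized coordinates back to pixels of the original image
    box = rows[i, 3:7] * np.array([width, height, width, height])
    x1, y1, x2, y2 = (int(v) for v in box)
    return x1, y1, x2, y2, confidence


dets = np.zeros((1, 1, 200, 7), dtype=np.float32)
dets[0, 0, 5] = [0, 1, 0.95, 0.25, 0.125, 0.75, 0.875]
dets[0, 0, 9] = [0, 1, 0.40, 0.0, 0.0, 0.5, 0.5]
res = best_detection(dets, 640, 480)
print(res[:4])  # (160, 60, 480, 420)
```

Row 5 wins because it has the highest confidence, and its normalized box is scaled to a 640×480 frame, which is the same logic face_detector() applies to the real network output.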

```python
def face_detector(image, threshold=0.7):
    # Get the height and width of the image
    h, w = image.shape[:2]

    # Resize to 300x300, apply mean subtraction, and create a 4D blob from the image
    blob = cv2.dnn.blobFromImage(image, 1.0, (300, 300), (104.0, 177.0, 123.0))

    # Set the new input value for the network
    net.setInput(blob)

    # Run a forward pass on the input to compute the output
    faces = net.forward()

    # Get the confidence values for all detected faces
    prediction_scores = faces[:, :, :, 2]

    # Get the index of the prediction with the highest confidence
    i = np.argmax(prediction_scores)

    # Get the face with the highest confidence
    face = faces[0, 0, i]

    # Extract the confidence
    confidence = face[2]

    # If the confidence value is greater than the threshold
    if confidence > threshold:
        # The 4 values at indexes 3 to 6 are the top-left and bottom-right
        # coordinates scaled to the range 0-1. The original coordinates are
        # found by multiplying the x, y values by the width, height of the image
        box = face[3:7] * np.array([w, h, w, h])

        # The coordinates are pixel positions relative to the top-left corner
        # of the image, so convert them to plain Python ints
        (x1, y1, x2, y2) = [int(v) for v in box]

        # Draw a bounding box around the face
        annotated_frame = cv2.rectangle(image.copy(), (x1, y1), (x2, y2), (0, 0, 255), 2)

        output = (annotated_frame, (x1, y1, x2, y2), True, confidence)

    else:
        # Return the original frame if no face is detected with high confidence
        output = (image, (), False, 0)

    return output
```

Now we can test the face_detector() function with a real-time camera feed.

```python
# Get the video feed from the webcam
cap = cv2.VideoCapture(0)

# Set the window to a normal one so we can resize it
cv2.namedWindow('Face Detection', cv2.WINDOW_NORMAL)

while True:
    # Read the frames
    ret, frame = cap.read()

    # Break if no frame is returned
    if not ret:
        break

    # Flip the frame horizontally (mirror view)
    frame = cv2.flip(frame, 1)

    # Detect the face in the frame
    annotated_frame, coords, status, conf = face_detector(frame)

    # Display the frame
    cv2.imshow('Face Detection', annotated_frame)

    # Break the loop if the 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture and destroy the window
cap.release()
cv2.destroyAllWindows()
```

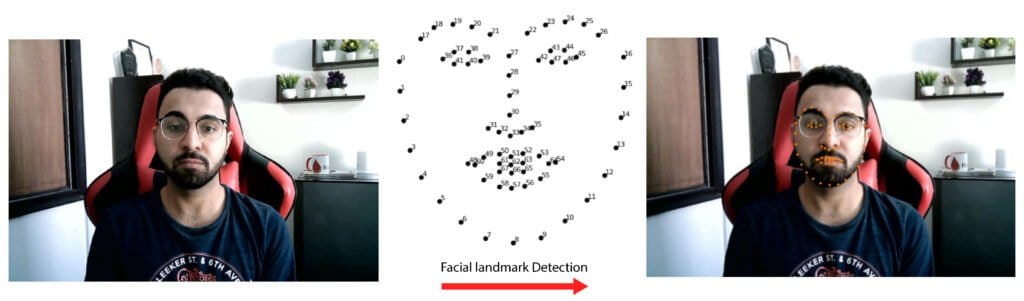

Step 2: Landmarks Detection

To implement the jump mechanism, we need information about whether the mouth is open or closed. For this, we need to detect facial landmarks so we can determine the position of upper and lower lips.

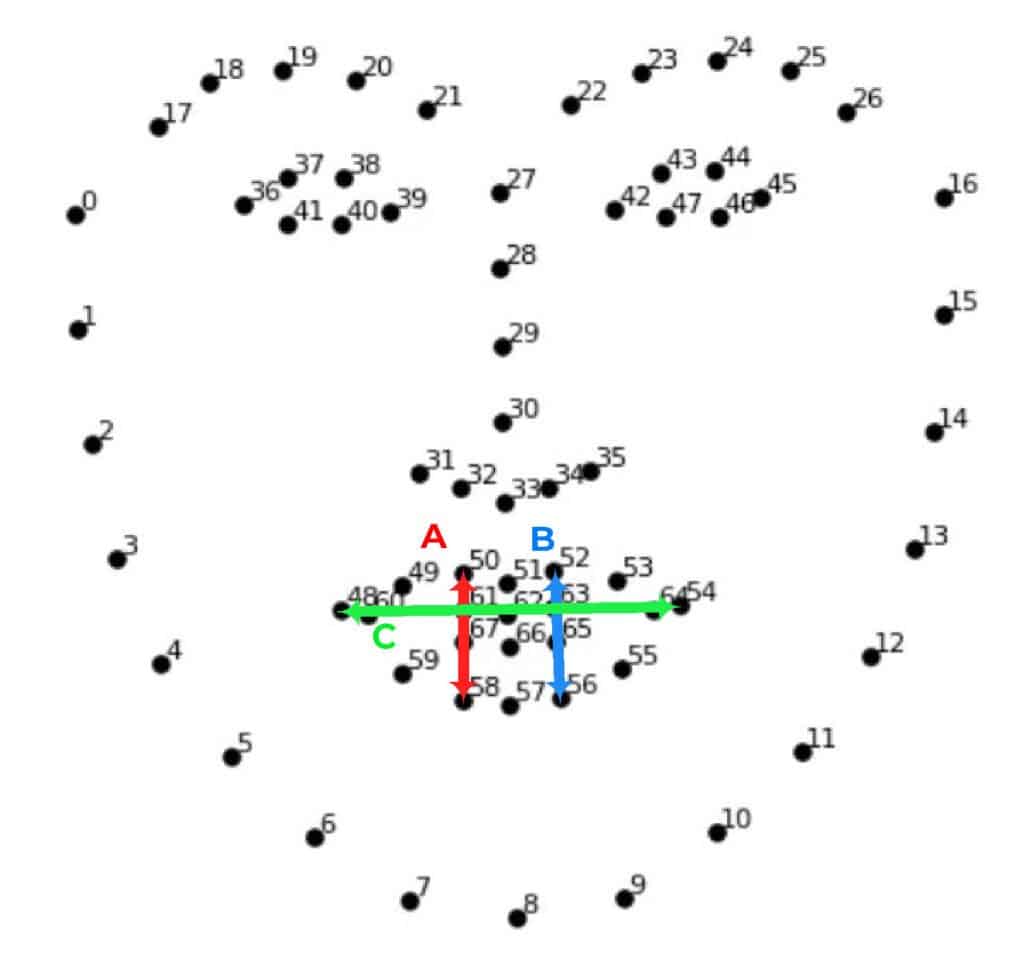

By using Dlib’s 68-point landmark detector, we’ll be able to detect the 68 facial landmarks shown below.

Dlib is a C++ library with Python bindings, created and maintained by Davis King. It is a toolkit containing many machine learning and numerical optimization algorithms, as well as a few computer vision algorithms for important tasks, including facial landmark detection.
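For reference, the 68 points follow the iBUG 300-W annotation scheme and are grouped into facial regions by index. The dictionary below is a small sketch of those 0-based, end-exclusive ranges; FACIAL_LANDMARK_RANGES and region() are hypothetical helper names, and left/right are from the subject’s point of view. The mouth points used later for jump control (48, 50, 52, 54, 56, 58) all lie on the outer lip contour.

```python
# 0-based, end-exclusive index ranges of the 68-point iBUG 300-W scheme used by
# shape_predictor_68_face_landmarks.dat. Left/right are from the subject's view.
# FACIAL_LANDMARK_RANGES and region() are hypothetical helpers.
FACIAL_LANDMARK_RANGES = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "outer_lips": (48, 60),
    "inner_lips": (60, 68),
}


def region(landmarks, name):
    """Return the landmarks (list or (68, 2) array) of one facial region."""
    start, end = FACIAL_LANDMARK_RANGES[name]
    return landmarks[start:end]


pts = [(i, i) for i in range(68)]
print(len(region(pts, "outer_lips")))  # 12
```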

Set Up the Facial Landmark Detector:

To use dlib’s landmark detector, you will need to download the landmark detection model from here and put it inside the model folder in this directory.

The landmark detection model is an implementation of the paper One Millisecond Face Alignment with an Ensemble of Regression Trees by Vahid Kazemi and Josephine Sullivan (2014).

To initialize the landmark detector, use the dlib.shape_predictor() function, which loads the pre-trained landmark detector from disk.

```python
predictor = dlib.shape_predictor("model/shape_predictor_68_face_landmarks.dat")
```

Create the detect_landmarks() function

Now we will create a function called detect_landmarks() that takes the coordinates of the face returned by face_detector(), detects the landmarks, and returns them, while also annotating the image with circles at the landmark positions.

```python
def detect_landmarks(box, image):
    # For faster results, convert the image to grayscale
    gray_scale = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Get the coordinates (dlib.rectangle expects plain Python ints)
    (x1, y1, x2, y2) = [int(v) for v in box]

    # Perform the detection
    shape = predictor(gray_scale, dlib.rectangle(x1, y1, x2, y2))

    # Get the NumPy array containing the coordinates of the landmarks
    landmarks = shape_to_np(shape)

    # Draw the landmark points as circles (this annotates `image` in place)
    annotated_image = image
    for (x, y) in landmarks:
        cv2.circle(annotated_image, (int(x), int(y)), 2, (0, 127, 255), -1)

    return annotated_image, landmarks
```

The helper function below converts the shape object returned by the predictor into a more convenient NumPy array; it is used by the detect_landmarks() function we created above.

```python
def shape_to_np(shape):
    # Create an array of shape (68, 2) for storing the landmark coordinates
    landmarks = np.zeros((68, 2), dtype="int")

    # Write the x, y coordinates of each landmark into the array
    for i in range(0, 68):
        landmarks[i] = (shape.part(i).x, shape.part(i).y)

    return landmarks
```

Now we can test the detect_landmarks() function on a real-time camera feed with the code below.

```python
# Get the video feed from the webcam
cap = cv2.VideoCapture(0)

# Set the window to a normal one so we can resize it
cv2.namedWindow('Landmark Detection', cv2.WINDOW_NORMAL)

while True:
    # Read the frames
    ret, frame = cap.read()

    # Break if no frame is returned
    if not ret:
        break

    # Flip the frame horizontally (mirror view)
    frame = cv2.flip(frame, 1)

    # Detect the face in the frame
    face_image, box_coords, status, conf = face_detector(frame)

    if status:
        # Get the landmarks for the face region; detect_landmarks() draws them on `frame`
        landmark_image, landmarks = detect_landmarks(box_coords, frame)

    # Display the frame (also shown when no face is detected)
    cv2.imshow('Landmark Detection', frame)

    # Break the loop if the 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture and destroy the window
cap.release()
cv2.destroyAllWindows()
```

Step 3: Jump Control mechanism

In this step, we will implement the jump control mechanism: the Dino should jump when the mouth is open and stay still when it’s not.

The jump control mechanism simply uses the Euclidean distances between the landmark pairs (50, 58), (52, 56) and (48, 54) to calculate the ratio of the mouth’s height to its width. By comparing this ratio with a threshold value, the mouth can then be classified as closed or open.

Later on, we will see how you can calibrate the best threshold value for your posture.
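Written out, the ratio computed by is_mouth_open() is the following, where $p_i = (x_i, y_i)$ is landmark $i$ in dlib’s 0-based 68-point indexing:

$$
\mathrm{MAR} = \frac{\lVert p_{50} - p_{58} \rVert + \lVert p_{52} - p_{56} \rVert}{2\,\lVert p_{48} - p_{54} \rVert}
$$

The numerator is the sum of the two vertical lip distances (A and B in the code) and the denominator is twice the mouth width (C), so the ratio grows as the mouth opens. Because it is a ratio of distances, it does not change when the whole face simply appears larger or smaller in the frame.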

```python
def is_mouth_open(landmarks, ar_threshold=0.7):
    # Calculate the Euclidean distances labelled A, B, C
    A = hypot(landmarks[50][0] - landmarks[58][0], landmarks[50][1] - landmarks[58][1])
    B = hypot(landmarks[52][0] - landmarks[56][0], landmarks[52][1] - landmarks[56][1])
    C = hypot(landmarks[48][0] - landmarks[54][0], landmarks[48][1] - landmarks[54][1])

    # Calculate the mouth aspect ratio:
    # the vertical distances A and B are averaged and divided by the width C
    mouth_aspect_ratio = (A + B) / (2.0 * C)

    # Return True if the value is greater than the threshold
    if mouth_aspect_ratio > ar_threshold:
        return True, mouth_aspect_ratio
    else:
        return False, mouth_aspect_ratio
```
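To check how the ratio behaves without a webcam, here is a vectorized NumPy sketch of the same computation applied to hypothetical landmark coordinates. mouth_aspect_ratio() is an illustrative name, and only the six mouth points are filled in; the other landmarks are irrelevant to the ratio.

```python
import numpy as np


def mouth_aspect_ratio(landmarks):
    """Vectorized version of the ratio computed in is_mouth_open().

    `landmarks` is assumed to be a (68, 2) array in dlib's 68-point order.
    """
    pts = np.asarray(landmarks, dtype=float)
    # Vertical distances on the outer lip contour: 50-58 and 52-56
    a = np.linalg.norm(pts[50] - pts[58])
    b = np.linalg.norm(pts[52] - pts[56])
    # Horizontal distance between the mouth corners: 48-54
    c = np.linalg.norm(pts[48] - pts[54])
    return (a + b) / (2.0 * c)


# Hypothetical mouth 60 px wide with lips 30 px apart
pts = np.zeros((68, 2))
pts[48], pts[54] = (0, 0), (60, 0)
pts[50], pts[52] = (20, -10), (40, -10)
pts[58], pts[56] = (20, 20), (40, 20)
print(mouth_aspect_ratio(pts))  # 0.5
```

Moving points 58 and 56 down to y = 38 (lips 48 px apart) raises the ratio to 0.8, which would count as open with the default ar_threshold of 0.7.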

Testing the is_mouth_open() function:

```python
# Get the video feed from the webcam
cap = cv2.VideoCapture(0)

# Set the window to a normal one so we can resize it
cv2.namedWindow('Mouth Status', cv2.WINDOW_NORMAL)

while True:
    # Read the frames
    ret, frame = cap.read()

    # Break if no frame is returned
    if not ret:
        break

    # Flip the frame horizontally (mirror view)
    frame = cv2.flip(frame, 1)

    # Detect the face in the frame
    face_image, box_coords, status, conf = face_detector(frame)

    if status:
        # Get the landmarks for the face region in the frame
        landmark_image, landmarks = detect_landmarks(box_coords, frame)

        # Adjust the threshold and make sure it's working for you
        mouth_status, _ = is_mouth_open(landmarks, ar_threshold=0.6)

        # Display the mouth status
        cv2.putText(frame, 'Is Mouth Open: {}'.format(mouth_status),
                    (20, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 127, 255), 2)

    # Display the frame
    cv2.imshow('Mouth Status', frame)

    # Break the loop if the 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture and destroy the window
cap.release()
cv2.destroyAllWindows()
```

Step 4: Crouch Control Mechanism

The crouch control mechanism uses the Euclidean distance between the top-left and bottom-right corners of the face bounding box, i.e., its diagonal. The closer the face is to the camera, the larger this distance, and once it exceeds a threshold, a key-down event is triggered, causing the Dino to crouch.
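Why does the diagonal work as a proximity signal? Under a simple pinhole camera model, an object of real size S at distance Z appears roughly f·S/Z pixels large, where f is the focal length in pixels, so the diagonal grows as the face approaches. The sketch below illustrates that relationship; the focal length (600 px) and real face diagonal (0.25 m) are hypothetical values, not measurements.

```python
# Pinhole-camera sketch: on-screen size is roughly focal_px * real_size / distance.
# FOCAL_PX and FACE_DIAGONAL_M are hypothetical values for illustration only.
FOCAL_PX = 600.0
FACE_DIAGONAL_M = 0.25


def face_diagonal_px(distance_m, focal_px=FOCAL_PX, face_diagonal_m=FACE_DIAGONAL_M):
    """Approximate diagonal (in pixels) of a face box at distance_m metres."""
    return focal_px * face_diagonal_m / distance_m


def distance_for_threshold(threshold_px, focal_px=FOCAL_PX, face_diagonal_m=FACE_DIAGONAL_M):
    """Distance (in metres) at which the face diagonal reaches threshold_px."""
    return focal_px * face_diagonal_m / threshold_px


print(face_diagonal_px(0.5))        # 300.0
print(distance_for_threshold(325))  # about 0.46
```

With these assumed numbers, halving the distance doubles the diagonal, and a proximity_threshold of 325 pixels corresponds to a face roughly 0.46 m from the camera. Your real values depend on your camera and face.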

The face_proximity() function below calculates the diagonal distance and compares it with proximity_threshold to return either True or False. It also calculates the coordinates of a guide rectangle centered on the face, with the same aspect ratio as the face box and a diagonal equal to the threshold. This rectangle shows the user how close they need to get to the camera to trigger the crouch.

```python
def face_proximity(box, image, proximity_threshold=325):
    # Get the width and height of the face bounding box
    face_width = box[2] - box[0]
    face_height = box[3] - box[1]

    # Draw a rectangle to guide the user:
    # calculate the angle of the diagonal using the face width and height
    # (arctan2 also avoids a division by zero if the width is 0)
    theta = np.arctan2(face_height, face_width)

    # Use the angle to calculate the height and width of the guide rectangle
    guide_height = np.sin(theta) * proximity_threshold
    guide_width = np.cos(theta) * proximity_threshold

    # Calculate the mid-point of the guide rectangle / face bounding box
    mid_x, mid_y = (box[2] + box[0]) / 2, (box[3] + box[1]) / 2

    # Calculate the coordinates of the top-left and bottom-right corners
    guide_topleft = int(mid_x - (guide_width / 2)), int(mid_y - (guide_height / 2))
    guide_bottomright = int(mid_x + (guide_width / 2)), int(mid_y + (guide_height / 2))

    # Draw the guide rectangle
    cv2.rectangle(image, guide_topleft, guide_bottomright, (0, 255, 255), 2)

    # Calculate the diagonal length of the bounding box
    diagonal = hypot(face_width, face_height)

    # Return True if the diagonal is greater than the threshold
    if diagonal > proximity_threshold:
        return True, diagonal
    else:
        return False, diagonal
```
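The guide rectangle geometry can be checked without OpenCV. This sketch (guide_rectangle() is a hypothetical name) repeats the calculation from face_proximity() using the math module and returns the float corners instead of drawing them.

```python
import math


def guide_rectangle(box, proximity_threshold=325):
    """Return the guide rectangle corners computed in face_proximity().

    The rectangle is centered on the face box, keeps its aspect ratio,
    and its diagonal equals proximity_threshold.
    """
    x1, y1, x2, y2 = box
    face_width, face_height = x2 - x1, y2 - y1
    theta = math.atan2(face_height, face_width)   # angle of the diagonal
    guide_w = math.cos(theta) * proximity_threshold
    guide_h = math.sin(theta) * proximity_threshold
    mid_x, mid_y = (x1 + x2) / 2, (y1 + y2) / 2
    return (mid_x - guide_w / 2, mid_y - guide_h / 2,
            mid_x + guide_w / 2, mid_y + guide_h / 2)


g = guide_rectangle((100, 100, 400, 500), proximity_threshold=250)
print([round(v, 6) for v in g])  # [175.0, 200.0, 325.0, 400.0]
```

For a 300×400 face box (diagonal 500) and a threshold of 250, the guide is a 150×200 rectangle centered on the face: once your face fills it, the diagonal crosses the threshold.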

Testing the face_proximity() function:

```python
# Get the video feed from the webcam
cap = cv2.VideoCapture(0)

# Set the window to a normal one so we can resize it
cv2.namedWindow('Face proximity', cv2.WINDOW_NORMAL)

while True:
    # Read the frames
    ret, frame = cap.read()

    # Break if no frame is returned
    if not ret:
        break

    # Flip the frame horizontally (mirror view)
    frame = cv2.flip(frame, 1)

    # Detect the face in the frame
    face_image, box_coords, status, conf = face_detector(frame)

    if status:
        # Check if the face is closer than the defined threshold
        is_face_close, _ = face_proximity(box_coords, face_image, proximity_threshold=325)

        # Display the face proximity status
        cv2.putText(face_image, 'Is Face Close: {}'.format(is_face_close),
                    (20, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 127, 255), 2)

    # Display the frame
    cv2.imshow('Face proximity', face_image)

    # Break the loop if the 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture and destroy the window
cap.release()
cv2.destroyAllWindows()
```

Step 5: Perform Calibration (Optional)

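The threshold values I selected for is_mouth_open() and face_proximity() should generalize well for most people, but if they don’t work for you, you can calibrate them here.

In this step, we’ll learn how to calibrate the ideal threshold values. Run the code below while opening and closing your mouth and moving your face toward and away from the camera to see how the values change. Each threshold is derived from the current measurement (1.4 times the mouth aspect ratio and 1.3 times the face diagonal), and the values from the last frame with a detected face remain in ar_threshold and proximity_threshold after the loop ends. So press q while your mouth is closed and your face is at your normal playing distance.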

```python
# Get the video feed from the webcam
cap = cv2.VideoCapture(0)

# Set the window to a normal one so we can resize it
cv2.namedWindow('Calibration', cv2.WINDOW_NORMAL)

while True:
    # Read the frames
    ret, frame = cap.read()

    # Break if no frame is returned
    if not ret:
        break

    # Flip the frame horizontally (mirror view)
    frame = cv2.flip(frame, 1)

    # Detect the face in the frame
    face_image, box_coords, status, conf = face_detector(frame)

    if status:
        # Detect landmarks if a face is found
        landmark_image, landmarks = detect_landmarks(box_coords, frame)

        # Get the current mouth aspect ratio
        _, mouth_ar = is_mouth_open(landmarks)

        # Get the current face proximity (diagonal of the face box)
        _, proximity = face_proximity(box_coords, face_image)

        # Calculate the threshold values from the current measurements
        ar_threshold = mouth_ar * 1.4
        proximity_threshold = proximity * 1.3

        # Display the threshold values
        cv2.putText(frame, 'Aspect ratio threshold: {:.2f} '.format(ar_threshold),
                    (20, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 127, 255), 2)
        cv2.putText(frame, 'Proximity threshold: {:.2f}'.format(proximity_threshold),
                    (20, 50), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 127, 255), 2)

    # Display the frame
    cv2.imshow('Calibration', frame)

    # Break the loop if the 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture and destroy the window
cap.release()
cv2.destroyAllWindows()
```
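The loop above derives the thresholds from a single frame, so one noisy frame right before you press q can skew them. A hedged alternative is to collect the resting values (mouth_ar and proximity) over several frames while you hold a neutral pose and take the median. calibrate_thresholds() is a hypothetical helper; the 1.4 and 1.3 factors are the ones used above.

```python
import statistics


def calibrate_thresholds(resting_mouth_ratios, resting_proximities,
                         ar_factor=1.4, proximity_factor=1.3):
    """Derive thresholds from several resting-pose measurements.

    The median is robust to a few bad frames (e.g. a frame where the
    mouth was briefly open); the factors match the calibration loop.
    """
    ar_threshold = statistics.median(resting_mouth_ratios) * ar_factor
    proximity_threshold = statistics.median(resting_proximities) * proximity_factor
    return ar_threshold, proximity_threshold


# Hypothetical measurements; 0.90 is an outlier frame with the mouth open
ar_t, prox_t = calibrate_thresholds([0.30, 0.32, 0.31, 0.90], [250, 240, 260])
print(round(ar_t, 3), round(prox_t, 1))  # 0.441 325.0
```

In practice, you would append mouth_ar and proximity to two lists inside the `if status:` branch and call the helper once after the loop.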

When you’re satisfied with the values above, the only remaining piece is to trigger keypress events instead of just showing text on the screen.

Step 6: Keyboard Automation

The Dino cannot jump or crouch unless it is provided with input from the keyboard. We will use the output from the is_mouth_open() and face_proximity() functions to trigger keyboard keypress events.

For this, we will use PyAutoGUI, a simple API that lets a Python script control the mouse and keyboard to automate tasks. Let’s look at how it works and how you can use it to trigger key events.

Note: When running the following cells in Jupyter Notebook, don’t use Shift + Enter to run them; use the Run button in the toolbar instead. Also, if the keyboard keys misbehave, you can restart the kernel.

Here’s how you can trigger a right click with pyautogui:

```python
# This will open a context menu
pyautogui.click(button='right')
```

To trigger key presses, we can use the press() function.

```python
# Press the space bar. This will scroll down the page in some browsers
pyautogui.press('space')
```

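To press several keys, we can pass a list of key names to the press() function. The keys are pressed one after another, not held together as a combination (pyautogui.hotkey() does that).

```python
# Press 'shift' and then 'enter', one after the other
pyautogui.press(['shift', 'enter'])
```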

When you use pyautogui.keyDown() instead of pyautogui.press(), the specified key is held down until a pyautogui.keyUp() event takes place, which lets us trigger a longer keypress.

Let’s see how it works by holding the Shift key down:

```python
# Hold down the shift key
pyautogui.keyDown('shift')
```

The Shift key is still held down so now we only need to press the Enter key to run the next cell.

```python
# Press enter while the shift key is down; this will run the next code cell
pyautogui.press('enter')

# Release the shift key
pyautogui.keyUp('shift')
```

```python
# This will run automatically after running the two code cells above
print('I ran')
```

Step 7: Build The Final Application

The final application brings all of the components together. Based on the outputs of the is_mouth_open() and face_proximity() functions, keyboard events are triggered, causing the Dino to jump or crouch accordingly.

All you need to do is run the code below and go to chrome://dino in your browser. Make sure your threshold values are calibrated correctly for your current posture.

Note: The camera window may freeze while keys are being triggered, since the while loop pauses at that moment to press the key. Don’t worry about that: after the program launches, minimize the camera window, go to chrome://dino/ and start playing using your face and mouth.

```python
# Get the video feed from the webcam
cap = cv2.VideoCapture(0)

# Set the window to a normal one so we can resize it
cv2.namedWindow('Dino with OpenCV', cv2.WINDOW_NORMAL)

# By default, each PyAutoGUI call is followed by a 0.1 second pause
pyautogui.PAUSE = 0.0

# The fail-safe raises an exception if the mouse
# is moved to a corner of the screen
# pyautogui.FAILSAFE = False

# ar_threshold and proximity_threshold come from the calibration step (Step 5).
# If you skipped it, set them manually first, e.g.
# ar_threshold = 0.6 and proximity_threshold = 325

while True:
    # Read the frames
    ret, frame = cap.read()

    # Break if no frame is returned
    if not ret:
        break

    # Flip the frame horizontally (mirror view)
    frame = cv2.flip(frame, 1)

    # Detect the face in the frame
    face_image, box_coords, status, conf = face_detector(frame)

    if status:
        # Detect landmarks if a face is found
        landmark_image, landmarks = detect_landmarks(box_coords, frame)

        # Check if the mouth is open
        is_open, _ = is_mouth_open(landmarks, ar_threshold)

        # If the mouth is open, trigger a space key-down event to jump
        if is_open:
            pyautogui.keyDown('space')
            mouth_status = 'Open'
        else:
            # Otherwise release the space key
            pyautogui.keyUp('space')
            mouth_status = 'Closed'

        # Display the mouth status on the frame
        cv2.putText(frame, 'Mouth: {}'.format(mouth_status),
                    (20, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 127, 255), 2)

        # Check the proximity of the face
        is_closer, _ = face_proximity(box_coords, frame, proximity_threshold)

        # If the face is close enough, hold the down key to crouch
        if is_closer:
            pyautogui.keyDown('down')
        else:
            pyautogui.keyUp('down')

    # Display the frame
    cv2.imshow('Dino with OpenCV', frame)

    # Break the loop if the 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Make sure no key is left held down
pyautogui.keyUp('space')
pyautogui.keyUp('down')

# When everything is done, release the capture and destroy the window
cap.release()
cv2.destroyAllWindows()
```
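The loop above calls pyautogui.keyDown() or keyUp() on every frame, even when nothing has changed. A hedged alternative is to send an event only when the desired state of a key flips. KeyController is a hypothetical helper, not part of PyAutoGUI; the press and release callables are injected, so you can pass pyautogui.keyDown and pyautogui.keyUp, or test it without a keyboard as shown here.

```python
class KeyController:
    """Send key-down/key-up events only when a key's desired state changes.

    Hypothetical helper: press_down and release are callables such as
    pyautogui.keyDown and pyautogui.keyUp.
    """

    def __init__(self, press_down, release):
        self.press_down = press_down
        self.release = release
        self.held = set()

    def update(self, key, should_hold):
        # Press only on a released -> held transition
        if should_hold and key not in self.held:
            self.press_down(key)
            self.held.add(key)
        # Release only on a held -> released transition
        elif not should_hold and key in self.held:
            self.release(key)
            self.held.discard(key)

    def release_all(self):
        # Release every key that is still held, e.g. after the loop ends
        for key in list(self.held):
            self.release(key)
        self.held.clear()


events = []
ctrl = KeyController(lambda k: events.append(("down", k)),
                     lambda k: events.append(("up", k)))
for mouth_open in [False, True, True, True, False, False]:
    ctrl.update("space", mouth_open)
print(events)  # [('down', 'space'), ('up', 'space')]
```

Inside the game loop, you would create `keys = KeyController(pyautogui.keyDown, pyautogui.keyUp)` before the loop, call `keys.update('space', is_open)` and `keys.update('down', is_closer)` each frame, and call `keys.release_all()` after the loop. Six frames of mouth state produce just two key events instead of six.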

Summary:

So that was it! In this tutorial, you learned how to use facial expressions and movements to control a simple game. One important thing covered in this post was how to efficiently trigger events based on simple facial expressions and movements.

The other thing we learned was how to automate mouse and keyboard controls from a Python script using a library called PyAutoGUI.

Now, obviously, using mouth controls is a much cooler way to play this game, but you probably won’t beat the record you set while playing with just the keyboard.

This teaches a valuable lesson: not everything is worth making vision-controlled. Whenever you apply AI to a project or problem, you should first ask whether the added value will be worth the effort. AI is a tool, but it’s not always the right tool for every problem.

Still, it’s worth mentioning that you can take the same application I’ve created here and use it to control a different game or application. Maybe the use case you come up with will actually be beneficial.

If you do end up controlling a unique application, let me know either in the comments here or on LinkedIn.
