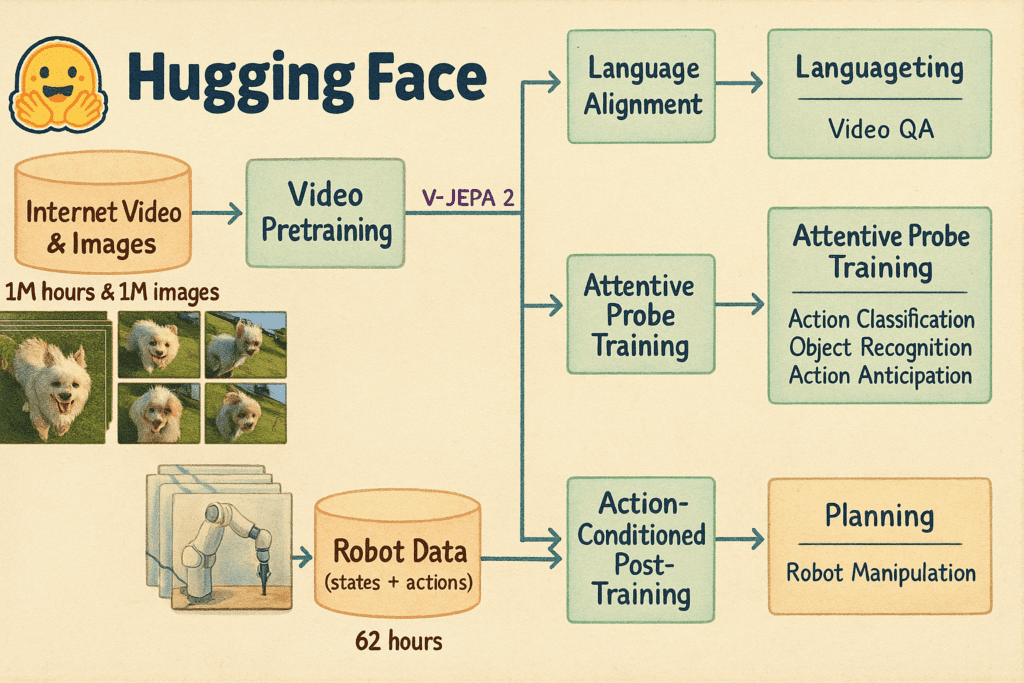

The domain of video understanding is rapidly evolving, with models capable of interpreting complex actions and interactions within video streams. Meta AI’s VJEPA-2 (Video Joint Embedding Predictive Architecture) stands out as a powerful self-supervised model, demonstrating impressive capabilities in learning nuanced visual representations for video.

This post explores the practical application of VJEPA-2 for video classification. It covers the dataset behind the fine-tuned model, walks through an inference script, describes an implementation hurdle we ran into (and how we resolved it), and discusses video frame processing considerations, especially the impact of temporal window size.

- VJEPA-2 trained with Something-Something-v2 Dataset

- VJEPA-2 Video Classification Inference Script Walkthrough

- Conclusion

- References

VJEPA-2 trained with Something-Something-v2 Dataset

For video understanding models like VJEPA-2, particularly when aiming for action recognition tasks, the Something-Something-v2 (SSv2) dataset stands as a landmark resource. Its design philosophy and scale are critical to training models that can comprehend dynamic scenes.

What Makes SSv2 Special?

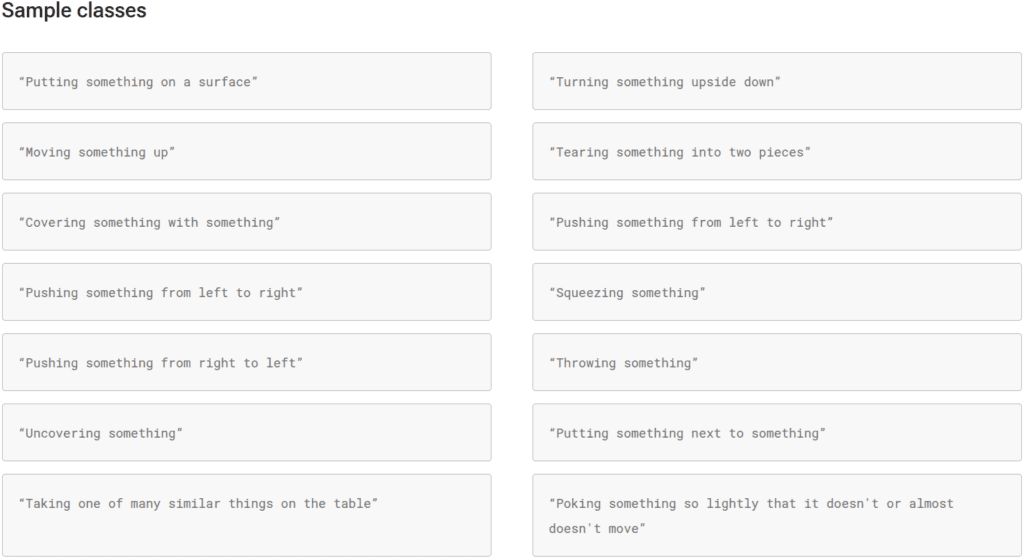

Originally created by Twenty Billion Neurons (TwentyBN) and now distributed by Qualcomm, the Something-Something-v2 dataset was designed to push the boundaries of action recognition by focusing on fine-grained, temporally dependent human-object interactions. Sample classes from the dataset are shown in the accompanying images. Its main characteristics are:

- Scale and Diversity: SSv2 boasts over 220,000 videos, categorized into 174 distinct action classes. These aren’t generic labels like “sports” or “cooking,” but highly specific actions such as “Folding something,” “Putting something onto a surface,” “Pretending to catch something,” or “Turning something upside down.” This sheer volume and variety provide the rich statistical patterns necessary for deep learning models.

- Focus on Interactions, Not Just Objects: A key differentiator of SSv2 is its emphasis on the interaction itself. Many datasets might help a model recognize “a hand” and “a cup.” SSv2, however, trains models to differentiate between “picking up a cup,” “putting down a cup,” “sliding a cup,” and “pretending to drink from a cup.” This forces the model to learn the subtle temporal cues and motion dynamics that define an action, rather than just identifying co-occurring objects.

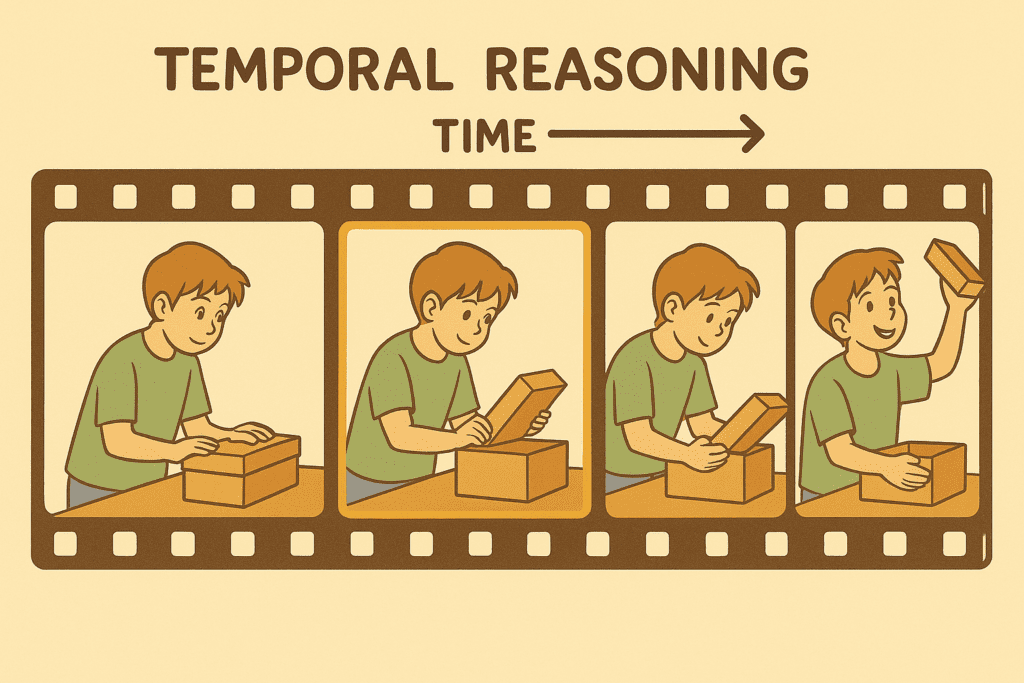

- Temporal Reasoning is Key: Many actions in SSv2 are defined by their temporal structure. The beginning, middle, and end of the video clip are all crucial for correct classification. For instance, “Opening something” looks very different at the start versus the end. This makes it an ideal training ground for models like VJEPA-2, which are designed to capture how scenes evolve over time. VJEPA-2’s predictive learning approach, in which the model predicts the representations of hidden parts of a video from the visible context, fits the demands of such a temporally rich dataset well.

Why is SSv2 Ideal for Models like VJEPA-2?

- Rich Supervision for Fine-Grained Tasks: While VJEPA-2 itself can be trained self-supervised on unlabeled video, fine-tuning it on a labeled dataset like SSv2 allows it to specialize its learned representations for specific, human-defined action categories. The detailed labels of SSv2 provide strong supervisory signals.

- Generalization: By learning to distinguish between a wide array of fundamental human-object interactions, models trained on SSv2 are more likely to generalize to new, unseen actions or variations. They learn the “grammar” of actions.

- Benchmarking Progress: SSv2 serves as a crucial benchmark in the academic and research community. Performance on this dataset is a widely accepted metric for evaluating the progress of video understanding models.

VJEPA-2 Video Classification Inference Script Walkthrough

Let’s walk through a Python script that performs real-time video classification using the VJEPA2ForVideoClassification model from the Hugging Face transformers library. The script captures video from a webcam, groups the frames into short clips, and predicts an action label for each clip.

Initial Imports and Crucial Hyperparameters: NUM_FRAMES and Resolution

    import cv2
    import torch
    from collections import deque

    # Constants
    NUM_FRAMES = 7  # Critical hyperparameter for temporal context
    FRAME_WIDTH, FRAME_HEIGHT = 256, 256

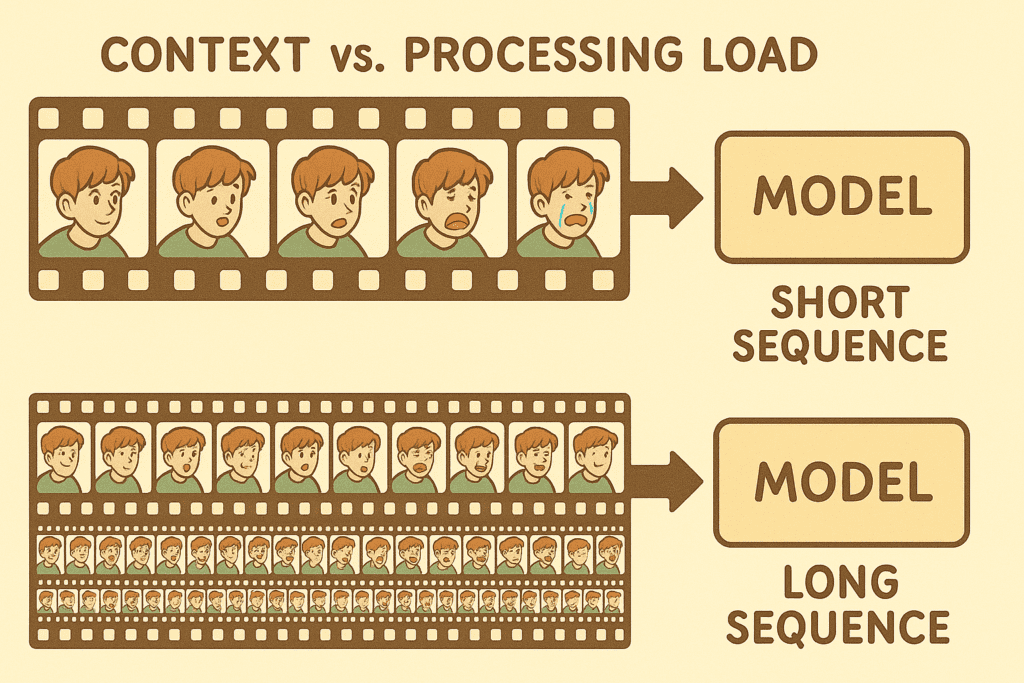

NUM_FRAMES: This constant sets the number of consecutive video frames that are processed together as a single input to the model. The choice of NUM_FRAMES strongly affects both the model’s ability to understand actions and the real-time performance of the system.

- Longer Context Window (e.g., NUM_FRAMES = 32 or 64): A larger value gives the model a more extensive temporal context. In theory, this allows a better understanding of complex actions that unfold over longer durations. However, in our experiments, a significantly larger NUM_FRAMES (like 64) led to a noticeable increase in processing time for each prediction. The model has to handle a longer clip, which is computationally more intensive. This increased load can cause the webcam feed in the OpenCV window to stutter or freeze momentarily each time a prediction is made, because the main thread waits for the model inference to complete.

- Shorter Context Window (e.g., NUM_FRAMES = 1 or 2): Conversely, a very small number of frames drastically reduces the processing load, leading to a smoother video display. However, this comes at a significant cost to prediction quality. With only one or two frames, the model lacks sufficient context to discern meaningful actions. In our experiments, predictions often became vague because the model could not capture the dynamics of an event. Most actions are inherently temporal sequences, not static images.

- Finding the Balance: The value of 7 used in this script (or similar values like 8 or 16 often seen in video model configurations) represents a common trade-off. It aims to provide enough temporal context for recognizing many short-to-medium duration actions without adding so much latency that the system becomes unusable in real time. The optimal NUM_FRAMES is application-dependent and should be tuned based on the specific actions being targeted, the model’s architecture, and the available computational resources.
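
The stutter described above comes from running inference on the same thread that refreshes the window. One possible mitigation, which the original script does not use, is to hand clips to a background worker. The sketch below uses only the standard library; `slow_classifier` is a hypothetical stand-in for the model call, and `submit_clip` and `inference_worker` are illustrative names, not part of any library.

```python
import queue
import threading
import time

def slow_classifier(clip):
    """Hypothetical stand-in for model inference; sleeps to mimic latency."""
    time.sleep(0.05)
    return f"label-for-{len(clip)}-frames"

def inference_worker(clips, results, classify):
    """Consume clips until a None sentinel arrives; publish one label per clip."""
    while True:
        clip = clips.get()
        if clip is None:
            break
        results.put(classify(clip))

clips = queue.Queue(maxsize=1)  # at most one clip may wait for the worker
results = queue.Queue()
worker = threading.Thread(
    target=inference_worker,
    args=(clips, results, slow_classifier),
    daemon=True,
)
worker.start()

def submit_clip(clip):
    """Hand a clip to the worker without blocking; return False if one is already waiting."""
    try:
        clips.put_nowait(clip)
        return True
    except queue.Full:
        return False
```

In a capture loop, the main thread would call `submit_clip(list(frame_buffer))` and poll `results` with `get_nowait()`, so the display keeps refreshing while a prediction is pending. This is an untested idea for this particular script; how much it helps depends on the hardware and on the model call itself.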

FRAME_WIDTH, FRAME_HEIGHT: Set to 256x256, these define the spatial resolution to which each frame is resized. This specific resolution is mandated by the pre-trained facebook/vjepa2-vitl-fpc16-256-ssv2 model. Using dimensions inconsistent with the model’s training regime would lead to input mismatches and degraded performance.
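
To make the effect of these two constants concrete, the following back-of-the-envelope sketch computes how much real time a clip spans and how many values it contains. The 30 FPS capture rate is an assumption (the post does not state the webcam frame rate), and both helper functions are illustrative names.

```python
NUM_FRAMES = 7
FRAME_WIDTH, FRAME_HEIGHT = 256, 256
ASSUMED_FPS = 30  # assumption: typical webcam rate, not stated in the post

def clip_duration_seconds(num_frames, fps):
    """Real-time span covered by one clip of consecutive frames."""
    return num_frames / fps

def clip_value_count(num_frames, width, height, channels=3):
    """Number of scalar values in one clip of RGB frames."""
    return num_frames * channels * height * width

for n in (2, 7, 16, 64):
    seconds = clip_duration_seconds(n, ASSUMED_FPS)
    values = clip_value_count(n, FRAME_WIDTH, FRAME_HEIGHT)
    print(f"{n:>2} frames: {seconds:.2f} s of video, {values:,} values per clip")
```

Under this assumption, 7 frames cover roughly a quarter of a second, while 64 frames cover just over two seconds and carry about nine times as much data per prediction.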

Addressing a Common OpenCV Display Anomaly

    # Start GUI thread (important fix!)
    # cv2.startWindowThread()  # Kept commented as in the original script
    cv2.namedWindow("Display", cv2.WINDOW_AUTOSIZE)

Here, we initialize an OpenCV window. In our experiments, creating the OpenCV window before importing transformers was key to avoiding GUI freezes on the Linux setups we tested.

    # Delay transformer imports until after the OpenCV window is initialized
    from transformers import AutoVideoProcessor, VJEPA2ForVideoClassification

This delayed import is the practical workaround for the display issue described above. For deeper insight into such library interactions, community forums and the official Hugging Face Transformers GitHub repository are valuable resources. In particular, refer to this GitHub issue to understand the cv2.imshow() inconsistency in more depth.

Loading the Pre-trained VJEPA-2 Video Classification Model and Processor

    # Load model and processor
    processor = AutoVideoProcessor.from_pretrained("facebook/vjepa2-vitl-fpc16-256-ssv2")
    model = VJEPA2ForVideoClassification.from_pretrained("facebook/vjepa2-vitl-fpc16-256-ssv2").to("cuda").eval()

We load the specified VJEPA-2 model and its corresponding processor, move the model to the GPU with .to("cuda"), and set it to evaluation mode with .eval().

Webcam Initialization and Frame Buffer Setup with deque

    # Open webcam
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        print("Error: Cannot open webcam")
        raise SystemExit(1)  # Stop the script; works without the interactive-only exit() helper

    frame_buffer = deque(maxlen=NUM_FRAMES)
    frame_count = 0

Webcam access is initialized. The frame_buffer = deque(maxlen=NUM_FRAMES) is crucial. This double-ended queue efficiently stores the most recent NUM_FRAMES, automatically discarding the oldest frame when a new one is added and the buffer is full. This creates the sliding window of frames necessary for continuous video processing.
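
The eviction behavior of a bounded deque is easy to check in isolation. In this small sketch, integers stand in for frames so the contents of the window are visible.

```python
from collections import deque

NUM_FRAMES = 7
frame_buffer = deque(maxlen=NUM_FRAMES)

# Integers stand in for webcam frames
for frame_id in range(10):
    frame_buffer.append(frame_id)  # once full, the oldest entry is dropped

window = list(frame_buffer)
print(window)  # [3, 4, 5, 6, 7, 8, 9]
```

After ten appends, only the seven most recent "frames" remain, which is exactly the sliding window the script relies on.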

Preprocessing the Video Clip

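    def preprocess_clip(frames):
        # OpenCV delivers frames in BGR order; convert to RGB before handing them to the processor
        resized = [
            cv2.cvtColor(cv2.resize(f, (FRAME_WIDTH, FRAME_HEIGHT)), cv2.COLOR_BGR2RGB)
            for f in frames
        ]
        return processor(resized, return_tensors="pt").to("cuda")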

This function resizes each frame in the current clip to 256x256 and then uses the processor to convert them into the tensor format required by the model, including normalization and batching.

The Main Inference Loop: Capture, Process, Predict

    try:
        while True:
            ret, frame = cap.read()
            if not ret:
                print("Failed to capture frame")
                break

            cv2.imshow("Display", frame)
            frame_count += 1
            frame_buffer.append(frame.copy())

            if len(frame_buffer) == NUM_FRAMES:
                inputs = preprocess_clip(list(frame_buffer))
                with torch.no_grad():
                    outputs = model(**inputs)
                logits = outputs.logits
                predicted_label_id = logits.argmax(-1).item()
                label = model.config.id2label[predicted_label_id]
                print(f"Prediction: {label}")
                frame_buffer.clear()  # For distinct, non-overlapping clip predictions

            if cv2.waitKey(1) & 0xFF == ord('q'):
                break
    except KeyboardInterrupt:
        print("Interrupted by user")
    finally:
        cap.release()
        cv2.destroyAllWindows()
        print("Webcam released and windows closed.")

The core loop reads frames, displays them, and adds them to the frame_buffer. Once NUM_FRAMES are collected, they are preprocessed and fed to the model for prediction. The frame_buffer.clear() ensures that predictions are made on entirely new sets of frames. For continuous, overlapping predictions, this line would be omitted.
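
Simply omitting the clear() call would trigger a prediction on every frame once the buffer is full, which may be more inference than a real-time loop can afford. A middle ground is to predict every few frames. The sketch below illustrates that idea with a stride parameter; `sliding_predictions` and `describe_window` are hypothetical helpers, and a string-building function stands in for the model so the window boundaries are visible.

```python
from collections import deque

def sliding_predictions(frames, num_frames, stride, classify):
    """Yield (index_of_last_frame, label) for full windows, advancing by `stride` frames."""
    buffer = deque(maxlen=num_frames)
    since_last = 0  # frames seen since the previous prediction
    for index, frame in enumerate(frames):
        buffer.append(frame)
        since_last += 1
        if len(buffer) == num_frames and since_last >= stride:
            yield index, classify(list(buffer))
            since_last = 0

def describe_window(clip):
    """Hypothetical stand-in for the model: report the first and last frame of the clip."""
    return f"{clip[0]}-{clip[-1]}"

frames = range(14)  # integers stand in for webcam frames
print(list(sliding_predictions(frames, 7, 7, describe_window)))  # non-overlapping windows
print(list(sliding_predictions(frames, 7, 3, describe_window)))  # overlapping windows
```

With a stride equal to the window length, the windows do not overlap, matching the behavior of frame_buffer.clear(). With a stride of 1, a prediction is made on every new frame once the buffer is full. Intermediate strides trade prediction frequency against compute.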

Conclusion

VJEPA-2, especially when leveraged with rich datasets like Something-Something-v2, offers powerful capabilities for interpreting video. However, translating this power into practical, real-time applications requires careful attention to technical details. This includes managing library interactions, adhering to model-specific input requirements like frame resolution, and critically, tuning hyperparameters such as NUM_FRAMES.

Understanding the trade-offs associated with the temporal window size—balancing contextual richness against computational load and prediction latency—is paramount for developing responsive and accurate video understanding systems. By navigating these nuances, developers can truly unlock the potential of advanced video AI.
