The rapid advancement of Vision-Language Models (VLMs) has significantly improved the ability of AI systems to interact with graphical user interfaces (GUIs). However, existing models often struggle with action grounding. Models like GPT-4V cannot accurately map actions to specific UI elements across diverse applications and operating systems. To bridge this gap, Microsoft OmniParser introduces a pure vision-based screen parsing approach that extracts structured elements from UI screenshots, enhancing the action prediction capabilities of large multimodal models like GPT-4V.

OmniParser integrates multiple fine-tuned models into a single pipeline that detects interactable regions, describes UI elements, and overlays structured bounding boxes on the UI screenshot. This robust methodology allows AI agents to perform UI tasks without relying on additional metadata such as HTML or view hierarchies. This article provides an in-depth analysis of OmniParser’s methodology, pipeline, training strategies, and its impact on Vision-Language Models.

OmniParser Methodology

OmniParser employs a structured screen parsing approach consisting of three major components:

- Interactable Region Detection

- Icon and Text Semantics Extraction

- Bounding Box Integration with Unique Labels

Each component plays a crucial role in translating UI screenshots into actionable structured data.

Interactable Region Detection

OmniParser’s first step involves detecting interactable elements such as icons, buttons, text fields, and clickable links.

Instead of relying on predefined HTML structures, it uses a fine-tuned object detection model trained on a curated dataset of UI elements extracted from popular web pages. The Set-of-Marks (SoM) approach is used to overlay bounding boxes on detected elements, ensuring precise action mapping.

Key features of the detection model:

- Trained on 67K unique UI screenshots with bounding boxes derived from DOM trees.

- Integration with OCR to extract bounding boxes for text elements.

- Adaptive bounding box merging to remove redundant overlaps and ensure clarity.

- YOLOv8 Nano model fine-tuned for optimized screen parsing performance.
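The overlap-removal idea in the list above can be sketched in a few lines of Python. This is only an illustration, not OmniParser's actual code: the helper names, the greedy keep-first strategy, and the 0.9 IoU threshold are all assumptions made for this example.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def area(box: Box) -> float:
    """Area of a box; degenerate (inverted) boxes count as zero."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def remove_overlaps(boxes: List[Box], threshold: float = 0.9) -> List[Box]:
    """Greedy pass: keep a box unless it overlaps an already-kept box above the threshold.

    The threshold value is an assumption for illustration.
    """
    kept: List[Box] = []
    for box in boxes:
        if all(iou(box, k) <= threshold for k in kept):
            kept.append(box)
    return kept
```

In a real pipeline the input would be the combined OCR and detector boxes, and the order of the list decides which of two near-duplicate boxes survives.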

Incorporating Local Semantics for Functionality

Once interactable elements are identified, OmniParser enhances their representation by generating localized semantic descriptions. This process mitigates the cognitive burden on GPT-4V by enriching the UI understanding with functional descriptions.

The following are the models and methodologies used for icon description and icon identification:

- Fine-tuned Florence model for icon descriptions, replacing the earlier BLIP-2 approach.

- PaddleOCR for extracting text from UI elements, including text below icons.

- Context-aware icon and UI element description generation to distinguish between similar-looking components in different contexts.

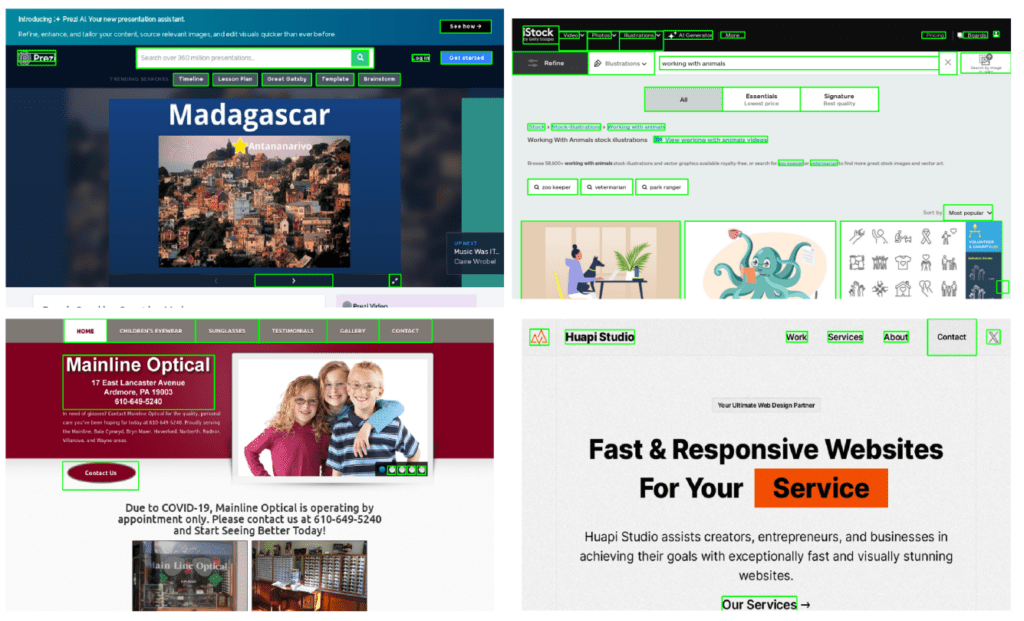

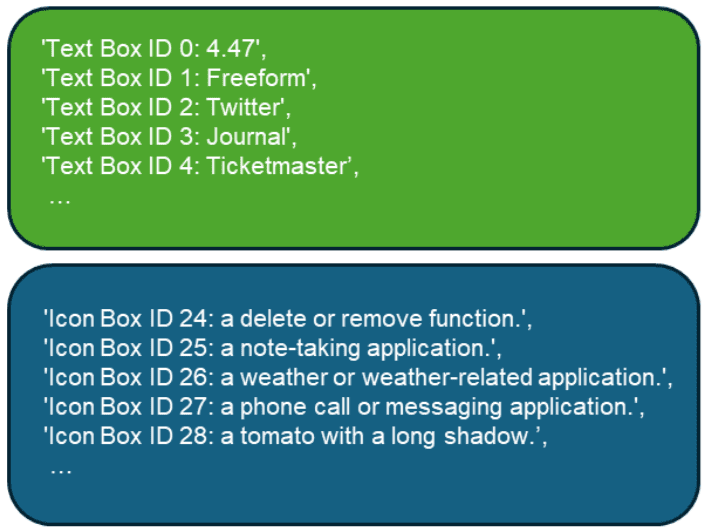

Structured Representation of UI Screens

OmniParser produces a structured DOM-like representation of UI elements, which includes:

- UI screenshots overlaid with bounding boxes and numeric IDs.

- Functional descriptions of detected icons and text elements.

- Consolidated screen parsing output for direct use in downstream action prediction models.

The following image shows what the entire screen icon detection and internal icon parsing and descriptions look like.

Model Pipeline and Training Strategy

The OmniParser pipeline consists of multiple trained models, each contributing to robust screen parsing. The training pipeline includes dataset curation, fine-tuning of detection and description models, and post-processing strategies.

Dataset Curation

To ensure high accuracy in screen parsing, Microsoft curated datasets for both detection and description tasks:

- Interactable Region Detection Dataset: Collected from 100K popular webpages, extracting bounding boxes from DOM structures.

- Icon Description Dataset: Created using GPT-4o. The model assisted in the annotation of 7K icon-description pairs, ensuring diverse UI element representations.

Model Training

- Detection Model: Fine-tuned YOLOv8 Nano on the curated detection dataset to efficiently detect and label interactable UI elements across different platforms and applications.

- OCR Module: Utilizes PaddleOCR to accurately extract and merge text descriptions from UI elements, including textual information present below icons.

- Icon Description Model: The fine-tuned Florence model generates high-quality functional descriptions for UI icons, images, graphs, and tables, ensuring accurate interpretation of screen elements in various application environments.

Performance and Evaluation

The authors evaluated OmniParser on multiple benchmarks, demonstrating superior performance over existing models.

SeeAssign Task

A benchmark designed to test bounding box ID prediction accuracy across mobile, desktop, and web platforms.

There is a task associated with each screenshot. After the screen parsing and icon detection step, the GPT-4V model is fed the output along with the task. It has to correctly predict which box ID to click.

OmniParser improved GPT-4V’s accuracy from 70.5% to 93.8% by incorporating local semantics.
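To make the task setup concrete, here is a minimal sketch of how the parsed elements and a task could be turned into a text prompt, and how a box ID could be read back from the model's reply. The prompt wording, the reply format, and both function names are hypothetical; the benchmark's actual prompt is not given in this article.

```python
import re
from typing import Dict, Optional


def build_prompt(task: str, elements: Dict[int, str]) -> str:
    """List every labeled box with its description, followed by the instruction."""
    lines = [f"Task: {task}", "Labeled elements on the screen:"]
    for box_id, description in sorted(elements.items()):
        lines.append(f"  Box ID {box_id}: {description}")
    lines.append("Reply with 'Box ID: <number>' for the element to click.")
    return "\n".join(lines)


def parse_box_id(reply: str) -> Optional[int]:
    """Extract the predicted box ID from a reply, or None if no ID is found."""
    match = re.search(r"Box ID:\s*(\d+)", reply)
    return int(match.group(1)) if match else None
```

The prompt would be sent to the vision-language model together with the annotated screenshot, and the parsed ID compared against the ground-truth box.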

ScreenSpot Benchmark

The ScreenSpot dataset is a benchmark consisting of over 600 interface screenshots from mobile, desktop, and web platforms. OmniParser’s structured screen parsing approach significantly outperformed baselines in UI understanding tasks:

- Text/icon widget recognition accuracy improved by over 20% compared to GPT-4V.

- Surpassed finetuned GUI models (e.g., SeeClick, CogAgent, Fuyu) on multi-platform UI tasks.

Mind2Web Benchmark

Mind2Web is a benchmark designed for evaluating web navigation models. It consists of tasks that require models to interact with and navigate through various real-world websites, simulating user interactions. Unlike traditional approaches relying on HTML parsing, OmniParser integrates its screen parsing results with GPT-4V, allowing for a purely vision-based interaction approach. This methodology:

- Outperformed HTML-based methods using only UI screenshots.

- Improved task success rate by 4.1%-5.2% in cross-website and cross-domain tasks, demonstrating OmniParser’s ability to generalize across diverse web environments.

AITW Benchmark (Mobile UI Task Navigation)

The Android in the Wild (AITW) benchmark is designed to evaluate AI-driven interactions with mobile applications. It includes a collection of real-world mobile UI tasks requiring models to detect, interpret, and interact with various UI components across different applications and platforms. By leveraging OmniParser’s vision-based parsing pipeline, GPT-4V achieved:

- 4.7% increase over GPT-4V baselines in mobile app navigation.

- Successful detection and interaction with UI elements across different Android applications without relying on additional metadata, such as Android view hierarchies.

Discussion and Future Improvements

Despite its strong performance, OmniParser faces challenges such as:

- Ambiguity in repeated UI elements (e.g., multiple “More” buttons on the same screen).

- Coarse bounding box predictions affecting fine-grained click targets.

- Contextual misinterpretation of icons due to isolated description generation.

Future enhancements include:

- Training a joint OCR-Interactable Detection model to improve clickable text localization.

- Context-aware icon description models leveraging full-screen context.

- Adaptive hierarchical bounding box refinement to improve interaction accuracy.

UI Parsing Inference using OmniParser

The official OmniParser repository provides a Gradio demo for UI parsing. We can upload any UI image and get all the detection results for icons, text boxes, and other UI elements.

We will run the demo here to get first-hand experience of how OmniParser works.

Setting Up OmniParser

Make sure you have either Anaconda or Miniconda installed on your system before moving further with the installation steps. The following steps were tested on an Ubuntu machine.

Clone the OmniParser repository:

git clone https://github.com/microsoft/OmniParser.git

cd OmniParser

Create a new conda environment and activate it:

conda create -n "omni" python==3.12

conda activate omni

Install the requirements:

pip install -r requirements.txt

This will install all the necessary libraries.

The final step is to download the pretrained models. Run the following command in your terminal inside the OmniParser directory.

for f in icon_detect/{train_args.yaml,model.pt,model.yaml} icon_caption/{config.json,generation_config.json,model.safetensors}; do huggingface-cli download microsoft/OmniParser-v2.0 "$f" --local-dir weights; done

mv weights/icon_caption weights/icon_caption_florence

This downloads the YOLOv8 Nano model trained for icon detection and the fine-tuned Florence model for icon caption generation.
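Before launching the demo, it can help to confirm that the files ended up where the commands above put them. The following is a small optional check, not part of the repository; the function name is made up for this example, and the file list simply mirrors the download and rename commands shown above.

```python
from pathlib import Path
from typing import List

# Paths implied by the download loop and the `mv` command above.
EXPECTED_FILES = [
    "icon_detect/train_args.yaml",
    "icon_detect/model.pt",
    "icon_detect/model.yaml",
    "icon_caption_florence/config.json",
    "icon_caption_florence/generation_config.json",
    "icon_caption_florence/model.safetensors",
]


def missing_weights(weights_dir: str = "weights") -> List[str]:
    """Return the expected files that are not present under weights_dir."""
    root = Path(weights_dir)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]
```

Calling `missing_weights()` from inside the OmniParser directory should return an empty list if the download and rename both succeeded.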

We can launch the Gradio demo using the following command:

python gradio_demo.py

This will open the Gradio demo app where we can upload images and parse the screen elements.

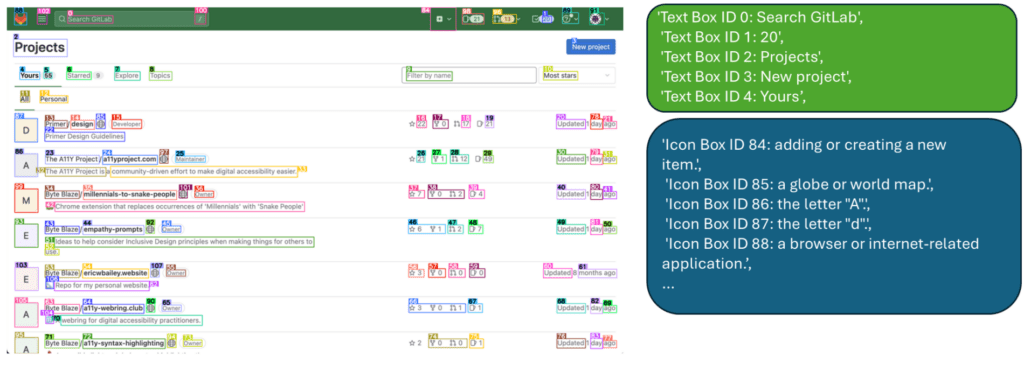

With each UI element detection result, the demo also provides a text result of the parsed detection. This helps us understand how well the combination of YOLO, PaddleOCR, and Florence understands the image.

Let’s discuss some results.

The first result that we are discussing here is the parsed result of a Google Document page. It has a combination of text, headings, icons, and document tool elements. The YOLOv8 model did a good job of detecting most of the items including the Table of Contents on the left tab. However, in some instances, it partially detects the line of text.

Let’s take a look at the parsed text result returned by the pipeline.

icon 0: {'type': 'text', 'bbox': [0.015729453414678574, 0.007022472098469734, 0.05741250514984131, 0.02738764137029648], 'interactivity': False, 'content': 'OmniParser', 'source': 'box_ocr_content_ocr'}

icon 1: {'type': 'text', 'bbox': [0.017695635557174683, 0.023876404389739037, 0.08847817778587341, 0.042134832590818405], 'interactivity': False, 'content': 'File Edit View Insert', 'source': 'box_ocr_content_ocr'}

icon 2: {'type': 'text', 'bbox': [0.13212740421295166, 0.02738764137029648, 0.1635863184928894, 0.04073033854365349], 'interactivity': False, 'content': 'Extensions', 'source': 'box_ocr_content_ocr'}

.

.

.

icon 50: {'type': 'icon', 'bbox': [0.019255181774497032, 0.6464248895645142, 0.10337236523628235, 0.6727869510650635], 'interactivity': True, 'content': 'Key Features ', 'source': 'box_yolo_content_ocr'}

icon 51: {'type': 'icon', 'bbox': [0.022174695506691933, 0.39646413922309875, 0.10094291716814041, 0.41684794425964355], 'interactivity': True, 'content': 'Performance and Evaluat. ', 'source': 'box_yolo_content_ocr'}

Each element is recognized as either text or an icon. For text boxes, the pipeline also returns the content, and it does the same for icons that contain text. The interactivity attribute indicates whether an element is interactable, which matters most for icons.
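For downstream use, each printed line can be turned back into a Python dictionary and its box converted into a pixel position to click. The sketch below assumes that `bbox` holds `[x1, y1, x2, y2]` as fractions of the image width and height, which is what the values above suggest; the helper names and the 1920x1080 screen size are made up for this example.

```python
import ast
from typing import Sequence, Tuple


def parse_line(line: str) -> Tuple[int, dict]:
    """Split 'icon N: {...}' into the numeric ID and the element dictionary."""
    prefix, _, payload = line.partition(": ")
    index = int(prefix.split()[1])
    return index, ast.literal_eval(payload)


def click_point(bbox: Sequence[float], width: int, height: int) -> Tuple[int, int]:
    """Center of a normalized [x1, y1, x2, y2] box in pixel coordinates (assumed format)."""
    x1, y1, x2, y2 = bbox
    return round((x1 + x2) / 2 * width), round((y1 + y2) / 2 * height)


# One line copied from the parsed output above.
line = (
    "icon 2: {'type': 'text', 'bbox': [0.13212740421295166, 0.02738764137029648, "
    "0.1635863184928894, 0.04073033854365349], 'interactivity': False, "
    "'content': 'Extensions', 'source': 'box_ocr_content_ocr'}"
)
index, element = parse_line(line)
print(index, element["content"], click_point(element["bbox"], 1920, 1080))
```

`ast.literal_eval` is used instead of `eval` because it only accepts Python literals, which is all these lines contain.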

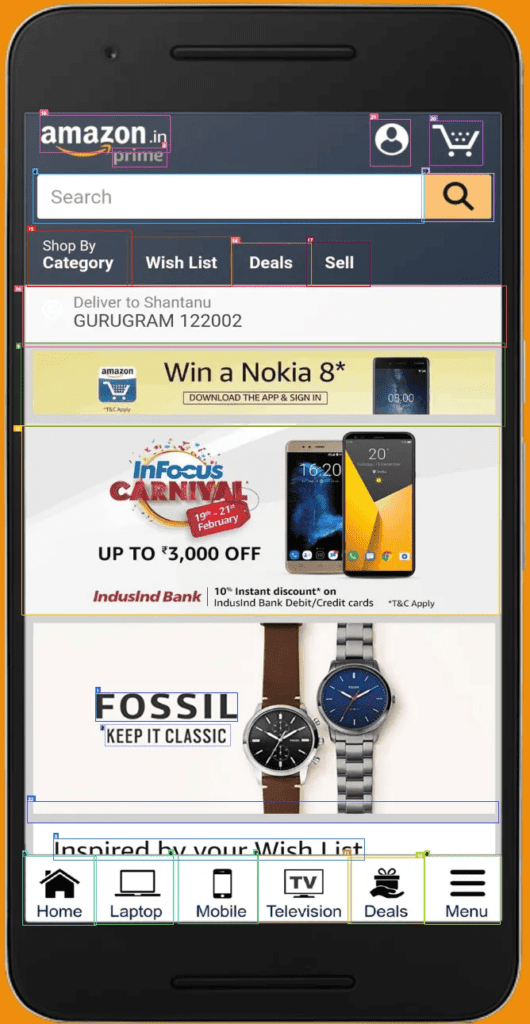

Let’s check another example containing a UI from a mobile application.

Following are the parsed contents.

icon 0: {'type': 'text', 'bbox': [0.21107783913612366, 0.14407436549663544, 0.314371258020401, 0.16266460716724396], 'interactivity': False, 'content': 'prime', 'source': 'box_ocr_content_ocr'}

icon 1: {'type': 'text', 'bbox': [0.18113772571086884, 0.6762199997901917, 0.44760480523109436, 0.7041053175926208], 'interactivity': False, 'content': 'FOSSIL', 'source': 'box_ocr_content_ocr'}

icon 2: {'type': 'text', 'bbox': [0.19760479032993317, 0.7079783082008362, 0.4341317415237427, 0.7288923263549805], 'interactivity': False, 'content': 'KEEP IT CLASSIC', 'source': 'box_ocr_content_ocr'}

icon 3: {'type': 'text', 'bbox': [0.1002994030714035, 0.8187451362609863, 0.6871257424354553, 0.8396591544151306], 'interactivity': False, 'content': 'Insnired hv vour Wish I ist', 'source': 'box_ocr_content_ocr'}

icon 4: {'type': 'icon', 'bbox': [0.06284164637327194, 0.1702609360218048, 0.799872875213623, 0.21801446378231049], 'interactivity': True, 'content': 'Search ', 'source': 'box_yolo_content_ocr'}

icon 5: {'type': 'icon', 'bbox': [0.04233129322528839, 0.8364495038986206, 0.18125209212303162, 0.9036354422569275], 'interactivity': True, 'content': 'Home ', 'source': 'box_yolo_content_ocr'}

icon 6: {'type': 'icon', 'bbox': [0.177901029586792, 0.8357771635055542, 0.32755449414253235, 0.9026133418083191], 'interactivity': True, 'content': 'Laptop ', 'source': 'box_yolo_content_ocr'}

icon 7: {'type': 'icon', 'bbox': [0.33590322732925415, 0.835365891456604, 0.48725128173828125, 0.9017225503921509], 'interactivity': True, 'content': 'Mobile ', 'source': 'box_yolo_content_ocr'}

icon 8: {'type': 'icon', 'bbox': [0.8018407821655273, 0.8358777165412903, 0.9442757368087769, 0.9030740261077881], 'interactivity': True, 'content': 'Menu ', 'source': 'box_yolo_content_ocr'}

icon 9: {'type': 'icon', 'bbox': [0.03968415409326553, 0.33482760190963745, 0.9533154368400574, 0.41663098335266113], 'interactivity': True, 'content': 'amazon Win a Nokia 8* DOWNLOAD THE APP & SIGN IN 08:00 ', 'source': 'box_yolo_content_ocr'}

icon 10: {'type': 'icon', 'bbox': [0.6559209823608398, 0.8375254273414612, 0.8008942008018494, 0.901656985282898], 'interactivity': True, 'content': 'Deals ', 'source': 'box_yolo_content_ocr'}

icon 11: {'type': 'icon', 'bbox': [0.4851638972759247, 0.8353713154792786, 0.6618077754974365, 0.901587963104248], 'interactivity': True, 'content': 'TV Television ', 'source': 'box_yolo_content_ocr'}

icon 12: {'type': 'icon', 'bbox': [0.04066063091158867, 0.41592150926589966, 0.9421791434288025, 0.6008225679397583], 'interactivity': True, 'content': '20 CARNIV 19th 21 February UPTO3,000OFF InduslndBank 10Instant discount on Indusind Bank Debit/Credit cards *T&CApply ', 'source': 'box_yolo_content_ocr'}

icon 13: {'type': 'icon', 'bbox': [0.2405502200126648, 0.23145084083080292, 0.4399498701095581, 0.2801952064037323], 'interactivity': True, 'content': 'Wish List ', 'source': 'box_yolo_content_ocr'}

icon 14: {'type': 'icon', 'bbox': [0.43703392148017883, 0.2374911904335022, 0.587538480758667, 0.2797139883041382], 'interactivity': True, 'content': 'Deals ', 'source': 'box_yolo_content_ocr'}

icon 15: {'type': 'icon', 'bbox': [0.05106184259057045, 0.2260615974664688, 0.24924203753471375, 0.279988557100296], 'interactivity': True, 'content': 'Shop By Category ', 'source': 'box_yolo_content_ocr'}

icon 16: {'type': 'icon', 'bbox': [0.042542532086372375, 0.27909427881240845, 0.9558238983154297, 0.3390219211578369], 'interactivity': True, 'content': 'Deliver to Shantanu GURUGRAM 122002 ', 'source': 'box_yolo_content_ocr'}

icon 17: {'type': 'icon', 'bbox': [0.5791370272636414, 0.2366282194852829, 0.6990264058113098, 0.27986839413642883], 'interactivity': True, 'content': 'Sell ', 'source': 'box_yolo_content_ocr'}

icon 18: {'type': 'icon', 'bbox': [0.0757032185792923, 0.11301160603761673, 0.3205451965332031, 0.1488722413778305], 'interactivity': True, 'content': 'amazon.in ', 'source': 'box_yolo_content_ocr'}

icon 19: {'type': 'icon', 'bbox': [0.79616779088974, 0.1689583659172058, 0.931917130947113, 0.21612757444381714], 'interactivity': True, 'content': 'Find', 'source': 'box_yolo_content_yolo'}

icon 20: {'type': 'icon', 'bbox': [0.8107972145080566, 0.11833497136831284, 0.9111638069152832, 0.16139522194862366], 'interactivity': True, 'content': 'shopping cart', 'source': 'box_yolo_content_yolo'}

icon 21: {'type': 'icon', 'bbox': [0.6982802748680115, 0.1165347620844841, 0.7747448086738586, 0.16223861277103424], 'interactivity': True, 'content': 'User profile', 'source': 'box_yolo_content_yolo'}

icon 22: {'type': 'icon', 'bbox': [0.05113839730620384, 0.7825599312782288, 0.9413369297981262, 0.8036486506462097], 'interactivity': True, 'content': 'Slide', 'source': 'box_yolo_content_yolo'}

The above represents a more real-life use case where a user may ask the agent to add an item to cart and proceed to checkout. Here, most of the elements are interactable icons which the pipeline has predicted correctly.
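As a rough illustration of how an agent might use this output, the sketch below looks up interactable elements by their content. The entries are copied from the parsed output above with the bbox values rounded; the `find_clickable` helper is hypothetical and not part of OmniParser.

```python
from typing import Dict, List

# A few entries copied from the parsed output above (bbox values rounded).
elements: Dict[int, dict] = {
    0: {"type": "text", "bbox": [0.211, 0.144, 0.314, 0.163], "interactivity": False, "content": "prime"},
    10: {"type": "icon", "bbox": [0.656, 0.838, 0.801, 0.902], "interactivity": True, "content": "Deals "},
    14: {"type": "icon", "bbox": [0.437, 0.237, 0.588, 0.280], "interactivity": True, "content": "Deals "},
    20: {"type": "icon", "bbox": [0.811, 0.118, 0.911, 0.161], "interactivity": True, "content": "shopping cart"},
}


def find_clickable(elements: Dict[int, dict], label: str) -> List[int]:
    """IDs of interactable elements whose content matches label (case-insensitive)."""
    wanted = label.strip().lower()
    return [
        box_id
        for box_id, el in elements.items()
        if el["interactivity"] and el["content"].strip().lower() == wanted
    ]


print(find_clickable(elements, "shopping cart"))  # [20]
print(find_clickable(elements, "deals"))          # [10, 14]
print(find_clickable(elements, "prime"))          # []
```

The two matches for "deals" are an instance of the repeated-element ambiguity listed among OmniParser's limitations: the label alone does not tell the agent which box to click.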

Further in the article, we will see how to use the above pipeline as a GUI agent for computer use.

OmniTool: A Local Windows VM for Computer Use

The latest OmniParser repository provides OmniTool, a comprehensive system that enables AI-driven GUI interactions within a local Windows 11 VM. It integrates OmniParser V2 with various vision models, providing an efficient testing and execution environment for agent-based automation.

This allows us to try out computer use on our own machines.

Components

- OmniParserServer – A FastAPI server running OmniParser V2.

- OmniBox – A lightweight Windows 11 VM running inside a Docker container.

- Gradio UI – A web-based interface for issuing commands and monitoring AI agent execution.

Key Features

- OmniParser V2 is 60% faster than its predecessor and supports a broader range of OS and app icons.

- OmniBox reduces disk space usage by 50% compared to traditional Windows VMs.

- Supports major vision models, including OpenAI (4o/o1/o3-mini), DeepSeek (R1), Qwen (2.5VL), and Anthropic Computer Use.

Setup Instructions

The repository provides detailed setup instructions for OmniTool in the README file inside the omnitool directory.

It is recommended to follow the instructions and set it up before carrying out your own experiments.

Here, we will discuss a few experiments that our team carried out.

We used OpenAI GPT-4o for all experiments. The experiments focus mostly on browser tasks carried out by the agent rather than on tasks inside the operating system itself.

OmniTool Experiment 1: Downloading the OpenCV GitHub Zip File

For the first experiment, we asked the OmniTool agent to download the zip file for the OpenCV GitHub repository.

After initializing the process, the agent carried out the following steps:

- Opened Google Chrome web browser.

- Navigated to the OpenCV GitHub repository.

- Clicked on the Code button and downloaded the zip file.

All the while the left tab showed all the screenshots of the parsed screens and what steps were taken by the LLM in text.

However, in the end, after downloading the file, the agent loop did not end. It kept on downloading the file multiple times and we had to kill the process manually.

We can say that the process was a 90% success and it would have been great to see the agent end the loop.

OmniTool Experiment 2: Adding Items to Cart on Amazon

Next, we gave the OmniTool a more complex task. We asked it to go to the Amazon website, add a Dell Alienware laptop to the cart, and proceed to checkout.

We observed several interesting actions by the agent here.

Firstly, when the agent navigated to the Amazon website, it was welcomed with a captcha screen which we were not expecting. However, to our surprise, it was able to successfully pass the test.

Secondly, after some trial and error, it was able to correctly navigate to the Amazon search bar and search for the laptop. However, rather than considering the laptop we asked for, it clicked on the very first link that it was able to see. This suggests that the agent struggles to keep fine details of the request in memory when carrying out complex tasks.

Nonetheless, it proceeded. However, instead of the “Add to Cart” button, the page contained the “See All Buying Options” button. The agent kept scrolling down the page in search of the “Add to Cart” button, and the left side tab showed the same. After multiple such scrolls, we killed the operation because the button would not appear further down the page.

Key Observations from OmniTool Agent Usage

In both cases, although deemed as failures, we observed some interesting scenarios.

- In the first case, the model was able to download the zip file but did not end the agentic loop. Adding an explicit stopping instruction to the prompt would probably have helped.

- In the second case, the model was presented with a sudden captcha test which, surprisingly, it was able to pass. However, it could not add the correct laptop to the cart.

In both cases, we observed failure and some intelligent moments as well. This shows that agentic AI and computer use, although good for simple use cases, have a long way to go.
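Both runs had to be killed by hand, so a simple guard around the agent loop is worth considering. The sketch below is a generic pattern, not OmniTool's implementation: `next_action` is a hypothetical callback standing in for one parse-and-decide step, and the step and repeat limits are arbitrary.

```python
from typing import Callable, List, Optional

# Hypothetical step function: receives the action history and returns the
# next action as a string, or None when the agent considers the task done.
StepFn = Callable[[List[str]], Optional[str]]


def run_agent(next_action: StepFn, max_steps: int = 20, max_repeats: int = 3) -> List[str]:
    """Run the loop until the agent finishes, repeats itself, or hits the step limit."""
    history: List[str] = []
    for _ in range(max_steps):
        action = next_action(history)
        if action is None:
            break
        history.append(action)
        # Stop when the same action has been issued max_repeats times in a row.
        if len(history) >= max_repeats and len(set(history[-max_repeats:])) == 1:
            break
    return history
```

With such a guard, the repeated downloads in the first experiment and the repeated scrolling in the second would have stopped after a few identical actions, provided the actions are recorded in a comparable form.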

Summary

In this article, we covered OmniParser, a UI screen parsing pipeline that helps autonomous agents with computer use. It is paired with OmniTool which integrates the results from OmniParser and several VLMs to provide users with an autonomous agent for computer use to run in a VM.

We also discussed some interesting use cases of OmniTool, including where it did well and where it failed.

Do give this a try on your own with some simple use cases. Maybe you will find something interesting which is worth sharing in the comment section below.
