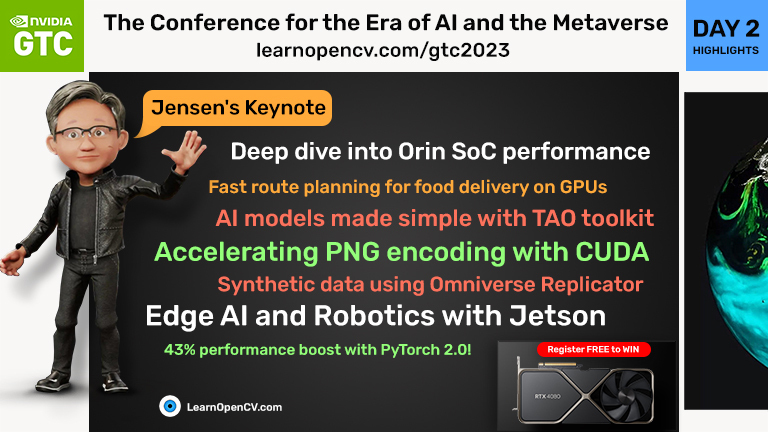

Hello and welcome to Day 2 of Spring GTC 2023 to learn about NVIDIA’s Latest Advances in AI: Revolutionizing Quantum Computing, Lithography, Cloud Computing, and more. Readers familiar with LearnOpenCV.com may remember our past coverage of this amazing event, now in its 14th year. For those who are new, here is a quick rundown. GTC, or the GPU Technology Conference, is NVIDIA’s flagship event. It brings together experts from the many industries and research fields that benefit from GPU compute. Jensen Huang, the CEO of NVIDIA, told us that this edition of GTC has over 250,000 attendees! There is no doubt that AI is going fully mainstream. The question is: are you joining them at GTC? If not, please sign up here for a chance to win an RTX 4080 GPU!

Day 1 of GTC was filled with very insightful talks. Feel free to browse our Day 1 coverage here.

Without further ado, let’s begin with a summary of Jensen’s Keynote.

Table of Contents

- Jensen’s Keynote

- Route Planning for Food delivery on GPUs [S51564]

- AI Models made simple with TAO toolkit [S52546]

- CUDA Accelerated PNG encoding/decoding [S51286]

- Edge AI and Robotics with Jetson [CWES52132]

- Summary

Jensen’s Keynote

This keynote focused entirely on enterprise customers and cloud computing, with no mention of gaming whatsoever. Jensen repeated two phrases again and again, and we assume these ideas are driving the latest round of innovation at NVIDIA.

- The first phrase was ‘accelerate for net zero’. Around the world, most enterprises still run workloads on CPUs even though those workloads could be accelerated with GPUs. Jensen emphasized that GPU acceleration can significantly reduce energy consumption and cost. This helps companies cut carbon emissions and move toward a net-zero footprint.

- The second phrase Jensen used often was ‘the iPhone moment of AI is here’. This refers to generative AI tools like ChatGPT and Stable Diffusion going mainstream. They are moving out of the domain of programmers and into everyday use by hundreds of millions of people.

Now that we have seen the guiding premise behind this keynote, let’s take a look at the key takeaways from the presentation:

Revolutionizing Quantum Computing

NVIDIA offers a quantum computing framework called cuQuantum, which is designed to help accelerate simulations of quantum computers. Jensen announced that this quantum computing stack now enables simulations of fast error correction, thereby accelerating quantum research.
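To make "simulating a quantum computer" a bit more concrete, here is a minimal pure-Python state-vector simulator that prepares a two-qubit Bell state. It only illustrates the kind of computation that cuQuantum's state-vector library (cuStateVec) accelerates on GPUs. The function names are our own and are not part of cuQuantum's API. Error correction is not modeled.

```python
import math

def zero_state(n_qubits):
    """Return the n-qubit state |00...0> as a list of 2**n complex amplitudes."""
    state = [0j] * (1 << n_qubits)
    state[0] = 1 + 0j
    return state

def apply_single_qubit_gate(state, gate, target):
    """Apply a 2x2 gate to qubit `target`; bit k of an index encodes qubit k."""
    new_state = list(state)
    stride = 1 << target
    for i in range(len(state)):
        if (i & stride) == 0:  # i has the target bit cleared; j is its partner with it set
            j = i | stride
            a0, a1 = state[i], state[j]
            new_state[i] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state

def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new_state = list(state)
    for i in range(len(state)):
        if (i >> control) & 1:
            new_state[i] = state[i ^ (1 << target)]
    return new_state

INV_SQRT2 = 1 / math.sqrt(2)
HADAMARD = [[INV_SQRT2, INV_SQRT2], [INV_SQRT2, -INV_SQRT2]]

def bell_state_probabilities():
    """Prepare (|00> + |11>)/sqrt(2) and return the probability of each basis state."""
    state = zero_state(2)
    state = apply_single_qubit_gate(state, HADAMARD, target=0)
    state = apply_cnot(state, control=0, target=1)
    return [abs(amplitude) ** 2 for amplitude in state]

print(bell_state_probabilities())
```

Every gate touches every amplitude, and an n-qubit state needs 2**n of them. At 16 bytes per complex128 amplitude, 30 qubits already need 16 GiB. This is why GPU memory capacity and bandwidth matter so much for these simulations.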

Latest Advances in Lithography

In high school, we all learn that light travels in straight lines. However, experiments such as the double-slit experiment show that this is not the whole story. Light bends around the edges of small objects and openings, a phenomenon known as diffraction. Unfortunately, diffraction poses a huge challenge in the manufacturing of semiconductor chips. The photomask used to transfer the circuit pattern onto a silicon wafer must compensate for it, using techniques such as inverse lithography technology (ILT). For the past four years, NVIDIA has worked with TSMC, ASML, and Synopsys on a computational lithography library called cuLitho. The library simulates Maxwell’s equations and can reduce the time to process a reticle from 2 weeks to 8 hours, a 42x improvement! Reportedly, using cuLitho, TSMC can reduce the power consumption of this workload from 35 MW to 5 MW. cuLitho is expected to start qualifying for production by June this year.
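The headline cuLitho figures follow from simple arithmetic on the numbers quoted above. Two weeks is 336 hours, which gives the 42x speedup. The reported power figures amount to a 7x reduction for this workload:

```latex
\text{speedup} = \frac{2\ \text{weeks}}{8\ \text{h}}
             = \frac{2 \times 7 \times 24\ \text{h}}{8\ \text{h}}
             = \frac{336\ \text{h}}{8\ \text{h}} = 42\times,
\qquad
\text{power reduction} = \frac{35\ \text{MW}}{5\ \text{MW}} = 7\times
```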

Revolutionizing Cloud Computing

Cloud computing has grown by 20% every year, and there is a mandate to reduce its carbon footprint. NVIDIA is working on accelerating every workload to reclaim power.

NVIDIA’s latest superchip, the Grace CPU Superchip, is highly energy efficient and can be air-cooled. It packs 144 cores and uses LPDDR5X memory with 1 TB/s of bandwidth. It is 1.3x faster than the latest x86 chips on microservices and 1.2x faster on big data queries. Overall, Grace gives cloud service providers (CSPs) 2x the data center throughput at isopower (the same power as x86).

Inference: TensorRT, Triton, TMS

NVIDIA’s inference stack includes TensorRT, which optimizes trained models for fast inference, and Triton Inference Server, which serves those models in production. Together, these tools help developers deploy AI models efficiently. NVIDIA also announced Triton Management Service (TMS) for model orchestration.

Jensen also mentioned CV-CUDA, an open-source library for building efficient computer vision pipelines for cloud deployments. Currently, CV-CUDA provides about 30 GPU-accelerated operators. These operators are used in common applications such as object detection, segmentation, and classification.

DGX Cloud

NVIDIA has offered DGX systems for AI training for several years. As mentioned above, Jensen stated that we are at the “iPhone moment of AI.” To enable this revolution, NVIDIA announced DGX Cloud, which brings AI supercomputers to the browser. With DGX Cloud, you can easily scale up and scale out your AI workloads without investing in hardware upfront. Oracle Cloud Infrastructure will be the first to offer DGX Cloud.

Omniverse Cloud

In keeping with the theme of this presentation, Jensen announced that Omniverse is now also available in the cloud. Microsoft is partnering with NVIDIA to bring Omniverse into Microsoft services like Teams. Developers can hop onto a Teams call and interact with Omniverse assets directly from Teams. This could enable amazing collaborative workflows for the design industry, as demonstrated with BMW’s virtual factory design team.

Keynote Recap

NVIDIA’s latest advances in AI have the potential to revolutionize quantum computing, lithography, and cloud computing. The company’s push for more energy-efficient technology will help reduce the carbon footprint of cloud computing. NVIDIA is also at the forefront of AI development and is building an ecosystem for developers to write software for quantum computers. It will be interesting to see how these advances shape the future of various industries.

Route Planning for Food delivery on GPUs [S51564]

Meituan is a Chinese company that offers a food delivery service called Waimai. The service had a peak of 60 million orders in a single day last year, and optimizing route planning for that many orders is very difficult. To address this problem, Meituan researchers came up with a two-stage algorithm, published here (page 17 of the PDF). However, running this algorithm on CPUs requires a vast amount of resources. It also cannot scale to the 100 million orders per day that the company expects in the coming years. The company has therefore been working with NVIDIA GPUs and has accelerated the algorithm using custom CUDA kernels. Using GPUs for this problem has massively increased the computational resources available to the company and reduced the total cost of ownership by 47%! This talk is a great case study of a business need that off-the-shelf libraries cannot fulfill and that still requires good old CUDA programming.

AI Models made simple with TAO toolkit [S52546]

The Train, Adapt, Optimize (TAO) toolkit is an NVIDIA offering that helps organizations of all sizes build AI models quickly and efficiently. We have introduced TAO toolkit before, so here we cover only what is new in the latest 5.0 release.

Support for vision transformers: TAO now supports several state-of-the-art (SoTA) vision transformer models for classification, detection, and segmentation tasks. These models expand on the previously available convolution-based models.

AI-assisted annotation: TAO now offers AI-assisted annotation, especially for semantic segmentation tasks. You supply some images with bounding boxes for an object and a few segmentation masks. TAO then uses state-of-the-art transformer-based segmentation networks to auto-annotate objects in new images. The annotations improve over time through self-training.

Source open: TAO is now source-open, and developers can probe the intermediate activations of each layer to better understand network predictions. Note that ‘open source’ and ‘source open’ are not the same thing! ‘Open source’ refers to software released under a license that lets anyone view, use, modify, and distribute its source code. Open source software is often developed by a community of contributors, with the source code made public for collaboration and development.

On the other hand, ‘source-open’ refers to software whose source code is available for viewing but not necessarily for modification or redistribution. The code is published for reference or educational purposes, and the license may restrict what you can do with it. In other words, the source code may be open without being licensed as open source. You will probably not be able to modify or redistribute TAO’s source code without NVIDIA’s approval.

TinyML: TinyML was the last bastion of TensorFlow, and TAO is now breaking into it. Models trained with TAO can now be deployed onto STM32 microcontrollers.

Faster inference: Thanks to several optimizations, the TAO team reports that several models are up to 4x faster at inference time than non-TAO-optimized models of the same architecture.

AutoML: TAO is now available in the cloud and integrated with AutoML, so model hyperparameters can be tuned efficiently and autonomously.

REST API: TAO now offers REST APIs for integration with cloud services.
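Here is a plain-Python random-search loop that illustrates what automated hyperparameter tuning does. It is not TAO's AutoML implementation. Random search is simply swapped in as the simplest tuning strategy. The search space and `toy_validation_loss` are hypothetical stand-ins for training a model and measuring its validation loss.

```python
import math
import random

# Hypothetical search space: learning rate (sampled log-uniformly) and batch size.
LR_RANGE = (1e-5, 1e-1)
BATCH_SIZES = [16, 32, 64, 128]

def toy_validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for 'train a model, return its validation loss'.
    Its minimum (0.0) sits at learning_rate=1e-3, batch_size=64."""
    return (math.log10(learning_rate) + 3.0) ** 2 + ((batch_size - 64) / 64) ** 2

def sample_config(rng):
    """Draw one configuration at random from the search space."""
    low, high = LR_RANGE
    learning_rate = 10 ** rng.uniform(math.log10(low), math.log10(high))
    batch_size = rng.choice(BATCH_SIZES)
    return {"learning_rate": learning_rate, "batch_size": batch_size}

def random_search(n_trials, seed=0):
    """Evaluate n_trials random configurations; return (best_config, best_loss, trials)."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        config = sample_config(rng)
        trials.append((toy_validation_loss(**config), config))
    best_loss, best_config = min(trials, key=lambda trial: trial[0])
    return best_config, best_loss, trials

best_config, best_loss, trials = random_search(n_trials=50)
print(f"best loss {best_loss:.4f} with {best_config}")
```

Sampling the learning rate log-uniformly spreads trials evenly across orders of magnitude. This is usually more useful than sampling it uniformly. More sample-efficient tuners use the results of earlier trials to choose the next configuration instead of sampling blindly.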

CUDA Accelerated PNG encoding/decoding [S51286]

PNG is one of the most widely used lossless image formats: saving an image as PNG does not reduce its quality. JPEG uses a discrete cosine transform (DCT) followed by Huffman encoding, and both steps can be parallelized. PNG encoding, by contrast, filters each row of pixels and then compresses the result with DEFLATE (LZ77 plus Huffman coding). This largely sequential process has only been able to run on the CPU. Until now!
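Here is a minimal CPU-only PNG encoder in pure Python that shows the stages of PNG encoding. It is written from the PNG specification, not from the talk. It applies the Sub filter (filter type 1) to every scanline and then DEFLATE-compresses the result with Python's `zlib` module. The output is wrapped in the PNG signature and CRC-protected chunks. The function names are our own, and the presenters' CUDA optimizations are not shown. Real encoders also pick a filter per row (for example, Paeth) to improve compression.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: 4-byte length, 4-byte type, data, CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)

def sub_filter(row: bytes, bpp: int) -> bytes:
    """PNG filter type 1 (Sub): subtract the byte `bpp` positions to the left, modulo 256."""
    out = bytearray(len(row))
    for i, value in enumerate(row):
        left = row[i - bpp] if i >= bpp else 0  # reads original (unfiltered) bytes only
        out[i] = (value - left) & 0xFF
    return bytes(out)

def encode_png_rgb(pixels, width, height):
    """Encode 8-bit RGB pixels (a list of rows of (r, g, b) tuples) as PNG file bytes."""
    bpp = 3  # bytes per pixel for 8-bit truecolor
    filtered = bytearray()
    for y in range(height):
        row = bytes(channel for pixel in pixels[y] for channel in pixel)
        # Stage 1: each scanline starts with its filter-type byte, then the filtered bytes.
        filtered.append(1)
        filtered += sub_filter(row, bpp)
    # Stage 2: DEFLATE-compress all scanlines into a single zlib stream.
    idat = zlib.compress(bytes(filtered), 9)
    # IHDR: width, height, bit depth 8, color type 2 (RGB), compression/filter/interlace 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    return (PNG_SIGNATURE
            + make_chunk(b"IHDR", ihdr)
            + make_chunk(b"IDAT", idat)
            + make_chunk(b"IEND", b""))

# Usage: a 2x2 image (red, green / blue, white); write `tiny` to a .png file to view it.
tiny = encode_png_rgb([[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]], 2, 2)
```

Each filtered byte depends only on original input bytes, so the filtering stage parallelizes naturally. DEFLATE is the harder stage to parallelize. Its LZ77 matches refer back to earlier data, and its Huffman output is a variable-length bit stream. Decoding has a dependency of its own: undoing the Sub filter needs the already-reconstructed byte to the left.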

In this talk, Hongbin Liu and Tong Liu from NVIDIA walked through the details of the PNG encoding process and proposed key optimizations that accelerate it with CUDA. The results are really impressive: they reported a 6-10x reduction in encoding time for the most common image sizes! Moreover, CUDA-based encoding can batch several images to increase throughput. Decoding a PNG back to RGB values shows a more modest improvement of 1.5-2x. The presenters didn’t announce when this encoder will be available in CUDA, but we hope it arrives in the next release.

Edge AI and Robotics with Jetson [CWES52132]

NVIDIA announced a new Jetson Orin Nano Developer Kit, which comes in the same form factor as the previous-generation Jetson Xavier NX kit. The Jetson Orin Nano has:

- 40 TOPS of INT8 performance powered by 1024 Ampere CUDA cores

- Up to 80x higher performance than Jetson Nano

- A 6-core Arm Cortex-A78AE CPU

- 8 GB of memory

- A $499 price tag

Thus, the Jetson lineup now consists of:

- Jetson Nano (~0.5 TFLOPS) for learners and hobbyists at $150

- Jetson Orin Nano (40 TOPS INT8) for intermediate users at ~$500

- Jetson AGX Orin (275 TOPS INT8) for advanced users and companies at $2000

Summary

The keynote day is the most exciting day of any GTC. As expected, we saw major announcements in cloud computing and AI. The key takeaway is that if you want to do almost anything with NVIDIA technologies now, you can probably do it on the cloud. All of the major products like DGX stations, TAO, Isaac Sim, Omniverse have been made cloud native. Two new themes we saw were ‘GPUs for sustainability’ and the mainstreaming of AI applications. Join us tomorrow as we continue this journey for yet another day packed with amazing talks.

As a reminder, sign up here and get a chance to win an RTX 4080 GPU!