Welcome to our coverage of Day 1 of NVIDIA Spring GTC 2023. If you are a regular reader of our blog LearnOpenCV.com, you are probably familiar with this amazing event and our past coverage. If you are new, here is a quick summary.

GTC, or GPU Technology Conference, is NVIDIA’s flagship conference, which brings together experts from all industries and research fields that benefit from GPU compute. In recent years, with NVIDIA’s dominance in AI, GTC has evolved into one of the most attended events for AI students, researchers and engineers. This is our third time covering the conference, and we bring you the most interesting and relevant news from the domains of computer vision and machine learning. If you would like a refresher on the major announcements in the last two editions, feel free to browse our past coverage here:

GTC Spring 2022

- Spring GTC 2022 Day 1 Highlights: Brilliant Start

- Spring GTC 2022 Day 2 Highlights: Jensen’s Keynote

- Spring GTC 2022 Day 3 Highlights: Deep Dive into Hopper architecture

- Spring GTC 2022 Day 4 Highlights: Meet the new Jetson Orin

GTC Fall 2022

- NVIDIA GTC 2022 : The most important AI event this Fall

- GTC 2022 Big Bang AI announcements: Everything you need to know

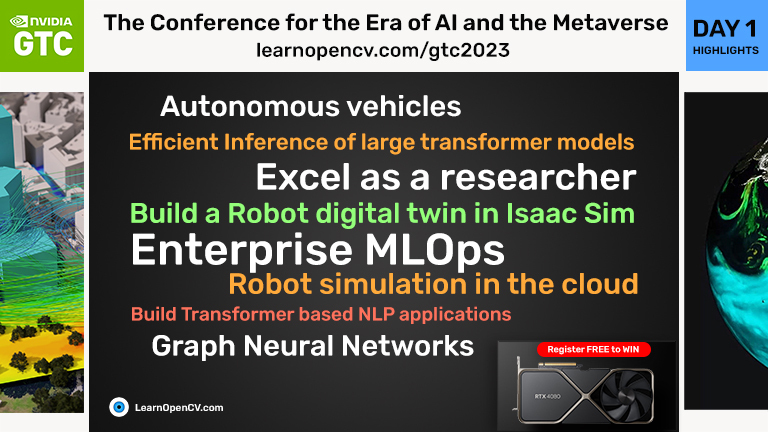

Get a Chance to Win the NVIDIA RTX 4080 GPU

While we do our best to bring you the most relevant talks, there is no substitute for attending the conference yourself, so if you haven’t yet, please register here and stand a chance to win an RTX 4080 GPU.

As a quick reference, you can find the video instructions on the registration process here.

Let’s begin with a summary of the most interesting talks that should help you jumpstart your career in AI.

Table of Contents

- Introduction to Autonomous Vehicles [S51168]

- Efficient Inference of Transformer Models [S51088]

- Excel as a Student Researcher [SE52200]

- Building a digital twin in Isaac Sim [S2569]

- Enterprise MLOps [S51616]

- Building Transformer based NLP applications [DLIW52072]

- Graph Neural Networks [S51704]

- Looking ahead

Introduction to Autonomous Vehicles [S51168]

One of the things that makes GTC special is that you don’t have to be an expert in AI or programming to benefit from it. The introduction to autonomous vehicles session is meant precisely for complete beginners in AI and programming. This session is held at every GTC and covers the many aspects of autonomous vehicles from a systems point of view. Although the hype around self-driving cars is no longer what it was in, say, 2017, this remains a very strong career path for budding data scientists and engineers.

The session was hosted by Katie Washabaugh this time around. Katie started off with a great overview of the five levels of autonomy, followed by a brief history of the DARPA challenges, which kickstarted development of some of the first proofs of concept in this field. She then went over the development workflow of autonomous vehicles, which includes data curation, generation and labeling, model training, simulation, validation and so on. This is the core of the talk, and it allows even a beginner to understand the best practices used in this domain. The interesting thing about this talk is that the same workflow and best practices apply to a large variety of robotics and automation industries, each with its own software and hardware stack. Thus, even if you are not interested in self-driving cars in particular, you will learn a lot by attending this session.

Efficient Inference of Transformer Models [S51088]

We recently published a step-by-step guide to transformers, so if you are unfamiliar with the self-attention mechanism, please read up here:

- Understanding Attention Mechanism in Transformer Neural Networks

- The Future of Image Recognition is Here: PyTorch Vision Transformers

Large Language Models (LLMs) like ChatGPT, GPT-4 and PaLM are revolutionizing the way we work and live. After self-driving cars, LLMs are perhaps one of the most widely deployed machine learning models, and all major players like Google, Microsoft and OpenAI are racing to develop and deploy LLMs in their products. As such, efficient inference of these very large models becomes a very important business requirement. This talk by Stephen Gou and Bharath Venkitesh from Cohere presents three key types of optimizations to improve inference performance:

- Software framework optimizations: Framework optimizations are very attractive because they do not cause any loss in model accuracy. However, they are hard to implement, as they require a deep understanding of both the software and the hardware (no pun intended).

LLMs typically don’t fit on a single GPU, so model parallelism is necessary to train them and run inference on them. There are 3 major approaches to model parallelism:

- Tensor parallel: This is an approach in which every layer of the model is split across multiple GPUs. Each GPU operates on a part of the tensor and the final result of the layer is calculated by a gather or reduce operation.

- Pipeline parallel: This is an approach where the model is divided up into small blocks containing a few layers and the blocks live on different GPUs. During inference, each GPU calculates only a few layers of the model and passes on its output to the GPU responsible for the next set of layers.

- 3D parallelism: Both of the above approaches have limitations, and their performance saturates as the number of GPUs and the model size grow. 3D parallelism combines tensor and pipeline parallelism with data parallelism (replicating the model across groups of GPUs) to mitigate these limitations.
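To make the tensor parallel idea concrete, here is a minimal sketch in plain Python. It is not how any of the frameworks discussed in the talk implement it: the two "devices" are simulated with ordinary lists, and the helper names (`column_parallel_linear`, `row_parallel_linear`) are our own. It shows a single linear layer split column-wise (results joined by a gather) and row-wise (results combined by a reduce).

```python
# Toy tensor parallelism for one linear layer y = x @ W.
# Plain Python lists stand in for GPU memory; every name here is hypothetical.

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def column_parallel_linear(x, w, parts=2):
    """Each device holds a block of columns of W and computes a slice of y.
    A gather (concatenation) joins the slices into the full output."""
    width = len(w[0]) // parts
    shards = [[row[i * width:(i + 1) * width] for row in w]
              for i in range(parts)]
    partial_outputs = [matmul(x, shard) for shard in shards]  # one per device
    return [sum((out[r] for out in partial_outputs), [])
            for r in range(len(x))]

def row_parallel_linear(x, w, parts=2):
    """Each device holds a block of rows of W and the matching slice of x.
    A reduce (element-wise sum) combines the partial results."""
    height = len(w) // parts
    partials = []
    for i in range(parts):
        x_slice = [row[i * height:(i + 1) * height] for row in x]
        w_block = w[i * height:(i + 1) * height]
        partials.append(matmul(x_slice, w_block))
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*partials)]

x = [[1.0, 2.0, 3.0, 4.0]]          # batch of 1, 4 input features
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 0.0],
     [1.0, 1.0, 0.0, 2.0],
     [2.0, 0.0, 1.0, 1.0]]          # 4 x 4 weight matrix

reference = matmul(x, w)            # what a single device would compute
assert column_parallel_linear(x, w) == reference
assert row_parallel_linear(x, w) == reference
```

In a real system the gather and reduce steps are collective communication operations between GPUs, and their cost is one reason tensor parallelism stops scaling beyond a certain number of devices.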

Some of the most popular frameworks for model parallelism are:

- FasterTransformer by NVIDIA for inference

- DeepSpeed for both training and inference

- Megatron-LM for training

- JAX for training and inference

- PyTorch 2.0

One of the oldest tricks in the deep learning inference bag is operator fusion. The most common example is the fusion of convolution, batch norm and ReLU into one layer by inference frameworks like TensorRT and ONNX Runtime. In transformer land, the network has residual connections followed by layer normalization. The bias addition, residual sum and layer norm can be fused into a single kernel, which greatly reduces memory accesses.
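The fusion of bias addition, residual sum and layer norm can be illustrated with a small sketch. This is only a conceptual model in plain Python, not a GPU kernel: each function call standing for a "kernel", the `EPS` value and the omission of the learnable scale and shift parameters of layer norm are all our simplifying assumptions. The point is that the unfused version writes two full intermediate buffers, while the fused version touches each row once.

```python
import math

EPS = 1e-5  # assumed epsilon; a common default for layer norm

def layer_norm(row):
    """Normalize one row to zero mean and unit variance (no scale/shift)."""
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + EPS) for v in row]

def unfused(x, bias, residual):
    """Three separate 'kernels', each writing a full intermediate buffer."""
    with_bias = [[v + b for v, b in zip(row, bias)] for row in x]   # kernel 1
    summed = [[v + r for v, r in zip(row, res)]
              for row, res in zip(with_bias, residual)]             # kernel 2
    return [layer_norm(row) for row in summed]                      # kernel 3

def fused(x, bias, residual):
    """One 'kernel': each row is read once and only the result is stored."""
    out = []
    for row, res in zip(x, residual):
        z = [v + b + r for v, b, r in zip(row, bias, res)]  # stays local
        out.append(layer_norm(z))
    return out

x = [[1.0, 2.0, 3.0], [4.0, 6.0, 8.0]]
bias = [0.5, -0.5, 0.0]
residual = [[0.0, 1.0, 2.0], [1.0, 1.0, 1.0]]

fused_out = fused(x, bias, residual)
unfused_out = unfused(x, bias, residual)
```

Both versions produce the same numbers; on a GPU the gain comes from avoiding the round trips to global memory for `with_bias` and `summed`.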

- Model optimizations: This covers the quantization options that are standard for all neural networks. Recently, some exciting work has been published on low-precision inference of LLMs, such as the LLM.int8() paper and SmoothQuant, both of which apply post-training quantization. Other exciting model optimizations are low-rank decomposition and parameter pruning.

- Algorithmic optimizations: Finally, some algorithmic optimizations preserve model accuracy while greatly reducing latency. The most prominent among these are key-value (KV) caching, which stores the keys and values of previous tokens so that they are not recomputed at every decoding step, and FlashAttention.
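Caching keys and values is easy to show on a toy example. The sketch below uses a single attention head on 2-dimensional "tokens" in plain Python; the class `KVCache`, the function `step_without_cache` and the weight matrices are hypothetical names and values chosen for illustration, not taken from the talk. With the cache, each decoding step projects only the newest token; without it, the whole prefix is re-projected every time.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(w, v):
    """Multiply matrix w (list of rows) by vector v."""
    return [dot(row, v) for row in w]

def attend(q, keys, values):
    """Softmax attention of one query over all keys and values seen so far."""
    scores = [dot(q, k) / math.sqrt(len(q)) for k in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w / total * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))]

class KVCache:
    """Keeps the keys and values of all previous tokens between steps."""
    def __init__(self, wq, wk, wv):
        self.wq, self.wk, self.wv = wq, wk, wv
        self.keys, self.values = [], []

    def step(self, token):
        # Only the new token is projected; older keys/values are reused.
        self.keys.append(matvec(self.wk, token))
        self.values.append(matvec(self.wv, token))
        return attend(matvec(self.wq, token), self.keys, self.values)

def step_without_cache(tokens, wq, wk, wv):
    """Baseline: re-project every token of the prefix at each step."""
    keys = [matvec(wk, t) for t in tokens]
    values = [matvec(wv, t) for t in tokens]
    return attend(matvec(wq, tokens[-1]), keys, values)

WQ = [[1.0, 0.0], [0.0, 1.0]]
WK = [[0.5, 0.1], [0.2, 0.3]]
WV = [[1.0, 2.0], [0.0, 1.0]]
TOKENS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

cache = KVCache(WQ, WK, WV)
cached_outputs = [cache.step(tok) for tok in TOKENS]
baseline_outputs = [step_without_cache(TOKENS[:t + 1], WQ, WK, WV)
                    for t in range(len(TOKENS))]
```

The two lists of outputs match, which is why this optimization costs nothing in accuracy. The price is memory: the cache grows with sequence length, number of layers and batch size.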

Excel as a Student Researcher [SE52200]

This one is for the young and ambitious students out there! In this talk, Amulya, Neena and Tomas from NVIDIA gave insights into what it takes to succeed as a researcher in your student life. We have distilled their advice into a few key points:

- Starting early helps.

- You don’t have to specialize too early. Experimentation, failure and success are part of the process.

- Seek out challenging courses to build your foundations.

- Always keep an eye on funding opportunities for projects, internships and the like.

- Expand your field of view and look for opportunities for cross-disciplinary research.

- Network extensively by attending technical conferences such as GTC 🙂

- Another great way to network is by joining professional societies (they typically have discounts for student memberships).

We strongly recommend that you watch the full session yourself and learn from the fascinating journeys of the speakers, which we did not recount here.

Building a digital twin in Isaac Sim [S2569]

NVIDIA Isaac Sim is available in the cloud. This greatly expands access to robotics simulation for students and startups. In this talk, Rishabh Chadha from NVIDIA went through the Isaac Sim software stack and outlined its features. Notably, the Isaac stack supports ROS1 and ROS2 out of the box as well as ROS bridge. You can also import URDF files which are typically used for describing the geometry and physics of robots. You can simulate a large variety of sensors like cameras, LIDARs and IMUs, not to mention the environment. You can also use Isaac Replicator to create synthetic data. The mainstay of the talk was a demo of working with Isaac Sim on an AWS g5 instance.

Enterprise MLOps [S51616]

MLOps is an umbrella term for the art and science of building scalable and performant ML applications which can stand the test of time (aka data drift). If you want to understand MLOps with great examples and practical use cases, this talk is for you.

Michael and William begin by giving examples of what the MLOps workflow might look like for two industries: autonomous driving and retail shopping. The crux of their approach is that MLOps should make it easier to produce responsible machine learning systems, maintain ML systems and understand the performance of ML systems. Rather than recommending a definitive list of libraries and platforms for ‘doing ML’, Michael and William introduce a systematic framework for thinking about MLOps as it applies to your industry or organization. Recognizing that ML model development is only a tiny fraction of the pipeline, they recommend integrating your ML workflow into the existing boilerplate infrastructure. As an example, they make it clear that your hypervisors, containers, databases and compute resources should not be planned specifically for MLOps; rather, you should be asking ‘how can the existing resources and processes for software development allow me to build ML models?’. This is one of the best talks on MLOps that we have come across in a long time and is definitely worth checking out.

Building Transformer based NLP applications [DLIW52072]

This was a full-day paid workshop offered by NVIDIA’s Deep Learning Institute (DLI). It was a hands-on tutorial on building transformer-based natural language processing (NLP) applications, such as document categorization and named entity recognition (NER), and on evaluating model performance. Although the title only mentions transformers, pre-transformer techniques like word embeddings and recurrent networks received a healthy amount of attention. BERT and Megatron architectures were introduced, and inference challenges were discussed to help participants deploy these models at scale.

Graph Neural Networks [S51704]

In previous editions of GTC, we have covered talks about the use of graph neural networks (GNNs) for drug discovery. In this edition, we look at another exciting application: detecting financial fraud. Payments data lends itself to graph analysis quite naturally. In this talk, representatives of industry leaders like Mastercard and TigerGraph shared how GNNs are helping them combat bots, scammers and fraudsters. A fascinating aspect of fraud detection is that scammers are constantly evolving and looking for ways to get around any system put in place against them. Moreover, customers’ preferences and transaction histories are also evolving. Thus, the graph of transactions is constantly changing, and fraud must be detected not in a static, steady-state graph but in a dynamic one. Nitendra from Mastercard spoke about how specialized hardware from NVIDIA is required to train these models fast enough to keep up with customer evolution and stay ahead of the scammers. Although there were relatively few technical details in this session (as is typical of any financial company), this talk gave an amazing insight into the world of financial fraud and how AI is helping combat it.

Looking ahead

We are just getting started with GTC. Day 1 was a warmup for the big announcements that are to follow tomorrow. Once again, we remind you to register so you can watch Jensen’s much-anticipated keynote tomorrow.