Introduction

Today is the final day of our coverage of the NVIDIA GTC conference. The first day of GTC was all about professional training, and the second day was about big-bang announcements. On the third day, we went deep into the hardware and software of deep learning. We will end our coverage of GTC in this blog post with a healthy dose of embedded AI and robotics. If you have not kept up with our coverage of GTC, we strongly encourage you to check out the highlights of the first three days, where we have curated and summarized 33 sessions of GTC and an exclusive press briefing session on the Hopper architecture, not available to GTC participants.

Please note that the day assigned to an event differs across time zones. For example, an event that took place on Day 3 for North American participants may have taken place on Day 4 in Asia.

The last day of GTC had a huge networking component to it. If you had registered with our link, you would have gained an amazing opportunity to network and exchange ideas with professionals across many verticals of the AI industry. However, there were plenty of technical sessions too among the 300+ sessions on the last day. In this post, we have curated the following sessions for you:

- Introducing Jetson AGX Orin

- Agricultural robotics with TensorRT

- Developing Robots with ROS and NVIDIA Isaac

- Environmental Sustainability with AI

- Delivering Robotics at scale

- Human body pose estimation

- Deep Learning for safety-critical systems

- Deep Learning Accelerators on Jetson devices

- AI-driven autonomous drone

- Super-resolution for satellite imagery

We recommend watching the talks after reading about them here, since some aspects of the talk, like images and video demos, cannot be covered in a written format. Although the conference might be finished by the time you read this article, you can still register for a chance to win a 3080 Ti GPU and watch the recording of the talks.

Introducing Jetson AGX Orin [S41957]

Figure 1. Overview of the Jetson Orin architecture (Credit: S41957 slides)

Leela from NVIDIA started off this session by briefly recapping the journey of the Jetson platform over the last 8 years. Looking at the number of developers frequenting the Jetson forums, she showed that growth accelerated sharply right after the introduction of the Jetson Nano in 2019.

Today, over 1 million developers use Jetson devices for work and hobbies. At the same time, NVIDIA has developed rich partnerships with companies that create custom solutions for domains like sensors and cameras, product design, software services, and distribution. As a result, thousands of companies have created products based on Jetson devices in domains such as retail, agriculture, manufacturing, healthcare, and logistics.

Right after this, Leela introduced the latest member of the Jetson series, the Jetson AGX Orin, and shared some specs of the new board:

- Inference throughput: The Orin board delivers up to 275 TOPS of INT8 performance when its GPU and DLA are used together. This is nearly 9x the performance of the Jetson AGX Xavier.

- CPU: The Orin has 12 CPU cores based on the Arm Cortex-A78 architecture. This is significant not only because it is 50% more than the previous Xavier’s 8 cores, but also because the A78 is a departure from the Carmel micro-architecture CPUs used in the Xavier generation.

- GPU and DLA: The highlight of Orin’s features is the new Ampere generation GPU which has 2048 CUDA cores and 64 Tensor Cores, as well as 2 deep learning accelerators (DLAs).

- Memory: The Orin has 64 GB LPDDR5 RAM at a bandwidth of 204 GB/s, compared to the 32 GB RAM present in the Xavier. A lower memory 32 GB version of Orin will also be available for production.

- Power consumption: The 64 GB Orin can be configured to consume between 15 and 60 watts while the 32 GB version can consume between 15 and 40 watts. Even the 32 GB version can consume more than the 30W of the Xavier modules, underscoring the power inefficiency of the Samsung 8 nanometer node on which these chips are produced.

- Availability: Developer kits are now available for companies to start prototyping products with. Production modules will be available in October 2022. The developer kits allow you to ‘simulate’ the 32 GB memory version as well, since the Jetson platform is software defined.

- Pricing: The developer kits are priced at $1999, a pretty steep price, but lower than that of Jetson AGX Xavier at the time when it was released. The production boards will be $1599 for the 64 GB version and $899 for the 32 GB one. The $899 price point is the same as the AGX Xavier module, but delivers much higher performance as we will see next.
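As a quick sanity check on the headline figures above, the ratios can be worked out directly. The Orin numbers come from the list; the 32 TOPS INT8 figure for the Jetson AGX Xavier is not stated in our session notes and is an assumption taken from NVIDIA's published Xavier specifications.

```python
# Orin figures come from the spec list above.
ORIN_TOPS = 275
ORIN_CPU_CORES = 12
XAVIER_CPU_CORES = 8
# Assumption: 32 TOPS INT8 for Jetson AGX Xavier (not stated in the talk summary).
XAVIER_TOPS = 32


def ratio(new, old):
    """How many times larger `new` is than `old`."""
    return new / old


tops_speedup = ratio(ORIN_TOPS, XAVIER_TOPS)
core_increase_pct = (ratio(ORIN_CPU_CORES, XAVIER_CPU_CORES) - 1) * 100

print(f"INT8 throughput: {tops_speedup:.1f}x the Xavier")
print(f"CPU cores: +{core_increase_pct:.0f}%")
```

Under that assumption the throughput ratio lands between 8x and 9x, which is why both figures show up in coverage of the board.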

Figure. Performance, power, and pricing of all Orin modules compared to last gen. [Credit: NVIDIA]

Performance of Jetson AGX Orin

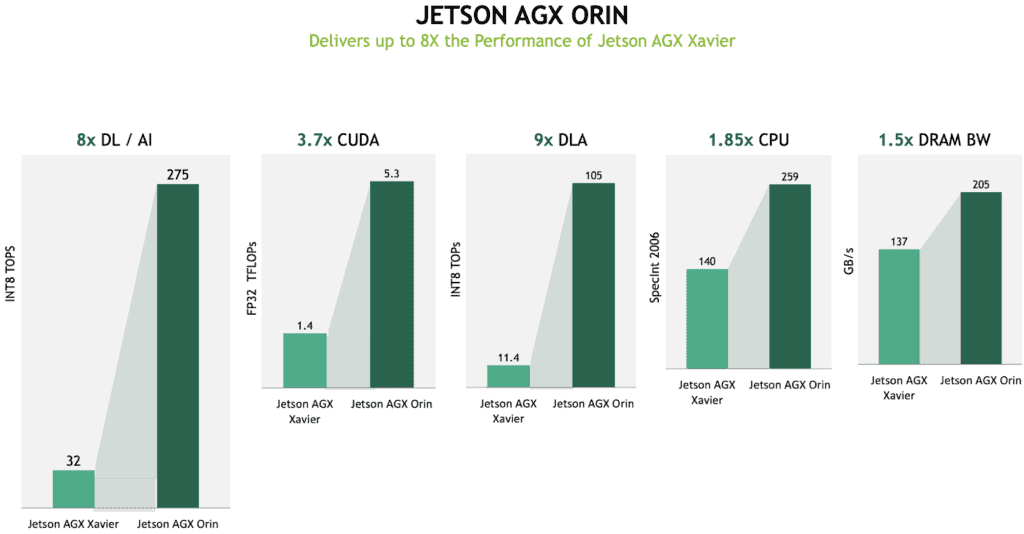

Figure. Performance of Jetson Orin compared to Jetson Xavier. [Credit: NVIDIA]

The Orin board packs a punch with up to 8x the inference throughput of the already fast Jetson AGX Xavier. As shown in the figure above, these improvements come about not only from the faster GPU but also from various other sources, such as the faster CPU, higher memory bandwidth, and the much faster DLA.

As with all first party performance claims, we cannot vouch for the correctness of these numbers since we have not had the opportunity to test out the board for ourselves. However, NVIDIA has had a very clean record on Jetson performance claims in the past, and all their numbers for previous boards have been easily reproducible by users. Thus, we think that these numbers are quite likely to be accurate.

Among these various components, the DLA deserves special attention. The DLA in the Xavier series was introduced to take over some of the workload of the GPU and operate at low power. It successfully fulfilled the latter promise but did not quite match up in the former. That is to say, the power consumption of Xavier DLA was quite low, but so was the performance. Many commonly used computer vision models could not be run on the DLA in real-time. With a massive 9x leap in performance, we expect this to change dramatically for the Jetson Orin.

If you just got a Jetson Orin Developer kit, or the last gen Xavier kit, and would like to know how to use the DLA, we wrote a detailed blog post about it, which you can read here.

Finally, before ending this session, we would like to mention that one of the surprising details revealed during this presentation was that with JetPack 5.0, the older Jetson Xavier modules are set to get a 1.5x performance boost over JetPack 4.1. This is great news for existing users of last gen Jetson devices!

Agricultural robotics with TensorRT [S42182]

Bilberry is a young agricultural robotics startup with offices in Paris and Perth. In this session, Hugo Serrat, the CTO of Bilberry, gave a short but packed presentation on how deep learning can be used in agriculture. Hugo presented the following 3 scenarios:

- Keeping weeds away from railway tracks by identifying any weeds close to the tracks and spraying them with chemicals,

- Spot spraying of cereals on large agricultural farms, and

- Automated sorting of potatoes by quality.

In each of these cases, deep learning delivers definite value by reducing either the amount of pesticide used or the human labor required. The most impressive feature of this presentation was that the Jetson ecosystem and TensorRT optimisations enabled Bilberry to convert these ideas into real products that meet the cost, power consumption and performance requirements of their customers. On this theme, we also encourage you to check out session S42051 titled Computer Vision for Farming Robots.

Developing Robots with ROS and NVIDIA Isaac [S41833]

Many developers have a love-hate relationship with the Windows operating system due to its proprietary nature. Willow Garage, a robotics startup, wanted to make sure that by the time robots come of age, the market for the operating systems driving them isn’t captured by a closed source company, as had happened in personal computing with Windows and macOS. Thus, in 2007, they started developing the Robot Operating System, or ROS, the Linux of robots.

These days, ROS is widely used in robotics, and major hardware and software vendors provide ROS plugins for their devices. In this session, NVIDIA showed how their robotics platform, Isaac, can be used with ROS to develop robots in simulation. Isaac is a comprehensive robotics platform covering all aspects of robotics development, such as simulation, model training, application development and deployment. Isaac offers various computer vision algorithms for robotics, such as:

- Image resizing,

- Stereo disparity estimation,

- Image segmentation,

- Object pose estimation,

- Simultaneous Localization and Mapping (SLAM), and

- Visual Odometry
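To make one item on this list concrete: stereo disparity is useful because it converts directly to depth for a rectified camera pair. The sketch below shows the standard pinhole relation Z = f * B / d. It is not Isaac's API, and the camera parameters are hypothetical values chosen for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
near = depth_from_disparity(35.0, focal_length_px=700.0, baseline_m=0.12)
far = depth_from_disparity(7.0, focal_length_px=700.0, baseline_m=0.12)
print(f"35 px disparity -> {near:.2f} m, 7 px disparity -> {far:.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones.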

The unique selling point of Isaac is that it integrates seamlessly with the Jetson ecosystem, enabling developers to deploy their algorithms on robots using Jetson. We strongly recommend checking out this session for the full details.

Environmental Sustainability with AI [S41906]

This was a non-technical session with entrepreneurs and academics working on achieving sustainability with AI. The specific innovations discussed were:

- AI to automate waste sorting

- AI to reduce pesticide usage

- Building robotic beehives to reverse the decline in bee populations

- Using AI to reduce emissions

- Using AI to protect the coastline in Denmark

This was a great talk for people looking to get inspired. AI and deep learning often get negative coverage in the media since deep models have a significant carbon footprint. This session highlighted several ways in which AI can help us fight climate change.

Delivering Robotics at scale [S42408]

Robots find it hard to achieve human level skills in mobility. The best chess player in the world is an AI but the best soccer player is still a human. Some things that humans find hard are easy for AI while some things we find easy are hard for robots. This is called Moravec’s paradox. In this session, Mostafa from Covariant shared their efforts at automating robotic arms. Their strategy for achieving autonomy rests on four pillars:

- Composability: While reinforcement learning algorithms that take in raw sensor data and output motor torque are very impressive feats of intelligence, such ‘model free’ approaches are brittle in practical applications. For example, in the famous Deep Q-learning research for playing Atari games by DeepMind, researchers found that just changing the length of the paddle after training led to a drastic reduction in the performance of the policy. Such approaches are also not interpretable and are highly vulnerable to reward hacking. Thus, the first pillar of Covariant’s approach is to compose autonomy as a collection of interconnected skills.

- Self and semi-supervised learning: Supervised learning cannot scale to the large datasets required to achieve true autonomy. Leveraging the power of self-supervised learning is thus the second pillar. This also includes sensor labelling.

- Simulation: There are certain scenarios which cannot be easily replicated and reproduced. This is where simulations play a key role. Covariant is able to generate humongous datasets by simulating various objects, scenes and camera angles that a robot is likely to encounter.

- Optimisation for inference: The fourth pillar of achieving autonomy is aggressive optimisation of the data processing pipeline. Covariant does everything from the moment an image is captured to the point where motor commands are produced on the GPU. They make heavy use of TensorRT, but interestingly do not use Jetson, as their robot arms are static and presumably can afford to use high performance GPUs.

Human body pose estimation [S42035]

In this talk, Jan Kautz from NVIDIA presented their work on human pose estimation from RGB images. The two existing approaches are either prone to overfitting or cannot handle re-projection ambiguities in going from 2D to 3D pose.

With the goal of achieving pose estimation that can generalise in many environments, they propose an approach based on 2.5D representations. 2.5D representations are basically 3D representations where the depth is calculated relative to a root point, rather than relative to the optical center of the camera.
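As a rough illustration of the 2.5D idea, the sketch below converts camera-space 3D joints into pixel coordinates plus root-relative depth. It is our simplified reading, not the authors' code: it assumes a plain pinhole camera, omits any scale normalisation the paper may apply, and uses a hypothetical two-joint skeleton.

```python
def to_2p5d(joints_cam, root_index, focal_px, principal_point):
    """Convert camera-space 3D joints (x, y, z) to a 2.5D pose.

    Each output joint is (u, v, z_rel): pixel coordinates from a pinhole
    projection, plus depth measured relative to the root joint.
    """
    cx, cy = principal_point
    z_root = joints_cam[root_index][2]
    pose = []
    for x, y, z in joints_cam:
        u = focal_px * x / z + cx
        v = focal_px * y / z + cy
        pose.append((u, v, z - z_root))
    return pose


# Hypothetical skeleton in metres, camera frame; joint 0 is the root.
joints = [(0.0, 0.0, 2.0), (0.2, -0.4, 2.5)]
pose = to_2p5d(joints, root_index=0, focal_px=500.0, principal_point=(320.0, 240.0))
for u, v, z_rel in pose:
    print(f"u={u:.1f} v={v:.1f} z_rel={z_rel:+.2f}")
```

The root joint always ends up with a relative depth of zero, so the network never has to predict the absolute distance to the camera.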

Using Fully Convolutional Neural Networks (FCNNs) and regressing to 2D pose via soft argmax to keep the whole architecture differentiable, they achieve a pose estimator that beats the state of the art on this problem.
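Soft argmax is what lets a heatmap turn into coordinates without a non-differentiable `argmax`. The minimal sketch below applies a softmax over a 2D heatmap and returns the expected (x, y) position. The `beta` sharpness parameter and the toy heatmap are our own illustrative choices, not values from the talk.

```python
import math


def soft_argmax_2d(heatmap, beta=1.0):
    """Differentiable estimate of the peak location of a 2D heatmap.

    Applies a softmax over all cells, then returns the expected (x, y)
    coordinate under that distribution.
    """
    peak = max(max(row) for row in heatmap)  # subtracted for numerical stability
    weights = [[math.exp(beta * (v - peak)) for v in row] for row in heatmap]
    total = sum(sum(row) for row in weights)
    x = sum(w * col for row in weights for col, w in enumerate(row)) / total
    y = sum(w * r for r, row in enumerate(weights) for w in row) / total
    return x, y


heatmap = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 9.0],
    [0.0, 0.0, 0.0],
]
x, y = soft_argmax_2d(heatmap, beta=5.0)
print(f"estimated joint at x={x:.3f}, y={y:.3f}")
```

A larger `beta` pushes the result towards the hard argmax, while a smaller one averages over more of the heatmap, which also allows sub-pixel estimates.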

He also introduced some other papers that are closer to computer graphics and game animation, so we do not cover them here in detail. The human pose estimation paper is available here.

Deep Learning for safety-critical systems [S41121]

As deep learning continues to be deployed in more and more industries, we are fast approaching a point where machine learning will significantly impact the safety of humans. For example, the failure of AI in a self-driving car has a potentially huge impact not only on the safety of the car’s occupants but also on that of pedestrians.

In this session, Sina and Jose from NVIDIA started by identifying the key design principles on which AI models should be developed. After establishing the taxonomy of machine learning safety, they showed approaches that follow these design principles, such as:

- Identifying outliers in image classifier models,

- Identifying outliers in multiple-object images,

- Curating a safety-oriented training set,

- Using active learning for efficient dataset curation, and

- Performing long-tail training with image resampling and object resampling.
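To give a flavour of the active learning item, here is a minimal sketch of uncertainty sampling, one common selection criterion. Our notes do not record which criterion the speakers actually use, so treat the entropy ranking, the function names and the sample data as illustrative assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labelling(predictions, budget):
    """Return the ids of the `budget` samples the model is least sure about."""
    ranked = sorted(predictions, key=lambda item: entropy(item[1]), reverse=True)
    return [sample_id for sample_id, _ in ranked[:budget]]


# Hypothetical softmax outputs for four unlabelled images.
predictions = [
    ("img_a", [0.98, 0.01, 0.01]),
    ("img_b", [0.40, 0.35, 0.25]),
    ("img_c", [0.70, 0.20, 0.10]),
    ("img_d", [0.34, 0.33, 0.33]),
]
chosen = select_for_labelling(predictions, budget=2)
print("send to annotators:", chosen)
```

Only the images with the flattest predictions are sent for annotation, which is how active learning keeps the labelling budget focused on cases the model finds hard.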

They ended the talk by discussing the robustness of transformers for semantic segmentation. Evaluating deep learning in safety-critical applications is still a nascent field. Many companies and industries are sceptical of using AI since deep learning models are difficult to formally verify for safety. This talk is an excellent introduction to thinking about safety if you want to apply deep learning to safety-critical systems.

Deep Learning Accelerators on Jetson devices [S41670]

Deep Learning Accelerators (DLAs) are special processors on Jetson Xavier and Orin boards dedicated to deep learning inference. We have previously explained the various components of DLAs in this blog post.

In this session, Ram Cherukuri from NVIDIA presented a deep dive into the architecture of DLAs and how to use them. In addition to the various processors described in the above linked blog post, the Orin DLAs contain:

- Support for structured sparsity

- Dedicated processor for depth-wise convolutions

- Hardware scheduler to improve efficiency

- Advanced layer fusion capabilities such as fusing convolution + pooling or element-wise operations + activation layers.
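Our notes do not record which sparsity pattern the Orin DLA expects. Assuming it follows the 2:4 pattern that NVIDIA documents for Ampere Tensor Cores (at most two non-zero values in every group of four weights), magnitude pruning to that pattern could look like the sketch below. The function name and the sample weights are hypothetical.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in every group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
sparse_row = prune_2_of_4(row)
print(sparse_row)
```

In practice a pruned network is usually fine-tuned afterwards to recover accuracy; this sketch covers only the pattern itself.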

Ram also went into the details of the DLA software stack. Luckily, users do not need to understand all of those details, as TensorRT handles them under the hood. He then provided an example of accelerating ResNet-50 on DLA. If your workflow requires you to use DLAs on the Jetson boards, we highly recommend listening to the full talk at GTC.
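For readers who want to try this, one low-effort route is TensorRT's `trtexec` command-line tool, which can target a DLA core directly. The helper below only assembles the argument list, so it runs anywhere; the file names are hypothetical, and this is our sketch rather than the workflow shown in the talk.

```python
def dla_trtexec_args(onnx_path, engine_path, dla_core=0, precision="fp16"):
    """Build a trtexec argument list that targets one DLA core."""
    if precision not in ("fp16", "int8"):
        raise ValueError("DLA runs FP16 or INT8 only")
    return [
        "trtexec",
        f"--onnx={onnx_path}",
        f"--saveEngine={engine_path}",
        f"--useDLACore={dla_core}",
        "--allowGPUFallback",  # layers the DLA cannot run are placed on the GPU
        f"--{precision}",
    ]


# Hypothetical file names; run the resulting command on the Jetson itself.
args = dla_trtexec_args("resnet50.onnx", "resnet50_dla.engine")
print(" ".join(args))
```

On a board with TensorRT installed, the list can be passed to `subprocess.run(args, check=True)`. GPU fallback is worth keeping on while experimenting, since not every layer type is supported by the DLA.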

AI-driven autonomous drone [S41693]

In this session, Magi Chen from CIRC Inc. introduced their Falcon drone based on the Jetson Xavier NX SoC. This drone has an RGB camera, the NX SoC, as well as typical sensors like GPS and IMU. CIRC has developed a custom model for object detection and path estimation, called SkyPilotNet.

Using NVIDIA’s DeepStream and TensorRT, along with several customized blocks of the software stack, including the Linux kernel, CIRC achieves an inference latency of 13 milliseconds per frame, allowing the system to comfortably achieve real-time performance.
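To see why 13 milliseconds counts as comfortable, it helps to turn the latency into a frame rate. The 13 ms figure is from the session; the 30 fps camera rate in the sketch below is our assumption, since the talk summary does not state the camera's frame rate.

```python
LATENCY_MS = 13.0  # per-frame inference latency quoted in the session


def max_fps(latency_ms):
    """Upper bound on frames per second when frames are processed one at a time."""
    return 1000.0 / latency_ms


def frame_budget_ms(fps):
    """Time available per frame at a given camera frame rate."""
    return 1000.0 / fps


fps = max_fps(LATENCY_MS)
headroom_ms = frame_budget_ms(30.0) - LATENCY_MS  # assumed 30 fps camera
print(f"{fps:.1f} fps ceiling, {headroom_ms:.1f} ms spare per frame at 30 fps")
```

The spare time per frame is what remains for everything outside inference, such as image capture, pre-processing and flight control.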

Super-resolution for satellite imagery [S41687]

The last session that we will cover in this GTC will take us out of this world. In this session, Yu-Lin Tsai from the National Space Organization of Taiwan presented a novel algorithm for achieving super resolution on satellite images. Interestingly, this algorithm is not based on deep learning at all, but on solving an optimization problem.

The satellite moves at a known speed in a known direction, but wobbles slightly while scanning the ground. Thus, to recover the high resolution information from the raw data, the proposed algorithm performs image registration (a sophisticated form of template matching). Small differences among the registered images allow the algorithm to estimate the motion vectors caused by the wobbling of the satellite. Correcting for the resulting motion blur, followed by deconvolution (in optics this is known as the Wiener filter), allows recovery of the high resolution image.
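The registration step can be pictured with a toy example. The sketch below is not the presenters' algorithm: it recovers only an integer shift between two tiny images by exhaustive search, whereas a real pipeline needs sub-pixel accuracy. The `scene` pattern and all names are hypothetical.

```python
def estimate_shift(reference, moved, max_shift=2):
    """Find the integer (dy, dx) for which moved[y + dy][x + dx] best matches reference[y][x].

    Exhaustive search minimising the mean squared difference over the overlap.
    """
    h, w = len(reference), len(reference[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, n = 0.0, 0
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    diff = reference[y][x] - moved[y + dy][x + dx]
                    err += diff * diff
                    n += 1
            if n and err / n < best_err:
                best, best_err = (dy, dx), err / n
    return best


def scene(y, x):
    # Hypothetical smooth ground pattern, defined for any integer coordinate.
    return 3 * y * y + 5 * x * x + x * y


reference = [[scene(y, x) for x in range(6)] for y in range(6)]
# The second pass sees the same ground displaced one row down and one column left.
moved = [[scene(y - 1, x + 1) for x in range(6)] for y in range(6)]
shift = estimate_shift(reference, moved)
print("estimated (dy, dx):", shift)
```

Once every frame's displacement is known, the frames can be aligned on a finer grid, which is where the extra resolution comes from.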

It turns out that the raw images are quite large and actually running this algorithm on the GPU seems like an impossible task. To solve this, the researchers broke the problem down into matrices with only 81 trillion parameters each! This turns out to be small enough to fit in the memory of an A100 DGX Station.

Summary

We hope you liked our daily coverage of the GTC 2022 conference as much as we loved covering it. In this rapid-fire series of posts, we combed through more than 1,000 sessions and summarized the 44 sessions most relevant to computer vision, deep learning and robotics. If you attended GTC and came across any other interesting sessions, please let us know on our social media or down in the comments below. If, for some reason, you are still holding off on registering, we will make one last appeal to register for a chance to win a 3080 Ti GPU. GTC registration link.

This concludes our daily blog post series of GTC 2022 Highlights coverage. Thank you!
